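Thank you to everyone who takes the time to read my articles. Watching the follower count grow, it is only fair that I give something back: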

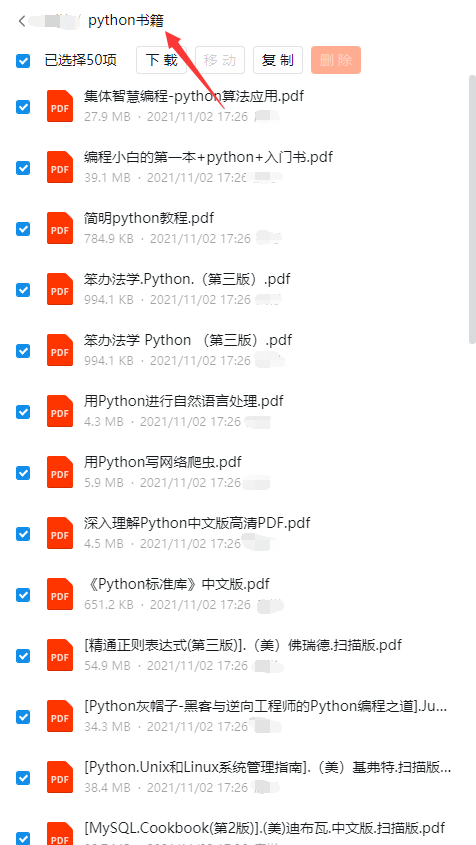

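① More than 2,000 Python e-books (most of the mainstream and classic titles should be included)

② Python standard library reference (the most complete Chinese edition)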

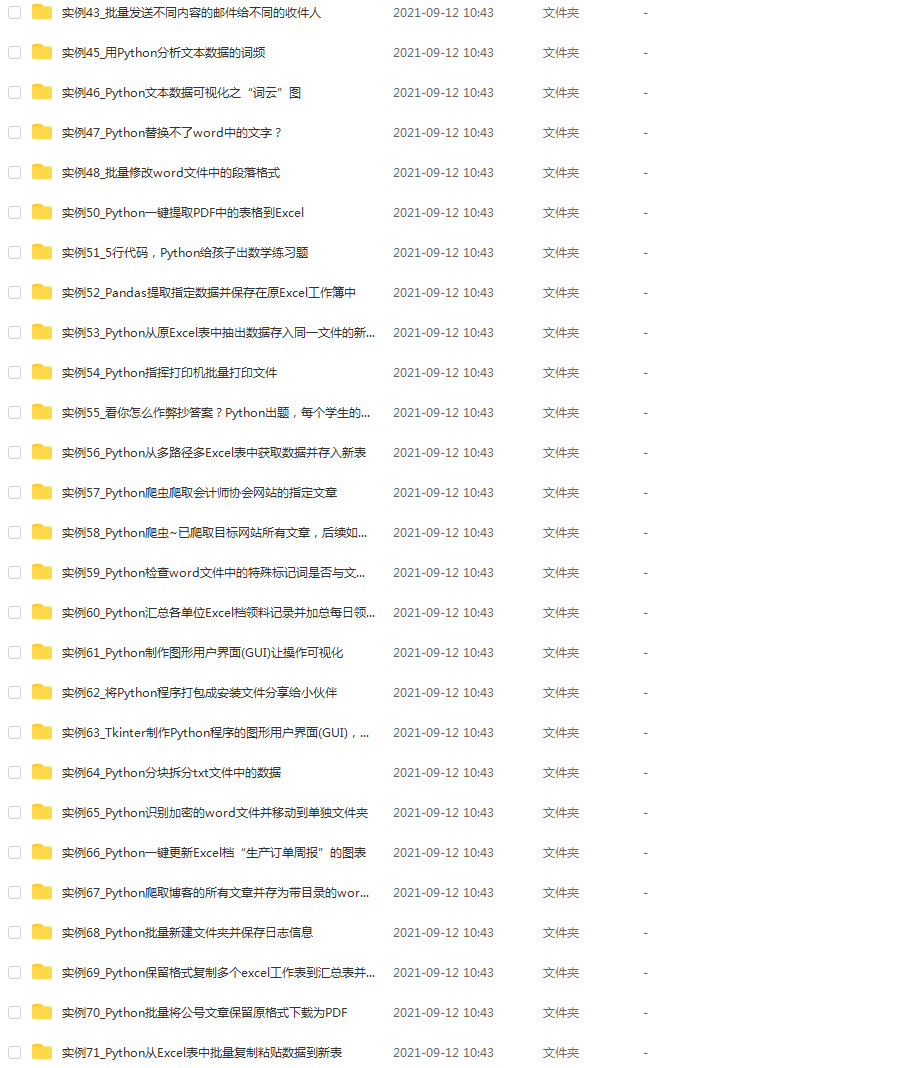

③ Project source code (forty to fifty fun, classic practice projects with source)

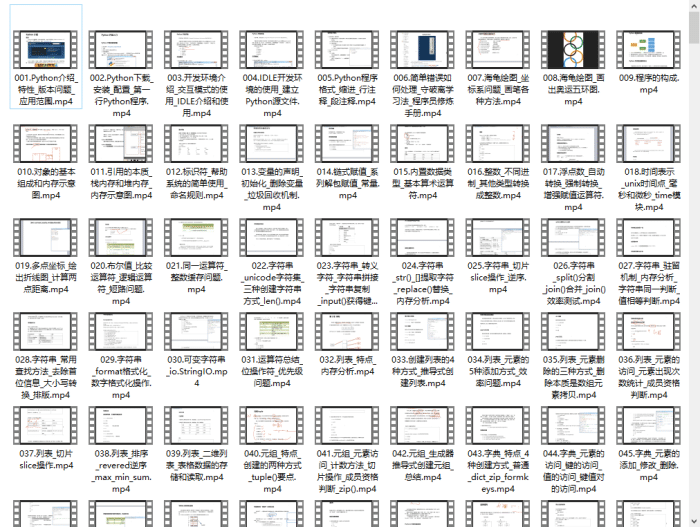

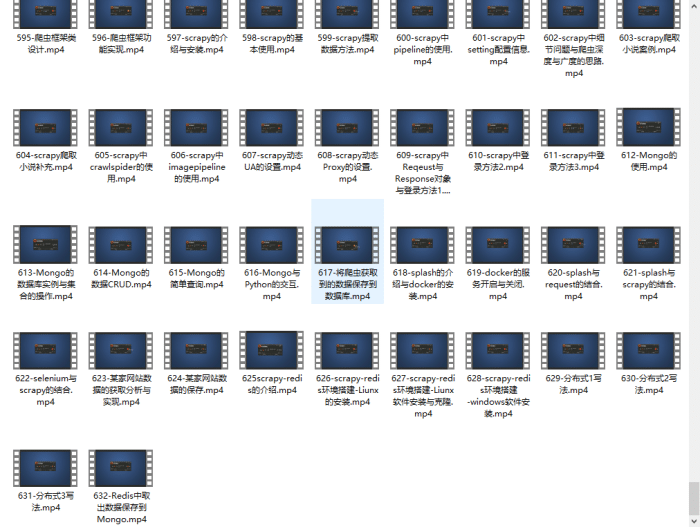

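④ Videos on Python basics, web scraping, web development, and big-data analysis (suitable for beginners)

⑤ A Python learning roadmap (no more unstructured study)

There is no shortage of learning material online, but if what you learn does not form a coherent system, and you stop at the surface whenever you hit a problem instead of digging deeper, real technical growth is hard to achieve.

One person can walk fast, but a group can walk farther. Whether you are a veteran already working in IT or a newcomer interested in the field, you are welcome to join our community (technical discussion, learning resources, workplace venting, referrals to major companies, interview coaching) so that we can learn and grow together.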

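    import math

    import numpy as np


    class l1_regularization():
        """ Regularization for Lasso Regression """
        def __init__(self, alpha):
            self.alpha = alpha

        def __call__(self, w):
            # L1 norm (np.linalg.norm defaults to the L2 norm)
            return self.alpha * np.linalg.norm(w, ord=1)

        def grad(self, w):
            # Subgradient of the L1 norm
            return self.alpha * np.sign(w)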

    class l2_regularization():
        """ Regularization for Ridge Regression """
        def __init__(self, alpha):
            self.alpha = alpha

        def __call__(self, w):
            return self.alpha * 0.5 * w.T.dot(w)

        def grad(self, w):
            return self.alpha * w

    class l1_l2_regularization():
        """ Regularization for Elastic Net Regression """
        def __init__(self, alpha, l1_ratio=0.5):
            self.alpha = alpha
            self.l1_ratio = l1_ratio

        def __call__(self, w):
            # L1 part uses ord=1 (np.linalg.norm defaults to the L2 norm)
            l1_contr = self.l1_ratio * np.linalg.norm(w, ord=1)
            l2_contr = (1 - self.l1_ratio) * 0.5 * w.T.dot(w)
            return self.alpha * (l1_contr + l2_contr)

        def grad(self, w):
            l1_contr = self.l1_ratio * np.sign(w)
            l2_contr = (1 - self.l1_ratio) * w
            return self.alpha * (l1_contr + l2_contr)
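As a quick sanity check on the penalty terms and gradients above, the following is a minimal sketch that compares the analytic elastic-net gradient with a finite-difference estimate. The function names `elastic_net_penalty`, `elastic_net_grad`, and `numerical_grad` are illustrative and not part of the original listing, and the weight vector is an arbitrary example with no zero entries (the L1 term is not differentiable at zero).

```python
import numpy as np


def elastic_net_penalty(w, alpha, l1_ratio):
    """alpha * (l1_ratio * ||w||_1 + (1 - l1_ratio) * 0.5 * ||w||_2^2)."""
    l1 = l1_ratio * np.sum(np.abs(w))
    l2 = (1 - l1_ratio) * 0.5 * w.dot(w)
    return alpha * (l1 + l2)


def elastic_net_grad(w, alpha, l1_ratio):
    """Subgradient of the penalty; np.sign(0) == 0 is used where w_i == 0."""
    return alpha * (l1_ratio * np.sign(w) + (1 - l1_ratio) * w)


def numerical_grad(f, w, eps=1e-6):
    """Central finite differences, one coordinate at a time."""
    grad = np.zeros_like(w)
    for i in range(w.size):
        step = np.zeros_like(w)
        step[i] = eps
        grad[i] = (f(w + step) - f(w - step)) / (2 * eps)
    return grad


w = np.array([0.5, -2.0, 3.0])      # arbitrary example weights
alpha, l1_ratio = 0.1, 0.5

analytic = elastic_net_grad(w, alpha, l1_ratio)
numeric = numerical_grad(lambda v: elastic_net_penalty(v, alpha, l1_ratio), w)
max_gap = np.max(np.abs(analytic - numeric))
```

Setting `l1_ratio=1` reduces the penalty to the pure L1 (Lasso) term, and `l1_ratio=0` reduces it to the pure L2 (Ridge) term.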

    class Regression(object):
        """ Base regression model. Models the relationship between a scalar dependent variable y and the independent
        variables X.

        Parameters:
        n_iterations: int
            The number of training iterations the algorithm will tune the weights for.
        learning_rate: float
            The step length that will be used when updating the weights.
        """
        def __init__(self, n_iterations, learning_rate):
            self.n_iterations = n_iterations
            self.learning_rate = learning_rate

        def initialize_weights(self, n_features):
            """ Initialize weights randomly in [-1/sqrt(N), 1/sqrt(N)] """
            limit = 1 / math.sqrt(n_features)
            self.w = np.random.uniform(-limit, limit, (n_features, ))

        def fit(self, X, y):
            # Subclasses must set self.regularization before calling fit
            # Insert constant ones for bias weights
            X = np.insert(X, 0, 1, axis=1)
            self.training_errors = []
            self.initialize_weights(n_features=X.shape[1])

            # Do gradient descent for n_iterations
            for i in range(self.n_iterations):
                y_pred = X.dot(self.w)
                # Calculate l2 loss
                mse = np.mean(0.5 * (y - y_pred)**2 + self.regularization(self.w))
                self.training_errors.append(mse)
                # Gradient of l2 loss w.r.t w
                grad_w = -(y - y_pred).dot(X) + self.regularization.grad(self.w)
                # Update the weights
                self.w -= self.learning_rate * grad_w

        def predict(self, X):
            # Insert constant ones for bias weights
            X = np.insert(X, 0, 1, axis=1)
            y_pred = X.dot(self.w)
            return y_pred
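The update rule in `fit` can be tried in isolation. Below is a minimal sketch without regularization, assuming synthetic data generated from `y = 2 + 3x` plus small noise. The data, the seed, and the learning rate are illustrative choices, not values from the original listing.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data: y = 2 + 3*x plus small Gaussian noise (illustrative values).
X = rng.uniform(-1, 1, size=(200, 1))
y = 2.0 + 3.0 * X[:, 0] + rng.normal(scale=0.05, size=200)

# Prepend a column of ones so that w[0] acts as the bias.
Xb = np.insert(X, 0, 1, axis=1)

# Same initialization range as initialize_weights: [-1/sqrt(N), 1/sqrt(N)].
limit = 1 / np.sqrt(Xb.shape[1])
w = rng.uniform(-limit, limit, Xb.shape[1])

learning_rate = 0.005
errors = []
for _ in range(500):
    y_pred = Xb.dot(w)
    errors.append(np.mean(0.5 * (y - y_pred) ** 2))
    # Gradient of the squared loss, summed (not averaged) over the samples.
    grad_w = -(y - y_pred).dot(Xb)
    w -= learning_rate * grad_w
```

Because the gradient is summed over all samples rather than averaged, its magnitude grows with the size of the data set, so the learning rate usually has to shrink as more rows are added to keep the iteration stable.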

    class LinearRegression(Regression):
        """Linear model.

        Parameters:
        n_iterations: int
            The number of training iterations the algorithm will tune the weights for.
        learning_rate: float
            The step length that will be used when updating the weights.
        gradient_descent: boolean
            True or false depending on whether gradient descent should be used when training. If
            false then we use batch optimization by least squares.
        """
        def __init__(self, n_iterations=100, learning_rate=0.001, gradient_descent=True):
            self.gradient_descent = gradient_descent
            # No regularization
            self.regularization = lambda x: 0
            self.regularization.grad = lambda x: 0
            super(LinearRegression, self).__init__(n_iterations=n_iterations,
                                                   learning_rate=learning_rate)

        def fit(self, X, y):
            # If not gradient descent => Least squares approximation of w
            if not self.gradient_descent:
                # Insert constant ones for bias weights
                X = np.insert(X, 0, 1, axis=1)
                # Calculate weights by least squares (using Moore-Penrose pseudoinverse)
                # np.linalg.svd returns V already transposed, so transpose it back
                U, S, Vt = np.linalg.svd(X.T.dot(X))
                S = np.diag(S)
                X_sq_reg_inv = Vt.T.dot(np.linalg.pinv(S)).dot(U.T)
                self.w = X_sq_reg_inv.dot(X.T).dot(y)
            else:
                super(LinearRegression, self).fit(X, y)
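The closed-form branch solves the normal equations `w = (XᵀX)⁺ Xᵀ y`. The sketch below rebuilds that pseudoinverse from the SVD and compares the result with NumPy's own least-squares solver. The noise-free synthetic data and the names `true_w`, `w_svd`, and `w_ref` are assumptions made for the illustration.

```python
import numpy as np

rng = np.random.default_rng(1)

X = rng.normal(size=(50, 3))
true_w = np.array([1.0, -2.0, 0.5, 4.0])   # bias followed by three weights
Xb = np.insert(X, 0, 1, axis=1)            # constant column for the bias
y = Xb.dot(true_w)                         # noise-free, so the fit is exact

# Pseudoinverse of X^T X from its SVD: A = U diag(S) Vt  =>  A^+ = Vt.T diag(S)^+ U.T
U, S, Vt = np.linalg.svd(Xb.T.dot(Xb))
gram_pinv = Vt.T.dot(np.linalg.pinv(np.diag(S))).dot(U.T)
w_svd = gram_pinv.dot(Xb.T).dot(y)

# Reference solution from NumPy's least-squares routine.
w_ref, *_ = np.linalg.lstsq(Xb, y, rcond=None)
```

In practice `np.linalg.lstsq(Xb, y, rcond=None)` or `np.linalg.pinv(Xb).dot(y)` gives the same weights without forming `XᵀX`, which squares the condition number of the problem.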

    class LassoRegression(Regression):
        """Linear regression model with a regularization factor which does both variable selection
        and regularization. Model that tries to balance the fit of the model with respect to the training
        data and the complexity of the model. A large regularization factor decreases the variance of
        the model and shrinks more of the weights toward zero.

        Parameters:
        degree: int
            The degree of the polynomial that the independent variable X will be transformed to.
        reg_factor: float
            The factor that will determine the amount of regularization and feature
            shrinkage.
        n_iterations: int
            The number of training iterations the algorithm will tune the weights for.
        learning_rate: float
            The step length that will be used when updating the weights.
        """
        def __init__(self, degree, reg_factor, n_iterations=3000, learning_rate=0.01):
            self.degree = degree
            self.regularization = l1_regularization(alpha=reg_factor)
            super(LassoRegression, self).__init__(n_iterations,
                                                  learning_rate)

        # normalize and polynomial_features are helper functions that are
        # not defined in this listing
        def fit(self, X, y):
            X = normalize(polynomial_features(X, degree=self.degree))
            super(LassoRegression, self).fit(X, y)

        def predict(self, X):
            X = normalize(polynomial_features(X, degree=self.degree))
            return super(LassoRegression, self).predict(X)
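The listing calls `polynomial_features` and `normalize` without showing them. The following is a hypothetical sketch of what such helpers might look like, assuming `polynomial_features` returns every monomial of the input columns up to `degree` (including the constant term) and `normalize` scales each row to unit L2 norm. The original helpers may behave differently.

```python
from itertools import combinations_with_replacement

import numpy as np


def polynomial_features(X, degree):
    """Hypothetical helper: all monomials of the columns of X up to `degree`."""
    n_samples, n_features = X.shape
    # Each combination lists the column indices multiplied together;
    # the empty combination yields the constant column of ones.
    combos = [c for d in range(degree + 1)
              for c in combinations_with_replacement(range(n_features), d)]
    X_new = np.empty((n_samples, len(combos)))
    for j, idx in enumerate(combos):
        cols = np.array(idx, dtype=int)
        X_new[:, j] = np.prod(X[:, cols], axis=1)
    return X_new


def normalize(X):
    """Hypothetical helper: scale every row to unit L2 norm."""
    norms = np.linalg.norm(X, ord=2, axis=1, keepdims=True)
    norms[norms == 0] = 1.0          # leave all-zero rows unchanged
    return X / norms


X = np.array([[1.0, 2.0],
              [3.0, 4.0]])
features = polynomial_features(X, degree=2)   # columns: 1, x0, x1, x0^2, x0*x1, x1^2
unit_rows = normalize(features)
```

For two input columns and `degree=2` this produces six feature columns, so the number of weights the model has to fit grows quickly with the degree.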

    class PolynomialRegression(Regression):
        """Performs a non-linear transformation of the data before fitting the model
        and doing predictions which allows for doing non-linear regression.

        Parameters:
        degree: int
            The degree of the polynomial that the independent variable X will be transformed to.
        n_iterations: int
            The number of training iterations the algorithm will tune the weights for.
        learning_rate: float
            The step length that will be used when updating the weights.
        """
        def __init__(self, degree, n_iterations=3000, learning_rate=0.001):
            self.degree = degree
            # No regularization
            self.regularization = lambda x: 0
            self.regularization.grad = lambda x: 0
            super(PolynomialRegression, self).__init__(n_iterations=n_iterations,
                                                       learning_rate=learning_rate)

        def fit(self, X, y):
            X = polynomial_features(X, degree=self.degree)
            super(PolynomialRegression, self).fit(X, y)

        def predict(self, X):
            X = polynomial_features(X, degree=self.degree)
            return super(PolynomialRegression, self).predict(X)
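The idea behind polynomial regression is that the model stays linear in its weights even though the features are non-linear in the input. A minimal sketch with a single input variable, assuming a noise-free quadratic target and using `np.vander` for the feature expansion instead of the `polynomial_features` helper:

```python
import numpy as np

rng = np.random.default_rng(2)

x = rng.uniform(-2, 2, size=100)
y = 1.0 - 0.5 * x + 2.0 * x ** 2          # noise-free quadratic target

# Expand the single input into the columns [1, x, x^2].
X_poly = np.vander(x, N=3, increasing=True)

# Ordinary least squares on the expanded features recovers the coefficients.
w, *_ = np.linalg.lstsq(X_poly, y, rcond=None)
```

The recovered `w` holds the constant, linear, and quadratic coefficients in that order, which here match the values used to generate `y`.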

    class RidgeRegression(Regression):
        """Also referred to as Tikhonov regularization. Linear regression model with a regularization factor.
        Model that tries to balance the fit of the model with respect to the training data and the complexity
        of the model. A large regularization factor decreases the variance of the model.

        Parameters:
        reg_factor: float
            The factor that will determine the amount of regularization and feature
            shrinkage.
        n_iterations: int
            The number of training iterations the algorithm will tune the weights for.
        """
如果你也是看准了Python,想自学Python,在这里为大家准备了丰厚的免费学习大礼包,带大家一起学习,给大家剖析Python兼职、就业行情前景的这些事儿。

一、Python所有方向的学习路线

Python所有方向路线就是把Python常用的技术点做整理,形成各个领域的知识点汇总,它的用处就在于,你可以按照上面的知识点去找对应的学习资源,保证自己学得较为全面。

二、学习软件

工欲善其必先利其器。学习Python常用的开发软件都在这里了,给大家节省了很多时间。

三、全套PDF电子书

书籍的好处就在于权威和体系健全,刚开始学习的时候你可以只看视频或者听某个人讲课,但等你学完之后,你觉得你掌握了,这时候建议还是得去看一下书籍,看权威技术书籍也是每个程序员必经之路。

四、入门学习视频

我们在看视频学习的时候,不能光动眼动脑不动手,比较科学的学习方法是在理解之后运用它们,这时候练手项目就很适合了。

四、实战案例

光学理论是没用的,要学会跟着一起敲,要动手实操,才能将自己的所学运用到实际当中去,这时候可以搞点实战案例来学习。

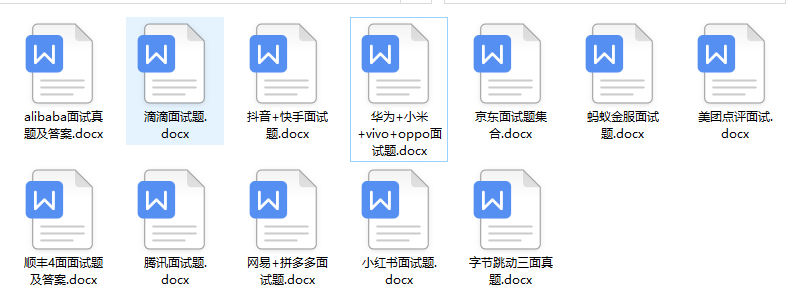

五、面试资料

我们学习Python必然是为了找到高薪的工作,下面这些面试题是来自阿里、腾讯、字节等一线互联网大厂最新的面试资料,并且有阿里大佬给出了权威的解答,刷完这一套面试资料相信大家都能找到满意的工作。

成为一个Python程序员专家或许需要花费数年时间,但是打下坚实的基础只要几周就可以,如果你按照我提供的学习路线以及资料有意识地去实践,你就有很大可能成功!

最后祝你好运!!!

