优化一

需求背景

公司在做一个采购监督的项目,在权限设计这里,希望能将用户数据权限与公司相关联。即当前登录用户能看到该用户所属公司及其子公司的所有标段数据。

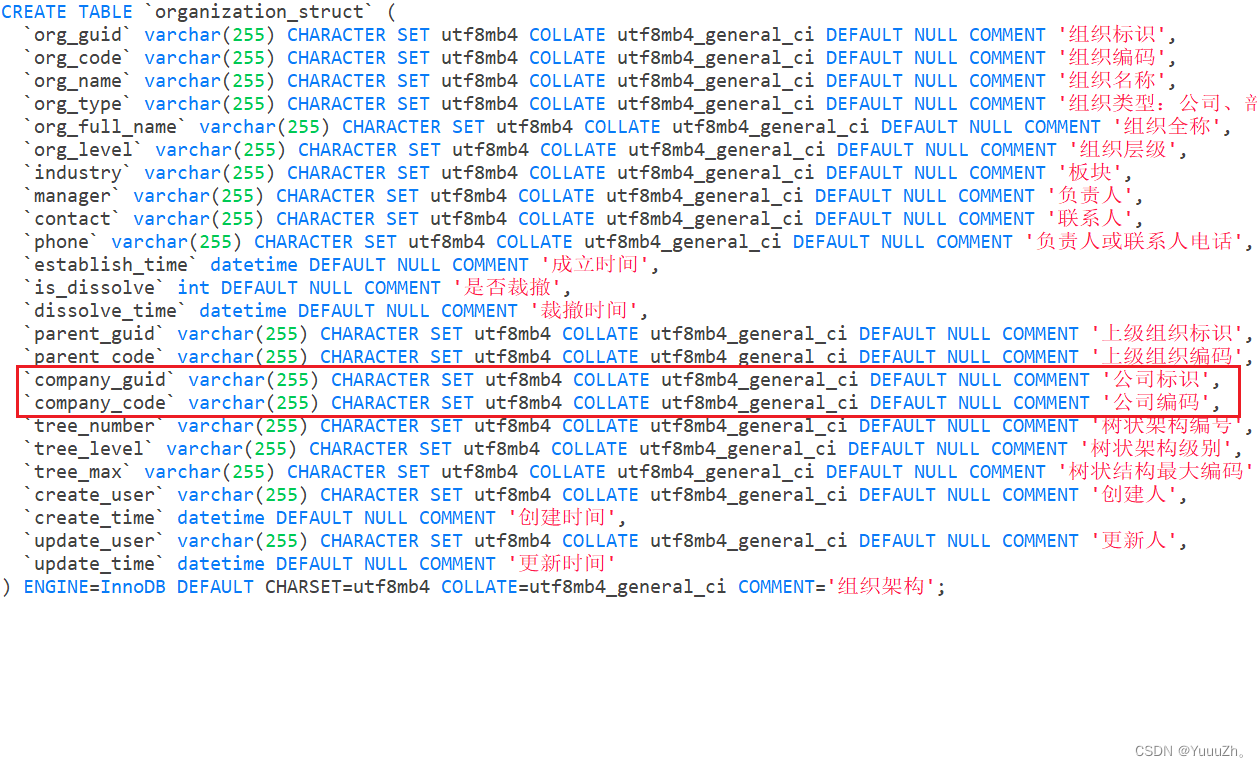

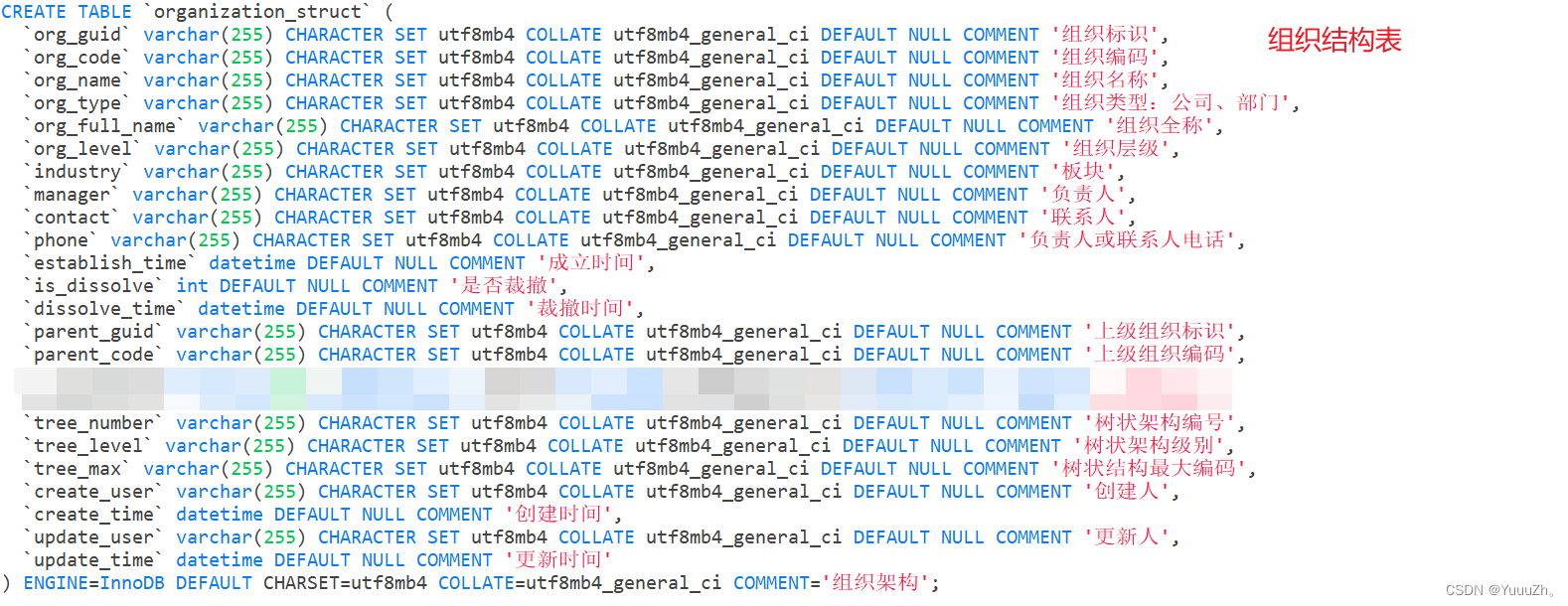

给大家看一下我们项目的表结构

需求分析

这个功能在实现时还是比较简单的,下面给大家分享一下我最开始的思路。首先,我们可以获取到登录用户的公司id,然后递归查询该公司下的所有子公司,然后去标段表里使用in查询,就可以查到所有该公司及其下属公司的所有标段信息。

问题描述

其实到这里大家也能看出来问题了,就是当数据量非常大时,执行效率会特别慢。而且,因为组织架构表中的组织编码包含着非公司的编码(部门编码、集团编码),所以单公司这里就能查出17000+,让本就不高的效率更加雪上加霜(标段信息表中的标段有43W+)。无论我怎么小表驱动大表,执行的时间都在20s+。

解决思路

问题真的没有办法解决吗?答案是否定的。

在项目中,我们在组织架构表中添加了额外的字段,去标记当前组织的公司(管你是部门还是集团,统统以公司去标识)。这样,在组织结构表中进行一个自关联,得到的公司仅有600+,这可是数量级的降低!效率也成功提高,时间耗费也在2s左右。

优化二

上面只是从业务角度对慢查询进行了优化(因为这样的场景下无论你sql怎么优化,都无法满足实际需求),下面来看一个技术层面的优化。

需求背景

仍然是查询不同公司下的标段信息。在解决了上面的慢查询后,愉快的将代码进行合并发版,但是,在测试时发现还是很慢很慢,甚至接口直接报超时了。

问题分析

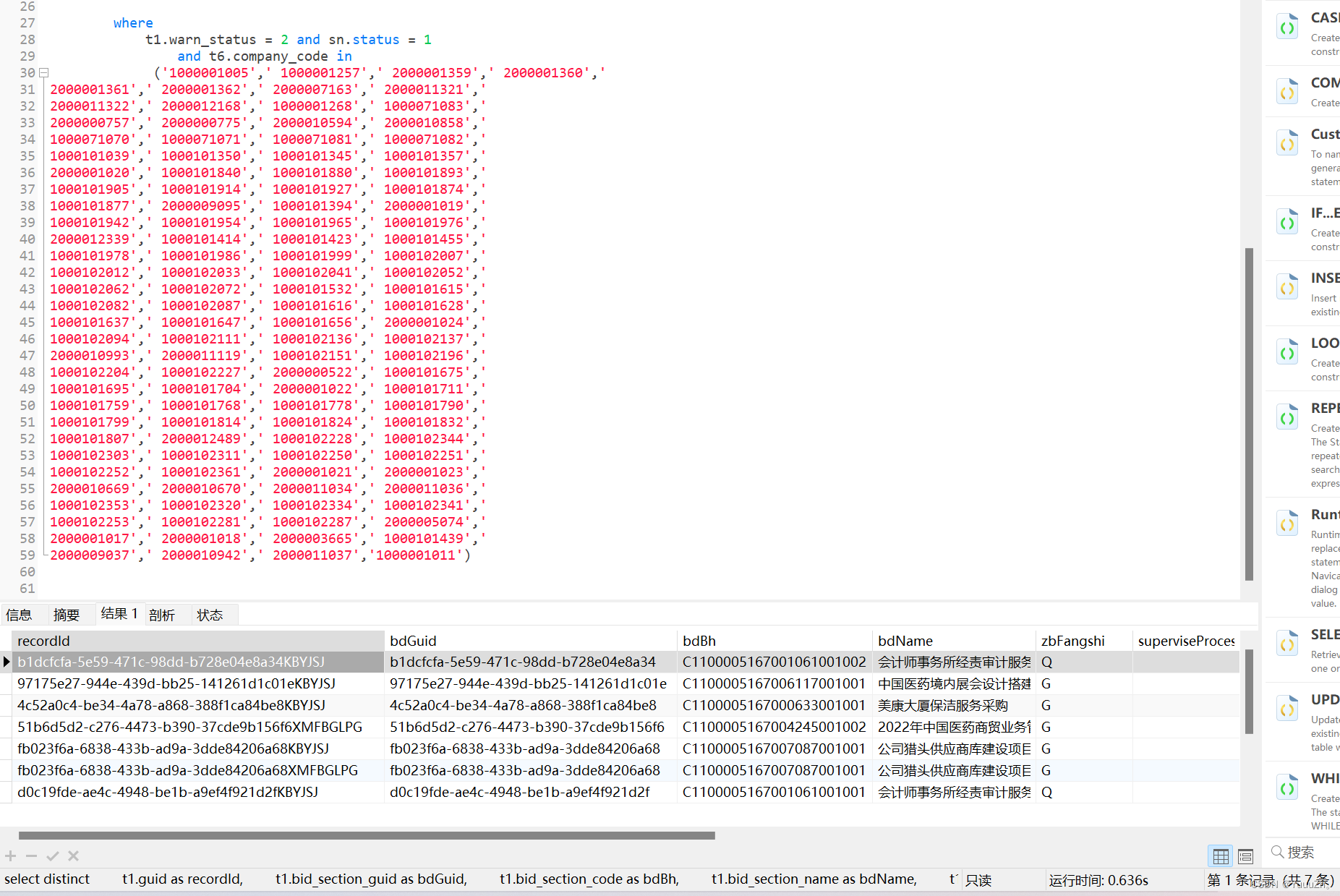

将日志打印的sql在本地进行执行,很快,只有0.636s

终于,经过我不懈的努力,发现是因为mybatis-plus的分页插件导致的,准确的说,是因为limit 导致的。在添加了limit 10后,原本0.636s的查询,时间耗费到12.814s

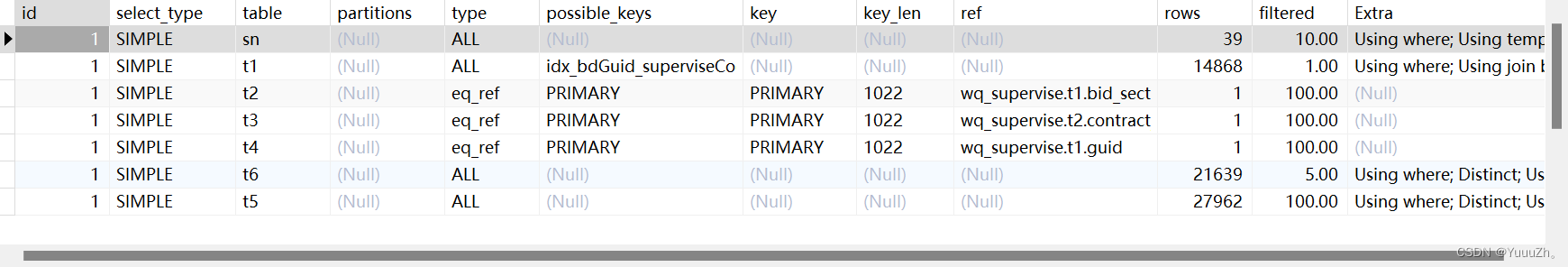

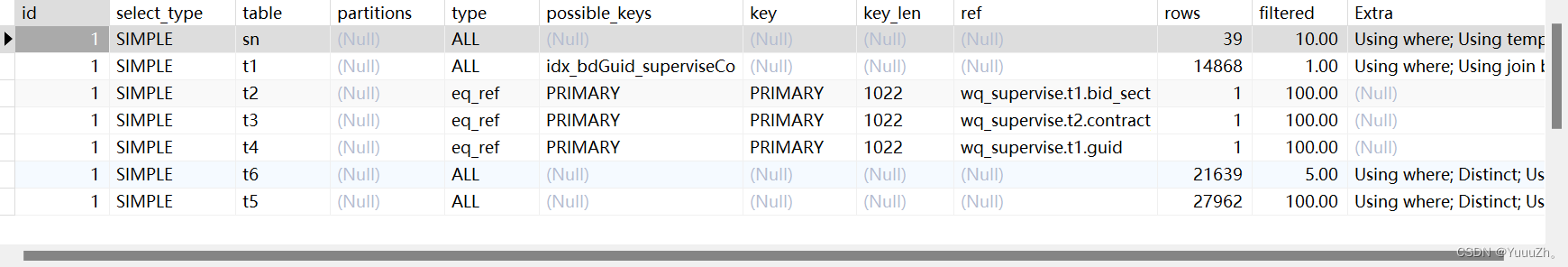

很奇怪,对两条sql使用explain命令分析执行信息

首先是不带limit的

然后是带了limit 10 的

可以看到,mysql的执行是一模一样的

使用explain analyze对添加了limit 10的sql进行分析

-> Limit: 10 row(s) (cost=20841515.38..20841515.49 rows=10) (actual time=14534.920..14534.923 rows=7 loops=1)

-> Table scan on <temporary> (cost=0.01..109643.44 rows=8771275) (actual time=0.012..0.014 rows=7 loops=1)

-> Temporary table with deduplication (cost=20841515.38..20951158.81 rows=8771275) (actual time=14534.911..14534.914 rows=7 loops=1)

-> Limit table size: 10 unique row(s)

-> Left hash join (t5.bid_section_guid = t1.bid_section_guid) (cost=19964387.86 rows=8771275) (actual time=14472.561..14534.677 rows=12 loops=1)

-> Inner hash join (t6.org_guid = t2.build_unit_no) (cost=58059.99 rows=314) (actual time=4635.155..14459.452 rows=7 loops=1)

-> Filter: (t6.company_code in ('1000001005',' 1000001257',' 2000001359',' 2000001360',' \r\n2000001361',' 2000001362',' 2000007163',' 2000011321',' \r\n2000011322',' 2000012168',' 1000001268',' 1000071083',' \r\n2000000757',' 2000000775',' 2000010594',' 2000010858',' \r\n1000071070',' 1000071071',' 1000071081',' 1000071082',' \r\n1000101039',' 1000101350',' 1000101345',' 1000101357',' \r\n2000001020',' 1000101840',' 1000101880',' 1000101893',' \r\n1000101905',' 1000101914',' 1000101927',' 1000101874',' \r\n1000101877',' 2000009095',' 1000101394',' 2000001019',' \r\n1000101942',' 1000101954',' 1000101965',' 1000101976',' \r\n2000012339',' 1000101414',' 1000101423',' 1000101455',' \r\n1000101978',' 1000101986',' 1000101999',' 1000102007',' \r\n1000102012',' 1000102033',' 1000102041',' 1000102052',' \r\n1000102062',' 1000102072',' 1000101532',' 1000101615',' \r\n1000102082',' 1000102087',' 1000101616',' 1000101628',' \r\n1000101637',' 1000101647',' 1000101656',' 2000001024',' \r\n1000102094',' 1000102111',' 1000102136',' 1000102137',' \r\n2000010993',' 2000011119',' 1000102151',' 1000102196',' \r\n1000102204',' 1000102227',' 2000000522',' 1000101675',' \r\n1000101695',' 1000101704',' 2000001022',' 1000101711',' \r\n1000101759',' 1000101768',' 1000101778',' 1000101790',' \r\n1000101799',' 1000101814',' 1000101824',' 1000101832',' \r\n1000101807',' 2000012489',' 1000102228',' 1000102344',' \r\n1000102303',' 1000102311',' 1000102250',' 1000102251',' \r\n1000102252',' 1000102361',' 2000001021',' 2000001023',' \r\n2000010669',' 2000010670',' 2000011034',' 2000011036',' \r\n1000102353',' 1000102320',' 1000102334',' 1000102341',' \r\n1000102253',' 1000102281',' 1000102287',' 2000005074',' \r\n2000001017',' 2000001018',' 2000003665',' 1000101439',' \r\n2000009037',' 2000010942',' 2000011037','1000001011')) (cost=96.25 rows=1082) (actual time=0.021..19.583 rows=165 loops=731)

-> Table scan on t6 (cost=96.25 rows=21639) (actual time=0.012..15.774 rows=21617 loops=731)

-> Hash

-> Nested loop left join (cost=1729.26 rows=6) (actual time=0.415..59.548 rows=731 loops=1)

-> Nested loop left join (cost=1691.51 rows=6) (actual time=0.389..54.113 rows=731 loops=1)

-> Nested loop inner join (cost=1653.76 rows=6) (actual time=0.365..43.577 rows=731 loops=1)

-> Inner hash join (t1.supervise_code = sn.`code`) (cost=1574.22 rows=6) (actual time=0.337..24.415 rows=738 loops=1)

-> Filter: (t1.warn_status = 2) (cost=391.40 rows=149) (actual time=0.156..22.258 rows=738 loops=1)

-> Table scan on t1 (cost=391.40 rows=14868) (actual time=0.093..20.662 rows=15553 loops=1)

-> Hash

-> Filter: (sn.`status` = 1) (cost=4.65 rows=4) (actual time=0.095..0.107 rows=37 loops=1)

-> Table scan on sn (cost=4.65 rows=39) (actual time=0.094..0.102 rows=39 loops=1)

-> Single-row index lookup on t2 using PRIMARY (bid_section_guid=t1.bid_section_guid) (cost=0.14 rows=1) (actual time=0.025..0.025 rows=1 loops=738)

-> Single-row index lookup on t3 using PRIMARY (contract_awarding_guid=t2.contract_awarding_guid) (cost=0.06 rows=1) (actual time=0.014..0.014 rows=1 loops=731)

-> Single-row index lookup on t4 using PRIMARY (guid=t1.guid) (cost=0.06 rows=1) (actual time=0.007..0.007 rows=0 loops=731)

-> Hash

-> Table scan on t5 (cost=30.43 rows=27962) (actual time=0.040..35.242 rows=29130 loops=1)

结果显示,主要是在left join耗时较多(不可信),但是换成join就改需求了。

sql执行原理分析

我开始去对limit的执行原理进行分析,最终得到了一点自己的理解。

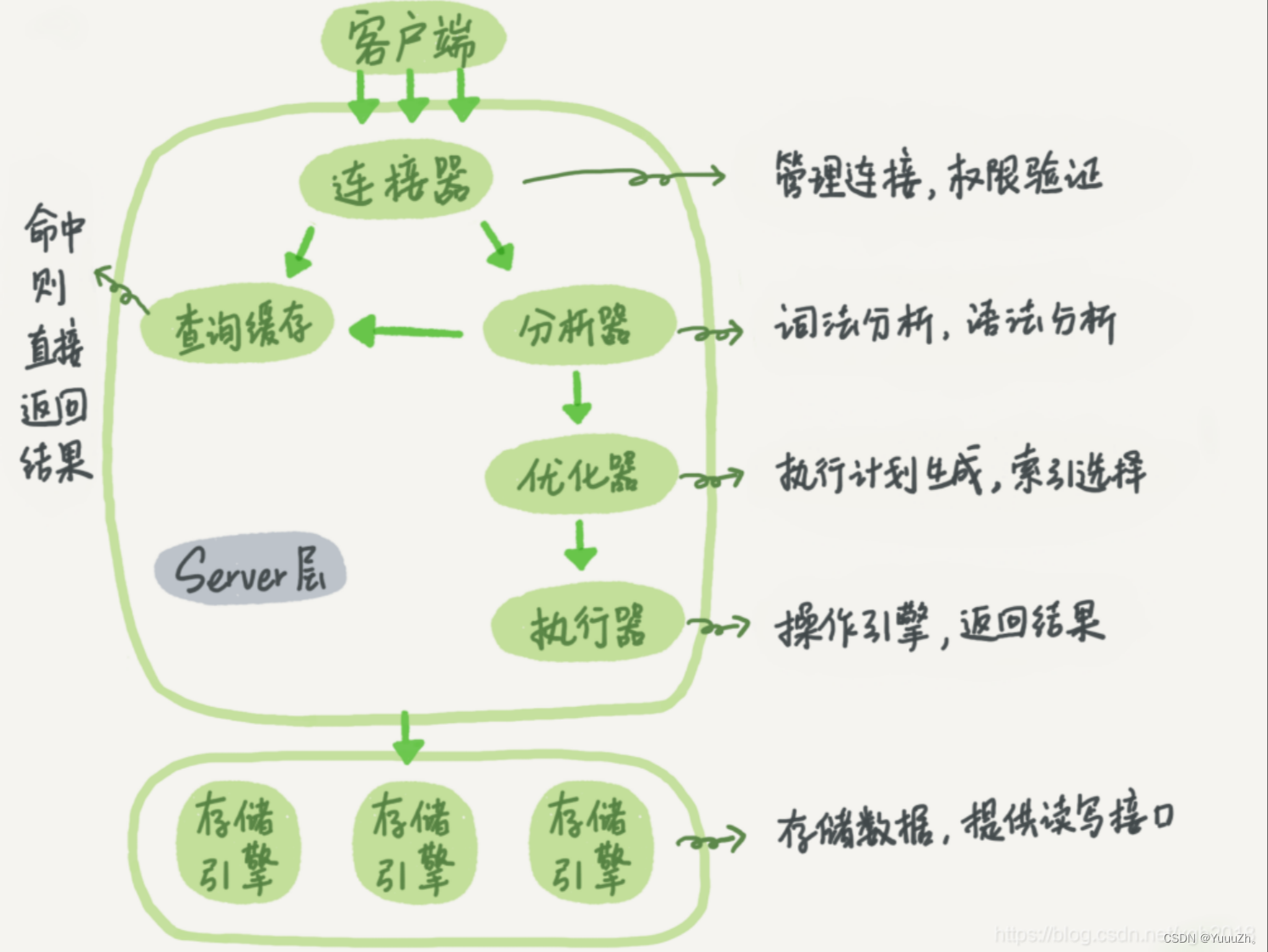

mysql的底层有server层和存储层(InnoDB),而对limit 10的执行是在server层。因此它的执行并不像我们想象的那样,一次得到所有结果,然后从存储层返回给server层对数据进行处理,而是每一次得到一条记录,就从存储层返回给server层,去判断是否满足limit 10的条件。满足则返回给客户端;不满足则告诉存储层,这次的结果不行,继续查找......直到满足了limit 10的条件,才会返回给客户端。看下面的结构图更便于理解(图省事直接搬的,侵权立删)

这样的IO是十分耗时的,对于我们项目中的数据量来说,这样的执行效率也就能理解了

sql优化

知道了这样的结论,那么优化起来就很简单了。

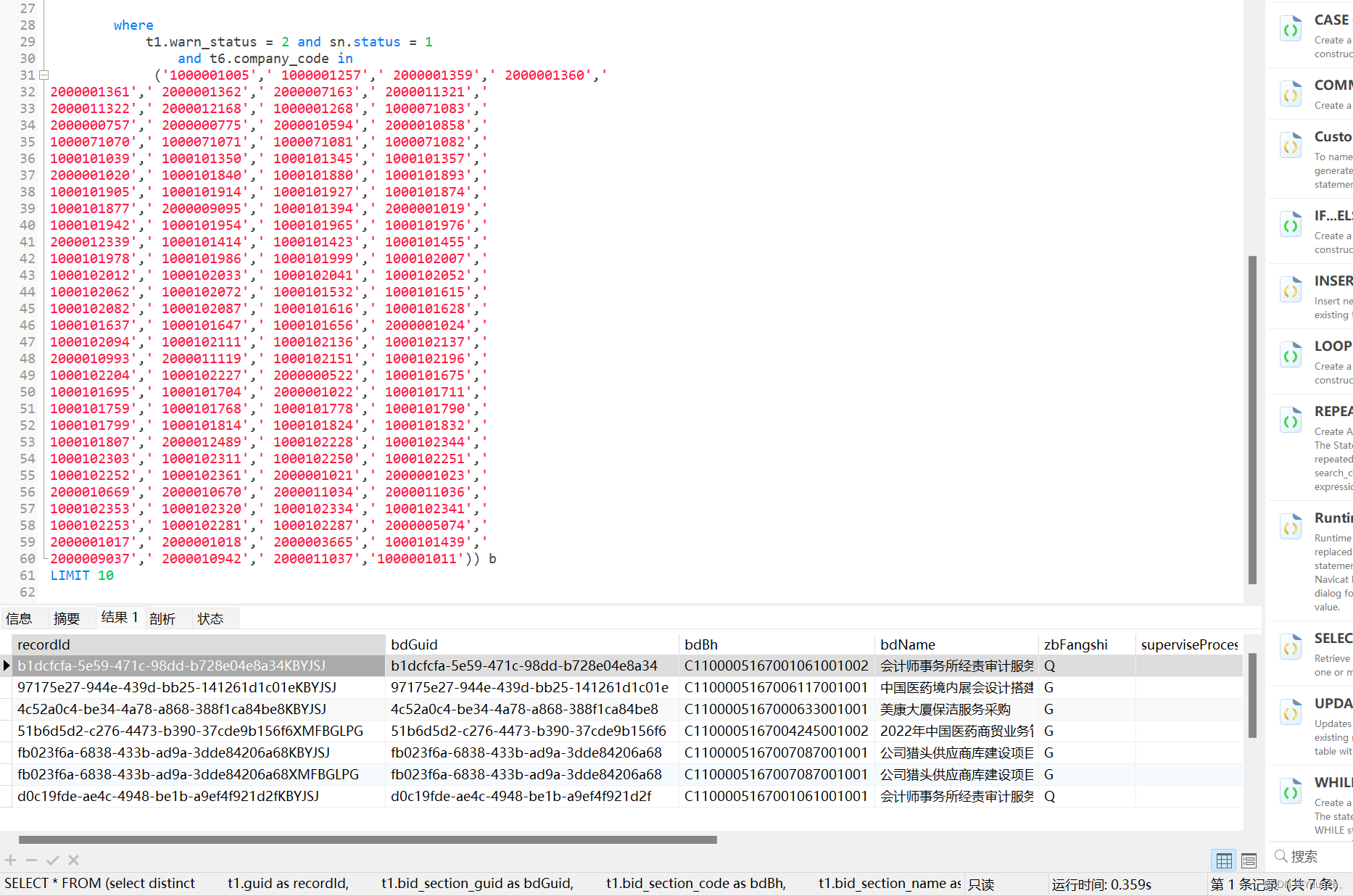

SELECT * FROM (select distinct

t1.guid as recordId,

t1.bid_section_guid as bdGuid,

t1.bid_section_code as bdBh,

t1.bid_section_name as bdName,

t1.bidding_method as zbFangshi,

t1.supervise_id as superviseProcessId,

t1.supervise_code as superviseCode,

t1.warn_status as warnStatus,

t1.warn_level as warnLevel,

t1.UPDATED_TIME as time,

case when t4.status is not null then t4.status else 2 end as status,

'超级管理员' as operator,

sn.name as superviseName,

t3.agent_fzr_name as responsibleName

from procurement_compliance_supervise_result t1

left join supervise_node sn on t1.supervise_code = sn.code

left join bid_section_info t2 on t1.bid_section_guid = t2.bid_section_guid

left join contract_proposal t3 on t2.contract_awarding_guid = t3.contract_awarding_guid

left join work_order_result t4 on t1.guid = t4.guid

left join win_bid_notification t5 on t5.bid_section_guid = t2.bid_section_guid

left JOIN organization_struct t6 on t2.build_unit_no =t6.org_guid

where

t1.warn_status = 2 and sn.status = 1

and t6.company_code in

('1000001005',' 1000001257',' 2000001359',' 2000001360','

2000001361',' 2000001362',' 2000007163',' 2000011321','

2000011322',' 2000012168',' 1000001268',' 1000071083','

2000000757',' 2000000775',' 2000010594',' 2000010858','

1000071070',' 1000071071',' 1000071081',' 1000071082','

1000101039',' 1000101350',' 1000101345',' 1000101357','

2000001020',' 1000101840',' 1000101880',' 1000101893','

1000101905',' 1000101914',' 1000101927',' 1000101874','

1000101877',' 2000009095',' 1000101394',' 2000001019','

1000101942',' 1000101954',' 1000101965',' 1000101976','

2000012339',' 1000101414',' 1000101423',' 1000101455','

1000101978',' 1000101986',' 1000101999',' 1000102007','

1000102012',' 1000102033',' 1000102041',' 1000102052','

1000102062',' 1000102072',' 1000101532',' 1000101615','

1000102082',' 1000102087',' 1000101616',' 1000101628','

1000101637',' 1000101647',' 1000101656',' 2000001024','

1000102094',' 1000102111',' 1000102136',' 1000102137','

2000010993',' 2000011119',' 1000102151',' 1000102196','

1000102204',' 1000102227',' 2000000522',' 1000101675','

1000101695',' 1000101704',' 2000001022',' 1000101711','

1000101759',' 1000101768',' 1000101778',' 1000101790','

1000101799',' 1000101814',' 1000101824',' 1000101832','

1000101807',' 2000012489',' 1000102228',' 1000102344','

1000102303',' 1000102311',' 1000102250',' 1000102251','

1000102252',' 1000102361',' 2000001021',' 2000001023','

2000010669',' 2000010670',' 2000011034',' 2000011036','

1000102353',' 1000102320',' 1000102334',' 1000102341','

1000102253',' 1000102281',' 1000102287',' 2000005074','

2000001017',' 2000001018',' 2000003665',' 1000101439','

2000009037',' 2000010942',' 2000011037','1000001011')) b

LIMIT 10这是优化后的代码。整体思路就是,先得到所有的结果,再对结果进行limit ,这样的执行效率也很可观,简直就是质的飞跃!!~

好啦,以上就是我工作中遇到的慢查询,后面还会继续和大家分享工作中的“趣事”。

谢谢观看

320

320

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?