Write request execution flow

RaftStorage.Write() ---->RaftStorage.raftRouter.SendRaftCommand(request, cb);

------>

func (r *RaftstoreRouter) SendRaftCommand(req *raft_cmdpb.RaftCmdRequest, cb *message.Callback) error {

cmd := &message.MsgRaftCmd{

Request: req,

Callback: cb,

}

regionID := req.Header.RegionId

return r.router.send(regionID, message.NewPeerMsg(message.MsgTypeRaftCmd, regionID, cmd))

}

func (pr *router) send(regionID uint64, msg message.Msg) error {

msg.RegionID = regionID

p := pr.get(regionID)

if p == nil || atomic.LoadUint32(&p.closed) == 1 {

return errPeerNotFound

}

pr.peerSender <- msg

return nil

}

It turns out that the sender (peerSender) has been handed to raftWorker. In other words, the request we send is delivered to this raftWorker's raftCh, so next we trace raftCh.
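To make the relationship between peerSender and raftCh concrete, here is a minimal sketch, assuming both names refer to the two ends of one buffered channel. The `msg` type, the `peers` map and `newRouterAndQueue` are hypothetical simplifications for illustration, not TinyKV's actual definitions.

```go
package main

import (
	"errors"
	"sync"
)

// msg is a stand-in for message.Msg; only the region ID and a payload are kept.
type msg struct {
	RegionID uint64
	Data     string
}

var errPeerNotFound = errors.New("peer not found")

// router mimics the lookup-then-enqueue shape of router.send.
type router struct {
	mu         sync.RWMutex
	peers      map[uint64]bool // registered region IDs (hypothetical simplification)
	peerSender chan<- msg      // send side of the worker's channel
}

// send stamps the region ID on the message and enqueues it,
// or fails if no peer is registered for that region.
func (r *router) send(regionID uint64, m msg) error {
	m.RegionID = regionID
	r.mu.RLock()
	ok := r.peers[regionID]
	r.mu.RUnlock()
	if !ok {
		return errPeerNotFound
	}
	r.peerSender <- m
	return nil
}

// newRouterAndQueue creates one buffered channel and hands the send side to
// the router and the receive side to the caller (the worker, in this sketch).
func newRouterAndQueue(size int, regions ...uint64) (*router, <-chan msg) {
	ch := make(chan msg, size)
	peers := make(map[uint64]bool)
	for _, id := range regions {
		peers[id] = true
	}
	return &router{peers: peers, peerSender: ch}, ch
}
```

With this wiring, whatever goes through `send` shows up on the receive side unchanged, which is why tracing raftCh is the natural next step.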

raftCh

func (rw *raftWorker) run(closeCh <-chan struct{}, wg *sync.WaitGroup) {

defer wg.Done()

var msgs []message.Msg

for {

msgs = msgs[:0]

select {

case <-closeCh:

return

case msg := <-rw.raftCh:

msgs = append(msgs, msg)

}

pending := len(rw.raftCh)

for i := 0; i < pending; i++ {

msgs = append(msgs, <-rw.raftCh)

}

peerStateMap := make(map[uint64]*peerState)

for _, msg := range msgs {

peerState := rw.getPeerState(peerStateMap, msg.RegionID)

if peerState == nil {

continue

}

newPeerMsgHandler(peerState.peer, rw.ctx).HandleMsg(msg)

}

for _, peerState := range peerStateMap {

newPeerMsgHandler(peerState.peer, rw.ctx).HandleRaftReady()

}

}

}

newPeerMsgHandler

func newPeerMsgHandler(peer *peer, ctx *GlobalContext) *peerMsgHandler {

return &peerMsgHandler{

peer: peer,

ctx: ctx,

}

}

This creates a handler object, on which HandleRaftReady() is then called.
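Before reading HandleRaftReady, it may help to isolate the batching loop from raftWorker.run shown earlier. The sketch below is a simplified version under stated assumptions: `msg`, `drainBatch` and `touchedRegions` are hypothetical names, and the real loop dispatches to peer handlers instead of counting.

```go
package main

// msg is a stand-in for message.Msg.
type msg struct{ RegionID uint64 }

// drainBatch blocks until one message arrives, then also takes every message
// that is already queued, without waiting for new ones. ok is false when
// closeCh fires first.
func drainBatch(ch <-chan msg, closeCh <-chan struct{}, buf []msg) (batch []msg, ok bool) {
	batch = buf[:0] // reuse the caller's buffer, as run does with msgs[:0]
	select {
	case <-closeCh:
		return batch, false
	case m := <-ch:
		batch = append(batch, m)
	}
	pending := len(ch) // snapshot of the queue length at this moment
	for i := 0; i < pending; i++ {
		batch = append(batch, <-ch)
	}
	return batch, true
}

// touchedRegions counts messages per region. In run, each region that appears
// in the batch gets exactly one HandleRaftReady call after all messages are handled.
func touchedRegions(batch []msg) map[uint64]int {
	regions := make(map[uint64]int)
	for _, m := range batch {
		regions[m.RegionID]++
	}
	return regions
}
```

The point of the design is that several messages for the same region are handled first and their effects are collected in a single Ready, rather than one Ready per message.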

func (d *peerMsgHandler) HandleRaftReady() {

if d.stopped {

return

}

// Your Code Here (2B).

if !d.RaftGroup.HasReady() {

return

}

ready := d.RaftGroup.Ready()

res, _ := d.peer.peerStorage.SaveReadyState(&ready)

if res != nil && !reflect.DeepEqual(res.PrevRegion, res.Region) {

log.Infof("change region id{%v} to id{%v}", res.PrevRegion.Id, res.Region.Id)

d.SetRegion(res.Region)

metaStore := d.ctx.storeMeta

metaStore.Lock()

metaStore.regions[res.Region.Id] = res.Region

metaStore.regionRanges.Delete(&regionItem{res.PrevRegion})

metaStore.regionRanges.ReplaceOrInsert(&regionItem{res.Region})

metaStore.Unlock()

}

d.Send(d.ctx.trans, ready.Messages)

if len(ready.CommittedEntries) > 0 {

KVWB := new(engine_util.WriteBatch)

for _, entry := range ready.CommittedEntries {

KVWB = d.processCommittedEntries(&entry, KVWB)

if d.stopped {

return

}

}

lastEntry := ready.CommittedEntries[len(ready.CommittedEntries)-1]

d.peerStorage.applyState.AppliedIndex = lastEntry.Index

if err := KVWB.SetMeta(meta.ApplyStateKey(d.regionId), d.peerStorage.applyState); err != nil {

log.Panic(err)

}

KVWB.MustWriteToDB(d.ctx.engine.Kv)

}

if d.peerStorage.raftState.LastIndex < d.peerStorage.raftState.HardState.Commit || d.peerStorage.raftState.HardState.Commit < d.peerStorage.AppliedIndex() {

log.Fatalf("Node tag{%v} save ready state lastIndex{%v} commitIdx{%v} trunIdx{%v}, applyIndex{%v}", d.peerStorage.Tag, d.peerStorage.raftState.LastIndex, d.peerStorage.raftState.HardState.Commit,

d.peerStorage.truncatedIndex(), d.peerStorage.applyState.AppliedIndex)

}

log.Infof("Node tag{%v} save ready state lastIndex{%v} commitIdx{%v} trunIdx{%v}, applyIndex{%v}", d.peerStorage.Tag, d.peerStorage.raftState.LastIndex, d.peerStorage.raftState.HardState.Commit,

d.peerStorage.truncatedIndex(), d.peerStorage.applyState.AppliedIndex)

// if err := KVWB.SetMeta(meta.RegionStateKey(d.regionId), d.Region()); err != nil {

// log.Panic(err)

// }

d.RaftGroup.Advance(ready)

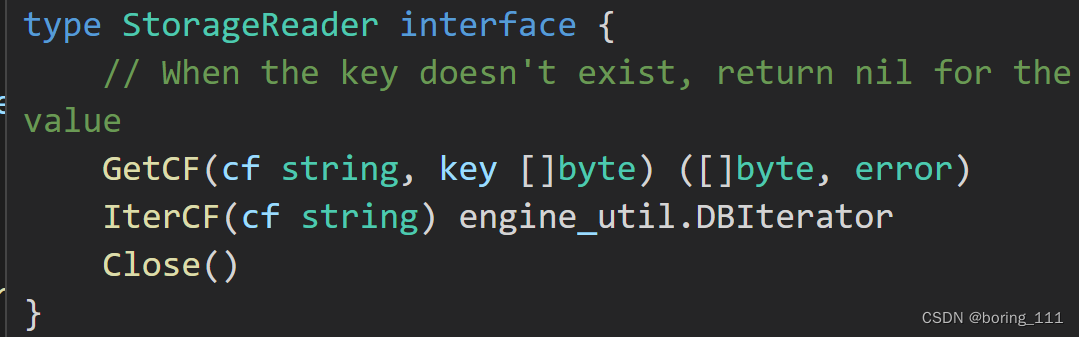

}读请求执行流程

A read request follows the same path as a write request. The only difference is that it is sent as a request of type raft_cmdpb.CmdType_Snap.

func (rs *RaftStorage) Reader(ctx *kvrpcpb.Context) (storage.StorageReader, error) {

header := &raft_cmdpb.RaftRequestHeader{

RegionId: ctx.RegionId,

Peer: ctx.Peer,

RegionEpoch: ctx.RegionEpoch,

Term: ctx.Term,

}

request := &raft_cmdpb.RaftCmdRequest{

Header: header,

Requests: []*raft_cmdpb.Request{{

CmdType: raft_cmdpb.CmdType_Snap,

Snap: &raft_cmdpb.SnapRequest{},

}},

}

cb := message.NewCallback()

if err := rs.raftRouter.SendRaftCommand(request, cb); err != nil {

return nil, err

}

resp := cb.WaitResp()

if err := rs.checkResponse(resp, 1); err != nil {

if cb.Txn != nil {

cb.Txn.Discard()

}

return nil, err

}

if cb.Txn == nil {

panic("can not found region snap")

}

return NewRegionReader(cb.Txn, *resp.Responses[0].GetSnap().Region), nil

}

This returns a reader.
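Reader() blocks on cb.WaitResp() until the apply side fills in the response. A minimal sketch of that hand-off, assuming the callback is backed by a channel that is closed once; the `callback`, `response` and `read` definitions here are hypothetical stand-ins, not the real message.Callback.

```go
package main

// response is a stand-in for raft_cmdpb.RaftCmdResponse.
type response struct {
	Value string
}

// callback carries the response from the goroutine that applies the command
// back to the goroutine that submitted it.
type callback struct {
	done chan struct{}
	resp *response
}

func newCallback() *callback {
	return &callback{done: make(chan struct{})}
}

// Done stores the response and wakes up the waiter. It must be called once.
func (cb *callback) Done(r *response) {
	cb.resp = r
	close(cb.done)
}

// WaitResp blocks until Done has been called, then returns the response.
func (cb *callback) WaitResp() *response {
	<-cb.done
	return cb.resp
}

// read mimics the shape of Reader(): submit a command together with a
// callback, then block until the other side answers.
func read(submit func(cb *callback)) *response {
	cb := newCallback()
	submit(cb)
	return cb.WaitResp()
}
```

Closing the channel (rather than sending on it) means the write to `resp` is visible to the waiter once the receive returns, and a late second waiter would not block either.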

Raft log GC flow

func (d *peerMsgHandler) HandleMsg(msg message.Msg) {

switch msg.Type {

case message.MsgTypeRaftMessage:

raftMsg := msg.Data.(*rspb.RaftMessage)

if err := d.onRaftMsg(raftMsg); err != nil {

log.Errorf("%s handle raft message error %v", d.Tag, err)

}

case message.MsgTypeRaftCmd:

raftCMD := msg.Data.(*message.MsgRaftCmd)

d.proposeRaftCommand(raftCMD.Request, raftCMD.Callback)

case message.MsgTypeTick:

d.onTick()

case message.MsgTypeSplitRegion:

split := msg.Data.(*message.MsgSplitRegion)

log.Infof("%s on split with %v", d.Tag, split.SplitKey)

d.onPrepareSplitRegion(split.RegionEpoch, split.SplitKey, split.Callback)

case message.MsgTypeRegionApproximateSize:

d.onApproximateRegionSize(msg.Data.(uint64))

case message.MsgTypeGcSnap:

gcSnap := msg.Data.(*message.MsgGCSnap)

d.onGCSnap(gcSnap.Snaps)

case message.MsgTypeStart:

d.startTicker()

}

}

Assume here that the message is a message.MsgTypeTick. It triggers onTick, which in turn calls onRaftGCLogTick.
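The ticker calls used below (tickClock, isOnTick, schedule) are not shown in this post. The following is only a guess at the general shape, assuming a logical clock plus one due time per tick kind; every type and field name is hypothetical.

```go
package main

type tickKind int

const (
	tickRaft tickKind = iota
	tickRaftLogGC
	numTicks
)

// ticker keeps a logical clock and, for each tick kind, the clock value at
// which that kind should fire next.
type ticker struct {
	now       int64
	due       [numTicks]int64
	intervals [numTicks]int64
}

func newTicker(raftEvery, gcEvery int64) *ticker {
	t := &ticker{}
	t.intervals[tickRaft] = raftEvery
	t.intervals[tickRaftLogGC] = gcEvery
	for k := tickKind(0); k < numTicks; k++ {
		t.schedule(k)
	}
	return t
}

// tickClock advances the logical clock by one base tick.
func (t *ticker) tickClock() { t.now++ }

// schedule arms kind k to fire one interval from now.
func (t *ticker) schedule(k tickKind) { t.due[k] = t.now + t.intervals[k] }

// isOnTick reports whether kind k is due at the current clock value.
func (t *ticker) isOnTick(k tickKind) bool { return t.now == t.due[k] }

// run simulates n base ticks and counts how often each kind fires,
// re-arming a kind whenever it fires (as onRaftGCLogTick does first thing).
func run(t *ticker, n int) (raftFires, gcFires int) {
	for i := 0; i < n; i++ {
		t.tickClock()
		if t.isOnTick(tickRaft) {
			t.schedule(tickRaft)
			raftFires++
		}
		if t.isOnTick(tickRaftLogGC) {
			t.schedule(tickRaftLogGC)
			gcFires++
		}
	}
	return raftFires, gcFires
}
```

Under this model, a single MsgTypeTick only advances the clock; the GC check runs on the ticks where its own schedule comes due.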

func (d *peerMsgHandler) onTick() {

if d.stopped {

return

}

d.ticker.tickClock()

if d.ticker.isOnTick(PeerTickRaft) {

d.onRaftBaseTick()

}

if d.ticker.isOnTick(PeerTickRaftLogGC) {

d.onRaftGCLogTick()

}

if d.ticker.isOnTick(PeerTickSchedulerHeartbeat) {

d.onSchedulerHeartbeatTick()

}

if d.ticker.isOnTick(PeerTickSplitRegionCheck) {

d.onSplitRegionCheckTick()

}

d.ctx.tickDriverSender <- d.regionId

}

func (d *peerMsgHandler) onRaftGCLogTick() {

d.ticker.schedule(PeerTickRaftLogGC)

if !d.IsLeader() {

return

}

appliedIdx := d.peerStorage.AppliedIndex()

firstIdx, _ := d.peerStorage.FirstIndex()

var compactIdx uint64

if appliedIdx > firstIdx && appliedIdx-firstIdx >= d.ctx.cfg.RaftLogGcCountLimit {

compactIdx = appliedIdx

} else {

return

}

y.Assert(compactIdx > 0)

compactIdx -= 1

if compactIdx < firstIdx {

// In case compact_idx == first_idx before subtraction.

return

}

term, err := d.RaftGroup.Raft.RaftLog.Term(compactIdx)

if err != nil {

log.Fatalf("appliedIdx: %d, firstIdx: %d, compactIdx: %d", appliedIdx, firstIdx, compactIdx)

panic(err)

}

// Create a compact log request and notify directly.

regionID := d.regionId

request := newCompactLogRequest(regionID, d.Meta, compactIdx, term)

d.proposeRaftCommand(request, nil)

}

func (d *peerMsgHandler) processAdminRequest(entry *eraftpb.Entry, request *raft_cmdpb.AdminRequest, KVwb *engine_util.WriteBatch) *engine_util.WriteBatch {

if request.CmdType == raft_cmdpb.AdminCmdType_CompactLog {

compactLog := request.CompactLog

log.Infof("admin request reach compactlog{%v} compactterm{%v}", compactLog.CompactIndex, compactLog.CompactTerm)

// d.peerStorage.applyState.AppliedIndex = compactLog.CompactIndex

if d.peerStorage.applyState.TruncatedState.Index > compactLog.CompactIndex {

log.Warningf("compact rpc delay")

return KVwb

}

d.peerStorage.applyState.TruncatedState.Index = compactLog.CompactIndex

d.peerStorage.applyState.TruncatedState.Term = compactLog.CompactTerm

// add

d.peerStorage.applyState.AppliedIndex = entry.Index

KVwb.SetMeta(meta.ApplyStateKey(d.regionId), d.peerStorage.applyState)

KVwb.WriteToDB(d.ctx.engine.Kv)

KVwb = &engine_util.WriteBatch{}

d.ScheduleCompactLog(compactLog.CompactIndex)

//TODO:? callback

}

return KVwb

}

func (d *peerMsgHandler) ScheduleCompactLog(truncatedIndex uint64) {

raftLogGCTask := &runner.RaftLogGCTask{

RaftEngine: d.ctx.engine.Raft,

RegionID: d.regionId,

StartIdx: d.LastCompactedIdx,

EndIdx: truncatedIndex + 1,

}

d.LastCompactedIdx = raftLogGCTask.EndIdx

d.ctx.raftLogGCTaskSender <- raftLogGCTask

}

func (r *raftLogGCTaskHandler) Handle(t worker.Task) {

logGcTask, ok := t.(*RaftLogGCTask)

if !ok {

log.Errorf("unsupported worker.Task: %+v", t)

return

}

log.Debugf("execute gc log. [regionId: %d, endIndex: %d]", logGcTask.RegionID, logGcTask.EndIdx)

collected, err := r.gcRaftLog(logGcTask.RaftEngine, logGcTask.RegionID, logGcTask.StartIdx, logGcTask.EndIdx)

if err != nil {

log.Errorf("failed to gc. [regionId: %d, collected: %d, err: %v]", logGcTask.RegionID, collected, err)

} else {

log.Debugf("collected log entries. [regionId: %d, entryCount: %d]", logGcTask.RegionID, collected)

}

r.reportCollected(collected)

}

func (w *Worker) Start(handler TaskHandler) {

w.wg.Add(1)

go func() {

defer w.wg.Done()

if s, ok := handler.(Starter); ok {

s.Start()

}

for {

Task := <-w.receiver

if _, ok := Task.(TaskStop); ok {

return

}

handler.Handle(Task)

}

}()

}

func (r *raftLogGCTaskHandler) gcRaftLog(raftDb *badger.DB, regionId, startIdx, endIdx uint64) (uint64, error) {

// Find the raft log idx range needed to be gc.

firstIdx := startIdx

if firstIdx == 0 {

firstIdx = endIdx

err := raftDb.View(func(txn *badger.Txn) error {

startKey := meta.RaftLogKey(regionId, 0)

ite := txn.NewIterator(badger.DefaultIteratorOptions)

defer ite.Close()

if ite.Seek(startKey); ite.Valid() {

var err error

if firstIdx, err = meta.RaftLogIndex(ite.Item().Key()); err != nil {

return err

}

}

return nil

})

if err != nil {

return 0, err

}

}

if firstIdx >= endIdx {

log.Infof("no need to gc, [regionId: %d]", regionId)

return 0, nil

}

raftWb := engine_util.WriteBatch{}

for idx := firstIdx; idx < endIdx; idx += 1 {

key := meta.RaftLogKey(regionId, idx)

raftWb.DeleteMeta(key)

}

if raftWb.Len() != 0 {

if err := raftWb.WriteToDB(raftDb); err != nil {

return 0, err

}

}

return endIdx - firstIdx, nil

}

Finally, Advance in rawnode.go updates the state kept inside raft, such as the applied index.
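The index arithmetic in onRaftGCLogTick and ScheduleCompactLog above can be checked in isolation. This is a small sketch that mirrors those two pieces as pure functions; `compactIndex` and `gcRange` are hypothetical helper names introduced only for illustration.

```go
package main

// compactIndex mirrors the decision in onRaftGCLogTick: compact only when at
// least gcCountLimit applied entries have piled up past firstIdx, and then
// compact up to appliedIdx-1. The second result is false when no GC is needed.
func compactIndex(appliedIdx, firstIdx, gcCountLimit uint64) (uint64, bool) {
	if appliedIdx <= firstIdx || appliedIdx-firstIdx < gcCountLimit {
		return 0, false
	}
	compactIdx := appliedIdx - 1
	if compactIdx < firstIdx {
		return 0, false
	}
	return compactIdx, true
}

// gcRange mirrors ScheduleCompactLog: the GC task deletes the half-open index
// range [lastCompactedIdx, truncatedIdx+1), so the truncated entry itself is
// removed as well.
func gcRange(lastCompactedIdx, truncatedIdx uint64) (start, end uint64) {
	return lastCompactedIdx, truncatedIdx + 1
}
```

For example, with appliedIdx 110, firstIdx 100 and a limit of 10, the compact index is 109, and if the last compaction stopped at 50 the task covers indexes 50 through 109 inclusive.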

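Snapshot generation flow

In the sendAppend function in raft.go, raftLog.storage.Snapshot() is called. It creates a RegionTaskGen task that generates the snapshot asynchronously, and sets snapState to Generating to indicate that a snapshot is being produced. This first call itself should return an error saying that the snapshot is temporarily unavailable.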
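The "ask now, collect on a later call" behavior described above can be sketched with a buffered channel and a non-blocking receive. This is a simplified model under stated assumptions: the `storage` type and its `generate` hook are hypothetical, and the retry counter of the real code is left out.

```go
package main

import "errors"

var errTemporarilyUnavailable = errors.New("snapshot is temporarily unavailable")

type snapshot struct{ Index uint64 }

// storage is a simplified stand-in for PeerStorage.
type storage struct {
	generating bool
	receiver   chan *snapshot
	// generate is a hypothetical hook that stands in for scheduling a
	// RegionTaskGen; the worker later sends the result on notifier.
	generate func(notifier chan<- *snapshot)
}

// Snapshot never blocks. It returns a finished snapshot if one is waiting,
// otherwise it makes sure a generation task is in flight and reports that
// the snapshot is temporarily unavailable.
func (s *storage) Snapshot() (*snapshot, error) {
	if s.generating {
		select {
		case snap := <-s.receiver:
			s.generating = false
			if snap != nil {
				return snap, nil
			}
			// nil means generation failed: fall through and schedule again.
		default:
			// Still generating; the caller should retry later.
			return nil, errTemporarilyUnavailable
		}
	}
	ch := make(chan *snapshot, 1) // capacity 1 so the worker never blocks
	s.generating = true
	s.receiver = ch
	s.generate(ch)
	return nil, errTemporarilyUnavailable
}
```

The caller (sendAppend in this flow) simply gives up on the snapshot for this round and tries again on a later call.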

func (ps *PeerStorage) Snapshot() (eraftpb.Snapshot, error) {

var snapshot eraftpb.Snapshot

if ps.snapState.StateType == snap.SnapState_Generating {

select {

case s := <-ps.snapState.Receiver:

if s != nil {

snapshot = *s

}

default:

return snapshot, raft.ErrSnapshotTemporarilyUnavailable

}

ps.snapState.StateType = snap.SnapState_Relax

if snapshot.GetMetadata() != nil {

ps.snapTriedCnt = 0

if ps.validateSnap(&snapshot) {

return snapshot, nil

}

} else {

log.Warnf("%s failed to try generating snapshot, times: %d", ps.Tag, ps.snapTriedCnt)

}

}

if ps.snapTriedCnt >= 5 {

err := errors.Errorf("failed to get snapshot after %d times", ps.snapTriedCnt)

ps.snapTriedCnt = 0

return snapshot, err

}

log.Infof("%s requesting snapshot", ps.Tag)

ps.snapTriedCnt++

ch := make(chan *eraftpb.Snapshot, 1)

ps.snapState = snap.SnapState{

StateType: snap.SnapState_Generating,

Receiver: ch,

}

// schedule snapshot generate task

ps.regionSched <- &runner.RegionTaskGen{

RegionId: ps.region.GetId(),

Notifier: ch,

}

return snapshot, raft.ErrSnapshotTemporarilyUnavailable

}

func (snapCtx *snapContext) handleGen(regionId uint64, notifier chan<- *eraftpb.Snapshot) {

snap, err := doSnapshot(snapCtx.engines, snapCtx.mgr, regionId)

if err != nil {

log.Errorf("failed to generate snapshot!!!, [regionId: %d, err : %v]", regionId, err)

notifier <- nil

} else {

notifier <- snap

}

}

func doSnapshot(engines *engine_util.Engines, mgr *snap.SnapManager, regionId uint64) (*eraftpb.Snapshot, error) {

log.Debugf("begin to generate a snapshot. [regionId: %d]", regionId)

txn := engines.Kv.NewTransaction(false)

index, term, err := getAppliedIdxTermForSnapshot(engines.Raft, txn, regionId)

if err != nil {

return nil, err

}

key := snap.SnapKey{RegionID: regionId, Index: index, Term: term}

mgr.Register(key, snap.SnapEntryGenerating)

defer mgr.Deregister(key, snap.SnapEntryGenerating)

regionState := new(rspb.RegionLocalState)

err = engine_util.GetMetaFromTxn(txn, meta.RegionStateKey(regionId), regionState)

if err != nil {

panic(err)

}

if regionState.GetState() != rspb.PeerState_Normal {

return nil, errors.Errorf("snap job %d seems stale, skip", regionId)

}

region := regionState.GetRegion()

confState := util.ConfStateFromRegion(region)

snapshot := &eraftpb.Snapshot{

Metadata: &eraftpb.SnapshotMetadata{

Index: key.Index,

Term: key.Term,

ConfState: &confState,

},

}

s, err := mgr.GetSnapshotForBuilding(key)

if err != nil {

return nil, err

}

// Set snapshot data

snapshotData := &rspb.RaftSnapshotData{Region: region}

snapshotStatics := snap.SnapStatistics{}

err = s.Build(txn, region, snapshotData, &snapshotStatics, mgr)

if err != nil {

return nil, err

}

snapshot.Data, err = snapshotData.Marshal()

return snapshot, err

}

Starting from GetSnapshotForBuilding, the call chain eventually reaches the method below, which creates the snapshot object.

func NewSnap(dir string, key SnapKey, sizeTrack *int64, isSending, toBuild bool,

deleter SnapshotDeleter) (*Snap, error) {

if !util.DirExists(dir) {

err := os.MkdirAll(dir, 0700)

if err != nil {

return nil, errors.WithStack(err)

}

}

var snapPrefix string

if isSending {

snapPrefix = snapGenPrefix

} else {

snapPrefix = snapRevPrefix

}

prefix := fmt.Sprintf("%s_%s", snapPrefix, key)

displayPath := getDisplayPath(dir, prefix)

cfFiles := make([]*CFFile, 0, len(engine_util.CFs))

for _, cf := range engine_util.CFs {

fileName := fmt.Sprintf("%s_%s%s", prefix, cf, sstFileSuffix)

path := filepath.Join(dir, fileName)

tmpPath := path + tmpFileSuffix

cfFile := &CFFile{

CF: cf,

Path: path,

TmpPath: tmpPath,

}

cfFiles = append(cfFiles, cfFile)

}

metaFileName := fmt.Sprintf("%s%s", prefix, metaFileSuffix)

metaFilePath := filepath.Join(dir, metaFileName)

metaTmpPath := metaFilePath + tmpFileSuffix

metaFile := &MetaFile{

Path: metaFilePath,

TmpPath: metaTmpPath,

}

s := &Snap{

key: key,

displayPath: displayPath,

CFFiles: cfFiles,

MetaFile: metaFile,

SizeTrack: sizeTrack,

}

// load snapshot meta if meta file exists.

if util.FileExists(metaFile.Path) {

err := s.loadSnapMeta()

if err != nil {

if !toBuild {

return nil, err

}

log.Warnf("failed to load existent snapshot meta when try to build %s: %v", s.Path(), err)

if !retryDeleteSnapshot(deleter, key, s) {

log.Warnf("failed to delete snapshot %s because it's already registered elsewhere", s.Path())

return nil, err

}

}

}

return s, nil

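}

The next time Raft calls Snapshot, it checks whether snapshot generation has finished. If it has, Raft should send the snapshot message to the other peers, while the actual sending and receiving of snapshot data is handled by snap_runner.go.

func (r *snapRunner) sendSnap(addr string, msg *raft_serverpb.RaftMessage) error {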

start := time.Now()

msgSnap := msg.GetMessage().GetSnapshot()

snapKey, err := snap.SnapKeyFromSnap(msgSnap)

if err != nil {

return err

}

r.snapManager.Register(snapKey, snap.SnapEntrySending)

defer r.snapManager.Deregister(snapKey, snap.SnapEntrySending)

snap, err := r.snapManager.GetSnapshotForSending(snapKey)

if err != nil {

return err

}

if !snap.Exists() {

return errors.Errorf("missing snap file: %v", snap.Path())

}

cc, err := grpc.Dial(addr, grpc.WithInsecure(),

grpc.WithInitialWindowSize(2*1024*1024),

grpc.WithKeepaliveParams(keepalive.ClientParameters{

Time: 3 * time.Second,

Timeout: 60 * time.Second,

}))

if err != nil {

return err

}

client := tinykvpb.NewTinyKvClient(cc)

stream, err := client.Snapshot(context.TODO())

if err != nil {

return err

}

err = stream.Send(&raft_serverpb.SnapshotChunk{Message: msg})

if err != nil {

return err

}

buf := make([]byte, snapChunkLen)

for remain := snap.TotalSize(); remain > 0; remain -= uint64(len(buf)) {

if remain < uint64(len(buf)) {

buf = buf[:remain]

}

_, err := io.ReadFull(snap, buf)

if err != nil {

return errors.Errorf("failed to read snapshot chunk: %v", err)

}

err = stream.Send(&raft_serverpb.SnapshotChunk{Data: buf})

if err != nil {

return err

}

}

_, err = stream.CloseAndRecv()

if err != nil {

return err

}

log.Infof("sent snapshot. regionID: %v, snapKey: %v, size: %v, duration: %s", snapKey.RegionID, snapKey, snap.TotalSize(), time.Since(start))

return nil

}
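The chunking loop in sendSnap can be tried without gRPC. Here is a minimal sketch of the same read-and-send pattern over any io.Reader; `sendInChunks` and `chunkSizes` are hypothetical helpers, and the guard against a non-positive chunk length is an addition of this sketch.

```go
package main

import (
	"errors"
	"io"
	"strings"
)

// sendInChunks reads exactly total bytes from r and passes them to send in
// pieces of at most chunkLen bytes. The buffer is reused between calls, so
// send must not keep a reference to it.
func sendInChunks(r io.Reader, total, chunkLen int, send func([]byte) error) error {
	if chunkLen <= 0 {
		return errors.New("chunkLen must be positive")
	}
	buf := make([]byte, chunkLen)
	for remain := total; remain > 0; remain -= len(buf) {
		if remain < len(buf) {
			buf = buf[:remain] // last chunk: shrink to what is left
		}
		if _, err := io.ReadFull(r, buf); err != nil {
			return err
		}
		if err := send(buf); err != nil {
			return err
		}
	}
	return nil
}

// chunkSizes runs sendInChunks over a string and records each chunk's size.
func chunkSizes(data string, chunkLen int) ([]int, error) {
	var sizes []int
	err := sendInChunks(strings.NewReader(data), len(data), chunkLen, func(b []byte) error {
		sizes = append(sizes, len(b))
		return nil
	})
	return sizes, err
}
```

Ten bytes with a chunk length of 4 come out as chunks of 4, 4 and 2 bytes. Because the final chunk shrinks the buffer to the remaining length, the subtraction in the loop header lands exactly on zero, which matters in the original where the counter is unsigned.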
