Preface

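Meetings and partings pass in haste, like a lone goose at the edge of the clouds or duckweed drifting on the water. Having said goodbye to HBase, whom he had met by chance, Spark once again walked the wandering road alone. A lonely person grows used to solitude but never to parting; though they had exchanged only a few pleasantries, the farewell still weighed on his heart. Spark saw the red sun already high in the sky and the breeze swaying the slender willows by the roadside, and with no other choice set out on the journey that lay before him.

Beyond the setting sun, beside the ancient road, a tavern stood at the far end of a stone arch bridge, built against the rock and nestled by the water. What surprised him was not the elegant tavern but a figure that looked so familiar. The blurred outline stirred memories of childhood, and his heart raced: could it really be him? His feet outran his thoughts, faster and faster. Yes, it was him after all: his childhood playmate and lifelong friend, Kafka.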

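I. Installing Kafka

1. Download and extract the installation package

Installing a Kafka cluster is straightforward. The Scala version in the package name must match the Scala version you use; the author chose kafka_2.11-2.0.0.tgz, that is, Kafka 2.0.0 built for Scala 2.11 (the package has already been uploaded).

After downloading, upload the package to the /export/software directory on the hadoop01 node and extract it with the following command.

The code is as follows (example):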

tar -zxvf kafka_2.11-2.0.0.tgz -C /export/servers/

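2. Edit the configuration file

Go to the config directory under the Kafka folder and edit server.properties. The file after editing is shown below.

(Only a few settings change, but double-check your own paths.)

The code is as follows (example):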

broker.id=0

port=9092

# The number of threads that the server uses for receiving requests from the network and sending responses to the network

num.network.threads=3

# The number of threads that the server uses for processing requests, which may include disk I/O

num.io.threads=8

# The send buffer (SO_SNDBUF) used by the socket server

socket.send.buffer.bytes=102400

# The receive buffer (SO_RCVBUF) used by the socket server

socket.receive.buffer.bytes=102400

# The maximum size of a request that the socket server will accept (protection against OOM)

socket.request.max.bytes=104857600

############################# Log Basics #############################

# A comma separated list of directories under which to store log files

log.dirs=/root/export/data/kafka/

# The default number of log partitions per topic. More partitions allow greater

# parallelism for consumption, but this will also result in more files across

# the brokers.

num.partitions=2

# The number of threads per data directory to be used for log recovery at startup and flushing at shutdown.

# This value is recommended to be increased for installations with data dirs located in RAID array.

num.recovery.threads.per.data.dir=1

# For anything other than development testing, a value greater than 1 (such as 3) is recommended to ensure availability.

offsets.topic.replication.factor=1

transaction.state.log.replication.factor=1

transaction.state.log.min.isr=1

# The minimum age of a log file to be eligible for deletion due to age

log.retention.hours=1

# A size-based retention policy for logs. Segments are pruned from the log unless the remaining

# segments drop below log.retention.bytes. Functions independently of log.retention.hours.

#log.retention.bytes=1073741824

# The maximum size of a log segment file. When this size is reached a new log segment will be created.

log.segment.bytes=1073741824

# The interval at which log segments are checked to see if they can be deleted according

# to the retention policies

log.retention.check.interval.ms=300000

# Enable the log cleaner

log.cleaner.enable=true

############################# Zookeeper #############################

# Zookeeper connection string (see zookeeper docs for details).

# This is a comma separated host:port pairs, each corresponding to a zk

# server. e.g. "127.0.0.1:3000,127.0.0.1:3001,127.0.0.1:3002".

# You can also append an optional chroot string to the urls to specify the

# root directory for all kafka znodes.

zookeeper.connect=hadoop01:2181,hadoop02:2181,hadoop03:2181

# Timeout in ms for connecting to zookeeper

zookeeper.connection.timeout.ms=6000

# However, in production environments the default value of 3 seconds is more suitable as this will help to avoid unnecessary, and potentially expensive, rebalances during application startup.

group.initial.rebalance.delay.ms=0

# Allow topics to be deleted

delete.topic.enable=true

# Hostname or IP address of this node

host.name=hadoop01

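(1) broker.id: the unique, permanent ID of each node in the cluster. The value must be greater than or equal to 0. This cluster uses:

hadoop01: 0

hadoop02: 1

hadoop03: 2

(2) log.dirs: the directories where Kafka stores its log data (the message log segments). Several directories can be given, separated by commas.

(3) zookeeper.connect: the host (or IP address) and port of each node in the ZooKeeper cluster.

(4) delete.topic.enable: whether topics may be deleted. Setting it to true allows deletion.

(5) host.name: the hostname or IP address of this node. If it is set incorrectly, clients throw an exception with the message Producer connection to localhost:9092 unsuccessful.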
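Before starting a broker it can help to read these settings back from the file. The helper below is a minimal sketch: `get_prop` is a hypothetical name, and the path in the usage comment assumes the install location used earlier in this article.

```shell
# Print the value of one key from a Java-style .properties file.
# Commented-out lines (starting with '#') never match; if the key
# appears more than once, the last occurrence wins.
get_prop() {
  local key="$1" file="$2"
  awk -F= -v k="$key" '
    $1 == k { sub(/^[^=]*=/, ""); val = $0; found = 1 }
    END     { if (found) print val }
  ' "$file"
}

# Hypothetical usage on hadoop01:
# get_prop broker.id /export/servers/kafka_2.11-2.0.0/config/server.properties
# get_prop host.name /export/servers/kafka_2.11-2.0.0/config/server.properties
```

Comparing the key exactly, rather than with a regular expression, avoids treating the dot in `broker.id` as a wildcard.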

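3. Add environment variables

Open /etc/profile with vim and add the Kafka environment variables at the end of the file.

The code is as follows (example):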

export KAFKA_HOME=/export/servers/kafka_2.11-2.0.0

export PATH=$PATH:$KAFKA_HOME/bin
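If the file is edited by a script instead of by hand, appending the two lines only when they are missing keeps repeated runs from duplicating them. This is a sketch under that assumption; `add_kafka_env` is a hypothetical helper, not part of Kafka.

```shell
# Append the Kafka variables to a profile file unless KAFKA_HOME is already exported there.
add_kafka_env() {
  local profile="$1" kafka_home="$2"
  if ! grep -q '^export KAFKA_HOME=' "$profile"; then
    {
      echo "export KAFKA_HOME=${kafka_home}"
      # Single quotes keep $PATH and $KAFKA_HOME literal so they expand at login time.
      echo 'export PATH=$PATH:$KAFKA_HOME/bin'
    } >> "$profile"
  fi
}

# Hypothetical usage (needs root to write /etc/profile):
# add_kafka_env /etc/profile /export/servers/kafka_2.11-2.0.0
```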

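4. Distribute the files

After editing the configuration, copy the local Kafka installation directory /export/servers/kafka_2.11-2.0.0 and the environment file /etc/profile to the hadoop02 and hadoop03 machines. Run the commands below from /export/servers, because the directory is given as a relative path.

The code is as follows (example):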

scp -r kafka_2.11-2.0.0/ hadoop02:/export/servers/

scp -r kafka_2.11-2.0.0/ hadoop03:/export/servers/

scp /etc/profile hadoop02:/etc/profile

scp /etc/profile hadoop03:/etc/profile
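The four copy commands follow the same pattern for each target host, so they can be generated in a loop. The sketch below only prints the commands as a dry run; `distribute_cmds` is a hypothetical name, and it uses the absolute install path so that it does not depend on the current directory.

```shell
# Print the scp commands needed for each target host.
# Nothing is copied here; pipe the output to `sh` to actually run the commands.
distribute_cmds() {
  local host
  for host in "$@"; do
    echo "scp -r /export/servers/kafka_2.11-2.0.0/ ${host}:/export/servers/"
    echo "scp /etc/profile ${host}:/etc/profile"
  done
}

distribute_cmds hadoop02 hadoop03
```

Printing first makes it easy to review the host names and paths before anything is overwritten on the other nodes.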

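After distribution, change the broker.id and host.name parameters on each node to match that node, then run source /etc/profile so the environment variables take effect. At this point the Kafka cluster is configured.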
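The per-node change can also be scripted with sed instead of an editor. This is a minimal sketch, assuming both keys already exist in the file as in the configuration shown earlier; `set_broker_identity` is a hypothetical helper name.

```shell
# Rewrite broker.id and host.name in a server.properties file.
# A backup of the original is kept next to it with a .bak suffix.
set_broker_identity() {
  local file="$1" id="$2" host="$3"
  sed -i.bak \
    -e "s/^broker\.id=.*/broker.id=${id}/" \
    -e "s/^host\.name=.*/host.name=${host}/" \
    "$file"
}

# Hypothetical usage on hadoop02:
# set_broker_identity /export/servers/kafka_2.11-2.0.0/config/server.properties 1 hadoop02
```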

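II. Starting the Kafka Service

Before starting Kafka, start the ZooKeeper cluster.

Once ZooKeeper is running, start the Kafka service on each node by running the bin/kafka-server-start.sh script from the Kafka root directory.

The code is as follows (example):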

bin/kafka-server-start.sh config/server.properties

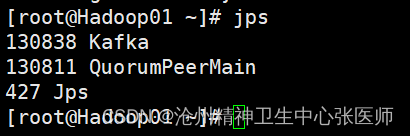

The broker runs in the foreground, so this terminal must stay open. Open (clone) a new session and use jps to check the process.
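If keeping a terminal open is inconvenient, the start script also accepts a `-daemon` option that runs the broker in the background. The check below is a small sketch of how the jps output could be tested in a script; `kafka_in_jps` is a hypothetical name, and it assumes the broker appears in jps under the class name `Kafka`.

```shell
# Read `jps` output on stdin and succeed only if one line has "Kafka" as the class name.
kafka_in_jps() {
  awk '$2 == "Kafka" { found = 1 } END { exit !found }'
}

# Hypothetical usage on a broker node, from the Kafka root directory:
# bin/kafka-server-start.sh -daemon config/server.properties
# jps | kafka_in_jps && echo "Kafka is running"
```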

III. Kafka Producer and Consumer Example (Command Line)

1. Create a topic named itcasttopic

The code is as follows (example):

kafka-topics.sh --create --topic itcasttopic --partitions 3 --replication-factor 2 --zookeeper hadoop01:2181,hadoop02:2181,hadoop03:2181
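The same ZooKeeper and broker address lists recur in the commands of this section, so it may be convenient to build them once. The sketch below assumes the three host names and the ports used in this article; `join_hosts`, `ZK`, and `BROKERS` are hypothetical names.

```shell
# Join host names into a comma-separated host:port list.
# The first argument is the port; the remaining arguments are hosts.
join_hosts() {
  local port="$1"; shift
  local out="" host
  for host in "$@"; do
    out="${out:+${out},}${host}:${port}"
  done
  echo "$out"
}

ZK=$(join_hosts 2181 hadoop01 hadoop02 hadoop03)
BROKERS=$(join_hosts 9092 hadoop01 hadoop02 hadoop03)

# Hypothetical usage: inspect the partitions and replicas of the new topic.
# kafka-topics.sh --describe --zookeeper "$ZK" --topic itcasttopic
```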

2. Use hadoop01 as the producer

The code is as follows (example):

kafka-console-producer.sh --broker-list hadoop01:9092,hadoop02:9092,hadoop03:9092 --topic itcasttopic

3. Use hadoop02 as the consumer

The code is as follows (example):

kafka-console-consumer.sh --from-beginning --topic itcasttopic --bootstrap-server hadoop01:9092,hadoop02:9092,hadoop03:9092

4. List all topics with --list

The code is as follows (example):

kafka-topics.sh --list --zookeeper hadoop01:2181,hadoop02:2181,hadoop03:2181

5. Delete the topic

The code is as follows (example):

kafka-topics.sh --delete --zookeeper hadoop01:2181,hadoop02:2181,hadoop03:2181 --topic itcasttopic

6. Stop Kafka

The code is as follows (example):

bin/kafka-server-stop.sh

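Summary

Like sweet rain after a long drought, like meeting an old friend in a foreign land. On his rootless journey Spark had found his old friend. He walked up behind Kafka, started to speak and stopped, for a moment unable to find words for the excitement he felt. So he simply asked the tavern for a bowl and a pair of chopsticks, and the two began to tell each other everything that had happened since they last met.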
