import numpy as np
import torch
import torch.nn as nn
import matplotlib.pyplot as plt
from sklearn import datasets
from sklearn.model_selection import train_test_split

# Build the neural network (two hidden layers)
class NET(nn.Module):
    def __init__(self, n_feature, n_hidden1, n_hidden2, n_output):
        super(NET, self).__init__()
        self.hidden1 = nn.Linear(n_feature, n_hidden1)
        self.relu1 = nn.ReLU()
        self.hidden2 = nn.Linear(n_hidden1, n_hidden2)
        self.relu2 = nn.ReLU()
        self.out = nn.Linear(n_hidden2, n_output)
        self.softmax = nn.Softmax(dim=1)

    def forward(self, x):
        # Return raw logits; CrossEntropyLoss applies log-softmax internally
        hidden1 = self.hidden1(x)
        relu1 = self.relu1(hidden1)
        hidden2 = self.hidden2(relu1)
        relu2 = self.relu2(hidden2)
        out = self.out(relu2)
        return out

    def predict(self, x):
        # Convert the logits into class probabilities
        y_pred = self.forward(x)
        y_predict = self.softmax(y_pred)
        return y_predict

def main():
    # Split the data into training, validation, and test sets
    dataset = datasets.load_iris()
    x_train, x_test, y_train, y_test = train_test_split(dataset.data, dataset.target, test_size=0.2, random_state=22)
    x_train, x_val, y_train, y_val = train_test_split(x_train, y_train, test_size=0.2, random_state=22)
    print(x_train)
    print(y_train)
    print(y_train.shape[0])

    # Convert the NumPy arrays to tensors (float features, integer class labels)
    x_train = torch.FloatTensor(x_train)
    y_train = torch.LongTensor(y_train)
    x_val = torch.FloatTensor(x_val)
    y_val = torch.LongTensor(y_val)
    x_test = torch.FloatTensor(x_test)
    y_test = torch.LongTensor(y_test)

    # Number of training samples
    y_train_size = y_train.size(0)
    print(y_train_size)

    # Define the network structure, the optimizer, and the loss function
    model = NET(n_feature=4, n_hidden1=20, n_hidden2=20, n_output=3)
    optimizer = torch.optim.SGD(model.parameters(), lr=0.02)
    loss_func = torch.nn.CrossEntropyLoss()

    costs = []
    val_loss_list = []
    train_acc_list = []
    val_acc_list = []
    num_epoch = 2000

    # Train the network
    for epoch in range(num_epoch):
        cost = 0
        out = model.forward(x_train)
        loss = loss_func(out, y_train)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        cost = cost + loss.item()
        # CrossEntropyLoss already averages over the batch by default,
        # so this division only rescales the plotted curve
        costs.append(cost / y_train_size)
        print(f"Epoch {epoch}, train loss: {loss.item()}", end=' ')

        # Validation loss (no gradients needed)
        cost = 0
        with torch.no_grad():
            out = model.forward(x_val)
            loss = loss_func(out, y_val)
            cost = cost + loss.item()
        val_loss_list.append(cost / y_val.size(0))
        print(f"val loss:{loss.item()}", end=' ')

        # Training accuracy for this epoch
        y_train_pred = model.predict(x_train)
        prediction = torch.max(y_train_pred, 1)[1]  # [1] holds the argmax indices; [0] holds the max values
        y_train_pred = prediction.data.numpy()
        y_train1 = y_train.data.numpy()
        acc = float(np.sum(y_train_pred == y_train1)) / y_train1.shape[0]
        train_acc_list.append(acc)
        print(f"train acc:{acc*100:.4f}%", end=' ')

        # Validation accuracy for this epoch
        y_val_pred = model.predict(x_val)
        prediction = torch.max(y_val_pred, 1)[1]  # [1] holds the argmax indices; [0] holds the max values
        y_val_pred = prediction.data.numpy()
        y_val1 = y_val.data.numpy()
        acc = float(np.sum(y_val_pred == y_val1)) / y_val1.shape[0]
        val_acc_list.append(acc)
        print(f"val acc:{acc*100:.4f}%")

    fig, ax = plt.subplots(figsize=(6, 4))
    ax.plot(np.arange(num_epoch), costs, label='train loss', color='r')
    ax.plot(np.arange(num_epoch), val_loss_list, label='val loss', color='b')
    ax.set_xlabel('iterations')
    ax.set_ylabel('losses', rotation=0)
    plt.legend()
    plt.show()

    fig, ax = plt.subplots(figsize=(6, 4))
    ax.plot(np.arange(num_epoch), train_acc_list, label='train acc', color='r')
    ax.plot(np.arange(num_epoch), val_acc_list, label='val acc', color='b')
    ax.set_xlabel('iterations')
    ax.set_ylabel('accuracy', rotation=0)
    plt.legend()
    plt.show()

# 测试训练集准确率

y_train_pred = model.predict(x_train)

prediction = torch.max(y_train_pred, 1)[1] # 返回index 0返回原值

y_train_pred = prediction.data.numpy()

y_train1 = y_train.data.numpy()

train_acc = float(np.sum(y_train_pred == y_train1)) / y_train1.shape[0]

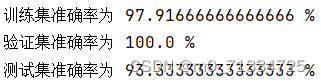

print("训练集准确率为", train_acc * 100, "%")

# 测试验证集准确率

y_val_pred = model.predict(x_val)

prediction = torch.max(y_val_pred, 1)[1] # 返回index 0返回原值

y_val_pred = prediction.data.numpy()

y_val1 = y_val.data.numpy()

val_acc = float(np.sum(y_val_pred == y_val1)) / y_val1.shape[0]

print("验证集准确率为", val_acc * 100, "%")

# 测试测试集准确率

y_test_pred = model.predict(x_test)

prediction = torch.max(y_test_pred, 1)[1] # 返回index 0返回原值

y_test_pred = prediction.data.numpy()

y_test1 = y_test.data.numpy()

test_acc = float(np.sum(y_test_pred == y_test1)) / y_test1.shape[0]

print("测试集准确率为", test_acc * 100, "%")

x_data1, x_data2, y_data1, y_data2 = train_test_split(x_test, y_test, test_size=0.01, random_state=22)

print(len(x_data2))

y_pred=model.predict(x_data2)

prediction = torch.max(y_pred, 1)[1] # 返回index 0返回原值

print(f"prediction:{prediction}")

print(f"true label:{y_data2}")

if __name__=='__main__':

main()

损失函数:

准确率:
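Both quantities plotted by the script follow from the raw logits that `forward` returns. `nn.CrossEntropyLoss` takes those logits directly (it applies log-softmax internally and, by default, averages over the batch), and the predicted class is the argmax, which is the same whether it is taken over the logits or over the softmax probabilities from `predict`. The following is a minimal pure-Python sketch of that arithmetic, not PyTorch's implementation; the helper names `softmax`, `cross_entropy`, `argmax` and the sample logits are illustrative assumptions that do not appear in the original code.

```python
import math


def softmax(logits):
    """Numerically stable softmax over one row of raw scores."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(batch_logits, targets):
    """Mean negative log-probability of the target class, computed from logits."""
    losses = [-math.log(softmax(row)[t]) for row, t in zip(batch_logits, targets)]
    return sum(losses) / len(losses)


def argmax(values):
    """Index of the largest entry."""
    return max(range(len(values)), key=lambda i: values[i])


# Hypothetical logits for two samples and three classes
batch_logits = [[2.0, 0.5, -1.0], [0.1, 0.2, 3.0]]
targets = [0, 2]

probs = [softmax(row) for row in batch_logits]
pred_from_logits = [argmax(row) for row in batch_logits]
pred_from_probs = [argmax(row) for row in probs]
loss = cross_entropy(batch_logits, targets)

print(pred_from_logits, pred_from_probs)
print(loss)
```

Because softmax preserves the ordering of the scores, the two prediction lists agree, so the softmax step in `predict` matters only when the probabilities themselves are needed. Since the loss here is already a per-sample mean, dividing it again by the number of samples changes only the scale of the plotted curve.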
