1. Pod

1. Pod scheduling

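[root@master test]# kubectl taint node node1 a=b:NoSchedule
[root@master test]# kubectl taint node node2 a=c:NoSchedule # NoSchedule: new pods without a matching toleration are not scheduled onto these nodes

# Add a toleration so the pods can be scheduled onto the tainted nodes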

apiVersion: apps/v1
kind: Deployment
metadata:
  name: d1
  namespace: test
spec:
  replicas: 3
  selector:
    matchLabels:
      test1: d1
  template:
    metadata:
      labels:
        test1: d1
    spec:
      containers:
      - name: nginx
        image: docker.io/library/nginx:1.9.1
        imagePullPolicy: IfNotPresent
      tolerations:
      - key: a
        operator: Exists # matches taint key a with any value; with Equal, the key and the value must both match
        effect: NoSchedule

[root@master test]# kubectl get pod -n test -o wide
NAME                  READY   STATUS    RESTARTS   AGE   IP               NODE    NOMINATED NODE   READINESS GATES
d1-868fc47c96-bbhcm   1/1     Running   0          87s   10.244.166.141   node1   <none>           <none>
d1-868fc47c96-cqhxz   1/1     Running   0          87s   10.244.104.16    node2   <none>           <none>
d1-868fc47c96-qrfs6   1/1     Running   0          87s   10.244.166.140   node1   <none>           <none>
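To make the Exists/Equal difference concrete, here is a small Python model of how a toleration is matched against a taint. It is a sketch that follows the matching rules described in the Kubernetes taints and tolerations documentation, not the scheduler's actual code; the `Taint`, `Toleration`, `tolerates` and `schedulable` names are made up for illustration, and only NoSchedule and NoExecute are treated as blocking effects.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Taint:
    key: str
    value: str
    effect: str  # NoSchedule, PreferNoSchedule or NoExecute


@dataclass
class Toleration:
    key: str = ""            # an empty key (with Exists) matches every taint key
    operator: str = "Equal"  # Kubernetes defaults the operator to Equal
    value: str = ""
    effect: str = ""         # an empty effect matches every effect


def tolerates(tol: Toleration, taint: Taint) -> bool:
    """Return True if one toleration matches one taint."""
    if tol.effect and tol.effect != taint.effect:
        return False
    if tol.key and tol.key != taint.key:
        return False
    if tol.operator == "Exists":
        return True  # the taint's value is ignored
    if tol.operator in ("", "Equal"):
        return tol.value == taint.value  # key and value must both match
    return False


def schedulable(tols: list[Toleration], taints: list[Taint]) -> bool:
    """A node is usable only if every hard (NoSchedule/NoExecute) taint is tolerated."""
    hard = [t for t in taints if t.effect in ("NoSchedule", "NoExecute")]
    return all(any(tolerates(tol, taint) for tol in tols) for taint in hard)


node1 = [Taint("a", "b", "NoSchedule")]
node2 = [Taint("a", "c", "NoSchedule")]
exists = [Toleration(key="a", operator="Exists", effect="NoSchedule")]
equal_b = [Toleration(key="a", operator="Equal", value="b", effect="NoSchedule")]

print(schedulable(exists, node1), schedulable(exists, node2))    # True True
print(schedulable(equal_b, node1), schedulable(equal_b, node2))  # True False
```

With `Exists`, the Deployment above may land on both node1 and node2, which matches the `-o wide` output; an `Equal` toleration with `value: b` would only admit node1, and a pod with no toleration would fit on neither.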

2. Deployment updates

# Control the pace of the rolling update with the strategy parameters

[root@master test]# cat deploy.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: d-test1
  namespace: test
spec:
  replicas: 3
  selector:
    matchLabels:
      d1: test1
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 0 # no pod may be unavailable during the update; with 1, only n-1 pods are guaranteed available and an old pod can be deleted while its replacement is being created
      maxSurge: 1       # at most 1 pod above the replica count; a new pod is created and must be ready before an old pod is deleted
  template:
    metadata:
      labels:
        d1: test1
    spec:
      containers:
      - name: nginx-test
        image: docker.io/library/nginx:1.9.1
        imagePullPolicy: IfNotPresent

# Update the container image
# New pods are added first, then the old pods are deleted

[root@master test]# kubectl get pod -n test -w
NAME                       READY   STATUS              RESTARTS   AGE
d-test1-756bff9b87-5p9kp   1/1     Running             0          5m50s
d-test1-756bff9b87-b47sp   1/1     Running             0          2m2s
d-test1-756bff9b87-vt2ts   1/1     Running             0          2m2s
d-test1-7b47bbf6bf-hphs7   0/1     Pending             0          0s
d-test1-7b47bbf6bf-hphs7   0/1     Pending             0          0s
d-test1-7b47bbf6bf-hphs7   0/1     ContainerCreating   0          0s
d-test1-7b47bbf6bf-hphs7   0/1     ContainerCreating   0          1s
d-test1-7b47bbf6bf-hphs7   1/1     Running             0          2s
d-test1-756bff9b87-b47sp   1/1     Terminating         0          2m14s
d-test1-7b47bbf6bf-m5rcv   0/1     Pending             0          0s
d-test1-7b47bbf6bf-m5rcv   0/1     Pending             0          0s
d-test1-7b47bbf6bf-m5rcv   0/1     ContainerCreating   0          0s
d-test1-756bff9b87-b47sp   1/1     Terminating         0          2m15s
d-test1-7b47bbf6bf-m5rcv   0/1     ContainerCreating   0          1s
d-test1-756bff9b87-b47sp   0/1     Terminating         0          2m15s
d-test1-756bff9b87-b47sp   0/1     Terminating         0          2m15s
d-test1-756bff9b87-b47sp   0/1     Terminating         0          2m15s
d-test1-7b47bbf6bf-m5rcv   1/1     Running             0          2s
d-test1-756bff9b87-vt2ts   1/1     Terminating         0          2m16s
d-test1-7b47bbf6bf-6h84z   0/1     Pending             0          0s
d-test1-7b47bbf6bf-6h84z   0/1     Pending             0          0s
d-test1-7b47bbf6bf-6h84z   0/1     ContainerCreating   0          0s
d-test1-756bff9b87-vt2ts   1/1     Terminating         0          2m16s
d-test1-756bff9b87-vt2ts   0/1     Terminating         0          2m17s
d-test1-756bff9b87-vt2ts   0/1     Terminating         0          2m17s
d-test1-756bff9b87-vt2ts   0/1     Terminating         0          2m17s
d-test1-7b47bbf6bf-6h84z   0/1     ContainerCreating   0          1s
d-test1-7b47bbf6bf-6h84z   1/1     Running             0          2s
d-test1-756bff9b87-5p9kp   1/1     Terminating         0          6m6s
d-test1-756bff9b87-5p9kp   1/1     Terminating         0          6m8s
d-test1-756bff9b87-5p9kp   0/1     Terminating         0          6m8s
d-test1-756bff9b87-5p9kp   0/1     Terminating         0          6m8s
d-test1-756bff9b87-5p9kp   0/1     Terminating         0          6m8s
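The watch output follows the create-one, delete-one pattern that `maxSurge: 1` / `maxUnavailable: 0` allow. The Python sketch below is a simplified model of that arithmetic, not the Deployment controller itself: it assumes every new pod becomes ready immediately, and the function names are hypothetical. Percentages are resolved as the Deployment docs describe (maxSurge rounds up, maxUnavailable rounds down), and if both resolve to 0 the model falls back to maxUnavailable = 1, as the controller does.

```python
import math


def resolve(value, replicas: int, round_up: bool) -> int:
    """Turn an int or a percentage string such as "25%" into a pod count."""
    if isinstance(value, str) and value.endswith("%"):
        raw = replicas * int(value[:-1]) / 100
        return math.ceil(raw) if round_up else math.floor(raw)
    return int(value)


def rollout_bounds(replicas, max_surge, max_unavailable):
    """Return (max pods in total, min pods that must stay available)."""
    surge = resolve(max_surge, replicas, round_up=True)
    unavailable = resolve(max_unavailable, replicas, round_up=False)
    if surge == 0 and unavailable == 0:
        unavailable = 1  # both zero could never make progress
    return replicas + surge, replicas - unavailable


def simulate_rollout(replicas, max_surge, max_unavailable):
    """List the create/delete steps, assuming new pods are ready at once."""
    max_total, min_available = rollout_bounds(replicas, max_surge, max_unavailable)
    old, new, events = replicas, 0, []
    while old > 0 or new < replicas:
        # Scale the new ReplicaSet up while staying under the surge ceiling.
        while new < replicas and old + new < max_total:
            new += 1
            events.append("create")
        # Scale the old ReplicaSet down while enough pods stay available.
        while old > 0 and old + new - 1 >= min_available:
            old -= 1
            events.append("delete")
    return events


print(rollout_bounds(3, "25%", "25%"))  # (4, 3)
print(simulate_rollout(3, 1, 0))  # ['create', 'delete', 'create', 'delete', 'create', 'delete']
print(simulate_rollout(3, 0, 1))  # ['delete', 'create', 'delete', 'create', 'delete', 'create']
```

The first run mirrors the watch output above; the second shows the `maxUnavailable: 1` case from the YAML comment, where an old pod goes away before its replacement exists.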

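3. HPA controller

# Automatically scale out when average CPU utilization reaches 20% of the CPU request

# Manifest for metrics-server, which provides the resource metrics that the HPA reads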

[root@master hpa]# cat components.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
    rbac.authorization.k8s.io/aggregate-to-admin: "true"
    rbac.authorization.k8s.io/aggregate-to-edit: "true"
    rbac.authorization.k8s.io/aggregate-to-view: "true"
  name: system:aggregated-metrics-reader
rules:
- apiGroups:
  - metrics.k8s.io
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
rules:
- apiGroups:
  - ""
  resources:
  - nodes/metrics
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server-auth-reader
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server:system:auth-delegator
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:metrics-server
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  ports:
  - name: https
    port: 443
    protocol: TCP
    targetPort: https
  selector:
    k8s-app: metrics-server
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  strategy:
    rollingUpdate:
      maxUnavailable: 0
  template:
    metadata:
      labels:
        k8s-app: metrics-server
    spec:
      containers:
      - args:
        - --cert-dir=/tmp
        - --secure-port=4443
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --kubelet-use-node-status-port
        - --metric-resolution=15s
        - --kubelet-insecure-tls
        image: registry.cn-hangzhou.aliyuncs.com/google_containers/metrics-server:v0.6.3
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /livez
            port: https
            scheme: HTTPS
          periodSeconds: 10
        name: metrics-server
        ports:
        - containerPort: 4443
          name: https
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /readyz
            port: https
            scheme: HTTPS
          initialDelaySeconds: 20
          periodSeconds: 10
        resources:
          requests:
            cpu: 100m
            memory: 200Mi
        securityContext:
          allowPrivilegeEscalation: false
          readOnlyRootFilesystem: true
          runAsNonRoot: true
          runAsUser: 1000
        volumeMounts:
        - mountPath: /tmp
          name: tmp-dir
      nodeSelector:
        kubernetes.io/os: linux
      priorityClassName: system-cluster-critical
      serviceAccountName: metrics-server
      volumes:
      - emptyDir: {}
        name: tmp-dir
---
apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  labels:
    k8s-app: metrics-server
  name: v1beta1.metrics.k8s.io
spec:
  group: metrics.k8s.io
  groupPriorityMinimum: 100
  insecureSkipTLSVerify: true
  service:
    name: metrics-server
    namespace: kube-system
  version: v1beta1
  versionPriority: 100

# Write a Deployment and a Service for the workload to be autoscaled

[root@master test]# cat hpa-deploy.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hpa-1
  namespace: test
spec:
  replicas: 1
  selector:
    matchLabels:
      hpa1: h1
  template:
    metadata:
      labels:
        hpa1: h1
    spec:
      containers:
      - name: nginx
        image: docker.io/library/nginx:1.9.1
        imagePullPolicy: IfNotPresent
        livenessProbe:
          httpGet:
            port: 80
            path: /index.html
        resources:
          limits:
            cpu: 500m
            memory: 1Gi
          requests:
            cpu: 100m
            memory: 200Mi
---
apiVersion: v1
kind: Service
metadata:
  name: svc1
  namespace: test
spec:
  selector:
    hpa1: h1
  type: NodePort
  ports:
  - targetPort: 80
    port: 80
    nodePort: 30090

[root@master test]# cat hpa-test.yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: hpa-test
  namespace: test
spec:
  minReplicas: 1
  maxReplicas: 20
  behavior:                   # fine-grained scaling behavior
    scaleUp:
      policies:
      - periodSeconds: 3
        type: Pods            # policy type: limit by number of pods
        value: 5              # add at most 5 pods per 3-second period
  metrics:                    # metrics to watch
  - resource:
      name: cpu               # cpu or memory
      target:
        type: Utilization     # average utilization across the pods
        averageUtilization: 20  # percentage of the container's resource request
    type: Resource            # resource metric (cpu or memory)
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: hpa-1
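The HPA's core rule, as given in the Kubernetes HPA documentation, is desiredReplicas = ceil(currentReplicas × currentUtilization / targetUtilization), skipped when that ratio is within a tolerance of 1 (0.1 by default). The Python sketch below applies that rule plus a simplified form of the `scaleUp` policy above (at most 5 extra pods per period). It is a model with hypothetical function names; the real controller also deals with missing metrics, unready pods and stabilization windows, and counts scale events across the whole period.

```python
import math


def desired_replicas(current, utilizations, target, min_replicas, max_replicas, tolerance=0.1):
    """utilizations: per-pod CPU usage as a percentage of each pod's CPU request."""
    ratio = (sum(utilizations) / len(utilizations)) / target
    if abs(ratio - 1.0) <= tolerance:
        desired = current  # close enough to the target: leave it alone
    else:
        desired = math.ceil(current * ratio)
    return max(min_replicas, min(max_replicas, desired))


def limit_scale_up(current, desired, pods_per_period):
    """Simplified 'type: Pods' policy: add at most pods_per_period pods in one period."""
    return min(desired, current + pods_per_period)


# One pod using 90m of its 100m request is at 90% utilization; the target is 20%.
print(desired_replicas(1, [90], target=20, min_replicas=1, max_replicas=20))  # 5
# Two pods at 90%: the formula asks for 9, but the policy caps this step at 2 + 5.
want = desired_replicas(2, [90, 90], target=20, min_replicas=1, max_replicas=20)
print(want, limit_scale_up(2, want, pods_per_period=5))  # 9 7
```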

# Load test
[root@master test]# cat curl.sh
#!/bin/bash
# Request the NodePort service in an endless loop to drive up CPU usage
while true
do
  curl 192.168.200.100:30090 &> /dev/null
done

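4. Turning a volume into a PV and PVC

- First turn the storage (an NFS export in this example) into a PV, then create a PVC that claims storage from that PV.
- After the pod and the PVC are deleted, a newly created PVC stays Pending: the PV is now Released and still references the old claim, so it is not bound again automatically. To reuse it, delete the pod, the PVC and the PV and create them again (or clear the PV's spec.claimRef).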

[root@master pvc]# cat pv-pvc.yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv1          # PVs are cluster-scoped, so no namespace is set
spec:
  capacity:
    storage: "2Gi"
  accessModes:
  - ReadWriteOnce
  nfs:
    path: /root/test/pvc/data1
    server: 10.104.43.67
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc1
  namespace: test
spec:
  # If the cluster has a default StorageClass, add storageClassName: "" so this claim binds to pv1
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: "1Gi"
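Static binding boils down to a filter: a claim binds to an Available PV with the same storage class, every requested access mode, and at least the requested capacity (the smallest such PV wins, and the whole PV is bound, so pvc1 gets all 2Gi of pv1). The Python sketch below models only those checks, which is enough to show why a Released PV leaves a new claim Pending. It is a simplification with hypothetical names; the real binder also considers label selectors, volume mode and node affinity, and `parse_quantity` here only understands `Mi` and `Gi`.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional

UNITS = {"Mi": 1024 ** 2, "Gi": 1024 ** 3}


def parse_quantity(q: str) -> int:
    """Convert a quantity like "2Gi" to bytes (only Mi/Gi in this sketch)."""
    for suffix, factor in UNITS.items():
        if q.endswith(suffix):
            return int(q[: -len(suffix)]) * factor
    raise ValueError(f"unsupported quantity in this sketch: {q}")


@dataclass
class PV:
    name: str
    capacity: str
    access_modes: set[str]
    phase: str = "Available"
    storage_class: str = ""


@dataclass
class PVC:
    name: str
    request: str
    access_modes: set[str]
    storage_class: str = ""


def find_match(claim: PVC, volumes: list[PV]) -> Optional[PV]:
    """Return the smallest Available PV that satisfies the claim, or None."""
    candidates = [
        pv for pv in volumes
        if pv.phase == "Available"
        and pv.storage_class == claim.storage_class
        and claim.access_modes <= pv.access_modes  # every requested mode supported
        and parse_quantity(pv.capacity) >= parse_quantity(claim.request)
    ]
    return min(candidates, key=lambda pv: parse_quantity(pv.capacity), default=None)


pv1 = PV("pv1", "2Gi", {"ReadWriteOnce"})
pvc1 = PVC("pvc1", "1Gi", {"ReadWriteOnce"})
print(find_match(pvc1, [pv1]).name)  # pv1
pv1.phase = "Released"  # the old claim was deleted; the PV is not reused automatically
print(find_match(pvc1, [pv1]))       # None, so a new PVC would stay Pending
```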

# Create a pod that uses this PVC
[root@master pvc]# cat pvc-pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-pvc1
  namespace: test
spec:
  containers:
  - name: busybox
    image: docker.io/library/busybox:1.28
    imagePullPolicy: IfNotPresent
    command: ["/bin/sh","-c","sleep 360000"]
    volumeMounts:
    - mountPath: /tmp/config
      name: pod-1
  volumes:
  - name: pod-1
    persistentVolumeClaim:
      claimName: pvc1

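5. Mounting a ConfigMap and a Secret as volumes

- Both store data; they differ in the kind of data. A ConfigMap holds non-sensitive configuration as plain text, while a Secret is intended for sensitive values, which are stored base64-encoded in its data field.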

[root@master config_secret]# cat configmap.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: c1
  namespace: test
data:
  username: "1"
  password: "1"
---
apiVersion: v1
kind: Pod
metadata:
  name: cm-pod1
  namespace: test
spec:
  containers:
  - name: busybox
    image: docker.io/library/busybox:1.28
    imagePullPolicy: IfNotPresent
    command: ["/bin/sh","-c","sleep 360000"]
    volumeMounts:
    - mountPath: /tmp/config
      name: cm-1
  volumes:
  - name: cm-1
    configMap:
      name: c1
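When a ConfigMap is mounted as a volume, each key under `data` becomes a file in the mount directory and its value becomes the file's content, so cm-pod1 should see /tmp/config/username and /tmp/config/password. The sketch below models only that key-to-file mapping, and `project_configmap` is a hypothetical helper; the kubelet actually writes the files behind a `..data` symlink so that updates are swapped in atomically, which is ignored here.

```python
from __future__ import annotations

import posixpath


def project_configmap(data: dict[str, str], mount_path: str) -> dict[str, str]:
    """Return {file path inside the container: file content}."""
    return {posixpath.join(mount_path, key): value for key, value in data.items()}


files = project_configmap({"username": "1", "password": "1"}, "/tmp/config")
for path, content in sorted(files.items()):
    print(path, content)
# /tmp/config/password 1
# /tmp/config/username 1
```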

#secret

[root@master config_secret]# cat secret.yaml
apiVersion: v1
kind: Secret
metadata:
  name: s1
  namespace: test
data:
  username: YWRtaW4=   # base64 of "admin"; base64 is an encoding, not encryption
---
apiVersion: v1
kind: Pod
metadata:
  name: p1
  namespace: test
spec:
  containers:
  - name: busybox
    image: docker.io/library/busybox:1.28
    imagePullPolicy: IfNotPresent
    command: ["/bin/sh","-c","sleep 36000"]
    volumeMounts:
    - name: s1
      mountPath: /tmp/config
  volumes:
  - name: s1
    secret:
      secretName: s1
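Values under a Secret's `data` must be base64-encoded, and the kubelet decodes them before writing the files, so p1 reads the plain text `admin` from /tmp/config/username. A Python sketch of both directions, with a hypothetical helper name (writing plain-text values under `stringData` instead of `data` lets the API server do the encoding for you):

```python
from __future__ import annotations

import base64
import posixpath


def project_secret(data: dict[str, str], mount_path: str) -> dict[str, bytes]:
    """Decode each base64 value and map it to the file the container would see."""
    return {posixpath.join(mount_path, key): base64.b64decode(value)
            for key, value in data.items()}


# Encoding a value for the manifest's data field:
print(base64.b64encode(b"admin").decode("ascii"))  # YWRtaW4=
# What the pod reads from the mounted volume:
print(project_secret({"username": "YWRtaW4="}, "/tmp/config"))  # {'/tmp/config/username': b'admin'}
```

Anyone who can read the Secret object can decode it just as easily, so access to Secrets is worth restricting with RBAC.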

6. RBAC policies

- Create a user
- Create a ServiceAccount (sa)

# Generate a private key
openssl genrsa -out devops.key 2048
# Create a certificate signing request with the key; the CN (devops) becomes the Kubernetes username
openssl req -new -key devops.key -subj "/CN=devops" -out devops.csr
# Sign the certificate with the cluster CA
openssl x509 -req -in devops.csr -CA /etc/kubernetes/pki/ca.crt -CAkey /etc/kubernetes/pki/ca.key -CAcreateserial -out devops.crt -days 365
# Add the user to kubeconfig
kubectl config set-credentials devops --client-certificate=./devops.crt --client-key=./devops.key --embed-certs=true
# Create a context that pairs the user with the cluster
kubectl config set-context devops@kubernetes --cluster=kubernetes --user=devops
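With client-certificate authentication, Kubernetes takes the username from the certificate's Common Name (CN) and group memberships from its Organization (O) fields, which is why `-subj "/CN=devops"` yields the user `devops` referenced in the RoleBinding. The Python sketch below parses an openssl-style `-subj` string the same way; the function name is hypothetical, it does not handle escaped slashes, and the `O=dev` group in the second example is invented for illustration.

```python
from __future__ import annotations


def identity_from_subject(subject: str) -> tuple[str, list[str]]:
    """Parse "/CN=user/O=group1/O=group2" into (username, groups)."""
    user, groups = "", []
    for part in subject.strip("/").split("/"):
        key, _, value = part.partition("=")
        if key == "CN":
            user = value
        elif key == "O":
            groups.append(value)
    return user, groups


print(identity_from_subject("/CN=devops"))        # ('devops', [])
print(identity_from_subject("/CN=devops/O=dev"))  # ('devops', ['dev']); O=dev is hypothetical
```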

[root@master devops-role]# kubectl config get-contexts
CURRENT   NAME                          CLUSTER      AUTHINFO           NAMESPACE
          devops@kubernetes             kubernetes   devops
          devuser@kubernetes            kubernetes   devuser            test
*         kubernetes-admin@kubernetes   kubernetes   kubernetes-admin

# Grant permissions: the devops user may only view pods in the dev namespace
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: devops-role
  namespace: dev
rules:
- apiGroups: ["*"]
  resources: ["pods"]
  verbs: ["get", "list"]   # "kubectl get pod" without a pod name sends a list request
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: devops-rolebinding
  namespace: dev
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: devops-role
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: User
  name: devops
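RBAC rules are purely additive: a request is allowed when some rule in a bound Role matches its verb, API group and resource, where `*` matches anything. `kubectl get pod` without a pod name is a `list` request (the Forbidden message in this section says "cannot list"), so `get` alone is not enough for it. A Python sketch of that matching, with hypothetical names and without namespaces, resourceNames or subresources:

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class PolicyRule:
    api_groups: list[str]
    resources: list[str]
    verbs: list[str]


def _covers(allowed: list[str], requested: str) -> bool:
    return "*" in allowed or requested in allowed


def is_allowed(rules: list[PolicyRule], verb: str, api_group: str, resource: str) -> bool:
    """True if any rule grants the verb on the resource in the API group."""
    return any(_covers(r.verbs, verb) and _covers(r.api_groups, api_group)
               and _covers(r.resources, resource) for r in rules)


get_only = [PolicyRule(["*"], ["pods"], ["get"])]
get_list = [PolicyRule(["*"], ["pods"], ["get", "list"])]
# Pods live in the core API group, whose name is the empty string "".
print(is_allowed(get_only, "get", "", "pods"))   # True: kubectl get pod p1
print(is_allowed(get_only, "list", "", "pods"))  # False: kubectl get pod
print(is_allowed(get_list, "list", "", "pods"))  # True
```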

# A pod has been created in the dev namespace
[root@master devops-role]# kubectl get pod -n dev
NAME   READY   STATUS    RESTARTS   AGE
p1     1/1     Running   0          4s

# Switch to the devops context and check what the user can see
[root@master devops-role]# kubectl config use-context devops@kubernetes
Switched to context "devops@kubernetes".
[root@master devops-role]# kubectl get pod -n dev
NAME   READY   STATUS    RESTARTS   AGE
p1     1/1     Running   0          2m33s
# The Role only applies in dev, so other namespaces are forbidden
[root@master devops-role]# kubectl get pod -n test
Error from server (Forbidden): pods is forbidden: User "devops" cannot list resource "pods" in API group "" in the namespace "test"

Create a ServiceAccount

# Associate the ServiceAccount with a Secret
[root@master serviceaccount]# cat s1.yaml
apiVersion: v1
kind: Secret
metadata:
  name: se1
  namespace: dev
data:
  username: YWRtaW4=
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: s1
  namespace: dev
secrets:
- name: se1

# Create the permissions
[root@master serviceaccount]# cat s1-role.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: s1-role
  namespace: dev
rules:
- apiGroups: ["*"]
  resources: ["pods"]
  verbs: ["list"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: s1-rolebinding
  namespace: dev
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: s1-role
subjects:
- apiGroup: ""
  kind: ServiceAccount
  name: s1

# Create a pod
[root@master serviceaccount]# cat pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: p1
  namespace: dev
spec:
  containers:
  - name: tomcat
    image: docker.io/library/tomcat
    imagePullPolicy: IfNotPresent
  serviceAccountName: s1   # serviceAccount is the deprecated alias of this field
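A ServiceAccount authenticates as the username `system:serviceaccount:<namespace>:<name>` and belongs to the groups `system:serviceaccounts` and `system:serviceaccounts:<namespace>`, so the RoleBinding above applies to `system:serviceaccount:dev:s1`. The helper below just builds those strings (its name is hypothetical). The resulting username can be passed to `kubectl auth can-i list pods -n dev --as=system:serviceaccount:dev:s1` to check the binding without starting a pod.

```python
from __future__ import annotations


def service_account_identity(namespace: str, name: str) -> tuple[str, list[str]]:
    """Username and ServiceAccount groups that Kubernetes assigns to a ServiceAccount."""
    user = f"system:serviceaccount:{namespace}:{name}"
    groups = ["system:serviceaccounts", f"system:serviceaccounts:{namespace}"]
    return user, groups


user, groups = service_account_identity("dev", "s1")
print(user)    # system:serviceaccount:dev:s1
print(groups)  # ['system:serviceaccounts', 'system:serviceaccounts:dev']
```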

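7. StatefulSet controller

- Each pod gets a stable, unique DNS name, provided through a headless Service
- Pod names are ordered (s1-0, s1-1, s1-2, ...)
- Create a StatefulSet and then a Service; the pods' DNS names resolve through that Service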

[root@master statefulset]# cat statefulset-nginx.yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: s1
  namespace: ll
spec:
  replicas: 3
  serviceName: nginx # governing Service; it provides the per-pod DNS names that let pods reach each other
  selector:
    matchLabels:
      state: pod1
  template:
    metadata:
      labels:
        state: pod1
    spec:
      containers:
      - name: nginx
        image: docker.io/library/nginx:1.14-alpine
        imagePullPolicy: IfNotPresent
---
apiVersion: v1
kind: Service
metadata:
  name: nginx
  namespace: ll
spec:
  selector:
    state: pod1
  type: NodePort
  ports:
  - name: s1
    targetPort: 80
    port: 80
    nodePort: 30080

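# Define the Service without a cluster IP (a headless Service). Per-pod DNS records such as
# s1-0.nginx.ll.svc.cluster.local are only created when the governing Service is headless, so this
# replaces the NodePort Service above (delete that one first: both are named nginx, and an
# existing Service's clusterIP cannot be changed to None in place)
apiVersion: v1
kind: Service
metadata:
  name: nginx
  namespace: ll
spec:
  selector:
    state: pod1
  type: ClusterIP
  clusterIP: None
  ports:
  - name: s1
    targetPort: 80
    port: 80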

# Pods can now reach each other by DNS name
# Because the Service has no cluster IP, its name resolves directly to the pod IPs
[root@master statefulset]# kubectl exec -ti -n ll s1-0 -- /bin/sh
/ # nslookup nginx
nslookup: can't resolve '(null)': Name does not resolve

Name:      nginx
Address 1: 10.244.104.50 s1-2.nginx.ll.svc.cluster.local
Address 2: 10.244.104.49 s1-1.nginx.ll.svc.cluster.local
Address 3: 10.244.166.168 s1-0.nginx.ll.svc.cluster.local

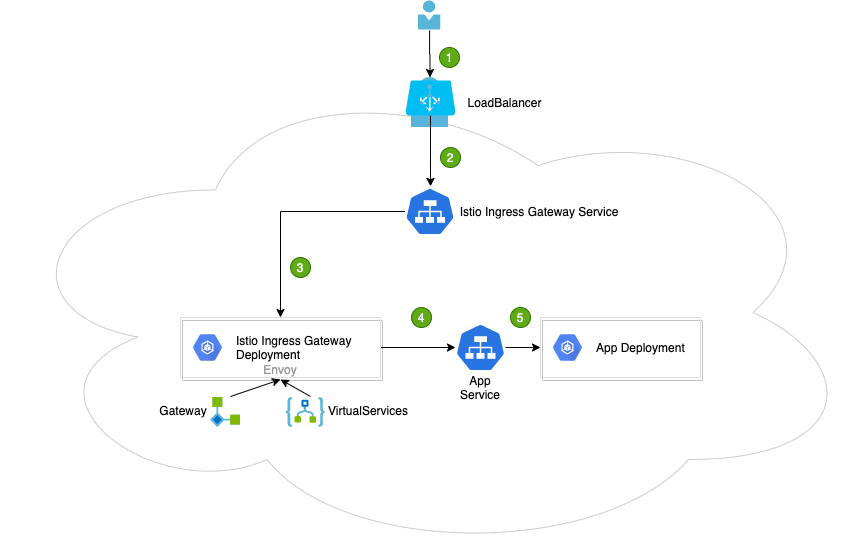
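StatefulSet pods are named `<statefulset>-<ordinal>` with ordinals starting at 0, and behind a headless governing Service each pod gets the DNS name `<pod>.<service>.<namespace>.svc.<cluster-domain>`, which is exactly what nslookup returned. A Python sketch that reproduces those names; `cluster.local` is the default cluster domain and is an assumption about this cluster, and the function name is hypothetical.

```python
from __future__ import annotations


def statefulset_pod_fqdns(sts: str, replicas: int, service: str, namespace: str,
                          cluster_domain: str = "cluster.local") -> list[str]:
    """Stable per-pod DNS names for a StatefulSet behind a headless Service."""
    return [f"{sts}-{i}.{service}.{namespace}.svc.{cluster_domain}"
            for i in range(replicas)]


for fqdn in statefulset_pod_fqdns("s1", 3, "nginx", "ll"):
    print(fqdn)
# s1-0.nginx.ll.svc.cluster.local
# s1-1.nginx.ll.svc.cluster.local
# s1-2.nginx.ll.svc.cluster.local
```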

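8. Istio canary release

- There are two image versions; requests are split so that v1 receives 90% of the traffic and v2 receives 10%

# Enable automatic sidecar injection for a namespace
[root@k8s-master-node1 ~]# kubectl create ns istio
namespace/istio created
# When pods are created in this namespace, a sidecar container (Envoy plus pilot-agent) is injected automatically
[root@k8s-master-node1 ~]# kubectl label namespaces istio istio-injection=enabled
namespace/istio labeled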

# Create the Deployments and the Service. The manifests set no namespace, so they are created in the
# current namespace (default, per the describe output below); sidecars are injected only if that
# namespace carries the istio-injection=enabled label
[root@k8s-master-node1 istio]# cat deploy-svc.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: c1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: v1
  template:
    metadata:
      labels:
        app: v1
        svc: canary
    spec:
      containers:
      - name: c1
        image: xianchao/canary:v1
        imagePullPolicy: IfNotPresent
        ports:
        - name: v1
          containerPort: 80
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: c2
spec:
  replicas: 1
  selector:
    matchLabels:
      app: v2
  template:
    metadata:
      labels:
        app: v2
        svc: canary
    spec:
      containers:
      - name: c2
        image: xianchao/canary:v2
        imagePullPolicy: IfNotPresent
        ports:
        - name: v2
          containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: canary
spec:
  type: ClusterIP
  selector:
    svc: canary
  ports:
  - name: canary
    port: 80
    targetPort: 80

[root@k8s-master-node1 istio]# kubectl get pod
NAME                  READY   STATUS    RESTARTS   AGE
c1-5b44496d4d-8ndgj   2/2     Running   0          51s
c2-78dc9979d4-4xv6c   2/2     Running   0          51s


[root@k8s-master-node1 istio]# kubectl describe svc canary
Name:              canary
Namespace:         default
Labels:            <none>
Annotations:       <none>
Selector:          svc=canary
Type:              ClusterIP
IP Family Policy:  SingleStack
IP Families:       IPv4
IP:                10.96.31.112
IPs:               10.96.31.112
Port:              canary  80/TCP
TargetPort:        80/TCP
Endpoints:         10.244.1.14:80,10.244.1.15:80
Session Affinity:  None
Events:            <none>

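# Create a Gateway
[root@k8s-master-node1 istio]# cat gateway.yaml
apiVersion: networking.istio.io/v1beta1
kind: Gateway
metadata:
  name: canary
spec:
  selector:
    istio: ingressgateway
  servers:
  - port:
      name: http
      number: 80
      protocol: HTTP
    hosts:
    - "*"

# hosts: "*" makes the gateway accept HTTP requests for any host on port 80. To handle only
# v1.com and v2.com, list those hosts here instead; requests for other hosts are then not handled

# Create a VirtualService
# 90% of requests are routed to subset v1 and 10% to subset v2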

[root@k8s-master-node1 istio]# cat virtual.yaml
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: canary
spec:
  gateways:        # bind to the Gateway
  - canary
  hosts:
  - "*"
  http:
  - route:
    - destination:
        host: canary   # backend Service
        subset: v1
      weight: 90
    - destination:
        host: canary
        subset: v2
      weight: 10
---
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: canary
spec:
  host: canary       # backend Service; a short name is allowed
  subsets:
  - name: v1         # each subset selects the Service's pods by label
    labels:
      app: v1
  - name: v2
    labels:
      app: v2
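The weights are proportions, not exact counts: as far as I know, the proxy picks a subset for each request at random in proportion to its weight, so 100 requests give roughly (not exactly) 90 v1 and 10 v2 responses. The Python sketch below simulates such a weighted split with a seeded random generator; it illustrates the idea only and is not Envoy's implementation, and `split_traffic` is a hypothetical name.

```python
from __future__ import annotations

import random
from collections import Counter


def split_traffic(weights: dict[str, int], requests: int, seed: int = 0) -> Counter:
    """Route each request to a subset with probability weight / total weight."""
    rng = random.Random(seed)
    subsets = list(weights)
    picks = rng.choices(subsets, weights=[weights[s] for s in subsets], k=requests)
    return Counter(picks)


print(split_traffic({"v1": 90, "v2": 10}, requests=100))     # close to 90/10, not exact
print(split_traffic({"v1": 90, "v2": 10}, requests=10_000))  # the share converges on 90%
```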

[root@k8s-master-node1 ~]# kubectl get gateways.networking.istio.io
NAME     AGE
canary   41m
[root@k8s-master-node1 ~]# kubectl get virtualservices.networking.istio.io
NAME     GATEWAYS     HOSTS   AGE
canary   ["canary"]   ["*"]   11m
[root@k8s-master-node1 ~]# kubectl get destinationrules.networking.istio.io
NAME     HOST     AGE
canary   canary   11m

# There is no cloud provider to supply a load balancer, so edit the istio-ingressgateway Service
# (kubectl edit svc -n istio-system istio-ingressgateway) and add externalIPs to make it reachable
[root@k8s-master-node1 ~]# kubectl get svc -n istio-system istio-ingressgateway
NAME                   TYPE           CLUSTER-IP    EXTERNAL-IP    PORT(S)                                                                      AGE
istio-ingressgateway   LoadBalancer   10.96.37.94   10.104.43.47   15021:36448/TCP,80:22247/TCP,443:11086/TCP,31400:4539/TCP,15443:32308/TCP   85m

# Send 100 requests; about 90 should be answered by v1 and about 10 by v2
[root@k8s-master-node1 istio]# for i in `seq 1 100`;do curl 10.104.43.47:22247;done >> 1.txt

901

901
