I.Sparse versus dense vectors

1)Difference

- TF-IDF (or PMI) vectors are

• long (length |V| = 20,000 to 50,000)

• sparse (most elements are zero)

- Alternative: learn vectors which are

• short (length 50-1000)

• dense (most elements are non-zero)

2)Why dense vectors?

- Short vectors may be easier to use as features in machine learning (fewer weights to tune)

- Dense vectors may generalize better than explicit counts

- Dense vectors may do better at capturing synonymy:

• car and automobile are synonyms; but are distinct dimensions

• a word with car as a neighbor and a word with automobile as a neighbor should be similar, but aren't

- In practice, they work better
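The synonymy point can be illustrated with a small sketch. All numbers below are made-up toy values chosen for illustration, not real counts or learned embeddings. In the sparse case, "car" and "automobile" are separate dimensions, so two words that each co-occur with only one of them get a cosine similarity of zero. In the dense case, similar contexts can end up in nearby directions.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

# Toy sparse co-occurrence counts over the context vocabulary
# [car, automobile, banana] (hypothetical values).
# "garage" was seen only near "car", "dealership" only near "automobile".
garage_sparse = [3, 0, 0]
dealership_sparse = [0, 2, 0]

# Toy dense vectors (hypothetical values) in which both words
# point in roughly the same direction.
garage_dense = [0.8, 0.1]
dealership_dense = [0.7, 0.2]

sparse_sim = cosine(garage_sparse, dealership_sparse)  # 0.0: no shared dimension
dense_sim = cosine(garage_dense, dealership_dense)     # close to 1
print(sparse_sim, dense_sim)
```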

3)Classes of embedding

- Static embedding: one fixed embedding for each word in the vocabulary

- Dynamic embedding: the vector for each word is different in different contexts

II.Word2vec

1)Definition:

- Popular embedding method

- Very fast to train

- Idea: predict rather than count

- Word2vec provides various options. We'll do: skip-gram with negative sampling (SGNS)

2)Word2vec

Instead of counting how often each word w occurs near "apricot"

- Train a classifier on a binary prediction task:

Is w likely to show up near "apricot"?

-We don’t actually care about this task:

•But we'll take the learned classifier weights as the word embeddings

-Big idea: self-supervision:

•A word c that occurs near apricot in the corpus acts as the gold "correct answer" for supervised learning

•No need for human labels

• Bengio et al. (2003); Collobert et al. (2011)

3)Approach:

1.Treat the target word t and a neighboring context word c as positive examples.

2.Randomly sample other words in the lexicon to get negative examples

3.Use logistic regression to train a classifier to distinguish those two cases

4.Use the learned weights as the embedding
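The four steps above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the reference word2vec implementation: the tiny vocabulary, the vector size, the learning rate, the uniform negative sampling, and the helper name `sgns_step` are all hypothetical choices made for the example.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def sgns_step(W, C, target, context, negatives, lr=0.1):
    """One SGD step of logistic regression on one positive pair
    (target, context) plus the sampled negative words."""
    w = W[target]
    grad_w = [0.0] * len(w)
    # Label 1 for the observed context word, 0 for each sampled negative.
    examples = [(context, 1.0)] + [(n, 0.0) for n in negatives]
    for word, label in examples:
        c = C[word]
        err = sigmoid(dot(w, c)) - label  # derivative of the log loss w.r.t. the score
        for i in range(len(w)):
            grad_w[i] += err * c[i]       # accumulate the gradient for the target vector
            c[i] -= lr * err * w[i]       # update the context vector in place
    for i in range(len(w)):
        w[i] -= lr * grad_w[i]

random.seed(0)
vocab = ["apricot", "jam", "tablespoon", "aardvark", "seven"]  # toy lexicon
dim = 4
W = {v: [random.uniform(-0.5, 0.5) for _ in range(dim)] for v in vocab}  # target vectors
C = {v: [random.uniform(-0.5, 0.5) for _ in range(dim)] for v in vocab}  # context vectors

before = sigmoid(dot(W["apricot"], C["jam"]))
candidates = [v for v in vocab if v not in ("apricot", "jam")]
for _ in range(200):
    negatives = random.sample(candidates, 2)  # step 2: random negative examples
    sgns_step(W, C, "apricot", "jam", negatives)
after = sigmoid(dot(W["apricot"], C["jam"]))
print(before, after)
```

After training, `W["apricot"]` plays the role of the embedding for "apricot" (step 4), and the probability assigned to the observed pair (apricot, jam) should have risen.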

4)Skip-gram:

1, training data:

Assume a +/- 2 word window, given training sentence:

…lemon, a [tablespoon of apricot jam, a] pinch…

c1 c2 target c3 c4
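As a rough sketch, the positive training pairs for this window can be collected as follows. The function name `context_pairs` is hypothetical, and the tokenization is simplified by assuming punctuation has already been stripped.

```python
def context_pairs(tokens, target_index, window=2):
    """Return (target, context) pairs for every word within
    +/- `window` positions of the target word."""
    target = tokens[target_index]
    start = max(0, target_index - window)
    end = min(len(tokens), target_index + window + 1)
    return [(target, tokens[i]) for i in range(start, end) if i != target_index]

tokens = ["lemon", "a", "tablespoon", "of", "apricot", "jam", "a", "pinch"]
pairs = context_pairs(tokens, tokens.index("apricot"))
print(pairs)
# [('apricot', 'tablespoon'), ('apricot', 'of'), ('apricot', 'jam'), ('apricot', 'a')]
```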

2,classifier:

(assuming a +/- 2 word window)

…lemon, a [tablespoon of apricot jam, a] pinch…

c1 c2 target c3 c4

Goal: train a classifier that is given a candidate (word, context) pair, such as (apricot, jam) or (apricot, aardvark) …

and assigns each pair a probability: P(+|w, c)

P(−|w, c) = 1 − P(+|w, c)
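The notes do not spell out how the probability is computed. In the standard skip-gram formulation, which is assumed here, the classifier applies the logistic (sigmoid) function to the dot product of the target vector $\mathbf{w}$ and the context vector $\mathbf{c}$:

```latex
\begin{aligned}
P(+ \mid w, c) &= \sigma(\mathbf{c} \cdot \mathbf{w}) = \frac{1}{1 + \exp(-\mathbf{c} \cdot \mathbf{w})} \\
P(- \mid w, c) &= 1 - P(+ \mid w, c) = \sigma(-\mathbf{c} \cdot \mathbf{w}) = \frac{1}{1 + \exp(\mathbf{c} \cdot \mathbf{w})}
\end{aligned}
```

A large positive dot product gives a probability near 1, and a large negative one gives a probability near 0.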

3,Positive or negative

+zany characters and richly applied satire, and some great plot twists.

-It was pathetic. The worst part about it was the boxing scenes...

+...awesome caramel sauce and sweet toasty almonds. I love this place!

-...awful pizza and ridiculously overpriced...

4,Steps

- Vector representation

- Data source: hand labeling; kaggle.com; Internet

- Evaluation metrics:

i.Precision and recall

Precision = True positive/(True positive + False positive)

Recall = True positive/(True positive + False negative)

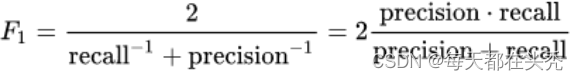
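A minimal sketch of these two metrics, assuming gold and predicted labels are given as parallel lists and "+" marks the positive class. The function name and the toy labels are illustrative only.

```python
def precision_recall(gold, predicted, positive="+"):
    """Compute precision and recall for the `positive` class."""
    pairs = list(zip(gold, predicted))
    tp = sum(1 for g, p in pairs if p == positive and g == positive)
    fp = sum(1 for g, p in pairs if p == positive and g != positive)
    fn = sum(1 for g, p in pairs if p != positive and g == positive)
    # Guard against division by zero when nothing is predicted or nothing is positive.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

gold      = ["+", "+", "+", "+", "-", "-"]
predicted = ["+", "+", "-", "-", "+", "-"]
precision, recall = precision_recall(gold, predicted)
print(precision, recall)  # TP=2, FP=1, FN=2, so precision = 2/3 and recall = 0.5
```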

ii.F-score

- The harmonic mean of precision and recall

- F1 gives equal importance to precision and recall

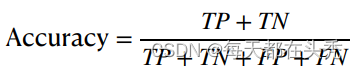

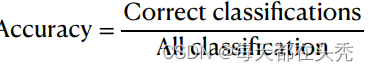
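The F-score can be sketched as below, assuming the usual weighted form F_β = (1 + β²)·P·R / (β²·P + R), which reduces to the harmonic mean 2PR / (P + R) when β = 1. The function name `f_beta` is a hypothetical choice for the example.

```python
def f_beta(precision, recall, beta=1.0):
    """Weighted harmonic mean of precision and recall.
    beta=1 weights both equally (F1); beta>1 favors recall."""
    if precision == 0.0 and recall == 0.0:
        return 0.0  # avoid dividing by zero when both are zero
    b2 = beta * beta
    return (1 + b2) * precision * recall / (b2 * precision + recall)

f1 = f_beta(2 / 3, 0.5)
print(f1)  # 4/7, about 0.571
```

Because it is a harmonic mean, F1 stays close to the smaller of the two values, so a classifier cannot score well by maximizing only one of precision or recall.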

iii. Accuracy

- Binary classification

- Multi-class classification

Note:TP = True positive; FP = False positive; TN = True negative; FN = False negative
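For accuracy, a short sketch under the usual definitions: in the binary case it is (TP + TN) / (TP + FP + TN + FN), and in the multi-class case it is the fraction of items whose predicted label matches the gold label. The function names and toy labels are illustrative.

```python
def accuracy_from_counts(tp, fp, tn, fn):
    """Binary accuracy from confusion-matrix counts."""
    return (tp + tn) / (tp + fp + tn + fn)

def accuracy(gold, predicted):
    """Fraction of items labeled correctly; works for any number of classes."""
    if not gold or len(gold) != len(predicted):
        raise ValueError("gold and predicted must be non-empty and the same length")
    correct = sum(1 for g, p in zip(gold, predicted) if g == p)
    return correct / len(gold)

binary_acc = accuracy_from_counts(tp=2, fp=1, tn=1, fn=2)  # 3 correct out of 6
multi_acc = accuracy(["joy", "anger", "fear", "joy"],
                     ["joy", "fear", "fear", "joy"])       # 3 correct out of 4
print(binary_acc, multi_acc)  # 0.5 0.75
```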

5,Scherer Typology of Affective States

Emotion: brief organically synchronized … evaluation of a major event - angry, sad, joyful, fearful, ashamed, proud, elated

Mood: diffuse non-caused low-intensity long-duration change in subjective feeling - cheerful, gloomy, irritable, listless, depressed, buoyant

Interpersonal stances: affective stance toward another person in a specific interaction - friendly, flirtatious, distant, cold, warm, supportive, contemptuous

Attitudes: enduring, affectively colored beliefs, dispositions towards objects or persons - liking, loving, hating, valuing, desiring

Personality traits: stable personality dispositions and typical behavior tendencies - nervous, anxious, reckless, morose, hostile, jealous

III.Emotion

- Definition:

‣ One of the most important affective classes

‣ A relatively brief episode of response to the evaluation of an external or internal event as being of major significance

‣ Detecting emotion has the potential to improve a number of language processing tasks

- Tutoring systems

- Emotions in reviews or customer responses

- Emotion can play a role in medical NLP tasks like helping diagnose depression or suicidal intent

- Two families of theories of emotion

‣ Atomic basic emotions

- A finite list of 6 or 8, from which others are generated

‣ Dimensions of emotion

- Valence (positive, negative)

- Arousal (strong, weak)

- Control

- Ekman’s 6 basic emotions: surprise, happiness, anger, fear, disgust, sadness

- Plutchik’s wheel of emotion

‣ 8 basic emotions

‣ four opposing pairs

- joy - sadness

- anger - fear

- trust - disgust

- anticipation - surprise

- Alternative: spatial model

An emotion is a point in 2- or 3-dimensional space

valence: the pleasantness of the stimulus

arousal: the intensity of emotion provoked by the stimulus

(sometimes) dominance: the degree of control exerted by the stimulus
