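Problem description

Code written for TensorFlow 1.x is being run under TensorFlow 2.x. The tf.contrib module was removed in TensorFlow 2.x, so the tf.contrib.training.HParams call below fails.

Solution

Original code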

hparams = tf.contrib.training.HParams(

# Comma-separated list of cleaners to run on text prior to training and eval. For non-English

# text, you may want to use "basic_cleaners" or "transliteration_cleaners".

cleaners='basic_cleaners',

# If you only have 1 GPU or want to use only one GPU, please set num_gpus=0 and specify the GPU idx on run. example:

# example 1 GPU of index 2 (train on "/gpu2" only): CUDA_VISIBLE_DEVICES=2 python train.py --model='Tacotron' --hparams='tacotron_gpu_start_idx=2'

# If you want to train on multiple GPUs, simply specify the number of GPUs available, and the idx of the first GPU to use. example:

# example 4 GPUs starting from index 0 (train on "/gpu0"->"/gpu3"): python train.py --model='Tacotron' --hparams='tacotron_num_gpus=4, tacotron_gpu_start_idx=0'

# The hparams arguments can be directly modified on this hparams.py file instead of being specified on run if preferred!

# If one wants to train both Tacotron and WaveNet in parallel (provided WaveNet will be trained on True mel spectrograms), one needs to specify different GPU idxes.

# example Tacotron+WaveNet on a machine with 4 or more GPUs. Two GPUs for each model:

# CUDA_VISIBLE_DEVICES=0,1 python train.py --model='Tacotron' --hparams='tacotron_num_gpus=2'

# CUDA_VISIBLE_DEVICES=2,3 python train.py --model='WaveNet' --hparams='wavenet_num_gpus=2'

# IMPORTANT NOTES: The Multi-GPU performance highly depends on your hardware and optimal parameters change between rigs. Default are optimized for servers.

# If using N GPUs, please multiply the tacotron_batch_size by N below in the hparams! (tacotron_batch_size = 32 * N)

# Never use lower batch size than 32 on a single GPU!

# Same applies for Wavenet: wavenet_batch_size = 8 * N (wavenet_batch_size can be smaller than 8 if GPU is having OOM, minimum 2)

# Please also apply the synthesis batch size modification likewise. (if N GPUs are used for synthesis, minimal batch size must be N, minimum of 1 sample per GPU)

# We did not add an automatic multi-GPU batch size computation to avoid confusion in the user's mind and to provide more control to the user for

# resources related decisions.

# Acknowledgement:

# Many thanks to @MlWoo for his awesome work on multi-GPU Tacotron which showed to work a little faster than the original

# pipeline for a single GPU as well. Great work!

# Hardware setup: Default supposes user has only one GPU: "/gpu:0" (Both Tacotron and WaveNet can be trained on multi-GPU: data parallelization)

# Synthesis also uses the following hardware parameters for multi-GPU parallel synthesis.

tacotron_num_gpus=1, # Determines the number of gpus in use for Tacotron training.

wavenet_num_gpus=1, # Determines the number of gpus in use for WaveNet training.

split_on_cpu=True,

# Determines whether to split data on CPU or on first GPU. This is automatically True when more than 1 GPU is used.

# (Recommend: False on slow CPUs/Disks, True otherwise for small speed boost)

###########################################################################################################################################

# Audio

# Audio parameters are the most important parameters to tune when using this work on your personal data. Below are the beginner steps to adapt

# this work to your personal data:

# 1- Determine my data sample rate: First you need to determine your audio sample_rate (how many samples are in a second of audio). This can be done using sox: "sox --i <filename>"

# (For this small tuto, I will consider 24kHz (24000 Hz), and defaults are 22050Hz, so there are plenty of examples to refer to)

# 2- set sample_rate parameter to your data correct sample rate

# 3- Fix win_size and hop_size accordingly: (Supposing you will follow our advice: 50ms window_size, and 12.5ms frame_shift(hop_size))

# a- win_size = 0.05 * sample_rate. In the tuto example, 0.05 * 24000 = 1200

# b- hop_size = 0.25 * win_size. Also equal to 0.0125 * sample_rate. In the tuto example, 0.25 * 1200 = 0.0125 * 24000 = 300 (Can set frame_shift_ms=12.5 instead)

# 4- Fix n_fft, num_freq and upsample_scales parameters accordingly.

# a- n_fft can be either equal to win_size or the first power of 2 that comes after win_size. I usually recommend using the latter

# to be more consistent with signal processing friends. No big difference to be seen however. For the tuto example: n_fft = 2048 = 2**11

# b- num_freq = (n_fft / 2) + 1. For the tuto example: num_freq = 2048 / 2 + 1 = 1024 + 1 = 1025.

# c- For WaveNet, upsample_scales products must be equal to hop_size. For the tuto example: upsample_scales=[15, 20] where 15 * 20 = 300

# it is also possible to use upsample_scales=[3, 4, 5, 5] instead. One must only keep in mind that upsample_kernel_size[0] = 2*upsample_scales[0]

# so the training segments should be long enough (2.8~3x upsample_scales[0] * hop_size or longer) so that the first kernel size can see the middle

# of the samples efficiently. The length of WaveNet training segments is under the parameter "max_time_steps".

# 5- Finally comes the silence trimming. This is very much data dependent, so I suggest trying preprocessing (or part of it, ctrl-C to stop), then use the

# .ipynb provided in the repo to listen to some inverted mel/linear spectrograms. That will first give you some idea about your above parameters, and

# it will also give you an idea about trimming. If silences persist, try reducing trim_top_db slowly. If samples are trimmed mid words, try increasing it.

# 6- If audio quality is too metallic or fragmented (or if linear spectrogram plots are showing black silent regions on top), then restart from step 2.

num_mels=80, # Number of mel-spectrogram channels and local conditioning dimensionality

num_freq=1025, # (= n_fft / 2 + 1) only used when adding linear spectrograms post processing network

rescale=False, # Whether to rescale audio prior to preprocessing

rescaling_max=0.999, # Rescaling value

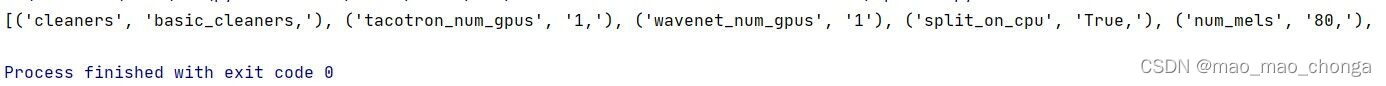
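The audio-parameter arithmetic described in the comments above (steps 3 and 4) can be checked with a few lines of Python. This is only a sketch for the 24 kHz tutorial example; the function name derive_audio_params is made up for illustration and is not part of the original repository.

```python
from math import prod


def derive_audio_params(sample_rate, win_ms=50.0, hop_ms=12.5):
    """Derive STFT sizes from the sample rate, following the comment's recipe."""
    win_size = int(round(sample_rate * win_ms / 1000))  # 50 ms window
    hop_size = int(round(sample_rate * hop_ms / 1000))  # 12.5 ms frame shift
    # Smallest power of 2 that is greater than or equal to win_size.
    n_fft = 1 << (win_size - 1).bit_length()
    num_freq = n_fft // 2 + 1
    return win_size, hop_size, n_fft, num_freq


win_size, hop_size, n_fft, num_freq = derive_audio_params(24000)
print(win_size, hop_size, n_fft, num_freq)

# For WaveNet, the product of upsample_scales must equal hop_size.
upsample_scales = [15, 20]
print(prod(upsample_scales) == hop_size)
```

For the default 22050 Hz data, the same function can be called with sample_rate=22050 to compare against the values set in hparams.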

Write the parameters from HParams into a config.ini file:

[HParams]

cleaners = basic_cleaners

tacotron_num_gpus = 1

wavenet_num_gpus = 1

split_on_cpu = True

num_mels = 80

num_freq = 1025

rescale = False

rescaling_max = 0.999

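Be sure to delete everything after the # on each line. ConfigParser does not strip inline comments by default, so they would otherwise become part of the value.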
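As an alternative to deleting the comments by hand, ConfigParser accepts an inline_comment_prefixes argument that strips them at parse time. The sketch below is a minimal illustration, assuming the file still contains the "# ..." comments and the trailing commas copied from the Python source; the sample text and the clean helper are made up for this example.

```python
from configparser import ConfigParser

# Sample text standing in for config.ini (hypothetical, copied-style lines).
SAMPLE = """
[HParams]
num_mels = 80, # Number of mel-spectrogram channels
rescale = False, # Whether to rescale audio prior to preprocessing
"""


def clean(value):
    """Drop the trailing comma left over from the Python argument list."""
    return value.strip().rstrip(",").strip()


# inline_comment_prefixes makes the parser discard ' # ...' at the end of a value.
cfg = ConfigParser(inline_comment_prefixes=("#",))
cfg.read_string(SAMPLE)

cleaned = {key: clean(value) for key, value in cfg.items("HParams")}
print(cleaned)
```

Without the clean step, a trailing comma stays in the value, so a later int() or float() conversion of "80," would fail.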

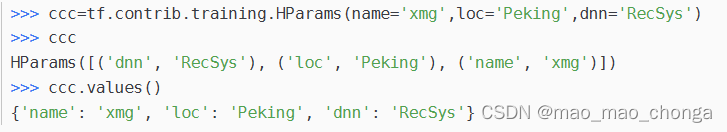

You can inspect the contents with the following code:

from configparser import ConfigParser

# Default hyperparameters

cfg = ConfigParser()

cfg.read("config.ini")

print(cfg.items("HParams"))

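In effect, cfg.items("HParams") takes the place of hparams.values(). They are not strictly identical: hparams.values() returns a dict of typed values, whereas cfg.items("HParams") returns a list of (name, value) pairs in which every value is a string, so convert the values where the code expects numbers or booleans.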
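Because every value read from config.ini is a string, code that expects hparams.num_mels to be an integer needs a conversion step. The following is a minimal sketch, not the original author's method: load_hparams is a hypothetical helper that parses each value with ast.literal_eval (falling back to the raw string) and exposes the result through attribute access. It assumes the INI values carry no trailing commas or inline comments.

```python
import ast
from configparser import ConfigParser
from types import SimpleNamespace


def load_hparams(ini_text, section="HParams"):
    """Parse one INI section into a namespace with typed values (hypothetical helper)."""
    cfg = ConfigParser()
    cfg.read_string(ini_text)
    values = {}
    for key, raw in cfg.items(section):
        try:
            # Turns "1" into 1, "0.999" into 0.999, "True" into True.
            values[key] = ast.literal_eval(raw)
        except (ValueError, SyntaxError):
            # Bare words such as basic_cleaners stay as plain strings.
            values[key] = raw
    return SimpleNamespace(**values)


# Sample text standing in for config.ini.
SAMPLE = """
[HParams]
cleaners = basic_cleaners
tacotron_num_gpus = 1
split_on_cpu = True
rescaling_max = 0.999
"""

hparams = load_hparams(SAMPLE)
print(hparams.cleaners, hparams.tacotron_num_gpus, hparams.split_on_cpu, hparams.rescaling_max)
```

To load the real file, replace cfg.read_string(ini_text) with cfg.read("config.ini"). Calling vars(hparams) then gives a dict comparable to what hparams.values() returned in TensorFlow 1.x.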
