文章目录

Kafka初识

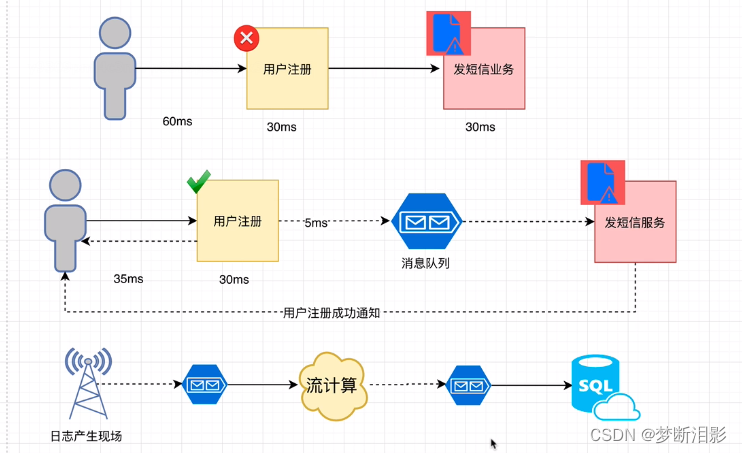

使用场景

Apache Kafka是Apache软件基金会的开源的流处理平台,该平台提供了消息的订阅与发布的消息队列,一般用作系统间解耦、异步通信、削峰填谷等作用。同时Kafka又提供了Kafka streaming插件包实现了实时在线流处理。相比较一些专业的流处理框架不同,Kafka Streaming计算是运行在应用端,具有简单、入门要求低、部署方便等优点。

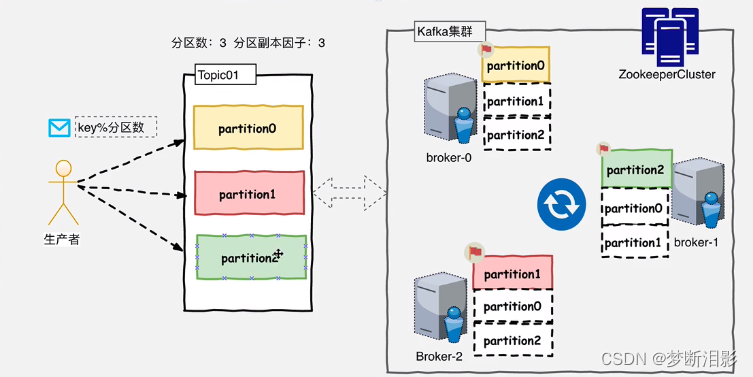

Kafka 基础架构

Kafka集群以Topic形式负责分类集群中的Record每一个Record属于一个Topic。每个Topic底层都会对应一组分区的日志用于持久化Topic中的Record。同时在Kafka集群中,Topic的每一个日志的分区都一定会有1个Borker担当该分区的Leader,其他的Broker担当该分区的follower,Leader负责分区数据的读写操作,follower负责同步改分区的数据。这样如果分区的Leader宕机,该分区的其他follower会选取出新的leader继续负责该分区数据的读写。其中集群的中Leader的监控和Topic的部分元数据是存储在Zookeeper中.

- 生产者

1.Kafka中所有消息是通过Topic为单位进行管理,生产者将数据发布到相应的Topic,然后通过round-robin方式,也可以根据某些语义分区功能(例如基于记录中的Key)进行分发到Partition。

2.每组日志分区是一个有序的不可变的的日志序列,分区中的每一个Record都被分配了唯一的序列编号称为是offset,Kafka 集群会持久化所有发布到Topic中的Record信息,改Record的持久化时间是通过配置文件指定,默认是168小时(log.retention.hours=168),Kafka底层会定期的检查日志文件,然后将过期的数据从log中移除。

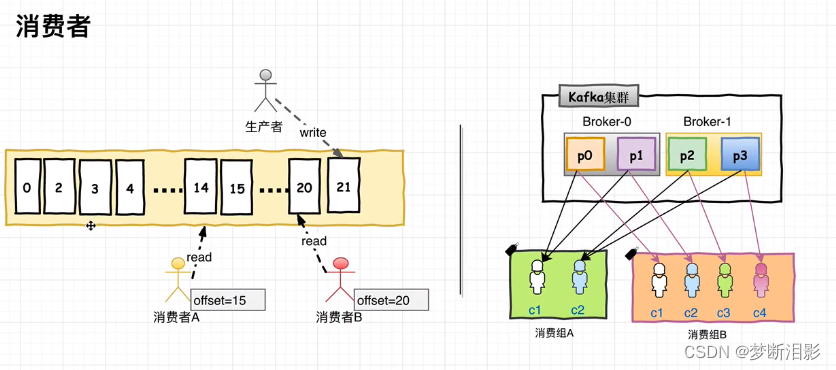

- 消费者

1.在消费者消费Topic中数据的时候,每个消费者会维护本次消费对应分区的偏移量,消费者会在消费完一个批次的数据之后,会将本次消费的偏移量提交给Kafka集群,因此对于每个消费者而言可以随意的控制改消费者的偏移量。因此在Kafka中,消费者可以从一个topic分区中的任意位置读取队列数据,由于每个消费者控制了自己的消费的偏移量,因此多个消费者之间彼此相互独立。

2.消费者使用Consumer Group名称标记自己,Topic会将记录对同一组的Consumer实例进行均分消费;如果所有Consumer实例具有不同的ConsumerGroup,则每条记录将广播到所有Consumer Group进程。

3.Kafka仅提供分区中记录的有序性,不同分区记录之间无顺序,如果需要记录全局有序,则可以通过只有一个分区Topic来实现。

Kafka安装

- 安装JDK1.8以上,配置JAVA_HOME

- jdk下载 https://www.injdk.cn/

- cd /etc/profile

export JAVA_HOME=/usr/local/java/jdk/jdk-11

export PATH=$JAVA_HOME/bin: $PATH

source /etc/profile

- 安装&启动Zookeeper

1.下载官网:https://zookeeper.apache.org/releases.html

2.解压缩,复制配置文件 cp zoo_sample.cfg zoo.cfg,启动zookeeper ./zkServer.sh start zoo.cfg

- 安装kafka

1.下载:https://kafka.apache.org/downloads

2.解压

3.修改配置文件server.properties,1)监听打开:listeners=PLAINTEXT://ip:9092

2)日志地址修改:log.dirs=/usr/local/java/kafka/kafka-logs

3)zk修改zookeeper.connect=ip:2181

4)启动kafka:./bin/kafka-server-start.sh -daemon config/server.properties

- 安装Eagle插件

1.下载:http://download.kafka-eagle.org/

2.配置环境:export KE_HOME=/user/local/xxx

export PATH=$PATH: $JAVA_HOME/bin: $KE_HOME/bin

3.修改配置文件system-config.properties

采用单机:

kafka.eagle.zk.cluster.alias=cluster1

cluster1.zk.list=127.0.0.1:2181

cluster1.kafka.eagle.offset.storage=kafka

kafka.eagle.metrics.charts=true

kafka.eagle.driver=com.mysql.jdbc.Driver

kafka.eagle.url=jdbc:mysql://127.0.0.1:3306/ke?useUnicode=true&characterEncoding=UTF-8&zeroDateTimeBehavior=convertToNull

kafka.eagle.username=root

kafka.eagle.password=root

4.修改kafka 启动文件 kafka-server-start.sh,在KAFKA_HEAP_OPTS中加入export=JMX_HOME=“9999”

5.启动eagle:./bin/ke.sh start

kafka基础API操作

- 增删查操作

public class KafkaConnectionTest {

@Test

public void connection() throws Exception{

//配置连接参数

Properties properties = new Properties();

properties.put(AdminClientConfig.BOOTSTRAP_SERVERS_CONFIG,"127.0.0.1:9092");

//创建连接

KafkaAdminClient client = (KafkaAdminClient) KafkaAdminClient.create(properties);

//获取所有的topic

ListTopicsResult listTopicsResult = client.listTopics();

KafkaFuture<Set<String>> names = listTopicsResult.names();

for (String name : names.get()){

System.out.println(name);

}

//新增topic

client.createTopics(Arrays.asList(new NewTopic("topic01",3, (short) 1)));

//删除topic

client.deleteTopics(Arrays.asList("topic01"));

//获取topic详情

DescribeTopicsResult result = client.describeTopics(Arrays.asList("topic01"));

Map<String, TopicDescription> stringTopicDescriptionMap = result.all().get();

for (Map.Entry<String,TopicDescription> entry:stringTopicDescriptionMap.entrySet()){

System.out.println("key:"+entry.getKey()+"\t"+"value:"+entry.getValue());

}

client.close();

}

}

- 生产者

public class ProduceTest {

@Test

public void producer(){

//构建链接参数

Properties properties = new Properties();

properties.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG,"127.0.0.1:9092");

properties.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, StringSerializer.class.getName());

properties.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG,StringSerializer.class.getName());

//创建生产者

KafkaProducer producer = new KafkaProducer(properties);

for (int i = 0; i < 10; i++) {

ProducerRecord<String,String> record = new ProducerRecord<String,String>("topic01","key"+i,"value"+i);

producer.send(record);

}

producer.close();

}

}

- 消费者

public class ConsumerTest {

@Test

public void consumer() throws Exception{

Properties properties = new Properties();

properties.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG,"127.0.0.1:9092");

properties.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName());

properties.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG,StringDeserializer.class.getName());

properties.put(ConsumerConfig.GROUP_ID_CONFIG,"g1");

KafkaConsumer<String,String> consumer = new KafkaConsumer<String, String>(properties);

consumer.subscribe(Arrays.asList("topic01"));

while (true){

ConsumerRecords<String, String> consumerRecords = consumer.poll(Duration.ofSeconds(1));

Iterator<ConsumerRecord<String, String>> iterator = consumerRecords.iterator();

while (iterator.hasNext()){

ConsumerRecord<String, String> record = iterator.next();

String key = record.key();

String value = record.value();

long offset = record.offset();

int partition = record.partition();

System.out.println("key:"+key+", value:"+value+", offset:"+offset+", partition:"+partition);

}

}

}

}

- 自定义分区

需要去实现Partitioner接口,重写partition方法

public class CustomPartition implements Partitioner {

@Override

public int partition(String s, Object o, byte[] bytes, Object o1, byte[] bytes1, Cluster cluster) {

//处理逻辑,返回自己希望的分区下标

return 0;

}

@Override

public void close() {

}

@Override

public void configure(Map<String, ?> map) {

}

}

public class ProduceTest {

@Test

public void producer(){

//构建链接参数

Properties properties = new Properties();

properties.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG,"127.0.0.1:9092");

properties.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, StringSerializer.class.getName());

properties.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG,StringSerializer.class.getName());

//自定义分区

properties.put(ProducerConfig.PARTITIONER_CLASS_CONFIG,CustomPartition.class.getName());

//创建生产者

KafkaProducer producer = new KafkaProducer(properties);

for (int i = 0; i < 10; i++) {

ProducerRecord<String,String> record = new ProducerRecord<String,String>("topic02","key"+i,"value"+i);

producer.send(record);

}

producer.close();

}

}

- 序列化

- 自定义实体类

@Data

@Accessors(chain = true)

public class Student implements Serializable {

private String name;

private Integer age;

private String gender;

}

- 自定义序列化接口

public class ObjectSerializer implements Serializer {

@Override

public void configure(Map map, boolean b) {

}

@Override

public byte[] serialize(String s, Object data) {

return SerializationUtils.serialize((Serializable) data);

}

@Override

public void close() {

}

}

- 自定义反序列化接口

public class ObjectDeserializer implements Deserializer {

@Override

public void configure(Map map, boolean b) {

}

@Override

public Object deserialize(String s, byte[] bytes) {

return SerializationUtils.deserialize(bytes);

}

@Override

public void close() {

}

}

- 生产者

public class ProduceTest {

@Test

public void producer(){

//构建链接参数

Properties properties = new Properties();

properties.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG,"127.0.0.1:9092");

properties.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, StringSerializer.class.getName());

//自定义序列化

properties.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG,ObjectSerializer.class.getName());

//创建生产者

KafkaProducer<String,Student> producer = new KafkaProducer<String,Student>(properties);

for (int i = 0; i < 10; i++) {

Student student = new Student().setName("第"+i).setAge(i).setGender("男");

ProducerRecord<String,Student> record = new ProducerRecord<String,Student>("topic01","key"+i,student);

producer.send(record);

}

producer.close();

}

}

- 消费者

public class ConsumerTest {

@Test

public void consumer() throws Exception{

Properties properties = new Properties();

properties.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG,"127.0.0.1:9092");

properties.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName());

properties.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG,ObjectDeserializer.class.getName());

properties.put(ConsumerConfig.GROUP_ID_CONFIG,"g1");

KafkaConsumer<String,Student> consumer = new KafkaConsumer<String, Student>(properties);

consumer.subscribe(Arrays.asList("topic01"));

while (true){

ConsumerRecords<String, Student> consumerRecords = consumer.poll(Duration.ofSeconds(1));

Iterator<ConsumerRecord<String, Student>> iterator = consumerRecords.iterator();

while (iterator.hasNext()){

ConsumerRecord<String, Student> record = iterator.next();

String key = record.key();

Student student = record.value();

long offset = record.offset();

int partition = record.partition();

System.out.println("key:"+key+", value:"+student+", offset:"+offset+", partition:"+partition);

}

}

}

}

- 自定义拦截器

public class CustomInterceptor implements ProducerInterceptor {

@Override

public ProducerRecord onSend(ProducerRecord producerRecord) {

//拦截数据,修改

String value = producerRecord.value().toString();

ProducerRecord record = new ProducerRecord(producerRecord.topic(),producerRecord.key(),value+"拦截添加");

return record;

}

@Override

public void onAcknowledgement(RecordMetadata recordMetadata, Exception e) {

}

@Override

public void close() {

}

@Override

public void configure(Map<String, ?> map) {

}

}

public class ProduceTest {

@Test

public void producer(){

//构建链接参数

Properties properties = new Properties();

properties.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG,"127.0.0.1:9092");

properties.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, StringSerializer.class.getName());

properties.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG,StringSerializer.class.getName());

//自定义拦截器

properties.put(ProducerConfig.INTERCEPTOR_CLASSES_CONFIG,CustomInterceptor.class.getName());

//创建生产者

KafkaProducer<String,String> producer = new KafkaProducer<String,String>(properties);

for (int i = 0; i < 10; i++) {

ProducerRecord<String,String> record = new ProducerRecord<String,String>("topic01","key"+i,"value"+i);

producer.send(record);

}

producer.close();

}

}

kafka高级api操作

Offset自动控制

Kafka消费者的默认首次消费策略:latest,auto.offset.reset=latest

1.earliest - 自动将偏移量重置为最早的偏移量(消费者初次订阅topic时,会从topic中最开始的消息开始消费)

2.latest - 自动将偏移量重置为最新的偏移量(消费者初次订阅topic时,topic中之前存在的消息,消费者消费不到)

3.none - 如果未找到消费者组的先前偏移量,则向消费者抛出异常

Kafka消费者在消费数据的时候默认会定期的提交消费的偏移量,这样就可以保证所有的消息至少可以被消费者消费1次,用户可以通过以下两个参数配置:

enable.auto.commit = true 默认

auto.commit.interval.ms = 5000 默认

如果用户需要自己管理offset的自动提交,可以关闭offset的自动提交,手动管理offset提交的偏移量,注意用户提交的offset偏移量永远都要比本次消费的偏移量+1,因为提交的offset是kafka消费者下一次抓取数据的位置。

public class ConsumerTest {

@Test

public void consumer() throws Exception{

Properties properties = new Properties();

properties.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG,"127.0.0.1:9092");

properties.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName());

properties.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG,StringDeserializer.class.getName());

properties.put(ConsumerConfig.GROUP_ID_CONFIG,"g1");

//offset改为手动提交

properties.put(ConsumerConfig.ENABLE_AUTO_COMMIT_CONFIG,false);

KafkaConsumer<String,String> consumer = new KafkaConsumer<String, String>(properties);

consumer.subscribe(Arrays.asList("topic01"));

while (true){

ConsumerRecords<String, String> consumerRecords = consumer.poll(Duration.ofSeconds(1));

Iterator<ConsumerRecord<String, String>> iterator = consumerRecords.iterator();

while (iterator.hasNext()){

ConsumerRecord<String, String> record = iterator.next();

String key = record.key();

String value = record.value();

long offset = record.offset();

int partition = record.partition();

Map<TopicPartition, OffsetAndMetadata> offsets = new HashMap<>();

//提交的偏移量+1

offsets.put(new TopicPartition(record.topic(),record.partition()),new OffsetAndMetadata(offset+1));

consumer.commitAsync(offsets, new OffsetCommitCallback() {

@Override

public void onComplete(Map<TopicPartition, OffsetAndMetadata> map, Exception e) {

if (e ==null){

System.out.println("完成:"+offset+"提交!");

}

}

});

System.out.println("key:"+key+", value:"+value+", offset:"+offset+", partition:"+partition);

}

}

}

}

Acks & Retries

Kafka生产者在发送完一个的消息之后,要求Broker在规定的额时间Ack应答答,如果没有在规定时间内应答,Kafka生产者会尝试n次重新发送消息,acks=1 默认

1.acks=1 - Leader会将Record写到其本地日志中,但会在不等待所有Follower的完全确认的情况下做出响应。在这种情况下,如果Leader在确认记录后立即失败,但在Follower复制记录之前失败,则记录将丢失。

2.acks=0 - 生产者根本不会等待服务器的任何确认。该记录将立即添加到套接字缓冲区中并视为已发送。在这种情况下,不能保证服务器已收到记录。

3.acks=all - 这意味着Leader将等待全套同步副本确认记录。这保证了只要至少一个同步副本仍处于活动状态,记录就不会丢失。这是最有力的保证。这等效于acks = -1设置。

如果生产者在规定的时间内,并没有得到Kafka的Leader的Ack应答,Kafka可以开启reties机制。

request.timeout.ms = 30000 默认

retries = 2147483647 默认

消费者会收到4条相同的消息

public class ProduceTest {

@Test

public void producer(){

//构建链接参数

Properties properties = new Properties();

properties.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG,"127.0.0.1:9092");

properties.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, StringSerializer.class.getName());

properties.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG,StringSerializer.class.getName());

//ack

properties.put(ProducerConfig.ACKS_CONFIG,"-1");

//请求超时时间ms

properties.put(ProducerConfig.REQUEST_TIMEOUT_MS_CONFIG,1);

//重试次数

properties.put(ProducerConfig.RETRIES_CONFIG,3);

//创建生产者

KafkaProducer producer = new KafkaProducer(properties);

String uuid = UUID.randomUUID().toString();

ProducerRecord<String,String> record = new ProducerRecord<String,String>("topic01","key","value:"+uuid);

producer.send(record);

producer.close();

}

}

幂等性

幂等是针对生产者角度的特性。幂等可以保证上生产者发送的消息,不会丢失,而且不会重复。实现幂等的关键点就是服务端可以区分请求是否重复。

Kafka为了实现幂等性,它在底层设计架构中引⼊了ProducerID和SequenceNumber

- ProducerID:在每个新的Producer初始化时,会被分配⼀个唯⼀的ProducerID,这个ProducerID对客户端使⽤者是不可见的。

- SequenceNumber:对于每个ProducerID,Producer发送数据的每个Topic和Partition都对应⼀个从0开始单调递增的SequenceNumber值。

enable.idempotence= false 默认

注意:在使用幂等性的时候,要求必须开启retries和acks=all

public class ProduceTest {

@Test

public void producer(){

//构建链接参数

Properties properties = new Properties();

properties.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG,"127.0.0.1:9092");

properties.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, StringSerializer.class.getName());

properties.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG,StringSerializer.class.getName());

//ack

properties.put(ProducerConfig.ACKS_CONFIG,"-1");

//请求超时时间ms

properties.put(ProducerConfig.REQUEST_TIMEOUT_MS_CONFIG,1);

//重试次数

properties.put(ProducerConfig.RETRIES_CONFIG,3);

//开启幂等性

properties.put(ProducerConfig.ENABLE_IDEMPOTENCE_CONFIG,true);

//创建生产者

KafkaProducer producer = new KafkaProducer(properties);

String uuid = UUID.randomUUID().toString();

ProducerRecord<String,String> record = new ProducerRecord<String,String>("topic01","key","value:"+uuid);

producer.send(record);

producer.close();

}

}

事务控制

Kafka的幂等性,只能保证一条记录的在分区发送的原子性,但是如果要保证多条记录(多分区)之间的完整性,这个时候就需要开启kafk的事务操作。

- 生产者事务Only

- 消费者&生产者事务

生产者开启事务后,消费者需要开启事务隔离级别(read_committed)

isolation.level = read_uncommitted 默认

开启的生产者事务的时候,只需要指定transactional.id属性即可,一旦开启了事务,默认生产者就已经开启了幂等性。但是要求"transactional.id"的取值必须是唯一的,同一时刻只能有一个"transactional.id"存在,其他的将会被关闭。

- 生产者事务Only

public class ProduceTest {

@Test

public void producer(){

//构建链接参数

Properties properties = new Properties();

properties.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG,"127.0.0.1:9092");

properties.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, StringSerializer.class.getName());

properties.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG,StringSerializer.class.getName());

//开启事务

properties.put(ProducerConfig.TRANSACTIONAL_ID_CONFIG,"transaction-id");

//创建生产者

KafkaProducer producer = new KafkaProducer(properties);

//初始化事务

producer.initTransactions();

try{

//开始事务

producer.beginTransaction();

//发送消息

for (int i = 0; i < 10; i++) {

String uuid = UUID.randomUUID().toString();

ProducerRecord<String,String> record = new ProducerRecord<String,String>("topic01","key"+i,"value"+i+":"+uuid);

producer.send(record);

}

//提交事务

producer.commitTransaction();

}catch (Exception e){

e.printStackTrace();

//终止事务

producer.abortTransaction();

}finally {

producer.close();

}

}

}

public class ConsumerTest {

@Test

public void consumer() throws Exception{

Properties properties = new Properties();

properties.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG,"127.0.0.1:9092");

properties.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName());

properties.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG,StringDeserializer.class.getName());

properties.put(ConsumerConfig.GROUP_ID_CONFIG,"group1");

//开启事务隔离级别

properties.put(ConsumerConfig.ISOLATION_LEVEL_CONFIG,"read_committed");

KafkaConsumer<String,String> consumer = new KafkaConsumer<String, String>(properties);

consumer.subscribe(Arrays.asList("topic01"));

while (true){

ConsumerRecords<String, String> consumerRecords = consumer.poll(Duration.ofSeconds(1));

Iterator<ConsumerRecord<String, String>> iterator = consumerRecords.iterator();

while (iterator.hasNext()){

ConsumerRecord<String, String> record = iterator.next();

String key = record.key();

String value = record.value();

long offset = record.offset();

int partition = record.partition();

System.out.println("key:"+key+", value:"+value+", offset:"+offset+", partition:"+partition);

}

}

}

}

- 消费者&生产者事务

public class ConsumerAndProduceTest {

@Test

public void consumer() throws Exception{

KafkaProducer producer = buildProducer();

KafkaConsumer consumer = buildConsumer("group03");

consumer.subscribe(Arrays.asList("topic01"));

//初始化事务

producer.initTransactions();

try{

while (true){

ConsumerRecords<String, String> consumerRecords = consumer.poll(Duration.ofSeconds(1));

Iterator<ConsumerRecord<String, String>> iterator = consumerRecords.iterator();

//开始事务

producer.beginTransaction();

Map<TopicPartition, OffsetAndMetadata> offsets = new HashMap<>();

while (iterator.hasNext()){

ConsumerRecord<String, String> record = iterator.next();

String key = record.key();

String value = record.value();

long offset = record.offset();

int partition = record.partition();

System.out.println("key:"+key+", value:"+value+", offset:"+offset+", partition:"+partition);

//重新构建ProducerRecord

ProducerRecord producerRecord = new ProducerRecord("topic02",record.key(),record.value()+" 消费者&生产者事务");

producer.send(producerRecord);

//记录offset

offsets.put(new TopicPartition(record.topic(),partition),new OffsetAndMetadata(offset+1));

}

//提交事务

producer.sendOffsetsToTransaction(offsets,"group03");

producer.commitTransaction();

}

}catch (Exception e){

e.printStackTrace();

//终止事务

producer.abortTransaction();

}finally {

producer.close();

}

}

public KafkaProducer buildProducer(){

//构建链接参数

Properties properties = new Properties();

properties.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG,"127.0.0.1:9092");

properties.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, StringSerializer.class.getName());

properties.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG,StringSerializer.class.getName());

//开启事务

properties.put(ProducerConfig.TRANSACTIONAL_ID_CONFIG,"transaction-id"+UUID.randomUUID());

//创建生产者

KafkaProducer producer = new KafkaProducer(properties);

return producer;

}

public KafkaConsumer buildConsumer(String group){

Properties properties = new Properties();

properties.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG,"127.0.0.1:9092");

properties.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class.getName());

properties.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG,StringDeserializer.class.getName());

properties.put(ConsumerConfig.GROUP_ID_CONFIG,group);

//开启事务隔离级别

properties.put(ConsumerConfig.ISOLATION_LEVEL_CONFIG,"read_committed");

//创建消费者

KafkaConsumer consumer = new KafkaConsumer(properties);

return consumer;

}

}

kafka集成springboot

- 配置yml

spring:

kafka:

#连接服务器地址

bootstrap-servers: 127.0.0.1:9092

consumer:

#定期提交偏移量ms

auto-commit-interval: 100

#自动将偏移量重置为最早的偏移量

auto-offset-reset: earliest

#offset自动提交

enable-auto-commit: true

#key反序列化

key-deserializer: org.apache.kafka.common.serialization.StringDeserializer

properties:

#隔离级别

isolation:

level: read_committed

#value反序列化

value-deserializer: org.apache.kafka.common.serialization.StringDeserializer

producer:

#ack

acks: all

#批量提交

batch-size: 16384

#内存缓冲

buffer-memory: 33554432

#key序列化

key-serializer: org.apache.kafka.common.serialization.StringSerializer

properties:

#幂等性

enable:

idempotence: true

#自定义拦截器

interceptor:

classes: com.kafka.springbootkafka.interceptor.CustomInterceptor

#自定义分区

partitioner:

classes:

#重试次数

retries: 5

#事务

transaction-id-prefix: transaction-id-

#value序列化

value-serializer: org.apache.kafka.common.serialization.StringSerializer

- 自定义拦截器

public class CustomInterceptor implements ProducerInterceptor {

@Override

public ProducerRecord onSend(ProducerRecord producerRecord) {

producerRecord.headers().add("head","springboot测试拦截".getBytes());

return producerRecord;

}

@Override

public void onAcknowledgement(RecordMetadata recordMetadata, Exception e) {

}

@Override

public void close() {

}

@Override

public void configure(Map<String, ?> map) {

}

}

- service接口

public interface SendMessageService {

void send(String topic,String key,String value);

}

@Service

public class SendMessageServiceImpl implements SendMessageService {

@Autowired

private KafkaTemplate kafkaTemplate;

@Transactional

@Override

public void send(String topic, String key, String value) {

kafkaTemplate.send(new ProducerRecord(topic,key,value));

}

}

- 生产者

@SpringBootTest

@RunWith(SpringRunner.class)

public class ProducerTest {

@Autowired

private KafkaTemplate kafkaTemplate;

@Autowired

private SendMessageService service;

/**

* 发送普通消息

*/

@Test

public void produce(){

Student student = new Student().setName("tony").setAge(10).setGender("男");

kafkaTemplate.send(new ProducerRecord("topic01","springboot", JSON.toJSONString(student)));

}

/**

* 发送事务消息

*/

@Test

public void setTransaction(){

kafkaTemplate.executeInTransaction(new KafkaOperations.OperationsCallback() {

@Override

public Object doInOperations(KafkaOperations kafkaOperations) {

Student student = new Student().setName("tom").setAge(10).setGender("男");

return kafkaOperations.send("topic01","springboot 事务",JSON.toJSONString(student));

}

});

}

/**

* 事务消息(同时支持spring的事务)

*/

@Test

public void setTransaction2(){

Student student = new Student().setName("jack").setAge(20).setGender("女");

service.send("topic01","springboot事务另一种",JSON.toJSONString(student));

}

}

- 消费者

@Component

public class ConsumerTest {

@KafkaListeners(value = @KafkaListener(topics = {"topic01"},groupId = "group01"))

public void consumer(ConsumerRecord<String, String> record){

String key = record.key();

Student student = JSON.parseObject(record.value(), Student.class);

long offset = record.offset();

int partition = record.partition();

Headers headers = record.headers();

System.out.println("key:"+key+", value:"+student+", offset:"+offset+", partition:"+partition+", header:key"+headers);

}

}

1440

1440

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?