http://f.qidian.com/all?size=-1&sign=-1&tag=-1&chanId=-1&subCateId=-1&orderId=&update=-1&page=1&month=-1&style=1&action=-1

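This is the first listing page of the site. Looking at the URL, `page` is the only parameter that changes from one listing page to the next, so I decided to generate the URLs with a `for` loop.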

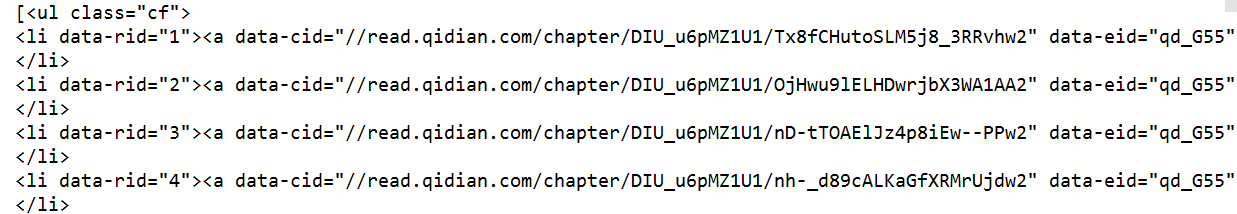
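
The loop over `page` can be sketched roughly as follows. This is a minimal illustration, not the script's exact code: the helper name `listing_urls` and the page range in the usage lines are assumptions; only the URL template comes from the address above.

```python
# URL template taken from the listing address above; only the page number varies.
LISTING_URL = ('http://f.qidian.com/all?size=-1&sign=-1&tag=-1&chanId=-1'
               '&subCateId=-1&orderId=&update=-1&page=%d&month=-1&style=1&action=-1')


def listing_urls(first_page, last_page):
    """Return the listing URLs for first_page..last_page, inclusive (hypothetical helper)."""
    urls = []
    for page in range(first_page, last_page + 1):
        urls.append(LISTING_URL % page)
    return urls


# Example: the first three listing pages.
for url in listing_urls(1, 3):
    print(url)
```

Note that `range` excludes its upper bound, which is why the helper adds 1 to `last_page`.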

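Because this listing covers every novel on the site, my plan was to scrape each novel's title and link first, then follow each link to scrape that novel's chapter titles, chapter links, and chapter text. The biggest problem I ran into was that I did not know how to get the chapter titles and chapter links from a novel's page, that is, how to scrape the text shown below.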

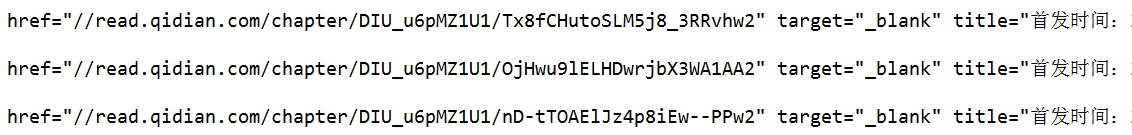

After a lot of searching, I found that all it takes is to write a regular expression and match against it, using `re.finditer`.

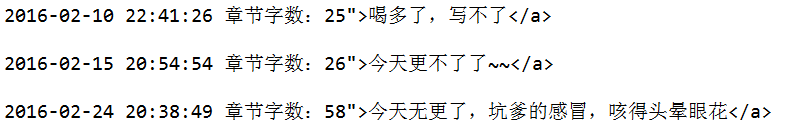
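
To make this concrete: `re.finditer` yields one match object per hit, and `group(1)`, `group(2)` return the captured pieces. The sketch below runs on a made-up HTML fragment; the sample markup, the simplified pattern, and the helper name `extract_chapters` are assumptions for illustration, not Qidian's actual page source.

```python
import re

# Made-up chapter list, loosely modeled on the kind of markup described above.
SAMPLE_HTML = u'''
<ul class="cf">
<li><a data-cid="//example.com/chapter/1" href="#" target="_blank">Chapter 1</a></li>
<li><a data-cid="//example.com/chapter/2" href="#" target="_blank">Chapter 2</a></li>
</ul>
'''

# Group 1 captures the chapter address, group 2 the chapter title.
CHAPTER_RE = re.compile(u'<a data-cid="(.*?)" href=".*?" target="_blank">(.*?)</a>')


def extract_chapters(html):
    """Return (url, title) pairs for every chapter link found in html."""
    chapters = []
    for match in CHAPTER_RE.finditer(html):
        chapters.append(('http:' + match.group(1), match.group(2)))
    return chapters


for url, title in extract_chapters(SAMPLE_HTML):
    print(url, title)
```

Compared with `re.findall`, the match objects also expose positions (`match.start()`, `match.end()`), which can help when debugging a pattern that matches too much.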

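    # (1) Match every chapter link: group 1 is the chapter address, group 2 the chapter title
    reg = re.finditer(r'<a data-cid="(.*?)" data-eid="qd_G55" href=".*?" target="_blank" title="首发时间:.*?章节字数:.*?">(.*?)</a>',tent)

    # (2) Prepend the scheme to each novel link
    for i in url2:
        url2 = 'http:' + i

    # (3) Build each chapter's URL and read its title from the match
    for i in reg:
        # print i.group(1)
        page_url = "http:"+ i.group(1)
        page_name = i.group(2)

    # (4) Extract the chapter text, then append title and text to the novel's file
    content7 = soup.find("div", class_="read-content j_readContent").get_text('\n').encode('utf-8')
    with open(name + '.txt','a') as f:
        f.write(page_name + '\n' + content7 + '\n')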
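
The file-writing step can also be done without a manual `.encode('utf-8')` by opening the file with an explicit encoding. A minimal sketch, assuming the title and text are already Unicode strings; `append_chapter` and the file name in the usage lines are hypothetical.

```python
import io
import os
import tempfile


def append_chapter(path, title, text):
    """Append one chapter (title line, then body) to a UTF-8 text file."""
    # Mode 'a' appends, so successive chapters of one novel accumulate in one file.
    with io.open(path, 'a', encoding='utf-8') as f:
        f.write(title + u'\n' + text + u'\n')


# Usage with made-up data, written to a temporary directory.
demo_path = os.path.join(tempfile.mkdtemp(), u'demo_novel.txt')
append_chapter(demo_path, u'Chapter 1', u'First paragraph.\nSecond paragraph.')
append_chapter(demo_path, u'Chapter 2', u'More text.')
```

Because the file is opened in append mode, rerunning a crawl adds the chapters again; deleting the old file first avoids duplicates.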

The complete script:

    # -*- coding:utf-8 -*-
    from bs4 import BeautifulSoup
    import urllib2
    import re
    import time

    # range(1, 2) yields only page 1; widen the range to crawl more listing pages
    for p in range(1,2):
        url = 'http://f.qidian.com/all?size=-1&sign=-1&tag=-1&chanId=-1&subCateId=-1&orderId=&update=-1&page=%d&month=-1&style=1&action=-1' % p
        user_agent = "Mozilla/5.0 (Windows NT 6.1; Win64; x64; rv:49.0) Gecko/20100101 Firefox/49.0"
        headers = {'User-Agent': user_agent}
        response = urllib2.Request(url, headers=headers)
        html = urllib2.urlopen(response).read()
        soup = BeautifulSoup(html, 'html.parser', from_encoding='utf-8')
        # Each <h4> in the book list holds one novel's title and link
        content1 = soup.find("div",class_="all-book-list").find_all('h4')
        for k in content1:
            name = k.find('a').get_text(strip=True)
            print name
            k = str(k)
            url2 = re.findall(r'.*?<a.*?href="(.*?)".*?>', k)
            for i in url2:
                # Fetch the novel's own page, which lists its chapters
                url2 = 'http:' + i
                #print url2
                user_agent = "Mozilla/5.0 (Windows NT 6.1; Win64; x64; rv:49.0) Gecko/20100101 Firefox/49.0"
                headers = {'User-Agent': user_agent}
                response = urllib2.Request(url2, headers=headers)
                html3 = urllib2.urlopen(response).read()
                soup = BeautifulSoup(html3, 'html.parser')
                tent = soup.find_all("ul",class_="cf")
                tent = str(tent)
                # Group 1 is the chapter address, group 2 the chapter title
                reg = re.finditer(r'<a data-cid="(.*?)" data-eid="qd_G55" href=".*?" target="_blank" title="首发时间:.*?章节字数:.*?">(.*?)</a>',tent)
                for i in reg:
                    #print i.group(1)
                    page_url = "http:"+ i.group(1)
                    page_name = i.group(2)
                    print page_name
                    user_agent = "Mozilla/5.0 (Windows NT 6.1; Win64; x64; rv:49.0) Gecko/20100101 Firefox/49.0"
                    headers = {'User-Agent': user_agent}
                    response = urllib2.Request(page_url, headers=headers)
                    html4 = urllib2.urlopen(response).read()
                    soup = BeautifulSoup(html4, 'html.parser', from_encoding='utf-8')
                    content7 = soup.find("div", class_="read-content j_readContent").get_text('\n').encode('utf-8')
                    # Append the chapter to a text file named after the novel
                    with open(name + '.txt','a') as f:
                        f.write(page_name + '\n' + content7 + '\n')
                    print 'OK1'
                    # Pause between chapter requests
                    time.sleep(1)

Of course, this only crawls a single listing page.
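
Every request in the script sends the same browser-like `User-Agent` header. When extending the crawl to more pages, one way to keep that setup in one place is a small helper. The sketch below is written for Python 3's `urllib.request`, whereas the script uses Python 2's `urllib2`; `build_request` and `fetch` are hypothetical names, and `fetch` is not called here because it needs network access.

```python
import time
import urllib.request

USER_AGENT = ("Mozilla/5.0 (Windows NT 6.1; Win64; x64; rv:49.0) "
              "Gecko/20100101 Firefox/49.0")


def build_request(url):
    """Create a Request carrying the User-Agent used throughout the script."""
    return urllib.request.Request(url, headers={'User-Agent': USER_AGENT})


def fetch(url, delay=1.0):
    """Download url and return the raw bytes, then pause for `delay` seconds."""
    with urllib.request.urlopen(build_request(url)) as response:
        html = response.read()
    time.sleep(delay)
    return html


# Building the request does not touch the network, so it is safe to inspect.
req = build_request('http://f.qidian.com/all?size=-1&sign=-1&tag=-1&chanId=-1'
                    '&subCateId=-1&orderId=&update=-1&page=2&month=-1&style=1&action=-1')
print(req.full_url)
```

Putting the delay inside `fetch` means every request is throttled, not only the chapter downloads.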
