The tricky part

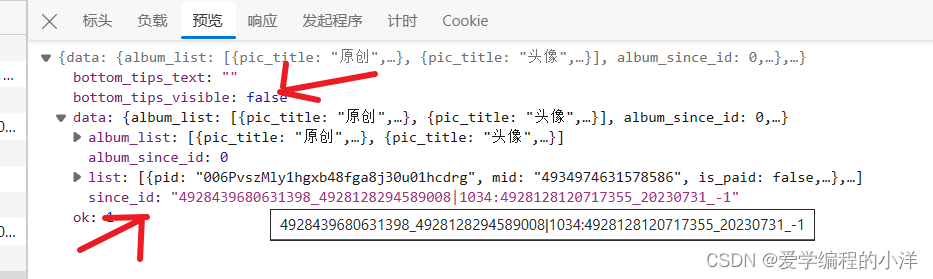

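The main challenge is working out how pagination works, which we do by capturing and analyzing the network traffic. The captured requests show that every response returned when fetching photos contains a `since_id` and a `bottom_tips_visible` field.

When the request returns the last page of data, `since_id` is 0 and `bottom_tips_visible` is `true`.

With this, fetching the data is straightforward: the first request sends `since_id` = 0, each following request sends the `since_id` returned by the previous one, and crawling stops once the last page is reached.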
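The pagination rule above amounts to a cursor loop. A minimal sketch, assuming a hypothetical `fetch_page(since_id)` callable that returns one decoded page shaped like the responses read in this article (`data.since_id` plus a top-level `bottom_tips_visible`), or `None` when the request fails:

```python
from typing import Any, Callable, Iterator, Optional


def iter_pages(fetch_page: Callable[[Any], Optional[dict]]) -> Iterator[dict]:
    """Yield pages from a since_id-cursor API until the last page is reached.

    fetch_page is a hypothetical callable standing in for the real HTTP request.
    """
    since_id = 0  # the first request uses since_id = 0
    while True:
        page = fetch_page(since_id)
        if page is None:  # request failed: stop instead of looping forever
            return
        yield page
        since_id = page['data']['since_id']  # cursor for the next request
        # Last page: bottom_tips_visible is true or since_id comes back as 0
        if page.get('bottom_tips_visible') is True or str(since_id) == '0':
            return
```

For example, with a dict of two fake pages keyed by cursor, `list(iter_pages(fake_pages.get))` yields both pages and stops after the second one, whose `since_id` is 0. Keeping the stop rule in one place means the download code never has to know about cursors.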

Fetching the data
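`get_page_info` sends `uid` and `sinceid` as query parameters of the `getImageWall` endpoint, and requests encodes the `params` dict into the query string. As a stdlib-only illustration (the helper name `build_image_wall_url` is hypothetical, and `urlencode` is used here as a stand-in for the encoding requests performs), the requested URL for a page looks like this, which is handy for checking a page by hand in a logged-in browser:

```python
from urllib.parse import urlencode


def build_image_wall_url(uid: str, since_id) -> str:
    """Return the getImageWall URL for one page (hypothetical helper)."""
    base = 'https://weibo.com/ajax/profile/getImageWall'
    # Same parameter names as the params dict in get_page_info
    return base + '?' + urlencode({'uid': uid, 'sinceid': since_id})
```

For instance, `build_image_wall_url('5252751519', 0)` gives `https://weibo.com/ajax/profile/getImageWall?uid=5252751519&sinceid=0`.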

```python
import requests


def get_page_info(username, since_id):
    """Request one page of a user's image wall; return the parsed JSON, or None on failure."""
    url = 'https://weibo.com/ajax/profile/getImageWall'
    headers = {
        # Paste the Cookie of your own logged-in weibo.com session here
        'Cookie': 'YOUR_COOKIE_HERE',
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/115.0.0.0 Safari/537.36 Edg/115.0.1901.203',
        'Referer': 'https://weibo.com/',
    }
    params = {
        'uid': username,      # target user's id
        'sinceid': since_id,  # pagination cursor; 0 for the first page
    }
    try:
        res = requests.get(url=url, headers=headers, params=params, timeout=10)
        if res.status_code == 200:
            return res.json()
        return None
    except requests.exceptions.RequestException as e:
        print(e)
        return None
```

Parsing the data
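The core of `parse_json_data` is mapping each entry's `pid` to a download URL by prepending `https://wx2.sinaimg.cn/webp720/` and appending `.jpg`. Isolated as a pure function (the name `image_urls` is hypothetical, and any sample page you feed it only needs the `data.list[*].pid` field the article reads), that step can be checked without network access:

```python
from typing import List, Tuple


def image_urls(json_data: dict,
               prefix: str = 'https://wx2.sinaimg.cn/webp720/') -> List[Tuple[str, str]]:
    """Return (pid, url) pairs for every image in one getImageWall page."""
    return [(img['pid'], prefix + img['pid'] + '.jpg')
            for img in json_data['data']['list']]
```

For a page whose list holds a single entry with `pid` `abc123`, this returns `[('abc123', 'https://wx2.sinaimg.cn/webp720/abc123.jpg')]`.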

```python
import os

import requests


def parse_json_data(json_data, username):
    """Download every image on one page; return (bottom_tips_visible, since_id)."""
    headers = {
        'Cookie': 'YOUR_COOKIE_HERE',  # same Cookie as in get_page_info
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/115.0.0.0 Safari/537.36 Edg/115.0.1901.203',
        'Referer': 'https://weibo.com/',
    }
    img_prefix_url = 'https://wx2.sinaimg.cn/webp720/'
    # Save to imgs/<uid>/ relative to the working directory; makedirs also
    # creates imgs/ itself and does nothing if the folder already exists
    save_dir = os.path.join('imgs', str(username))
    os.makedirs(save_dir, exist_ok=True)
    try:
        for img in json_data['data']['list']:
            img_name = img['pid']  # each image is identified by its pid
            img_url = img_prefix_url + img_name + '.jpg'
            img_content = requests.get(url=img_url, headers=headers, timeout=10).content
            with open(os.path.join(save_dir, img_name + '.jpg'), mode='wb') as f:
                f.write(img_content)
            print(f'{img_name} downloaded')
        return json_data['bottom_tips_visible'], json_data['data']['since_id']
    except Exception as e:
        print(e)
        # On any failure (including json_data being None) report "last page"
        # so that main() can still unpack the result and stop cleanly
        return True, 0
```

Main function

def main(username):

# username = '5252751519'

# username = '2150318380'

since_id = 0

while True:

json_data = get_page_info(username=username, since_id=since_id)

is_stop, since_id = parse_json_data(json_data=json_data, username=username)

print(f'当前状态:{is_stop},since_id:{since_id}')

if str(is_stop) == 'True' or str(since_id) == '0':

print("已经到底了...")

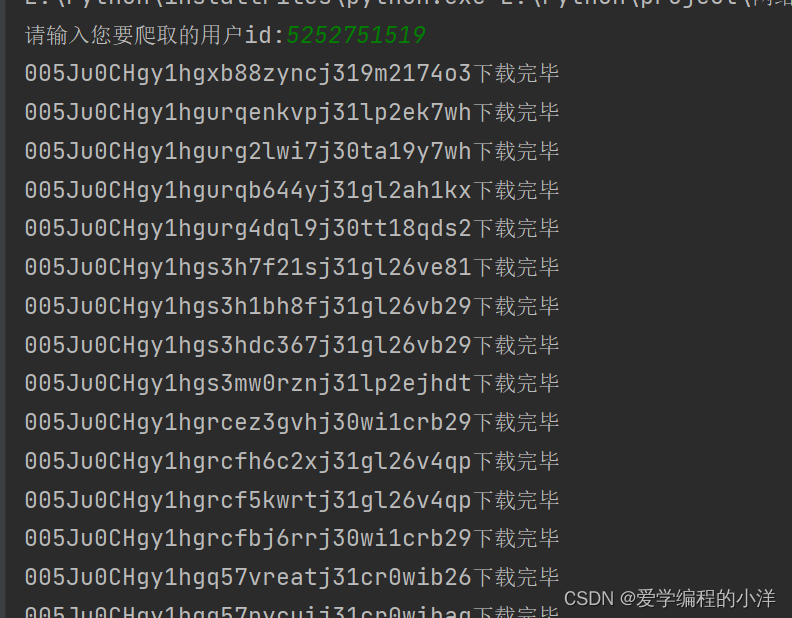

break效果展示

Complete code

```python
import os

import requests


def get_page_info(username, since_id):
    """Request one page of a user's image wall; return the parsed JSON, or None on failure."""
    url = 'https://weibo.com/ajax/profile/getImageWall'
    headers = {
        # Paste the Cookie of your own logged-in weibo.com session here
        'Cookie': 'YOUR_COOKIE_HERE',
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/115.0.0.0 Safari/537.36 Edg/115.0.1901.203',
        'Referer': 'https://weibo.com/',
    }
    params = {
        'uid': username,      # target user's id
        'sinceid': since_id,  # pagination cursor; 0 for the first page
    }
    try:
        res = requests.get(url=url, headers=headers, params=params, timeout=10)
        if res.status_code == 200:
            return res.json()
        return None
    except requests.exceptions.RequestException as e:
        print(e)
        return None


def parse_json_data(json_data, username):
    """Download every image on one page; return (bottom_tips_visible, since_id)."""
    headers = {
        'Cookie': 'YOUR_COOKIE_HERE',  # same Cookie as in get_page_info
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/115.0.0.0 Safari/537.36 Edg/115.0.1901.203',
        'Referer': 'https://weibo.com/',
    }
    img_prefix_url = 'https://wx2.sinaimg.cn/webp720/'
    # Save to imgs/<uid>/ relative to the working directory; makedirs also
    # creates imgs/ itself and does nothing if the folder already exists
    save_dir = os.path.join('imgs', str(username))
    os.makedirs(save_dir, exist_ok=True)
    try:
        for img in json_data['data']['list']:
            img_name = img['pid']  # each image is identified by its pid
            img_url = img_prefix_url + img_name + '.jpg'
            img_content = requests.get(url=img_url, headers=headers, timeout=10).content
            with open(os.path.join(save_dir, img_name + '.jpg'), mode='wb') as f:
                f.write(img_content)
            print(f'{img_name} downloaded')
        return json_data['bottom_tips_visible'], json_data['data']['since_id']
    except Exception as e:
        print(e)
        # On any failure (including json_data being None) report "last page"
        # so that main() can still unpack the result and stop cleanly
        return True, 0


def main(username):
    # Example user ids: '5252751519', '2150318380'
    since_id = 0  # the first request uses since_id = 0
    while True:
        json_data = get_page_info(username=username, since_id=since_id)
        is_stop, since_id = parse_json_data(json_data=json_data, username=username)
        print(f'Status: {is_stop}, since_id: {since_id}')
        # Last page: bottom_tips_visible is true or since_id comes back as 0
        if str(is_stop) == 'True' or str(since_id) == '0':
            print('Reached the end...')
            break


if __name__ == '__main__':
    username = input('Enter the id of the user to scrape: ')
    main(username=username)
```
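Writing each image only needs one folder per user and one file per `pid`. A minimal pathlib sketch of that step, with the helper name `save_image` and its `root` parameter as illustrative assumptions, builds the path once so that the folder that gets created and the file that gets written can never point at different places:

```python
from pathlib import Path


def save_image(content: bytes, username: str, pid: str, root: str = 'imgs') -> Path:
    """Write one image to <root>/<username>/<pid>.jpg and return its path (hypothetical helper)."""
    folder = Path(root) / str(username)
    # parents=True also creates <root>; exist_ok=True makes repeated calls safe
    folder.mkdir(parents=True, exist_ok=True)
    path = folder / f'{pid}.jpg'
    path.write_bytes(content)
    return path
```

Inside the download loop this would replace the `open(...)`/`write` pair, e.g. `save_image(img_content, username, img['pid'])`.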
