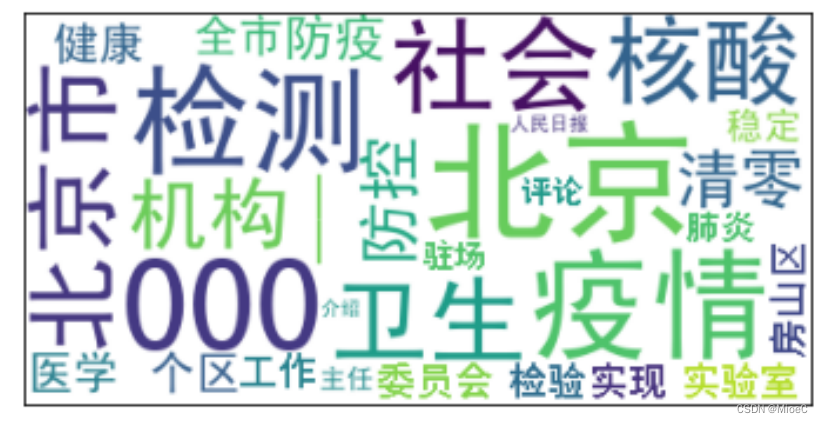

今天刷新闻的时候,想到能不能有个软件可以快速帮我总结新闻的要点,我直接通过关键字就能大概浏览文章的内容。说干就干

# -*- coding: utf-8 -*-

import jieba

from sklearn.feature_extraction.text import TfidfVectorizer

from wordcloud import WordCloud

import matplotlib.pyplot as plt

import re

import requests

from bs4 import BeautifulSoup

def model(s):

tf = TfidfVectorizer(stop_words=None, token_pattern='(?u)\\b\\w\\w+\\b', max_features=30)

weight = tf.fit_transform(s).toarray()

word = tf.get_feature_names()

vocab = tf.vocabulary_.items()

vocab = sorted(vocab, key= lambda v: v)

dir = {}

print(weight)

for i in range(len(weight)):

for j in range(len(word)):

dir[word[j]] = weight[i][j]

print(dir)

return dir

def showImg(weight):

wordClould = WordCloud(font_path='./SimHei.ttf', background_color='white', max_font_size=70)

wordClould.fit_words(weight)

plt.imshow(wordClould)

plt.xticks([])

plt.yticks([])

plt.show()

def load_text():

article = load_url_text()

#with open('./article.txt', encoding= "utf-8") as f:

#article = f.read()

article = article.strip()

article = re.sub("[1-9\,\。\:\.\、\·\—\“\”]\\n","", article)

seg_list = jieba.cut(article, cut_all = False)

arr = []

tmp = ' '

for i in seg_list:

arr.append(i)

tmp = tmp.join(arr)

return tmp

def load_url_text():

headers = {

"cookie":"s_v_web_id=verify_l3paknge_fo4iQ6FK_Sl6o_4gXi_Bq1M_QsNhnzjQHYHr; local_city_cache=%E5%8E%A6%E9%97%A8; csrftoken=50ebce478fdb72f8e18eff8fdeaf6727; _tea_utm_cache_24=undefined; tt_webid=7091827241689515551; MONITOR_WEB_ID=2897349e-681b-4319-9b25-f56e05444364; ttwid=1%7CkKdmscTcdpXdUa43CSJOJKkbwJHkrqqnvLSfqmnr92Y%7C1653720989%7C4a2c329e498e864641ddb482670e608110c9461cc101bcdb46a10a586db340be; tt_scid=-NPtai0Iso3HxyvBeDq1HsEGXtheapKHGt1n1xxQOs41rJHZ4Cba1FZTcbRZm8PN2fbb",

"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/54.0.2840.99 Safari/537.36"}

url = "https://www.toutiao.com/article/7102612270106083881/?log_from=96bbf32ce5e7c_1653720623948"

html_text = requests.get(url, headers = headers)

html_text.encoding='utf-8'

result = re.sub('<[^<]+?>', '', html_text.text).replace('\n', '').strip()

result = re.sub('[0-9A-Za-z\,\。\:\.\、\·\—\“\”\!\&\!\%\\]\\[\{\}\_]', "", result)

print(result)

return result

if __name__ == '__main__':

tmp = load_text()

weight = model([tmp])

showImg(weight)

830

830

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?