DbUpdaterJob是比较关键的一个任务,它负责将上一步由种子url解析出来的outlink urls更新到数据库中,以便于以后下一轮的抓取。相当于承担了一个开枝散叶的责任,所谓“不孝有三,无后为大”……

首先:

package org.apache.nutch.crawl;还是先从job开始看起。

Job

currentJob = new NutchJob(getConf(), "update-table");

if (crawlId != null) {

currentJob.getConfiguration().set(Nutch.CRAWL_ID_KEY, crawlId);

}

// Partition by {url}, sort by {url,score} and group by {url}.

// This ensures that the inlinks are sorted by score when they enter

// the reducer.

currentJob.setPartitionerClass(UrlOnlyPartitioner.class);

currentJob.setSortComparatorClass(UrlScoreComparator.class);

currentJob.setGroupingComparatorClass(UrlOnlyComparator.class);

MapFieldValueFilter<String, WebPage> batchIdFilter = getBatchIdFilter(batchId);

StorageUtils.initMapperJob(currentJob, fields, UrlWithScore.class,

NutchWritable.class, DbUpdateMapper.class, batchIdFilter);

StorageUtils.initReducerJob(currentJob, DbUpdateReducer.class);

currentJob.waitForCompletion(true);中间的那段英文注释已经说得很清楚了,根据url来划分,根据url和分值来排序,根据url来分组。一目了然。

然后,可以看出map的输出为<UrlWithScore, NutchWritable>。肯定得看看这两究竟是何方神圣。

先看NutchWritable。

NutchWritable

public class NutchWritable extends GenericWritableConfigurable {

private static Class<? extends Writable>[] CLASSES = null;

static {

CLASSES = (Class<? extends Writable>[]) new Class<?>[] {

org.apache.nutch.scoring.ScoreDatum.class,

org.apache.nutch.util.WebPageWritable.class };

}

public NutchWritable() {

}

public NutchWritable(Writable instance) {

set(instance);

}

@Override

protected Class<? extends Writable>[] getTypes() {

return CLASSES;

}

}短短几行代码却道出了真正的目的。其实就是相当于更上一层的some sort of “raw type”,用来装两种数据类型,ScoreDatum和WebPageWritable。大家往后看就明白了。

UrlWithScore

public final class UrlWithScore implements WritableComparable<UrlWithScore> {

private static final Comparator<UrlWithScore> comp = new UrlScoreComparator();

private Text url;

private FloatWritable score;

@Override

public int compareTo(UrlWithScore other) {

return comp.compare(this, other);

}

/**

* A partitioner by {url}.

*/

public static final class UrlOnlyPartitioner extends

Partitioner<UrlWithScore, NutchWritable> {

@Override

public int getPartition(UrlWithScore key, NutchWritable val, int reduces) {

return (key.url.hashCode() & Integer.MAX_VALUE) % reduces;

}

}

/**

* Compares by {url,score}. Scores are sorted in descending order, that is

* from high scores to low.

*/

public static final class UrlScoreComparator implements

RawComparator<UrlWithScore> {

private final WritableComparator textComp = new Text.Comparator();

private final WritableComparator floatComp = new FloatWritable.Comparator();

@Override

public int compare(UrlWithScore o1, UrlWithScore o2) {

int cmp = o1.getUrl().compareTo(o2.getUrl());

if (cmp != 0) {

return cmp;

}

// reverse order

return -o1.getScore().compareTo(o2.getScore());

}

@Override

public int compare(byte[] b1, int s1, int l1, byte[] b2, int s2, int l2) {

try {

int deptLen1 = WritableUtils.decodeVIntSize(b1[s1])

+ WritableComparator.readVInt(b1, s1);

int deptLen2 = WritableUtils.decodeVIntSize(b2[s2])

+ WritableComparator.readVInt(b2, s2);

int cmp = textComp.compare(b1, s1, deptLen1, b2, s2, deptLen2);

if (cmp != 0) {

return cmp;

}

// reverse order

return -floatComp.compare(b1, s1 + deptLen1, l1 - deptLen1, b2, s2

+ deptLen2, l2 - deptLen2);

} catch (IOException e) {

throw new IllegalArgumentException(e);

}

}

/**

* Compares by {url}.

*/

public static final class UrlOnlyComparator implements

RawComparator<UrlWithScore> {

private final WritableComparator textComp = new Text.Comparator();

@Override

public int compare(UrlWithScore o1, UrlWithScore o2) {

return o1.getUrl().compareTo(o2.getUrl());

}

@Override

public int compare(byte[] b1, int s1, int l1, byte[] b2, int s2, int l2) {

try {

int deptLen1 = WritableUtils.decodeVIntSize(b1[s1])

+ WritableComparator.readVInt(b1, s1);

int deptLen2 = WritableUtils.decodeVIntSize(b2[s2])

+ WritableComparator.readVInt(b2, s2);

return textComp.compare(b1, s1, deptLen1, b2, s2, deptLen2);

} catch (IOException e) {

throw new IllegalArgumentException(e);

}

}

}

}

}乍一看,就只有url和score两个成员变量。但是后面却大有乾坤。分别是一开始英文注释里所指的那三个类,用来partation,sort,group。这三个类挺简单的,没什么好说的。

DbUpdateMapper

public class DbUpdateMapper extends

GoraMapper<String, WebPage, UrlWithScore, NutchWritable> {

private ScoringFilters scoringFilters;

private final List<ScoreDatum> scoreData = new ArrayList<ScoreDatum>();

private Utf8 batchId;

// reuse writables

private UrlWithScore urlWithScore = new UrlWithScore();

private NutchWritable nutchWritable = new NutchWritable();

private WebPageWritable pageWritable;

@Override

public void map(String key, WebPage page, Context context)

throws IOException, InterruptedException {

if (Mark.GENERATE_MARK.checkMark(page) == null) { /**跳过未generate的Url*/

return;

}

String url = TableUtil.unreverseUrl(key); /**得到正常的Url*/

scoreData.clear(); /**清空此list*/

Map<CharSequence, CharSequence> outlinks = page.getOutlinks();

if (outlinks != null) { /**!!!ATTENTION 以后用到的url都是从这里来的*/

for (Entry<CharSequence, CharSequence> e : outlinks.entrySet()) {

int depth = Integer.MAX_VALUE;

CharSequence depthUtf8 = page.getMarkers().get(DbUpdaterJob.DISTANCE);

if (depthUtf8 != null) /**距离种子Url的距离*/

depth = Integer.parseInt(depthUtf8.toString());

scoreData.add(new ScoreDatum(0.0f, e.getKey().toString(), e.getValue()

.toString(), depth));

}

}

// TODO: Outlink filtering (i.e. "only keep the first n outlinks")

try {

scoringFilters.distributeScoreToOutlinks(url, page, scoreData,

(outlinks == null ? 0 : outlinks.size()));

} catch (ScoringFilterException e) {

LOG.warn("Distributing score failed for URL: " + key + " exception:"

+ StringUtils.stringifyException(e));

}

urlWithScore.setUrl(key);

urlWithScore.setScore(Float.MAX_VALUE);

pageWritable.setWebPage(page);

nutchWritable.set(pageWritable);

context.write(urlWithScore, nutchWritable); /**输出<urlWithcore, page>*/

for (ScoreDatum scoreDatum : scoreData) {

String reversedOut = TableUtil.reverseUrl(scoreDatum.getUrl());

scoreDatum.setUrl(url);

urlWithScore.setUrl(reversedOut);

urlWithScore.setScore(scoreDatum.getScore());

nutchWritable.set(scoreDatum);

context.write(urlWithScore, nutchWritable); /**输出<urlWithcore, scoreDatum>*/

}

}

@Override

public void setup(Context context) {

scoringFilters = new ScoringFilters(context.getConfiguration());

pageWritable = new WebPageWritable(context.getConfiguration(), null);

batchId = new Utf8(context.getConfiguration().get(Nutch.BATCH_NAME_KEY,

Nutch.ALL_BATCH_ID_STR));

}

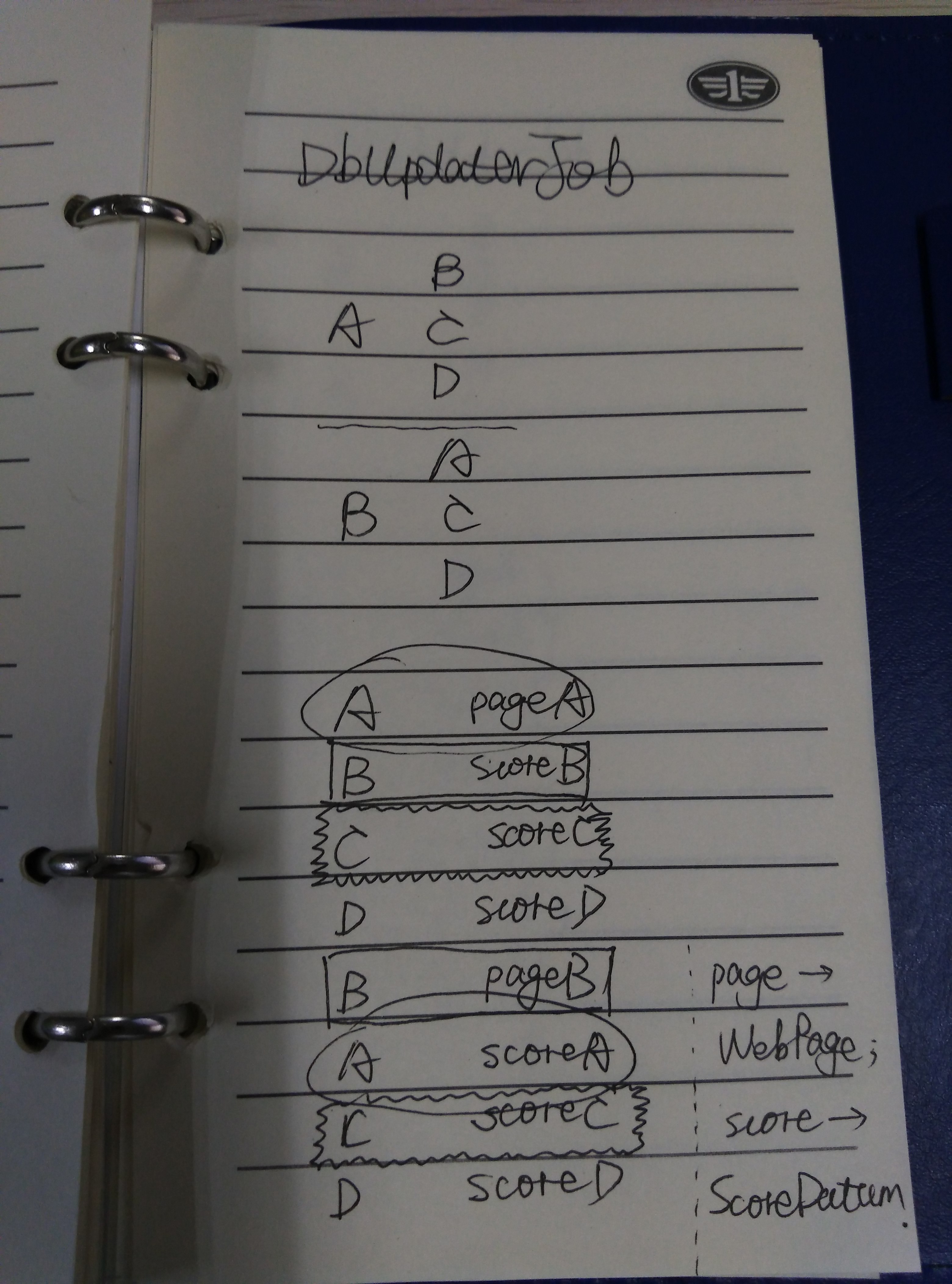

}下面会有一张图来给大家说明该工作流程的。

DbUpdateReducer

public class DbUpdateReducer extends

GoraReducer<UrlWithScore, NutchWritable, String, WebPage> {

public static final String CRAWLDB_ADDITIONS_ALLOWED = "db.update.additions.allowed";

public static final Logger LOG = DbUpdaterJob.LOG;

private int retryMax; /**最大可重试的次数*/

private boolean additionsAllowed; /**如果为真,则updatedb可以增加新发现的Url*/

private int maxInterval; /**距离上一次fetch的间隔时间,过了这个时间每一个在db中的页面都会被重新fetch*/

private FetchSchedule schedule; /**操控fetch的时间和重新fetch的间隔*/

private ScoringFilters scoringFilters; /**得分的插件*/

private List<ScoreDatum> inlinkedScoreData = new ArrayList<ScoreDatum>();

private int maxLinks; /**当更新一个Url的得分时,考虑的最大的inlink的数目*/

@Override

protected void setup(Context context) throws IOException,

InterruptedException {

Configuration conf = context.getConfiguration();

retryMax = conf.getInt("db.fetch.retry.max", 3);

additionsAllowed = conf.getBoolean(CRAWLDB_ADDITIONS_ALLOWED, true);

maxInterval = conf.getInt("db.fetch.interval.max", 0);

schedule = FetchScheduleFactory.getFetchSchedule(conf);

scoringFilters = new ScoringFilters(conf);

maxLinks = conf.getInt("db.update.max.inlinks", 10000);

}

@Override

protected void reduce(UrlWithScore key, Iterable<NutchWritable> values,

Context context) throws IOException, InterruptedException {

String keyUrl = key.getUrl().toString();

WebPage page = null;

inlinkedScoreData.clear(); /**清空这个list*/

for (NutchWritable nutchWritable : values) {

Writable val = nutchWritable.get();

if (val instanceof WebPageWritable) { /**获得混在其中的webPage*/

page = ((WebPageWritable) val).getWebPage();

} else { /**获得其中的scoreDatum*/

inlinkedScoreData.add((ScoreDatum) val);

if (inlinkedScoreData.size() >= maxLinks) { /**注意只要得分前maxLinks的scoreDatum*/

LOG.info("Limit reached, skipping further inlinks for " + keyUrl);

break;

}

}

}

String url;

try {

url = TableUtil.unreverseUrl(keyUrl);

} catch (Exception e) {

// this can happen because a newly discovered malformed link

// may slip by url filters

// TODO: Find a better solution

return;

}

if (page == null) { // new row

if (!additionsAllowed) {

return;

}

page = WebPage.newBuilder().build();

schedule.initializeSchedule(url, page);

page.setStatus((int) CrawlStatus.STATUS_UNFETCHED);

try {

scoringFilters.initialScore(url, page);

} catch (ScoringFilterException e) {

page.setScore(0.0f);

}

} else {

byte status = page.getStatus().byteValue();

switch (status) {

case CrawlStatus.STATUS_FETCHED: // succesful fetch

case CrawlStatus.STATUS_REDIR_TEMP: // successful fetch, redirected

case CrawlStatus.STATUS_REDIR_PERM:

case CrawlStatus.STATUS_NOTMODIFIED: // successful fetch, notmodified

int modified = FetchSchedule.STATUS_UNKNOWN;

if (status == CrawlStatus.STATUS_NOTMODIFIED) {

modified = FetchSchedule.STATUS_NOTMODIFIED;

}

ByteBuffer prevSig = page.getPrevSignature();

ByteBuffer signature = page.getSignature();

if (prevSig != null && signature != null) {

if (SignatureComparator.compare(prevSig, signature) != 0) {

modified = FetchSchedule.STATUS_MODIFIED;

} else {

modified = FetchSchedule.STATUS_NOTMODIFIED;

}

}

long fetchTime = page.getFetchTime();

long prevFetchTime = page.getPrevFetchTime();

long modifiedTime = page.getModifiedTime();

long prevModifiedTime = page.getPrevModifiedTime();

CharSequence lastModified = page.getHeaders().get(

new Utf8("Last-Modified"));

if (lastModified != null) {

try {

modifiedTime = HttpDateFormat.toLong(lastModified.toString());

prevModifiedTime = page.getModifiedTime();

} catch (Exception e) {

}

}

schedule.setFetchSchedule(url, page, prevFetchTime, prevModifiedTime,

fetchTime, modifiedTime, modified);

/**对于一个成功fetch的页面,设置其fetchTime和fetch间隔 */

if (maxInterval < page.getFetchInterval()) /**强制再次fetch*/

schedule.forceRefetch(url, page, false); /**不用asap*/

break;

case CrawlStatus.STATUS_RETRY:

schedule.setPageRetrySchedule(url, page, 0L,

page.getPrevModifiedTime(), page.getFetchTime());

if (page.getRetriesSinceFetch() < retryMax) { /**判断retry的次数*/

page.setStatus((int) CrawlStatus.STATUS_UNFETCHED);

} else {

page.setStatus((int) CrawlStatus.STATUS_GONE);

}

break;

case CrawlStatus.STATUS_GONE:

schedule.setPageGoneSchedule(url, page, 0L, page.getPrevModifiedTime(),

page.getFetchTime());

/**设置如何安排被标记为GONE的页面的重新fetch*/

break;

}

}

if (page.getInlinks() != null) {

page.getInlinks().clear();

}

// Distance calculation.

// Retrieve smallest distance from all inlinks distances

// Calculate new distance for current page: smallest inlink distance plus 1.

// If the new distance is smaller than old one (or if old did not exist

// yet),

// write it to the page.

int smallestDist = Integer.MAX_VALUE;

for (ScoreDatum inlink : inlinkedScoreData) {

int inlinkDist = inlink.getDistance();

if (inlinkDist < smallestDist) {

smallestDist = inlinkDist;

}

page.getInlinks().put(new Utf8(inlink.getUrl()),

new Utf8(inlink.getAnchor()));

}

if (smallestDist != Integer.MAX_VALUE) {

int oldDistance = Integer.MAX_VALUE;

CharSequence oldDistUtf8 = page.getMarkers().get(DbUpdaterJob.DISTANCE);

if (oldDistUtf8 != null)

oldDistance = Integer.parseInt(oldDistUtf8.toString());

int newDistance = smallestDist + 1;

if (newDistance < oldDistance) {

page.getMarkers().put(DbUpdaterJob.DISTANCE,

new Utf8(Integer.toString(newDistance)));

}

}

try {

scoringFilters.updateScore(url, page, inlinkedScoreData);

/**根据inlinked pages贡献的分值,为当前页计算出一个新的分值*/

} catch (ScoringFilterException e) {

LOG.warn("Scoring filters failed with exception "

+ StringUtils.stringifyException(e));

}

// clear markers

// But only delete when they exist. This is much faster for the underlying

// store. The markers are on the input anyway.

if (page.getMetadata().get(FetcherJob.REDIRECT_DISCOVERED) != null) {

page.getMetadata().put(FetcherJob.REDIRECT_DISCOVERED, null);

}

/**取消一坨前三步的marker*/

Mark.GENERATE_MARK.removeMarkIfExist(page);

Mark.FETCH_MARK.removeMarkIfExist(page);

Utf8 parse_mark = Mark.PARSE_MARK.checkMark(page);

if (parse_mark != null) {

Mark.UPDATEDB_MARK.putMark(page, parse_mark);

Mark.PARSE_MARK.removeMark(page);

}

context.write(keyUrl, page);

}

}图示流程

Okay~看图:

Webpage A解析出新的outlink urls分别为B、C、D,而Webpage B解析出新的outlink urls分别为A、C、D。其中Webpage A中的B、C和D,Webpage B中的A、C和D是新出现的url所以就在map步中放入ScoreDatum中,而Webpage A和B就以WebPage的方式存储,为了能将这两种数据类型都写入Fileds中,所以使用了NutchWritable。注意在map中就已经实现了开枝散叶的工作。接下来WebPage A和WebPage B中的A就会被partation在一起(肯定的),还有其他的,根据url和分值排序,WebPage A和WebPage B中的A也同样会被group在一起,按顺序进入reduce中,然后reduce再做做自己的工作比如:重新记录一些信息啊,安排一下下次的schedule啊,计算下距离啊,计算下分值啊,清理下markers之类的之后,就将它们写入到比如数据库之后。mission accomplished!

References

……

1390

1390

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?