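Preface

kubeadm is the official Kubernetes tool for quickly bootstrapping a Kubernetes cluster. This guide uses kubeadm to deploy a k8s cluster consisting of 1 master node and 2 worker nodes.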

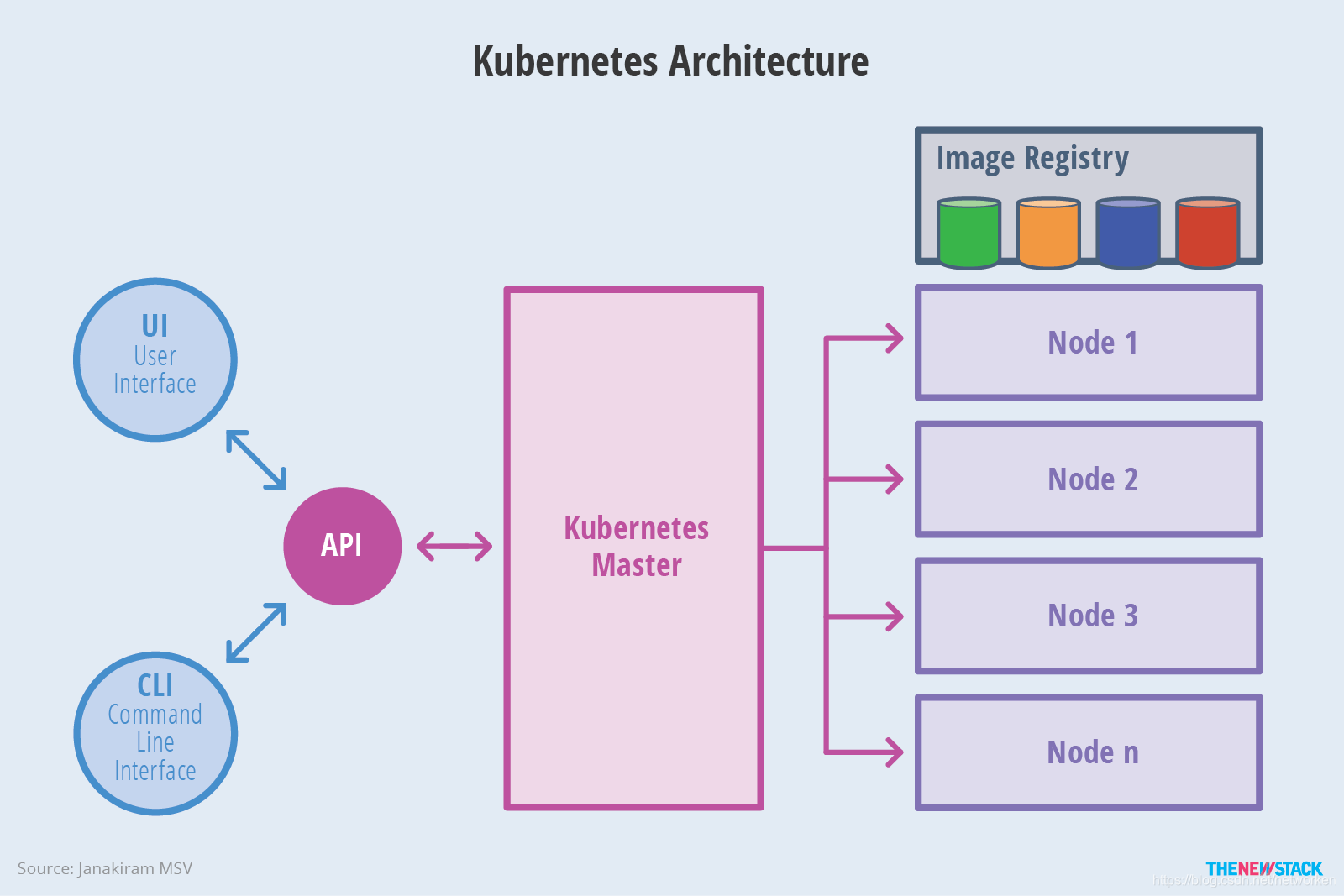

Kubernetes节点架构图:

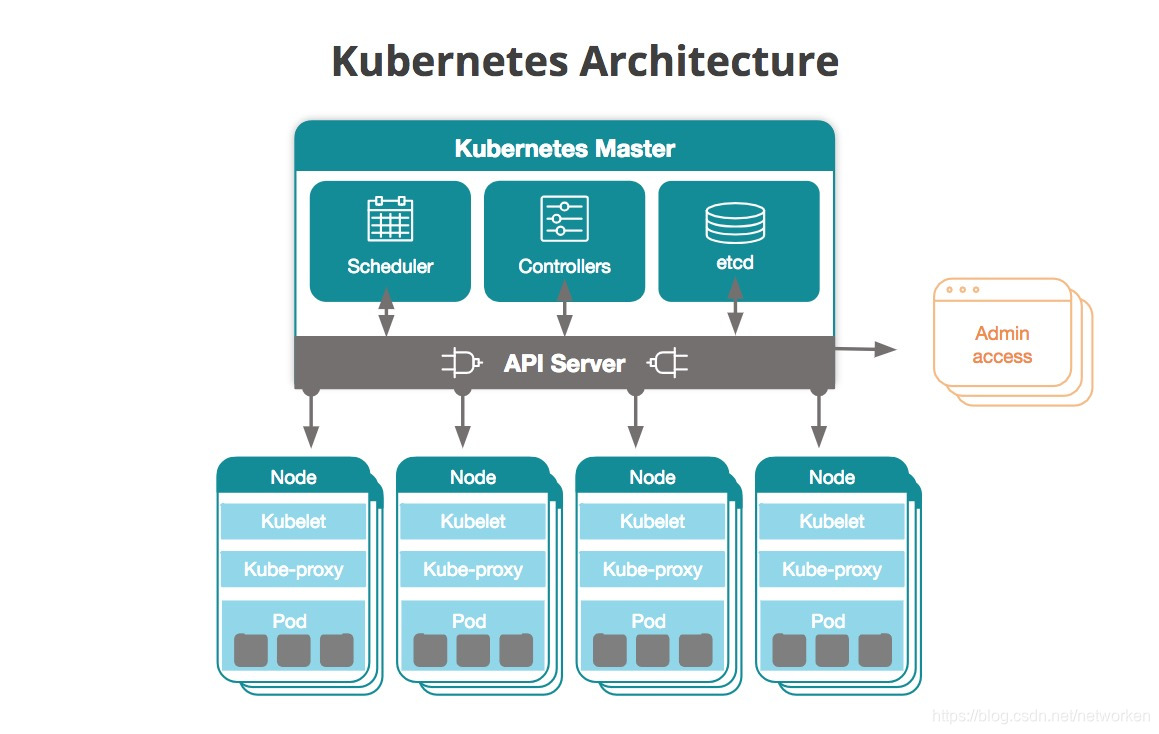

kubernetes组件架构图:

1.准备基础环境

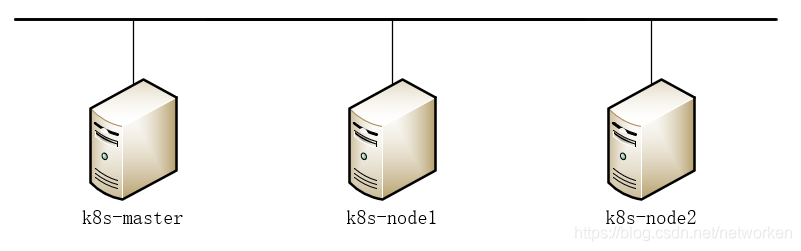

使用kubeadm部署3个节点的 Kubernetes Cluster,整体结构图:

节点详细信息:

| Hostname | IP address | Role | OS | Components | Spec |
|---|---|---|---|---|---|
| k8smaster | 172.16.30.31 | master | CentOS 7.7.1908 | kube-apiserver, kube-controller-manager, kube-scheduler, kube-proxy, etcd, coredns, calico | 2 CPU / 2 GB RAM |
| k8snode01 | 172.16.30.32 | node | CentOS 7.7.1908 | kube-proxy, calico | 2 CPU / 2 GB RAM |
| k8snode02 | 172.16.30.33 | node | CentOS 7.7.1908 | kube-proxy, calico | 2 CPU / 2 GB RAM |

Unless otherwise noted, run the following steps on all nodes:

# Set the hostname (run only the matching line on each node)
hostnamectl set-hostname k8smaster
hostnamectl set-hostname k8snode01
hostnamectl set-hostname k8snode02

# Configure hosts resolution
cat >> /etc/hosts << EOF
172.16.30.31 k8smaster
172.16.30.32 k8snode01
172.16.30.33 k8snode02
EOF
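The `cat >> /etc/hosts` step above appends duplicate lines if it is run twice. A minimal idempotent alternative, assuming bash and awk; `add_host_entry` is a hypothetical helper, and the target file is a parameter so it can be tried on a scratch copy first:

```shell
#!/usr/bin/env bash
# add_host_entry IP NAME [FILE]   (hypothetical helper)
# Appends "IP NAME" to FILE (default /etc/hosts) unless NAME is already mapped.
add_host_entry() {
  local ip=$1 name=$2 file=${3:-/etc/hosts}
  # NAME counts as present only if it is a hostname field on a non-comment line
  if awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) f = 1 }
      END { exit !f }' "$file" 2>/dev/null; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Usage, mirroring the node table above:
# add_host_entry 172.16.30.31 k8smaster
# add_host_entry 172.16.30.32 k8snode01
# add_host_entry 172.16.30.33 k8snode02
```

Running the three usage lines repeatedly leaves exactly one line per node.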

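# Disable the firewall
systemctl disable --now firewalld

# Disable SELinux (permanently in the config file, and immediately with setenforce)
sed -i 's/^SELINUX=enforcing$/SELINUX=disabled/' /etc/selinux/config && setenforce 0

# Disable swap (remove swap entries from /etc/fstab, then turn swap off now)
sed -i '/swap/d' /etc/fstab
swapoff -a

# Make sure time is synchronized
yum install -y chrony
systemctl enable --now chronyd
chronyc sources && timedatectl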

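Load the IPVS modules

Reference: https://github.com/kubernetes/kubernetes/tree/master/pkg/proxy/ipvs

kube-proxy supports two proxy modes, iptables and ipvs. To use ipvs mode, load the required IPVS kernel modules and install the ipset tool before initializing the cluster. Note that on Linux kernel 4.19 and later, nf_conntrack replaces nf_conntrack_ipv4; the module list below targets the 3.10 kernel of CentOS 7.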

cat > /etc/modules-load.d/ipvs.conf <<EOF
# Load IPVS at boot
ip_vs
ip_vs_rr
ip_vs_wrr
ip_vs_sh
nf_conntrack_ipv4
EOF

systemctl enable --now systemd-modules-load.service

# Confirm that the kernel modules are loaded
lsmod | grep -e ip_vs -e nf_conntrack_ipv4

# Install ipset and ipvsadm
yum install -y ipset ipvsadm
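The kernel 4.19 note above can be turned into a small check. A sketch, assuming bash and GNU `sort -V`; `conntrack_module` and `write_ipvs_conf` are hypothetical helpers that pick the conntrack module from the kernel release and write the same modules-load.d file as above. Vendor kernels with backported changes may not follow the upstream version rule, so `modinfo nf_conntrack_ipv4` is a more direct check on a given machine.

```shell
#!/usr/bin/env bash
# conntrack_module [KERNEL_RELEASE]   (hypothetical helper)
# Prints nf_conntrack for kernels >= 4.19, nf_conntrack_ipv4 otherwise.
conntrack_module() {
  local release=${1:-$(uname -r)}
  local ver=${release%%-*}   # "3.10.0-1062.4.1.el7.x86_64" -> "3.10.0"
  # sort -V lists the smaller version first; if 4.19 comes first, ver >= 4.19
  if [ "$(printf '%s\n' 4.19 "$ver" | sort -V | head -n1)" = "4.19" ]; then
    echo nf_conntrack
  else
    echo nf_conntrack_ipv4
  fi
}

# write_ipvs_conf [FILE] [KERNEL_RELEASE]   (hypothetical helper)
# Writes the IPVS module list with the conntrack module matching the kernel.
write_ipvs_conf() {
  local file=${1:-/etc/modules-load.d/ipvs.conf}
  local release=${2:-$(uname -r)}
  {
    echo "# Load IPVS at boot"
    printf '%s\n' ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh "$(conntrack_module "$release")"
  } > "$file"
}
```

On a node (as root): `write_ipvs_conf && systemctl restart systemd-modules-load.service`, then repeat the `lsmod` check with the module name that was chosen.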

Install Docker

Docker installation reference:

https://kubernetes.io/docs/setup/cri/

Docker versions validated for the current Kubernetes release are listed in the release notes:

https://kubernetes.io/docs/setup/release/notes/

# Install dependency packages
yum install -y yum-utils device-mapper-persistent-data lvm2

# Add the Docker repository (the Aliyun mirror is used here for access from China)
yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# Install docker-ce (this installs the latest available version)
yum install -y docker-ce

# Configure the Docker daemon (systemd cgroup driver, log size limit, overlay2 storage)
mkdir -p /etc/docker
cat > /etc/docker/daemon.json <<EOF
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2",
  "storage-opts": [
    "overlay2.override_kernel_check=true"
  ],
  "registry-mirrors": ["https://uyah70su.mirror.aliyuncs.com"]
}
EOF

# Note: registry-mirrors was added at the end of the file because pulling images from China is slow
mkdir -p /etc/systemd/system/docker.service.d

# Reload systemd, then start Docker and enable it at boot
systemctl daemon-reload && systemctl enable --now docker
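dockerd refuses to start if /etc/docker/daemon.json contains a JSON syntax error, which is easy to introduce when editing it by hand. A minimal pre-flight sketch, assuming a Python interpreter is available for its `json.tool` module; `validate_json` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# validate_json FILE   (hypothetical helper)
# Returns 0 if FILE parses as JSON. Tries python3 first, then python
# (CentOS 7 ships python 2.7, which also has json.tool).
validate_json() {
  local file=$1 py
  for py in python3 python; do
    if command -v "$py" >/dev/null 2>&1; then
      "$py" -m json.tool "$file" >/dev/null
      return   # propagate json.tool's exit status
    fi
  done
  echo "validate_json: no python interpreter found" >&2
  return 2
}

# Usage: validate before (re)starting Docker, then confirm the cgroup driver
# validate_json /etc/docker/daemon.json && systemctl restart docker
# docker info --format '{{.CgroupDriver}}'   # should print: systemd
```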

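Install kubeadm, kubelet and kubectl

Official documentation: https://kubernetes.io/docs/setup/independent/install-kubeadm/

Add the Kubernetes repository

The official repository is not reachable from mainland China, so the Aliyun yum mirror is used instead: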

cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

Alternatively, use the Huawei Cloud yum mirror:

cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://repo.huaweicloud.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://repo.huaweicloud.com/kubernetes/yum/doc/yum-key.gpg https://repo.huaweicloud.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

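Install kubelet, kubeadm and kubectl

yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

# Or install a specific version
yum install -y kubeadm-1.18.1 kubelet-1.18.1 kubectl-1.18.1

Enable and start the kubelet service. Until kubeadm init or kubeadm join provides its configuration, kubelet restarts every few seconds; this is expected.

systemctl enable --now kubelet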

Configure kernel parameters

cat <<EOF > /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF

sysctl --system
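The `net.bridge.*` keys above exist only while the `br_netfilter` kernel module is loaded; otherwise `sysctl --system` cannot apply them. The Kubernetes container-runtime prerequisites also enable `net.ipv4.ip_forward`. A sketch, assuming bash; `configure_k8s_kernel` and its ROOT argument are hypothetical, and the argument exists only so the files can be written into a scratch directory without touching the host. With the default ROOT it overwrites /etc/sysctl.d/k8s.conf from the previous step with a superset of the same keys.

```shell
#!/usr/bin/env bash
# configure_k8s_kernel [ROOT]   (hypothetical helper)
# Writes the module-load and sysctl files; only applies them when ROOT is "/".
configure_k8s_kernel() {
  local root=${1:-/}
  local base=${root%/}   # "/" -> "", "/tmp/x/" -> "/tmp/x"
  mkdir -p "$base/etc/modules-load.d" "$base/etc/sysctl.d"
  # Load br_netfilter at boot so the net.bridge.* keys exist
  echo br_netfilter > "$base/etc/modules-load.d/k8s.conf"
  cat > "$base/etc/sysctl.d/k8s.conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
  if [ "$root" = "/" ]; then
    modprobe br_netfilter   # load it now as well
    sysctl --system
  fi
}

# Usage on each node (as root):
# configure_k8s_kernel
# sysctl net.bridge.bridge-nf-call-iptables net.ipv4.ip_forward
```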

2. Deploy the master node

Official reference: https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/create-cluster-kubeadm/

Run the following command on the master node to initialize it:

kubeadm init \
  --apiserver-advertise-address=172.16.30.31 \
  --image-repository=registry.aliyuncs.com/google_containers \
  --pod-network-cidr=192.168.0.0/16

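Notes on the init options:

- --apiserver-advertise-address (optional): by default, kubeadm advertises the master's IP address on the network interface associated with the default gateway. To use a different interface, pass --apiserver-advertise-address=<ip-address> to kubeadm init.
- --pod-network-cidr: choose a Pod network add-on first and check whether it needs any arguments passed to kubeadm init. Depending on the add-on, you may need to set --pod-network-cidr to the CIDR used by its network driver. Kubernetes supports many networking solutions, each with its own requirement for --pod-network-cidr: flannel uses 10.244.0.0/16 and calico uses 192.168.0.0/16.
- --image-repository: the default Kubernetes registry, k8s.gcr.io, is not reachable from mainland China. Since version 1.13, the --image-repository flag can point kubeadm at a reachable registry; registry.aliyuncs.com/google_containers is used here.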
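Whatever value is chosen for --pod-network-cidr, it should not overlap the node network or the Service CIDR (kubeadm's default --service-cidr is 10.96.0.0/12, which is why the apiserver certificate in the output below includes 10.96.0.1). A small IPv4-only check in bash; the helper names are hypothetical, and the node subnet 172.16.30.0/24 in the example is an assumption, since the netmask is not stated in this guide.

```shell
#!/usr/bin/env bash
# IPv4-only CIDR helpers (hypothetical). Bash arithmetic is 64-bit,
# so 32-bit address math does not overflow.

# ip_to_int A.B.C.D -> unsigned integer
ip_to_int() {
  local IFS=.
  set -- $1   # split the address on dots into $1..$4
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# cidr_range A.B.C.D/LEN -> "FIRST LAST" as integers
cidr_range() {
  local ip=${1%/*} len=${1#*/}
  local size=$(( 1 << (32 - len) ))
  local first=$(( $(ip_to_int "$ip") & ~(size - 1) ))   # clear host bits
  echo "$first $(( first + size - 1 ))"
}

# cidr_overlap CIDR1 CIDR2 -> exit status 0 if the ranges share any address
cidr_overlap() {
  local a1 a2 b1 b2
  read -r a1 a2 <<< "$(cidr_range "$1")"
  read -r b1 b2 <<< "$(cidr_range "$2")"
  [ "$a1" -le "$b2" ] && [ "$b1" -le "$a2" ]
}
```

For example, `cidr_overlap 192.168.0.0/16 172.16.30.0/24` and `cidr_overlap 192.168.0.0/16 10.96.0.0/12` both return non-zero (no conflict), while `cidr_overlap 192.168.0.0/16 192.168.1.0/24` returns 0.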

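kubeadm init first runs a series of pre-flight checks to make sure the machine meets the requirements for running Kubernetes. These checks print warnings, and abort the initialization if they find errors. kubeadm init then downloads and installs the cluster's control-plane components, which can take a few minutes. The output looks like this: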

[root@k8smaster ~]# kubeadm init \

> --apiserver-advertise-address=172.16.30.31 \

> --image-repository registry.aliyuncs.com/google_containers \

> --pod-network-cidr=192.168.0.0/16

W1205 03:44:00.899847 4956 version.go:101] could not fetch a Kubernetes version from the internet: unable to get URL "https://dl.k8s.io/release/stable-1.txt": Get https://dl.k8s.io/release/stable-1.txt: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

W1205 03:44:00.899918 4956 version.go:102] falling back to the local client version: v1.16.3

[init] Using Kubernetes version: v1.16.3

[preflight] Running pre-flight checks

[WARNING SystemVerification]: this Docker version is not on the list of validated versions: 19.03.5. Latest validated version: 18.09

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Activating the kubelet service

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8smaster kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 172.16.30.31]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8smaster localhost] and IPs [172.16.30.31 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8smaster localhost] and IPs [172.16.30.31 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 20.506862 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.16" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node k8smaster as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node k8smaster as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: 3ug4r5.lsneyn354n01mzbk

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 172.16.30.31:6443 --token 3ug4r5.lsneyn354n01mzbk \

--discovery-token-ca-cert-hash sha256:1d6e7e49732eb504fbba2fdf171648af9651587b59c6416ea5488dc127ac2d64

(Record the kubeadm join command printed at the end of the init output; it is needed when adding the worker nodes.)
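If the init output is saved to a file, the join command can be recovered from it later. A sketch, assuming the output was captured with `tee` (the log file name is hypothetical); the hypothetical `extract_join_cmd` prints the first `kubeadm join` line together with its backslash-continued lines:

```shell
#!/usr/bin/env bash
# extract_join_cmd LOGFILE   (hypothetical helper)
# Prints the first "kubeadm join" command in LOGFILE, following lines that end in "\".
extract_join_cmd() {
  awk '
    /^[[:space:]]*kubeadm join/ { found = 1 }   # start of the join command
    found { print }
    found && !/\\[[:space:]]*$/ { exit }        # stop at the first line without a trailing backslash
  ' "$1"
}

# Usage (assumption: init output saved with tee):
# kubeadm init --apiserver-advertise-address=172.16.30.31 ... 2>&1 | tee kubeadm-init.log
# extract_join_cmd kubeadm-init.log > join.sh
```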

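Configure kubectl

kubectl is the command-line tool for managing a Kubernetes cluster. After the master is initialized, some configuration is needed before kubectl can be used. Following the commands given in the init output, run: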

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

chown $(id -u):$(id -g) $HOME/.kube/config

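3. Deploy the network add-on

Reference: https://github.com/containernetworking/cni

A Pod network add-on must be installed so that Pods can communicate with each other. Deploy the network before any applications; CoreDNS will not start until a network add-on is installed.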

Install the calico network add-on:

Official documentation:

https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/create-cluster-kubeadm/#pod-network

https://docs.projectcalico.org/v3.10/getting-started/kubernetes/

For calico to work correctly, either pass --pod-network-cidr=192.168.0.0/16 to kubeadm init, or edit the calico.yaml manifest to match your Pod network.

kubectl apply -f https://docs.projectcalico.org/v3.10/manifests/calico.yaml

If you install the flannel network add-on instead, kubeadm init must be run with --pod-network-cidr=10.244.0.0/16.

Verify the network add-on

After the Pod network is installed, confirm that CoreDNS and all other Pods are running and that the master node's status is Ready:

[root@k8smaster ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8smaster Ready master 5m24s v1.16.3

[root@k8smaster ~]# kubectl -n kube-system get pods

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-6b64bcd855-95pbb 1/1 Running 0 106s

calico-node-l7988 1/1 Running 0 106s

coredns-58cc8c89f4-rhqft 1/1 Running 0 5m10s

coredns-58cc8c89f4-tpbqc 1/1 Running 0 5m10s

etcd-k8smaster 1/1 Running 0 4m7s

kube-apiserver-k8smaster 1/1 Running 0 4m17s

kube-controller-manager-k8smaster 1/1 Running 0 4m25s

kube-proxy-744dr 1/1 Running 0 5m10s

kube-scheduler-k8smaster 1/1 Running 0 4m21s

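At this point the Kubernetes master node is deployed. If you only need a single-node Kubernetes, it is ready to use; to run workloads on the master, remove its taint as described in section 6.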

4. Deploy the worker nodes

On k8snode01 and k8snode02, run the join command from the init output to register each node with the cluster:

# Run the following to join the node to the cluster
kubeadm join 172.16.30.31:6443 --token 3ug4r5.lsneyn354n01mzbk \
    --discovery-token-ca-cert-hash sha256:1d6e7e49732eb504fbba2fdf171648af9651587b59c6416ea5488dc127ac2d64

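# If the join command was not recorded during kubeadm init (or the token has expired;
# bootstrap tokens are valid for 24 hours by default), generate a new one on the master:
kubeadm token create --print-join-command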
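The `--discovery-token-ca-cert-hash` value is the SHA-256 digest of the cluster CA's public key. The Kubernetes documentation derives it with the openssl pipeline below, wrapped here in a hypothetical `ca_cert_hash` function; it assumes the default kubeadm CA path and an RSA CA key. Combined with a token from `kubeadm token list`, this lets you rebuild the join command by hand.

```shell
#!/usr/bin/env bash
# ca_cert_hash [CA_CERT]   (hypothetical wrapper around the documented openssl pipeline)
# Prints the hex digest used after "sha256:" in --discovery-token-ca-cert-hash.
ca_cert_hash() {
  local cert=${1:-/etc/kubernetes/pki/ca.crt}
  openssl x509 -pubkey -in "$cert" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'   # keep only the hex digest after the last space
}

# Usage on the master:
# echo "sha256:$(ca_cert_hash)"
# kubeadm token list
```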

Then check the node status with kubectl get nodes:

[root@k8smaster ~]# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

k8smaster Ready master 40m v1.16.3 172.16.30.31 <none> CentOS Linux 7 (Core) 3.10.0-1062.4.1.el7.x86_64 docker://19.3.5

k8snode01 Ready <none> 29m v1.16.3 172.16.30.32 <none> CentOS Linux 7 (Core) 3.10.0-1062.4.1.el7.x86_64 docker://19.3.5

k8snode02 Ready <none> 29m v1.16.3 172.16.30.33 <none> CentOS Linux 7 (Core) 3.10.0-1062.4.1.el7.x86_64 docker://19.3.5

Also confirm that all Pods are in the Running state:

[root@k8smaster ~]# kubectl -n kube-system get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

calico-kube-controllers-6b64bcd855-95pbb 1/1 Running 0 37m 192.168.16.130 k8smaster <none> <none>

calico-node-l7988 1/1 Running 0 37m 172.16.30.31 k8smaster <none> <none>

calico-node-qqmzh 1/1 Running 0 30m 172.16.30.32 k8snode01 <none> <none>

calico-node-tjfh5 1/1 Running 0 30m 172.16.30.33 k8snode02 <none> <none>

coredns-58cc8c89f4-rhqft 1/1 Running 0 40m 192.168.16.131 k8smaster <none> <none>

coredns-58cc8c89f4-tpbqc 1/1 Running 0 40m 192.168.16.129 k8smaster <none> <none>

etcd-k8smaster 1/1 Running 0 39m 172.16.30.31 k8smaster <none> <none>

kube-apiserver-k8smaster 1/1 Running 0 39m 172.16.30.31 k8smaster <none> <none>

kube-controller-manager-k8smaster 1/1 Running 0 39m 172.16.30.31 k8smaster <none> <none>

kube-proxy-744dr 1/1 Running 0 40m 172.16.30.31 k8smaster <none> <none>

kube-proxy-85gcq 1/1 Running 0 30m 172.16.30.33 k8snode02 <none> <none>

kube-proxy-kvdjc 1/1 Running 0 30m 172.16.30.32 k8snode01 <none> <none>

kube-scheduler-k8smaster 1/1 Running 0 39m 172.16.30.31 k8smaster <none> <none>
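Checking the READY and STATUS columns by eye gets tedious as the cluster grows. A sketch that filters `kubectl get pods` output with awk; `not_ready_pods` is a hypothetical helper that assumes the column layout shown above (no NAMESPACE column, so not for `-A` output), and it will also list Completed Job pods:

```shell
#!/usr/bin/env bash
# not_ready_pods   (hypothetical helper)
# Reads `kubectl get pods` output (with or without -o wide) on stdin and prints
# the pods that are not Running or whose READY count is incomplete (e.g. 0/1).
not_ready_pods() {
  awk 'NR > 1 {                      # skip the header line
    split($2, r, "/")                # READY column, e.g. "1/1"
    if ($3 != "Running" || r[1] != r[2]) print $1
  }'
}

# Usage on the master:
# kubectl -n kube-system get pods | not_ready_pods
# Built-in alternative that blocks until all Pods are Ready:
# kubectl -n kube-system wait --for=condition=Ready pods --all --timeout=300s
```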

5. Enable ipvs mode in kube-proxy

Edit the kube-proxy ConfigMap: in config.conf, find the mode parameter, change it to mode: "ipvs" and save:

kubectl -n kube-system get cm kube-proxy -o yaml | sed 's/mode: ""/mode: "ipvs"/g' | kubectl replace -f -

# Or edit it manually
kubectl -n kube-system edit cm kube-proxy

# Verify the change
kubectl -n kube-system get cm kube-proxy -o yaml | grep mode
mode: "ipvs"

# Restart the kube-proxy Pods (the DaemonSet recreates them with the new configuration)
kubectl -n kube-system delete pods -l k8s-app=kube-proxy

# Confirm that ipvs mode is enabled

[root@kmaster ~]# kubectl -n kube-system logs -f -l k8s-app=kube-proxy | grep ipvs

I1026 04:11:46.474911 1 server_others.go:176] Using ipvs Proxier.

I1026 04:11:42.842141 1 server_others.go:176] Using ipvs Proxier.

I1026 04:11:46.198116 1 server_others.go:176] Using ipvs Proxier.

The log shows "Using ipvs Proxier", which means ipvs mode is enabled.
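The sed command in step 5 only matches an empty `mode: ""`. A hypothetical variant that rewrites the `mode:` line whatever its current value, assuming config.conf contains a single `mode:` key (keys such as `detectLocalMode` are left alone because the pattern requires `mode:` directly after the indentation):

```shell
#!/usr/bin/env bash
# set_proxy_mode MODE   (hypothetical helper)
# Reads the kube-proxy ConfigMap YAML on stdin and sets the "mode:" line to MODE,
# keeping the original indentation.
set_proxy_mode() {
  local mode=$1
  sed -E "s/^([[:space:]]*)mode:.*\$/\1mode: \"${mode}\"/"
}

# Usage on the master:
# kubectl -n kube-system get cm kube-proxy -o yaml | set_proxy_mode ipvs | kubectl replace -f -
# kubectl -n kube-system delete pods -l k8s-app=kube-proxy
```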

6. Scheduling Pods on the master node

By default, for security reasons, the cluster does not schedule Pods on the master node. For a single-machine development environment where you want user Pods to run on the master, run the following:

# The master node is tainted by default
[root@master ~]# kubectl describe nodes | grep Taints
Taints: node-role.kubernetes.io/master:NoSchedule

# Remove the taint
[root@master ~]# kubectl taint nodes --all node-role.kubernetes.io/master-
node/master untainted

# Check again: the Taints field is now <none>
[root@master ~]# kubectl describe nodes | grep Taints
Taints: <none>

# To restore the master-only state, run:
kubectl taint node k8smaster node-role.kubernetes.io/master=:NoSchedule

7. Test the cluster with an application

Deploy an Nginx Deployment with 3 Pod replicas.

Reference:

https://kubernetes.io/docs/concepts/workloads/controllers/deployment/#creating-a-deployment

cat > nginx.yaml <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.7.9
        ports:
        - containerPort: 80
EOF

Apply the manifest and create a NodePort Service for the Deployment:

kubectl apply -f nginx.yaml
kubectl expose deployment nginx-deployment --type=NodePort --name=nginx-service

Check the Pod status, then access the nginx service from outside the cluster through its NodePort:

[root@k8smaster ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-deployment-54f57cf6bf-8lsfc 1/1 Running 0 70s

nginx-deployment-54f57cf6bf-gjbk8 1/1 Running 0 70s

nginx-deployment-54f57cf6bf-mwbzj 1/1 Running 0 70s

[root@k8smaster ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 112m

nginx-service NodePort 10.98.221.175 <none> 80:31190/TCP 67s

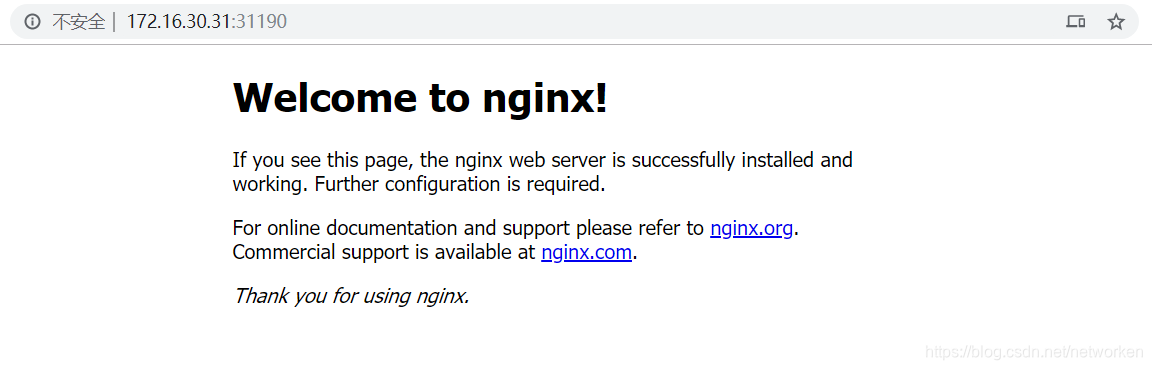

可以通过任意 NodeIP:Port 在集群外部访问这个服务:

[centos@k8s-master ~]$ curl 172.16.30.31:31190

[centos@k8s-master ~]$ curl 172.16.30.32:31190

[centos@k8s-master ~]$ curl 172.16.30.33:31190

集群外访问:
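Instead of curling each node by hand, the assigned NodePort can be looked up with a jsonpath query and probed on every node. A sketch, assuming curl is installed; `check_nodeport` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# check_nodeport PORT IP...   (hypothetical helper)
# Requests http://IP:PORT/ on each node, prints the HTTP status code,
# and returns non-zero if any node does not answer with 200.
check_nodeport() {
  local port=$1 ip code rc=0
  shift
  for ip in "$@"; do
    # -m 5: give up after 5 seconds; curl writes 000 when no HTTP response arrives
    code=$(curl -s -o /dev/null -m 5 -w '%{http_code}' "http://${ip}:${port}/" || true)
    echo "${ip}:${port} -> ${code}"
    [ "$code" = 200 ] || rc=1
  done
  return "$rc"
}

# Usage on the master:
# port=$(kubectl get svc nginx-service -o jsonpath='{.spec.ports[0].nodePort}')
# check_nodeport "$port" 172.16.30.31 172.16.30.32 172.16.30.33
```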

8. Cluster troubleshooting

If Pods or nodes in the cluster end up in an abnormal state, check the logs to find the cause.

# View Pod logs
kubectl -n kube-system logs -f <pod name>

# View Pod status and events
kubectl -n kube-system describe pods <pod name>

If a node is NotReady, analyze the node-related logs:

kubectl describe nodes <node name>
systemctl status docker
systemctl status kubelet
journalctl -xeu docker
journalctl -xeu kubelet
tail -f /var/log/messages
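When asking for help with a NotReady node, it is convenient to capture the outputs of the commands above in one place. A sketch; `collect_node_diag` and the file names are hypothetical, and `journalctl -n 500 --no-pager` (last 500 lines) is used instead of `-xe` because the output goes to files. `kubectl describe nodes` only works where a kubeconfig is configured (the master in this guide); elsewhere its file just records the error.

```shell
#!/usr/bin/env bash
# collect_node_diag [OUTDIR]   (hypothetical helper)
# Saves each troubleshooting command's output to OUTDIR/<name>.log
# (default OUTDIR: ./k8s-diag-<hostname>). Missing or failing commands
# still leave a file containing their error output.
collect_node_diag() {
  local out=${1:-./k8s-diag-$(hostname)}
  mkdir -p "$out"
  local -a names=(docker-status kubelet-status docker-journal kubelet-journal nodes)
  local -a cmds=(
    "systemctl status docker --no-pager"
    "systemctl status kubelet --no-pager"
    "journalctl -u docker --no-pager -n 500"
    "journalctl -u kubelet --no-pager -n 500"
    "kubectl describe nodes"
  )
  local i
  for i in "${!names[@]}"; do
    bash -c "${cmds[$i]}" > "$out/${names[$i]}.log" 2>&1 || true
  done
  echo "$out"   # print where the files were written
}

# Usage on the affected node:
# collect_node_diag && tar czf k8s-diag.tgz k8s-diag-*
```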

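9. Removing nodes and the cluster

Removing a node from the Kubernetes cluster

Taking k8snode02 as an example, run the following on the master node:

kubectl drain k8snode02 --delete-local-data --force --ignore-daemonsets
kubectl delete node k8snode02

After these two commands finish, run the cleanup command on k8snode02 to reset the state installed by kubeadm:

kubeadm reset -f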
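kubeadm reset does not flush iptables rules or IPVS tables, and it leaves $HOME/.kube/config in place; depending on the kubeadm version, the CNI configuration in /etc/cni/net.d may also remain. A sketch of the extra cleanup on the removed node, with a hypothetical DRY_RUN switch that prints the steps instead of running them. Flushing iptables is only safe if the default chain policies are ACCEPT; otherwise it can cut off SSH access.

```shell
#!/usr/bin/env bash
# cleanup_after_reset   (hypothetical helper)
# Extra cleanup after `kubeadm reset -f`. Destructive: flushes all iptables
# rules and IPVS tables on this host. Set DRY_RUN=1 to only print the steps.
cleanup_after_reset() {
  local -a steps=(
    "iptables -F"
    "iptables -t nat -F"
    "iptables -t mangle -F"
    "iptables -X"
    "ipvsadm --clear"
    "rm -rf /etc/cni/net.d"
    'rm -rf "$HOME/.kube"'   # $HOME is expanded by the child shell, not here
  )
  local s
  for s in "${steps[@]}"; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "$s"
    else
      bash -c "$s" || echo "warning: '$s' failed" >&2
    fi
  done
}

# Usage on k8snode02 (as root): preview first, then run
# DRY_RUN=1 cleanup_after_reset
# cleanup_after_reset
```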
