Introduction to the High-Availability Cluster

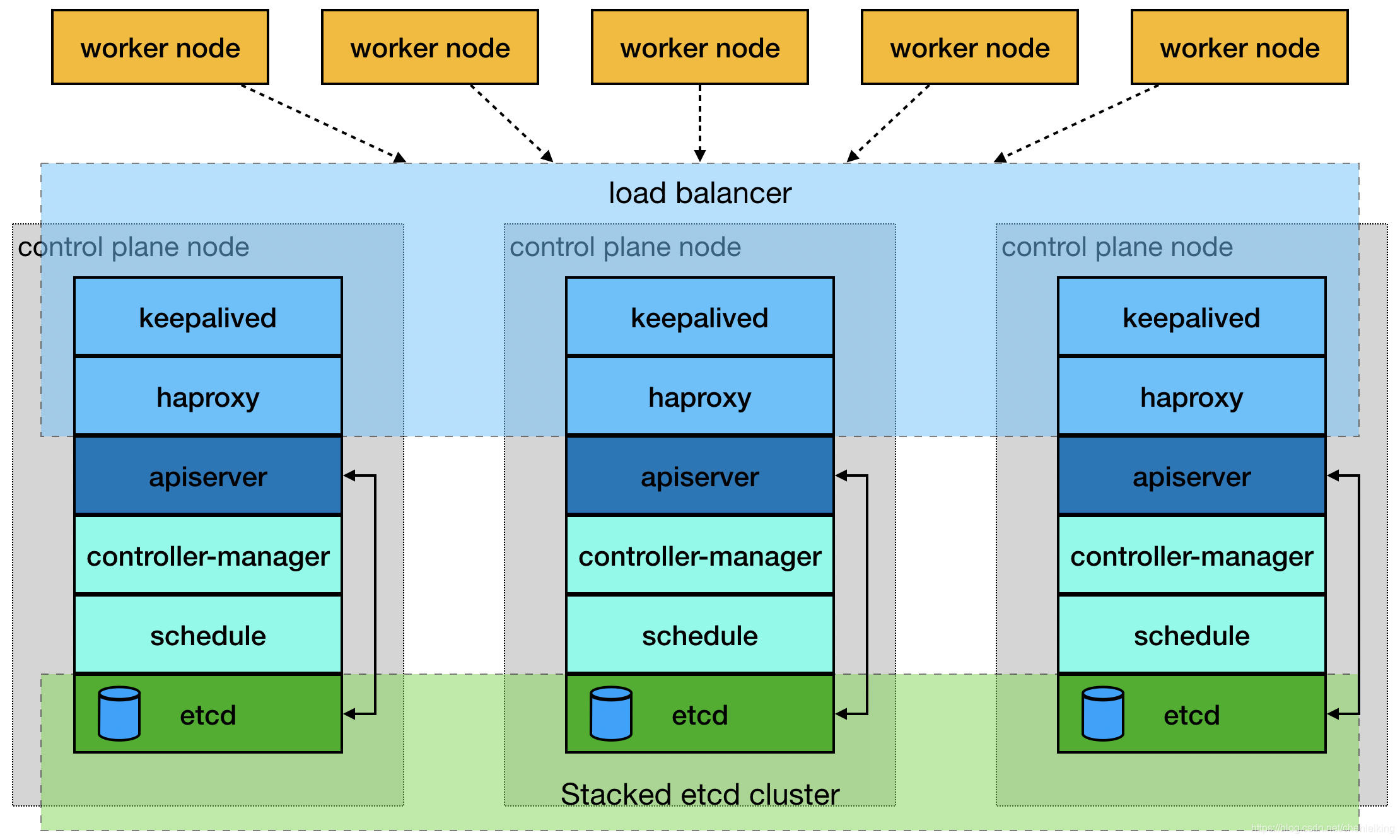

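A highly available (HA) Kubernetes cluster has two parts: etcd HA and master (control-plane) HA. etcd HA is achieved by running an etcd cluster; an odd number of members is recommended, because adding one member to reach an even count does not increase the number of failures the cluster can tolerate. Master HA means running several Kubernetes master nodes, so that if any one master fails, clients are automatically served by the remaining ones. This can be implemented with haproxy + keepalived: haproxy load-balances across the API servers, and keepalived provides a VIP (virtual IP address) as the single highly available entry point.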
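The odd-member recommendation follows from etcd's majority quorum. A minimal sketch of the arithmetic (etcd_quorum and etcd_fault_tolerance are hypothetical helper names for illustration, not part of etcd):

```shell
# Hypothetical helper: majority quorum for an etcd cluster of n members.
etcd_quorum() {
  local n=$1
  echo "$(( n / 2 + 1 ))"
}

# Hypothetical helper: how many members can fail while quorum is kept.
etcd_fault_tolerance() {
  local n=$1
  echo "$(( n - $(etcd_quorum "$n") ))"
}

# Print a small table for 1 to 5 members.
for n in 1 2 3 4 5; do
  printf 'members=%d quorum=%d tolerates=%d\n' \
    "$n" "$(etcd_quorum "$n")" "$(etcd_fault_tolerance "$n")"
done
```

With 4 members the cluster still tolerates only 1 failure, the same as with 3, which is why an even member count buys no extra availability.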

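For load balancing behind a virtual IP, the combination of keepalived and haproxy is a well-established approach:

- keepalived provides a virtual IP managed by a configurable health check. Because of the way the virtual IP is implemented, all hosts that negotiate the virtual IP must be in the same IP subnet.

- haproxy can be configured for simple stream-based (TCP) load balancing, which lets the API server instances behind it handle TLS termination.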
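Since keepalived requires every VIP holder to be in one subnet, a quick pre-check can save debugging later. A minimal sketch, assuming IPv4 addresses written without leading zeros; ip_to_int and same_subnet are hypothetical helpers, not part of keepalived, and the /24 prefix is taken from the ip a output shown later in this article:

```shell
# Hypothetical helper: dotted-quad IPv4 address -> 32-bit integer.
ip_to_int() {
  local IFS=.
  local a b c d
  read -r a b c d <<< "$1"
  echo "$(( (a << 24) | (b << 16) | (c << 8) | d ))"
}

# Hypothetical helper: same_subnet IP1 IP2 PREFIX_LEN
# Exit status 0 if both addresses fall in the same network for that prefix.
same_subnet() {
  local prefix=$3
  local mask=$(( prefix == 0 ? 0 : (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$1") & mask) == ($(ip_to_int "$2") & mask) ))
}

# Check the VIP against the three masters of this example.
vip=192.168.72.200
for ip in 192.168.72.30 192.168.72.31 192.168.72.32; do
  if same_subnet "$vip" "$ip" 24; then
    echo "$ip: same /24 as $vip"
  else
    echo "$ip: NOT in the same /24 as $vip"
  fi
done
```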

This combination can run either as services on the operating system or as static Pods on the master nodes. The configuration is the same in both cases.

The basic architecture is shown in the figure above.

Environment

The Kubernetes cluster in this example is installed on the following environment:

- OS: Ubuntu Server 22.04 LTS

- Kubernetes: v1.28.1

- Container Runtime: Containerd

Prerequisites:

- At least 2 CPUs and 2 GB of RAM

- Swap disabled

- iptables allowed to see bridged traffic

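The example cluster has 3 master nodes and 1 worker node. The 3 load-balancer nodes are co-located with the 3 Kubernetes master nodes, and there is one virtual IP address. In this example the virtual IP address is also called a "floating IP address": if a node fails, the address can move to another node, which provides high availability.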

Node inventory:

| Node name | Node IP | Role | CPU | Memory | Disk | OS |
|---|---|---|---|---|---|---|
| master1 | 192.168.72.30 | master | 2C | 4G | 100G | Ubuntu 22.04 LTS |
| master2 | 192.168.72.31 | master | 2C | 4G | 100G | Ubuntu 22.04 LTS |
| master3 | 192.168.72.32 | master | 2C | 4G | 100G | Ubuntu 22.04 LTS |
| node1 | 192.168.72.33 | worker | 2C | 4G | 100G | Ubuntu 22.04 LTS |
| keepalived-vip | 192.168.72.200 | VIP | - | - | - | - |

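Node Initialization

Note: run the following steps on all nodes.

1. Set the hostname (run the matching command on each node)

hostnamectl set-hostname master1   # on master1

hostnamectl set-hostname master2   # on master2

hostnamectl set-hostname master3   # on master3

hostnamectl set-hostname node1     # on node1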

2. Configure name resolution in /etc/hosts

cat >> /etc/hosts << EOF

192.168.72.30 master1

192.168.72.31 master2

192.168.72.32 master3

192.168.72.33 node1

EOF
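Running the cat >> /etc/hosts command twice appends duplicate entries. A minimal idempotent variant (add_host_entry and add_cluster_hosts are hypothetical helpers; the name is used inside a regular expression, which is fine for simple hostnames like these):

```shell
# Hypothetical helper: append "IP NAME" to a hosts-format file only if NAME
# is not already mapped on a non-comment line. Safe to run repeatedly.
add_host_entry() {
  local file=$1 ip=$2 name=$3
  if grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$file"; then
    return 0   # already present, leave the file untouched
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Hypothetical helper: add the four nodes of this example (root needed for /etc/hosts).
add_cluster_hosts() {
  local file=${1:-/etc/hosts}
  add_host_entry "$file" 192.168.72.30 master1
  add_host_entry "$file" 192.168.72.31 master2
  add_host_entry "$file" 192.168.72.32 master3
  add_host_entry "$file" 192.168.72.33 node1
}
```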

3. Disable swap

sed -ri '/\sswap\s/s/^#?/#/' /etc/fstab

mount -a

swapoff -a
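kubelet refuses to start while swap is active (its default failSwapOn behavior), so it is worth confirming that swapoff took effect. A minimal sketch that counts the entries in /proc/swaps (active_swap_count is a hypothetical helper; the file argument exists only so it can be tested on a sample):

```shell
# Hypothetical helper: number of active swap devices listed in a
# /proc/swaps-style file (the header line is skipped).
active_swap_count() {
  local file=${1:-/proc/swaps}
  awk 'NR > 1 && NF > 0 { n++ } END { print n + 0 }' "$file"
}

# Usage:
#   [ "$(active_swap_count)" -eq 0 ] && echo "swap is off"
```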

4. Make sure time is synchronized

apt install -y chrony

systemctl enable --now chrony

chronyc sources
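In chronyc sources output, the second character of each source line is its state, and * marks the source chrony is currently synchronized to. A minimal check built on that (chrony_synced is a hypothetical helper that reads the output on stdin):

```shell
# Hypothetical helper: succeed if `chronyc sources` output on stdin contains a
# currently selected source, i.e. a line starting with ^*, =* or #*.
chrony_synced() {
  grep -Eq '^[=#^]\*'
}

# Usage:
#   chronyc sources | chrony_synced && echo "time is synchronized"
```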

5. Load the IPVS kernel modules

Reference: https://github.com/kubernetes/kubernetes/tree/master/pkg/proxy/ipvs

Note that on Linux kernel 4.19 and later, nf_conntrack replaces nf_conntrack_ipv4.

cat <<EOF | tee /etc/modules-load.d/ipvs.conf

# Load IPVS at boot

ip_vs

ip_vs_rr

ip_vs_wrr

ip_vs_sh

nf_conntrack

EOF

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack

# Confirm that the kernel modules are loaded

lsmod | grep -e ip_vs -e nf_conntrack

# Install ipset and ipvsadm

apt install -y ipset ipvsadm
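lsmod prints one module per line with the name in the first column, so the lsmod check above can be made explicit by listing the required modules that are missing. A minimal sketch (missing_modules is a hypothetical helper that reads lsmod output on stdin):

```shell
# Hypothetical helper: print every module named in the arguments that does
# not appear in the first column of lsmod-style input on stdin.
missing_modules() {
  local loaded mod
  loaded=$(awk 'NR > 1 { print $1 }')
  for mod in "$@"; do
    grep -qx -- "$mod" <<< "$loaded" || echo "$mod"
  done
}

# Usage (prints nothing when all five modules are loaded):
#   lsmod | missing_modules ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack
```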

Install containerd

Note: run the following steps on all nodes.

1. Prerequisites for the containerd container runtime

cat <<EOF | sudo tee /etc/modules-load.d/containerd.conf

overlay

br_netfilter

EOF

sudo modprobe overlay

sudo modprobe br_netfilter

# Set the required sysctl parameters; they persist across reboots.

cat <<EOF | sudo tee /etc/sysctl.d/99-kubernetes-cri.conf

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

EOF

# Apply the sysctl parameters without rebooting

sudo sysctl --system
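Each sysctl key maps to a file under /proc/sys (dots become slashes), so the three values set above can be verified directly. A minimal sketch; sysctl_value and check_sysctls_enabled are hypothetical helpers, and the root directory is a parameter only so they can be tested against a scratch directory. Note that the net.bridge keys exist only after br_netfilter is loaded:

```shell
# Hypothetical helper: read a sysctl key through /proc/sys (or a test root).
sysctl_value() {
  local key=$1 root=${2:-/proc/sys}
  cat "$root/${key//.//}"
}

# Hypothetical helper: check_sysctls_enabled ROOT KEY...
# Succeeds only if every key equals 1; reports offending keys on stderr.
check_sysctls_enabled() {
  local root=$1
  shift
  local key val rc=0
  for key in "$@"; do
    val=$(sysctl_value "$key" "$root" 2>/dev/null) || val=""
    if [ "$val" != "1" ]; then
      echo "$key=${val:-<missing>}" >&2
      rc=1
    fi
  done
  return "$rc"
}

# Usage:
#   check_sysctls_enabled /proc/sys net.bridge.bridge-nf-call-iptables \
#     net.bridge.bridge-nf-call-ip6tables net.ipv4.ip_forward && echo OK
```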

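2. Install the containerd container runtime. This guide uses the nerdctl-full bundle, which ships containerd together with runc, the CNI plugins and nerdctl. If the network is slow, download the archive in a browser first and then upload it to the server.

Download page: https://github.com/containerd/nerdctl/releases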

wget https://github.com/containerd/nerdctl/releases/download/v1.5.0/nerdctl-full-1.5.0-linux-amd64.tar.gz

tar Cxzvvf /usr/local nerdctl-full-1.5.0-linux-amd64.tar.gz

3. Create the containerd configuration file

sudo mkdir -p /etc/containerd

containerd config default | sudo tee /etc/containerd/config.toml

4. Configure containerd to use the systemd cgroup driver

sed -i "s#SystemdCgroup = false#SystemdCgroup = true#g" /etc/containerd/config.toml

5. Change the sandbox (pause) image. After installing kubeadm, you can confirm the default pause image tag with the kubeadm config images list command.

old_image='sandbox_image = "registry.k8s.io/pause:.*"'

new_image='sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.9"'

sed -i "s|$old_image|$new_image|" /etc/containerd/config.toml
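Both sed edits fail silently if their pattern does not match (for example, if a future containerd release changes the default config). A minimal sketch that applies the same two edits and then verifies them; patch_containerd_config is a hypothetical helper, it assumes GNU sed, and unlike the original command it replaces whatever sandbox_image is currently set so it can be re-run:

```shell
# Hypothetical helper: patch_containerd_config CONFIG_TOML PAUSE_IMAGE
# Enables the systemd cgroup driver, sets sandbox_image, and returns non-zero
# if either change is not present afterwards.
patch_containerd_config() {
  local file=$1 image=$2
  sed -i 's#SystemdCgroup = false#SystemdCgroup = true#g' "$file"
  sed -i "s|sandbox_image = \".*\"|sandbox_image = \"${image}\"|" "$file"
  grep -q 'SystemdCgroup = true' "$file" &&
    grep -qF "sandbox_image = \"${image}\"" "$file"
}

# Usage:
#   patch_containerd_config /etc/containerd/config.toml \
#     registry.aliyuncs.com/google_containers/pause:3.9 || echo "config.toml not patched"
```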

6. Start the containerd service

systemctl enable --now containerd

7. Check that containerd is running

systemctl status containerd

Install kubeadm

Note: run the following steps on all nodes.

1. Add the Kubernetes apt repository, using the Aliyun mirror:

apt update -y

apt-get install -y apt-transport-https

curl https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add -

cat <<EOF >/etc/apt/sources.list.d/kubernetes.list

deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main

EOF

2. List the installable versions

apt-get update

apt-cache madison kubectl | more

3. Install specific versions of kubeadm, kubelet and kubectl

export KUBERNETES_VERSION=1.28.1-00

apt update -y

apt-get install -y kubelet=${KUBERNETES_VERSION} kubeadm=${KUBERNETES_VERSION} kubectl=${KUBERNETES_VERSION}

apt-mark hold kubelet kubeadm kubectl
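The apt package version used here (1.28.1-00) and the release name passed to kubeadm later (v1.28.1) describe the same version. A small sketch that derives one from the other so the two stay in sync (k8s_release_from_pkg is a hypothetical helper; it drops the Debian revision after the last hyphen):

```shell
# Hypothetical helper: Debian package version -> Kubernetes release name,
# e.g. 1.28.1-00 -> v1.28.1
k8s_release_from_pkg() {
  local pkg=$1
  echo "v${pkg%-*}"
}

# Usage:
#   kubeadm config images list \
#     --kubernetes-version="$(k8s_release_from_pkg "$KUBERNETES_VERSION")" \
#     --image-repository registry.aliyuncs.com/google_containers
```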

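4. Enable and start the kubelet service. Until kubeadm init or kubeadm join is run, kubelet restarts every few seconds while it waits for its configuration; this is expected.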

systemctl enable --now kubelet

Deploy haproxy and keepalived

Note: run the following steps on all master nodes.

1. Install haproxy and keepalived

apt install -y haproxy keepalived

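2. Create the haproxy configuration file. It is identical on all 3 master nodes; adjust the variables to match your environment. haproxy listens on port 6444 (APISERVER_DEST_PORT) because kube-apiserver already uses port 6443 (APISERVER_SRC_PORT) on the same nodes.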

export APISERVER_DEST_PORT=6444

export APISERVER_SRC_PORT=6443

export MASTER1_ADDRESS=192.168.72.30

export MASTER2_ADDRESS=192.168.72.31

export MASTER3_ADDRESS=192.168.72.32

cp /etc/haproxy/haproxy.cfg{,.bak}

cat >/etc/haproxy/haproxy.cfg<<EOF

global

log 127.0.0.1 local0

log 127.0.0.1 local1 notice

maxconn 20000

daemon

spread-checks 2

defaults

mode http

log global

option tcplog

option dontlognull

option http-server-close

option redispatch

timeout http-request 2s

timeout queue 3s

timeout connect 1s

timeout client 1h

timeout server 1h

timeout http-keep-alive 1h

timeout check 2s

maxconn 18000

backend stats-back

mode http

balance roundrobin

stats uri /haproxy/stats

stats auth admin:1111

frontend stats-front

bind *:8081

mode http

default_backend stats-back

frontend apiserver

bind *:${APISERVER_DEST_PORT}

mode tcp

option tcplog

default_backend apiserver

backend apiserver

mode tcp

option tcp-check

balance roundrobin

default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100

server kube-apiserver-1 ${MASTER1_ADDRESS}:${APISERVER_SRC_PORT} check

server kube-apiserver-2 ${MASTER2_ADDRESS}:${APISERVER_SRC_PORT} check

server kube-apiserver-3 ${MASTER3_ADDRESS}:${APISERVER_SRC_PORT} check

EOF
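The server lines in the apiserver backend follow a fixed pattern, so with more masters it may be easier to generate them. A minimal sketch (render_apiserver_backends is a hypothetical helper) that prints lines in the same format as the configuration above:

```shell
# Hypothetical helper: render_apiserver_backends PORT ADDR...
# Prints one haproxy "server" line per control-plane address.
render_apiserver_backends() {
  local port=$1
  shift
  local i=1 addr
  for addr in "$@"; do
    printf 'server kube-apiserver-%d %s:%s check\n' "$i" "$addr" "$port"
    i=$(( i + 1 ))
  done
}

# Usage:
#   render_apiserver_backends "$APISERVER_SRC_PORT" \
#     "$MASTER1_ADDRESS" "$MASTER2_ADDRESS" "$MASTER3_ADDRESS"
```

After writing the file, haproxy -c -f /etc/haproxy/haproxy.cfg checks the configuration syntax without starting the proxy.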

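3. Create the keepalived configuration file. It is identical on all 3 master nodes; adjust the variables to match your environment. INTERFACE must be the network interface that carries the node IP, and ROUTER_ID (used as virtual_router_id) must not clash with other VRRP groups on the same network.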

export APISERVER_VIP=192.168.72.200

export INTERFACE=ens33

export ROUTER_ID=51

cp /etc/keepalived/keepalived.conf{,.bak}

cat >/etc/keepalived/keepalived.conf<<EOF

global_defs {

router_id ${ROUTER_ID}

vrrp_version 2

vrrp_garp_master_delay 1

vrrp_garp_master_refresh 1

script_user root

enable_script_security

}

vrrp_script check_apiserver {

script "/usr/bin/killall -0 haproxy"

timeout 3

interval 5 # check every 5 second

fall 3 # require 3 failures for KO

rise 2 # require 2 successes for OK

}

vrrp_instance lb-kube-vip {

state BACKUP

interface ${INTERFACE}

virtual_router_id ${ROUTER_ID}

priority 51

advert_int 1

nopreempt

track_script {

check_apiserver

}

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

${APISERVER_VIP} dev ${INTERFACE}

}

}

EOF

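Note: all nodes start in the BACKUP state, and keepalived elects the master among them. Because every node has the same priority (51) and nopreempt is set, the VIP stays on whichever node claims it first and moves only when that node fails.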
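With vrrp_version 2, keepalived follows the VRRPv2 timers from RFC 3768, which gives a rough idea of how long failover takes with the values above. A sketch of the arithmetic (the helper names are hypothetical; results are approximate and ignore the script timeout and network delays):

```shell
# RFC 3768: Skew_Time = (256 - Priority) / 256 seconds,
# Master_Down_Interval = 3 * Advertisement_Interval + Skew_Time.
# Bash has no floating point, so the hypothetical helper returns milliseconds.
vrrp_master_down_ms() {
  local advert_int_s=$1 priority=$2
  echo "$(( 3 * advert_int_s * 1000 + (256 - priority) * 1000 / 256 ))"
}

# Hypothetical helper: seconds for a vrrp_script to be marked failed
# (interval between checks times the number of consecutive failures).
track_script_fail_s() {
  local interval=$1 fall=$2
  echo "$(( interval * fall ))"
}

echo "master down after ~$(vrrp_master_down_ms 1 51) ms"
echo "haproxy failure detected after ~$(track_script_fail_s 5 3) s"
```

With advert_int 1 and priority 51, backups take over roughly 3.8 s after a master disappears without warning; if only haproxy dies, the check_apiserver script needs about 5 s x 3 = 15 s to mark the node as failed before it gives up the VIP.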

4. Start the haproxy and keepalived services

systemctl enable --now haproxy

systemctl enable --now keepalived

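5. On the 3 master nodes, check which one holds the VIP 192.168.72.200

The example below shows the VIP bound to the ens33 interface of master1. You can reboot that node to confirm that the VIP automatically fails over to another node.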

root@master1:~# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:50:56:aa:75:9f brd ff:ff:ff:ff:ff:ff

altname enp2s1

inet 192.168.72.30/24 brd 192.168.72.255 scope global ens33

valid_lft forever preferred_lft forever

inet 192.168.72.200/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::250:56ff:feaa:759f/64 scope link

valid_lft forever preferred_lft forever
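Instead of reading the full ip a output on each master, the check can be scripted. A minimal sketch (holds_vip is a hypothetical helper reading ip addr output on stdin; it compares addresses exactly, so 192.168.72.20 does not match 192.168.72.200):

```shell
# Hypothetical helper: succeed if VIP appears as an "inet" address
# (prefix length ignored) in `ip addr`-style input on stdin.
holds_vip() {
  local vip=$1
  awk -v vip="$vip" '
    $1 == "inet" { split($2, a, "/"); if (a[1] == vip) found = 1 }
    END { exit !found }
  '
}

# Usage:
#   ip -4 addr show dev ens33 | holds_vip 192.168.72.200 && echo "this node holds the VIP"
```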

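Pre-pull the Kubernetes Images

Note: run the following steps on all nodes.

kubeadm pulls the images automatically when the cluster is initialized; pulling them in advance shortens the first initialization. This step is optional.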

1. Check the installed kubeadm version

kubeadm version -o short

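2. List the container images for this Kubernetes version

registry.k8s.io is hosted on infrastructure donated by Google Cloud and AWS and is generally not reachable from mainland China, so use the --image-repository flag to pull from the Aliyun Kubernetes image mirror instead.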

kubeadm config images list \

--kubernetes-version=v1.28.1 \

--image-repository registry.aliyuncs.com/google_containers

3. Run the following command on all nodes to pull the images in advance

kubeadm config images pull \

--kubernetes-version=v1.28.1 \

--image-repository registry.aliyuncs.com/google_containers

4. List the pulled images

root@master1:~# nerdctl -n k8s.io images |grep -v none

REPOSITORY TAG IMAGE ID CREATED PLATFORM SIZE BLOB SIZE

registry.aliyuncs.com/google_containers/coredns v1.10.1 90d3eeb2e210 About a minute ago linux/amd64 51.1 MiB 15.4 MiB

registry.aliyuncs.com/google_containers/etcd 3.5.9-0 b124583790d2 About a minute ago linux/amd64 283.8 MiB 98.1 MiB

registry.aliyuncs.com/google_containers/kube-apiserver v1.28.1 1e9a3ea7d1d4 2 minutes ago linux/amd64 123.1 MiB 33.0 MiB

registry.aliyuncs.com/google_containers/kube-controller-manager v1.28.1 f6838231cb74 2 minutes ago linux/amd64 119.5 MiB 31.8 MiB

registry.aliyuncs.com/google_containers/kube-proxy v1.28.1 feb6017bf009 About a minute ago linux/amd64 73.6 MiB 23.4 MiB

registry.aliyuncs.com/google_containers/kube-scheduler v1.28.1 b76ea016d6b9 2 minutes ago linux/amd64 60.6 MiB 17.9 MiB

registry.aliyuncs.com/google_containers/pause 3.9 7031c1b28338 About a minute ago linux/amd64 728.0 KiB 314.0 KiB
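To confirm that the pre-pull covered every image kubeadm needs, the two lists can be compared. A minimal sketch (missing_images is a hypothetical helper that expects one repository:tag per line on stdin; the nerdctl --format template in the usage comment is an assumption to check against your nerdctl version):

```shell
# Hypothetical helper: missing_images EXPECTED_FILE
# Prints each image from EXPECTED_FILE (one repo:tag per line) that does not
# appear among the repo:tag lines read on stdin.
missing_images() {
  local expected_file=$1 present img
  present=$(cat)
  while IFS= read -r img || [ -n "$img" ]; do
    [ -n "$img" ] || continue
    grep -qxF -- "$img" <<< "$present" || echo "$img"
  done < "$expected_file"
}

# Usage:
#   kubeadm config images list --kubernetes-version=v1.28.1 \
#     --image-repository registry.aliyuncs.com/google_containers > /tmp/expected-images.txt
#   nerdctl -n k8s.io images --format '{{.Repository}}:{{.Tag}}' | missing_images /tmp/expected-images.txt
```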

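Create the Cluster Configuration File

Note: run the following steps only on the first master node.

1. Generate the default cluster initialization configuration, including the default kube-proxy and kubelet component configurations.

kubeadm config print init-defaults --component-configs KubeProxyConfiguration,KubeletConfiguration > kubeadm.yaml

2. Edit the cluster configuration file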

$ cat kubeadm.yaml

apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.72.30
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///var/run/containerd/containerd.sock
  imagePullPolicy: IfNotPresent
  name: master1
  taints: null
---
apiServer:
  timeoutForControlPlane: 4m0s
  certSANs:
  - master1
  - master2
  - master3
  - node1
  - 192.168.72.200
  - 192.168.72.30
  - 192.168.72.31
  - 192.168.72.32
  - 192.168.72.33
controlPlaneEndpoint: 192.168.72.200:6444
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: 1.28.1
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.244.0.0/16
scheduler: {}
---
apiVersion: kubelet.config.k8s.io/v1beta1
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 0s
    enabled: true
  x509:
    clientCAFile: /etc/kubernetes/pki/ca.crt
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 0s
    cacheUnauthorizedTTL: 0s
cgroupDriver: systemd
clusterDNS:
- 10.96.0.10
clusterDomain: cluster.local
containerRuntimeEndpoint: ""
cpuManagerReconcilePeriod: 0s
evictionPressureTransitionPeriod: 0s
fileCheckFrequency: 0s
healthzBindAddress: 127.0.0.1
healthzPort: 10248
httpCheckFrequency: 0s
imageMinimumGCAge: 0s
kind: KubeletConfiguration
logging:
  flushFrequency: 0
  options:
    json:
      infoBufferSize: "0"
  verbosity: 0
memorySwap: {}
nodeStatusReportFrequency: 0s
nodeStatusUpdateFrequency: 0s
resolvConf: /run/systemd/resolve/resolv.conf
rotateCertificates: true
runtimeRequestTimeout: 0s
shutdownGracePeriod: 0s
shutdownGracePeriodCriticalPods: 0s
staticPodPath: /etc/kubernetes/manifests
streamingConnectionIdleTimeout: 0s
syncFrequency: 0s
volumeStatsAggPeriod: 0s
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 0.0.0.0
bindAddressHardFail: false
clientConnection:
  acceptContentTypes: ""
  burst: 0
  contentType: ""
  kubeconfig: /var/lib/kube-proxy/kubeconfig.conf
  qps: 0
clusterCIDR: ""
configSyncPeriod: 0s
conntrack:
  maxPerCore: null
  min: null
  tcpCloseWaitTimeout: null
  tcpEstablishedTimeout: null
detectLocal:
  bridgeInterface: ""
  interfaceNamePrefix: ""
detectLocalMode: ""
enableProfiling: false
healthzBindAddress: ""
hostnameOverride: ""
iptables:
  localhostNodePorts: null
  masqueradeAll: false
  masqueradeBit: null
  minSyncPeriod: 0s
  syncPeriod: 0s
ipvs:
  excludeCIDRs: null
  minSyncPeriod: 0s
  scheduler: ""
  strictARP: false
  syncPeriod: 0s
  tcpFinTimeout: 0s
  tcpTimeout: 0s
  udpTimeout: 0s
kind: KubeProxyConfiguration
logging:
  flushFrequency: 0
  options:
    json:
      infoBufferSize: "0"
  verbosity: 0
metricsBindAddress: ""
mode: "ipvs"
nodePortAddresses: null
oomScoreAdj: null
portRange: ""
showHiddenMetricsForVersion: ""
winkernel:
  enableDSR: false
  forwardHealthCheckVip: false
  networkName: ""
  rootHnsEndpointName: ""
  sourceVip: ""

3. Parameters that need to be set on top of the defaults:

InitConfiguration

kind: InitConfiguration
apiVersion: kubeadm.k8s.io/v1beta3
localAPIEndpoint:
  advertiseAddress: 192.168.72.30   # IP of this (first) master node
  bindPort: 6443                    # kube-apiserver port on this node
nodeRegistration:
  name: master1                     # node name registered in the cluster

ClusterConfiguration

kind: ClusterConfiguration
apiVersion: kubeadm.k8s.io/v1beta3
controlPlaneEndpoint: 192.168.72.200:6444   # keepalived VIP + haproxy frontend port
imageRepository: registry.aliyuncs.com/google_containers
kubernetesVersion: 1.28.1
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.244.0.0/16    # Pod CIDR; must not overlap the node or Service networks
apiServer:
  certSANs:                   # extra names and IPs in the API server certificate
  - master1
  - master2
  - master3
  - node1
  - 192.168.72.200
  - 192.168.72.30
  - 192.168.72.31
  - 192.168.72.32
  - 192.168.72.33

KubeletConfiguration

kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
cgroupDriver: systemd         # must match SystemdCgroup = true in containerd
clusterDNS:
- 10.96.0.10
clusterDomain: cluster.local
containerRuntimeEndpoint: ""

KubeProxyConfiguration

kind: KubeProxyConfiguration
apiVersion: kubeproxy.config.k8s.io/v1alpha1
mode: "ipvs"                  # use IPVS instead of iptables
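The Pod network (10.244.0.0/16), the Service network (10.96.0.0/12) and the node network (192.168.72.0/24 in this example) must not overlap. Two IPv4 CIDR blocks overlap exactly when their addresses agree on the shorter of the two prefixes, which a short sketch can check (ip_to_int and cidrs_overlap are hypothetical helpers; addresses are assumed to have no leading zeros):

```shell
# Hypothetical helper: dotted-quad IPv4 address -> 32-bit integer.
ip_to_int() {
  local IFS=.
  local a b c d
  read -r a b c d <<< "$1"
  echo "$(( (a << 24) | (b << 16) | (c << 8) | d ))"
}

# Hypothetical helper: cidrs_overlap A.B.C.D/X E.F.G.H/Y
# Exit status 0 if the two blocks share at least one address.
cidrs_overlap() {
  local a_ip=${1%/*} a_len=${1#*/} b_ip=${2%/*} b_len=${2#*/}
  local len=$(( a_len < b_len ? a_len : b_len ))
  local mask=$(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$a_ip") & mask) == ($(ip_to_int "$b_ip") & mask) ))
}

# Check the three networks used in this example pairwise.
pod=10.244.0.0/16
svc=10.96.0.0/12
nodes=192.168.72.0/24
for pair in "$pod $svc" "$pod $nodes" "$svc $nodes"; do
  read -r x y <<< "$pair"
  if cidrs_overlap "$x" "$y"; then
    echo "OVERLAP: $x and $y"
  else
    echo "ok: $x and $y"
  fi
done
```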

Initialize the First Master Node

1. On the first master node, run the following command to initialize it:

kubeadm init --upload-certs --config kubeadm.yaml

If initialization fails, check the kubelet logs with the following command.

journalctl -xeu kubelet

2. Save the join control-plane and join worker commands from the output.

......

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

kubeadm join 192.168.72.200:6444 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:293b145b86ee0839a650befd4d32d706852ac5c77848a55e0cb186be29ff38de \

--control-plane --certificate-key 578ad0ee6a1052703962b0a8591d0036f23a514d4456bd08fa253eda00128fca

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.72.200:6444 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:293b145b86ee0839a650befd4d32d706852ac5c77848a55e0cb186be29ff38de

3. After the master node is initialized, configure kubectl access as instructed at the end of the output

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

chown $(id -u):$(id -g) $HOME/.kube/config

4. Check the node status. No network plugin is installed yet, so the node is NotReady

root@master1:~# kubectl get nodes

NAME STATUS ROLES AGE VERSION

node NotReady control-plane 40s v1.28.1

5. Check the pod status. No network plugin is installed yet, so the coredns pods are Pending

root@master1:~# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-66f779496c-44fcc 0/1 Pending 0 32s

kube-system coredns-66f779496c-9cjmf 0/1 Pending 0 32s

kube-system etcd-node 1/1 Running 1 44s

kube-system kube-apiserver-node 1/1 Running 1 44s

kube-system kube-controller-manager-node 1/1 Running 1 44s

kube-system kube-proxy-g4kns 1/1 Running 0 32s

kube-system kube-scheduler-node 1/1 Running 1 44s

Install the Calico Network Plugin

Note: run the following steps only on the first master node.

Reference: https://projectcalico.docs.tigera.io/getting-started/kubernetes/quickstart

1. Install helm on the first master node

version=v3.12.3

curl -LO https://repo.huaweicloud.com/helm/${version}/helm-${version}-linux-amd64.tar.gz

tar -zxvf helm-${version}-linux-amd64.tar.gz

mv linux-amd64/helm /usr/local/bin/helm && rm -rf linux-amd64

2. Add the Calico helm repository

helm repo add projectcalico https://projectcalico.docs.tigera.io/charts

3. Deploy Calico. If Docker Hub is not reachable, you may need to pull the images in advance.

helm install calico projectcalico/tigera-operator \

--namespace tigera-operator --create-namespace

4. Check that the node becomes Ready

root@master1:~# kubectl get nodes

NAME STATUS ROLES AGE VERSION

node Ready control-plane 17m v1.28.1
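For scripting, the STATUS column of kubectl get nodes can be checked directly. A minimal sketch (not_ready_nodes and all_nodes_ready are hypothetical helpers reading kubectl get nodes output on stdin; a status such as Ready,SchedulingDisabled is reported as not ready):

```shell
# Hypothetical helper: print the NAME of every node whose STATUS column is
# not exactly "Ready", reading `kubectl get nodes` output (with header) on stdin.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Hypothetical helper: succeed only if no node is reported as not ready.
all_nodes_ready() {
  [ -z "$(not_ready_nodes)" ]
}

# Usage:
#   kubectl get nodes | not_ready_nodes
```

kubectl also has a built-in way to block until the condition holds: kubectl wait --for=condition=Ready nodes --all --timeout=300s.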

5. Check that the coredns pods are now Running

root@master1:~# kubectl get pods -A

NAMESPACE NAME READY STATUS RESTARTS AGE

calico-apiserver calico-apiserver-c7b9c94d5-ck6n8 1/1 Running 0 4m6s

calico-apiserver calico-apiserver-c7b9c94d5-fjbbh 1/1 Running 0 4m6s

calico-system calico-kube-controllers-9c4d4576f-rtbm5 1/1 Running 0 11m

calico-system calico-node-vwkhs 1/1 Running 0 11m

calico-system calico-typha-76c649f99d-7gdsv 1/1 Running 0 11m

calico-system csi-node-driver-fzlck 2/2 Running 0 11m

kube-system coredns-66f779496c-44fcc 1/1 Running 0 17m

kube-system coredns-66f779496c-9cjmf 1/1 Running 0 17m

kube-system etcd-node 1/1 Running 1 17m

kube-system kube-apiserver-node 1/1 Running 1 17m

kube-system kube-controller-manager-node 1/1 Running 1 17m

kube-system kube-proxy-g4kns 1/1 Running 0 17m

kube-system kube-scheduler-node 1/1 Running 1 17m

tigera-operator tigera-operator-94d7f7696-lzrrz 1/1 Running 0 11m

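Join the Other Master Nodes

1. Run the following command on the second and third master nodes. Use the exact command printed by your own kubeadm init: the token, CA certificate hash and certificate key differ for every cluster.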

kubeadm join 192.168.72.200:6444 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:7e25d6bc9368f5ab747c8c0b3349c5441005e0e15914cd4be1eac6351fb1e320 \

--control-plane --certificate-key fbae5ace48cf806fe5df818174859e3a837cf316156cd6e26ec14c7fca28fba3

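2. If you did not save the join commands after initializing the master, you can regenerate them. The first command below prints the worker join command; --ttl 0 creates a token that never expires, so omit it if the default 24-hour token is enough. For a control-plane join, the uploaded certificates are deleted after two hours, so re-upload them with the second command to get a new certificate key, and append --control-plane --certificate-key <key> to the printed join command:

kubeadm token create --print-join-command --ttl 0

kubeadm init phase upload-certs --upload-certs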
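Combining the two outputs by hand is error-prone, so a small helper can assemble the control-plane join command. A minimal sketch (control_plane_join_cmd is a hypothetical helper; the usage comment assumes, as in current kubeadm releases, that the certificate key is the last line printed by upload-certs):

```shell
# Hypothetical helper: control_plane_join_cmd "WORKER_JOIN_CMD" CERT_KEY
# Trims trailing whitespace from the worker join command and appends the
# control-plane flags.
control_plane_join_cmd() {
  local worker_cmd=$1 cert_key=$2
  worker_cmd=${worker_cmd%"${worker_cmd##*[![:space:]]}"}
  printf '%s --control-plane --certificate-key %s\n' "$worker_cmd" "$cert_key"
}

# Usage on the first master:
#   worker_cmd=$(kubeadm token create --print-join-command)
#   cert_key=$(kubeadm init phase upload-certs --upload-certs | tail -n 1)
#   control_plane_join_cmd "$worker_cmd" "$cert_key"
```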

Join the Worker Node

1. On node1, run the following command to register it with the cluster:

kubeadm join 192.168.72.200:6444 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:7e25d6bc9368f5ab747c8c0b3349c5441005e0e15914cd4be1eac6351fb1e320

2. Check the node status with kubectl get nodes.

root@master1:~# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

master1 Ready control-plane 22m v1.28.1 192.168.72.30 <none> Ubuntu 22.04.2 LTS 5.15.0-76-generic containerd://1.7.3

master2 Ready control-plane 4m34s v1.28.1 192.168.72.31 <none> Ubuntu 22.04.2 LTS 5.15.0-76-generic containerd://1.7.3

master3 Ready control-plane 3m48s v1.28.1 192.168.72.32 <none> Ubuntu 22.04.2 LTS 5.15.0-76-generic containerd://1.7.3

node1 Ready <none> 3m41s v1.28.1 192.168.72.33 <none> Ubuntu 22.04.2 LTS 5.15.0-76-generic containerd://1.7.3

3. The pods finally running in the cluster

root@master1:~# kubectl get pods -A -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

calico-apiserver calico-apiserver-866fdb498d-2jndc 1/1 Running 0 18m 10.244.137.69 master1 <none> <none>

calico-apiserver calico-apiserver-866fdb498d-672gj 1/1 Running 0 18m 10.244.137.70 master1 <none> <none>

calico-system calico-kube-controllers-57b4847fc8-zlf8t 1/1 Running 0 18m 10.244.137.66 master1 <none> <none>

calico-system calico-node-bxjhp 1/1 Running 0 18m 192.168.72.30 master1 <none> <none>

calico-system calico-node-j2zxw 1/1 Running 0 12m 192.168.72.31 master2 <none> <none>

calico-system calico-node-kjfhh 1/1 Running 0 11m 192.168.72.33 node1 <none> <none>

calico-system calico-node-zmngb 1/1 Running 0 11m 192.168.72.32 master3 <none> <none>

calico-system calico-typha-548dd44bd-hzj8b 1/1 Running 0 18m 192.168.72.30 master1 <none> <none>

calico-system calico-typha-548dd44bd-pjpn5 1/1 Running 0 11m 192.168.72.32 master3 <none> <none>

calico-system csi-node-driver-48xkx 2/2 Running 0 18m 10.244.137.65 master1 <none> <none>

calico-system csi-node-driver-rtxt2 2/2 Running 0 11m 10.244.136.1 master3 <none> <none>

calico-system csi-node-driver-ssfbh 2/2 Running 0 11m 10.244.166.129 node1 <none> <none>

calico-system csi-node-driver-v8h9l 2/2 Running 0 12m 10.244.180.1 master2 <none> <none>

kube-system coredns-66f779496c-ldfpv 1/1 Running 0 23m 10.244.137.68 master1 <none> <none>

kube-system coredns-66f779496c-zx8rs 1/1 Running 0 23m 10.244.137.67 master1 <none> <none>

kube-system etcd-master1 1/1 Running 0 23m 192.168.72.30 master1 <none> <none>

kube-system etcd-master2 1/1 Running 0 12m 192.168.72.31 master2 <none> <none>

kube-system etcd-master3 1/1 Running 0 12m 192.168.72.32 master3 <none> <none>

kube-system kube-apiserver-master1 1/1 Running 0 23m 192.168.72.30 master1 <none> <none>

kube-system kube-apiserver-master2 1/1 Running 0 12m 192.168.72.31 master2 <none> <none>

kube-system kube-apiserver-master3 1/1 Running 1 (12m ago) 12m 192.168.72.32 master3 <none> <none>

kube-system kube-controller-manager-master1 1/1 Running 1 (12m ago) 23m 192.168.72.30 master1 <none> <none>

kube-system kube-controller-manager-master2 1/1 Running 0 12m 192.168.72.31 master2 <none> <none>

kube-system kube-controller-manager-master3 1/1 Running 0 11m 192.168.72.32 master3 <none> <none>

kube-system kube-proxy-4dzjw 1/1 Running 0 23m 192.168.72.30 master1 <none> <none>

kube-system kube-proxy-cqkbr 1/1 Running 0 11m 192.168.72.32 master3 <none> <none>

kube-system kube-proxy-vcr8w 1/1 Running 0 11m 192.168.72.33 node1 <none> <none>

kube-system kube-proxy-x6tkn 1/1 Running 0 12m 192.168.72.31 master2 <none> <none>

kube-system kube-scheduler-master1 1/1 Running 1 (12m ago) 23m 192.168.72.30 master1 <none> <none>

kube-system kube-scheduler-master2 1/1 Running 0 12m 192.168.72.31 master2 <none> <none>

kube-system kube-scheduler-master3 1/1 Running 0 12m 192.168.72.32 master3 <none> <none>

tigera-operator tigera-operator-94d7f7696-b5fbl 1/1 Running 1 (12m ago) 19m 192.168.72.30 master1 <none> <none>

Verify Cluster High Availability

1. Shut down master1

root@master1:~# shutdown -h now

2. Check that the VIP has automatically moved to master3

root@master3:~# ip a |grep ens33

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

inet 192.168.72.32/24 brd 192.168.72.255 scope global ens33

inet 192.168.72.200/32 scope global ens33
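Right after master1 goes down, the VIP can take a few seconds to move and kubectl requests may fail in the meantime. A minimal retry helper for that window (wait_for is hypothetical, not a standard tool):

```shell
# Hypothetical helper: wait_for TRIES DELAY_SECONDS COMMAND [ARGS...]
# Runs COMMAND until it succeeds or TRIES attempts have failed,
# sleeping DELAY_SECONDS between attempts.
wait_for() {
  local tries=$1 delay=$2
  shift 2
  local i
  for (( i = 1; i <= tries; i++ )); do
    if "$@"; then
      return 0
    fi
    if (( i < tries )); then
      sleep "$delay"
    fi
  done
  return 1
}

# Usage on master3 after master1 is shut down (gives up after about one minute):
#   wait_for 30 2 kubectl --request-timeout=5s get nodes
```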

3. Configure kubectl access on master3

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

chown $(id -u):$(id -g) $HOME/.kube/config

4. Verify from master3 that the cluster is still accessible

root@master3:~# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master1 NotReady control-plane 39m v1.28.1

master2 Ready control-plane 27m v1.28.1

master3 Ready control-plane 27m v1.28.1

node1 Ready <none> 27m v1.28.1

This completes the deployment of the HA cluster based on haproxy and keepalived.
