Log Querying with Spring Boot + ELK

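The perennial headache with log queries is that log files grow too large and entries from concurrent requests are interleaved, which makes it hard to locate anything. During a rare quiet spell at work I integrated ELK so that log details can be queried by date, endpoint URL, and user.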


I. Installing and deploying elasticsearch + logstash + filebeat + kibana (all at version 7.7.0)

1. Deploying elasticsearch with Docker

Pull the image

docker pull elasticsearch:7.7.0

Create the directories to mount

mkdir -p /data/elk/elasticsearch/data

mkdir -p /data/elk/elasticsearch/config

mkdir -p /data/elk/elasticsearch/plugins

// Create the configuration file

echo "http.host: 0.0.0.0" >> /data/elk/elasticsearch/config/elasticsearch.yml

Create and start the container

docker run --name elasticsearch -p 9200:9200 -p 9300:9300 \
  -e "discovery.type=single-node" \
  -e ES_JAVA_OPTS="-Xms512m -Xmx512m" \
  -v /data/elk/elasticsearch/config/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml \
  -v /data/elk/elasticsearch/data:/usr/share/elasticsearch/data \
  -v /data/elk/elasticsearch/plugins:/usr/share/elasticsearch/plugins \
  -d elasticsearch:7.7.0

Visit http://ip:9200 to confirm that elasticsearch is responding.

2. Deploying logstash with Docker

Pull the image

docker pull logstash:7.7.0

Start logstash temporarily (to copy its data to a persistent directory)

docker run -d --name=logstash logstash:7.7.0

mkdir -p /data/elk/logstash/config/conf.d

docker cp logstash:/usr/share/logstash/config /data/elk/logstash/

docker cp logstash:/usr/share/logstash/data /data/elk/logstash/

docker cp logstash:/usr/share/logstash/pipeline /data/elk/logstash/

// Grant permissions

chmod 777 -R /data/elk/logstash

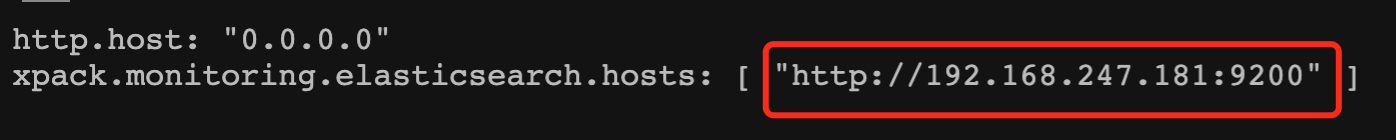

Change the elasticsearch address in the configuration file

vi /data/elk/logstash/config/logstash.yml

Recreate and start logstash

docker rm -f logstash

docker run \
  --name logstash \
  --restart=always \
  -p 5044:5044 \
  -p 9600:9600 \
  -v /data/elk/logstash/config:/usr/share/logstash/config \
  -v /data/elk/logstash/data:/usr/share/logstash/data \
  -v /data/elk/logstash/pipeline:/usr/share/logstash/pipeline \
  -d logstash:7.7.0

3. Deploying filebeat with Docker

Install it on the application server so that it reads the log files directly

Pull the image

docker pull elastic/filebeat:7.7.0

Start it temporarily

docker run -d --name=filebeat elastic/filebeat:7.7.0

Copy the data files

docker cp filebeat:/usr/share/filebeat /data/

chmod 777 -R /data/filebeat

chmod go-w /data/filebeat/filebeat.yml

Edit the configuration file (output to logstash)

vi /data/filebeat/filebeat.yml

filebeat.inputs:
  - type: log
    enabled: true
    paths:
      - /var/log/messages/*.log

filebeat.config:
  modules:
    path: ${path.config}/modules.d/*.yml
    reload.enabled: false

processors:
  - add_cloud_metadata: ~
  - add_docker_metadata: ~

output.logstash:
  hosts: ["192.168.247.181:5044"]
  # No index setting is needed here: the index names are set in the
  # logstash output (beat.conf), and "indices" is not an option of this output.
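The grok filter used later expects a stack trace to arrive in the same event as the log line that precedes it, whereas the input above ships every physical line as its own event unless multiline options (`multiline.pattern`, `multiline.negate`, `multiline.match`) are set on it. The Java sketch below only illustrates the grouping rule such a setting would apply, assuming every new log event starts with `[`; the class name and the sample lines are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

class MultilineGrouper {

    // Groups physical lines into events: a line starting with '[' opens a new
    // event, any other line is appended to the event currently being built.
    static List<String> group(List<String> lines) {
        List<String> events = new ArrayList<>();
        StringBuilder current = null;
        for (String line : lines) {
            if (current == null || line.startsWith("[")) {
                if (current != null) {
                    events.add(current.toString());
                }
                current = new StringBuilder(line);
            } else {
                current.append('\n').append(line);
            }
        }
        if (current != null) {
            events.add(current.toString());
        }
        return events;
    }

    public static void main(String[] args) {
        // Made-up sample: one error line with a stack trace, then a second event.
        List<String> lines = List.of(
            "[admin] [node1] [2020-06-01 10:00:00,123] [ERROR] request failed",
            "java.lang.IllegalStateException: boom",
            "\tat com.example.Demo.run(Demo.java:10)",
            "[admin] [node1] [2020-06-01 10:00:01,456] [INFO] next request");
        group(lines).forEach(e -> System.out.println("EVENT:\n" + e));
    }
}
```

With this rule the exception line and the stack frame stay attached to the `ERROR` line, which is the shape the first grok expression is written for.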

Recreate and start filebeat

docker rm -f filebeat

/data/logs is the directory where the project writes its logs

docker run \
  --name=filebeat \
  --restart=always \
  -v /data/filebeat:/usr/share/filebeat \
  -v /data/logs:/var/log/messages \
  -d elastic/filebeat:7.7.0

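Add the filebeat pipeline configuration to logstash

Adapt the log parsing format to your own project. Here, request-header logs go to the request index and logs written while a request is being processed go to the backend index.

// The logback output pattern; the grok expression must match it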

<pattern>[%X{appName:-admin}] [%X{nodeName:-127.0.0.1}] [%d{DEFAULT}] [%p] [%t] [traceId:%X{traceId:-0}] [user:%X{userName:-root}] [ip:%X{ip:-127.0.0.1}] [%c:%L] %m%n</pattern>
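To see which fields a line in this format carries, it can help to match a sample with plain `java.util.regex` before writing the grok expression. The sketch below is a simplified stand-in for the grok pattern in the logstash filter, not a replacement for it; the class name and the sample line are made up, and the group names simply mirror the field names the filter uses (`localhost` holds the `[%c:%L]` logger-and-line part).

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class LogLineParser {

    private static final String[] FIELDS = {
        "moduleName", "nodeName", "datetime", "level", "thread",
        "traceId", "user", "ip", "localhost", "message"
    };

    // One bracketed group per element of the log pattern, then the message.
    private static final Pattern LINE = Pattern.compile(
        "^\\[(?<moduleName>\\S+)\\]\\s+\\[(?<nodeName>\\S+)\\]\\s+\\[(?<datetime>.*?)\\]\\s+"
        + "\\[(?<level>\\S+)\\]\\s+\\[(?<thread>\\S+)\\]\\s+\\[traceId:(?<traceId>\\S+)\\]\\s+"
        + "\\[user:(?<user>\\S+)\\]\\s+\\[ip:(?<ip>\\S+)\\]\\s+\\[(?<localhost>\\S+)\\]\\s+"
        + "(?<message>.*)$",
        Pattern.DOTALL);

    // Returns the extracted fields, or an empty map if the line does not match.
    static Map<String, String> parse(String line) {
        Map<String, String> fields = new LinkedHashMap<>();
        Matcher m = LINE.matcher(line);
        if (m.matches()) {
            for (String name : FIELDS) {
                fields.put(name, m.group(name));
            }
        }
        return fields;
    }

    public static void main(String[] args) {
        String sample = "[admin] [127.0.0.1] [2020-06-01 10:00:00,123] [INFO] "
            + "[http-nio-8080-exec-1] [traceId:abc123] [user:root] [ip:127.0.0.1] "
            + "[com.example.DemoController:42] hello world";
        parse(sample).forEach((k, v) -> System.out.println(k + " = " + v));
    }
}
```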

vi /data/elk/logstash/config/conf.d/beat.conf

input {
  beats {
    port => 5044
  }
}

filter {
  # Keep a copy of the raw line before grok overwrites "message"
  mutate {
    copy => { "message" => "logmessage" }
  }

  # First pattern: log line followed by an exception and stack trace.
  # Second pattern: plain log line.
  grok {
    match => {
      "message" => [
        "\A\[%{NOTSPACE:moduleName}\]\s+\[%{NOTSPACE:nodeName}\]\s+\[%{DATA:datetime}]\s+\[%{NOTSPACE:level}]\s+\[%{NOTSPACE:thread}\]\s+\[traceId:%{NOTSPACE:traceId}\]\s+\[user:%{NOTSPACE:user}\]\s+\[ip:%{NOTSPACE:ip}\]\s+\[%{NOTSPACE:localhost}\]\s+%{GREEDYDATA:message}[\n\t](?<exception>(?<exceptionType>^.*(?:Exception|Error)):\s+(?<exceptionMessage>(.)*?)[\n\t](?<stacktrace>(?m)(.*)))",
        "\A\[%{NOTSPACE:moduleName}\]\s+\[%{NOTSPACE:nodeName}\]\s+\[%{DATA:datetime}]\s+\[%{NOTSPACE:level}]\s+\[%{NOTSPACE:thread}\]\s+\[traceId:%{NOTSPACE:traceId}\]\s+\[user:%{NOTSPACE:user}\]\s+\[ip:%{NOTSPACE:ip}\]\s+\[%{NOTSPACE:localhost}\]\s+(?<message>(?m).*)"
      ]
    }
    overwrite => [ "message" ]
  }

  # Parse the "datetime" field captured by grok into @timestamp.
  # The field is kept because the query code sorts on it.
  date {
    match => ["datetime", "yyyy-MM-dd HH:mm:ss,SSS"]
  }

  # Lines that contain "requestUri" are request-header logs
  ruby {
    code => 'event.set("document_type", "request") if event.get("message").include?"requestUri"'
  }

  if [document_type] == 'request' {
    json {
      source => "message"
      skip_on_invalid_json => true
      remove_field => ["message"]
    }
  }
}

output {
  if [document_type] == 'request' {
    elasticsearch {
      hosts => "192.168.247.181:9200"
      index => "request-%{+YYYY.MM.dd}"
      sniffing => true
      manage_template => false
      template_overwrite => true
    }
  } else {
    elasticsearch {
      hosts => "192.168.247.181:9200"
      index => "backend-%{+YYYY.MM.dd}"
      sniffing => true
      manage_template => false
      template_overwrite => true
    }
  }
}
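Both outputs write to one index per day (`request-%{+YYYY.MM.dd}` and `backend-%{+YYYY.MM.dd}`), with the date taken from the event's `@timestamp` in UTC. As a sketch of what that naming implies for a client, the hypothetical helper below lists the index names that cover a date range, which could be passed to a search in place of a wildcard. It illustrates the naming scheme only and is not part of the original setup.

```java
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;
import java.util.ArrayList;
import java.util.List;

class DailyIndexNames {

    // Same layout as the logstash index suffix, e.g. 2020.06.01
    private static final DateTimeFormatter DAY = DateTimeFormatter.ofPattern("yyyy.MM.dd");

    // Returns prefix-yyyy.MM.dd for every day from first to last, inclusive.
    static List<String> between(String prefix, LocalDate first, LocalDate last) {
        List<String> names = new ArrayList<>();
        for (LocalDate day = first; !day.isAfter(last); day = day.plusDays(1)) {
            names.add(prefix + "-" + day.format(DAY));
        }
        return names;
    }

    public static void main(String[] args) {
        List<String> names = between("request", LocalDate.of(2020, 5, 30), LocalDate.of(2020, 6, 1));
        names.forEach(System.out::println);
    }
}
```

One caveat with explicit names: a search against a concrete index that does not exist fails unless missing indices are ignored, so a wildcard such as `request-*` is the more forgiving choice when some days may have no logs.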

Point logstash at the filebeat pipeline configuration

vi /data/elk/logstash/config/logstash.yml

// Paths inside the Docker container

path.config: /usr/share/logstash/config/conf.d/*.conf

path.logs: /usr/share/logstash/logs

Restart logstash

docker restart logstash

4. Installing kibana with Docker to view the logs

Pull the image

docker pull kibana:7.7.0

Configuration file

Remember to substitute your own elasticsearch IP

mkdir -p /data/elk/kibana/config/

vi /data/elk/kibana/config/kibana.yml

with the following contents

#

# ** THIS IS AN AUTO-GENERATED FILE **

#

# Default Kibana configuration for docker target

server.name: kibana

server.host: "0"

elasticsearch.hosts: [ "http://192.168.247.181:9200" ]

xpack.monitoring.ui.container.elasticsearch.enabled: true

Start kibana

docker run -d \
  --name=kibana \
  --restart=always \
  -p 5601:5601 \
  -v /data/elk/kibana/config/kibana.yml:/usr/share/kibana/config/kibana.yml \
  kibana:7.7.0

Visit http://ip:5601

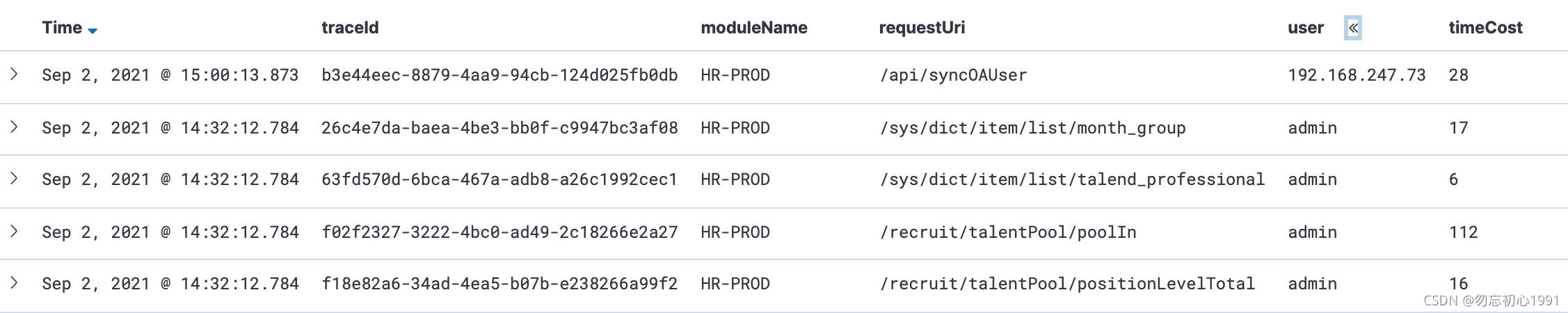

request* index

backend* index

II. Integrating with Spring Boot for visual querying

1. Add the dependencies to pom.xml

<dependency>
    <groupId>org.elasticsearch.client</groupId>
    <artifactId>elasticsearch-rest-high-level-client</artifactId>
    <version>7.1.0</version>
</dependency>

<dependency>
    <groupId>org.elasticsearch</groupId>
    <artifactId>elasticsearch</artifactId>
    <version>7.1.0</version>
</dependency>

2. ES configuration class

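import org.apache.http.HttpHost;
import org.elasticsearch.client.RestClient;
import org.elasticsearch.client.RestClientBuilder;
import org.elasticsearch.client.RestHighLevelClient;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

@Configuration
public class ElasticsearchConfiguration {

    // Address of the elasticsearch node deployed above
    private String host = "192.168.247.181";
    private int port = 9200;

    // Timeouts in milliseconds
    private int connTimeout = 3000;
    private int socketTimeout = 5000;
    private int connectionRequestTimeout = 500;

    // The client is closed automatically when the Spring context shuts down
    @Bean(destroyMethod = "close", name = "client")
    public RestHighLevelClient initRestClient() {
        RestClientBuilder builder = RestClient.builder(new HttpHost(host, port))
                .setRequestConfigCallback(requestConfigBuilder -> requestConfigBuilder
                        .setConnectTimeout(connTimeout)
                        .setSocketTimeout(socketTimeout)
                        .setConnectionRequestTimeout(connectionRequestTimeout));
        return new RestHighLevelClient(builder);
    }
}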

3. Querying the data

@PostMapping("searchRequest")
public Map<String, Object> searchRequest(@RequestBody RequestLogForm param) throws Exception {
    SearchSourceBuilder sourceBuilder = new SearchSourceBuilder();
    BoolQueryBuilder boolQueryBuilder = QueryBuilders.boolQuery();

    // Each filter is added only when the caller supplied a value
    if (StringUtils.isNotBlank(param.getModuleName())) {
        boolQueryBuilder.must(QueryBuilders.termQuery("moduleName.keyword", param.getModuleName()));
    }
    if (StringUtils.isNotBlank(param.getRequestUri())) {
        boolQueryBuilder.must(QueryBuilders.matchPhraseQuery("requestUri", param.getRequestUri()));
    }
    if (StringUtils.isNotBlank(param.getTraceId())) {
        boolQueryBuilder.must(QueryBuilders.termQuery("traceId.keyword", param.getTraceId()));
    }
    if (StringUtils.isNotBlank(param.getUser())) {
        boolQueryBuilder.must(QueryBuilders.termQuery("user.keyword", param.getUser()));
    }

    // Optional time range on @timestamp; note that each bound is shifted forward by one hour
    if (param.getBeginTime() != null || param.getEndTime() != null) {
        RangeQueryBuilder rangeQueryBuilder = QueryBuilders.rangeQuery("@timestamp");
        if (param.getBeginTime() != null) {
            rangeQueryBuilder.gte(new java.util.Date(param.getBeginTime().getTime() + 3600 * 1000));
        }
        if (param.getEndTime() != null) {
            rangeQueryBuilder.lte(new java.util.Date(param.getEndTime().getTime() + 3600 * 1000));
        }
        boolQueryBuilder.must(rangeQueryBuilder);
    }

    // Paging (page is 1-based), timeout, and newest-first ordering
    sourceBuilder.query(boolQueryBuilder);
    sourceBuilder.from((param.getPage() - 1) * param.getLimit());
    sourceBuilder.size(param.getLimit());
    sourceBuilder.timeout(new TimeValue(60, TimeUnit.SECONDS));
    sourceBuilder.sort("@timestamp", SortOrder.DESC);

    SearchRequest searchRequest = new SearchRequest();
    searchRequest.source(sourceBuilder);
    // This index holds one record per request, so the matching records reflect how requests were invoked
    searchRequest.indices("request-*");

    log.info("ES Query: \n{}", sourceBuilder.toString());
    SearchResponse searchResponse = client.search(searchRequest, RequestOptions.DEFAULT);
    log.info("ES Response: \n{}", JSON.toJSONString(searchResponse));

    List<RequestLog> list = new ArrayList<>();
    for (SearchHit documentFields : searchResponse.getHits().getHits()) {
        RequestLog requestLog = JSON.parseObject(documentFields.getSourceAsString(), RequestLog.class);
        list.add(requestLog);
    }

    Map<String, Object> result = new HashMap<>(4);
    result.put("code", 0);
    result.put("message", "SUCCESS");
    result.put("data", list);
    result.put("count", searchResponse.getHits().getTotalHits().value);
    return result;
}
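The `from`/`size` paging in this handler is subject to Elasticsearch's `index.max_result_window` setting, which defaults to 10000: a request whose `from + size` exceeds it is rejected. A minimal sketch of a guard that could run before the query is built, assuming 1-based page numbers as in the handler; the class and method names are hypothetical.

```java
class PageWindow {

    // Elasticsearch default for index.max_result_window
    static final int MAX_RESULT_WINDOW = 10_000;

    // Converts a 1-based page number and page size into the "from" offset,
    // rejecting values that would reach past the result window.
    static int offset(int page, int limit) {
        if (page < 1 || limit < 1) {
            throw new IllegalArgumentException("page and limit must be >= 1");
        }
        long from = (long) (page - 1) * limit; // long avoids int overflow on large pages
        if (from + limit > MAX_RESULT_WINDOW) {
            throw new IllegalArgumentException(
                "from + size = " + (from + limit) + " exceeds " + MAX_RESULT_WINDOW);
        }
        return (int) from;
    }

    public static void main(String[] args) {
        System.out.println(offset(1, 20));
        System.out.println(offset(3, 20));
    }
}
```

Failing early with a clear message is usually easier to handle in the UI than the search error Elasticsearch would return for the same request.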

@GetMapping("searchBackend/{traceId}")
public String searchBackend(@PathVariable String traceId) throws Exception {
    SearchSourceBuilder sourceBuilder = new SearchSourceBuilder();
    BoolQueryBuilder boolQueryBuilder = QueryBuilders.boolQuery();
    boolQueryBuilder.must(QueryBuilders.termQuery("traceId.keyword", traceId));

    sourceBuilder.query(boolQueryBuilder);
    sourceBuilder.from(0);
    // Maximum number of results Elasticsearch returns; keep in line with the Elasticsearch configuration
    sourceBuilder.size(10000);
    sourceBuilder.timeout(new TimeValue(60, TimeUnit.SECONDS));
    // Order by log time first, then by position in the log file
    sourceBuilder.sort("datetime.keyword", SortOrder.ASC);
    sourceBuilder.sort("log.offset", SortOrder.ASC);

    SearchRequest searchRequest = new SearchRequest();
    searchRequest.source(sourceBuilder);
    // This index holds the logs written while each request executes, so one request has many records
    searchRequest.indices("backend-*");

    log.info("ES Query: \n{}", sourceBuilder.toString());
    SearchResponse searchResponse = client.search(searchRequest, RequestOptions.DEFAULT);

    // Report shard failures instead of silently returning partial results
    for (ShardSearchFailure ssf : searchResponse.getShardFailures()) {
        log.warn("ES shard failure: {}", ssf.reason());
    }

    // Concatenate the log lines into one HTML fragment
    StringBuilder concatMessage = new StringBuilder();
    for (SearchHit documentFields : searchResponse.getHits().getHits()) {
        BackendLog backendLog = JSON.parseObject(documentFields.getSourceAsString(), BackendLog.class);
        concatMessage.append(backendLog.getDatetime()).append(" ")
                .append(backendLog.getLocalhost()).append(" ")
                .append(backendLog.getMessage()).append("<br/>");
        concatMessage.append(" <br/>");
    }
    return concatMessage.toString();
}
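The two sort keys matter because several log lines can share the same millisecond timestamp; `log.offset`, the byte offset filebeat records for each line within its file, breaks those ties. Sorting `datetime` as a string works here because the `yyyy-MM-dd HH:mm:ss,SSS` layout orders lexicographically the same way it orders chronologically. The same ordering expressed as a plain Java comparator, with a hypothetical `Entry` type standing in for `BackendLog`:

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

class BackendOrdering {

    // Hypothetical stand-in for BackendLog with just the fields needed here.
    static final class Entry {
        final String datetime;
        final long offset;
        final String message;

        Entry(String datetime, long offset, String message) {
            this.datetime = datetime;
            this.offset = offset;
            this.message = message;
        }
    }

    // Timestamp first, file offset as the tie-breaker.
    static final Comparator<Entry> BY_TIME_THEN_OFFSET =
        Comparator.comparing((Entry e) -> e.datetime).thenComparingLong(e -> e.offset);

    // Returns the messages in log order without modifying the input list.
    static List<String> orderedMessages(List<Entry> entries) {
        List<Entry> sorted = new ArrayList<>(entries);
        sorted.sort(BY_TIME_THEN_OFFSET);
        List<String> messages = new ArrayList<>();
        for (Entry e : sorted) {
            messages.add(e.message);
        }
        return messages;
    }

    public static void main(String[] args) {
        List<Entry> entries = List.of(
            new Entry("2020-06-01 10:00:00,123", 200, "second line, same millisecond"),
            new Entry("2020-06-01 10:00:00,123", 100, "first line, same millisecond"),
            new Entry("2020-06-01 09:59:59,999", 900, "earlier line"));
        orderedMessages(entries).forEach(System.out::println);
    }
}
```

The offset is only meaningful within a single log file, so the tie-break assumes that lines sharing a timestamp come from the same file.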
