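Source Code Analysis of the Linux PPP Implementation

Author: kwest <exboy@163.com> Version: v0.7

© All rights reserved

Reposting must retain the author's attribution; commercial use is strictly prohibited.

Preface:

PPP (Point-to-Point Protocol) is a widely used data link layer protocol. PPPoE, the broadband dial-up protocol widely used in China, is built on PPP, and the PPP-related protocols PPTP and L2TP are commonly used for VPNs (virtual private networks). With the rise of the Android smartphone platform, PPP is also used for GPRS dial-up and for setting up 3G/4G data paths, so it is widely deployed in embedded communication devices and smartphones. This article analyzes the key code of the Linux PPP implementation and its basic data send/receive flow; for a detailed description of the PPP protocol itself, refer to the RFCs and related protocol documentation.

Module composition: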

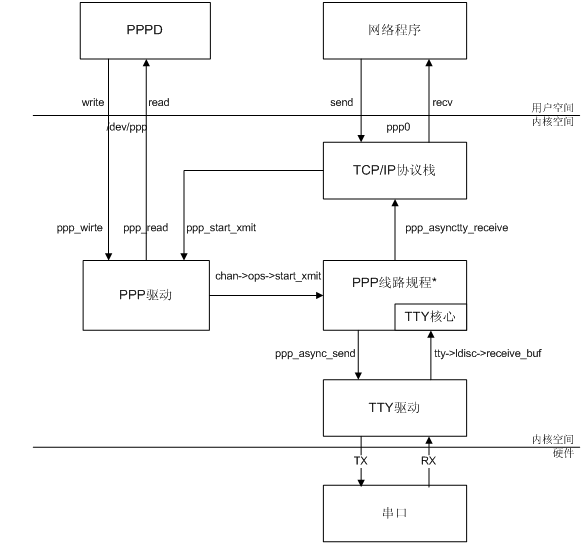

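The figure above sketches the PPP modules, which include:

PPPD: the user-space PPP application.

PPP driver: the in-kernel PPP driver; the kernel sources are ppp_generic.c and slhc.c under /drivers/net/.

PPP line discipline: the PPP TTY line discipline; the kernel sources are ppp_async.c and ppp_synctty.c under /drivers/net/. This article considers only asynchronous PPP.

TTY core: the generic framework layer for TTY drivers and line disciplines.

TTY driver: the serial TTY driver, which is hardware specific and not discussed in this article.

Note: the pppd source quoted in this article comes from the Android 2.3 source tree, and the kernel source version is linux-2.6.18.

The Linux PPP implementation consists of two major parts: PPPD and PPPK. PPPD is the user-space application responsible for the concrete PPP configuration, such as the MTU, dial mode, authentication method, and the user name/password needed for authentication. PPPK refers to the kernel part of PPP, that is, the PPP driver and the PPP line discipline in the figure above. PPPD manages and controls PPPK through the device file interface /dev/ppp provided by the PPP driver, so that the configuration policy the user wants is carried out by PPPK. PPPD is also responsible for establishing the three protocol phases, from LCP through PAP/CHAP authentication to IPCP, and for maintaining their state machines. In terms of Linux design philosophy, PPPD is policy and PPPK is mechanism. In terms of data flow, all control frames (LCP, PAP/CHAP/EAP, IPCP/IPXCP, and so on) are sent, received, and negotiated by PPPD, whereas data packets after the link is established are forwarded directly by PPPK. If Linux is viewed as a communication platform, PPPD is the control plane and PPPK is the data plane.

PPPD and PPPK are tightly coupled in Linux. In theory other user-space programs could implement a PPP stack on top of the interfaces PPPK provides, but PPPD remains by far the most widely used. The PPPD source is fairly complex: it supports many UNIX-like platforms, covers three main kinds of drivers (TTY, character, and Ethernet), and mixes TTY, PTY, Ethernet, and other interfaces, which makes the code large and hard to follow. Below we peel away the layers to isolate the main code path in PPPD; where an important system call is encountered, I analyze its implementation in the Linux kernel in detail.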

Source code analysis:

The PPPD main function main():

Stage 1:

| pppd/main.c -> main(): |

| …… new_phase(PHASE_INITIALIZE); /* pppd's state machine; currently in the initialization phase */ /* * Initialize magic number generator now so that protocols may * use magic numbers in initialization. */ magic_init();

/* * Initialize each protocol. */ for(i=0;(protp=protocols[i])!= NULL;++i) /* protocols[] is the global array of protocols */ (*protp->init)(0); /* initialize every protocol in the array */

/* * Initialize the default channel. */ tty_init(); /* initialize the channel; the default is the global tty_channel, which holds many TTY function pointers */ if(!options_from_file(_PATH_SYSOPTIONS,!privileged,0,1) /* parse the options in /etc/ppp/options */ ||!options_from_user() ||!parse_args(argc-1,argv+1)) /* parse pppd's command-line arguments */ exit(EXIT_OPTION_ERROR); devnam_fixed=1; /* can no longer change device name */

/* * Work out the device name, if it hasn't already been specified, * and parse the tty's options file. */ if(the_channel->process_extra_options) (*the_channel->process_extra_options)(); /* actually calls tty_process_extra_options to parse the TTY options */ if(!ppp_available()){ /* check whether the /dev/ppp device file is usable */ option_error("%s",no_ppp_msg); exit(EXIT_NO_KERNEL_SUPPORT); } /* * Check that the options given are valid and consistent. */ check_options(); /* check the option values */ if(!sys_check_options()) /* check system options, e.g. whether the kernel supports Multilink */ exit(EXIT_OPTION_ERROR); auth_check_options(); /* check the authentication options */ #ifdef HAVE_MULTILINK mp_check_options(); #endif for(i=0;(protp=protocols[i])!= NULL;++i) if(protp->check_options!= NULL) (*protp->check_options)(); /* check each control protocol's options */ if(the_channel->check_options) (*the_channel->check_options)(); /* actually calls tty_check_options to check the TTY options */

…… /* * Detach ourselves from the terminal, if required, * and identify who is running us. */ if(!nodetach&&!updetach) detach(); /* by default run in the background as a daemon; the nodetach option in /etc/ppp/options keeps pppd in the foreground */ …… syslog(LOG_NOTICE,"pppd %s started by %s, uid %d",VERSION,p,uid); /* the familiar log line; pppd is now ready to run */ script_setenv("PPPLOGNAME",p,0);

if(devnam[0]) script_setenv("DEVICE",devnam,1); slprintf(numbuf,sizeof(numbuf),"%d",getpid()); script_setenv("PPPD_PID",numbuf,1);

setup_signals(); /* install the signal handlers */

create_linkpidfile(getpid()); /* create the PID file */

waiting=0;

/* * If we're doing dial-on-demand, set up the interface now. */ if(demand){ /* running in dial-on-demand mode (configurable) */ /* * Open the loopback channel and set it up to be the ppp interface. */ fd_loop=open_ppp_loopback(); /* analyzed in detail below */ set_ifunit(1); /* set the IFNAME environment variable to the interface name, e.g. ppp0 */ /* * Configure the interface and mark it up, etc. */ demand_conf(); } (Stage 2) …… |

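PPP includes various control protocols such as LCP, PAP, CHAP, and IPCP. These have much in common, so PPPD represents each one with a struct protent and stores them in the control protocol array protocols[]. The four most commonly used are LCP, PAP, CHAP, and IPCP.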

| /* * PPP Data Link Layer "protocol" table. * One entry per supported protocol. * The last entry must be NULL. */ struct protent *protocols[]={ &lcp_protent, /* LCP */ &pap_protent, /* PAP */ &chap_protent, /* CHAP */ #ifdef CBCP_SUPPORT &cbcp_protent, #endif &ipcp_protent, /* IPCP, for IPv4 */ #ifdef INET6 &ipv6cp_protent, /* IPV6CP, for IPv6 */ #endif &ccp_protent, &ecp_protent, #ifdef IPX_CHANGE &ipxcp_protent, #endif #ifdef AT_CHANGE &atcp_protent, #endif &eap_protent, NULL }; |

Each control protocol is represented by a protent structure, which holds the function pointers used to handle that protocol:

| /* * The following struct gives the addresses of procedures to call * for a particular protocol. */ struct protent{ u_short protocol; /* PPP protocol number */ /* Initialization procedure */ void (*init)__P((int unit)); /* initialization hook, called from main() */ /* Process a received packet */ void (*input)__P((int unit, u_char *pkt,int len)); /* handles a received packet */ /* Process a received protocol-reject */ void (*protrej)__P((int unit)); /* handles a received Protocol-Reject */ /* Lower layer has come up */ void (*lowerup)__P((int unit)); /* called when the lower layer comes up */ /* Lower layer has gone down */ void (*lowerdown)__P((int unit)); /* called when the lower layer goes down */ /* Open the protocol */ void (*open)__P((int unit)); /* opens the protocol */ /* Close the protocol */ void (*close)__P((int unit,char *reason)); /* closes the protocol */ /* Print a packet in readable form */ int (*printpkt)__P((u_char *pkt,int len, void (*printer)__P((void *,char *,...)), void *arg)); /* prints packet contents, for debugging */ /* Process a received data packet */ void (*datainput)__P((int unit, u_char *pkt,int len)); /* handles a received data packet */ bool enabled_flag; /* 0 iff protocol is disabled */ char *name; /* Text name of protocol */ char *data_name; /* Text name of corresponding data protocol */ option_t *options; /* List of command-line options */ /* Check requested options, assign defaults */ void (*check_options)__P((void)); /* checks the options related to this protocol */ /* Configure interface for demand-dial */ int (*demand_conf)__P((int unit)); /* what must be done to configure the interface for dial-on-demand */ /* Say whether to bring up link for this pkt */ int (*active_pkt)__P((u_char *pkt,int len)); /* inspects the packet type and decides whether to bring up the link */ }; |
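The table-driven design above can be illustrated with a reduced sketch. The struct below is a hypothetical, cut-down stand-in for protent (only a protocol number, a name, and an init hook are kept), and every `demo_` name and `init_all` are illustrative, not pppd symbols. It shows how a NULL-terminated array of pointers lets one loop initialize every protocol.

```c
#include <stddef.h>

/* Hypothetical, reduced stand-in for pppd's struct protent. */
struct demo_protent {
    unsigned short protocol;      /* PPP protocol number */
    const char *name;             /* text name of the protocol */
    void (*init)(int unit);       /* initialization hook */
};

static int demo_init_calls;       /* counts how many init hooks ran */

static void demo_init(int unit)
{
    (void)unit;
    demo_init_calls++;
}

static struct demo_protent demo_lcp  = { 0xc021, "LCP",  demo_init };
static struct demo_protent demo_ipcp = { 0x8021, "IPCP", demo_init };

/* The last entry must be NULL, mirroring the protocols[] convention. */
static struct demo_protent *demo_protocols[] = { &demo_lcp, &demo_ipcp, NULL };

/* Walk the table with the same loop shape main() uses; returns the entry count. */
static int init_all(struct demo_protent **table)
{
    struct demo_protent *protp;
    int i;

    for (i = 0; (protp = table[i]) != NULL; ++i)
        (*protp->init)(0);
    return i;
}
```

Calling `init_all(demo_protocols)` runs both hooks once. Adding a protocol then only requires a new table entry, which is presumably why pppd keeps the conditional `#ifdef` entries inside the array itself.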

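main() calls the init() function of every supported control protocol, then initializes the TTY channel, parses the configuration file and command-line arguments, and then checks whether the kernel supports the PPP driver:

| pppd/sys-linux.c main() -> ppp_available(): |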

| int ppp_available(void) { …… no_ppp_msg= "This system lacks kernel support for PPP. This could be because\n" "the PPP kernel module could not be loaded, or because PPP was not\n" "included in the kernel configuration. If PPP was included as a\n" "module, try `/sbin/modprobe -v ppp'. If that fails, check that\n" "ppp.o exists in /lib/modules/`uname -r`/net.\n" "See README.linux file in the ppp distribution for more details.\n";

/* get the kernel version now, since we are called before sys_init */ uname(&utsname); osmaj=osmin=ospatch=0; sscanf(utsname.release,"%d.%d.%d",&osmaj,&osmin,&ospatch); kernel_version=KVERSION(osmaj,osmin,ospatch);

fd=open("/dev/ppp", O_RDWR); if(fd>=0){ new_style_driver=1; /* the kernel PPP driver (PPPK) is present */

/* XXX should get from driver */ driver_version=2; driver_modification=4; driver_patch=0; close(fd); return 1; } …… } |

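ppp_available tries to open the /dev/ppp device file to determine whether the PPP driver is loaded in the kernel. If the device file cannot be opened, it uses uname to obtain the kernel version and decide whether this kernel supports the PPP driver; on a very old kernel (before 2.3.x) it opens a PTY device file and sets the PPP line discipline instead. Kernels in common use today are almost all 2.6 or later and support the PPP driver, so this article does not analyze the old-driver path that uses a PTY.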
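The probing idea is simple enough to sketch in isolation. The helper below is a hypothetical illustration (the name `device_available` is not from pppd): it reports whether a device node can be opened read/write, which is the same test the excerpt applies to /dev/ppp.

```c
#include <fcntl.h>
#include <unistd.h>

/*
 * Return 1 if the device node at `path` can be opened read/write,
 * 0 otherwise. The descriptor is closed again immediately; only the
 * success of open() matters.
 */
static int device_available(const char *path)
{
    int fd = open(path, O_RDWR);

    if (fd < 0)
        return 0;
    close(fd);
    return 1;
}
```

On a system with the PPP driver loaded, `device_available("/dev/ppp")` would be expected to return 1 for a sufficiently privileged caller; a failure can mean either a missing driver or insufficient permissions, so the result alone does not say which.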

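Next the options are validated. They can come from the configuration file /etc/ppp/options or from the command line. PPPD contains a great deal of option handling, which is not analyzed item by item here.

After that PPPD either becomes a daemon or stays in the foreground, and sets up some environment variables and signal handlers. Then comes the first key part: when the variable demand is 1, PPPD runs in dial-on-demand mode.

What is dial-on-demand? Anyone who has used a wireless router knows that the PPPoE configuration page usually offers a "dial on demand" option. While there is no traffic to the external network, the PPP link is not established. When traffic to the external network is detected, PPP dials and connects to the ISP's access server, and billing starts only after the dial-up succeeds. Conversely, if there is no external traffic for a certain period, PPP drops the connection to save the user money. Now that broadband is common and mostly billed at a flat monthly rate, this feature matters little to home broadband users. For 3G/4G networks that are billed by traffic, however, dial-on-demand is still useful.

How is dial-on-demand implemented in PPP? First, open_ppp_loopback is called: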

| pppd/sys-linux.c main() -> open_ppp_loopback(): |

| int open_ppp_loopback(void) { int flags;

looped=1; /* set the global variable looped to 1; it is used later */ if(new_style_driver){ /* allocate ourselves a ppp unit */ if(make_ppp_unit()<0) /* create the PPP network interface */ die(1); modify_flags(ppp_dev_fd,0, SC_LOOP_TRAFFIC); /* set SC_LOOP_TRAFFIC via ioctl */ set_kdebugflag(kdebugflag); ppp_fd=-1; return ppp_dev_fd; }

…… (old driver code below, omitted) } |

The global variable new_style_driver was already set to 1 in ppp_available. Next, make_ppp_unit opens the /dev/ppp device file and requests a new unit.

| pppd/sys-linux.c main() -> open_ppp_loopback() -> make_ppp_unit(): |

| static int make_ppp_unit() { int x,flags;

if(ppp_dev_fd>=0){ /* if it is already open, close it first */ dbglog("in make_ppp_unit, already had /dev/ppp open?"); close(ppp_dev_fd); } ppp_dev_fd=open("/dev/ppp", O_RDWR); /* open /dev/ppp */ if(ppp_dev_fd<0) fatal("Couldn't open /dev/ppp: %m"); flags=fcntl(ppp_dev_fd, F_GETFL); if(flags==-1 ||fcntl(ppp_dev_fd, F_SETFL,flags| O_NONBLOCK)==-1) /* switch to non-blocking mode */ warn("Couldn't set /dev/ppp to nonblock: %m");

ifunit=req_unit; /* pass in the requested unit number, configurable through /etc/ppp/options */ x=ioctl(ppp_dev_fd, PPPIOCNEWUNIT,&ifunit); /* request a new unit */ if(x<0&&req_unit>=0&& errno == EEXIST){ warn("Couldn't allocate PPP unit %d as it is already in use",req_unit); ifunit=-1; x=ioctl(ppp_dev_fd, PPPIOCNEWUNIT,&ifunit); } if(x<0) error("Couldn't create new ppp unit: %m"); return x; } |

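A unit here can be thought of as a PPP interface: the ppp0 shown by ifconfig on Linux is created by ioctl(ppp_dev_fd, PPPIOCNEWUNIT, &ifunit). The unit number is configurable but usually left alone; passing -1 makes the kernel allocate an unused unit number, starting from 0. This ioctl is handled by ppp_ioctl, which PPPK registers: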

| linux-2.6.18/drivers/net/ppp_generic.c main() -> open_ppp_loopback() -> make_ppp_unit() -> ioctl(ppp_dev_fd,PPPIOCNEWUNIT,&ifunit) -> ppp_ioctl(): |

| static int ppp_ioctl(struct inode *inode,struct file *file, unsigned int cmd,unsigned long arg) { struct ppp_file *pf=file->private_data; …… if(pf==0) return ppp_unattached_ioctl(pf,file,cmd,arg); |

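TIPS: the relationship between a channel and a unit in PPPK deserves a note. A channel corresponds to a physical link, and a unit corresponds to an interface. In Multilink PPP one unit can be made up of several channels, that is, one PPP interface can sit on top of several physical links. A physical link here need not be a separate physical port; it can also be one of several bands (channels) on a single port, such as an HDLC channel.

In PPPK a channel is represented by struct channel and a unit by struct ppp.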

| linux-2.6.18/drivers/net/ppp_generic.c |

| /* * Data structure describing one ppp unit. * A ppp unit corresponds to a ppp network interface device * and represents a multilink bundle. * It can have 0 or more ppp channels connected to it. */ struct ppp{ struct ppp_file file; /* stuff for read/write/poll 0 */ struct file *owner; /* file that owns this unit 48 */ struct list_head channels; /* list of attached channels 4c */ int n_channels; /* how many channels are attached 54 */ spinlock_t rlock; /* lock for receive side 58 */ spinlock_t wlock; /* lock for transmit side 5c */ int mru; /* max receive unit 60 */ unsigned int flags; /* control bits 64 */ unsigned int xstate; /* transmit state bits 68 */ unsigned int rstate; /* receive state bits 6c */ int debug; /* debug flags 70 */ struct slcompress *vj; /* state for VJ header compression */ enum NPmode npmode[NUM_NP]; /* what to do with each net proto 78 */ struct sk_buff *xmit_pending; /* a packet ready to go out 88 */ struct compressor *xcomp; /* transmit packet compressor 8c */ void *xc_state; /* its internal state 90 */ struct compressor *rcomp; /* receive decompressor 94 */ void *rc_state; /* its internal state 98 */ unsigned long last_xmit; /* jiffies when last pkt sent 9c */ unsigned long last_recv; /* jiffies when last pkt rcvd a0 */ struct net_device *dev; /* network interface device a4 */ #ifdef CONFIG_PPP_MULTILINK int nxchan; /* next channel to send something on */ u32 nxseq; /* next sequence number to send */ int mrru; /* MP: max reconst. receive unit */ u32 nextseq; /* MP: seq no of next packet */ u32 minseq; /* MP: min of most recent seqnos */ struct sk_buff_head mrq; /* MP: receive reconstruction queue */ #endif /* CONFIG_PPP_MULTILINK */ struct net_device_stats stats; /* statistics */ #ifdef CONFIG_PPP_FILTER struct sock_filter *pass_filter; /* filter for packets to pass */ struct sock_filter *active_filter; /* filter for pkts to reset idle */ unsigned pass_len, active_len; #endif /* CONFIG_PPP_FILTER */ };

/* * Private data structure for each channel. * This includes the data structure used for multilink. */ struct channel{ struct ppp_file file; /* stuff for read/write/poll */ struct list_head list; /* link in all/new_channels list */ struct ppp_channel *chan; /* public channel data structure */ struct rw_semaphore chan_sem; /* protects `chan' during chan ioctl */ spinlock_t downl; /* protects `chan', file.xq dequeue */ struct ppp *ppp; /* ppp unit we're connected to */ struct list_head clist; /* link in list of channels per unit */ rwlock_t upl; /* protects `ppp' */ #ifdef CONFIG_PPP_MULTILINK u8 avail; /* flag used in multilink stuff */ u8 had_frag; /* >= 1 fragments have been sent */ u32 lastseq; /* MP: last sequence # received */ #endif /* CONFIG_PPP_MULTILINK */ }; struct ppp_file{ enum{ INTERFACE=1,CHANNEL } kind; /* whether this open /dev/ppp instance is attached to a unit (INTERFACE) or to a channel */ struct sk_buff_head xq; /* pppd transmit queue */ struct sk_buff_head rq; /* receive queue for pppd */ wait_queue_head_t rwait; /* for poll on reading /dev/ppp */ atomic_t refcnt; /* # refs (incl /dev/ppp attached) */ int hdrlen; /* space to leave for headers */ int index; /* interface unit / channel number */ int dead; /* unit/channel has been shut down */ }; |

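Note that the first field of both structures is a struct ppp_file, whose kind field records whether the open /dev/ppp instance is attached to a unit or to a channel.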
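Because file->private_data points at the embedded struct ppp_file, the driver can recover the enclosing object once it has checked the kind tag; the PF_TO_PPP macro that appears later in ppp_read does this kind of conversion. The following user-space sketch shows the pattern with hypothetical `demo_` types that only mimic the layout idea and are not the kernel definitions.

```c
#include <stddef.h>

enum demo_kind { DEMO_INTERFACE = 1, DEMO_CHANNEL };

struct demo_file {               /* plays the role of struct ppp_file */
    enum demo_kind kind;
    int index;
};

struct demo_unit {               /* plays the role of struct ppp */
    struct demo_file file;       /* embedded common header */
    int mru;
};

struct demo_chan {               /* plays the role of struct channel */
    struct demo_file file;       /* embedded common header */
    struct demo_unit *unit;
};

/* Recover the unit from its embedded file, or NULL if the tag does not match. */
static struct demo_unit *file_to_unit(struct demo_file *pf)
{
    if (pf == NULL || pf->kind != DEMO_INTERFACE)
        return NULL;
    return (struct demo_unit *)((char *)pf - offsetof(struct demo_unit, file));
}

/* Recover the channel from its embedded file, or NULL if the tag does not match. */
static struct demo_chan *file_to_chan(struct demo_file *pf)
{
    if (pf == NULL || pf->kind != DEMO_CHANNEL)
        return NULL;
    return (struct demo_chan *)((char *)pf - offsetof(struct demo_chan, file));
}
```

The tag check is what makes a single private_data pointer safe to share between two object types: a caller that expects a unit but is handed a channel gets NULL instead of a misinterpreted structure.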

Back to ppp_ioctl. It has to distinguish three cases: the file is not attached to anything, it is attached to a PPP unit, or it is attached to a PPP channel. At initialization nothing is attached, that is, file->private_data is NULL, so ppp_unattached_ioctl is called:

| linux-2.6.18/drivers/net/ppp_generic.c main() -> open_ppp_loopback() -> make_ppp_unit() -> ioctl(ppp_dev_fd,PPPIOCNEWUNIT,&ifunit) -> ppp_ioctl() -> ppp_unattached_ioctl(): |

| static int ppp_unattached_ioctl(struct ppp_file *pf,struct file *file, unsigned int cmd,unsigned long arg) { int unit,err=-EFAULT; struct ppp *ppp; struct channel *chan; int __user *p=(int __user *)arg;

switch(cmd){ case PPPIOCNEWUNIT: /* Create a new ppp unit */ if(get_user(unit, p)) break; ppp=ppp_create_interface(unit,&err); /* create the ppp network interface */ if(ppp==0) break; file->private_data=&ppp->file; /* note: the file is now attached to the PPP unit; the pointer refers to its struct ppp_file */ ppp->owner=file; err=-EFAULT; if(put_user(ppp->file.index,p)) break; err=0; break; |

This function in turn calls ppp_create_interface to create a ppp network interface:

| linux-2.6.18/drivers/net/ppp_generic.c main() -> open_ppp_loopback() -> make_ppp_unit() -> ioctl(ppp_dev_fd,PPPIOCNEWUNIT,&ifunit) -> ppp_ioctl() -> ppp_unattached_ioctl() -> ppp_create_interface(): |

| /* * Create a new ppp interface unit. Fails if it can't allocate memory * or if there is already a unit with the requested number. * unit == -1 means allocate a new number. */ static struct ppp * ppp_create_interface(int unit,int *retp) { struct ppp *ppp; struct net_device *dev=NULL; int ret=-ENOMEM; int i;

ppp=kzalloc(sizeof(struct ppp), GFP_KERNEL); /* allocate a struct ppp, i.e. create a new unit */ if(!ppp) goto out; dev=alloc_netdev(0,"",ppp_setup); /* allocate a net_device, which represents a network interface */ if(!dev) goto out1;

ppp->mru=PPP_MRU; /* initialize the MRU (maximum receive unit) */ init_ppp_file(&ppp->file,INTERFACE); /* initialize the ppp_file with kind INTERFACE */ ppp->file.hdrlen=PPP_HDRLEN-2; /* don't count proto bytes */ for(i=0;i<NUM_NP;++i) ppp->npmode[i]=NPMODE_PASS; INIT_LIST_HEAD(&ppp->channels); /* list of channels in this PPP interface */ spin_lock_init(&ppp->rlock); /* lock for the receive side */ spin_lock_init(&ppp->wlock); /* lock for the transmit side */ #ifdef CONFIG_PPP_MULTILINK ppp->minseq=-1; skb_queue_head_init(&ppp->mrq); #endif /* CONFIG_PPP_MULTILINK */ ppp->dev=dev; /* point at the allocated net_device */ dev->priv=ppp; /* the interface's private data is the struct ppp */

dev->hard_start_xmit=ppp_start_xmit; /* packet transmit function of the ppp interface, called by the TCP/IP stack */ dev->get_stats=ppp_net_stats; /* transmit/receive packet statistics */ dev->do_ioctl=ppp_net_ioctl; /* used when ioctl() is called on the ppp network interface */

ret=-EEXIST; mutex_lock(&all_ppp_mutex); /* mutual exclusion; we come from process context, so a sleepable mutex is used */ if(unit<0) /* unit == -1 means allocate an unused unit number automatically */ unit=cardmap_find_first_free(all_ppp_units); else if(cardmap_get(all_ppp_units,unit)!=NULL) goto out2; /* unit already exists */

/* Initialize the new ppp unit */ ppp->file.index=unit; /* the unit number discussed above */ sprintf(dev->name,"ppp%d",unit); /* "ppp" plus the unit number gives the interface name, e.g. ppp0 */

ret=register_netdev(dev); /* register the ppp network interface; only now does it show up in ifconfig */ if(ret!=0){ printk(KERN_ERR "PPP: couldn't register device %s (%d)\n", dev->name, ret); goto out2; }

atomic_inc(&ppp_unit_count); ret=cardmap_set(&all_ppp_units,unit,ppp); if(ret!=0) goto out3;

mutex_unlock(&all_ppp_mutex); /* release the mutex */ *retp=0; return ppp;

…… } |

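OK, the PPP network interface now exists; suppose it is named ppp0. At this point ppp0 is only a "placeholder" interface: the PPP dial-up procedure has not even started. The interface is created solely so that packets can be sent through it, which is what triggers the PPP dial-up.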
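The unit-number rule used during interface creation (a negative request picks the lowest free number, an explicit request fails with -EEXIST if taken) can be sketched without the kernel's cardmap. The fixed-size table and all `demo_` names below are assumptions made for illustration; the kernel uses its own cardmap structure and locking.

```c
#include <errno.h>
#include <stddef.h>

#define DEMO_MAX_UNITS 8

static void *demo_units[DEMO_MAX_UNITS];   /* NULL means the slot is free */

/*
 * Claim a unit number for `owner`.
 * unit < 0 : pick the lowest free number.
 * unit >= 0: use exactly that number, or fail with -EEXIST if taken.
 * Returns the unit number, or a negative errno value.
 */
static int demo_alloc_unit(int unit, void *owner)
{
    int i;

    if (unit < 0) {
        for (i = 0; i < DEMO_MAX_UNITS; i++)
            if (demo_units[i] == NULL)
                break;
        if (i == DEMO_MAX_UNITS)
            return -ENOMEM;          /* table full (sketch-only limit) */
        unit = i;
    } else if (unit >= DEMO_MAX_UNITS) {
        return -EINVAL;              /* outside the sketch's table */
    } else if (demo_units[unit] != NULL) {
        return -EEXIST;              /* requested number already in use */
    }
    demo_units[unit] = owner;
    return unit;
}
```

This also explains the retry in make_ppp_unit: when an explicit request fails with EEXIST, pppd sets ifunit to -1 and asks again, falling back to automatic allocation.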

Back in PPPD's open_ppp_loopback: once make_ppp_unit returns successfully, modify_flags is called to set the SC_LOOP_TRAFFIC flag. This function, too, works through ioctl() -> ppp_ioctl().

| pppd/sys-linux.c main() -> open_ppp_loopback() -> modify_flags(): |

| static int modify_flags(int fd,int clear_bits,int set_bits) { int flags;

if(ioctl(fd, PPPIOCGFLAGS,&flags)==-1) goto err; flags=(flags&~clear_bits)|set_bits; if(ioctl(fd, PPPIOCSFLAGS,&flags)==-1) goto err;

return 0; |
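The heart of modify_flags is a read-modify-write of a bit mask. The sketch below isolates just the arithmetic; the flag values are hypothetical placeholders and are not the kernel's SC_* bit assignments.

```c
/* Hypothetical flag values for illustration only (not the kernel's SC_* bits). */
#define DEMO_FLAG_A    0x01u
#define DEMO_FLAG_B    0x02u
#define DEMO_FLAG_LOOP 0x04u

/* Clear clear_bits first, then set set_bits, as modify_flags does. */
static unsigned int apply_flags(unsigned int flags,
                                unsigned int clear_bits,
                                unsigned int set_bits)
{
    return (flags & ~clear_bits) | set_bits;
}
```

Because the clear is applied before the set, a bit named in both arguments ends up set. With a clear mask of 0, as in the open_ppp_loopback call, the operation only adds the loop flag and leaves all other bits untouched.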

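The SC_LOOP_TRAFFIC flag is essential: with it set, PPPK wakes the PPPD process to establish the real PPP connection as soon as data is sent through the ppp0 interface. Recall that when the ppp interface was created in the kernel, ppp_start_xmit was registered as its packet transmit function; when a network program sends data through ppp0, the TCP/IP stack eventually calls it. The call trace is ppp_start_xmit() -> ppp_xmit_process() -> ppp_send_frame():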

| linux-2.6.18/drivers/net/ppp_generic.c |

| static void ppp_send_frame(struct ppp *ppp,struct sk_buff *skb) { ……

/* * If we are waiting for traffic (demand dialling), * queue it up for pppd to receive. */ if(ppp->flags&SC_LOOP_TRAFFIC){ if(ppp->file.rq.qlen>PPP_MAX_RQLEN) goto drop; skb_queue_tail(&ppp->file.rq,skb); /* the outgoing packet goes on the receive queue rq, not on a transmit queue! */ wake_up_interruptible(&ppp->file.rwait); /* wake up the PPPD process */ return; }

…… } |

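Clearly, when SC_LOOP_TRAFFIC is set in ppp->flags, the packet gets special treatment: it is placed on the receive queue ppp->file.rq rather than the usual transmit queue. The reason becomes clear later, when ppp_read is analyzed. The function then wakes the PPPD process to deal with the packet; nothing is actually transmitted.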
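The diversion can be modelled with a few counters. This is a simplified sketch under stated assumptions: `demo_if`, `DEMO_LOOP_TRAFFIC`, and `DEMO_MAX_RQLEN` are invented names, packets are only counted rather than stored, and the length check copies the `qlen > max` comparison from the excerpt (so the queue can hold one more entry than the limit).

```c
#define DEMO_LOOP_TRAFFIC 0x1u
#define DEMO_MAX_RQLEN    4

struct demo_if {
    unsigned int flags;
    int rq_len;      /* packets queued for the daemon to read */
    int sent;        /* packets handed to the real link */
    int dropped;     /* packets discarded because the queue was full */
    int wakeups;     /* times the daemon was woken */
};

/* Model of the transmit path: loop traffic back to the daemon when the flag is set. */
static void demo_send_frame(struct demo_if *ifp)
{
    if (ifp->flags & DEMO_LOOP_TRAFFIC) {
        if (ifp->rq_len > DEMO_MAX_RQLEN) {
            ifp->dropped++;
            return;
        }
        ifp->rq_len++;       /* queue on the receive side */
        ifp->wakeups++;      /* wake the reader */
        return;
    }
    ifp->sent++;             /* normal transmit path */
}
```

With the flag set, seven sends in a row leave five packets queued and two dropped, and nothing reaches the link; once the flag is cleared, frames go out normally.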

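Back in main(): when open_ppp_loopback returns, its return value, the file descriptor for /dev/ppp, is assigned to fd_loop. At this point the network interface ppp0 has been created and registered with the TCP/IP stack. Having the ppp0 interface is not enough, though; it still needs to be configured, which is done by calling demand_conf: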

| pppd/demand.c main() -> demand_conf(): |

| void demand_conf() { int i; struct protent *protp;

…… netif_set_mtu(0,MIN(lcp_allowoptions[0].mru,PPP_MRU)); /* set the MTU of the ppp0 interface */ if(ppp_send_config(0,PPP_MRU,(u_int32_t)0,0,0)<0 ||ppp_recv_config(0,PPP_MRU,(u_int32_t)0,0,0)<0) fatal("Couldn't set up demand-dialled PPP interface: %m");

…… /* * Call the demand_conf procedure for each protocol that's got one. */ for(i=0;(protp=protocols[i])!= NULL;++i) if(protp->enabled_flag&&protp->demand_conf!= NULL) if(!((*protp->demand_conf)(0))) /* call each control protocol's demand_conf function */ die(1); } |

This function sets the MTU and MRU of ppp0 and then calls the demand_conf function of each control protocol. For LCP, PAP, and CHAP, protp->demand_conf is NULL; only IPCP sets this function pointer:

| pppd/ipcp.c main() -> demand_conf() -> ip_demand_conf(): |

| static int ip_demand_conf(u) int u; { ipcp_options *wo=&ipcp_wantoptions[u];

if(wo->hisaddr==0){ /* make up an arbitrary address for the peer */ wo->hisaddr=htonl(0x0a707070+ifunit); /* peer address */ wo->accept_remote=1; } if(wo->ouraddr==0){ /* make up an arbitrary address for us */ wo->ouraddr=htonl(0x0a404040+ifunit); /* local address */ wo->accept_local=1; ask_for_local=0; /* don't tell the peer this address */ } if(!sifaddr(u,wo->ouraddr,wo->hisaddr,GetMask(wo->ouraddr))) /* configure the local address, peer address, and netmask on ppp0 */ return 0; if(!sifup(u)) /* bring ppp0 UP as a point-to-point interface */ return 0; if(!sifnpmode(u,PPP_IP,NPMODE_QUEUE)) return 0; if(wo->default_route) if(sifdefaultroute(u,wo->ouraddr,wo->hisaddr)) /* make ppp0 the default-route interface */ default_route_set[u]=1; if(wo->proxy_arp) if(sifproxyarp(u,wo->hisaddr)) proxy_arp_set[u]=1;

notice("local IP address %I",wo->ouraddr); notice("remote IP address %I",wo->hisaddr);

return 1; } |

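As mentioned above, in dial-on-demand mode a packet has to be sent through the ppp0 interface to trigger the PPP connection. So ip_demand_conf, provided by the IPCP module, gives ppp0 two placeholder IP addresses when none are configured: for unit 0 the local address is 10.64.64.64 (0x0a404040 + ifunit) and the peer address is 10.112.112.112 (0x0a707070 + ifunit). If the default_route option is set, the peer address is also installed as the default gateway. When the user then accesses an external network, the Linux routing subsystem selects ppp0 to send the packet, which triggers the establishment of the PPP link.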
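The placeholder addresses follow directly from the two hexadecimal constants in the excerpt. The helper names below (`demo_local_addr`, `demo_peer_addr`, `demo_fmt_ip`) are hypothetical; the sketch works on host-order values and formats them as dotted decimal, so it does not depend on htonl.

```c
#include <stdint.h>
#include <stdio.h>

/* Placeholder local address for a given unit number (host byte order). */
static uint32_t demo_local_addr(int ifunit)
{
    return 0x0a404040u + (uint32_t)ifunit;
}

/* Placeholder peer address for a given unit number (host byte order). */
static uint32_t demo_peer_addr(int ifunit)
{
    return 0x0a707070u + (uint32_t)ifunit;
}

/* Format a host-order IPv4 address as dotted decimal into buf. */
static const char *demo_fmt_ip(uint32_t host_addr, char *buf, size_t len)
{
    snprintf(buf, len, "%u.%u.%u.%u",
             (unsigned)((host_addr >> 24) & 0xff),
             (unsigned)((host_addr >> 16) & 0xff),
             (unsigned)((host_addr >> 8) & 0xff),
             (unsigned)(host_addr & 0xff));
    return buf;
}
```

For unit 0 this yields 10.64.64.64 and 10.112.112.112; for unit 1 the last octet of each address increases by one, so several demand-dial interfaces get distinct placeholder pairs.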

Stage 2:

Back in main(), next comes the outermost for(;;) loop, which handles events:

| pppd/main.c -> main(): |

| (Stage 1) …… do_callback=0; for(;;){ /* outermost for(;;) loop */

…… doing_callback=do_callback; do_callback=0;

if(demand&&!doing_callback){ /* dial-on-demand */ /* * Don't do anything until we see some activity. */ new_phase(PHASE_DORMANT); /* pppd state machine */ demand_unblock(); add_fd(fd_loop); /* add fd_loop, the /dev/ppp file descriptor, to the fd set used by select */ for(;;){ /* nested for(;;) loop */ handle_events(); /* select-based event handling */ if(asked_to_quit) break; if(get_loop_output()) /* leave the loop once a valid outgoing packet is seen */ break; } remove_fd(fd_loop); /* note: the /dev/ppp descriptor must be removed from the fd set; it is added again later */ if(asked_to_quit) break;

/* * Now we want to bring up the link. */ demand_block(); info("Starting link"); }

(Stage 3) ……

} |

In demand mode the PPPD state machine enters PHASE_DORMANT, which involves two main steps:

1. Call add_fd to add fd_loop, the /dev/ppp file descriptor, to in_fds:

| pppd/sys-linux.c: main() -> add_fd(): |

| /* * add_fd - add an fd to the set that wait_input waits for. */ void add_fd(int fd) { if(fd>= FD_SETSIZE) fatal("internal error: file descriptor too large (%d)",fd); FD_SET(fd,&in_fds); if(fd>max_in_fd) max_in_fd=fd; } |

2. Call handle_events in the nested for(;;) loop to process events.

| pppd/main.c: main() -> handle_events(): |

| /* * handle_events - wait for something to happen and respond to it. */ static void handle_events() { struct timeval timo;

kill_link=open_ccp_flag=0; if(sigsetjmp(sigjmp,1)==0){ sigprocmask(SIG_BLOCK,&signals_handled, NULL); if(got_sighup||got_sigterm||got_sigusr2||got_sigchld){ sigprocmask(SIG_UNBLOCK,&signals_handled, NULL); }else{ waiting=1; sigprocmask(SIG_UNBLOCK,&signals_handled, NULL); wait_input(timeleft(&timo)); /* call select for I/O multiplexing */ } } waiting=0; calltimeout(); /* run the registered timer functions */ /* everything below is signal handling */ if(got_sighup){ info("Hangup (SIGHUP)"); kill_link=1; got_sighup=0; if(status!=EXIT_HANGUP) status=EXIT_USER_REQUEST; } if(got_sigterm){ /* exit when SIGTERM is received */ info("Terminating on signal %d",got_sigterm); kill_link=1; asked_to_quit=1; /* note this flag */ persist=0; status=EXIT_USER_REQUEST; got_sigterm=0; } if(got_sigchld){ got_sigchld=0; reap_kids(); /* Don't leave dead kids lying around */ } if(got_sigusr2){ open_ccp_flag=1; got_sigusr2=0; } } |

The key point of this function is that it calls wait_input, which uses select to wait for events on the /dev/ppp file descriptor added earlier.

| pppd/sys-linux.c: main() -> handle_events() -> wait_input(): |

| void wait_input(struct timeval *timo) { fd_set ready,exc; int n;

ready=in_fds; /* in_fds contains the /dev/ppp file descriptor */ exc=in_fds; n=select(max_in_fd+1,&ready, NULL,&exc,timo); /* the main event: call select to wait for activity */ if(n<0&& errno != EINTR) fatal("select: %m"); } |

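Recall that make_ppp_unit put /dev/ppp into non-blocking mode. The select call itself is bounded by the timeout computed by timeleft(): if no event arrives before the next timer is due, wait_input returns and calltimeout runs the registered timer functions, which the control protocols and their FSMs depend on. Non-blocking mode matters for the read that follows: when nothing is queued, read() on /dev/ppp returns immediately instead of putting PPPD to sleep, so the timers keep being serviced.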
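The add_fd / wait_input pair can be reproduced in a few lines of user-space C. This is a minimal sketch with hypothetical `demo_` names; unlike pppd it takes the timeout in milliseconds and does not watch the exception set.

```c
#include <stddef.h>
#include <sys/select.h>
#include <sys/time.h>

static fd_set demo_in_fds;      /* descriptors to watch for input */
static int demo_max_fd = -1;    /* highest descriptor registered so far */

/* Reset the watch set. */
static void demo_init_fds(void)
{
    FD_ZERO(&demo_in_fds);
    demo_max_fd = -1;
}

/* Register a descriptor, tracking the maximum for select()'s first argument. */
static void demo_add_fd(int fd)
{
    FD_SET(fd, &demo_in_fds);
    if (fd > demo_max_fd)
        demo_max_fd = fd;
}

/*
 * Wait up to timeout_ms for input on any registered descriptor.
 * Returns select()'s result: the number of ready descriptors,
 * 0 on timeout, or -1 on error.
 */
static int demo_wait_input(int timeout_ms)
{
    fd_set ready = demo_in_fds;   /* select() modifies its argument, so copy */
    struct timeval tv;

    tv.tv_sec = timeout_ms / 1000;
    tv.tv_usec = (timeout_ms % 1000) * 1000;
    return select(demo_max_fd + 1, &ready, NULL, NULL, &tv);
}
```

With an empty pipe registered, `demo_wait_input(50)` returns 0 after the timeout; once a byte is written to the pipe it returns 1. The timeout, not the descriptor's blocking mode, is what bounds the wait.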

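When does this nested for(;;) loop exit? There are two possibilities:

1. The variable asked_to_quit is set to 1. As the signal handling in handle_events shows, this happens on SIGTERM, meaning the user wants PPPD to quit.

2. get_loop_output returns 1. Let us look at this function: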

| pppd/sys-linux.c: main() -> get_loop_output(): |

| /******************************************************************** * * get_loop_output - get outgoing packets from the ppp device, * and detect when we want to bring the real link up. * Return value is 1 if we need to bring up the link, 0 otherwise. */ int get_loop_output(void) { int rv=0; int n;

if(new_style_driver){ while((n=read_packet(inpacket_buf))>0) /* data is being sent through ppp0 */ if(loop_frame(inpacket_buf,n)) /* true when the outgoing data is valid */ rv=1; /* return 1, which ends the nested for(;;) loop */ return rv; }

…… } |

First, read_packet is called to read data into inpacket_buf:

| pppd/sys-linux.c: main() -> get_loop_output() -> read_packet(): |

| int read_packet(unsigned char *buf) { int len,nr;

len=PPP_MRU+PPP_HDRLEN; if(new_style_driver){ *buf++=PPP_ALLSTATIONS; *buf++=PPP_UI; len-=2; } nr=-1;

if(ppp_fd>=0){ nr=read(ppp_fd,buf,len); /* read from /dev/ppp */ if(nr<0&& errno != EWOULDBLOCK&& errno!= EAGAIN && errno!= EIO&& errno!= EINTR) error("read: %m"); if(nr<0&& errno == ENXIO) return 0; } if(nr<0&&new_style_driver&&ppp_dev_fd>=0&&!bundle_eof){ /* N.B. we read ppp_fd first since LCP packets come in there. */ nr=read(ppp_dev_fd,buf,len); if(nr<0&& errno != EWOULDBLOCK&& errno!= EAGAIN && errno!= EIO&& errno!= EINTR) error("read /dev/ppp: %m"); if(nr<0&& errno == ENXIO) nr=0; if(nr==0&&doing_multilink){ remove_fd(ppp_dev_fd); bundle_eof=1; } } if(new_style_driver&&ppp_fd<0&&ppp_dev_fd<0) nr=0; return (new_style_driver&&nr>0)?nr+2:nr; } |

This function is simple: it calls the standard read() on the /dev/ppp device file, which ends up in ppp_read in PPPK:

| linux-2.6.18/drivers/net/ppp_generic.c main() -> get_loop_output() -> read_packet() -> ppp_read(): |

| static ssize_t ppp_read(struct file *file,char __user *buf, size_t count, loff_t *ppos) { struct ppp_file *pf=file->private_data; DECLARE_WAITQUEUE(wait, current); ssize_t ret; struct sk_buff *skb=NULL;

ret=count;

if(pf==0) return -ENXIO; add_wait_queue(&pf->rwait,&wait); /* note: join the wait queue; ppp_send_frame() will wake us */ for(;;){ set_current_state(TASK_INTERRUPTIBLE); /* mark the current process as interruptibly sleeping */ skb=skb_dequeue(&pf->rq); /* dequeue one packet from the receive queue */ if(skb) /* a packet means there is data to read */ break; ret=0; if(pf->dead) /* the unit or channel no longer exists; not discussed here */ break; if(pf->kind==INTERFACE){ /* * Return 0 (EOF) on an interface that has no * channels connected, unless it is looping * network traffic (demand mode). */ struct ppp *ppp=PF_TO_PPP(pf); if(ppp->n_channels==0 &&(ppp->flags&SC_LOOP_TRAFFIC)==0) break; } ret=-EAGAIN; if(file->f_flags& O_NONBLOCK) /* if the fd is O_NONBLOCK, do not sleep; leave the loop */ break; ret=-ERESTARTSYS; if(signal_pending(current)) /* a pending signal also ends the wait */ break; schedule(); /* call the scheduler; the current process sleeps */ } set_current_state(TASK_RUNNING); remove_wait_queue(&pf->rwait,&wait);

if(skb==0) /* no packet: the fd is O_NONBLOCK, a signal arrived, or the unit is dead or has no channels */ goto out;

ret=-EOVERFLOW; if(skb->len>count) goto outf; ret=-EFAULT; if(copy_to_user(buf,skb->data,skb->len)) /* copy the data to the user buffer */ goto outf; ret=skb->len; /* the return value is the data length */

outf: kfree_skb(skb); out: return ret; } |

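This function puts the PPPD process on the wait queue; if the pf->rq queue is not empty, it dequeues the first packet and returns immediately. Note that, as described above, when a network program sends data through ppp0 the kernel function ppp_send_frame is eventually called, and it places the outgoing data on ppp->file.rq. That is the same queue as pf->rq here, so the data returned by ppp_read is exactly what the network program just sent.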

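Conversely, if pf->rq is empty, no packet is waiting to be sent through ppp0 and the function returns -EAGAIN right away, because the descriptor is non-blocking. The user-space read_packet then returns a negative value, get_loop_output returns 0, and the nested for(;;) loop keeps waiting for events instead of exiting.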
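The user-space side of this behaviour can be tried on any descriptor. The sketch below uses hypothetical helpers (`demo_set_nonblock`, `demo_try_read`, and the `DEMO_AGAIN` sentinel are not pppd names) and treats EAGAIN, EWOULDBLOCK, and EINTR as "nothing to read yet", in the same spirit as the errno checks in read_packet.

```c
#include <errno.h>
#include <fcntl.h>
#include <unistd.h>

#define DEMO_AGAIN (-2)   /* sketch-only sentinel: no data pending right now */

/* Put fd into non-blocking mode; returns 0 on success, -1 on error. */
static int demo_set_nonblock(int fd)
{
    int flags = fcntl(fd, F_GETFL);

    if (flags == -1)
        return -1;
    return fcntl(fd, F_SETFL, flags | O_NONBLOCK) == -1 ? -1 : 0;
}

/*
 * Try to read one chunk. Returns the byte count (> 0), 0 on end of file,
 * DEMO_AGAIN when nothing is pending, or -1 on a real error (see errno).
 */
static int demo_try_read(int fd, unsigned char *buf, int len)
{
    ssize_t nr = read(fd, buf, (size_t)len);

    if (nr < 0 && (errno == EAGAIN || errno == EWOULDBLOCK || errno == EINTR))
        return DEMO_AGAIN;
    return (int)nr;
}
```

On an empty non-blocking pipe, `demo_try_read` returns `DEMO_AGAIN` immediately instead of sleeping, which is the property that lets a single-threaded event loop go back to servicing its timers.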

Now suppose data is sent through ppp0. read_packet returns the length of the data read, and loop_frame is called:

| pppd/demand.c main() -> get_loop_output() -> loop_frame(): |

| int loop_frame(frame,len) unsigned char *frame; int len; { struct packet *pkt;

/* dbglog("from loop: %P", frame, len); */ if(len<PPP_HDRLEN) return 0; if((PPP_PROTOCOL(frame)&0x8000)!=0) return 0; /* shouldn't get any of these anyway */ if(!active_packet(frame,len)) /* check whether the outgoing data is valid */ return 0;

pkt=(struct packet *)malloc(sizeof(struct packet)+len); if(pkt!= NULL){ pkt->length=len; pkt->next= NULL; memcpy(pkt->data,frame,len); if(pend_q== NULL) pend_q=pkt; else pend_qtail->next=pkt; pend_qtail=pkt; } return 1; } |

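This ends up calling the IPCP module's ip_active_pkt to validate the packet, which is not analyzed in detail here. If the outgoing data is a valid IP packet, it is saved on the pend_q queue (pend_qtail is its tail pointer) so that it can be resent once the PPP link is up.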
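The pending queue is a plain singly linked FIFO with head and tail pointers. The sketch below reproduces the enqueue logic of loop_frame with hypothetical `demo_` names and adds a dequeue helper that is an assumption for illustration; how pppd actually drains the queue is not shown in this excerpt.

```c
#include <stdlib.h>
#include <string.h>

struct demo_packet {
    int length;
    struct demo_packet *next;
    unsigned char data[];          /* `length` bytes follow the header */
};

static struct demo_packet *demo_pend_q;       /* head: oldest packet */
static struct demo_packet *demo_pend_qtail;   /* tail: newest packet */

/* Append a copy of frame; returns 1 on success, 0 on allocation failure. */
static int demo_enqueue(const unsigned char *frame, int len)
{
    struct demo_packet *pkt = malloc(sizeof(*pkt) + (size_t)len);

    if (pkt == NULL)
        return 0;
    pkt->length = len;
    pkt->next = NULL;
    memcpy(pkt->data, frame, (size_t)len);
    if (demo_pend_q == NULL)
        demo_pend_q = pkt;             /* first element becomes the head */
    else
        demo_pend_qtail->next = pkt;   /* otherwise link after the old tail */
    demo_pend_qtail = pkt;
    return 1;
}

/* Remove and return the oldest packet, or NULL if empty; the caller frees it. */
static struct demo_packet *demo_dequeue(void)
{
    struct demo_packet *pkt = demo_pend_q;

    if (pkt != NULL) {
        demo_pend_q = pkt->next;
        if (demo_pend_q == NULL)
            demo_pend_qtail = NULL;
        pkt->next = NULL;
    }
    return pkt;
}
```

Keeping a tail pointer makes each append constant time, and packets come back out in the order the applications sent them, which matters when the first packets of a connection are replayed after the link comes up.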

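Stage 3:

In demand mode, assuming data has been sent through ppp0 and is a valid IP packet, the nested for(;;) loop of stage 2 exits. From here on the code is the same as in normal dial-up mode; the two paths converge.

Back in main() once more, we now start establishing the real PPP link: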

| pppd/main.c -> main(): |

| …… do_callback=0; for(;;){ /* outermost for(;;) loop */

……

lcp_open(0); /* Start protocol */ /* step 1: open the PPPK interface and send LCP frames */ while(phase!=PHASE_DEAD){ /* step 2: the pppd state machine loops handling events */ handle_events(); /* select-based event handling */ get_input(); /* process received packets */ if(kill_link) lcp_close(0,"User request"); if(asked_to_quit){ bundle_terminating=1; if(phase==PHASE_MASTER) mp_bundle_terminated(); } …… }

…… } |

Step 1: call lcp_open(0) to establish the LCP link.

| pppd/lcp.c main() -> lcp_open(): |

| /* * lcp_open - LCP is allowed to come up. */ void lcp_open(unit) int unit; { fsm *f=&lcp_fsm[unit]; /* LCP state machine */ lcp_options *wo=&lcp_wantoptions[unit];

f->flags&=~(OPT_PASSIVE|OPT_SILENT); if(wo->passive) f->flags|=OPT_PASSIVE; if(wo->silent) f->flags|=OPT_SILENT; fsm_open(f); } |

fsm_open is called to open the LCP state machine:

| pppd/fsm.c main() -> lcp_open() -> fsm_open(): |

| void fsm_open(f) fsm *f; { switch(f->state){ case INITIAL: f->state=STARTING; if(f->callbacks->starting) (*f->callbacks->starting)(f); /* in the initial state, start bringing up the link */ break;

…… } } |
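The INITIAL case shown above is a state transition followed by an optional callback. The reduced sketch below models only that one transition; the `demo_` types are hypothetical, and the real fsm structure and callback table in pppd contain many more states and hooks that are elided in the excerpt.

```c
#include <stddef.h>

enum demo_state { DEMO_INITIAL, DEMO_STARTING };

struct demo_fsm;

struct demo_callbacks {
    void (*starting)(struct demo_fsm *f);   /* invoked when the lower layer is needed */
};

struct demo_fsm {
    enum demo_state state;
    const struct demo_callbacks *callbacks;
    int starting_calls;                     /* for observation in this sketch only */
};

static void demo_starting(struct demo_fsm *f)
{
    f->starting_calls++;
}

static const struct demo_callbacks demo_cbs = { demo_starting };

/* Open event: INITIAL moves to STARTING and fires the starting callback. */
static void demo_fsm_open(struct demo_fsm *f)
{
    switch (f->state) {
    case DEMO_INITIAL:
        f->state = DEMO_STARTING;
        if (f->callbacks->starting)
            (*f->callbacks->starting)(f);
        break;
    default:
        break;    /* other states are not modelled here */
    }
}
```

Routing the side effect through a callback table keeps the state machine generic: the same open logic can serve several control protocols, each supplying its own `starting` hook.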

In the INITIAL state this actually calls lcp_starting() -> link_required():

| pppd/auth.c main() -> lcp_open() -> fsm_open() -> lcp_starting() -> link_required(): |

| void link_required(unit) int unit; { new_phase(PHASE_SERIALCONN); /* the pppd state machine enters the "serial connection" phase */

devfd=the_channel->connect(); /* 1. calls connect_tty to connect to the TTY driver */ if(devfd<0) goto fail;

/* set up the serial device as a ppp interface */ /* * N.B. we used to do tdb_writelock/tdb_writeunlock around this * (from establish_ppp to set_ifunit). However, we won't be * doing the set_ifunit in multilink mode, which is the only time * we need the atomicity that the tdb_writelock/tdb_writeunlock * gives us. Thus we don't need the tdb_writelock/tdb_writeunlock. */ fd_ppp=the_channel->establish_ppp(devfd); /* 2. calls tty_establish_ppp */ if(fd_ppp<0){ status=EXIT_FATAL_ERROR; goto disconnect; }

if(!demand&&ifunit>=0) /* outside demand mode the IFNAME environment variable still has to be set */ set_ifunit(1);

/* * Start opening the connection and wait for * incoming events (reply, timeout, etc.). */ if(ifunit>=0) notice("Connect: %s <--> %s",ifname,ppp_devnam); else notice("Starting negotiation on %s",ppp_devnam); add_fd(fd_ppp); /* add the /dev/ppp descriptor to the fd set; in demand mode main() removed it, so it is added again, otherwise this is the first time */

status=EXIT_NEGOTIATION_FAILED; new_phase(PHASE_ESTABLISH); /* the pppd state machine enters the "link establishment" phase */

lcp_lowerup(0); /* 3. send an LCP Configure-Request asking the peer to establish the LCP link */ return;

disconnect: new_phase(PHASE_DISCONNECT); if(the_channel->disconnect) the_channel->disconnect();

fail: new_phase(PHASE_DEAD); if(the_channel->cleanup) (*the_channel->cleanup)();

} |

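As its name suggests, this function brings up the physical link that is required. The PPPD state machine is now in PHASE_SERIALCONN.

1. connect_tty is called to open the serial TTY driver and configure the TTY parameters. The variable ppp_devnam is the device file of the serial driver, for example /dev/ttyS0, /dev/ttyUSB0, or /dev/ttyHDLC0; see the relevant serial TTY driver for details, which are not analyzed here.

2. Next, tty_establish_ppp is called: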

| pppd/sys-linux.c main() -> lcp_open() -> fsm_open() -> lcp_starting() -> link_required() -> tty_establish_ppp(): |

| int tty_establish_ppp(int tty_fd) { int ret_fd;

…… /* * Set the current tty to the PPP discpline */

#ifndef N_SYNC_PPP #define N_SYNC_PPP 14 #endif ppp_disc=(new_style_driver&&sync_serial)?N_SYNC_PPP: N_PPP; /* synchronous or asynchronous PPP */ if(ioctl(tty_fd, TIOCSETD,&ppp_disc)<0){ /* 2.1 set the PPP line discipline */ if(!ok_error(errno)){ error("Couldn't set tty to PPP discipline: %m"); return -1; } }

ret_fd=generic_establish_ppp(tty_fd); /* 2.2 create the PPP interface */

#define SC_RCVB (SC_RCV_B7_0 | SC_RCV_B7_1 | SC_RCV_EVNP | SC_RCV_ODDP) #define SC_LOGB (SC_DEBUG | SC_LOG_INPKT | SC_LOG_OUTPKT | SC_LOG_RAWIN \ | SC_LOG_FLUSH)

if(ret_fd>=0){ modify_flags(ppp_fd,SC_RCVB|SC_LOGB, (kdebugflag* SC_DEBUG)&SC_LOGB); }else{ if(ioctl(tty_fd, TIOCSETD,&tty_disc)<0&&!ok_error(errno)) warn("Couldn't reset tty to normal line discipline: %m"); }

return ret_fd; } |

This is analyzed in two parts:

2.1 First, ioctl(tty_fd, TIOCSETD, &ppp_disc) binds the TTY driver to the PPP line discipline. This ioctl operates on the TTY file descriptor and ends up in the kernel's tty_ioctl() -> tiocsetd():

| linux-2.6.18/drivers/char/tty_io.c main() -> lcp_open() -> fsm_open() -> lcp_starting() -> link_required() -> tty_establish_ppp() -> tty_ioctl() -> tiocsetd(): |

| /** * tiocsetd - set line discipline * @tty: tty device * @p: pointer to user data * * Set the line discipline according to user request. * * Locking: see tty_set_ldisc, this function is just a helper */

static int tiocsetd(struct tty_struct *tty,int __user *p) { int ldisc;

if(get_user(ldisc,p)) return -EFAULT; return tty_set_ldisc(tty,ldisc); /* set the line discipline; in this article it is N_PPP, i.e. asynchronous PPP */ } |

tiocsetd is a wrapper: it copies the int argument passed from user space into the kernel variable ldisc and then calls tty_set_ldisc to set the line discipline:

| linux-2.6.18/drivers/char/tty_io.c main() -> lcp_open() -> fsm_open() -> lcp_starting() -> link_required() -> tty_establish_ppp() -> tty_ioctl() -> tiocsetd() -> tty_set_ldisc(): |

| static int tty_set_ldisc(struct tty_struct *tty,int ldisc) { int retval=0; struct tty_ldisc o_ldisc; char buf[64]; int work; unsigned long flags; struct tty_ldisc *ld; struct tty_struct *o_tty;

if((ldisc< N_TTY)||(ldisc>= NR_LDISCS)) return -EINVAL;

restart:

ld=tty_ldisc_get(ldisc); /* look up the registered N_PPP line discipline in the global tty_ldiscs array */ /* Eduardo Blanco <ejbs@cs.cs.com.uy> */ /* Cyrus Durgin <cider@speakeasy.org> */ if(ld==NULL){ request_module("tty-ldisc-%d",ldisc); ld=tty_ldisc_get(ldisc); } if(ld==NULL) return -EINVAL; (if a line discipline is already set on this TTY, the code omitted here detaches it; not analyzed in detail) …… /* Shutdown the current discipline. */ if(tty->ldisc.close) /* call the close() function of the current line discipline */ (tty->ldisc.close)(tty);

/* Now set up the new line discipline. */ tty_ldisc_assign(tty,ld); /* attach the N_PPP line discipline obtained above to this TTY */ tty_set_termios_ldisc(tty,ldisc); if(tty->ldisc.open) retval=(tty->ldisc.open)(tty); /* call the open() function of the N_PPP line discipline */ if(retval<0){ tty_ldisc_put(ldisc); /* There is an outstanding reference here so this is safe */ tty_ldisc_assign(tty,tty_ldisc_get(o_ldisc.num)); tty_set_termios_ldisc(tty,tty->ldisc.num); if(tty->ldisc.open&&(tty->ldisc.open(tty)<0)){ tty_ldisc_put(o_ldisc.num); /* This driver is always present */ tty_ldisc_assign(tty,tty_ldisc_get(N_TTY)); tty_set_termios_ldisc(tty, N_TTY); if(tty->ldisc.open){ int r=tty->ldisc.open(tty);

if(r<0) panic("Couldn't open N_TTY ldisc for " "%s --- error %d.", tty_name(tty,buf),r); } } } /* At this point we hold a reference to the new ldisc and a a reference to the old ldisc. If we ended up flipping back to the existing ldisc we have two references to it */

if(tty->ldisc.num!=o_ldisc.num&&tty->driver->set_ldisc) tty->driver->set_ldisc(tty); /* if the line discipline changed, call the TTY driver's set_ldisc function */

tty_ldisc_put(o_ldisc.num); /* drop the reference count on the old line discipline */

/* * Allow ldisc referencing to occur as soon as the driver * ldisc callback completes. */

tty_ldisc_enable(tty); /* activate the new TTY line discipline; in practice this sets TTY_LDISC */ if(o_tty) tty_ldisc_enable(o_tty);

/* Restart it in case no characters kick it off. Safe if already running */ if(work) schedule_delayed_work(&tty->buf.work,1); return retval; } |

This function binds the N_PPP line discipline to the TTY and then calls the discipline's open() function, which for N_PPP is ppp_asynctty_open:

| linux-2.6.18/drivers/net/ppp_async.c main() -> lcp_open() -> fsm_open() -> lcp_starting() -> link_required() -> tty_establish_ppp() -> tty_ioctl() -> tiocsetd() -> tty_set_ldisc() -> ppp_asynctty_open(): |

| /*
 * Called when a tty is put into PPP line discipline.  Called in process
 * context.
 */
static int
ppp_asynctty_open(struct tty_struct *tty)
{
    struct asyncppp *ap;
    int err;

    err = -ENOMEM;
    ap = kmalloc(sizeof(*ap), GFP_KERNEL);
    if (ap == 0)
        goto out;

    /* initialize the asyncppp structure */
    memset(ap, 0, sizeof(*ap));
    ap->tty = tty;
    ap->mru = PPP_MRU;
    spin_lock_init(&ap->xmit_lock);
    spin_lock_init(&ap->recv_lock);
    ap->xaccm[0] = ~0U;
    ap->xaccm[3] = 0x60000000U;
    ap->raccm = ~0U;
    ap->optr = ap->obuf;
    ap->olim = ap->obuf;
    ap->lcp_fcs = -1;

    skb_queue_head_init(&ap->rqueue);
    tasklet_init(&ap->tsk, ppp_async_process, (unsigned long) ap);  // tasklet used on the receive path

    atomic_set(&ap->refcnt, 1);
    init_MUTEX_LOCKED(&ap->dead_sem);

    ap->chan.private = ap;      // back-pointer from the channel to struct asyncppp
    ap->chan.ops = &async_ops;  // operations table of the async channel
    ap->chan.mtu = PPP_MRU;
    err = ppp_register_channel(&ap->chan);  // register the async channel
    if (err)
        goto out_free;

    tty->disc_data = ap;  // the tty structure can now reach the asyncppp
    tty->receive_room = 65536;
    return 0;

 out_free:
    kfree(ap);
 out:
    return err;
} |

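This function allocates and initializes a struct asyncppp to represent one asynchronous PPP link and points the tty structure's disc_data at it. It also calls ppp_register_channel to register an async PPP channel: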

| linux-2.6.18/drivers/net/ppp_generic.c main() -> lcp_open() -> fsm_open() -> lcp_starting() -> link_required() -> tty_establish_ppp() -> tty_ioctl() -> tiocsetd() -> tty_set_ldisc() -> ppp_asynctty_open() -> ppp_register_channel(): |

| /*
 * Create a new, unattached ppp channel.
 */
int
ppp_register_channel(struct ppp_channel *chan)
{
    struct channel *pch;

    pch = kzalloc(sizeof(struct channel), GFP_KERNEL);
    if (pch == 0)
        return -ENOMEM;
    pch->ppp = NULL;   // the channel does not belong to any PPP unit yet
    pch->chan = chan;  // pointer from channel to ppp_channel
    chan->ppp = pch;   // pointer from ppp_channel back to channel
    init_ppp_file(&pch->file, CHANNEL);  // initialize the ppp_file with kind CHANNEL
    pch->file.hdrlen = chan->hdrlen;
#ifdef CONFIG_PPP_MULTILINK
    pch->lastseq = -1;
#endif /* CONFIG_PPP_MULTILINK */
    init_rwsem(&pch->chan_sem);
    spin_lock_init(&pch->downl);
    rwlock_init(&pch->upl);
    spin_lock_bh(&all_channels_lock);
    pch->file.index = ++last_channel_index;  // channel index, used later
    list_add(&pch->list, &new_channels);     // add to the global new_channels list
    atomic_inc(&channel_count);
    spin_unlock_bh(&all_channels_lock);
    return 0;
} |

That completes the analysis of how ioctl(tty_fd, TIOCSETD, &ppp_disc) is implemented in the kernel.
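Seen from user space, the whole kernel path analyzed above is triggered by one ioctl. The following is a minimal sketch, assuming a Linux system; `set_ppp_ldisc` is a hypothetical helper name rather than a pppd function, and the fallback value 3 for `N_PPP` is only used if the system headers do not already define it.

```c
#include <sys/ioctl.h>

#ifndef N_PPP
#define N_PPP 3   /* PPP line discipline number on Linux */
#endif

/*
 * Hypothetical helper: switch the TTY behind tty_fd to the PPP line
 * discipline. Returns 0 on success, -1 on failure with errno set by
 * ioctl (for example EBADF for a bad descriptor).
 */
int set_ppp_ldisc(int tty_fd)
{
    int ppp_disc = N_PPP;

    /* In the kernel this reaches tiocsetd() -> tty_set_ldisc()
       -> ppp_asynctty_open(). */
    if (ioctl(tty_fd, TIOCSETD, &ppp_disc) < 0)
        return -1;
    return 0;
}
```

On a descriptor that is not a usable TTY the call simply fails, so the helper can be exercised without PPP hardware.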

2.2 Back in tty_establish_ppp, the next call is generic_establish_ppp, which creates the PPP interface:

| pppd/sys-linux.c main() -> lcp_open() -> fsm_open() -> lcp_starting() -> link_required() -> tty_establish_ppp() -> generic_establish_ppp(): |

| int generic_establish_ppp(int fd)
{
    int x;

    if (new_style_driver) {  // this branch is taken
        int flags;

        /* Open an instance of /dev/ppp and connect the channel to it */
        if (ioctl(fd, PPPIOCGCHAN, &chindex) == -1) {  // 1) get the channel number
            error("Couldn't get channel number: %m");
            goto err;
        }
        dbglog("using channel %d", chindex);
        fd = open("/dev/ppp", O_RDWR);  // open /dev/ppp
        if (fd < 0) {
            error("Couldn't reopen /dev/ppp: %m");
            goto err;
        }
        (void) fcntl(fd, F_SETFD, FD_CLOEXEC);
        if (ioctl(fd, PPPIOCATTCHAN, &chindex) < 0) {  // 2) bind the channel to this /dev/ppp fd
            error("Couldn't attach to channel %d: %m", chindex);
            goto err_close;
        }
        flags = fcntl(fd, F_GETFL);
        if (flags == -1 || fcntl(fd, F_SETFL, flags | O_NONBLOCK) == -1)  // make the fd non-blocking
            warn("Couldn't set /dev/ppp (channel) to nonblock: %m");
        set_ppp_fd(fd);  // save this fd in the variable ppp_fd

        if (!looped)
            ifunit = -1;
        if (!looped && !multilink) {  // recall that in demand mode open_ppp_loopback sets looped to 1
            /*
             * Create a new PPP unit.
             */
            if (make_ppp_unit() < 0)  // 3) demand mode has already called make_ppp_unit; this call serves normal dialing
                goto err_close;
        }

        if (looped)
            modify_flags(ppp_dev_fd, SC_LOOP_TRAFFIC, 0);  // in demand mode, clear SC_LOOP_TRAFFIC

        if (!multilink) {
            add_fd(ppp_dev_fd);  // add ppp_dev_fd to the fd set watched by select
            if (ioctl(fd, PPPIOCCONNECT, &ifunit) < 0) {  // 4) connect the channel to the unit
                error("Couldn't attach to PPP unit %d: %m", ifunit);
                goto err_close;
            }
        }

    } else {
        /* (old driver path omitted) */
        ……
    }

    ……
    looped = 0;

    return ppp_fd;
    ……
} |

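This function breaks down into four main parts:

1) Get the index of the channel that the TTY's line discipline has registered.

2) Bind that channel to a /dev/ppp file descriptor and save the descriptor in ppp_fd.

3) For normal dialing, call make_ppp_unit to create the ppp0 network interface and bind it; the resulting /dev/ppp file descriptor is kept in ppp_dev_fd.

4) Add ppp_dev_fd to the fd set watched by select, and connect the channel to the PPP unit.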
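The four parts can be condensed into one user-space routine. This is a sketch under assumptions, not pppd's code: `ppp_attach_sketch` is a hypothetical name, the ioctl numbers come from `<linux/ppp-ioctl.h>` (on 2.6-era systems the same constants live in `<linux/if_ppp.h>`), and step 3 assumes the unit is created with `PPPIOCNEWUNIT` on a second /dev/ppp descriptor, which is what make_ppp_unit does. Non-blocking setup, close-on-exec and the select bookkeeping are left out.

```c
#include <fcntl.h>
#include <sys/ioctl.h>
#include <unistd.h>
#include <linux/ppp-ioctl.h>

/*
 * Hypothetical helper mirroring the four parts of generic_establish_ppp().
 * tty_fd must already be in the PPP line discipline.
 * On success returns the new unit number and stores the channel and unit
 * descriptors in *chan_fd and *unit_fd; on failure returns -1 and leaves
 * both outputs untouched.
 */
int ppp_attach_sketch(int tty_fd, int *chan_fd, int *unit_fd)
{
    int chindex;
    int unit = -1;          /* -1 asks the kernel to pick a free unit */
    int cfd = -1, ufd = -1;

    /* 1) ask the line discipline for the index of its registered channel */
    if (ioctl(tty_fd, PPPIOCGCHAN, &chindex) < 0)
        return -1;

    /* 2) bind a fresh /dev/ppp descriptor to that channel */
    cfd = open("/dev/ppp", O_RDWR);
    if (cfd < 0)
        return -1;
    if (ioctl(cfd, PPPIOCATTCHAN, &chindex) < 0)
        goto fail;

    /* 3) create a unit (a pppN interface) on a second /dev/ppp descriptor */
    ufd = open("/dev/ppp", O_RDWR);
    if (ufd < 0)
        goto fail;
    if (ioctl(ufd, PPPIOCNEWUNIT, &unit) < 0)
        goto fail;

    /* 4) connect the channel to the unit */
    if (ioctl(cfd, PPPIOCCONNECT, &unit) < 0)
        goto fail;

    *chan_fd = cfd;         /* corresponds to pppd's ppp_fd */
    *unit_fd = ufd;         /* corresponds to pppd's ppp_dev_fd */
    return unit;

fail:
    if (ufd >= 0)
        close(ufd);
    close(cfd);
    return -1;
}
```

Keeping two descriptors is the point of the design: one stays bound to the channel, the other to the unit, and later code chooses between them per frame.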

Part 1: calling ioctl(fd, PPPIOCGCHAN, &chindex) on the TTY fd ends up in the kernel's tty_ioctl():

| linux-2.6.18/drivers/char/tty_io.c main() -> lcp_open() -> fsm_open() -> lcp_starting() -> link_required() -> tty_establish_ppp() -> generic_establish_ppp() -> tty_ioctl(): |

| int tty_ioctl(struct inode *inode, struct file *file,
              unsigned int cmd, unsigned long arg)
{
    struct tty_struct *tty, *real_tty;
    void __user *p = (void __user *)arg;
    int retval;
    struct tty_ldisc *ld;
    ……
    ld = tty_ldisc_ref_wait(tty);  // the TTY is already bound to the PPP line discipline, so this returns the async PPP ldisc
    retval = -EINVAL;
    if (ld->ioctl) {
        retval = ld->ioctl(tty, file, cmd, arg);  // actually calls ppp_asynctty_ioctl
        if (retval == -ENOIOCTLCMD)
            retval = -EINVAL;
    }
    tty_ldisc_deref(ld);
    return retval;
} |

The async PPP line discipline is initialized in the kernel file ppp_async.c, and the TTY was switched to it earlier, so ld->ioctl here points to ppp_asynctty_ioctl:

| linux-2.6.18/drivers/net/ppp_async.c main() -> lcp_open() -> fsm_open() -> lcp_starting() -> link_required() -> tty_establish_ppp() -> generic_establish_ppp() -> tty_ioctl() -> ppp_asynctty_ioctl(): |

| static int
ppp_asynctty_ioctl(struct tty_struct *tty, struct file *file,
                   unsigned int cmd, unsigned long arg)
{
    struct asyncppp *ap = ap_get(tty);
    int err, val;
    int __user *p = (int __user *)arg;

    if (ap == 0)
        return -ENXIO;
    err = -EFAULT;
    switch (cmd) {
    case PPPIOCGCHAN:
        err = -ENXIO;
        if (ap == 0)
            break;
        err = -EFAULT;
        if (put_user(ppp_channel_index(&ap->chan), p))  // copy the channel index out to chindex
            break;
        err = 0;
        break;
    ……
} |

ppp_channel_index is implemented as follows:

| linux-2.6.18/drivers/net/ppp_generic.c main() -> lcp_open() -> fsm_open() -> lcp_starting() -> link_required() -> tty_establish_ppp() -> generic_establish_ppp() -> tty_ioctl() -> ppp_asynctty_ioctl() -> ppp_channel_index(): |

| int ppp_channel_index(struct ppp_channel *chan)
{
    struct channel *pch = chan->ppp;

    if (pch != 0)
        return pch->file.index;  // the channel index assigned in ppp_register_channel()
    return -1;
} |

Part 2: calling ioctl(fd, PPPIOCATTCHAN, &chindex) on /dev/ppp goes through ppp_ioctl -> ppp_unattached_ioctl:

| linux-2.6.18/drivers/net/ppp_generic.c main() -> lcp_open() -> fsm_open() -> lcp_starting() -> link_required() -> tty_establish_ppp() -> generic_establish_ppp() -> ppp_ioctl() -> ppp_unattached_ioctl(): |

| static int ppp_unattached_ioctl(struct ppp_file *pf, struct file *file,
                                unsigned int cmd, unsigned long arg)
{
    int unit, err = -EFAULT;
    struct ppp *ppp;
    struct channel *chan;
    int __user *p = (int __user *)arg;
    ……
    case PPPIOCATTCHAN:
        if (get_user(unit, p))
            break;
        spin_lock_bh(&all_channels_lock);
        err = -ENXIO;
        chan = ppp_find_channel(unit);  // look up the registered channel by chindex
        if (chan != 0) {
            atomic_inc(&chan->file.refcnt);
            file->private_data = &chan->file;  // bind the file to the channel's ppp_file
            err = 0;
        }
        spin_unlock_bh(&all_channels_lock);
        break;

    default:
        err = -ENOTTY;
    }
    return err;
} |

When this ioctl returns, the /dev/ppp file descriptor is bound to the channel whose index is chindex. set_ppp_fd(fd) then saves it in the global variable ppp_fd.
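The index handed out at registration time and the lookup performed by PPPIOCATTCHAN form a simple register-then-find pair. Below is a simplified user-space model of that idea. All `toy_` names are hypothetical, there is no locking or reference counting, and the kernel's ppp_find_channel is not reproduced here.

```c
#include <stddef.h>

/* Toy stand-in for the kernel's struct channel. */
struct toy_channel {
    int index;                 /* plays the role of pch->file.index */
    struct toy_channel *next;  /* plays the role of pch->list */
};

static struct toy_channel *new_channels;  /* head of the registered list */
static int last_channel_index;            /* last index handed out */

/* Like ppp_register_channel(): assign the next index, link the channel. */
int toy_register_channel(struct toy_channel *ch)
{
    ch->index = ++last_channel_index;
    ch->next = new_channels;
    new_channels = ch;
    return ch->index;
}

/* Lookup by index, as PPPIOCATTCHAN needs; NULL means "no such channel". */
struct toy_channel *toy_find_channel(int index)
{
    struct toy_channel *ch;

    for (ch = new_channels; ch != NULL; ch = ch->next)
        if (ch->index == index)
            return ch;
    return NULL;  /* the kernel ioctl reports this case as -ENXIO */
}
```

Because the index is just an integer, it can travel from the TTY descriptor to a separate /dev/ppp descriptor through user space, which is exactly what chindex does in generic_establish_ppp.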

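Part 3: the code checks whether demand mode is in use. For normal dialing it calls make_ppp_unit to create the ppp0 interface; in demand mode make_ppp_unit was already called in phase two, so nothing more is done here. See the detailed analysis of make_ppp_unit in phase two.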

Note: the ppp_dev_fd file descriptor represents a unit, while the ppp_fd file descriptor represents a channel.

Part 4: calling ioctl(fd, PPPIOCCONNECT, &ifunit) on ppp_fd, which is bound to the channel, connects the channel to the unit:

| linux-2.6.18/drivers/net/ppp_generic.c main() -> lcp_open() -> fsm_open() -> lcp_starting() -> link_required() -> tty_establish_ppp() -> generic_establish_ppp() -> ppp_ioctl(): |

| static int ppp_ioctl(struct inode *inode, struct file *file,
                     unsigned int cmd, unsigned long arg)
{
    struct ppp_file *pf = file->private_data;
    struct ppp *ppp;
    ……
    if (pf->kind == CHANNEL) {
        struct channel *pch = PF_TO_CHANNEL(pf);
        struct ppp_channel *chan;

        switch (cmd) {
        case PPPIOCCONNECT:
            if (get_user(unit, p))
                break;
            err = ppp_connect_channel(pch, unit);  // connect the channel to the unit
            break;

        case PPPIOCDISCONN:
            err = ppp_disconnect_channel(pch);
            break;

        default:
            down_read(&pch->chan_sem);
            chan = pch->chan;
            err = -ENOTTY;
            if (chan && chan->ops->ioctl)
                err = chan->ops->ioctl(chan, cmd, arg);
            up_read(&pch->chan_sem);
        }
        return err;
    }
    ……
} |

ppp_connect_channel is implemented as follows:

| linux-2.6.18/drivers/net/ppp_generic.c main() -> lcp_open() -> fsm_open() -> lcp_starting() -> link_required() -> tty_establish_ppp() -> generic_establish_ppp() -> ppp_ioctl() -> ppp_connect_channel(): |

| /*
 * Connect a PPP channel to a PPP interface unit.
 */
static int
ppp_connect_channel(struct channel *pch, int unit)
{
    struct ppp *ppp;
    int ret = -ENXIO;
    int hdrlen;

    mutex_lock(&all_ppp_mutex);
    ppp = ppp_find_unit(unit);  // find the struct ppp for this unit number
    if (ppp == 0)
        goto out;
    write_lock_bh(&pch->upl);
    ret = -EINVAL;
    if (pch->ppp != 0)
        goto outl;

    ppp_lock(ppp);
    if (pch->file.hdrlen > ppp->file.hdrlen)
        ppp->file.hdrlen = pch->file.hdrlen;
    hdrlen = pch->file.hdrlen + 2;  /* for protocol bytes */
    if (ppp->dev && hdrlen > ppp->dev->hard_header_len)
        ppp->dev->hard_header_len = hdrlen;  // PPP frame header length
    list_add_tail(&pch->clist, &ppp->channels);  // add the channel to the unit's channel list
    ++ppp->n_channels;  // one more channel on this unit; note that a unit can hold several channels
    pch->ppp = ppp;     // recall that this pointer was NULL right after registration
    atomic_inc(&ppp->file.refcnt);
    ppp_unlock(ppp);
    ret = 0;

 outl:
    write_unlock_bh(&pch->upl);
 out:
    mutex_unlock(&all_ppp_mutex);
    return ret;
} |
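The bookkeeping in ppp_connect_channel is easier to see with the locking removed. Here is a toy user-space model of it, with hypothetical `toy_` types standing in for struct ppp and struct channel; it keeps only the error cases, the header-length update and the channel count.

```c
#include <errno.h>
#include <stddef.h>

struct toy_unit {
    int hdrlen;           /* plays the role of ppp->file.hdrlen */
    int hard_header_len;  /* plays the role of ppp->dev->hard_header_len */
    int n_channels;       /* number of channels connected to this unit */
};

struct toy_chan {
    int hdrlen;             /* plays the role of pch->file.hdrlen */
    struct toy_unit *unit;  /* plays the role of pch->ppp; NULL until connected */
};

/* Returns 0 on success, -ENXIO if there is no unit, -EINVAL if the
   channel is already connected. */
int toy_connect_channel(struct toy_chan *ch, struct toy_unit *unit)
{
    int hdrlen;

    if (unit == NULL)
        return -ENXIO;
    if (ch->unit != NULL)
        return -EINVAL;

    /* The unit must reserve as much headroom as its neediest channel. */
    if (ch->hdrlen > unit->hdrlen)
        unit->hdrlen = ch->hdrlen;
    hdrlen = ch->hdrlen + 2;  /* 2 bytes for the PPP protocol field */
    if (hdrlen > unit->hard_header_len)
        unit->hard_header_len = hdrlen;

    unit->n_channels++;
    ch->unit = unit;
    return 0;
}
```

Connecting a second channel to the same unit only raises `n_channels`; the header lengths grow only if the new channel needs more room than any earlier one.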

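3. The first two steps of link_required have set up the link interface, so now the real work starts: the PPPD state machine enters PHASE_ESTABLISH, and lcp_lowerup(0) sends LCP packets to establish the connection.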

The actual call chain is lcp_lowerup() -> fsm_lowerup() -> fsm_sconfreq() -> fsm_sdata() -> output():

| pppd/sys-linux.c main() -> lcp_open() -> fsm_open() -> lcp_starting() -> link_required() -> lcp_lowerup() -> fsm_lowerup() -> fsm_sconfreq() -> fsm_sdata() -> output(): |

| void output(int unit, unsigned char *p, int len)
{
    int fd = ppp_fd;
    int proto;

    dump_packet("sent", p, len);
    if (snoop_send_hook) snoop_send_hook(p, len);

    if (len < PPP_HDRLEN)
        return;
    if (new_style_driver) {  // this branch is taken
        p += 2;
        len -= 2;
        proto = (p[0] << 8) + p[1];
        if (ppp_dev_fd >= 0 && !(proto >= 0xc000 || proto == PPP_CCPFRAG))
            fd = ppp_dev_fd;  // note: frames below 0xc000 (except PPP_CCPFRAG) go out on ppp_dev_fd; LCP and other link-level control frames stay on ppp_fd
    }
    if (write(fd, p, len) < 0) {  // ends up in the kernel function ppp_write
        if (errno == EWOULDBLOCK || errno == EAGAIN || errno == ENOBUFS
            || errno == ENXIO || errno == EIO || errno == EINTR)
            warn("write: warning: %m (%d)", errno);
        else
            error("write: %m (%d)", errno);
    }
} |

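Sending falls into two cases:

1. Frames whose protocol number is 0xc000 or above (link-level control protocols such as LCP, PAP and CHAP), plus PPP_CCPFRAG, are sent on ppp_fd.

2. All other frames, data frames included, are sent on ppp_dev_fd once it is open.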
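The descriptor choice made in output() can be written as a small pure function. This is a sketch: `choose_tx_fd` is a hypothetical name, and the value of `PPP_CCPFRAG` is taken from the kernel's ppp_defs.h.

```c
#define PPP_CCPFRAG 0x80fb  /* CCP at link level, from ppp_defs.h */

/*
 * Mirror of the descriptor choice in output(): returns ppp_fd (the
 * channel) or ppp_dev_fd (the unit) for a frame with this protocol
 * number. ppp_dev_fd < 0 means no unit has been opened yet.
 */
int choose_tx_fd(unsigned int proto, int ppp_fd, int ppp_dev_fd)
{
    int link_level = (proto >= 0xc000 || proto == PPP_CCPFRAG);

    if (ppp_dev_fd >= 0 && !link_level)
        return ppp_dev_fd;
    return ppp_fd;
}
```

With ppp_fd = 5 and ppp_dev_fd = 6, LCP (0xc021) is written to 5 and IP (0x0021) to 6. IPCP (0x8021) is below 0xc000, so by this rule it also goes to the unit descriptor.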

Both ppp_fd and ppp_dev_fd were opened on the same device file, /dev/ppp, so either way the same kernel function, ppp_write, is called:

| linux-2.6.18/drivers/net/ppp_generic.c main() -> lcp_open() -> fsm_open() -> lcp_starting() -> link_required() -> lcp_lowerup() -> fsm_lowerup() -> fsm_sconfreq() -> fsm_sdata() -> output() -> ppp_write(): |

| static ssize_t ppp_write(struct file *file, const char __user *buf,
                         size_t count, loff_t *ppos)
{
    struct ppp_file *pf = file->private_data;
    struct sk_buff *skb;
    ssize_t ret;

    if (pf == 0)
        return -ENXIO;
    ret = -ENOMEM;
    skb = alloc_skb(count + pf->hdrlen, GFP_KERNEL);  // the kernel keeps network packets in sk_buff
    if (skb == 0)
        goto out;
    skb_reserve(skb, pf->hdrlen);
    ret = -EFAULT;
    if (copy_from_user(skb_put(skb, count), buf, count)) {  // copy the outgoing data into the skb
        kfree_skb(skb);
        goto out;
    }

    skb_queue_tail(&pf->xq, skb);  // append the skb to the transmit queue xq

    switch (pf->kind) {  // ppp_file.kind tells whether this /dev/ppp fd is bound to a unit or a channel
    case INTERFACE:      // interface, i.e. unit
        ppp_xmit_process(PF_TO_PPP(pf));
        break;
    case CHANNEL:        // channel
        ppp_channel_push(PF_TO_CHANNEL(pf));
        break;
    }

    ret = count;

 out:
    return ret;
} |

Continuing with this function: the frame being sent now is an LCP frame that sets up the link, so ppp_channel_push does the sending:

| linux-2.6.18/drivers/net/ppp_generic.c main() -> lcp_open() -> fsm_open() -> lcp_starting() -> link_required() -> lcp_lowerup() -> fsm_lowerup() -> fsm_sconfreq() -> fsm_sdata() -> output() -> ppp_write() -> ppp_channel_push(): |

| static void
ppp_channel_push(struct channel *pch)
{
    struct sk_buff *skb;
    struct ppp *ppp;

    spin_lock_bh(&pch->downl);
    if (pch->chan != 0) {  // set earlier in ppp_register_channel, so non-NULL here
        while (!skb_queue_empty(&pch->file.xq)) {  // the skb is already on the transmit queue xq
            skb = skb_dequeue(&pch->file.xq);
            /* ppp_asynctty_open set ops to async_ops earlier, so this
               actually calls ppp_async_send */
            if (!pch->chan->ops->start_xmit(pch->chan, skb)) {
                /* put the packet back and try again later */
                skb_queue_head(&pch->file.xq, skb);
                break;
            }
        }
    } else {
        /* channel got deregistered */
        skb_queue_purge(&pch->file.xq);
    }
    spin_unlock_bh(&pch->downl);
    /* see if there is anything from the attached unit to be sent */
    if (skb_queue_empty(&pch->file.xq)) {
        read_lock_bh(&pch->upl);
        ppp = pch->ppp;
        if (ppp != 0)  // non-NULL once the channel is connected to a unit
            ppp_xmit_process(ppp);  // this function sends data frames
        read_unlock_bh(&pch->upl);
    }
} |

The LCP frame is actually sent by ppp_async_send:

| linux-2.6.18/drivers/net/ppp_async.c main() -> lcp_open() -> fsm_open() -> lcp_starting() -> link_required() -> lcp_lowerup() -> fsm_lowerup() -> fsm_sconfreq() -> fsm_sdata() -> output() -> ppp_write() -> ppp_channel_push() -> ppp_async_send(): |

| static int
ppp_async_send(struct ppp_channel *chan, struct sk_buff *skb)
{
    struct asyncppp *ap = chan->private;

    ppp_async_push(ap);  // push anything still pending to the TTY driver

    if (test_and_set_bit(XMIT_FULL, &ap->xmit_flags))
        return 0;  /* already full */
    ap->tpkt = skb;
    ap->tpkt_pos = 0;

    ppp_async_push(ap);
    return 1;
} |

This in turn calls ppp_async_push:

| linux-2.6.18/drivers/net/ppp_async.c |

| static int
ppp_async_push(struct asyncppp *ap)
{
    int avail, sent, done = 0;
    struct tty_struct *tty = ap->tty;
    int tty_stuffed = 0;

    /*
     * We can get called recursively here if the tty write
     * function calls our wakeup function.  This can happen
     * for example on a pty with both the master and slave
     * set to PPP line discipline.
     * We use the XMIT_BUSY bit to detect this and get out,
     * leaving the XMIT_WAKEUP bit set to tell the other
     * instance that it may now be able to write more now.
     */
    if (test_and_set_bit(XMIT_BUSY, &ap->xmit_flags))
        return 0;
    spin_lock_bh(&ap->xmit_lock);
    for (;;) {
        if (test_and_clear_bit(XMIT_WAKEUP, &ap->xmit_flags))
            tty_stuffed = 0;
        if (!tty_stuffed && ap->optr < ap->olim) {
            avail = ap->olim - ap->optr;
            set_bit(TTY_DO_WRITE_WAKEUP, &tty->flags);
            sent = tty->driver->write(tty, ap->optr, avail);  // finally the TTY driver's write sends the frame
            if (sent < 0)
                goto flush;  /* error, e.g. loss of CD */

    ……
} |

At this point the LCP Configure-Request frame has been sent.
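ppp_async_send accepts one packet at a time: the XMIT_FULL bit marks the single slot ap->tpkt as occupied, and a return value of 0 tells ppp_channel_push to put the skb back on the queue. The same handoff can be sketched in user space with a C11 atomic flag. The `toy_` names are hypothetical, and `toy_tx_done` stands in for the point where the driver has finished with the packet and clears the bit.

```c
#include <stdatomic.h>
#include <stddef.h>

struct toy_tx {
    atomic_flag full;  /* plays the role of the XMIT_FULL bit */
    const void *tpkt;  /* the one packet waiting to be written out */
};

/* Returns 1 if the packet was accepted, 0 if the slot is still occupied. */
int toy_send(struct toy_tx *tx, const void *pkt)
{
    if (atomic_flag_test_and_set(&tx->full))
        return 0;      /* already full: the caller requeues and retries */
    tx->tpkt = pkt;
    return 1;
}

/* Called once the packet has been written out completely. */
void toy_tx_done(struct toy_tx *tx)
{
    tx->tpkt = NULL;
    atomic_flag_clear(&tx->full);
}
```

Testing and setting the flag in one atomic step means two senders cannot both believe they own the slot, which is why no extra lock is needed around the check.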

Now return once more to PPPD's main() function:

Step 2: the PPPD state machine loops, handling events

| pppd/main.c -> main(): |

| ……
    do_callback = 0;
    for (;;) {  /* outermost for(;;) loop */

        ……

        lcp_open(0);  /* Start protocol */  // step 1: open the PPPK interface and send LCP frames
        while (phase != PHASE_DEAD) {  // step 2: the PPPD state machine loops, handling events
            handle_events();  // select-based event handling
            get_input();      // process received packets
            if (kill_link)
                lcp_close(0, "User request");
            if (asked_to_quit) {
                bundle_terminating = 1;
                if (phase == PHASE_MASTER)
                    mp_bundle_terminated();
            }
            ……
        }

        ……
    } |

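handle_events handles events; see the analysis of this function in the demand-mode section. Note that the fds waited on here include ppp_dev_fd. Next, get_input processes the received packet: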

| pppd/main.c main() -> get_input(): |

| static void
get_input()
{
    int len, i;
    u_char *p;
    u_short protocol;
    struct protent *protp;

    p = inpacket_buf;  /* point to beginning of packet buffer */

    len = read_packet(inpacket_buf);  // read the received packet into inpacket_buf
    /* (validity checks on the received data, not analyzed here) */
    ……
    p += 2;  /* Skip address and control */
    GETSHORT(protocol, p);  // extract the protocol number carried in the packet
    len -= PPP_HDRLEN;      // payload length
    ……
    /*
     * Upcall the proper protocol input routine.
     */
    for (i = 0; (protp = protocols[i]) != NULL; ++i) {
        if (protp->protocol == protocol && protp->enabled_flag) {
            (*protp->input)(0, p, len);  // call the matching protocol's input function
            return;
        }
        if (protocol == (protp->protocol & ~0x8000) && protp->enabled_flag
            && protp->datainput != NULL) {
            (*protp->datainput)(0, p, len);
            return;
        }
    }

    ……
} |

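This function calls read_packet to read a received packet into inpacket_buf, extracts the protocol number (0xC021 for LCP), and then calls the input or datainput function of the protocol entry that matches it. read_packet was already analyzed in phase two under demand mode, so it is not repeated here.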
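The table lookup in get_input can be modelled on its own. The sketch below uses a cut-down, hypothetical `toy_protent` in place of pppd's struct protent and two counting handlers that exist only to make the example observable. It shows the two matching rules: an exact match calls input, while a match with bit 15 cleared calls datainput, if the entry has one.

```c
#include <stddef.h>

typedef void (*toy_input_fn)(const unsigned char *p, int len);

/* Cut-down, hypothetical version of pppd's struct protent. */
struct toy_protent {
    unsigned short protocol;  /* control protocol number, e.g. 0x8021 */
    toy_input_fn input;       /* handles control packets */
    toy_input_fn datainput;   /* handles the matching data protocol, may be NULL */
    int enabled;
};

/* Example handlers: they only count how often they were called. */
static int control_seen, data_seen;
static void count_control(const unsigned char *p, int len) { (void)p; (void)len; control_seen++; }
static void count_data(const unsigned char *p, int len) { (void)p; (void)len; data_seen++; }

/*
 * Dispatch as get_input() does. Returns 1 if input was called, 2 if
 * datainput was called, 0 if no enabled entry matched.
 */
int toy_dispatch(struct toy_protent *const *protocols, unsigned short protocol,
                 const unsigned char *p, int len)
{
    struct toy_protent *protp;
    int i;

    for (i = 0; (protp = protocols[i]) != NULL; ++i) {
        if (protp->protocol == protocol && protp->enabled) {
            protp->input(p, len);
            return 1;
        }
        /* data protocol number = control protocol number with bit 15 cleared */
        if (protocol == (protp->protocol & ~0x8000) && protp->enabled
            && protp->datainput != NULL) {
            protp->datainput(p, len);
            return 2;
        }
    }
    return 0;
}
```

For an entry registered as 0x8021 (IPCP), a packet with protocol 0x0021 (IP) takes the datainput branch. An entry whose datainput is NULL never matches through the second rule.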

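This outlines the basic send and receive flow PPPD needs to establish a connection. All the packets we have seen PPPD handle so far are PPP control frames such as LCP. Does data traffic such as IP packets also have to go through PPPD? If it did, would that not be very inefficient?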

First, look at how data traffic is received:

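When the underlying TTY driver receives data, an interrupt is raised, and the interrupt handling path (hard interrupt or softirq/BH) calls the receive_buf function pointer of the line discipline bound to the TTY.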

| linux-2.6.18/drivers/net/ppp_async.c |

| static struct tty_ldisc ppp_ldisc = {
    .owner        = THIS_MODULE,
    .magic        = TTY_LDISC_MAGIC,
    .name         = "ppp",
    .open         = ppp_asynctty_open,
    .close        = ppp_asynctty_close,
    .hangup       = ppp_asynctty_hangup,
    .read         = ppp_asynctty_read,
    .write        = ppp_asynctty_write,
    .ioctl        = ppp_asynctty_ioctl,
    .poll         = ppp_asynctty_poll,
    .receive_buf  = ppp_asynctty_receive,
    .write_wakeup = ppp_asynctty_wakeup,
}; |

For a TTY bound to the N_PPP line discipline, as in this article, that means ppp_asynctty_receive:

| linux-2.6.18/drivers/net/ppp_async.c |

| /*
 * This can now be called from hard interrupt level as well
 * as soft interrupt level or mainline.
 */
static void
ppp_asynctty_receive(struct tty_struct *tty, const unsigned char *buf,
                     char *cflags, int count)
{
    struct asyncppp *ap = ap_get(tty);
    unsigned long flags;

    if (ap == 0)
        return;
    spin_lock_irqsave(&ap->recv_lock, flags);
    ppp_async_input(ap, buf, cflags, count);  // 1. allocate skbs and queue completed frames on ap->rqueue
    spin_unlock_irqrestore(&ap->recv_lock, flags);
    if (!skb_queue_empty(&ap->rqueue))  // non-empty once a complete frame has been queued
        tasklet_schedule(&ap->tsk);     // 2. schedule the tasklet to process the skbs
    ap_put(ap);
    if (test_and_clear_bit(TTY_THROTTLED, &tty->flags)
        && tty->driver->unthrottle)
        tty->driver->unthrottle(tty);
} |

1. This function first calls ppp_async_input:

| linux-2.6.18/drivers/net/ppp_async.c |

| static void
ppp_async_input(struct asyncppp *ap, const unsigned char *buf,
                char *flags, int count)
{
    struct sk_buff *skb;
    int c, i, j, n, s, f;
    unsigned char *sp;
    /* (parses the data in buf, allocates an skb, and points ap->rpkt at it) */
    ……
        c = buf[n];
        if (flags != NULL && flags[n] != 0) {
            ap->state |= SC_TOSS;
        } else if (c == PPP_FLAG) {  // saw the PPP frame delimiter
            process_input_packet(ap);  // put the skb on the queue
    ……
} |

ppp_async_input reads the data in buf into an sk_buff and then calls process_input_packet to put the received packet on the receive queue:

| linux-2.6.18/drivers/net/ppp_async.c |

| static void
process_input_packet(struct asyncppp *ap)
{
    struct sk_buff *skb;
    unsigned char *p;
    unsigned int len, fcs, proto;

    skb = ap->rpkt;  // the skb that has been accumulating the received data
    /* (validity checks on the data in the skb) */
    ……
    /* queue the frame to be processed */
    skb->cb[0] = ap->state;
    skb_queue_tail(&ap->rqueue, skb);  // put the skb on the receive queue
    ap->rpkt = NULL;
    ap->state = 0;
    return;
    ……
} |
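The elided parsing in ppp_async_input deals with asynchronous HDLC-like framing: frames end at the flag byte 0x7e, and an escaped byte is sent as 0x7d followed by the original value XOR 0x20. The sketch below illustrates that decoding step only. `toy_unstuff` is a hypothetical helper, not the kernel routine: it checks no FCS, ignores the receive ACCM, and works on a plain buffer instead of an sk_buff.

```c
#include <stddef.h>

#define PPP_FLAG   0x7e  /* frame delimiter */
#define PPP_ESCAPE 0x7d  /* escape byte */
#define PPP_TRANS  0x20  /* value XORed into an escaped byte */

/*
 * Copy one frame's worth of bytes from in[] to out[], undoing the
 * escaping, and stop at the first PPP_FLAG.
 * Returns the number of decoded bytes, or -1 if no flag was found,
 * the frame ends right after an escape byte, or out[] is too small.
 */
int toy_unstuff(const unsigned char *in, size_t inlen,
                unsigned char *out, size_t outcap)
{
    size_t i, n = 0;
    int escaped = 0;

    for (i = 0; i < inlen; i++) {
        unsigned char c = in[i];

        if (c == PPP_FLAG)
            return escaped ? -1 : (int)n;  /* end of frame */
        if (c == PPP_ESCAPE) {
            escaped = 1;
            continue;
        }
        if (escaped) {
            c ^= PPP_TRANS;
            escaped = 0;
        }
        if (n == outcap)
            return -1;
        out[n++] = c;
    }
    return -1;  /* ran out of input before the closing flag */
}
```

For example, the wire bytes ff 7d 23 c0 21 7e decode to ff 03 c0 21, which is the address, control and protocol header of an LCP frame.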

2. tasklet_schedule(&ap->tsk) then schedules the tasklet. Where was ap->tsk initialized? In ppp_asynctty_open, analyzed earlier. The tasklet function ppp_async_process now runs:

| linux-2.6.18/drivers/net/ppp_async.c |

| static void ppp_async_process(unsigned long arg)
{
    struct asyncppp *ap = (struct asyncppp *) arg;
    struct sk_buff *skb;

    /* process received packets */
    while ((skb = skb_dequeue(&ap->rqueue)) != NULL) {  // loop over every skb in the queue
        if (skb->cb[0])
            ppp_input_error(&ap->chan, 0);
        ppp_input(&ap->chan, skb);  // hand the skb to ppp_input
    }

    /* try to push more stuff out */
    if (test_bit(XMIT_WAKEUP, &ap->xmit_flags) && ppp_async_push(ap))
        ppp_output_wakeup(&ap->chan);
} |

After this detour, it is ppp_input that actually handles the received packet:

| linux-2.6.18/drivers/net/ppp_generic.c |

| void
ppp_input(struct ppp_channel *chan, struct sk_buff *skb)
{
    struct channel *pch = chan->ppp;
    int proto;

    if (pch == 0 || skb->len == 0) {
        kfree_skb(skb);
        return;
    }

    proto = PPP_PROTO(skb);
    read_lock_bh(&pch->upl);
    /* LCP's protocol number is 0xC021, so control frames are handled here */
    if (pch->ppp == 0 || proto >= 0xc000 || proto == PPP_CCPFRAG) {
        /* put it on the channel queue */
        skb_queue_tail(&pch->file.rq, skb);  // queue on the channel's receive queue
        /* drop old frames if queue too long */
        while (pch->file.rq.qlen > PPP_MAX_RQLEN
               && (skb = skb_dequeue(&pch->file.rq)) != 0)
            kfree_skb(skb);
        wake_up_interruptible(&pch->file.rwait);  // wake up the PPPD process so it can read()
    } else {
        ppp_do_recv(pch->ppp, skb, pch);  // handle non-control frames
    }
    read_unlock_bh(&pch->upl);
} |

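ppp_input dispatches packets in two ways:

1. Control traffic (protocol number 0xc000 or above, or PPP_CCPFRAG), and any frame that arrives while the channel is not yet connected to a unit, is put on the channel's receive queue, and the PPPD process is woken up to read it.

2. Everything else, data traffic included, is passed to ppp_do_recv: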

| linux-2.6.18/drivers/net/ppp_generic.c |

| static inline void
ppp_do_recv(struct ppp *ppp, struct sk_buff *skb, struct channel *pch)
{
    ppp_recv_lock(ppp);
    /* ppp->dev == 0 means interface is closing down */
    if (ppp->dev != 0)
        ppp_receive_frame(ppp, skb, pch);
    else
        kfree_skb(skb);
    ppp_recv_unlock(ppp);
} |

Since the ppp0 network interface has been created on the PPP unit, ppp_receive_frame is called:

| linux-2.6.18/drivers/net/ppp_generic.c |

| static void
ppp_receive_frame(struct ppp *ppp, struct sk_buff *skb, struct channel *pch)
{
    if (skb->len >= 2) {
#ifdef CONFIG_PPP_MULTILINK
        /* XXX do channel-level decompression here */
        if (PPP_PROTO(skb) == PPP_MP)
            ppp_receive_mp_frame(ppp, skb, pch);
        else
#endif /* CONFIG_PPP_MULTILINK */
            ppp_receive_nonmp_frame(ppp, skb);  // non-multilink PPP
        return;
    }

    if (skb->len > 0)
        /* note: a 0-length skb is used as an error indication */
        ++ppp->stats.rx_length_errors;

    kfree_skb(skb);
    ppp_receive_error(ppp);
} |

For non-multilink PPP, ppp_receive_nonmp_frame is called:

| linux-2.6.18/drivers/net/ppp_generic.c |

| static void
ppp_receive_nonmp_frame(struct ppp *ppp, struct sk_buff *skb)
{
    struct sk_buff *ns;
    int proto, len, npi;
    ……
        if ((ppp->dev->flags & IFF_UP) == 0
            || ppp->npmode[npi] != NPMODE_PASS) {
            kfree_skb(skb);
        } else {
            /* chop off protocol */
            skb_pull_rcsum(skb, 2);
            skb->dev = ppp->dev;
            skb->protocol = htons(npindex_to_ethertype[npi]);
            skb->mac.raw = skb->data;
            netif_rx(skb);  // hand the skb to the Linux protocol stack
            ppp->dev->last_rx = jiffies;
        }
    }
    return;
    ……
} |

For data traffic, netif_rx(skb) finally hands the packet to the Linux protocol stack.
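The receive-side split made at the top of ppp_input, together with its "drop old frames if queue too long" rule, can be modelled in a few lines. This is a sketch with hypothetical names. `TOY_MAX_RQLEN` is an illustrative limit, not the kernel's PPP_MAX_RQLEN, and frames are represented by plain integers.

```c
#define PPP_CCPFRAG   0x80fb  /* from ppp_defs.h */
#define TOY_MAX_RQLEN 4       /* illustrative limit only */

enum rx_path { RX_TO_PPPD, RX_TO_UNIT };

/* Decision made in ppp_input() for a frame arriving on a channel. */
enum rx_path toy_rx_path(int attached_to_unit, unsigned int proto)
{
    if (!attached_to_unit || proto >= 0xc000 || proto == PPP_CCPFRAG)
        return RX_TO_PPPD;  /* queued on the channel, read by pppd */
    return RX_TO_UNIT;      /* handed to ppp_do_recv() */
}

/* Ring buffer with one spare slot so a frame can be added before trimming. */
struct toy_rq {
    int frames[TOY_MAX_RQLEN + 1];
    int head, len;
};

/* Enqueue, then drop the oldest frames while over the limit.
   Returns how many frames were dropped. */
int toy_rq_put(struct toy_rq *q, int frame)
{
    int dropped = 0;

    q->frames[(q->head + q->len) % (TOY_MAX_RQLEN + 1)] = frame;
    q->len++;
    while (q->len > TOY_MAX_RQLEN) {
        q->head = (q->head + 1) % (TOY_MAX_RQLEN + 1);
        q->len--;
        dropped++;
    }
    return dropped;
}
```

Dropping from the head rather than refusing the new frame keeps the most recent control packets available if pppd falls behind on reading.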

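Conclusion: before the PPP connection is established, the control traffic exchanged to set it up is parsed by PPPD, which sends and receives packets and handles timeouts and state transitions according to each control protocol's state machine and the user's configuration. Once the connection has been established through the three phases LCP -> PAP/CHAP -> IPCP, data traffic through the ppp0 interface is handled directly in the Linux kernel without passing through PPPD. This cleanly separates the control path from the data path, and policy from mechanism.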
