1. First, generate the data source

package com.panguoyuan.storm.lession1;

import java.io.File;
import java.io.FileOutputStream;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.util.Random;

// Writes 50 random sample records to track.log, one per line: host \t session_id \t time
public class ProductionData {

    public static void main(String[] args) {
        File logFile = new File("track.log");
        Random random = new Random();

        String[] hosts = { "www.taobao.com" };
        String[] session_id = { "ABYH6Y4V4SCVXTG6DPB4VH9U123", "XXYH6YCGFJYERTT834R52FDXV9U34",
                "BBYH61456FGHHJ7JL89RG5VV9UYU7", "CYYH6Y2345GHI899OFG4V9U567", "VVVYH6Y4V4SFXZ56JIPDPB4V678" };
        String[] time = { "2014-01-07 08:40:50", "2014-01-07 08:40:51", "2014-01-07 08:40:52",
                "2014-01-07 08:40:53", "2014-01-07 09:40:49", "2014-01-07 10:40:49",
                "2014-01-07 11:40:49", "2014-01-07 12:40:49" };

        // Pick a random session id and timestamp for each record
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < 50; i++) {
            sb.append(hosts[0]).append('\t')
              .append(session_id[random.nextInt(session_id.length)]).append('\t')
              .append(time[random.nextInt(time.length)]).append('\n');
        }

        // FileOutputStream creates track.log if it is missing and overwrites it otherwise,
        // so no separate createNewFile() call is needed; try-with-resources closes the stream
        // even if write() throws. UTF-8 matches the charset ReadFileSpout decodes with.
        try (FileOutputStream fs = new FileOutputStream(logFile)) {
            fs.write(sb.toString().getBytes(StandardCharsets.UTF_8));
        } catch (IOException e) {
            System.err.println("Failed to write " + logFile.getAbsolutePath());
            e.printStackTrace();
        }
    }
}
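Each line ProductionData writes has three tab-separated fields: host, session id and time. The bolt in step 3 reads the second field with split("\t")[1], which throws on a malformed line. A minimal, Storm-free sketch of parsing one line into its fields with an explicit field-count check; the TrackRecord class is hypothetical, and the timestamp pattern assumes the yyyy-MM-dd HH:mm:ss format used in the sample data:

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;

// Hypothetical helper (not part of the original code): parses one track.log line
// of the form host \t session_id \t time
final class TrackRecord {
    // Assumed to match timestamps such as "2014-01-07 08:40:50"
    private static final DateTimeFormatter TIME_FORMAT =
            DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss");

    final String host;
    final String sessionId;
    final LocalDateTime time;

    private TrackRecord(String host, String sessionId, LocalDateTime time) {
        this.host = host;
        this.sessionId = sessionId;
        this.time = time;
    }

    static TrackRecord parse(String line) {
        // limit -1 keeps trailing empty fields, so a line with a missing column is detected
        String[] parts = line.split("\t", -1);
        if (parts.length != 3) {
            throw new IllegalArgumentException(
                    "expected 3 tab-separated fields, got " + parts.length + ": " + line);
        }
        return new TrackRecord(parts[0], parts[1], LocalDateTime.parse(parts[2], TIME_FORMAT));
    }

    public static void main(String[] args) {
        TrackRecord r = parse("www.taobao.com\tABYH6Y4V4SCVXTG6DPB4VH9U123\t2014-01-07 08:40:50");
        System.out.println(r.sessionId + " " + r.time.getHour()); // prints "ABYH6Y4V4SCVXTG6DPB4VH9U123 8"
    }
}
```

Rejecting a bad line with a descriptive exception, rather than relying on an ArrayIndexOutOfBoundsException from a bare index, makes failures in the bolt easier to diagnose.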

2. A spout that reads the file as its data source
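Storm calls a spout's nextTuple() over and over, on the same thread that also calls ack() and fail(), so the method should return quickly rather than loop over an entire input. The pattern can be sketched without Storm: the hypothetical OneLinePerCallSource below hands out at most one line per call and simply returns false at end of input, with a list standing in for the collector (an assumption for illustration only):

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.StringReader;
import java.util.ArrayList;
import java.util.List;

// Plain-Java sketch (no Storm dependency): a line source that emits at most one line per call,
// the way a file-reading spout's nextTuple() is expected to behave
final class OneLinePerCallSource {
    private final BufferedReader reader;
    private final List<String> emitted = new ArrayList<>(); // stands in for collector.emit(...)

    OneLinePerCallSource(BufferedReader reader) {
        this.reader = reader;
    }

    // Mirrors nextTuple(): emit one line if available, otherwise return without blocking
    boolean nextTuple() throws IOException {
        String line = reader.readLine();
        if (line == null) {
            return false; // end of input: nothing to emit on this call
        }
        emitted.add(line);
        return true;
    }

    List<String> emitted() {
        return emitted;
    }

    public static void main(String[] args) throws IOException {
        OneLinePerCallSource source = new OneLinePerCallSource(
                new BufferedReader(new StringReader("line-1\nline-2\nline-3\n")));
        // Stand-in for the framework loop; unlike Storm, it stops once nothing is left
        int calls = 0;
        while (source.nextTuple()) {
            calls++;
        }
        System.out.println(calls + " " + source.emitted()); // prints "3 [line-1, line-2, line-3]"
    }
}
```

Because control returns after every line, the calling thread stays free to process acks and failures between emissions.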

package com.panguoyuan.storm.lession1;

import java.io.BufferedReader;
import java.io.FileInputStream;
import java.io.IOException;
import java.io.InputStreamReader;
import java.util.Map;

import backtype.storm.spout.SpoutOutputCollector;
import backtype.storm.task.TopologyContext;
import backtype.storm.topology.IRichSpout;
import backtype.storm.topology.OutputFieldsDeclarer;
import backtype.storm.tuple.Fields;
import backtype.storm.tuple.Values;

// Reads track.log and emits each line as a one-field tuple named "line"
public class ReadFileSpout implements IRichSpout {

    private static final long serialVersionUID = 1L;

    // Closing this reader also closes the InputStreamReader and FileInputStream it wraps
    private BufferedReader br;

    private SpoutOutputCollector collector = null;

    @Override
    public void nextTuple() {
        // Storm calls nextTuple() repeatedly on the spout's thread, so emit at most one line
        // per call instead of draining the whole file in a loop; at end of file, emit nothing
        try {
            String str = br.readLine();
            if (str != null) {
                // Emitted without a message id: the tuple is not tracked, so ack()/fail() below are never called
                collector.emit(new Values(str));
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    @Override
    public void open(Map conf, TopologyContext context, SpoutOutputCollector collector) {
        this.collector = collector;
        try {
            // Relative path: resolved against the working directory of the JVM running the spout
            this.br = new BufferedReader(new InputStreamReader(new FileInputStream("track.log"), "UTF-8"));
        } catch (IOException e) {
            // Fail fast instead of leaving br null and hitting a NullPointerException in nextTuple()
            throw new RuntimeException("Cannot open track.log", e);
        }
    }

    @Override
    public void declareOutputFields(OutputFieldsDeclarer declarer) {
        declarer.declare(new Fields("line"));
    }

    @Override
    public Map<String, Object> getComponentConfiguration() {
        return null;
    }

    @Override
    public void ack(Object msgId) {
    }

    @Override
    public void activate() {
    }

    @Override
    public void close() {
        try {
            if (br != null) {
                br.close();
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    @Override
    public void deactivate() {
    }

    @Override
    public void fail(Object msgId) {
    }
}

3. A bolt that processes the data
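ConsumeBolt calls collector.ack(input) when a line is processed and collector.fail(input) when an exception occurs. Storm only reports these back to the spout's ack(msgId) and fail(msgId) when the spout emitted the tuple with a message id, and re-emitting a failed tuple is the spout's own responsibility. A minimal plain-Java sketch of the bookkeeping a reliable version of ReadFileSpout might keep; PendingTracker and its methods are hypothetical, and in a real spout emit() here would correspond to collector.emit(new Values(line), msgId):

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashMap;
import java.util.Map;

// Hypothetical bookkeeping for a reliable spout, written without Storm classes:
// each emitted line is remembered under a message id until it is acked; failed lines are queued for replay
final class PendingTracker {
    private final Map<Long, String> pending = new HashMap<>();
    private final Deque<String> replay = new ArrayDeque<>();
    private long nextId = 0;

    // Where a spout would call collector.emit(new Values(line), msgId); returns the msgId used
    long emit(String line) {
        long msgId = nextId++;
        pending.put(msgId, line);
        return msgId;
    }

    // Mirrors the spout's ack(msgId): the tuple was fully processed, so forget the line
    void ack(Object msgId) {
        pending.remove(msgId);
    }

    // Mirrors the spout's fail(msgId): schedule the line to be emitted again
    void fail(Object msgId) {
        String line = pending.remove(msgId);
        if (line != null) {
            replay.addLast(line);
        }
    }

    // nextTuple() would check this before reading a new line from the file; null means nothing to replay
    String pollReplay() {
        return replay.pollFirst();
    }

    int pendingCount() {
        return pending.size();
    }

    public static void main(String[] args) {
        PendingTracker t = new PendingTracker();
        long a = t.emit("line-a");
        long b = t.emit("line-b");
        t.ack(a);
        t.fail(b);
        System.out.println(t.pendingCount() + " " + t.pollReplay()); // prints "0 line-b"
    }
}
```

Holding each line until it is acked is what makes replay possible; the trade-off is memory proportional to the number of tuples in flight.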

package com.panguoyuan.storm.lession1;

import java.util.Map;

import backtype.storm.task.OutputCollector;

import backtype.storm.task.TopologyContext;

import backtype.storm.topology.IRichBolt;

import backtype.storm.topology.OutputFieldsDeclarer;

import backtype.storm.tuple.Fields;

import backtype.storm.tuple.Tuple;

public class ConsumeBolt implements IRichBolt {

private static final long serialVersionUID = 1L;

private OutputCollector collector = null;

private int num = 0;

private String valueString = null;

@Override

public void cleanup() {

}

@Override

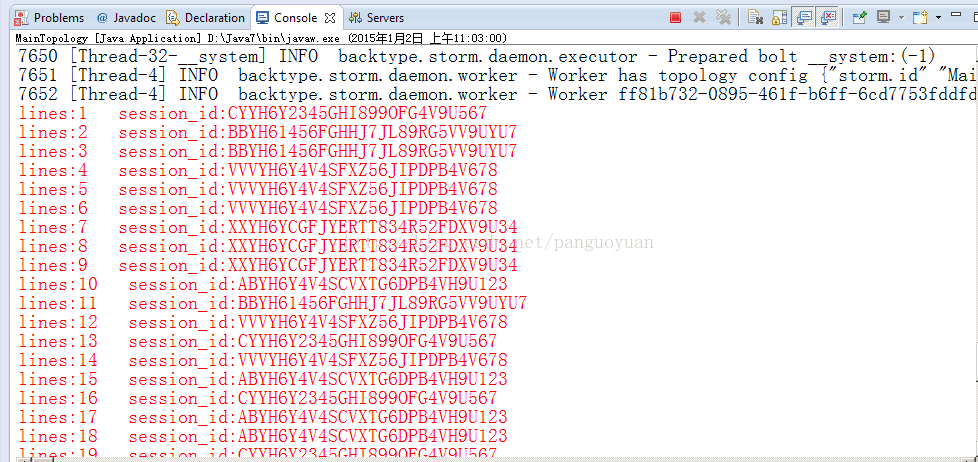

public void execute(Tuple input) {

try {

valueString = input.getStringByField("line") ;

if(valueString != null)

{

num ++ ;

System.err.println("lines:"+num +" session_id:"+valueString.split("\t")[1]);

}

collector.ack(input);

Thread.sleep(2000);

} catch (Exception e) {

collector.fail(input);

e.printStackTrace();

}

}

@Override

public void prepare(Map stormConf, TopologyContext context,

OutputCollector collector) {

this.collector = collector ;

}

@Override

public void declareOutputFields(OutputFieldsDeclarer declarer) {

declarer.declare(new Fields("")) ;

}

@Override

public Map<String, Object> getComponentConfiguration() {

return null;

}

}

4. Build the topology and submit it

package com.panguoyuan.storm.lession1;

import backtype.storm.Config;
import backtype.storm.LocalCluster;
import backtype.storm.StormSubmitter;
import backtype.storm.generated.AlreadyAliveException;
import backtype.storm.generated.InvalidTopologyException;
import backtype.storm.topology.TopologyBuilder;

// Wires ReadFileSpout into ConsumeBolt; submits to a cluster when a topology name is given, otherwise runs locally
public class MainTopology {

    public static void main(String[] args) {
        TopologyBuilder builder = new TopologyBuilder();
        // One spout executor reading track.log
        builder.setSpout("spout", new ReadFileSpout(), 1);
        // One bolt executor subscribed to the spout; shuffleGrouping spreads tuples randomly over the bolt's tasks
        builder.setBolt("bolt", new ConsumeBolt(), 1).shuffleGrouping("spout");

        Config conf = new Config();
        conf.put(Config.TOPOLOGY_WORKERS, 4);

        if (args.length > 0) {
            // Cluster mode: args[0] is used as the topology name
            try {
                StormSubmitter.submitTopology(args[0], conf, builder.createTopology());
            } catch (AlreadyAliveException e) {
                // A topology with this name is already running
                e.printStackTrace();
            } catch (InvalidTopologyException e) {
                e.printStackTrace();
            }
        } else {
            // Local mode: runs the topology in-process; shutdown() is never called, so it is left running
            LocalCluster localCluster = new LocalCluster();
            localCluster.submitTopology("MainTopology-lession1", conf, builder.createTopology());
        }
    }
}
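In MainTopology, shuffleGrouping("spout") asks Storm to distribute the spout's tuples randomly across the bolt's tasks, in a way that aims to give each task the same number of tuples; with a parallelism of 1 there is only one bolt task, so it receives every tuple. The class below is a plain-Java model of one way to get random but even distribution (shuffle the task list, walk through it, reshuffle when exhausted). It is an illustration under that assumption, not Storm's implementation, and ShuffleGroupingSketch is a hypothetical name:

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.Random;

// Illustrative model of a shuffle grouping (not Storm's source): shuffle the target task ids,
// hand them out in order, and reshuffle once every task has been used in the current round
final class ShuffleGroupingSketch {
    private final List<Integer> targets;
    private final Random random;
    private int index = 0;

    ShuffleGroupingSketch(List<Integer> targetTasks, long seed) {
        this.targets = new ArrayList<>(targetTasks);
        this.random = new Random(seed);
        Collections.shuffle(this.targets, this.random);
    }

    // Picks the bolt task that receives the next tuple
    int chooseTask() {
        if (index == targets.size()) {
            Collections.shuffle(targets, random);
            index = 0;
        }
        return targets.get(index++);
    }

    public static void main(String[] args) {
        ShuffleGroupingSketch grouping = new ShuffleGroupingSketch(Arrays.asList(1, 2, 3), 42L);
        int[] counts = new int[4];
        for (int i = 0; i < 30; i++) {
            counts[grouping.chooseTask()]++;
        }
        // Every round of 3 picks uses each task once, so each task gets exactly 10 of 30 tuples
        System.out.println(counts[1] + " " + counts[2] + " " + counts[3]); // prints "10 10 10"
    }
}
```

The order within each round is random, but no task can fall more than one tuple behind another.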
