使用DataX采集Mysql数据到Hive

目录

1. 需求

2. 开发步骤

3. Centos上安装MySQL

4. 贷款数据导入MySQL

5. 安装Hive

6. 启动Hadoop和Hive

7. Hive中建表

8. 安装DataX

9. DataX导入导出文件配置

10. 启动DataX导入数据及结果检查

大数据开发工作中,我们计算分析的数据来源是关系型数据库,这就需要将关系型数据库中的数据采集到大数据系统中(如hive),采集手段和工具很多,比如ogg,sqoop,kettle,datax等。本次实训中我们要把存在于mysql中的银行借贷数据采集到hive中。

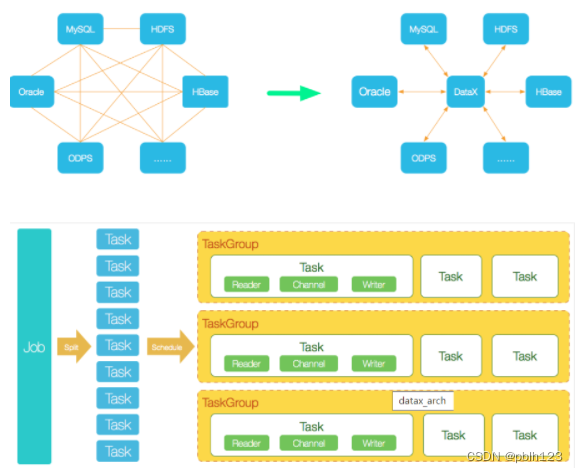

DataX简介

DataX是由Alibaba开源的一款异构数据同步工具,可以在常见的各种数据源之间进行同步,具有轻量、插件式、方便等优点,可以快速完成同步任务。一般公司的数据同步任务,基本可以满足。

入门教程 > https://developer.aliyun.com/article/59373

## 开发步骤- 安装MySQL(已完成则跳过)

- 导入贷款数据到MySQL

- 安装Hive

- Hive中创建表

- 安装及配置DataX

- 通过DataX将数据导入Hive

- 检测数据采集结果

在hadoop集群中任意选择一个Centos节点上安装MySQL数据库。

集群规划

| 序号 | IP | 主机别名 | 角色 | 集群 |

|---|---|---|---|---|

| 1 | 192.168.137.110 | node1 | NameNode(Active),DFSZKFailoverController(ZKFC),ResourceManager,mysql,RunJar(Hive服务端-metastore),RunJar(Hive服务端-hiveserver2) | Hadoop |

| 2 | 192.168.137.111 | node2 | DataNode,JournalNode,QuorumPeerMain,NodeManager,RunJar(Hive客户端,启动时有) | Zookeeper,Hadoop |

| 3 | 192.168.137.112 | node3 | DataNode,JournalNode,QuorumPeerMain,NodeManager,RunJar(Hive客户端,启动时有) | Zookeeper,Hadoop |

| 4 | 192.168.137.113 | node4 | DataNode,JournalNode,QuorumPeerMain,NodeManager,RunJar(Hive客户端,启动时有) | Zookeeper,Hadoop |

| 5 | 192.168.137.114 | node5 | NameNode(Standby),DFSZKFailoverController(ZKFC),ResourceManager,JobHistoryServer,RunJar(Hive客户端,启动时有) | Hadoop |

本案例选择node1安装MySQL

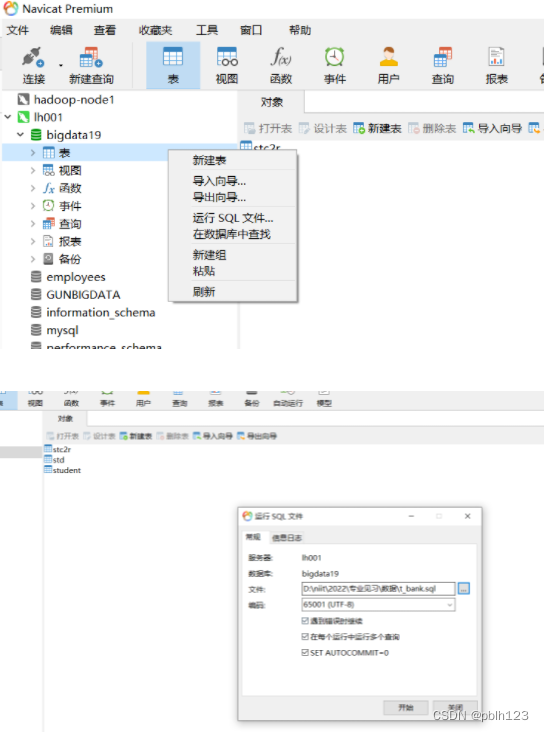

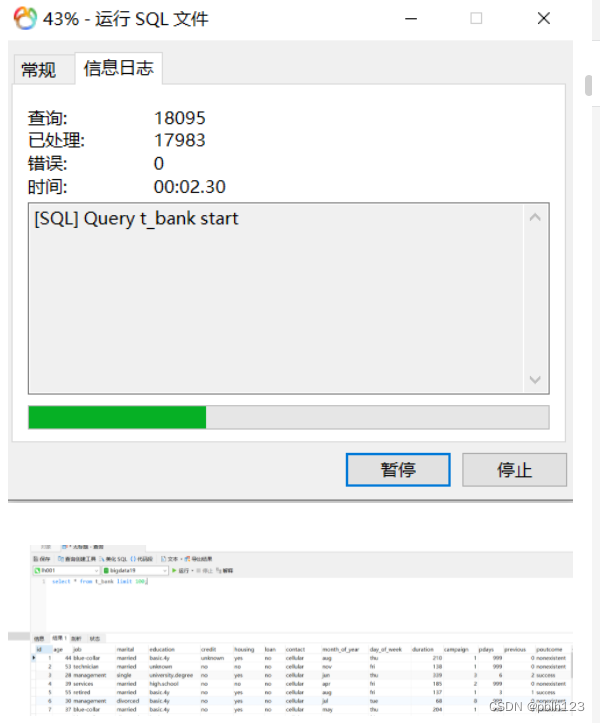

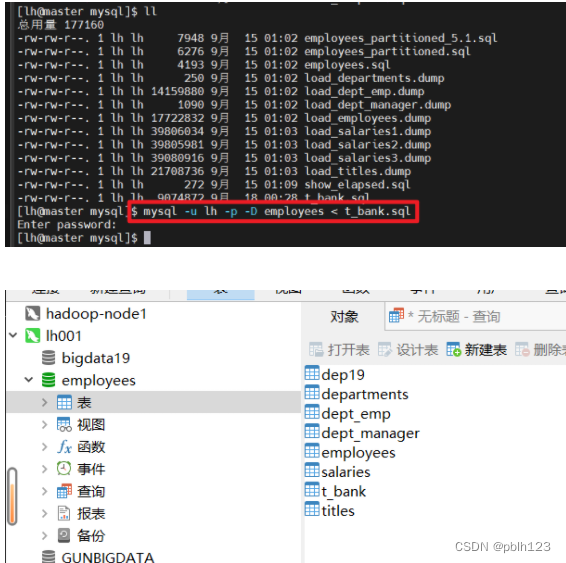

## 贷款数据导入MySQL通过数据库工具,将贷款数据导入MySQL中

通过命令行导入数据

[lh@master mysql]$ mysql -u lh -p -D employees < t_bank.sql

Enter password:

[lh@master mysql]$ mysql -u lh -p

Enter password:

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 12

Server version: 8.0.30 MySQL Community Server - GPL

Copyright (c) 2000, 2022, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> use employees;

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

mysql> show tables;

+----------------------+

| Tables_in_employees |

+----------------------+

| current_dept_emp |

| dep19 |

| departments |

| dept_emp |

| dept_emp_latest_date |

| dept_manager |

| employees |

| salaries |

| t_bank |

| titles |

+----------------------+

10 rows in set (0.00 sec)

mysql> select * from t_bank limit 10;

+----+------+-------------+----------+-------------------+---------+---------+------+----------+---------------+-------------+----------+----------+-------+----------+-------------+--------------+----------------+---------------+-----------+-------------+------+

| id | age | job | marital | education | credit | housing | loan | contact | month_of_year | day_of_week | duration | campaign | pdays | previous | poutcome | emp_var_rate | cons_price_idx | cons_conf_idx | euribor3m | nr_employed | y |

+----+------+-------------+----------+-------------------+---------+---------+------+----------+---------------+-------------+----------+----------+-------+----------+-------------+--------------+----------------+---------------+-----------+-------------+------+

| 1 | 44 | blue-collar | married | basic.4y | unknown | yes | no | cellular | aug | thu | 210 | 1 | 999 | 0 | nonexistent | 1.4 | 93.444 | -36.1 | 4.963 | 5228.1 | 0 |

| 2 | 53 | technician | married | unknown | no | no | no | cellular | nov | fri | 138 | 1 | 999 | 0 | nonexistent | -0.1 | 93.2 | -42 | 4.021 | 5195.8 | 0 |

| 3 | 28 | management | single | university.degree | no | yes | no | cellular | jun | thu | 339 | 3 | 6 | 2 | success | -1.7 | 94.055 | -39.8 | 0.729 | 4991.6 | 1 |

| 4 | 39 | services | married | high.school | no | no | no | cellular | apr | fri | 185 | 2 | 999 | 0 | nonexistent | -1.8 | 93.075 | -47.1 | 1.405 | 5099.1 | 0 |

| 5 | 55 | retired | married | basic.4y | no | yes | no | cellular | aug | fri | 137 | 1 | 3 | 1 | success | -2.9 | 92.201 | -31.4 | 0.869 | 5076.2 | 1 |

| 6 | 30 | management | divorced | basic.4y | no | yes | no | cellular | jul | tue | 68 | 8 | 999 | 0 | nonexistent | 1.4 | 93.918 | -42.7 | 4.961 | 5228.1 | 0 |

| 7 | 37 | blue-collar | married | basic.4y | no | yes | no | cellular | may | thu | 204 | 1 | 999 | 0 | nonexistent | -1.8 | 92.893 | -46.2 | 1.327 | 5099.1 | 0 |

| 8 | 39 | blue-collar | divorced | basic.9y | no | yes | no | cellular | may | fri | 191 | 1 | 999 | 0 | nonexistent | -1.8 | 92.893 | -46.2 | 1.313 | 5099.1 | 0 |

| 9 | 36 | admin. | married | university.degree | no | no | no | cellular | jun | mon | 174 | 1 | 3 | 1 | success | -2.9 | 92.963 | -40.8 | 1.266 | 5076.2 | 1 |

| 10 | 27 | blue-collar | single | basic.4y | no | yes | no | cellular | apr | thu | 191 | 2 | 999 | 1 | failure | -1.8 | 93.075 | -47.1 | 1.41 | 5099.1 | 0 |

+----+------+-------------+----------+-------------------+---------+---------+------+----------+---------------+-------------+----------+----------+-------+----------+-------------+--------------+----------------+---------------+-----------+-------------+------+

10 rows in set (0.00 sec)

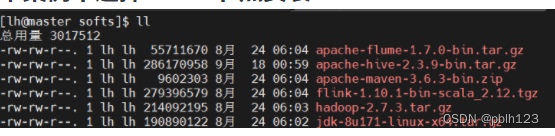

安装Hive

- 下载Hive

- 上传Hive到集群任意一节点

- 安装配置Hive

下载Hive

上传Hive到集群任意一节点

本案例中选择node1节点安装hive

安装配置Hive

解压Hive2.3.9

tar -zxvf apache-hive-2.3.9-bin.tar.gz -C /opt/soft_installed/

把Hive的环境变量配置到/etc/profile中

vim /etc/profile

# 配置Hive

export HIVE_HOME=/opt/soft_installed/apache-hive-2.3.9-bin

export PATH=.:$PATA:$HIVE_HOME/bin

source /etc/profile

配置mysql驱动

Hive的元数据库是MySQL,所以我们还需要把mysql的驱动mysql-connector-java-8.0.30.jar上传至…/apache-hive-2.3.9-bin/lib目录下

- 查看mysql版本mysqladmin --version,然后去下载对应MySQL版本的包

- mysql-connector-java下载网址

- 上传到node1节点

cd /home/lh/softs

cp mysql-connector-java-8.0.30.jar /opt/soft_installed/apache-hive-2.3.9-bin/lib/

修改配置文件

配置hive文件,修改hive-env.sh

[root@master ~]# cd $HIVE_HOME/conf

[root@master conf]# ll

总用量 288

-rw-r--r--. 1 root root 1596 6月 2 2021 beeline-log4j2.properties.template

-rw-r--r--. 1 root root 257574 6月 2 2021 hive-default.xml.template

-rw-r--r--. 1 root root 2365 6月 2 2021 hive-env.sh.template

-rw-r--r--. 1 root root 2274 6月 2 2021 hive-exec-log4j2.properties.template

-rw-r--r--. 1 root root 2925 6月 2 2021 hive-log4j2.properties.template

-rw-r--r--. 1 root root 2060 6月 2 2021 ivysettings.xml

-rw-r--r--. 1 root root 2719 6月 2 2021 llap-cli-log4j2.properties.template

-rw-r--r--. 1 root root 7041 6月 2 2021 llap-daemon-log4j2.properties.template

-rw-r--r--. 1 root root 2662 6月 2 2021 parquet-logging.properties

[root@master conf]# cp hive-default.xml.template hive-site.xml

[root@master conf]# cp hive-env.sh.template hive-env.sh

[root@master conf]# cp hive-log4j2.properties.template hive-log4j2.properties

vim hive-env.sh

# 尾部添加

export HADOOP_HOME=/opt/soft_installed/hadoop-2.7.3

export JAVA_HOME=/opt/soft_installed/jdk1.8.0_171

export HIVE_HOME=/opt/soft_installed/apache-hive-2.3.9-bin

export HADOOP_CONF_DIR=${HADOOP_HOME}/etc/hadoop

export HIVE_CONF_DIR=${HIVE_HOME}/conf

export HIVE_AUX_JARS_PATH=${HIVE_HOME}/lib

配置hive服务端

修改hive-site.xml

cd $HIVE_HOME/conf

vim hive-site.xml

# 对一下做出修改,参照自己的配置文件

<!-- Hive临时文件,用于存储每个查询的临时/中间数据集,通常在完成查询后由配置单元客户端清除 -->

<property>

<name>hive.exec.local.scratchdir</name>

<value>/opt/soft_installed/apache-hive-2.3.9-bin/tmp</value>

<description>Local scratch space for Hive jobs</description>

</property>

<!-- Hive添加资源时的临时目录 -->

<property>

<name>hive.downloaded.resources.dir</name>

<value>/opt/soft_installed/apache-hive-2.3.9-bin/tmp/resources/${hive.session.id}_resources</value>

<description>Temporary local directory for added resources in the remote file system.</description>

</property>

<!-- Hive运行时结构化日志文件的位置 -->

<property>

<name>hive.querylog.location</name>

<value>/opt/soft_installed/apache-hive-2.3.9-bin/logs</value>

<description>Location of Hive run time structured log file</description>

</property>

<!-- 使用MySQL作为hive的元数据Metastore数据库 -->

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://node1:3306/hive?createDatabaseIfNotExist=true&useSSL=false</value>

<description>

JDBC connect string for a JDBC metastore.

To use SSL to encrypt/authenticate the connection, provide database-specific SSL flag in the connection URL.

For example, jdbc:postgresql://myhost/db?ssl=true for postgres database.

</description>

</property>

<!-- MySQL JDBC驱动程序类 -->

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

<description>Driver class name for a JDBC metastore</description>

</property>

<!-- 连接到MySQL服务器的用户名 -->

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>hive</value>

<description>Username to use against metastore database</description>

</property>

<!-- 连接MySQL服务器的密码 -->

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>你的密码</value>

<description>password to use against metastore database</description>

</property>

<!-- 初始化Hive数据库自动创建schema -->

<!-- 自动创建schema第一次启动hive时设定为true,之后改为false -->

<property>

<name>datanucleus.schema.autoCreateAll</name>

<value>true</value>

<description>Auto creates necessary schema on a startup if one doesn't exist. Set this to false, after creating it once.To enable auto create also set hive.metastore.schema.verification=false. Auto creation is not recommended for production use cases, run schematool command instead.</description>

</property>

<!-- 是否在 Hive 提示中包含当前数据库 -->

<property>

<name>hive.cli.print.current.db</name>

<value>true</value>

<description>Whether to include the current database in the Hive prompt.</description>

<!-- 打印输出中列的名称 -->

<property>

<name>hive.cli.print.header</name>

<value>true</value>

<description>Whether to print the names of the columns in query output.</description>

<!-- HiveServer2 Thrift 服务TCP节点 -->

<property>

<name>hive.server2.thrift.bind.host</name>

<value>node1</value>

<description>Bind host on which to run the HiveServer2 Thrift service.</description>

<property>

<name>hive.server2.logging.operation.enabled</name>

<value>true</value>

<description>When true, HS2 will save operation logs and make them available for clients</description>

</property>

<property>

<name>hive.server2.logging.operation.log.location</name>

<value>/opt/soft_installed/apache-hive-2.3.9-bin/logs/${system:user.name}/operation_logs</value>

<description>Top level directory where operation logs are stored if logging functionality is enabled</description>

</property>

<property>

<name>hive.server2.webui.host</name>

<value>node1</value>

<description>The host address the HiveServer2 WebUI will listen on</description>

</property>

<property>

<name>hive.server2.webui.port</name>

<value>10002</value>

<description>The port the HiveServer2 WebUI will listen on. This can beset to 0 or a negative integer to disable the web UI</description>

</property>

修改hive-log4j2.properties

cd $HIVE_HOME/conf

vim hive-log4j2.properties

property.hive.log.dir = /opt/soft_installed/apache-hive-2.3.9-bin/logs/${sys:user.name}

修改hadoop的core-site.xml

cd $HADOOP_HOME/etc/hadoop

vim core-site.xml

<!-- 表示任意节点使用hadoop集群用户root都能访问hdfs -->

<property>

<name>hadoop.proxyuser.root.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.root.groups</name>

<value>*</value>

</property>

创建Hive相关目录

# hdfs

hdfs dfs -mkdir -p /user/hive/warehouse

hdfs dfs -mkdir -p /tmp/hive

hdfs dfs -chmod 777 /user/hive/warehouse

hdfs dfs -chmod 777 /tmp/hive

# local

mkdir -p /opt/soft_installed/apache-hive-2.3.9-bin/tmp/resources

mkdir -p /opt/soft_installed/apache-hive-2.3.9-bin/logs

登录MySQL配置数据库和账户

[root@master ~]# mysql -u root -p

mysql> create user 'hive'@'%' identified by '你的密码';

mysql> grant all privileges on *.* to 'hive'@'%' with grant option;

mysql> alter user 'hive'@'%' identified with mysql_native_password by '你的密码';

mysql> flush privileges;

重启hadoop集群

初始化hive元数据库

[root@master scripts]# schematool -initSchema -dbType mysql

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/soft_installed/apache-hive-2.3.9-bin/lib/log4j-slf4j-impl-2.6.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/soft_installed/hadoop-2.7.3/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Metastore connection URL: jdbc:mysql://node1:3306/hive?createDatabaseIfNotExist=true&useSSL=false

Metastore Connection Driver : com.mysql.jdbc.Driver

Metastore connection User: hive

Loading class `com.mysql.jdbc.Driver'. This is deprecated. The new driver class is `com.mysql.cj.jdbc.Driver'. The driver is automatically registered via the SPI and manual loading of the driver class is generally unnecessary.

Starting metastore schema initialization to 2.3.0

Initialization script hive-schema-2.3.0.mysql.sql

Initialization script completed

schemaTool completed

[root@master scripts]#

[root@master scripts]# mysql -uroot -p

Enter password:

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 19

Server version: 8.0.30 MySQL Community Server - GPL

Copyright (c) 2000, 2022, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| GUNBIGDATA |

| bigdata19 |

| employees |

| hive |

| information_schema |

| mysql |

| performance_schema |

| sakila |

| sys |

+--------------------+

9 rows in set (0.00 sec)

mysql> use hive;

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

mysql> show tables;

+---------------------------+

| Tables_in_hive |

+---------------------------+

| AUX_TABLE |

| BUCKETING_COLS |

| CDS |

| COLUMNS_V2 |

| COMPACTION_QUEUE |

| COMPLETED_COMPACTIONS |

| COMPLETED_TXN_COMPONENTS |

| DATABASE_PARAMS |

| DBS |

| DB_PRIVS |

| DELEGATION_TOKENS |

| FUNCS |

| FUNC_RU |

| GLOBAL_PRIVS |

| HIVE_LOCKS |

| IDXS |

| INDEX_PARAMS |

| KEY_CONSTRAINTS |

| MASTER_KEYS |

| NEXT_COMPACTION_QUEUE_ID |

| NEXT_LOCK_ID |

| NEXT_TXN_ID |

| NOTIFICATION_LOG |

| NOTIFICATION_SEQUENCE |

| NUCLEUS_TABLES |

| PARTITIONS |

| PARTITION_EVENTS |

| PARTITION_KEYS |

| PARTITION_KEY_VALS |

| PARTITION_PARAMS |

| PART_COL_PRIVS |

| PART_COL_STATS |

| PART_PRIVS |

| ROLES |

| ROLE_MAP |

| SDS |

| SD_PARAMS |

| SEQUENCE_TABLE |

| SERDES |

| SERDE_PARAMS |

| SKEWED_COL_NAMES |

| SKEWED_COL_VALUE_LOC_MAP |

| SKEWED_STRING_LIST |

| SKEWED_STRING_LIST_VALUES |

| SKEWED_VALUES |

| SORT_COLS |

| TABLE_PARAMS |

| TAB_COL_STATS |

| TBLS |

| TBL_COL_PRIVS |

| TBL_PRIVS |

| TXNS |

| TXN_COMPONENTS |

| TYPES |

| TYPE_FIELDS |

| VERSION |

| WRITE_SET |

+---------------------------+

57 rows in set (0.01 sec)

mysql>

修改hive-site.xml,将自动创建hive元数据设置为false

cd $HIVE_HOME/conf

vim hive-site.xml

<property>

<name>datanucleus.schema.autoCreateAll</name>

<value>false</value>

<description>Auto creates necessary schema on a startup if one doesn't exist. Set this to false, after creating it once.To enable auto create also set hive.metastore.schema.verification=false. Auto creation is not recommended for production use cases, run schematool command instead.</description>

</property>

启动Hive

[root@master conf]# hive

which: no hbase in (/usr/local/bin:/usr/bin:/usr/local/sbin:/usr/sbin:/opt/soft_installed/jdk1.8.0_171/bin:/opt/soft_installed/jdk1.8.0_171/jre/bin:/opt/soft_installed/hadoop-2.7.3/bin:/opt/soft_installed/hadoop-2.7.3/sbin:/opt/soft_installed/zookeeper-3.4.5/bin:/opt/soft_installed/apache-hive-2.3.9-bin/bin:/home/lh/.local/bin:/home/lh/bin)

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/soft_installed/apache-hive-2.3.9-bin/lib/log4j-slf4j-impl-2.6.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/soft_installed/hadoop-2.7.3/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Logging initialized using configuration in file:/opt/soft_installed/apache-hive-2.3.9-bin/conf/hive-log4j2.properties Async: true

Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

hive (default)> show databases;

OK

database_name

default

Time taken: 4.683 seconds, Fetched: 1 row(s)

hive (default)> create database hdfs_bigdata_19;

OK

Time taken: 0.276 seconds

hive (default)> show databases;

OK

database_name

default

hdfs_bigdata_19

Time taken: 0.01 seconds, Fetched: 2 row(s)

hive (default)> use hdfs_bigdata_19;

OK

Time taken: 0.04 seconds

hive (hdfs_bigdata_19)> show tables;

OK

tab_name

Time taken: 0.048 seconds

hive (hdfs_bigdata_19)> create table cl(class string, name string, age int, sex string);

OK

Time taken: 1.097 seconds

hive (hdfs_bigdata_19)> show tables;

OK

tab_name

cl

Time taken: 0.019 seconds, Fetched: 1 row(s)

hive (hdfs_bigdata_19)> exit;

[root@master conf]#

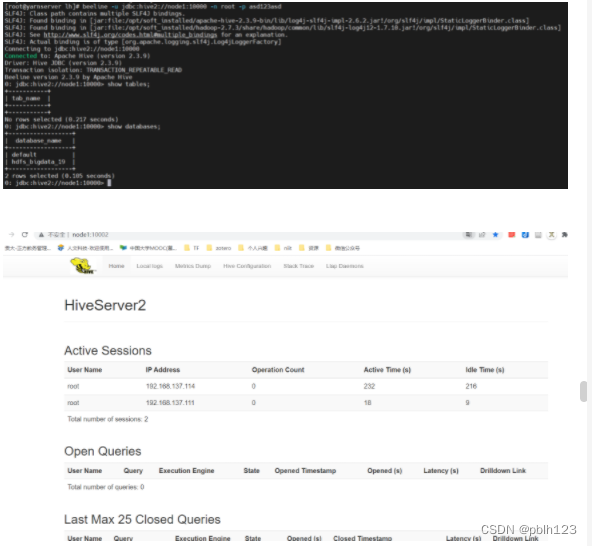

web端查看验证hive

debug

# mysql驱动类名更新

hive (default)> show databases;

Loading class `com.mysql.jdbc.Driver'. This is deprecated. The new driver class is `com.mysql.cj.jdbc.Driver'. The driver is automatically registered via the SPI and manual loading of the driver class is generally unnecessary.

OK

database_name

default

# 修改hive-site.xml

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.cj.jdbc.Driver</value>

<description>Driver class name for a JDBC metastore</description>

</property>

配置hive的远程模式

- Metastore

- HiveServer2

hive的远程模式下,node1作为hive的服务端,其他阶段作为hive的客户端

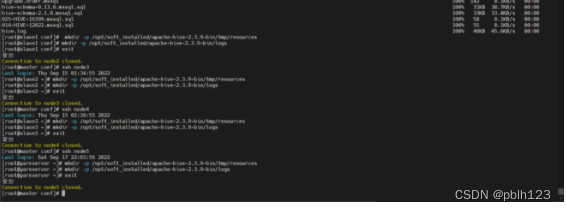

分发配置好的hive文件分发到节点node2

# 分发hive文件

scp -r /opt/soft_installed/apache-hive-2.3.9-bin/ node2:/opt/soft_installed/

# 分发/etc/profile

scp /etc/profile node2:/etc/

scp /etc/profile node3:/etc/

scp /etc/profile node4:/etc/

scp /etc/profile node5:/etc/

配置Hive客户端,先登录node2节点,修改hive-site.xml配置,

# 将连接mysql的配置删除或者注释

cd $HIVE_HOME/conf

vim hive-site.xml

<!-- hive客户端 不需要连接mysql的配置

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>你的密码</value>

<description>password to use against metastore database</description>

</property>

-->

<!-- hive客户端 不需要连接mysql的配置

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://node1:3306/hive?createDatabaseIfNotExist=true&useSSL=false</value>

<description>

JDBC connect string for a JDBC metastore.

To use SSL to encrypt/authenticate the connection, provide database-specific SSL flag in the connection URL.

For example, jdbc:postgresql://myhost/db?ssl=true for postgres database.

</description>

</property>

-->

<!-- hive客户端,不需要连接mysql的配置

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.cj.jdbc.Driver</value>

<description>Driver class name for a JDBC metastore</description>

</property>

-->

<!-- hive客户端,不需要连接mysql的配置

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>hive</value>

<description>Username to use against metastore database</description>

</property>

-->

# 增加hive客户端配置

<property>

<name>hive.metastore.warehouse.dir</name>

<value>/user/hive/warehouse</value>

<description>location of default database for the warehouse</description>

</property>

<property>

<name>hive.metastore.local</name>

<value>false</value>

<description>不是本地模式</description>

</property>

<property>

<name>hive.metastore.uris</name>

<value>thrift://node1:9083</value>

<description>Thrift URI for the remote metastore. Used by metastore client to connect to remote metastore.</description>

</property>

# 把node2的hive分发到node3,node4,node5

scp -r /opt/soft_installed/apache-hive-2.3.9-bin/ node3:/opt/soft_installed/

scp -r /opt/soft_installed/apache-hive-2.3.9-bin/ node4:/opt/soft_installed/

scp -r /opt/soft_installed/apache-hive-2.3.9-bin/ node5:/opt/soft_installed/

# 分别在node2-5节点创建hive的本地文件夹

mkdir -p /opt/soft_installed/apache-hive-2.3.9-bin/tmp/resources

mkdir -p /opt/soft_installed/apache-hive-2.3.9-bin/logs

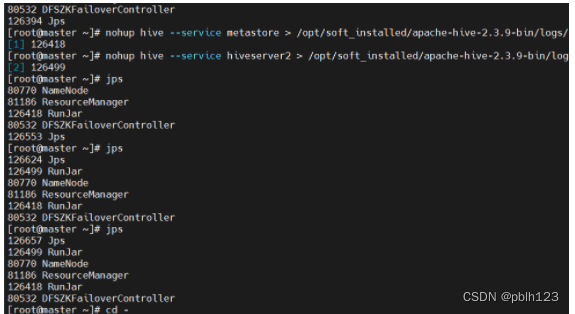

启动hive服务端

# node1 节点

nohup hive --service metastore > /opt/soft_installed/apache-hive-2.3.9-bin/logs/metastore.log 2>&1 &

# 推荐只启动hiveserver2

nohup hive --service hiveserver2 > /opt/soft_installed/apache-hive-2.3.9-bin/logs/hiveserver2.log 2>&1 &

启动hive客户端

# 方法一:metastore

[root@slave1 lh]# hive

which: no hbase in (/usr/local/bin:/usr/bin:/usr/local/sbin:/usr/sbin:/opt/soft_installed/jdk1.8.0_171/bin:/opt/soft_installed/jdk1.8.0_171/jre/bin:/opt/soft_installed/hadoop-2.7.3/bin:/opt/soft_installed/hadoop-2.7.3/sbin:/opt/soft_installed/zookeeper-3.4.5/bin:/opt/soft_installed/apache-hive-2.3.9-bin/bin:/home/lh/.local/bin:/home/lh/bin)

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/soft_installed/apache-hive-2.3.9-bin/lib/log4j-slf4j-impl-2.6.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/soft_installed/hadoop-2.7.3/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Logging initialized using configuration in file:/opt/soft_installed/apache-hive-2.3.9-bin/conf/hive-log4j2.properties Async: true

Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

hive (default)> show databases;

OK

database_name

default

hdfs_bigdata_19

Time taken: 12.842 seconds, Fetched: 2 row(s)

hive (default)>

# 方法二:beeline (推荐)

[lh@yarnserver ~]$ su

Password:

[root@yarnserver lh]# beeline

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/soft_installed/apache-hive-2.3.9-bin/lib/log4j-slf4j-impl-2.6.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/soft_installed/hadoop-2.7.3/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]

Beeline version 2.3.9 by Apache Hive

beeline> !connect jdbc:hive2://node1:10000

Connecting to jdbc:hive2://node1:10000

Enter username for jdbc:hive2://node1:10000: root

Enter password for jdbc:hive2://node1:10000: *********

Connected to: Apache Hive (version 2.3.9)

Driver: Hive JDBC (version 2.3.9)

Transaction isolation: TRANSACTION_REPEATABLE_READ

0: jdbc:hive2://node1:10000> show databases;

+------------------+

| database_name |

+------------------+

| default |

| hdfs_bigdata_19 |

+------------------+

# 第二种方法 一步到位

beeline -u jdbc:hive2://node1:10000 -n root -p asd123asd

#-u 连接地址 -n 账户 -p 密码

- 启动Hadoop

- 启动Hive

# 启动Hadoop

./home/lh/scripts/HA_hadoop.sh start

# 启动Hive

nohup hive --service hiveserver2 > /opt/soft_installed/apache-hive-2.3.9-bin/logs/hiveserver2.log 2>&1 &

Hive中建表

create table ods_t_bank(

id INT COMMENT '表自增主键',

age INT COMMENT '年龄',

job STRING COMMENT '工作类型',

marital STRING COMMENT '婚否',

education STRING COMMENT '教育程度',

credit STRING COMMENT '是否有信用卡',

housing STRING COMMENT '房贷',

loan STRING COMMENT '贷款',

contact STRING COMMENT '联系途径',

month_of_year STRING COMMENT '月份',

day_of_week STRING COMMENT '星期几',

duration INT COMMENT '持续时间',

campaign INT COMMENT '本次活动联系的次数',

pdays INT COMMENT '与上一次联系的时间间隔',

previous INT COMMENT '之前与客户联系的次数',

poutcome STRING COMMENT '之前市场活动的结果',

emp_var_rate DOUBLE COMMENT '就业变化速率',

cons_price_idx DOUBLE COMMENT '消费者物价指数',

cons_conf_idx DOUBLE COMMENT '消费者信心指数',

euribor3m DOUBLE COMMENT '欧元存款利率',

nr_employed DOUBLE COMMENT '职工人数',

y TINYINT COMMENT '是否有定期存款'

)ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t';

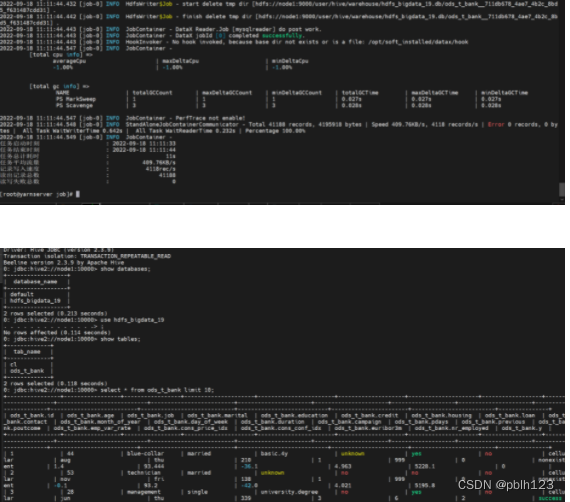

Beeline version 2.3.9 by Apache Hive

0: jdbc:hive2://node1:10000> show databases;

+------------------+

| database_name |

+------------------+

| default |

| hdfs_bigdata_19 |

+------------------+

2 rows selected (0.186 seconds)

0: jdbc:hive2://node1:10000> use hdfs_bigdata_19;

No rows affected (0.112 seconds)

0: jdbc:hive2://node1:10000> show tables;

+-----------+

| tab_name |

+-----------+

| cl |

+-----------+

1 row selected (1.13 seconds)

0: jdbc:hive2://node1:10000> create table ods_t_bank(

. . . . . . . . . . . . . .> id INT COMMENT '表自增主键',

. . . . . . . . . . . . . .> age INT COMMENT '年龄',

. . . . . . . . . . . . . .> job STRING COMMENT '工作类型',

. . . . . . . . . . . . . .> marital STRING COMMENT '婚否',

. . . . . . . . . . . . . .> education STRING COMMENT '教育程度',

. . . . . . . . . . . . . .> credit STRING COMMENT '是否有信用卡',

. . . . . . . . . . . . . .> housing STRING COMMENT '房贷',

. . . . . . . . . . . . . .> loan STRING COMMENT '贷款',

. . . . . . . . . . . . . .> contact STRING COMMENT '联系途径',

. . . . . . . . . . . . . .> month_of_year STRING COMMENT '月份',

. . . . . . . . . . . . . .> day_of_week STRING COMMENT '星期几',

. . . . . . . . . . . . . .> duration INT COMMENT '持续时间',

. . . . . . . . . . . . . .> campaign INT COMMENT '本次活动联系的次数',

. . . . . . . . . . . . . .> pdays INT COMMENT '与上一次联系的时间间隔',

. . . . . . . . . . . . . .> previous INT COMMENT '之前与客户联系的次数',

. . . . . . . . . . . . . .> poutcome STRING COMMENT '之前市场活动的结果',

. . . . . . . . . . . . . .> emp_var_rate DOUBLE COMMENT '就业变化速率',

. . . . . . . . . . . . . .> cons_price_idx DOUBLE COMMENT '消费者物价指数',

. . . . . . . . . . . . . .> cons_conf_idx DOUBLE COMMENT '消费者信心指数',

. . . . . . . . . . . . . .> euribor3m DOUBLE COMMENT '欧元存款利率',

. . . . . . . . . . . . . .> nr_employed DOUBLE COMMENT '职工人数',

. . . . . . . . . . . . . .> y TINYINT COMMENT '是否有定期存款'

. . . . . . . . . . . . . .> )ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t';

No rows affected (4.439 seconds)

0: jdbc:hive2://node1:10000> show tables;

+-------------+

| tab_name |

+-------------+

| cl |

| ods_t_bank |

+-------------+

2 rows selected (0.119 seconds)

0: jdbc:hive2://node1:10000> select * from ods_t_bank;

+----------------+-----------------+-----------------+---------------------+-----------------------+--------------------+---------------------+ ------------------+---------------------+---------------------------+-------------------------+----------------------+----------------------+-- -----------------+----------------------+----------------------+--------------------------+----------------------------+----------------------- ----+-----------------------+-------------------------+---------------+

| ods_t_bank.id | ods_t_bank.age | ods_t_bank.job | ods_t_bank.marital | ods_t_bank.education | ods_t_bank.credit | ods_t_bank.housing | ods_t_bank.loan | ods_t_bank.contact | ods_t_bank.month_of_year | ods_t_bank.day_of_week | ods_t_bank.duration | ods_t_bank.campaign | o ds_t_bank.pdays | ods_t_bank.previous | ods_t_bank.poutcome | ods_t_bank.emp_var_rate | ods_t_bank.cons_price_idx | ods_t_bank.cons_conf_i dx | ods_t_bank.euribor3m | ods_t_bank.nr_employed | ods_t_bank.y |

+----------------+-----------------+-----------------+---------------------+-----------------------+--------------------+---------------------+ ------------------+---------------------+---------------------------+-------------------------+----------------------+----------------------+-- -----------------+----------------------+----------------------+--------------------------+----------------------------+----------------------- ----+-----------------------+-------------------------+---------------+

+----------------+-----------------+-----------------+---------------------+-----------------------+--------------------+---------------------+ ------------------+---------------------+---------------------------+-------------------------+----------------------+----------------------+-- -----------------+----------------------+----------------------+--------------------------+----------------------------+----------------------- ----+-----------------------+-------------------------+---------------+

No rows selected (1.429 seconds)

0: jdbc:hive2://node1:10000>

- 下载DataX,Maven

- 配置Maven

- 配置DataX

安装datax 将下载后的datax.tar.gz压缩包直接解压后就可以使用了,但是前提是要安装好java、python的环境,。Python(推荐Python2.7.X)一定要为Python2,否则导致运行不成功

# 下载DataX

wget http://datax-opensource.oss-cn-hangzhou.aliyuncs.com/datax.tar.gz

tar -zxvf datax.tar.gz -C /opt/soft_installed/

# 下载maven

wget https://mirrors.tuna.tsinghua.edu.cn/apache/maven/maven-3/3.3.9/binaries/apache-maven-3.3.9-bin.tar.gz --no-check-certificate

tar -zxvf apache-maven-3.3.9-bin.tar.gz -C /opt/soft_installed/

# 配置Maven

vim /etc/profile

# 配置Maven

MAVEN_HOME=/opt/soft_installed/apache-maven-3.3.9

PATH=$PATH:$JAVA_HOME/bin:$JRE_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$ZOOKEEPER_HOME/bin:$HIVE_HOME/bin:$MAVEN_HOME/bin

export PATH JAVA_HOME JRE_HOME CLASSPATH HADOOP_HOME HADOOP_LOG_DIR YARN_LOG_DIR HADOOP_CONF_DIR HADOOP_HDFS_HOME HADOOP_YARN_HOME ZOOKEEPER_HOME HIVE_HOME MAVEN_HOME

source /etc/profile

[root@yarnserver apache-maven-3.3.9]# mvn -v

Apache Maven 3.3.9 (bb52d8502b132ec0a5a3f4c09453c07478323dc5; 2015-11-11T00:41:47+08:00)

Maven home: /opt/soft_installed/apache-maven-3.3.9

Java version: 1.8.0_171, vendor: Oracle Corporation

Java home: /opt/soft_installed/jdk1.8.0_171/jre

Default locale: en_US, platform encoding: UTF-8

OS name: "linux", version: "3.10.0-327.el7.x86_64", arch: "amd64", family: "unix"

# 配置DataX

# DataX自检

python /opt/soft_installed/datax/bin/datax.py /opt/soft_installed/datax/job/job.json

debug

经DataX智能分析,该任务最可能的错误原因是:

com.alibaba.datax.common.exception.DataXException: Code:[Common-00], Describe:[您提供的配置文件存在错误信息,请检查您的作业配置 .] - 配置信息错 误,您提供的配置文件[/opt/soft_installed/datax/plugin/reader/._drdsreader/plugin.json]不存在. 请检查您的配置文件.

at com.alibaba.datax.common.exception.DataXException.asDataXException(DataXException.java:26)

at com.alibaba.datax.common.util.Configuration.from(Configuration.java:95)

at com.alibaba.datax.core.util.ConfigParser.parseOnePluginConfig(ConfigParser.java:153)

at com.alibaba.datax.core.util.ConfigParser.parsePluginConfig(ConfigParser.java:125)

at com.alibaba.datax.core.util.ConfigParser.parse(ConfigParser.java:63)

at com.alibaba.datax.core.Engine.entry(Engine.java:137)

at com.alibaba.datax.core.Engine.main(Engine.java:204)

# 解决方案

[root@yarnserver datax]# ls

bin conf job lib log log_perf plugin script tmp

[root@yarnserver datax]# cd plugin/

[root@yarnserver plugin]# ls

reader writer

[root@yarnserver plugin]# cd reader/

[root@yarnserver reader]# rm -rf ./._*

[root@yarnserver reader]# cd ../writer/

[root@yarnserver writer]# rm -rf ./._*

配置mysql2hive_t_bank.json文件

{

"job": {

"content": [{

"reader": {

"name": "mysqlreader",

"parameter": {

"username": "hive",

"password": "你的密码",

"connection": [{

"jdbcUrl": [

"jdbc:mysql://node1:3306/bigdata19"

],

"querySql": [

"select id, age, job, marital, education, credit, housing, loan, contact, month_of_year, day_of_week, duration, campaign, pdays, previous, poutcome, emp_var_rate, cons_price_idx, cons_conf_idx, euribor3m, nr_employed, y from t_bank "

]

}]

}

},

"writer": {

"name": "hdfswriter",

"parameter": {

"column": [{

"name": "id",

"type": "INT"

},

{

"name": "age",

"type": "INT"

},

{

"name": "job",

"type": "STRING"

},

{

"name": "marital",

"type": "STRING"

},

{

"name": "education",

"type": "STRING"

},

{

"name": "credit",

"type": "STRING"

},

{

"name": "housing",

"type": "STRING"

},

{

"name": "loan",

"type": "STRING"

},

{

"name": "contact",

"type": "STRING"

},

{

"name": "month_of_year",

"type": "STRING"

},

{

"name": "day_of_week",

"type": "STRING"

},

{

"name": "duration",

"type": "INT"

},

{

"name": "campaign",

"type": "INT"

},

{

"name": "pdays",

"type": "INT"

},

{

"name": "previous",

"type": "INT"

},

{

"name": "poutcome",

"type": "STRING"

},

{

"name": "emp_var_rate",

"type": "DOUBLE"

},

{

"name": "cons_price_idx",

"type": "DOUBLE"

},

{

"name": "cons_conf_idx",

"type": "DOUBLE"

},

{

"name": "euribor3m",

"type": "DOUBLE"

},

{

"name": "nr_employed",

"type": "DOUBLE"

},

{

"name": "y",

"type": "TINYINT"

}

],

"compress": "gzip",

"defaultFS": "hdfs://node1:9000",

"fieldDelimiter": "\t",

"fileName": "user",

"fileType": "text",

"path": "/user/hive/warehouse/hdfs_bigdata_19.db/ods_t_bank",

"writeMode": "append"

}

}

}],

"setting": {

"speed": {

"channel": "1"

}

}

}

}

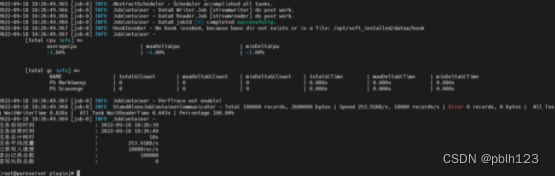

启动DataX导入数据

python /opt/soft_installed/datax/bin/datax.py /opt/soft_installed/datax/job/mysql2hive_t_bank.json

debug

2022-09-18 10:54:27.767 [job-0] ERROR RetryUtil - Exception when calling callable, 即将尝试执行第1次重试.本次重试计划等待[1000]ms,实际等待[1000]ms, 异常Msg:[DataX无法连接对应的数据库,可能原因是:1) 配置的ip/port/database/jdbc错误,无法连接。2) 配置的username/password错误,鉴权失败。请和DBA确认该数据库的连接信息是否正确。]

2022-09-18 10:54:27.772 [job-0] WARN DBUtil - test connection of [jdbc:mysql://192.168.137.110:3306/bigdata19] failed, for Code:[DBUtilErrorCode-10], Description:[连接数据库失败. 请检查您的 账号、密码、数据库名称、IP、Port或者向 DBA 寻求帮助(注意网络环境).]. - 具体错误信息为:com.mysql.jdbc.exceptions.jdbc4.MySQLNonTransientConnectionException: Could not create connection to database server..

#更换MySQL驱动包

# datax/plugin/reader/mysqlreader/libs

# datax/plugin/writer/mysqlreader/libs

# 将高版本的MySQL驱动包上传到该文件夹下

[root@master softs]# scp mysql-connector-java-8.0.30.jar node5:/opt/soft_installed/datax/plugin/reader/mysqlreader/libs

mysql-connector-java-8.0.30.jar 100% 2455KB 2.4MB/s 00:00

[root@master softs]# scp mysql-connector-java-8.0.30.jar node5:/opt/soft_installed/datax/plugin/writer/mysqlwriter/libs

mysql-connector-java-8.0.30.jar 100% 2455KB 2.4MB/s 00:00

hive服务设置一键启动

[root@master scripts]# cat onekeyhive.sh

#!/bin/bash

HIVE_LOG_DIR=$HIVE_HOME/logs

mkdir -p $HIVE_LOG_DIR

#检查进程是否运行正常,参数1为进程名,参数2为进程端口

function check_process()

{

pid=$(ps -ef 2>/dev/null | grep -v grep | grep -i $1 | awk '{print $2}')

ppid=$(netstat -nltp 2>/dev/null | grep $2 | awk '{print $7}' | cut -d '/' -f 1)

echo $pid

[[ "$pid" =~ "$ppid" ]] && [ "$ppid" ] && return 0 || return 1

}

function hive_start()

{

metapid=$(check_process HiveMetastore 9083)

cmd="nohup hive --service metastore >$HIVE_LOG_DIR/metastore.log 2>&1 &"

cmd=$cmd" sleep 4; hdfs dfsadmin -safemode wait >/dev/null 2>&1"

[ -z "$metapid" ] && eval $cmd || echo "Metastroe服务已启动"

server2pid=$(check_process HiveServer2 10000)

cmd="nohup hive --service hiveserver2 >$HIVE_LOG_DIR/hiveServer2.log 2>&1 &"

[ -z "$server2pid" ] && eval $cmd || echo "HiveServer2服务已启动"

}

function hive_stop()

{

metapid=$(check_process HiveMetastore 9083)

[ "$metapid" ] && kill $metapid || echo "Metastore服务未启动"

server2pid=$(check_process HiveServer2 10000)

[ "$server2pid" ] && kill $server2pid || echo "HiveServer2服务未启动"

}

case $1 in

"start")

hive_start

;;

"stop")

hive_stop

;;

"restart")

hive_stop

sleep 2

hive_start

;;

"status")

check_process HiveMetastore 9083 >/dev/null && echo "Metastore服务运行正常" || echo "Metastore服务运行异常"

check_process HiveServer2 10000 >/dev/null && echo "HiveServer2服务运行正常" || echo "HiveServer2服务运行异常"

;;

*)

echo Invalid Args!

echo 'Usage: '$(basename $0)' start|stop|restart|status'

;;

esac

498

498

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?