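ELK Log Collection System (Part 1)

1: Package download location

All packages used in this article can be downloaded from:

Link: https://pan.baidu.com/s/1J2rbPZkWEfg_M8k3W8jQPQ

Extraction code: x3i9

2: Environment preparation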

For installing Elasticsearch, see: Elasticsearch cluster

| IP | OS | Hardware | Software deployed |
|---|---|---|---|
| 172.17.2.239 | CentOS 7.4 | 2 CPU, 4 GB RAM | elasticsearch 6.6.0, kibana 6.6.0, nginx, filebeat |
| 172.17.2.240 | CentOS 7.4 | 2 CPU, 4 GB RAM | nginx, filebeat |

3: Kibana installation and configuration

[root@node01 tools]# yum localinstall -y kibana-6.6.0-x86_64.rpm

Kibana configuration file:

[root@node01 kibana]# grep '^[a-z]' /etc/kibana/kibana.yml
server.port: 5601
server.host: "172.17.2.239"
server.name: "node01.adminba.com" # the machine's hostname
elasticsearch.hosts: ["http://localhost:9200"]
kibana.index: ".kibana"

Start Kibana:

[root@node01 kibana]# systemctl start kibana

Once it has started, open http://IP:5601 in a browser to reach Kibana.

4: Install nginx

yum install nginx httpd-tools -y

Start nginx and run a load test against it:

[root@node01 ~]# systemctl start nginx

[root@node01 ~]# ab -c 10 -n 100 http://172.17.2.239/ # the only goal is to make nginx write log entries

5: Filebeat installation and configuration

[root@node01 tools]# yum localinstall -y filebeat-6.6.0-x86_64.rpm

5.1 Configure Filebeat to collect nginx logs

Filebeat configuration file:

[root@node01 ~]# cat /etc/filebeat/filebeat.yml
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/nginx/access.log # path to the nginx access log
output.elasticsearch:
  hosts: ["172.17.2.239:9200"]

Start Filebeat:

[root@node01 ~]# systemctl start filebeat

Open es-head again; a filebeat-6.6.0-* index should now be listed.
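If es-head is not at hand, the same check can be done from the command line. The sketch below is only an illustration: the helper names `es_indices_url` and `list_indices` are made up for this article, and it assumes curl is installed and Elasticsearch answers on the address used in the configuration above.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not part of Filebeat or Elasticsearch).

# Build the _cat/indices URL for an Elasticsearch host and an index pattern.
es_indices_url() {
    local host="$1" pattern="$2"
    printf 'http://%s/_cat/indices/%s?v\n' "$host" "$pattern"
}

# List the matching indices; needs curl and a reachable Elasticsearch.
list_indices() {
    curl -s "$(es_indices_url "$1" "$2")"
}

# Example (not executed here):
#   list_indices 172.17.2.239:9200 'filebeat-*'
```

Before starting the service it can also help to run `filebeat test config` and `filebeat test output`, which check the configuration file and the connection to the configured output.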

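5.2 Kibana setup

After an index pattern for the filebeat index has been created in Kibana, the nginx logs can be browsed there.

6: Collect nginx logs in JSON format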

First modify nginx.conf so that nginx can write its log in JSON format:

[root@node01 nginx]# cat nginx.conf
http {
    log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                    '$status $body_bytes_sent "$http_referer" '
                    '"$http_user_agent" "$http_x_forwarded_for"';

    # Add the json log_format below
    log_format json '{ "time_local": "$time_local", '
                    '"remote_addr": "$remote_addr", '
                    '"referer": "$http_referer", '
                    '"request": "$request", '
                    '"status": $status, '
                    '"bytes": $body_bytes_sent, '
                    '"agent": "$http_user_agent", '
                    '"x_forwarded": "$http_x_forwarded_for", '
                    '"up_addr": "$upstream_addr",'
                    '"up_host": "$upstream_http_host",'
                    '"upstream_time": "$upstream_response_time",'
                    '"request_time": "$request_time"'
                    ' }';

    access_log /var/log/nginx/access.log json; # changed to json here

    # (rest of the http block unchanged)
}

Clear the existing nginx log:

[root@node01 nginx]# > /var/log/nginx/access.log

Restart nginx, then send some test requests so that it writes new log entries:

[root@node02 ~]# for i in {0..100};do curl -I http://172.17.2.239; done

Look at the log that nginx now writes:

[root@node01 nginx]# tail -f /var/log/nginx/access.log

{ "time_local": "12/Mar/2020:13:17:27 +0800", "remote_addr": "172.17.2.240", "referer": "-", "request": "HEAD / HTTP/1.1", "status": 200, "bytes": 0, "agent": "curl/7.29.0", "x_forwarded": "-", "up_addr": "-","up_host": "-","upstream_time": "-","request_time": "0.000" }
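Old plain-text lines left in the file would fail JSON decoding later on, so a quick sanity check before pointing Filebeat at the log can be useful. The following is a rough sketch only: `count_non_json_lines` is a name invented here, and the test is purely about the line shape (starts with `{`, ends with `}`), not a real JSON parse.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print how many lines of a file do NOT look like
# a JSON object, i.e. do not start with "{" and end with "}".
count_non_json_lines() {
    grep -cvE '^\{.*\}$' "$1"
}

# Example (not executed here):
#   count_non_json_lines /var/log/nginx/access.log
```

A result of 0 suggests that every line has the expected shape; anything else points at leftover entries in the old format.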

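Delete the filebeat index in es-head.

Delete the index pattern in Kibana.

Modify the Filebeat configuration file:

[root@node01 filebeat]# cat filebeat.yml
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/nginx/access.log
  json.keys_under_root: true
  json.overwrite_keys: true
  # The two json.* lines above make Filebeat decode each line as JSON
  # and place the decoded keys at the top level of the event sent to ES
output.elasticsearch:
  hosts: ["172.17.2.239:9200"]

Restart Filebeat:

systemctl restart filebeat.service

Request pages from nginx again so that new log entries are written, then recreate the index pattern in Kibana. Each JSON key in the log line now shows up as its own field.

7: Collect nginx logs from multiple servers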

Add one more virtual machine here, for three in total.

| IP | OS | Hardware | Software deployed |
|---|---|---|---|
| 172.17.2.239 | CentOS 7.4 | 2 CPU, 4 GB RAM | elasticsearch 6.6.0, kibana 6.6.0, nginx, filebeat |
| 172.17.2.240 | CentOS 7.4 | 2 CPU, 4 GB RAM | nginx, filebeat |
| 172.17.2.241 | CentOS 7.4 | 2 CPU, 4 GB RAM | nginx, filebeat |

Filebeat configuration file on 172.17.2.239:

[root@node01 ~]# cat /etc/filebeat/filebeat.yml
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/nginx/access.log
  json.keys_under_root: true
  json.overwrite_keys: true
setup.kibana:
  host: "172.17.2.239:5601"
output.elasticsearch:
  hosts: ["172.17.2.239:9200"]
  index: "nginx-access-%{[beat.version]}-%{+yyyy.MM}"
setup.template.name: "nginx"
setup.template.pattern: "nginx-*"
setup.template.enabled: false
setup.template.overwrite: true
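The `index` setting above is a format string: `%{[beat.version]}` is replaced by the Filebeat version and `%{+yyyy.MM}` by the year and month of the event timestamp, so one index is created per month. As a small illustration of what the resulting name looks like, here is a sketch with a made-up helper, `render_monthly_index`; it only mimics the naming and is not how Filebeat computes it internally.

```shell
#!/usr/bin/env bash
# Hypothetical helper: render "<prefix>-<beat version>-<yyyy.MM>" from an
# ISO-8601 timestamp such as 2020-03-12T05:17:27Z.
render_monthly_index() {
    local prefix="$1" version="$2" ts="$3"
    local year="${ts%%-*}"      # text before the first "-"  (e.g. 2020)
    local rest="${ts#*-}"       # text after the first "-"   (e.g. 03-12T05:17:27Z)
    local month="${rest%%-*}"   # text before the next "-"   (e.g. 03)
    printf '%s-%s-%s.%s\n' "$prefix" "$version" "$year" "$month"
}

# Example:
#   render_monthly_index nginx-access 6.6.0 2020-03-12T05:17:27Z
```

With the values used in this article, events from March 2020 would end up in an index named nginx-access-6.6.0-2020.03.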

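Copy the two configuration files, /etc/filebeat/filebeat.yml and /etc/nginx/nginx.conf, to the other two hosts:

scp /etc/nginx/nginx.conf root@172.17.2.240:/etc/nginx/nginx.conf
scp /etc/nginx/nginx.conf root@172.17.2.241:/etc/nginx/nginx.conf
scp /etc/filebeat/filebeat.yml root@172.17.2.240:/etc/filebeat/filebeat.yml
scp /etc/filebeat/filebeat.yml root@172.17.2.241:/etc/filebeat/filebeat.yml

Make sure the Filebeat and nginx configuration files are identical on all three hosts.

Run a load test against each of the other two hosts so that nginx writes log entries:

ab -n 20 -c 20 http://172.17.2.240/node02
ab -n 20 -c 20 http://172.17.2.241/node03

Next, add collection of the nginx error log. The access log and the error log need to be kept apart, each in its own index.

Modify the Filebeat configuration as follows (then sync it to the other two machines):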

[root@node01 filebeat]# cat filebeat.yml
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/nginx/access.log
  json.keys_under_root: true
  json.overwrite_keys: true
  tags: ["access"]
- type: log
  enabled: true
  paths:
    - /var/log/nginx/error.log
  tags: ["error"]
setup.kibana:
  host: "172.17.2.239:5601"
output.elasticsearch:
  hosts: ["172.17.2.239:9200"]
  indices:
    - index: "nginx_access-%{[beat.version]}-%{+yyyy.MM.dd}"
      when.contains:
        tags: "access"
    - index: "nginx_error-%{[beat.version]}-%{+yyyy.MM.dd}"
      when.contains:
        tags: "error"
setup.template.name: "nginx"
setup.template.pattern: "nginx_*"
setup.template.enabled: false
setup.template.overwrite: true

8: Collect Tomcat logs

8.1 Install Tomcat

yum install tomcat tomcat-webapps tomcat-admin-webapps tomcat-docs-webapp tomcat-javadoc -y

Start it and check:

[root@ ~]# systemctl start tomcat
[root@ ~]# systemctl status tomcat
[root@ ~]# lsof -i:8080
COMMAND   PID   USER   FD  TYPE DEVICE SIZE/OFF NODE NAME
java    18915 tomcat  49u  IPv6  61950      0t0  TCP *:webcache (LISTEN)

8.2 Switch the Tomcat access log to JSON

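# Change line 139 to the following configuration.
# Inside the pattern attribute, every double quote has to be written as &quot; so that server.xml stays valid XML.

[root@node01 ~]# cat -n /etc/tomcat/server.xml
137 <Valve className="org.apache.catalina.valves.AccessLogValve" directory="logs"
138 prefix="localhost_access_log." suffix=".txt"
139 pattern="{&quot;clientip&quot;:&quot;%h&quot;,&quot;ClientUser&quot;:&quot;%l&quot;,&quot;authenticated&quot;:&quot;%u&quot;,&quot;AccessTime&quot;:&quot;%t&quot;,&quot;method&quot;:&quot;%r&quot;,&quot;status&quot;:&quot;%s&quot;,&quot;SendBytes&quot;:&quot;%b&quot;,&quot;Query?string&quot;:&quot;%q&quot;,&quot;partner&quot;:&quot;%{Referer}i&quot;,&quot;AgentVersion&quot;:&quot;%{User-Agent}i&quot;}"/>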
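Because the `pattern` value sits inside an XML attribute that is itself delimited by double quotes, the quotes of the JSON template have to be written as `&quot;`. Typing that by hand is error-prone, so one option is to write the JSON template normally and convert it. The helper below, `xml_escape_quotes`, is a hypothetical name introduced for this sketch; it only handles double quotes, which is all this particular pattern needs.

```shell
#!/usr/bin/env bash
# Hypothetical helper: replace every double quote in the argument with &quot;
# so the result can be pasted into a double-quoted XML attribute.
xml_escape_quotes() {
    printf '%s\n' "$1" | sed 's/"/\&quot;/g'
}

# Example:
#   xml_escape_quotes '{"clientip":"%h","status":"%s"}'
```

The output can then be pasted between the outer quotes of `pattern="..."` in server.xml.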

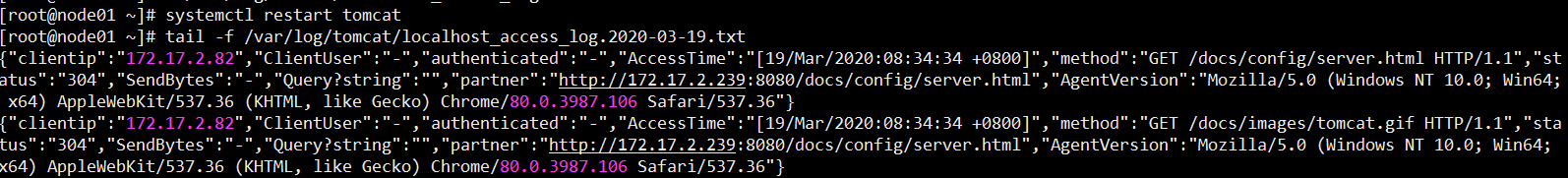

[root@node01 ~]# > /var/log/tomcat/localhost_access_log.2020-03-19.txt

[root@node01 ~]# systemctl restart tomcat

After the restart, new requests are logged in JSON format.

Modify the Filebeat configuration file:

[root@node01 ~]# cat /etc/filebeat/filebeat.yml

filebeat.inputs:
######################### nginx #######################
- type: log
  enabled: true
  paths:
    - /var/log/nginx/access.log
  json.keys_under_root: true
  json.overwrite_keys: true
  tags: ["access"]
- type: log
  enabled: true
  paths:
    - /var/log/nginx/error.log
  tags: ["error"]
######################### tomcat #######################
- type: log
  enabled: true
  paths:
    - /var/log/tomcat/localhost_access_log.*.txt
  json.keys_under_root: true
  json.overwrite_keys: true
  tags: ["tomcat"]
setup.kibana:
  host: "172.17.2.239:5601"
output.elasticsearch:
  hosts: ["172.17.2.239:9200"]
  indices:
    - index: "nginx_access-%{[beat.version]}-%{+yyyy.MM.dd}"
      when.contains:
        tags: "access"
    - index: "nginx_error-%{[beat.version]}-%{+yyyy.MM.dd}"
      when.contains:
        tags: "error"
    - index: "tomcat-%{[beat.version]}-%{+yyyy.MM.dd}"
      when.contains:
        tags: "tomcat"
setup.template.name: "nginx"
setup.template.pattern: "nginx_*"
setup.template.enabled: false
setup.template.overwrite: true

9: Collect Java logs

Java log output is verbose, and a single event such as a stack trace spans several lines. Those lines have to be merged into one event, which is what the multiline matching mode is for.

Elasticsearch itself is written in Java, so its own log can be collected directly as an example.

[root@node01 filebeat]# cat filebeat.yml

filebeat.inputs:
######################### nginx #######################
- type: log
  enabled: true
  paths:
    - /var/log/nginx/access.log
  json.keys_under_root: true
  json.overwrite_keys: true
  tags: ["access"]
- type: log
  enabled: true
  paths:
    - /var/log/nginx/error.log
  tags: ["error"]
######################### tomcat #######################
- type: log
  enabled: true
  paths:
    - /var/log/tomcat/localhost_access_log.*.txt
  json.keys_under_root: true
  json.overwrite_keys: true
  tags: ["tomcat"]
######################### es #######################
- type: log
  enabled: true
  paths:
    - /var/log/elasticsearch/elasticsearch.log
  tags: ["es"]
  multiline.pattern: '^\['
  multiline.negate: true
  multiline.match: after
setup.kibana:
  host: "172.17.2.239:5601"
output.elasticsearch:
  hosts: ["172.17.2.239:9200"]
  indices:
    - index: "nginx_access-%{[beat.version]}-%{+yyyy.MM.dd}"
      when.contains:
        tags: "access"
    - index: "nginx_error-%{[beat.version]}-%{+yyyy.MM.dd}"
      when.contains:
        tags: "error"
    - index: "tomcat-%{[beat.version]}-%{+yyyy.MM.dd}"
      when.contains:
        tags: "tomcat"
    - index: "es-%{[beat.version]}-%{+yyyy.MM.dd}"
      when.contains:
        tags: "es"
setup.template.name: "nginx"
setup.template.pattern: "nginx_*"
setup.template.enabled: false
setup.template.overwrite: true
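The three `multiline.*` settings in the es input read as: a line that does not match `^\[` (negate: true) is appended after (match: after) the most recent line that does. In other words, every line starting with `[` opens a new event and everything up to the next such line belongs to it. The awk sketch below imitates that grouping so the effect can be previewed on a log file. `join_multiline` is a hypothetical helper, and it joins lines with " | " for readability, whereas Filebeat keeps the line breaks inside the event.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print one output line per multiline event.
# A line starting with "[" opens a new event; any other line is
# appended to the event that is currently open.
join_multiline() {
    awk '
        /^\[/ { if (event != "") print event; event = $0; next }
              { event = (event == "" ? $0 : event " | " $0) }
        END   { if (event != "") print event }
    ' "$1"
}

# Example (not executed here):
#   join_multiline /var/log/elasticsearch/elasticsearch.log | head
```

If a stack trace shows up as a single joined line here, the same pattern should group it into one event in Filebeat.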

10: Collect Docker logs

10.1 Configure Filebeat to collect the log of a single Docker container

Official documentation:

https://www.elastic.co/guide/en/beats/filebeat/6.7/filebeat-input-docker.html

Start an nginx container:

docker run --name nginx -p 80:80 -d nginx

First look up the container's ID:

docker inspect nginx|grep -w 'Id'

Configuration file:

filebeat.inputs:
- type: docker
  containers.ids:
    - '7a0e13848bd2ff028c8a1c0c299a3b606d177905e8b30b7a2a6603952953d939' # container ID
  tags: ["docker-nginx"]
output.elasticsearch:
  hosts: ["localhost:9200"]
  index: "docker-nginx-%{[beat.version]}-%{+yyyy.MM.dd}"
setup.template.name: "docker"
setup.template.pattern: "docker-*"
setup.template.enabled: false
setup.template.overwrite: true
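The docker input locates the log through the container ID: with Docker's default json-file logging driver, each container writes to a file under /var/lib/docker/containers named after its full ID. The sketch below spells out that path so it can be checked by hand. `container_log_path` is a hypothetical helper, and the root directory is an assumption that holds only for a default Docker installation.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the json-file log path for a container ID.
# The second argument overrides the containers directory (assumed default below).
container_log_path() {
    local id="$1" root="${2:-/var/lib/docker/containers}"
    printf '%s/%s/%s-json.log\n' "$root" "$id" "$id"
}

# Example (not executed here): look up the full ID, then inspect the log file.
#   id=$(docker inspect --format '{{.Id}}' nginx)
#   tail -1 "$(container_log_path "$id")"
```

`docker inspect --format '{{.Id}}'` prints only the ID, which avoids picking it out of the grep output by eye.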

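10.2 Configure Filebeat to collect logs from multiple containers by label

Newer versions of Filebeat add an input for collecting the logs of multiple containers:

https://www.elastic.co/guide/en/beats/filebeat/7.2/filebeat-input-container.html

If there are several Docker images, or a new image has been committed, specifying container IDs directly becomes inconvenient.

Handling logs from multiple containers: the logs are still just files, stored in the default directory as JSON files named <container-id>-json.log.

Every container has a different ID, though. To tell apart the containers that run different services, docker-compose can attach labels to each container. Filebeat then parses the container logs as ordinary JSON and ships them to ES.

Install docker-compose:

yum install -y python2-pip
pip install docker-compose
docker-compose version

Commands to speed up pip by switching to a mirror:

pip install -i https://pypi.tuna.tsinghua.edu.cn/simple pip -U
pip config set global.index-url https://pypi.tuna.tsinghua.edu.cn/simple

Write the docker-compose.yml file:

cat docker-compose.yml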

version: '3'
services:
  nginx:
    image: nginx
    # set the label
    labels:
      service: nginx
    # tell the logging driver to record the "service" label in each log entry
    logging:
      options:
        labels: "service"
    ports:
      - "8080:80"
  db:
    image: nginx:latest
    # set the label
    labels:
      service: db
    # tell the logging driver to record the "service" label in each log entry
    logging:
      options:
        labels: "service"
    ports:
      - "80:80"

Run docker-compose.yml:

docker-compose up -d

Check that the log entries now carry the label:

tail -1 /var/lib/docker/containers/b2c1f4f7f5a2967fe7d12c1db124ae41f009ec663c71608575a4773beb6ca5f8/b2c1f4f7f5a2967fe7d12c1db124ae41f009ec663c71608575a4773beb6ca5f8-json.log

{"log":"192.168.47.1 - - [23/May/2019:13:22:32 +0000] \"GET / HTTP/1.1\" 304 0 \"-\" \"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_14_5) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/74.0.3729.157 Safari/537.36\" \"-\"\n","stream":"stdout","attrs":{"service":"nginx"},"time":"2019-05-23T13:22:32.478708392Z"}
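The label appears in the `attrs` object of every log entry, as in the sample line above. To check several containers quickly, the value can be pulled out with sed. This is a rough sketch: `log_service_label` is a hypothetical helper, and it assumes `service` is the only label recorded, as in the docker-compose.yml of this article. A JSON-aware tool would be more robust.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read docker json-file log lines on stdin and print
# the value of attrs.service for each line that has it.
log_service_label() {
    sed -n 's/.*"attrs":{"service":"\([^"]*\)"}.*/\1/p'
}

# Example (not executed here): show the label of the last entry of every container.
#   for f in /var/lib/docker/containers/*/*-json.log; do
#       tail -1 "$f" | log_service_label
#   done
```

Lines without an `attrs` object produce no output, which makes containers started without the logging option easy to spot.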

Configure Filebeat:

cat /etc/filebeat/filebeat.yml
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/lib/docker/containers/*/*-json.log
  json.keys_under_root: true
  json.overwrite_keys: true
output.elasticsearch:
  hosts: ["172.17.2.239:9200"]
  indices:
    - index: "docker-nginx-%{[beat.version]}-%{+yyyy.MM.dd}"
      when.contains:
        attrs.service: "nginx"
    - index: "docker-db-%{[beat.version]}-%{+yyyy.MM.dd}"
      when.contains:
        attrs.service: "db"
setup.template.name: "docker"
setup.template.pattern: "docker-*"
setup.template.enabled: false
setup.template.overwrite: true

10.3 Create different indices based on both service type and log type

At this point logs are collected per service, but error output and normal output are still mixed together and hard to tell apart. The conditions can be refined so that a separate index is created for each combination of service and log type.

Filebeat configuration file:

[root@node01 ~]# cat /etc/filebeat/filebeat.yml
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/lib/docker/containers/*/*-json.log
  json.keys_under_root: true
  json.overwrite_keys: true
output.elasticsearch:
  hosts: ["172.17.2.239:9200"]
  indices:
    - index: "docker-nginx-access-%{[beat.version]}-%{+yyyy.MM.dd}"
      when.contains:
        attrs.service: "nginx"
        stream: "stdout"
    - index: "docker-nginx-error-%{[beat.version]}-%{+yyyy.MM.dd}"
      when.contains:
        attrs.service: "nginx"
        stream: "stderr"
    - index: "docker-db-access-%{[beat.version]}-%{+yyyy.MM.dd}"
      when.contains:
        attrs.service: "db"
        stream: "stdout"
    - index: "docker-db-error-%{[beat.version]}-%{+yyyy.MM.dd}"
      when.contains:
        attrs.service: "db"
        stream: "stderr"
setup.template.name: "docker"
setup.template.pattern: "docker-*"
setup.template.enabled: false
setup.template.overwrite: true
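The `indices` list above works like a lookup table keyed on two fields: the `service` label and the output stream. The first rule whose conditions all hold decides the index, and an event that matches no rule goes to the index named by the `index` setting, which defaults to a filebeat-* index. The sketch below restates that table as a shell `case` statement. `docker_index_prefix` is a hypothetical helper, and it compares exact values, while `when.contains` in Filebeat is a substring match.

```shell
#!/usr/bin/env bash
# Hypothetical helper: map (service label, stream) to the index prefix
# used by the rules above. Prints "filebeat" when no rule applies.
docker_index_prefix() {
    local service="$1" stream="$2"
    case "$service:$stream" in
        nginx:stdout) echo "docker-nginx-access" ;;
        nginx:stderr) echo "docker-nginx-error" ;;
        db:stdout)    echo "docker-db-access" ;;
        db:stderr)    echo "docker-db-error" ;;
        *)            echo "filebeat" ;;   # no rule matched: default index
    esac
}

# Example:
#   docker_index_prefix nginx stderr
```

Writing the rules out this way makes it easier to see which label and stream combinations are still uncovered before adding another service.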
