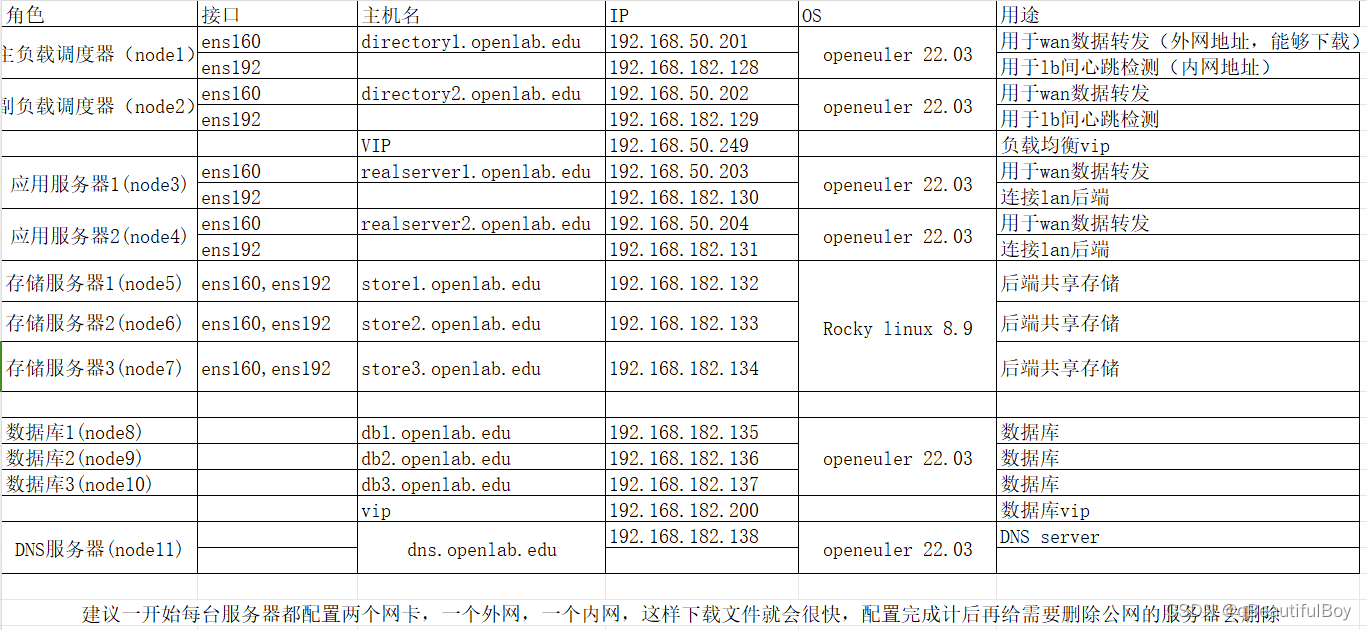

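Cluster Implementation

The software stack is divided into a load-balancing layer, a web layer, a database layer, and a shared-storage layer.

Database:

keepalived+haproxy+pxc(MariaDB Galera Cluster)

Shared storage:

Ceph distributed cluster, CephFS

Web layer:

tomcat

Load balancing:

nginx+keepalived

DNS: internal DNS resolution and time server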

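Environment Setup

1. External and internal IPs

First, configure an external IP and an internal IP on every server.

2. Set the hostname

hostnamectl set-hostname xxxxx

Give each host a domain name that matches the DNS records configured later.

3. Install common tools

dnf install -y wget bash-completion lrzsz vim net-tools tree

4. Disable SELinux and the firewall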

sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

systemctl disable --now firewalld
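The SELinux edit can be tried on a scratch copy before touching the real file. The sketch below is only an illustration: the helper name `set_selinux_mode` is made up here, and it assumes GNU sed as shipped on RHEL-family systems.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of the original steps): set the SELINUX=
# line of an SELinux config file to the given mode.
set_selinux_mode() {
  local file=$1 mode=$2
  # Matches only the line starting with "SELINUX="; "SELINUXTYPE=" is left alone.
  sed -i "s/^SELINUX=.*/SELINUX=${mode}/" "$file"
}

# Try it on a scratch copy instead of the real /etc/selinux/config.
scratch=$(mktemp)
printf 'SELINUX=enforcing\nSELINUXTYPE=targeted\n' > "$scratch"
set_selinux_mode "$scratch" disabled
cat "$scratch"
rm -f "$scratch"
```

Once the scratch output looks right, the same call can be pointed at /etc/selinux/config.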

DNS Server Configuration

1. Time server configuration

[root@localhost ~]# yum install chrony

[root@dns ~]# vim /etc/chrony.conf

pool ntp1.aliyun.com iburst

allow 192.168.182.0/24

local stratum 10

[root@dns ~]# systemctl restart chronyd

[root@dns ~]# systemctl enable chronyd

2. DNS server configuration

# Install bind

[root@dns ~]# yum install bind -y

# Configure named.conf

[root@dns ~]# vim /etc/named.conf

options {

listen-on port 53 { 192.168.182.138; };

allow-query { 192.168.182.0/24; };

# Configure the forward zone

[root@dns ~]# vim /etc/named.rfc1912.zones

zone "openlab.edu" IN {

type master;

file "openlab.edu.zone";

};

# Create the zone file

[root@dns ~]# cd /var/named

[root@dns named]# cp -p named.localhost openlab.edu.zone

[root@dns named]# vim openlab.edu.zone

$TTL 1D

@ IN SOA dns.openlab.edu. admin.openlab.edu. (

2024042701 ; serial

1D ; refresh

1H ; retry

1W ; expire

3H ) ; minimum

NS dns.openlab.edu.

dns A 192.168.182.138

realserver1 A 192.168.182.130

realserver2 A 192.168.182.131

store1 A 192.168.182.132

store2 A 192.168.182.133

store3 A 192.168.182.134

db1 A 192.168.182.135

db2 A 192.168.182.136

db3 A 192.168.182.137

# Start named

[root@dns named]# systemctl enable --now named

# Verify the configuration

[root@dns named]# named-checkconf

[root@dns named]# named-checkzone openlab.edu openlab.edu.zone

zone openlab.edu/IN: loaded serial 2024042701

OK

[root@dns ~]# nslookup db1.openlab.edu 192.168.182.138

Server: 192.168.182.138

Address: 192.168.182.138#53

Name: db1.openlab.edu

Address: 192.168.182.135
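Later sections repeat the same name-to-IP pairs in /etc/hosts on several machines. As a rough sketch, those lines could be derived from the zone file rather than typed by hand. The helper name `zone_to_hosts` is hypothetical, and it assumes the simple "name A address" record layout used in the zone file above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print /etc/hosts-style lines for every A record in a
# zone file. Assumes the simple "name A address" layout used above.
zone_to_hosts() {
  local zonefile=$1 domain=$2
  awk -v d="$domain" '$2 == "A" { printf "%s %s.%s %s\n", $3, $1, d, $1 }' "$zonefile"
}

# Example with a scratch zone fragment (sample data only).
zone=$(mktemp)
printf 'dns A 192.168.182.138\ndb1 A 192.168.182.135\n' > "$zone"
zone_to_hosts "$zone" openlab.edu
rm -f "$zone"
```

On the DNS host the call would be `zone_to_hosts /var/named/openlab.edu.zone openlab.edu`.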

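On every internal server, set the DNS server to the internal IP of the DNS host.

# My internal IPs are assigned by DHCP rather than configured by hand; configuring them manually is still recommended.

nmcli c mod ens192 ipv4.dns 192.168.182.138

nmcli c up ens192

The resulting nameserver entry can be checked (or edited) in /etc/resolv.conf.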

Ceph Cluster Deployment and Expansion

Storage configuration

First configure hosts resolution on the three Ceph servers

192.168.182.132 store1.openlab.edu

192.168.182.133 store2.openlab.edu

192.168.182.134 store3.openlab.edu

Configure time synchronization

dnf install chrony -y

systemctl enable chronyd --now

vim /etc/chrony.conf

server ntp1.aliyun.com iburst

server ntp2.aliyun.com iburst

server ntp3.aliyun.com iburst

server ntp4.aliyun.com iburst

chronyc sources -v   # show the time synchronization sources

Configure the Ceph yum repository

cat > /etc/yum.repos.d/ceph.repo <<EOF

[ceph]

name=ceph x86_64

baseurl=https://repo.huaweicloud.com/ceph/rpm-quincy/el8/x86_64

enabled=1

gpgcheck=0

[ceph-noarch]

name=ceph noarch

baseurl=https://repo.huaweicloud.com/ceph/rpm-quincy/el8/noarch

enabled=1

gpgcheck=0

[ceph-source]

name=ceph SRPMS

baseurl=https://repo.huaweicloud.com/ceph/rpm-quincy/el8/SRPMS

enabled=1

gpgcheck=0

EOF

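Install python3 and podman on all three nodes

dnf install python3 podman -y

Add three disks to each of the three store nodes

Install cephadm on the primary node store1 and bootstrap the cluster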

cephadm bootstrap --mon-ip 192.168.182.132 \

--initial-dashboard-user admin \

--initial-dashboard-password redhat \

--dashboard-password-noupdate \

--allow-fqdn-hostname

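Cluster expansion

## Install ceph-common on all three servers

dnf install epel-release

dnf install ceph-common

# Copy the public key

Use the following commands to export the cluster's public key and copy it to the remaining hosts:

ceph cephadm get-pub-key > ~/ceph.pub

ssh-copy-id -f -i ~/ceph.pub root@store2.openlab.edu   # adjust the hostname after @ to match your environment

ssh-copy-id -f -i ~/ceph.pub root@store3.openlab.edu

# hosts resolution is already configured, so the hostnames can be used directly

# Add store2 and store3 to the cluster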

cephadm shell ceph orch host add store2.openlab.edu

cephadm shell ceph orch host add store3.openlab.edu

Configure passwordless SSH login

ssh-keygen -f ~/.ssh/id_rsa -N '' -q

for i in store1.openlab.edu store2.openlab.edu store3.openlab.edu ; do ssh-copy-id $i; done

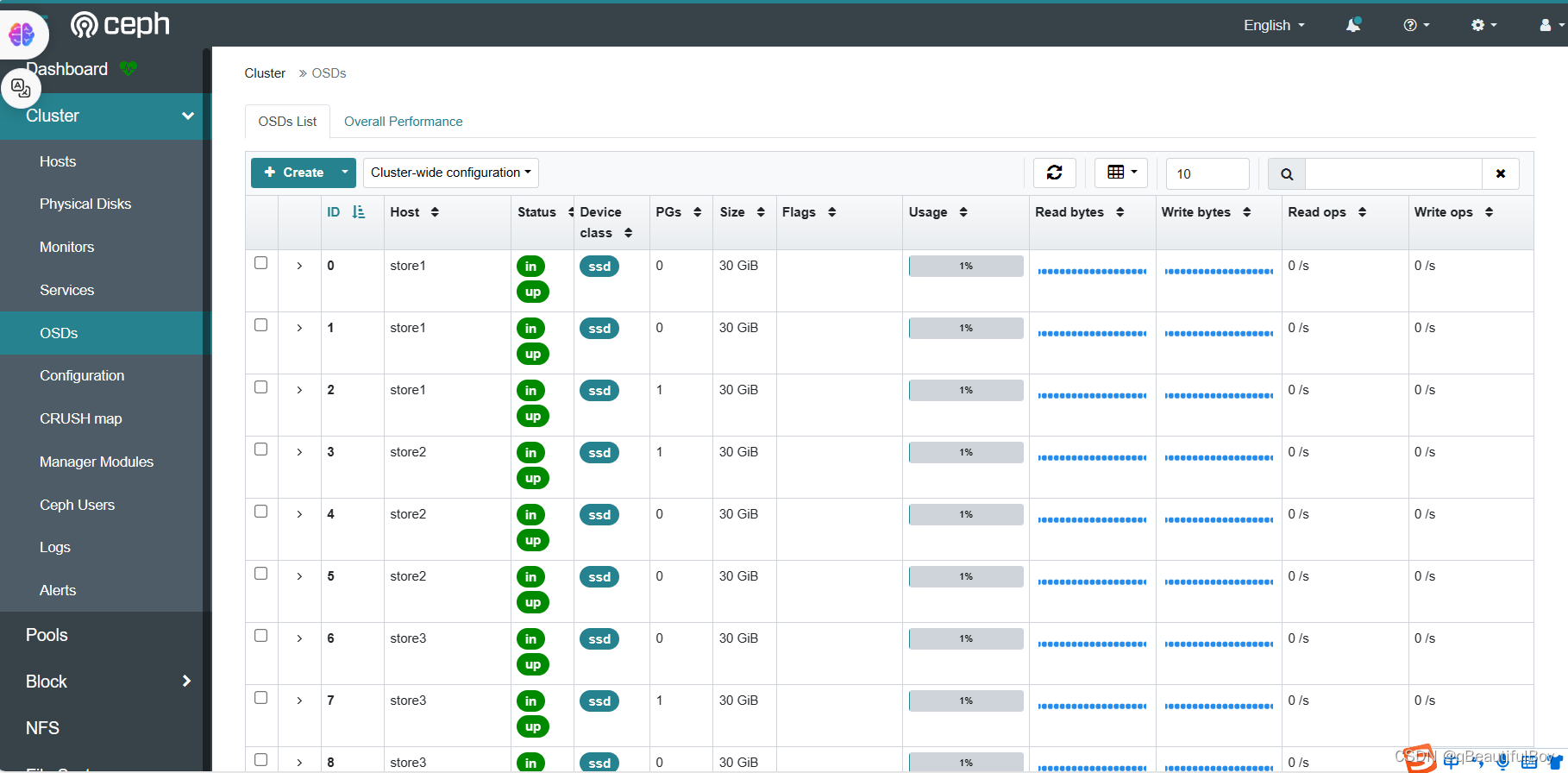

Add OSDs

ceph orch apply osd --all-available-devices

Place the mon and mgr daemons on the cluster nodes

ceph orch apply mgr --placement "store1.openlab.edu store2.openlab.edu store3.openlab.edu"

ceph orch apply mon --placement "store1.openlab.edu store2.openlab.edu store3.openlab.edu"

Check the Ceph cluster status

[root@store1 ceph]# ceph -s

cluster:

id: 3504b540-134f-11ef-9ad3-000c299f8a28

health: HEALTH_OK

services:

mon: 3 daemons, quorum store1,store2,store3 (age 37m)

mgr: store3.tozlph(active, since 51s), standbys: store2.omdwad

osd: 9 osds: 9 up (since 37m), 9 in (since 37m)

data:

pools: 1 pools, 1 pgs

objects: 2 objects, 449 KiB

usage: 2.6 GiB used, 267 GiB / 270 GiB avail

pgs: 1 active+clean

[root@store1 ceph]# ceph -s

cluster:

id: 3504b540-134f-11ef-9ad3-000c299f8a28

health: HEALTH_OK

services:

mon: 3 daemons, quorum store1,store2,store3 (age 85m)

mgr: store3.tozlph(active, since 48m), standbys: store2.omdwad, store1.rvcnfo

osd: 9 osds: 9 up (since 85m), 9 in (since 85m)

data:

pools: 1 pools, 1 pgs

objects: 2 objects, 449 KiB

usage: 2.6 GiB used, 267 GiB / 270 GiB avail

pgs: 1 active+clean
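When checking several times in a row, the interesting parts of `ceph -s` are the health flag and how many OSDs are up. The sketch below condenses them into one line. The function name `ceph_summary` is made up for illustration, and it assumes the `health:` and `osd:` line layout shown in the output above. For the health flag alone, `ceph health` is enough.

```shell
#!/usr/bin/env bash
# Hypothetical helper: condense `ceph -s` text (read from stdin) into one line.
ceph_summary() {
  awk '
    $1 == "health:" { health = $2 }
    $1 == "osd:"    { total = $2; up = $4 }   # "osd: 9 osds: 9 up ..."
    END { printf "health=%s osds_up=%s/%s\n", health, up, total }
  '
}

# On a cluster node this would be: ceph -s | ceph_summary
# Here two lines copied from the output above stand in for the real command.
printf 'health: HEALTH_OK\nosd: 9 osds: 9 up (since 37m), 9 in (since 37m)\n' | ceph_summary
```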

Log in to the Ceph dashboard

Browse to https://ip:8443

Enter the username and password to log in.

Configure the storage pool

ceph fs volume create cephfs01

Create a user

[root@store1 ceph]# ceph fs authorize cephfs01 client.rw / rws

[client.rw]

key = AQBmGEZmsGkzDxAAkPCof9UNmrRCQel49yW+0Q==

[root@store1 ceph]# ceph auth get client.rw

[client.rw]

key = AQBmGEZmsGkzDxAAkPCof9UNmrRCQel49yW+0Q==

caps mds = "allow rws fsname=cephfs01"

caps mon = "allow r fsname=cephfs01"

caps osd = "allow rw tag cephfs data=cephfs01"

[root@store1 /]# ceph auth get client.rw -o /etc/ceph/ceph.client.rw.keyring

Configure DNS on the three Ceph servers

[root@store1 ~]# nmcli c s

NAME UUID TYPE DEVICE

ens160 1021b155-0475-43a1-97ac-03d0986e7779 ethernet ens160

ens192 b9124424-e5d9-4b9c-8894-bbc364371ca6 ethernet ens192

[root@store1 ~]# nmcli c modify ens192 ipv4.dns 192.168.182.138

[root@store1 ~]# nmcli c up ens192

Connection successfully activated (D-Bus active path: /org/freedesktop/NetworkManager/ActiveConnection/3)

[root@store1 ~]# vim /etc/chrony.conf

# Use public servers from the pool.ntp.org project.

# Please consider joining the pool (http://www.pool.ntp.org/join.html).

#pool 2.rocky.pool.ntp.org iburst

pool dns.openlab.edu iburst

[root@store1 ~]# vim /etc/hosts

192.168.182.138 dns.openlab.edu

[root@store1 ~]# systemctl restart chronyd

[root@store1 ~]# chronyc sources

MS Name/IP address Stratum Poll Reach LastRx Last sample

===============================================================================

^* dns.openlab.edu 3 6 17 3 +387ns[ +38us] +/- 19ms
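In the `chronyc sources` output, the row marked `^*` is the source chrony is currently synchronized to. A small sketch for scripting that check follows. The helper name `chrony_selected` is hypothetical, and it reads the command output from stdin so it can be tried without a running chronyd.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the currently selected time source from
# `chronyc sources` output on stdin; exits non-zero if none is selected.
chrony_selected() {
  awk '$1 == "^*" { print $2; found = 1 } END { exit !found }'
}

# On a node this would be: chronyc sources | chrony_selected
# Here the row from the output above stands in for the real command.
printf '%s\n' '^* dns.openlab.edu 3 6 17 3 +387ns[ +38us] +/- 19ms' | chrony_selected
```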

Database Configuration

Configure hosts resolution on the three database servers

[root@db1 ~]# vim /etc/hosts

192.168.182.135 db1.openlab.edu

192.168.182.136 db2.openlab.edu

192.168.182.137 db3.openlab.edu

192.168.182.138 dns.openlab.edu

Configure time synchronization

vim /etc/chrony.conf

# Use public servers from the pool.ntp.org project.

# Please consider joining the pool (https://www.pool.ntp.org/join.html).

pool dns.openlab.edu iburst

[root@db1 ~]# systemctl restart chronyd

[root@db1 ~]# systemctl enable chronyd

[root@db1 ~]# chronyc sources

MS Name/IP address Stratum Poll Reach LastRx Last sample

===============================================================================

^* dns.openlab.edu 3 6 17 3 -4445ns[+3146ns] +/- 19ms

Install the database

yum install mariadb-server mariadb-server-galera galera -y

Start the database

systemctl enable --now mariadb

Configure the cluster

db1:

# 1. Stop the database

[root@db1 ~]# systemctl stop mariadb

# 2. Add the cluster configuration

[root@db1 ~]# vim /etc/my.cnf.d/mariadb-server.cnf

[mysqld]

server_id=1

log-bin=mysql-bin

[galera]

# Add the following

#Mandatory settings

wsrep_on=ON

wsrep_provider=/usr/lib64/galera/libgalera_smm.so

wsrep_cluster_address="gcomm://192.168.182.135,192.168.182.136,192.168.182.137"

binlog_format=row

wsrep_node_name=db1.openlab.edu

wsrep_node_address=192.168.182.135

default_storage_engine=InnoDB

innodb_autoinc_lock_mode=2

innodb_flush_log_at_trx_commit=0

innodb_buffer_pool_size=2G

#

# Allow server to accept connections on all interfaces.

bind-address=0.0.0.0

[root@db1 ~]# vim /etc/my.cnf.d/galera.cnf

# Enable wsrep

wsrep_on=1

# 3. Bootstrap the cluster

[root@db1 ~]# galera_new_cluster

db2:

[root@db2 ~]# systemctl stop mariadb

# 1. Configure the cluster

[root@db2 ~]# vim /etc/my.cnf.d/galera.cnf

# Enable wsrep

wsrep_on=1

[root@db2 ~]# vim /etc/my.cnf.d/mariadb-server.cnf

[mysqld]

server_id=2

log-bin=mysql-bin

[galera]

# Mandatory settings

wsrep_on=ON

wsrep_provider=/usr/lib64/galera/libgalera_smm.so

wsrep_cluster_address="gcomm://192.168.182.135,192.168.182.136,192.168.182.137"

binlog_format=row

wsrep_node_name=db2.openlab.edu

wsrep_node_address=192.168.182.136

default_storage_engine=InnoDB

innodb_autoinc_lock_mode=2

innodb_flush_log_at_trx_commit=0

innodb_buffer_pool_size=2G

#

# Allow server to accept connections on all interfaces.

bind-address=0.0.0.0

db3:

[mysqld]

server_id=3

log-bin=mysql-bin

[galera]

# Mandatory settings

wsrep_on=ON

wsrep_provider=/usr/lib64/galera/libgalera_smm.so

wsrep_cluster_address="gcomm://192.168.182.135,192.168.182.136,192.168.182.137"

binlog_format=row

wsrep_node_name=db3.openlab.edu

wsrep_node_address=192.168.182.137

default_storage_engine=InnoDB

innodb_autoinc_lock_mode=2

innodb_flush_log_at_trx_commit=0

innodb_buffer_pool_size=2G

#

# Allow server to accept connections on all interfaces.

bind-address=0.0.0.0

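Initialize MariaDB

Note: every time the servers are rebooted, MariaDB fails to start.

After running systemctl start mariadb, the command hangs for a long time and finally reports an error.

After some investigation I found that, after each reboot, ps -ef | grep mysql shows a leftover mysql process. As long as it keeps running, MariaDB cannot start. So on all three db servers, first find that process ID and run kill -9 <PID>. After that, MariaDB starts normally.

If that still does not work, do the following on one node only (ideally the node that holds the most recent data):

vim /var/lib/mysql/grastate.dat

# Change this value from 0 to 1

safe_to_bootstrap: 0

# Then bootstrap the cluster from this node

galera_new_cluster

# Then, on the remaining nodes

systemctl start mariadb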
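Before editing grastate.dat, it helps to compare `seqno` and `safe_to_bootstrap` across the three nodes to decide which one to bootstrap from. The following is a minimal sketch for reading those fields. The helper name `grastate_field` is made up for illustration, the sample file contents are invented, and it assumes the usual "key: value" layout of grastate.dat.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print one field from a Galera grastate.dat file.
grastate_field() {
  local file=$1 field=$2
  # Fields look like "seqno:   -1", so split on a colon followed by spaces.
  awk -F': *' -v f="$field" '$1 == f { print $2 }' "$file"
}

# Example on a scratch file with invented sample values.
state=$(mktemp)
printf '%s\n' '# GALERA saved state' 'version: 2.1' \
  'uuid:    00000000-0000-0000-0000-000000000000' \
  'seqno:   -1' 'safe_to_bootstrap: 0' > "$state"
echo "seqno=$(grastate_field "$state" seqno) safe_to_bootstrap=$(grastate_field "$state" safe_to_bootstrap)"
rm -f "$state"
```

On a db node the file to inspect would be /var/lib/mysql/grastate.dat.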

Check the cluster

Log in to MariaDB on any node and run "show status like 'wsrep_%';" to view the cluster status.

MariaDB [(none)]> show status like 'wsrep_%';

+-------------------------------+------------------------------------------------------------------------------------------------------------------------------------------------+

| Variable_name | Value |

+-------------------------------+------------------------------------------------------------------------------------------------------------------------------------------------+

| wsrep_local_state_uuid | 387ff18b-04fe-11ef-9116-fa8f804891c7 |

| wsrep_protocol_version | 10 |

| wsrep_last_committed | 4 |

| wsrep_replicated | 0 |

| wsrep_replicated_bytes | 0 |

| wsrep_repl_keys | 0 |

| wsrep_repl_keys_bytes | 0 |

| wsrep_repl_data_bytes | 0 |

| wsrep_repl_other_bytes | 0 |

| wsrep_received | 4 |

| wsrep_received_bytes | 424 |

| wsrep_local_commits | 0 |

| wsrep_local_cert_failures | 0 |

| wsrep_local_replays | 0 |

| wsrep_local_send_queue | 0 |

| wsrep_local_send_queue_max | 1 |

| wsrep_local_send_queue_min | 0 |

| wsrep_local_send_queue_avg | 0 |

| wsrep_local_recv_queue | 0 |

| wsrep_local_recv_queue_max | 1 |

| wsrep_local_recv_queue_min | 0 |

| wsrep_local_recv_queue_avg | 0 |

| wsrep_local_cached_downto | 3 |

| wsrep_flow_control_paused_ns | 0 |

| wsrep_flow_control_paused | 0 |

| wsrep_flow_control_sent | 0 |

| wsrep_flow_control_recv | 0 |

| wsrep_flow_control_active | false |

| wsrep_flow_control_requested | false |

| wsrep_cert_deps_distance | 0 |

| wsrep_apply_oooe | 0 |

| wsrep_apply_oool | 0 |

| wsrep_apply_window | 0 |

| wsrep_apply_waits | 0 |

| wsrep_commit_oooe | 0 |

| wsrep_commit_oool | 0 |

| wsrep_commit_window | 0 |

| wsrep_local_state | 4 |

| wsrep_local_state_comment | Synced |

| wsrep_cert_index_size | 0 |

| wsrep_causal_reads | 0 |

| wsrep_cert_interval | 0 |

| wsrep_open_transactions | 0 |

| wsrep_open_connections | 0 |

| wsrep_incoming_addresses | AUTO,AUTO,AUTO |

| wsrep_cluster_weight | 3 |

| wsrep_desync_count | 0 |

| wsrep_evs_delayed | |

| wsrep_evs_evict_list | |

| wsrep_evs_repl_latency | 0/0/0/0/0 |

| wsrep_evs_state | OPERATIONAL |

| wsrep_gcomm_uuid | c6dc88ce-04ff-11ef-ba8c-53afaf34810b |

| wsrep_gmcast_segment | 0 |

| wsrep_applier_thread_count | 1 |

| wsrep_cluster_capabilities | |

| wsrep_cluster_conf_id | 3 |

| wsrep_cluster_size | 3 |

| wsrep_cluster_state_uuid | 387ff18b-04fe-11ef-9116-fa8f804891c7 |

| wsrep_cluster_status | Primary |

| wsrep_connected | ON |

| wsrep_local_bf_aborts | 0 |

| wsrep_local_index | 1 |

| wsrep_provider_capabilities | :MULTI_MASTER:CERTIFICATION:PARALLEL_APPLYING:TRX_REPLAY:ISOLATION:PAUSE:CAUSAL_READS:INCREMENTAL_WRITESET:UNORDERED:PREORDERED:STREAMING:NBO: |

| wsrep_provider_name | Galera |

| wsrep_provider_vendor | Codership Oy <info@codership.com> |

| wsrep_provider_version | 4.16(rc333b191) |

| wsrep_ready | ON |

| wsrep_rollbacker_thread_count | 1 |

| wsrep_thread_count | 2 |

+-------------------------------+------------------------------------------------------------------------------------------------------------------------------------------------+

69 rows in set (0.002 sec)

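Pay particular attention to the following parameters:

- wsrep_cluster_state_uuid: the cluster UUID; it must be identical on every node in the cluster;

- wsrep_cluster_size: the number of nodes in the cluster;

- wsrep_connected: whether this node is connected to the cluster.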
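The parameters above lend themselves to a scripted check. The sketch below reads "name value" pairs from stdin, as produced by the mysql client with `-N -B`, and succeeds only when the node reports the expected cluster size, a Primary component, and ON for both `wsrep_connected` and `wsrep_ready`. The function name `galera_healthy` is an assumption made for this example.

```shell
#!/usr/bin/env bash
# Hypothetical helper: exit 0 only if the wsrep status on stdin looks healthy.
# Usage: galera_healthy EXPECTED_NODE_COUNT < status-output
galera_healthy() {
  local expected=$1
  awk -v n="$expected" '
    $1 == "wsrep_cluster_size"   { size = $2 }
    $1 == "wsrep_cluster_status" { status = $2 }
    $1 == "wsrep_connected"      { conn = $2 }
    $1 == "wsrep_ready"          { ready = $2 }
    END { exit !(size == n && status == "Primary" && conn == "ON" && ready == "ON") }
  '
}

# On a db node this would be:
#   mysql -N -B -e "show status like 'wsrep_%'" | galera_healthy 3
# Here a few values from the table above stand in for the real command.
printf '%s\t%s\n' wsrep_cluster_size 3 wsrep_cluster_status Primary \
  wsrep_connected ON wsrep_ready ON | galera_healthy 3 && echo "cluster looks healthy"
```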

1. Connect to MariaDB and check whether the Galera plugin is enabled

MariaDB [(none)]> show status like "wsrep_ready";

+---------------+-------+

| Variable_name | Value |

+---------------+-------+

| wsrep_ready | ON |

+---------------+-------+

1 row in set (0.002 sec)

2. Check the current number of cluster nodes

MariaDB [(none)]> show status like "wsrep_cluster_size";

+--------------------+-------+

| Variable_name | Value |

+--------------------+-------+

| wsrep_cluster_size | 3 |

+--------------------+-------+

1 row in set (0.001 sec)

HAProxy load balancing

Run on all three db servers

# 1. Install haproxy

# yum install -y haproxy

# 2. Configure haproxy

# cat /etc/haproxy/haproxy.cfg

#---------------------------------------------------------------------

# Example configuration for a possible web application. See the

# full configuration options online.

#

# https://www.haproxy.org/download/1.8/doc/configuration.txt

#

#---------------------------------------------------------------------

global

log 127.0.0.1 local2

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

user haproxy

group haproxy

daemon

maxconn 4000

defaults

mode http

log global

option httplog

option dontlognull

retries 3

timeout http-request 5s

timeout queue 1m

timeout connect 5s

timeout client 1m

timeout server 1m

timeout http-keep-alive 5s

timeout check 5s

maxconn 3000

listen mariadb

mode tcp

bind *:33060

balance roundrobin

option tcplog

option tcpka

server db1 192.168.182.135:3306 weight 1 check inter 2000 rise 2 fall 5

server db2 192.168.182.136:3306 weight 1 check inter 2000 rise 2 fall 5

server db3 192.168.182.137:3306 weight 1 check inter 2000 rise 2 fall 5

# 3. Start haproxy

# systemctl enable --now haproxy

# 4. Check

# ss -lntp | grep 33060

LISTEN 0 3000 0.0.0.0:33060 0.0.0.0:* users:(("haproxy",pid=2587,fd=6))

Configure high availability

Run on all three db servers

# 1. Install keepalived

# yum install keepalived -y

# 2. Configure keepalived

db1 configuration:

[root@db1 ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id db1

}

vrrp_script chk_haproxy {

script "/etc/keepalived/chk.sh"

interval 2

}

vrrp_instance VI_1 {

state BACKUP

nopreempt

interface ens192

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.182.200 # set the VIP

}

track_script {

chk_haproxy # keepalived has no built-in health check for haproxy, so a health-check script is used

}

}

[root@db1 ~]# vim /etc/keepalived/chk.sh

#!/bin/bash

A=`ps -C haproxy --no-header | wc -l`

if [ $A -eq 0 ]

then

systemctl restart haproxy

echo "Start haproxy" &> /dev/null

sleep 3

if [ `ps -C haproxy --no-header | wc -l` -eq 0 ]

then

systemctl stop keepalived

echo "Stop keepalived" &> /dev/null

else

exit

fi

fi

## After writing the script, remember to make it executable

chmod +x /etc/keepalived/chk.sh
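A possible variant of the check script is sketched below. It is not the script used in this setup: instead of counting processes with ps and stopping keepalived, it asks systemd whether the service is active, tries one restart, and reports the result through its exit status. My understanding is that keepalived treats a non-zero exit from a tracked script as a failed check, but treat that as an assumption and verify it against your keepalived version. The names `check_service` and `RETRY_DELAY` are made up for the example.

```shell
#!/usr/bin/env bash
# Sketch of an alternative keepalived track script (assumption: a non-zero
# exit status is enough for keepalived to consider the check failed).
check_service() {
  local svc=$1
  # Healthy already: nothing to do.
  systemctl is-active --quiet "$svc" && return 0
  # Try one restart, wait briefly, then report the final state.
  systemctl restart "$svc"
  sleep "${RETRY_DELAY:-3}"
  systemctl is-active --quiet "$svc"
}

# Run only when a service name is passed, e.g. "chk.sh haproxy".
if [ "$#" -gt 0 ]; then
  check_service "$1"
fi
```

With this variant the vrrp_script line would pass the service name as an argument, for example `script "/etc/keepalived/chk.sh haproxy"`.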

db2 configuration:

[root@db1 ~]# scp /etc/keepalived/{keepalived.conf,chk.sh} db2.openlab.edu:/etc/keepalived/

Edit the keepalived configuration file

[root@db2 keepalived]# cat keepalived.conf

! Configuration File for keepalived

global_defs {

router_id db2

}

vrrp_script chk_haproxy {

script "/etc/keepalived/chk.sh"

interval 2

}

vrrp_instance VI_1 {

state BACKUP

nopreempt

interface ens192

virtual_router_id 51

priority 80

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.182.200

}

track_script {

chk_haproxy

}

}

db3 configuration:

[root@db1 ~]# scp /etc/keepalived/{keepalived.conf,chk.sh} db3.openlab.edu:/etc/keepalived/

Edit the keepalived configuration file

[root@db3 ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id db3

}

vrrp_script chk_haproxy {

script "/etc/keepalived/chk.sh"

interval 2

}

vrrp_instance VI_1 {

state BACKUP

nopreempt

interface ens192

virtual_router_id 51

priority 60

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.182.200

}

track_script {

chk_haproxy

}

}

# 3. Start keepalived

# systemctl enable --now keepalived

# 4. Test load balancing

Log in on any database node, then create a user and grant it privileges:

MariaDB [(none)]> CREATE USER admin@'%' IDENTIFIED BY '123456';

Query OK, 0 rows affected (0.017 sec)

MariaDB [(none)]> GRANT ALL ON *.* to admin@'%';

Query OK, 0 rows affected (0.013 sec)

Load-balancing test:

[root@db2 keepalived]# mysql -uadmin -p123456 -P33060 -h192.168.182.200 -e " show variables like '%server_id%'"

+---------------+-------+

| Variable_name | Value |

+---------------+-------+

| server_id | 2 |

+---------------+-------+

[root@db2 keepalived]# mysql -uadmin -p123456 -P33060 -h192.168.182.200 -e " show variables like '%server_id%'"

+---------------+-------+

| Variable_name | Value |

+---------------+-------+

| server_id | 3 |

+---------------+-------+

[root@db2 keepalived]# mysql -uadmin -p123456 -P33060 -h192.168.182.200 -e " show variables like '%server_id%'"

+---------------+-------+

| Variable_name | Value |

+---------------+-------+

| server_id | 1 |

+---------------+-------+

Web Server Configuration

1. Hosts resolution

hostnamectl set-hostname realserver1.openlab.edu

hostnamectl set-hostname realserver2.openlab.edu

# cat >> /etc/hosts << EOF

192.168.182.132 store1.openlab.edu store1

192.168.182.133 store2.openlab.edu store2

192.168.182.134 store3.openlab.edu store3

192.168.182.135 db1.openlab.edu db1

192.168.182.136 db2.openlab.edu db2

192.168.182.137 db3.openlab.edu db3

192.168.182.130 realserver1.openlab.edu realserver1

192.168.182.131 realserver2.openlab.edu realserver2

192.168.182.138 dns.openlab.edu

EOF

2. Time synchronization

3. Install the JDK

yum localinstall jdk-8u261-linux-x64.rpm -y

4. Create the mount point for CephFS

mkdir /apps

5. Configure the Ceph client

# Configure the Ceph yum repository

cat > /etc/yum.repos.d/ceph.repo <<EOF

[ceph]

name=ceph x86_64

baseurl=https://repo.huaweicloud.com/ceph/rpm-quincy/el8/x86_64

enabled=1

gpgcheck=0

[ceph-noarch]

name=ceph noarch

baseurl=https://repo.huaweicloud.com/ceph/rpm-quincy/el8/noarch

enabled=1

gpgcheck=0

[ceph-source]

name=ceph SRPMS

baseurl=https://repo.huaweicloud.com/ceph/rpm-quincy/el8/SRPMS

enabled=1

gpgcheck=0

EOF

# Install ceph-common

dnf install -y ceph-common --nobest

# On rs1 and rs2, copy ceph.conf and ceph.client.rw.keyring from store1 into /etc/ceph

[root@store1 ~]# ceph auth get client.rw -o /etc/ceph/ceph.client.rw.keyring

[root@realserver1 ~]# scp store1:/etc/ceph/{ceph.conf,ceph.client.rw.keyring} /etc/ceph

[root@realserver2 ~]# scp store1:/etc/ceph/{ceph.conf,ceph.client.rw.keyring} /etc/ceph

# ceph --id rw -s

cluster:

id: d66290d6-0fff-11ef-aa38-e2b603ebf5b6

health: HEALTH_OK

services:

mon: 2 daemons, quorum store3,store2 (age 95m)

mgr: store2.rrptfl(active, since 93m), standbys: store3.evqoex

mds: 1/1 daemons up, 1 standby

osd: 9 osds: 9 up (since 94m), 9 in (since 98m)

data:

volumes: 1/1 healthy

pools: 3 pools, 273 pgs

objects: 24 objects, 579 KiB

usage: 2.6 GiB used, 447 GiB / 450 GiB avail

pgs: 273 active+clean

6. Mount CephFS

# This mount may not come back after a reboot. If JEESNS cannot be reached in the browser after restarting a server, check the mount here.

echo ':/ /apps ceph defaults,_netdev,name=rw 0 0' >> /etc/fstab

mount -a
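Since the mount can be missing after a reboot, a small check that retries it may save some debugging. The sketch below is one way to do it; the helper name `ensure_mounted` is made up, and it relies on `mountpoint` from util-linux plus the fstab entry added above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if DIR is a mount point, trying one
# fstab-based mount first if it is not.
ensure_mounted() {
  local dir=$1
  mountpoint -q "$dir" && return 0
  # "mount DIR" looks the directory up in /etc/fstab.
  mount "$dir" 2>/dev/null
  mountpoint -q "$dir"
}

# Typical use on a web server after boot:
#   ensure_mounted /apps || echo "/apps is not mounted" >&2
```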

7. Upload Tomcat and extract it to /apps

⚠️ Run on realserver1 only:

[root@realserver1 ~]# dnf install tar -y

[root@realserver1 ~]# tar xf apache-tomcat-8.5.20.tar.gz -C /apps/

[root@realserver1 ~]# mv /apps/apache-tomcat-8.5.20/ /apps/tomcat

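Provide a systemd service unit (create it on both servers, since it lives on the local disk rather than under /apps). The heredoc delimiter is quoted so that the shell does not expand $MAINPID while writing the file.

cat > /usr/lib/systemd/system/tomcat.service << 'EOF'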

[Unit]

Description=Apache Tomcat 8

After=syslog.target network.target

[Service]

Type=forking

Environment=JAVA_HOME=/usr/java/jdk1.8.0_261-amd64

Environment=CATALINA_HOME=/apps/tomcat

ExecStart=/apps/tomcat/bin/startup.sh

ExecReload=/bin/kill -s HUP $MAINPID

ExecStop=/bin/kill -s QUIT $MAINPID

PrivateTmp=true

[Install]

WantedBy=multi-user.target

EOF

Start the service as follows, on both machines

[root@realserver1 ~]# systemctl daemon-reload

[root@realserver1 ~]# systemctl start tomcat

[root@realserver1 ~]# systemctl enable tomcat

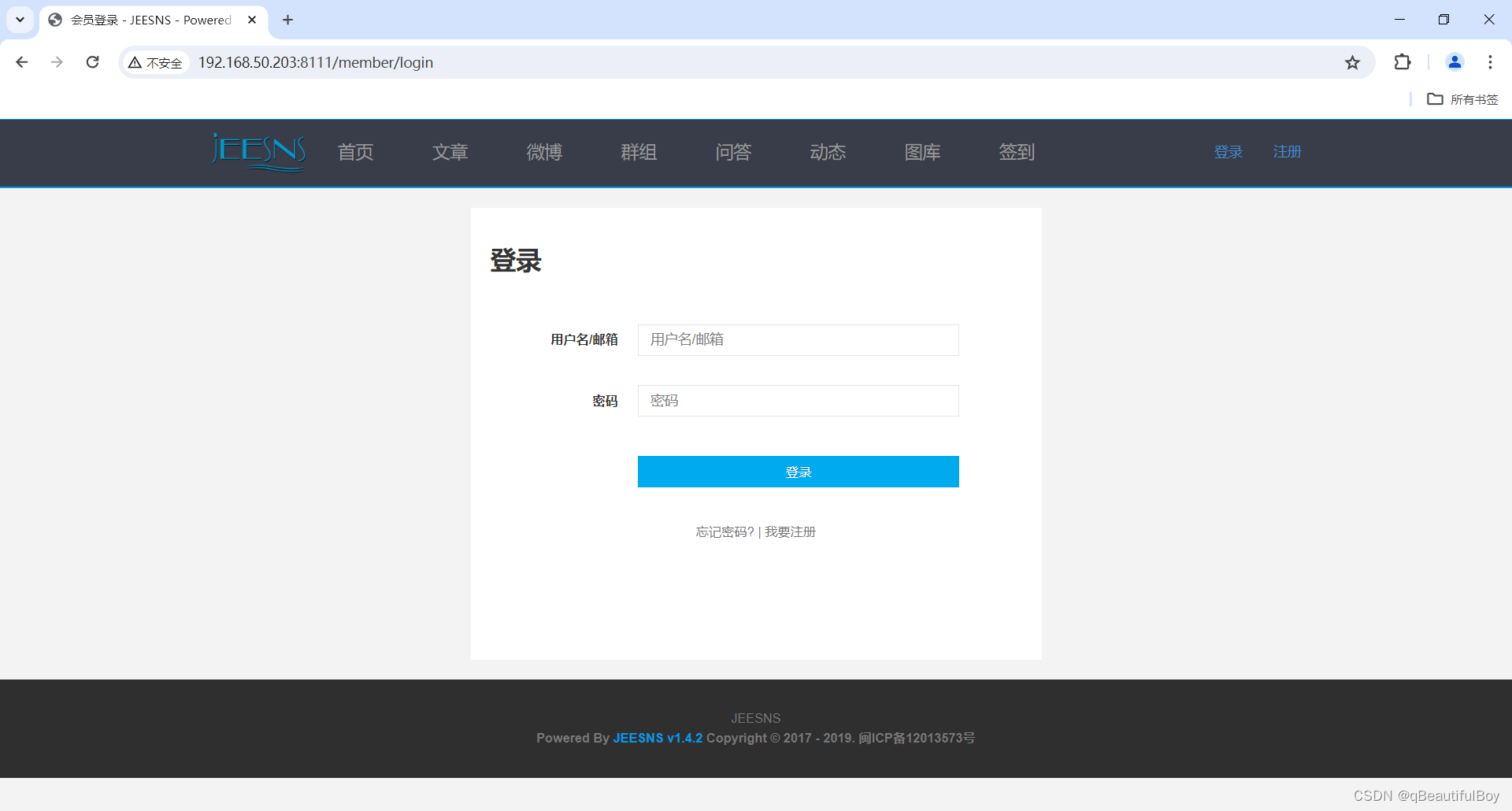

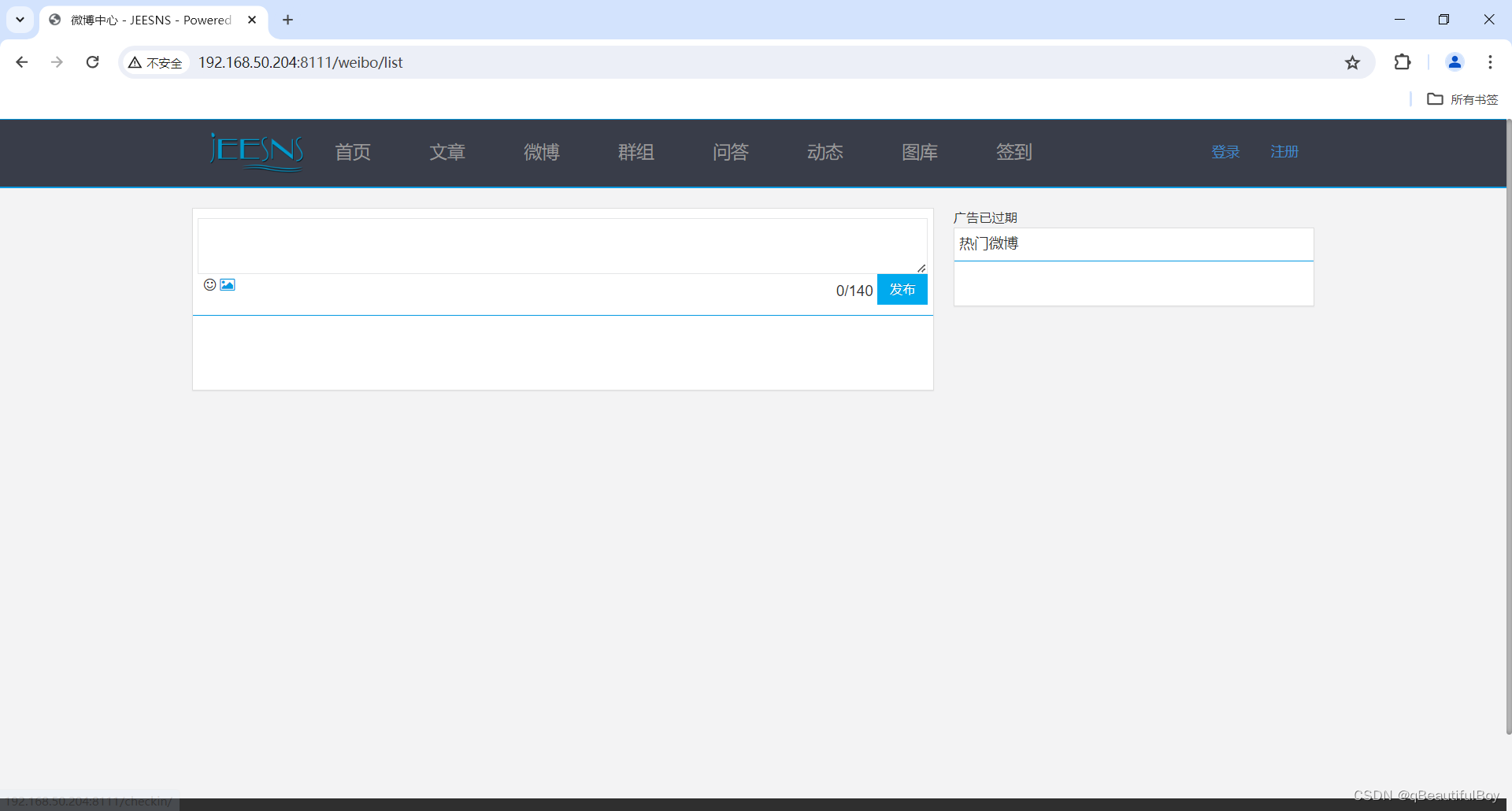

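8. Deploy the application package

This article uses JEESNS.

Do this on one application node only. Because CephFS is mounted at /apps, work done on one machine is visible on both.

# Upload and unpack the package

[root@realserver1 ~]# dnf install unzip -y

[root@realserver1 ~]# unzip jeesns-v1.4.2.zip

# Database part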

MariaDB [(none)]> create database jeesns character set utf8;

Query OK, 1 row affected (0.004 sec)

MariaDB [(none)]> grant all on jeesns.* to jeesns@'%' identified by 'jeesns';

Query OK, 0 rows affected (0.010 sec)

# Import the data

[root@realserver1 ~]# cd /root/jeesns-v1.4.2

[root@realserver1 jeesns-v1.4.2]# scp jeesns-web/database/jeesns.sql db1:~

MariaDB [jeesns]> source /root/jeesns.sql

# Deploy the war package

[root@realserver1 jeesns-v1.4.2]# rm -rf /apps/tomcat/webapps/*

[root@realserver1 jeesns-v1.4.2]# mv war/jeesns.war /apps/tomcat/webapps/ROOT.war

# Edit the JDBC settings

vi /apps/tomcat/webapps/ROOT/WEB-INF/classes/jeesns.properties

jdbc.driver=com.mysql.jdbc.Driver

jdbc.url=jdbc:mysql://192.168.182.200:33060/jeesns?characterEncoding=utf8&zeroDateTimeBehavior=convertToNull&allowMultiQueries=true

jdbc.user=jeesns

jdbc.password=jeesns

managePath=manage

groupPath=group

weiboPath=weibo

frontTemplate=front

memberTemplate=member

manageTemplate=manage

mobileTemplate=mobile
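If the properties file has to be changed again later, the edit can be scripted instead of done in vi. The following is a minimal sketch; the helper name `set_prop` is hypothetical. It uses awk rather than sed so that characters such as `&` and `/` in the JDBC URL need no escaping.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set KEY=VALUE in a .properties file, replacing an
# existing line for KEY or appending one if the key is absent.
set_prop() {
  local file=$1 key=$2 value=$3 tmp
  tmp=$(mktemp)
  # Key and value are passed through the environment so awk does not
  # interpret any escape sequences in them.
  KEY=$key VALUE=$value awk -F= '
    $1 == ENVIRON["KEY"] { print ENVIRON["KEY"] "=" ENVIRON["VALUE"]; done = 1; next }
    { print }
    END { if (!done) print ENVIRON["KEY"] "=" ENVIRON["VALUE"] }
  ' "$file" > "$tmp" && cat "$tmp" > "$file"
  rm -f "$tmp"
}

# Example on a scratch file rather than the deployed jeesns.properties.
props=$(mktemp)
printf 'jdbc.user=root\nmanagePath=manage\n' > "$props"
set_prop "$props" jdbc.user jeesns
cat "$props"
rm -f "$props"
```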

# Restart Tomcat on both application servers

# systemctl restart tomcat

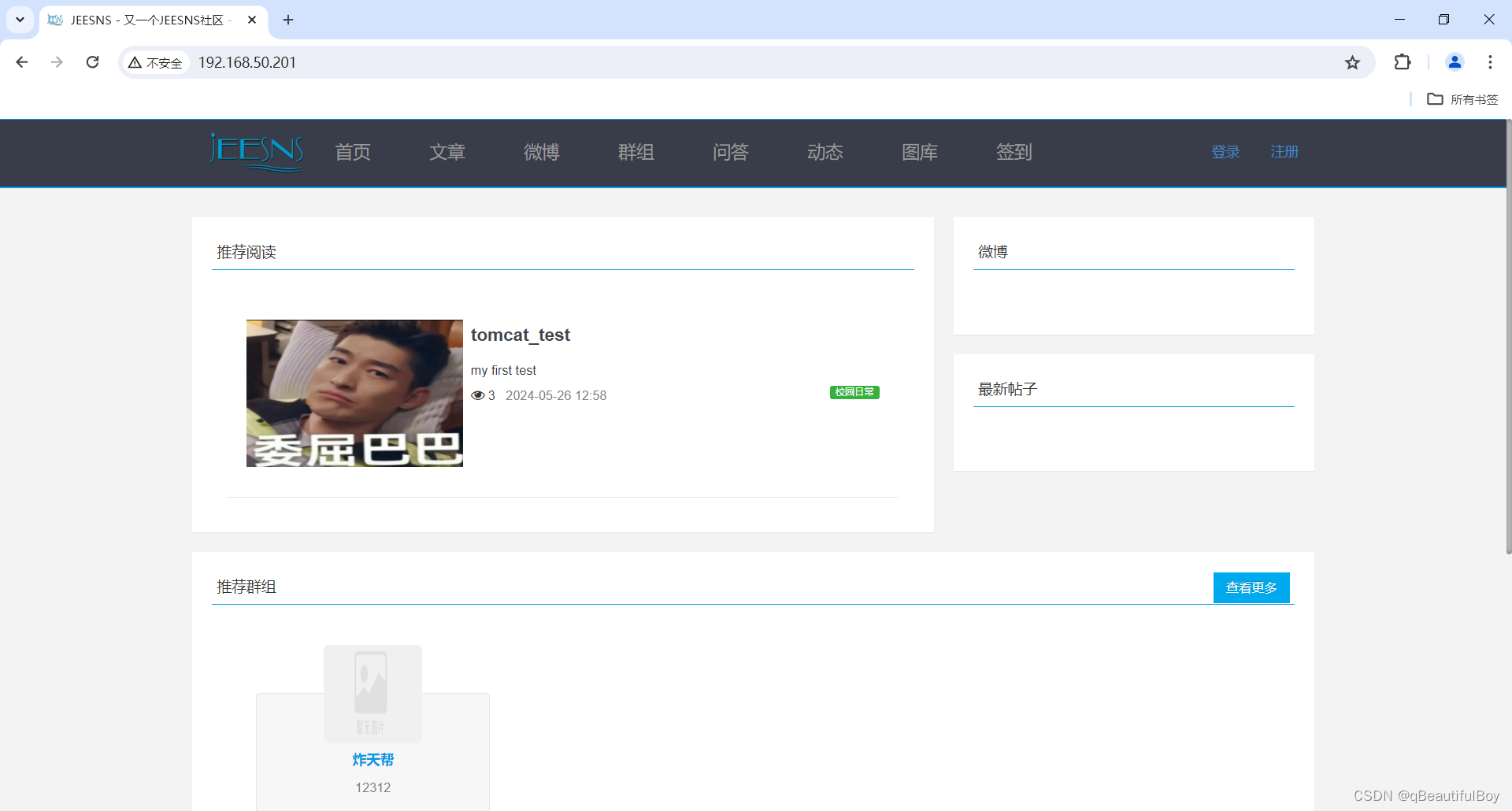

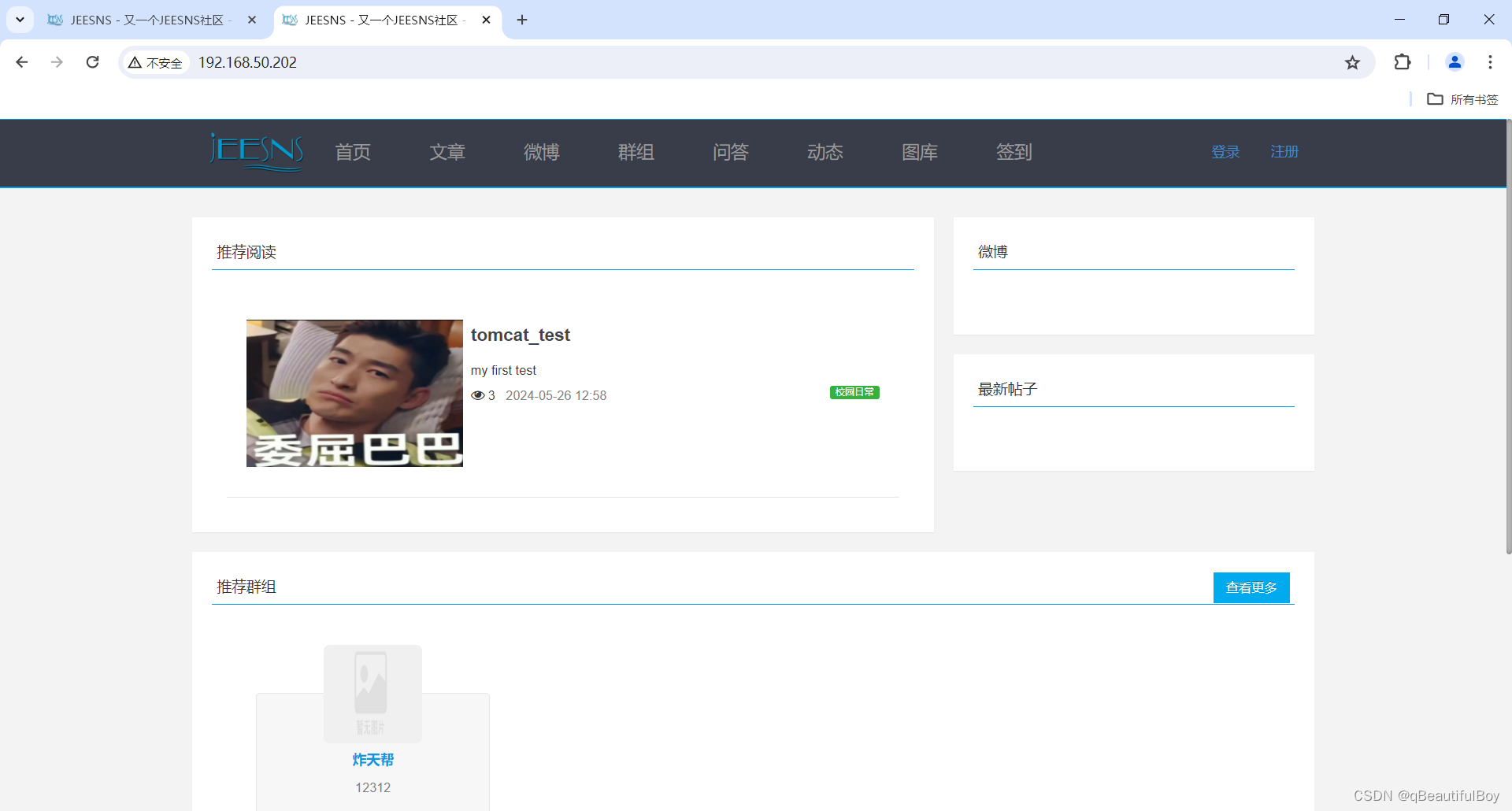

The web page result is shown here.

[root@realserver1 jeesns-v1.4.2]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:0c:29:3d:27:73 brd ff:ff:ff:ff:ff:ff

altname enp3s0

inet 192.168.50.203/24 brd 192.168.50.255 scope global noprefixroute ens160

valid_lft forever preferred_lft forever

inet6 fe80::c8d:5b9e:e18a:bd40/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: ens192: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:0c:29:3d:27:7d brd ff:ff:ff:ff:ff:ff

altname enp11s0

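Browse to 192.168.50.203:8111   # the default port is 8080; I changed Tomcat's port here

Browse to 192.168.50.204:8111

Load Balancer High-Availability Configuration

Time synchronization and hosts resolution are the same as before.

1. Configure nginx load balancing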

# dnf install -y nginx

# cd /etc/nginx/conf.d/

# vi web.conf

upstream appPools {

ip_hash;

server 192.168.182.130:8111;

server 192.168.182.131:8111;

}

server {

listen 80;

server_name www.openlab.edu; # after adding a hosts entry on Windows, www.openlab.edu can also be opened in a browser

location / {

proxy_pass http://appPools;

proxy_set_header Host $host;

proxy_set_header X-Forwarded-For $remote_addr;

}

}

# systemctl enable --now nginx

2. keepalived high-availability configuration

# dnf install -y keepalived

# vi /etc/keepalived/check_nginx.sh

#!/bin/bash

# Mind the spaces in this script. The logic: if no nginx process exists, start nginx; if nginx still cannot be started, stop keepalived.

A=`ps -C nginx --no-header |wc -l`

if [ $A -eq 0 ];then

systemctl start nginx

sleep 3

if [ `ps -C nginx --no-header |wc -l` -eq 0 ]

then

systemctl stop keepalived

else

exit

fi

fi

# Make the script executable

chmod +x /etc/keepalived/check_nginx.sh

# directory1 keepalived configuration

# vi /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id nginx1

}

vrrp_script chk_nginx {

script "/etc/keepalived/check_nginx.sh"

interval 2

}

vrrp_instance nginx {

state BACKUP

nopreempt

interface ens160

virtual_router_id 52

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.50.249

}

track_script {

chk_nginx

}

}

# directory2 configuration

# vi /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id nginx2

}

vrrp_script chk_nginx {

script "/etc/keepalived/check_nginx.sh"

interval 2

}

vrrp_instance nginx {

state BACKUP

nopreempt

interface ens160

virtual_router_id 52

priority 80

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.50.249

}

track_script {

chk_nginx

}

}

Start keepalived

# systemctl enable --now keepalived.service

Test:

IP addresses on load balancer 1

[root@directory1 conf.d]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:0c:29:d2:fe:ae brd ff:ff:ff:ff:ff:ff

altname enp3s0

inet 192.168.50.201/24 brd 192.168.50.255 scope global noprefixroute ens160

valid_lft forever preferred_lft forever

inet 192.168.50.249/32 scope global ens160

valid_lft forever preferred_lft forever

inet6 fe80::1ea4:f154:3193:1694/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: ens224: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:0c:29:d2:fe:b8 brd ff:ff:ff:ff:ff:ff

altname enp19s0

inet 192.168.182.128/24 brd 192.168.182.255 scope global dynamic noprefixroute ens224

valid_lft 1615sec preferred_lft 1615sec

inet6 fe80::a640:e0be:5c0a:6c45/64 scope link noprefixroute

valid_lft forever preferred_lft forever

IP addresses on load balancer 2

[root@directory2 conf.d]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:0c:29:db:5c:b4 brd ff:ff:ff:ff:ff:ff

altname enp3s0

inet 192.168.50.202/24 brd 192.168.50.255 scope global noprefixroute ens160

valid_lft forever preferred_lft forever

inet6 fe80::1f49:8a9a:389:4236/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: ens192: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:0c:29:db:5c:be brd ff:ff:ff:ff:ff:ff

altname enp11s0

inet 192.168.182.129/24 brd 192.168.182.255 scope global noprefixroute ens192

valid_lft forever preferred_lft forever

inet6 fe80::a5f3:ea22:173f:9321/64 scope link noprefixroute

valid_lft forever preferred_lft forever
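In the two listings above, only directory1 carries 192.168.50.249, so it currently holds the VIP. For a failover test it can be handy to check this from a script. The sketch below is an illustration; the helper name `holds_vip` is made up, and it reads `ip a` output from stdin.

```shell
#!/usr/bin/env bash
# Hypothetical helper: exit 0 if the `ip a` output on stdin contains the
# given IPv4 address on any interface.
holds_vip() {
  local vip=$1
  awk -v v="$vip" '
    $1 == "inet" { split($2, a, "/"); if (a[1] == v) found = 1 }
    END { exit !found }
  '
}

# On a load balancer this would be:
#   ip a | holds_vip 192.168.50.249 && echo "this node holds the VIP"
```

Stopping keepalived on the node that holds the VIP and then running the check on the other node is one way to confirm that the address moves.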
