Images:

docker pull bde2020/hive-metastore-postgresql:2.3.0
docker pull bde2020/hive:latest

hive-mysql-dockerfile

# cat hive-site.xml

<?xml version="1.0" encoding="UTF-8" standalone="no"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?><!--
   Licensed to the Apache Software Foundation (ASF) under one or more
   contributor license agreements. See the NOTICE file distributed with
   this work for additional information regarding copyright ownership.
   The ASF licenses this file to You under the Apache License, Version 2.0
   (the "License"); you may not use this file except in compliance with
   the License. You may obtain a copy of the License at
       http://www.apache.org/licenses/LICENSE-2.0
   Unless required by applicable law or agreed to in writing, software
   distributed under the License is distributed on an "AS IS" BASIS,
   WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
   See the License for the specific language governing permissions and
   limitations under the License.
--><configuration>
<property><name>hive.metastore.uris</name><value>thrift://hive-metastore-svc:9083</value></property>
<property><name>datanucleus.autoCreateSchema</name><value>false</value></property>
<property><name>javax.jdo.option.ConnectionURL</name><value>jdbc:mysql://mysql-svc:3306/metastore</value></property>
<property><name>javax.jdo.option.ConnectionDriverName</name><value>com.mysql.jdbc.Driver</value></property>
<property><name>javax.jdo.option.ConnectionPassword</name><value>123456</value></property>
<property><name>javax.jdo.option.ConnectionUserName</name><value>root</value></property>
</configuration>

FROM bde2020/hive:2.3.2-postgresql-metastore
COPY mysql-connector-java-5.1.47.jar /opt/hive/lib/
COPY hive-site.xml /opt/hive/conf/
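The manifests pull every image from the private registry docker-registry-node:5000, so the custom metastore image built from the Dockerfile above and the upstream images have to be pushed there first. A minimal sketch, assuming the Dockerfile, hive-site.xml and mysql-connector-java-5.1.47.jar are in the current directory and that this host may push to the registry; push_images and the DRY_RUN switch are hypothetical helpers, not part of the original setup.

```shell
# Hypothetical helper: build/tag/push the images referenced by the manifests.
REGISTRY="docker-registry-node:5000"

# Run a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

push_images() {
  # Custom metastore image: bde2020 Hive + MySQL JDBC driver + hive-site.xml.
  run docker build -t "$REGISTRY/hive-mysql-metastore:v1" . || return 1
  run docker push "$REGISTRY/hive-mysql-metastore:v1" || return 1

  # Upstream images, re-tagged so the manifests can pull them from the private registry.
  for img in bde2020/hive:latest mysql:5.7.37; do
    target="$REGISTRY/${img#*/}"   # bde2020/hive:latest -> hive:latest
    run docker pull "$img" || return 1
    run docker tag "$img" "$target" || return 1
    run docker push "$target" || return 1
  done
}
```

DRY_RUN=1 push_images prints the eight commands for review; push_images on its own executes them and stops at the first failure.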

mysql-hive-metastore.yaml

apiVersion: v1
kind: ReplicationController
metadata:
  labels:
    app: hive-mysql-metastore
  name: hive-mysql-metastore
spec:
  replicas: 1
  selector:
    app: hive-mysql-metastore
  template:
    metadata:
      labels:
        app: hive-mysql-metastore
    spec:
      containers:
      - name: hive-mysql-metastore
        image: docker-registry-node:5000/mysql:5.7.37
        ports:
        - containerPort: 3306
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: "123456"
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: mysql-svc
  name: mysql-svc
spec:
  type: NodePort
  ports:
  - port: 3306
    targetPort: 3306
    nodePort: 32306
    name: mysql-port
  selector:
    app: hive-mysql-metastore

hive-config.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: hive-config
data:
  HIVE_SITE_CONF_javax_jdo_option_ConnectionURL: "jdbc:mysql://mysql-svc:3306/metastore"
  HIVE_SITE_CONF_javax_jdo_option_ConnectionDriverName: "com.mysql.jdbc.Driver"
  HIVE_SITE_CONF_javax_jdo_option_ConnectionUserName: "root"
  HIVE_SITE_CONF_javax_jdo_option_ConnectionPassword: "123456"
  HIVE_SITE_CONF_datanucleus_autoCreateSchema: "false"
  HIVE_SITE_CONF_hive_metastore_uris: "thrift://hive-metastore-svc:9083"
  HDFS_CONF_dfs_namenode_datanode_registration_ip___hostname___check: "false"
  CORE_CONF_fs_defaultFS: "hdfs://hadoop-hdfs-master:9000"
  CORE_CONF_hadoop_http_staticuser_user: "root"
  CORE_CONF_hadoop_proxyuser_hue_hosts: "*"
  CORE_CONF_hadoop_proxyuser_hue_groups: "*"
  HDFS_CONF_dfs_webhdfs_enabled: "true"
  HDFS_CONF_dfs_permissions_enabled: "false"
  YARN_CONF_yarn_log___aggregation___enable: "true"
  YARN_CONF_yarn_resourcemanager_recovery_enabled: "true"
  YARN_CONF_yarn_resourcemanager_store_class: "org.apache.hadoop.yarn.server.resourcemanager.recovery.FileSystemRMStateStore"
  YARN_CONF_yarn_resourcemanager_fs_state___store_uri: /rmstate
  YARN_CONF_yarn_nodemanager_remote___app___log___dir: /app-logs
  YARN_CONF_yarn_log_server_url: "http://historyserver:8188/applicationhistory/logs/"
  YARN_CONF_yarn_timeline___service_enabled: "true"
  YARN_CONF_yarn_timeline___service_generic___application___history_enabled: "true"
  YARN_CONF_yarn_resourcemanager_system___metrics___publisher_enabled: "true"
  YARN_CONF_yarn_resourcemanager_hostname: "hadoop-yarn-master"
  YARN_CONF_yarn_timeline___service_hostname: "historyserver"
  YARN_CONF_yarn_resourcemanager_address: "hadoop-yarn-master:8032"
  YARN_CONF_yarn_resourcemanager_scheduler_address: "hadoop-yarn-master:8030"
  YARN_CONF_yarn_resourcemanager_resource___tracker_address: "hadoop-yarn-master:8031"
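The ConfigMap entries reach the containers as environment variables through envFrom. As I understand the bde2020 images, their entrypoint turns each HIVE_SITE_CONF_*, CORE_CONF_*, HDFS_CONF_* and YARN_CONF_* variable into a property in hive-site.xml, core-site.xml, hdfs-site.xml or yarn-site.xml, converting the rest of the key by replacing ___ with -, __ with _ and each remaining _ with a dot. The function below is a hypothetical re-implementation of that naming rule for checking a key before adding it; it is not the image's own script.

```shell
# Hypothetical re-implementation of the bde2020 env-var naming rule:
#   ___ -> -    __ -> _    _ -> .
# "@" is a temporary placeholder so a literal "__" is not turned into "..".
conf_key_to_property() {
  case $1 in
    HIVE_SITE_CONF_*) key=${1#HIVE_SITE_CONF_} ;;
    CORE_CONF_*)      key=${1#CORE_CONF_} ;;
    HDFS_CONF_*)      key=${1#HDFS_CONF_} ;;
    YARN_CONF_*)      key=${1#YARN_CONF_} ;;
    *) echo "unknown prefix: $1" >&2; return 1 ;;
  esac
  printf '%s\n' "$key" | sed -e 's/___/-/g' -e 's/__/@/g' -e 's/_/./g' -e 's/@/_/g'
}
```

For example, conf_key_to_property HDFS_CONF_dfs_webhdfs_enabled prints dfs.webhdfs.enabled, and the triple underscores in YARN_CONF_yarn_log___aggregation___enable become the dashes of yarn.log-aggregation-enable.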

hive-metastore-statefulset.yaml

apiVersion: apps/v1
kind: StatefulSet
metadata:
  labels:
    app: hive-metastore
  name: hive-metastore
spec:
  replicas: 1
  selector:
    matchLabels:
      app: hive-metastore
  serviceName: "hive-metastore"
  template:
    metadata:
      labels:
        app: hive-metastore
    spec:
      containers:
      - image: docker-registry-node:5000/hive-mysql-metastore:v1
        name: hive-metastore
        ports:
        - containerPort: 9083
        ### Runs after the container starts (postStart hook)
        lifecycle:
          postStart:
            exec:
              command: ["/bin/bash", "-c", "/opt/hive/bin/hive --service metastore &"]
        envFrom:
        - configMapRef:
            name: hive-config
        env:
        - name: "TZ"
          value: "Asia/Shanghai"
        - name: "SERVICE_PRECONDITION"
          value: "hadoop-hdfs-master:50070 mysql-svc:3306"
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: hive-metastore
  name: hive-metastore-svc
spec:
  type: NodePort
  ports:
  - port: 9083
    targetPort: 9083
    nodePort: 31083
    name: hive-metastore-port
  selector:
    app: hive-metastore
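SERVICE_PRECONDITION is read by the bde2020 entrypoint, which, as I understand it, waits until every space-separated host:port in the list accepts TCP connections before running the container's main command. The function below is a simplified, hypothetical bash sketch of that behaviour: it opens bash's /dev/tcp instead of calling nc, and the retry count and interval defaults are arbitrary. It is not the image's actual script.

```shell
# Requires bash (/dev/tcp). Hypothetical sketch of a SERVICE_PRECONDITION-style wait.
# Usage: wait_for_services "hadoop-hdfs-master:50070 mysql-svc:3306" [max_tries] [interval_s]
wait_for_services() {
  local list=$1 max_tries=${2:-100} interval=${3:-5}
  local target host port i
  for target in $list; do
    host=${target%%:*}
    port=${target##*:}
    i=1
    # Opening /dev/tcp/HOST/PORT in a subshell succeeds only if the connection is accepted.
    until (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null; do
      if [ "$i" -ge "$max_tries" ]; then
        echo "$host:$port still unavailable after $max_tries tries" >&2
        return 1
      fi
      echo "[$i/$max_tries] $host:$port not available yet, retrying in ${interval}s"
      i=$((i + 1))
      sleep "$interval"
    done
    echo "$host:$port is available"
  done
}
```

Note that the postStart hook above is not gated by this wait: Kubernetes runs it right after the container is created, independently of the entrypoint.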

hive-service-statefulset.yaml

apiVersion: apps/v1
kind: StatefulSet
metadata:
  labels:
    app: hive-service
  name: hive-service
spec:
  replicas: 1
  selector:
    matchLabels:
      app: hive-service
  serviceName: "hive-service"
  template:
    metadata:
      labels:
        app: hive-service
    spec:
      containers:
      - image: docker-registry-node:5000/hive:latest
        name: hive-service
        envFrom:
        - configMapRef:
            name: hive-config
        env:
        - name: "TZ"
          value: "Asia/Shanghai"
        - name: "SERVICE_PRECONDITION"
          value: "hive-metastore-svc:9083"
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: hive-service
  name: hive-service-svc
spec:
  type: NodePort
  ports:
  - port: 10000
    nodePort: 32000
    name: service-port
  selector:
    app: hive-service
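The manifests are linked by Service names (mysql-svc, hive-metastore-svc) and by the hive-config ConfigMap, which has to exist before any pod that references it through envFrom can start. A minimal sketch of one workable apply order, assuming the default namespace and the file names used above; deploy_hive and DRY_RUN are hypothetical helpers, and the 300s rollout timeout is an arbitrary choice.

```shell
# Run a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

deploy_hive() {
  # ConfigMap first: pods that reference it via envFrom cannot start without it.
  for f in hive-config.yaml mysql-hive-metastore.yaml \
           hive-metastore-statefulset.yaml hive-service-statefulset.yaml; do
    run kubectl apply -f "$f" || return 1
  done
  # Block until both StatefulSets report their replicas ready.
  run kubectl rollout status statefulset/hive-metastore --timeout=300s || return 1
  run kubectl rollout status statefulset/hive-service --timeout=300s
}
```

The MySQL ReplicationController is not waited on here because kubectl rollout status does not cover that kind; the SERVICE_PRECONDITION check on mysql-svc:3306 covers the ordering instead.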

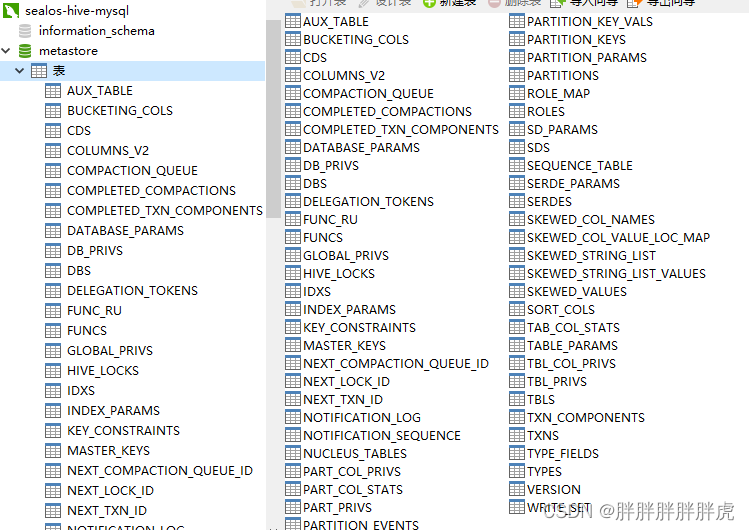

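Initialize the metastore schema

Note: the hive-metastore pod does not initialize the metastore schema automatically (hive-site.xml sets datanucleus.autoCreateSchema to false). First create the MySQL database metastore, then run schematool -dbType mysql -initSchema in the hive-metastore-0 pod. In the transcript below, the first attempt fails with Unknown database 'metastore' because the database did not exist yet; the second attempt, after it was created, succeeds.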
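The two steps from the note can be scripted roughly as follows. This is a sketch assuming the default namespace, a kubectl version that accepts svc/NAME as the target of kubectl exec (it picks the first pod behind the Service), and the root password 123456 from the MySQL manifest; init_metastore and DRY_RUN are hypothetical helpers. CREATE DATABASE IF NOT EXISTS makes the first step safe to repeat, whereas schematool -initSchema is expected to fail if the schema already exists.

```shell
# Run a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

init_metastore() {
  # 1) Create the empty database; without it schematool fails with
  #    "Unknown database 'metastore'" (SQL error 1049).
  run kubectl exec svc/mysql-svc -- \
    mysql -uroot -p123456 -e "CREATE DATABASE IF NOT EXISTS metastore;" || return 1
  # 2) Create the Hive metastore tables in it (run once, on an empty database).
  run kubectl exec hive-metastore-0 -- \
    /opt/hive/bin/schematool -dbType mysql -initSchema
}
```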

root@hive-metastore-0:/opt/hive/bin# schematool -dbType mysql -initSchema
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/opt/hive/lib/log4j-slf4j-impl-2.6.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/opt/hadoop-2.7.4/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]
Metastore connection URL: jdbc:mysql://mysql-svc:3306/metastore
Metastore Connection Driver : com.mysql.jdbc.Driver
Metastore connection User: root
Tue May 24 15:55:07 CST 2022 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.
org.apache.hadoop.hive.metastore.HiveMetaException: Failed to get schema version.
Underlying cause: com.mysql.jdbc.exceptions.jdbc4.MySQLSyntaxErrorException : Unknown database 'metastore'
SQL Error code: 1049
Use --verbose for detailed stacktrace.
*** schemaTool failed ***

root@hive-metastore-0:/opt/hive/bin# schematool -dbType mysql -initSchema
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/opt/hive/lib/log4j-slf4j-impl-2.6.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/opt/hadoop-2.7.4/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]
Metastore connection URL: jdbc:mysql://mysql-svc:3306/metastore
Metastore Connection Driver : com.mysql.jdbc.Driver
Metastore connection User: root
Tue May 24 15:55:53 CST 2022 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.
Starting metastore schema initialization to 2.3.0
Initialization script hive-schema-2.3.0.mysql.sql
Tue May 24 15:55:53 CST 2022 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.
Initialization script completed
Tue May 24 15:55:55 CST 2022 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.
schemaTool completed
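The repeated WARN lines come from MySQL Connector/J because the JDBC URL does not set useSSL, and the message itself suggests setting useSSL=false. One way to do that, sketched here as an assumption rather than something the original setup did, is to patch the ConnectionURL entry in the ConfigMap and recreate the metastore pod, since envFrom values are only read when a container starts. This relies on the env-derived property taking precedence over the copy of hive-site.xml baked into the image; if it does not, the same change has to go into hive-site.xml and the image rebuilt. disable_mysql_ssl and DRY_RUN are hypothetical helpers.

```shell
# Run a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

disable_mysql_ssl() {
  # Set useSSL explicitly in the JDBC URL so Connector/J stops warning.
  run kubectl patch configmap hive-config --type merge \
    -p '{"data":{"HIVE_SITE_CONF_javax_jdo_option_ConnectionURL":"jdbc:mysql://mysql-svc:3306/metastore?useSSL=false"}}' || return 1
  # Environment variables are read only at container start, so recreate the pod;
  # the StatefulSet controller brings hive-metastore-0 back automatically.
  run kubectl delete pod hive-metastore-0
}
```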

# kubectl exec -it hive-server-0 -- hive
Error from server (NotFound): pods "hive-server-0" not found
-bash-4.2# kubectl exec -it hive-service-0 -- hive
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/opt/hive/lib/log4j-slf4j-impl-2.6.2.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/opt/hadoop-2.7.4/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]
Logging initialized using configuration in file:/opt/hive/conf/hive-log4j2.properties Async: true
Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.
hive> show databases;
OK
default
Time taken: 1.02 seconds, Fetched: 1 row(s)
hive>
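The hive CLI session above talks to the metastore directly and does not go through HiveServer2. To check HiveServer2 itself (port 10000 in the hive-service pod, exposed as NodePort 32000 by hive-service-svc), Beeline can be used. A sketch assuming the bde2020/hive image starts HiveServer2 as its default command, a local beeline installation for the external check, and NODE_IP as a placeholder for any node's address; the function names and DRY_RUN are hypothetical.

```shell
# Run a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then echo "$*"; else "$@"; fi
}

# In-cluster check: Beeline inside the HiveServer2 pod, connecting to localhost.
check_hs2_in_pod() {
  run kubectl exec hive-service-0 -- \
    /opt/hive/bin/beeline -u jdbc:hive2://localhost:10000 -e "show databases;"
}

# External check through NodePort 32000; NODE_IP is a placeholder.
check_hs2_nodeport() {
  if [ -z "${NODE_IP:-}" ]; then
    echo "set NODE_IP to the address of a cluster node" >&2
    return 1
  fi
  run beeline -u "jdbc:hive2://$NODE_IP:32000" -e "show databases;"
}
```

If HiveServer2 is up, both checks should list the same default database as the hive CLI session.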
