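The previous article, "Flink CEP Source Code Analysis" (《Flink Cep 源码分析》), covered how Flink CEP creates a Pattern, how states transition, and how match results are produced. This article extends Flink CEP to address two of its pain points:

1. Rules cannot be updated dynamically

2. within() between Patterns is not supported

The approach to these two problems:

Dynamic rule updates: rule definitions are stored in MySQL, ZooKeeper notifies the job when a rule changes, and JaninoCompiler executes the dynamic rules (replacing groovy + aviator).

within() between Patterns: a timeout is set between two Patterns in CEP. A new WithinType enum (PREVIOUS_AND_CURRENT, FIRST_AND_LAST) distinguishes an interval timeout (between the previous and the current event) from a global timeout (between the first and the last event). Reference: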

FLIP-228: Support Within between events in CEP Pattern - Apache Flink - Apache Software Foundation
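To make the difference between the two window types concrete, here is a minimal plain-Java sketch that does not depend on Flink. The class WithinSemanticsSketch and the method satisfies are hypothetical names used only for illustration, the enum is re-declared so the snippet compiles on its own, and treating a gap exactly equal to the window as a timeout is an assumption.

```java
import java.util.Arrays;
import java.util.List;

// Re-declared here only so that the sketch compiles without the patched Flink classes.
enum WithinType { PREVIOUS_AND_CURRENT, FIRST_AND_LAST }

final class WithinSemanticsSketch {

    /**
     * Returns true when the event timestamps (ascending, in milliseconds)
     * stay inside the window of the given type.
     */
    static boolean satisfies(WithinType type, List<Long> timestamps, long windowMillis) {
        if (timestamps.size() < 2) {
            return true; // a single event cannot violate a window
        }
        if (type == WithinType.FIRST_AND_LAST) {
            // Global timeout: compare the first and the last event.
            long span = timestamps.get(timestamps.size() - 1) - timestamps.get(0);
            return span < windowMillis;
        }
        // Interval timeout: compare every event with the one before it.
        for (int i = 1; i < timestamps.size(); i++) {
            if (timestamps.get(i) - timestamps.get(i - 1) >= windowMillis) {
                return false;
            }
        }
        return true;
    }

    public static void main(String[] args) {
        List<Long> ts = Arrays.asList(0L, 4_000L, 8_000L);
        // Each gap is 4 s, so a 5 s interval window holds.
        System.out.println(satisfies(WithinType.PREVIOUS_AND_CURRENT, ts, 5_000L)); // true
        // First to last is 8 s, so a 5 s global window is exceeded.
        System.out.println(satisfies(WithinType.FIRST_AND_LAST, ts, 5_000L)); // false
    }
}
```

The same three events pass one check and fail the other, which is why the Pattern needs to record which kind of window each within() call refers to.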

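Dynamic rule updates have already been covered in articles by many experienced developers. My implementation borrows from their ideas and adds some of my own. See this article by 啤酒鸭:

Flink CEP dynamic templates + dynamic modification of CEP rules in practice (Flink cep动态模板+cep规则动态修改实践), 黄瓜炖啤酒鸭's blog on CSDN

If you would like to know how I implemented it, leave a comment and I will write a separate article covering it in detail. This article focuses on how within() between Patterns is implemented.

1. Example code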

import org.apache.flink.api.common.eventtime.WatermarkStrategy;

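import org.apache.flink.api.java.tuple.Tuple3;

import org.apache.flink.cep.CEP;

import org.apache.flink.cep.PatternSelectFunction;

import org.apache.flink.cep.PatternStream;

import org.apache.flink.cep.PatternTimeoutFunction;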

import org.apache.flink.cep.condition.Begincondition;

import org.apache.flink.cep.condition.Endcondition;

import org.apache.flink.cep.condition.Middlecondition;

import org.apache.flink.cep.cus.WithinType;

import org.apache.flink.cep.pattern.Pattern;

import org.apache.flink.streaming.api.TimeCharacteristic;

import org.apache.flink.streaming.api.datastream.KeyedStream;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.streaming.api.windowing.time.Time;

import org.apache.flink.util.OutputTag;

import java.time.Duration;

import java.util.Map;

public class FlinkCepTest {

public static void main(String[] args) throws Exception {

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.setStreamTimeCharacteristic(TimeCharacteristic.EventTime);

env.setParallelism(1);

// Data source

KeyedStream<Tuple3<String, Long, String>, String> source = env.fromElements(

new Tuple3<String, Long, String>("1001", 1656914303000L, "success")

, new Tuple3<String, Long, String>("1001", 1656914304000L, "fail")

, new Tuple3<String, Long, String>("1001", 1656914305000L, "fail")

, new Tuple3<String, Long, String>("1001", 1656914306000L, "success")

, new Tuple3<String, Long, String>("1001", 1656914307000L, "end")

, new Tuple3<String, Long, String>("1001", 1656914308000L, "success")

, new Tuple3<String, Long, String>("1001", 1656914309000L, "fail")

, new Tuple3<String, Long, String>("1001", 1656914310000L, "success")

, new Tuple3<String, Long, String>("1001", 1656914311000L, "fail")

, new Tuple3<String, Long, String>("1001", 1656914312000L, "fail")

, new Tuple3<String, Long, String>("1001", 1656914313000L, "success")

, new Tuple3<String, Long, String>("1001", 1656914316000L, "end")

).assignTimestampsAndWatermarks(WatermarkStrategy

.<Tuple3<String, Long, String>>forBoundedOutOfOrderness(Duration.ofSeconds(1))

.withTimestampAssigner((event, timestamp) ->{

return event.f1;

}))

.keyBy(e -> e.f0);

Pattern<Tuple3<String, Long, String>,?> pattern = Pattern

.<Tuple3<String, Long, String>>begin("begin")

.where(new Begincondition())

.followedByAny("middle")

.where(new Middlecondition())

.within(WithinType.PREVIOUS_AND_CURRENT, Time.seconds(5))

.followedBy("end")

.where(new Endcondition())

.within(WithinType.PREVIOUS_AND_CURRENT, Time.seconds(5))

;

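// Internally builds a PatternStreamBuilder and returns a PatternStream

PatternStream<Tuple3<String, Long, String>> patternStream = CEP.pattern(source, pattern);

// Side output that receives partial matches that timed out

OutputTag<Map> outputTag =

new OutputTag<Map>("exec-timeout") {};

SingleOutputStreamOperator<Map> select = patternStream.select(outputTag, new PatternTimeoutFunction<Tuple3<String, Long, String>, Map>() {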

@Override

public Map timeout(Map map, long timeoutTimestamp) throws Exception {

return map;

}

}, new PatternSelectFunction<Tuple3<String, Long, String>, Map>() {

@Override

public Map select(Map map) throws Exception {

return map;

}

});

select.print("normal");

select.getSideOutput(outputTag).print("timeout");

env.execute("cep");

}

}

2. Implementation

2.1 First, create an enum that indicates whether a given within() is a global timeout or an interval timeout

public enum WithinType {

// Interval corresponds to the maximum time gap between the previous and current event.

PREVIOUS_AND_CURRENT,

// Interval corresponds to the maximum time gap between the first and last event.

FIRST_AND_LAST;

}

2.2 Next, add a field to the org.apache.flink.cep.nfa.State class that holds the length of the interval timeout

public class State<T> implements Serializable {

private static final long serialVersionUID = 6658700025989097781L;

private final String name;

private final Long previousWindowTime;

private StateType stateType;

private final Collection<StateTransition<T>> stateTransitions;

public State(final String name,final Long previousWindowTime, final StateType stateType) {

this.name = name;

this.previousWindowTime = previousWindowTime;

this.stateType = stateType;

stateTransitions = new ArrayList<>();

}

.

.

.

}

2.3 As covered in the previous article, org.apache.flink.cep.nfa.compiler.NFACompiler.NFAFactoryCompiler#compileFactory() creates the states that correspond to a Pattern, so previousWindowTime has to be set when each state is created. Modify the creation method:

private State<T> createState(String name, Long previousWindowTime, State.StateType stateType) {

String stateName = stateNameHandler.getUniqueInternalName(name);

State<T> state = new State<>(stateName, previousWindowTime, stateType);

states.add(state);

return state;

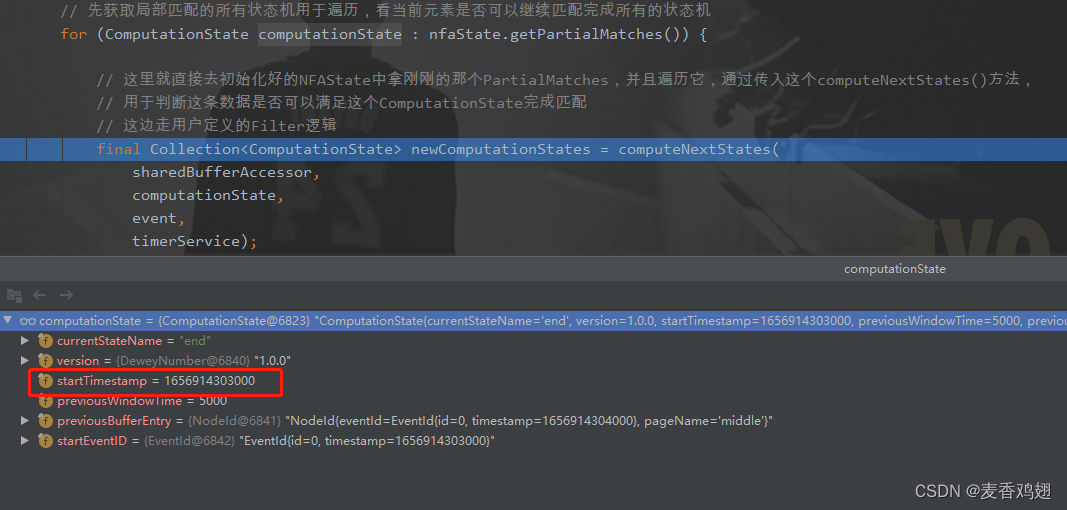

}2.4 org.apache.flink.cep.nfa.NFA#computeNextStates() 方法中会根据当前的state计算出下一个state是什么。之后调用org.apache.flink.cep.nfa.NFA#addComputationState()创建新的computationState。

private void addComputationState(

SharedBufferAccessor<T> sharedBufferAccessor,

List<ComputationState> computationStates,

State<T> currentState,

NodeId previousEntry,

DeweyNumber version,

long startTimestamp,

EventId startEventId) throws Exception {

ComputationState computationState = ComputationState.createState(

currentState.getName(), previousEntry, version, startTimestamp, currentState.getPreviousWindowTime(), startEventId);

computationStates.add(computationState);

sharedBufferAccessor.lockNode(previousEntry);

}

2.5 When data is processed, you can then see that the in-flight computationState contains the previousWindowTime field.
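The modified ComputationState itself is not listed in this article. As a rough sketch of what carrying the extra field might look like, the following plain-Java stand-in reduces NodeId, DeweyNumber and EventId to simple values so that it compiles without Flink. The class name ComputationStateSketch and the field previousEventTimestamp are hypothetical. Only the position of previousWindowTime, right after startTimestamp, follows the createState(...) call shown in 2.4.

```java
import java.util.Objects;

// Simplified stand-in for org.apache.flink.cep.nfa.ComputationState (assumption:
// NodeId, DeweyNumber and EventId are replaced by plain values for this sketch).
final class ComputationStateSketch {

    private final String currentStateName;
    private final long previousEventTimestamp; // stands in for previousBufferEntry
    private final long startTimestamp;         // timestamp of the first matched event
    private final Long previousWindowTime;     // interval timeout in ms, null when none is set

    private ComputationStateSketch(
            String currentStateName,
            long previousEventTimestamp,
            long startTimestamp,
            Long previousWindowTime) {
        this.currentStateName = currentStateName;
        this.previousEventTimestamp = previousEventTimestamp;
        this.startTimestamp = startTimestamp;
        this.previousWindowTime = previousWindowTime;
    }

    // previousWindowTime is passed right after startTimestamp, as in addComputationState().
    static ComputationStateSketch createState(
            String currentState,
            long previousEventTimestamp,
            long startTimestamp,
            Long previousWindowTime) {
        return new ComputationStateSketch(
                currentState, previousEventTimestamp, startTimestamp, previousWindowTime);
    }

    String getCurrentStateName() { return currentStateName; }
    long getPreviousEventTimestamp() { return previousEventTimestamp; }
    long getStartTimestamp() { return startTimestamp; }
    Long getPreviousWindowTime() { return previousWindowTime; }

    // The new field takes part in equality so that two partial matches that
    // differ only in their interval timeout are not treated as the same state.
    @Override
    public boolean equals(Object o) {
        if (this == o) return true;
        if (!(o instanceof ComputationStateSketch)) return false;
        ComputationStateSketch other = (ComputationStateSketch) o;
        return previousEventTimestamp == other.previousEventTimestamp
                && startTimestamp == other.startTimestamp
                && Objects.equals(currentStateName, other.currentStateName)
                && Objects.equals(previousWindowTime, other.previousWindowTime);
    }

    @Override
    public int hashCode() {
        return Objects.hash(
                currentStateName, previousEventTimestamp, startTimestamp, previousWindowTime);
    }

    public static void main(String[] args) {
        ComputationStateSketch state = createState("middle", 1_000L, 0L, 5_000L);
        System.out.println(state.getPreviousWindowTime()); // 5000
    }
}
```

One thing worth checking in a real patch, which this article does not show: Flink writes ComputationState through its own serializer when the NFA state is persisted, so the new field presumably has to be written and read there as well, otherwise it would not survive a restore.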

2.6 In org.apache.flink.cep.nfa.NFA#advanceTime(), where timeouts are handled, check both the global timeout and the interval timeout, using isStateTimedOut() and isStatePreTimedOut().

public Collection<Tuple2<Map<String, List<T>>, Long>> advanceTime(

final SharedBufferAccessor<T> sharedBufferAccessor,

final NFAState nfaState,

final long timestamp) throws Exception {

final Collection<Tuple2<Map<String, List<T>>, Long>> timeoutResult = new ArrayList<>();

final PriorityQueue<ComputationState> newPartialMatches = new PriorityQueue<>(NFAState.COMPUTATION_STATE_COMPARATOR);

for (ComputationState computationState : nfaState.getPartialMatches()) {

if (isStateTimedOut(computationState, timestamp)) {

if (handleTimeout) {

// extract the timed out event pattern

Map<String, List<T>> timedOutPattern = sharedBufferAccessor.materializeMatch(extractCurrentMatches(

sharedBufferAccessor,

computationState));

timeoutResult.add(Tuple2.of(timedOutPattern, computationState.getStartTimestamp() + windowTime));

}

sharedBufferAccessor.releaseNode(computationState.getPreviousBufferEntry());

nfaState.setStateChanged();

} else if (isStatePreTimedOut(computationState, timestamp)) {

if (handleTimeout) {

// extract the timed out event pattern

Map<String, List<T>> timedOutPattern = sharedBufferAccessor.materializeMatch(extractCurrentMatches(

sharedBufferAccessor,

computationState));

long previousTimestamp = computationState.getPreviousBufferEntry().getEventId().getTimestamp();

timeoutResult.add(Tuple2.of(timedOutPattern, previousTimestamp + computationState.getPreviousWindowTime()));

}

sharedBufferAccessor.releaseNode(computationState.getPreviousBufferEntry());

nfaState.setStateChanged();

} else {

newPartialMatches.add(computationState);

}

}

nfaState.setNewPartialMatches(newPartialMatches);

sharedBufferAccessor.advanceTime(timestamp);

return timeoutResult;

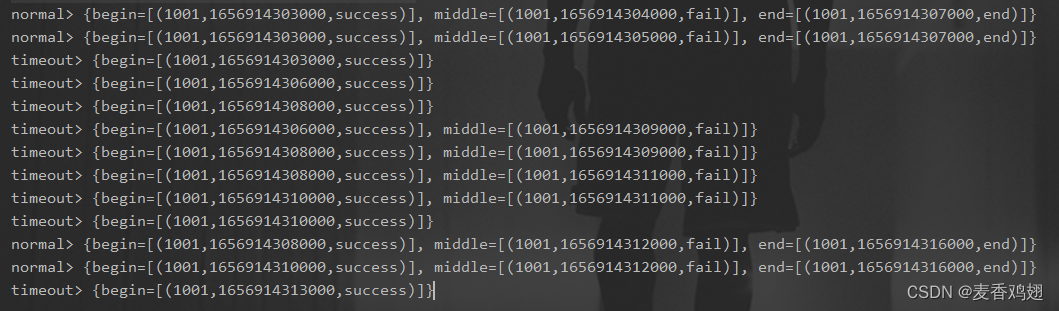

}2.7 查看超时数据
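When reading the records on the timeout side output, it helps to know how the reported timeout timestamp is derived. The advanceTime() listing in 2.6 calls isStateTimedOut() and isStatePreTimedOut(), but neither method is listed in this article. The sketch below is one plausible shape for them in plain Java, not the actual patch: the class name TimeoutCheckSketch, the method timeoutTimestamp and all parameter names are assumptions, and the start-state check that the NFA would also need is left out.

```java
import java.util.OptionalLong;

final class TimeoutCheckSketch {

    // Assumed global check: the time since the first event has reached windowTime.
    static boolean isStateTimedOut(long startTimestamp, long windowTime, long timestamp) {
        return windowTime > 0L && timestamp - startTimestamp >= windowTime;
    }

    // Assumed interval check: the time since the previous event has reached previousWindowTime.
    static boolean isStatePreTimedOut(
            long previousTimestamp, Long previousWindowTime, long timestamp) {
        return previousWindowTime != null
                && previousWindowTime > 0L
                && timestamp - previousTimestamp >= previousWindowTime;
    }

    /**
     * Follows the branch order of advanceTime() in 2.6: global timeout first,
     * then interval timeout. Returns the timestamp that would be reported with
     * the timed-out partial match, or empty if the match is still alive.
     */
    static OptionalLong timeoutTimestamp(
            long startTimestamp,
            long windowTime,
            long previousTimestamp,
            Long previousWindowTime,
            long timestamp) {
        if (isStateTimedOut(startTimestamp, windowTime, timestamp)) {
            return OptionalLong.of(startTimestamp + windowTime);
        }
        if (isStatePreTimedOut(previousTimestamp, previousWindowTime, timestamp)) {
            return OptionalLong.of(previousTimestamp + previousWindowTime);
        }
        return OptionalLong.empty();
    }

    public static void main(String[] args) {
        // Hypothetical partial match: first event at ...303000, latest event at ...304000,
        // no global window (0) and a 5 s interval window.
        long start = 1656914303000L;
        long previous = 1656914304000L;
        // 4 s after the latest event: still alive.
        System.out.println(timeoutTimestamp(start, 0L, previous, 5_000L, 1656914308000L));
        // 5 s after the latest event: times out at previous + 5000.
        System.out.println(timeoutTimestamp(start, 0L, previous, 5_000L, 1656914309000L));
    }
}
```

Under these assumptions, a record on the timeout side output carries either startTimestamp + windowTime (global timeout) or the previous event's timestamp + previousWindowTime (interval timeout), which matches the two Tuple2.of(...) calls in advanceTime().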
