1. Preface

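First, thanks to Wang Hao (@二代) for the book; he is leaving for Alibaba, and I wish him fortune and success. Back to the topic: I spent a few days, on and off, reading Kafka's NIO-related code and still found it hard going, mainly because I had forgotten the low-level NIO details. In practice one usually relies on a framework such as Netty to hide those details, so that the upper layer only has to listen on a port, establish connections, accept requests, process them, and write responses. Why did Kafka wrap NIO itself instead of using Netty? An explanation I found online: "Performance and no dependency-hell is great!" In short, performance and a wish to avoid pulling in too many dependencies. It also shows how capable Kafka's authors are.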

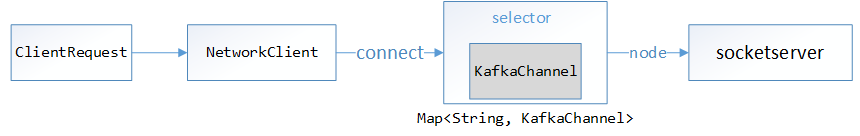

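The basic building blocks of Java NIO are Selector, Channel, and ByteBuffer. Kafka wraps them in its own classes, described below.

If you have forgotten NIO, I recommend reading these first: https://www.jianshu.com/p/0d497fe5484a

https://segmentfault.com/a/1190000012316621

If you are already familiar with it, skip ahead.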

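2. Network classes

Selectable is the interface for network operations, and Selector is its concrete implementation (covered in detail later). It covers sending requests, receiving responses, establishing connections, and closing connections.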

/**

* An interface for asynchronous, multi-channel network I/O

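* Selectable is the interface for Kafka's NIO network operations.

* Selector is the concrete implementation, covering sending requests, receiving responses, connecting, and disconnecting.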

*/

public interface Selectable {

...

}

/*

* Kafka's wrapper around the Java NIO interfaces; implements Selectable. Responsible for the actual connect, write, and read operations.

*/

public class Selector implements Selectable, AutoCloseable {

public static final long NO_IDLE_TIMEOUT_MS = -1;

public static final int NO_FAILED_AUTHENTICATION_DELAY = 0;

private enum CloseMode {

GRACEFUL(true), // process outstanding staged receives, notify disconnect

NOTIFY_ONLY(true), // discard any outstanding receives, notify disconnect

DISCARD_NO_NOTIFY(false); // discard any outstanding receives, no disconnect notification

boolean notifyDisconnect;

CloseMode(boolean notifyDisconnect) {

this.notifyDisconnect = notifyDisconnect;

}

}

private final Logger log;

// the underlying java.nio Selector, used to listen for network I/O events

private final java.nio.channels.Selector nioSelector;

// maps each connection (node) id to its KafkaChannel

private final Map<String, KafkaChannel> channels;

private final Set<KafkaChannel> explicitlyMutedChannels;

// set when the memory pool is exhausted and channels were muted because of memory pressure

private boolean outOfMemory;

// sends that have been completely written

private final List<Send> completedSends;

// receives that have been completely read

private final List<NetworkReceive> completedReceives;

// staging area for all complete receives read while handling OP_READ events

private final Map<KafkaChannel, Deque<NetworkReceive>> stagedReceives;

// as a client: keys of connections for which SocketChannel#connect returned true immediately

private final Set<SelectionKey> immediatelyConnectedKeys;

// channels that are in the process of closing

private final Map<String, KafkaChannel> closingChannels;

private Set<SelectionKey> keysWithBufferedRead;

// connections found to be disconnected during one poll

private final Map<String, ChannelState> disconnected;

// connections newly established during one poll

private final List<String> connected;

// nodes for which a send failed

private final List<String> failedSends;

private final Time time;

private final SelectorMetrics sensors;

// builder used to create KafkaChannel instances

private final ChannelBuilder channelBuilder;

// maximum size of a single receive

private final int maxReceiveSize;

private final boolean recordTimePerConnection;

private final IdleExpiryManager idleExpiryManager;

private final LinkedHashMap<String, DelayedAuthenticationFailureClose> delayedClosingChannels;

private final MemoryPool memoryPool;

private final long lowMemThreshold;

private final int failedAuthenticationDelayMs;

//indicates if the previous call to poll was able to make progress in reading already-buffered data.

//this is used to prevent tight loops when memory is not available to read any more data

private boolean madeReadProgressLastPoll = true;

Send is the interface for data to be sent. Implementations provide completed() to report whether the data has been fully sent, and writeTo() to write it to a channel.

/**

* This interface models the in-progress sending of data to a specific destination

* Interface for data being sent

*/

public interface Send {

/**

* The id for the destination of this send

*/

String destination();

/**

* Is this send complete?

*/

boolean completed();

/**

* Write some as-yet unwritten bytes from this send to the provided channel. It may take multiple calls for the send

* to be completely written

* @param channel The Channel to write to

* @return The number of bytes written

* @throws IOException If the write fails

*/

long writeTo(GatheringByteChannel channel) throws IOException;

/**

* Size of the send

*/

long size();

}

The Send interface has several implementations, such as RecordsSend, MultiRecordsSend, and ByteBufferSend. Take ByteBufferSend as an example:

/**

* A send backed by an array of byte buffers

*/

public class ByteBufferSend implements Send {

// id of the destination (the target node/connection)

private final String destination;

// total size in bytes of the buffers

private final int size;

// the content to send (size is an int, so a single send is bounded in size)

protected final ByteBuffer[] buffers;

private int remaining;

private boolean pending = false;

// constructor

public ByteBufferSend(String destination, ByteBuffer... buffers) {

this.destination = destination;

this.buffers = buffers;

for (ByteBuffer buffer : buffers)

remaining += buffer.remaining();

// size is the total number of bytes remaining in the buffers

this.size = remaining;

}

@Override

public String destination() {

return destination;

}

// nothing left to send

@Override

public boolean completed() {

return remaining <= 0 && !pending;

}

@Override

public long size() {

return this.size;

}

// write the bytes to the channel

@Override

public long writeTo(GatheringByteChannel channel) throws IOException {

// the write is delegated to channel.write, which decouples Kafka's Send from the channel (neither depends on the other's concrete implementation)

long written = channel.write(buffers);

if (written < 0)

throw new EOFException("Wrote negative bytes to channel. This shouldn't happen.");

remaining -= written;

// after each write, check whether the transport layer still has pending writes

pending = TransportLayers.hasPendingWrites(channel);

return written;

}

}

NetworkSend extends ByteBufferSend and prepends 4 bytes holding the content size (which does not include those 4 bytes); see the comments.

/**

* A size delimited Send that consists of a 4 byte network-ordered size N followed by N bytes of content

* Extends ByteBufferSend and prepends 4 bytes holding the content size (not counting those 4 bytes).

*/

public class NetworkSend extends ByteBufferSend {

public NetworkSend(String destination, ByteBuffer buffer) {

// destination is the id of the target broker connection

// buffer is the message to transmit

super(destination, sizeDelimit(buffer));

}

private static ByteBuffer[] sizeDelimit(ByteBuffer buffer) {

// creates a new buffer whose content is the size of the argument buffer

return new ByteBuffer[] {sizeBuffer(buffer.remaining()), buffer};

}

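// the byte stream can be seen as: buffer = buffer1 + buffer2

// buffer2 is the message sent to the broker; buffer1 is a 4-byte number holding the size of buffer2

// when the broker reads the stream, it first takes the first four bytes to learn how many bytes follow, then reads them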

private static ByteBuffer sizeBuffer(int size) {

ByteBuffer sizeBuffer = ByteBuffer.allocate(4);

// writes the 4 bytes of size at the current position using the buffer's byte order (big-endian by default), then advances the position by 4

sizeBuffer.putInt(size);

sizeBuffer.rewind();

return sizeBuffer;

}

}
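The framing that NetworkSend applies can be illustrated in isolation. The following is a minimal sketch, not Kafka's code: the class SizeDelimitedFraming and its methods frame and unframe are hypothetical names introduced here, and the sketch assumes the whole frame is already available in a single buffer.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

// Hypothetical helper, not part of Kafka: shows the "4-byte size + N bytes" layout.
final class SizeDelimitedFraming {

    // Prepend a 4-byte big-endian length (not counting itself) to the payload.
    static ByteBuffer frame(ByteBuffer payload) {
        ByteBuffer framed = ByteBuffer.allocate(4 + payload.remaining());
        framed.putInt(payload.remaining());   // ByteBuffer is big-endian by default
        framed.put(payload.duplicate());      // duplicate() leaves the caller's position untouched
        framed.flip();
        return framed;
    }

    // Read one frame: first the 4-byte size, then exactly that many bytes.
    static byte[] unframe(ByteBuffer framed) {
        int size = framed.getInt();
        byte[] body = new byte[size];
        framed.get(body);
        return body;
    }

    public static void main(String[] args) {
        ByteBuffer payload = ByteBuffer.wrap("hello".getBytes(StandardCharsets.UTF_8));
        ByteBuffer framed = frame(payload);
        System.out.println("framed bytes: " + framed.remaining());
        System.out.println("body: " + new String(unframe(framed), StandardCharsets.UTF_8));
    }
}
```

Unlike this sketch, NetworkSend keeps the size buffer and the payload as two separate buffers and hands both to a gathering write, which avoids copying the payload.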

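Receive is the counterpart of Send and represents data read from a channel. NetworkReceive implements the Receive interface; it wraps a ByteBuffer and represents the data of one request or response. When NetworkReceive reads from a connection, it first reads the 4-byte header that holds the message length, then allocates a ByteBuffer of that size and reads the message body.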

/**

* This interface models the in-progress reading of data from a channel to a source identified by an integer id

* Data read from a channel

*/

public interface Receive extends Closeable {

...

}

/**

* A size delimited Receive that consists of a 4 byte network-ordered size N followed by N bytes of content

*/

public class NetworkReceive implements Receive {

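As noted above, Kafka's Selector wraps nioSelector, while connection-level operations are carried out by the channel. KafkaChannel wraps not only the SocketChannel but also Kafka's own Authenticator and the read/write objects NetworkReceive and Send. There is one more layer of indirection in between, TransportLayer, which hides the difference between plaintext and encrypted channels: TransportLayer has a different implementation per network protocol while presenting a uniform interface to KafkaChannel. This is a good application of the strategy pattern.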

public class KafkaChannel {

/**

* Mute States for KafkaChannel:

*/

....

private final String id;

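// wraps the underlying SocketChannel and SelectionKey; TransportLayer has a different implementation per network protocol,

// PlaintextTransportLayer and SslTransportLayer, while presenting a uniform interface to KafkaChannel: a good use of the strategy pattern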

private final TransportLayer transportLayer;

private final Authenticator authenticator;

// Tracks accumulated network thread time. This is updated on the network thread.

// The values are read and reset after each response is sent.

private long networkThreadTimeNanos;

private final int maxReceiveSize;

private final MemoryPool memoryPool;

private NetworkReceive receive;

private Send send;

// Track connection and mute state of channels to enable outstanding requests on channels to be

// processed after the channel is disconnected.

private boolean disconnected;

private ChannelMuteState muteState;

private ChannelState state;

private SocketAddress remoteAddress;

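These are the main classes of the network layer. Image source: https://blog.csdn.net/chunlongyu/article/details/52651960

With this diagram things become much clearer. On first reading there seemed to be far too many classes, with complicated wrapping and deep call hierarchies; it gets easier after a few more passes. Thanks to the original author.

3. Send flow

KafkaProducer.doSend();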

Sender.run();

NetworkClient.poll() (NetworkClient.doSend());

Selector.poll();

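From the perspective of sending a message, the main flow is as shown above. Earlier posts covered RecordAccumulator (3) and Sender (1), so NetworkClient would be the natural next step; to keep this post short, the focus here is on Selector.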

3.1 selector.connect()

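NetworkClient generally hands its requests to Selector. Selector uses NIO in asynchronous, non-blocking mode and handles the actual connect, write, and read operations.

First, the connection process. When the client connects to a node, it creates a SocketChannel to the server. Selector maintains the KafkaChannel for each target node.

// Selector.java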

public void connect(String id, InetSocketAddress address, int sendBufferSize, int receiveBufferSize) throws IOException {

ensureNotRegistered(id);

// create a SocketChannel

SocketChannel socketChannel = SocketChannel.open();

try { // configure socket options

configureSocketChannel(socketChannel, sendBufferSize, receiveBufferSize);

boolean connected = doConnect(socketChannel, address);

// register interest in OP_CONNECT

SelectionKey key = registerChannel(id, socketChannel, SelectionKey.OP_CONNECT);

if (connected) {

// OP_CONNECT won't trigger for immediately connected channels

log.debug("Immediately connected to node {}", id);

// add to the set of immediately connected keys

immediatelyConnectedKeys.add(key);

key.interestOps(0); // stop listening for OP_CONNECT on this connection

}

} catch (IOException | RuntimeException e) {

socketChannel.close();

throw e;

}

}

private void configureSocketChannel(SocketChannel socketChannel, int sendBufferSize, int receiveBufferSize)

throws IOException {

// switch to non-blocking mode

socketChannel.configureBlocking(false);

// get the socket and set its options (this is the client side)

Socket socket = socketChannel.socket();

// enable TCP keep-alive for a long-lived connection

socket.setKeepAlive(true);

if (sendBufferSize != Selectable.USE_DEFAULT_BUFFER_SIZE)

socket.setSendBufferSize(sendBufferSize); // set SO_SNDBUF

if (receiveBufferSize != Selectable.USE_DEFAULT_BUFFER_SIZE)

socket.setReceiveBufferSize(receiveBufferSize); // set SO_RCVBUF

socket.setTcpNoDelay(true);

}

// Visible to allow test cases to override. In particular, we use this to implement a blocking connect

// in order to simulate "immediately connected" sockets.

protected boolean doConnect(SocketChannel channel, InetSocketAddress address) throws IOException {

try {

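// call SocketChannel.connect, which initiates a TCP connection to the remote end

// the channel is non-blocking, so the connection (three-way handshake) may not be complete when this returns: true if already established, false otherwise

// it can return true, for example, when server and client are on the same machine; later, KafkaChannel.finishConnect() confirms whether the connection is really established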

return channel.connect(address);

} catch (UnresolvedAddressException e) {

throw new IOException("Can't resolve address: " + address, e);

}

}

private SelectionKey registerChannel(String id, SocketChannel socketChannel, int interestedOps) throws IOException {

// register the socketChannel with nioSelector for the given interest ops (OP_CONNECT when called from connect())

SelectionKey key = socketChannel.register(nioSelector, interestedOps);

// build a KafkaChannel and attach it to the key

KafkaChannel channel = buildAndAttachKafkaChannel(socketChannel, id, key);

// bind the node id to the KafkaChannel and track it in channels

this.channels.put(id, channel);

if (idleExpiryManager != null)

idleExpiryManager.update(channel.id(), time.nanoseconds());

return key;

}

private KafkaChannel buildAndAttachKafkaChannel(SocketChannel socketChannel, String id, SelectionKey key) throws IOException {

try {

// create the KafkaChannel

KafkaChannel channel = channelBuilder.buildChannel(id, key, maxReceiveSize, memoryPool);

key.attach(channel); // attach the KafkaChannel to the key

return channel;

} catch (Exception e) {

try {

socketChannel.close();

} finally {

key.cancel();

}

throw new IOException("Channel could not be created for socket " + socketChannel, e);

}

}

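Note: because the channel is non-blocking, socketChannel.connect() only initiates the connection and may return before the connection is actually established. To determine whether the connection is established, finishConnect() must be called afterwards.

KafkaChannel.java

// finishConnect is called to handle keys for which key.isConnectable() is true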

public boolean finishConnect() throws IOException {

//we need to grab remoteAddr before finishConnect() is called otherwise

//it becomes inaccessible if the connection was refused.

SocketChannel socketChannel = transportLayer.socketChannel();

if (socketChannel != null) {

remoteAddress = socketChannel.getRemoteAddress();

}

// has the connection been fully established?

boolean connected = transportLayer.finishConnect();

if (connected) { // established: update the state

if (ready()) {

state = ChannelState.READY;

} else if (remoteAddress != null) {

state = new ChannelState(ChannelState.State.AUTHENTICATE, remoteAddress.toString());

} else {

state = ChannelState.AUTHENTICATE;

}

}

return connected;

}
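The connect-then-finishConnect sequence can be tried with plain java.nio, outside Kafka. The sketch below is an illustration under assumptions: the class NonBlockingConnectDemo and the method connectToLocalServer are hypothetical names, the server is a throwaway listener on the loopback address, and the retry bound of 10 selects is arbitrary.

```java
import java.io.IOException;
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;

// Hypothetical demo, not Kafka code: non-blocking connect against a local listener.
final class NonBlockingConnectDemo {

    static boolean connectToLocalServer() throws IOException {
        try (ServerSocketChannel server = ServerSocketChannel.open();
             Selector selector = Selector.open();
             SocketChannel client = SocketChannel.open()) {
            // Port 0 lets the OS pick a free port on the loopback interface.
            server.bind(new InetSocketAddress(InetAddress.getLoopbackAddress(), 0));
            client.configureBlocking(false);

            // In non-blocking mode connect() may return false: the handshake is still in progress.
            boolean connected = client.connect(server.getLocalAddress());
            if (!connected) {
                SelectionKey key = client.register(selector, SelectionKey.OP_CONNECT);
                for (int attempt = 0; attempt < 10 && !connected; attempt++) {
                    selector.select(500);
                    if (key.isConnectable())
                        connected = client.finishConnect(); // completes the pending connect
                    selector.selectedKeys().clear();
                }
            }
            // When connect() returned true right away, OP_CONNECT never fires;
            // this is the case Kafka tracks in immediatelyConnectedKeys.
            return connected && client.isConnected();
        }
    }

    public static void main(String[] args) throws IOException {
        System.out.println("connected: " + connectToLocalServer());
    }
}
```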

3.2 Selector.send()

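Selector.send() stores the previously created Send object in the send field of the KafkaChannel and registers interest in OP_WRITE on that connection; no network I/O happens at this point. The Send is actually written on the next call to Selector.poll(). If the send field of the KafkaChannel still holds a Send that has not been completely written, an exception is thrown to avoid overwriting it. Each KafkaChannel can therefore send at most one Send per poll.

Selector.java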

/**

* Queue the given request for sending in the subsequent {@link #poll(long)} calls

* @param send The request to send

*/

public void send(Send send) {

String connectionId = send.destination();

// find the channel for this send

KafkaChannel channel = openOrClosingChannelOrFail(connectionId);

// if the connection is closing, add it to failedSends

if (closingChannels.containsKey(connectionId)) {

// ensure notification via `disconnected`, leave channel in the state in which closing was triggered

this.failedSends.add(connectionId); // record the failure

} else {

try { // stage the send on the channel; nothing is written yet, and only one send can be pending at a time

channel.setSend(send);

} catch (Exception e) {

// update the state for consistency, the channel will be discarded after `close`

channel.state(ChannelState.FAILED_SEND);

// ensure notification via `disconnected` when `failedSends` are processed in the next poll

this.failedSends.add(connectionId);

close(channel, CloseMode.DISCARD_NO_NOTIFY);

if (!(e instanceof CancelledKeyException)) {

log.error("Unexpected exception during send, closing connection {} and rethrowing exception {}",

connectionId, e);

throw e;

}

}

}

}

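Once the client's Send is set on the KafkaChannel, the channel's TransportLayer adds OP_WRITE to the SelectionKey's interest set. With OP_CONNECT and OP_WRITE registered on the channel's SelectionKey, the Selector performs the actual operations when its polling loop sees these events. The basic flow is: start sending a Send -> register OP_WRITE -> write ... -> Send completely written -> deregister OP_WRITE.

KafkaChannel.java

// every call registers interest in OP_WRITE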

public void setSend(Send send) {

if (this.send != null) // a non-null send means the previous Send has not finished; a new one cannot be accepted

throw new IllegalStateException("Attempt to begin a send operation with prior send operation still in progress, connection id is " + id);

this.send = send; // stage it

// add interest in OP_WRITE

this.transportLayer.addInterestOps(SelectionKey.OP_WRITE);

}
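The "one pending send plus OP_WRITE toggling" idea can be reduced to a few lines. This is a simplified sketch, not KafkaChannel itself: SingleSendSlot is a hypothetical class, the send is represented by a plain Object, and the interest set is modelled as an int field rather than a real SelectionKey.

```java
import java.nio.channels.SelectionKey;

// Hypothetical model of a channel that holds at most one in-progress send.
final class SingleSendSlot {
    private Object pendingSend;                      // null when nothing is being sent
    private int interestOps = SelectionKey.OP_READ;  // stand-in for key.interestOps()

    // Stage a send and start listening for writability; reject a second send.
    void setSend(Object send) {
        if (pendingSend != null)
            throw new IllegalStateException("previous send still in progress");
        pendingSend = send;
        interestOps |= SelectionKey.OP_WRITE;        // add OP_WRITE to the interest set
    }

    // Called once the send has been fully written: free the slot, drop OP_WRITE.
    Object completeSend() {
        Object done = pendingSend;
        pendingSend = null;
        interestOps &= ~SelectionKey.OP_WRITE;       // remove OP_WRITE, keep the other bits
        return done;
    }

    boolean interestedInWrite() {
        return (interestOps & SelectionKey.OP_WRITE) != 0;
    }

    public static void main(String[] args) {
        SingleSendSlot slot = new SingleSendSlot();
        slot.setSend("request-1");
        System.out.println("interested in write: " + slot.interestedInWrite());
        slot.completeSend();
        System.out.println("interested in write: " + slot.interestedInWrite());
    }
}
```

Leaving OP_WRITE registered while nothing is pending would make every select return immediately, since a socket is writable most of the time; that is one reason to register it only for the duration of a send.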

3.3 poll

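Selector's poll loop handles read and write events; it is where network I/O actually happens. It calls nioSelector.select() to wait for I/O events. When a channel is writable, it writes the KafkaChannel.send field (at most one Send at a time; sometimes one Send cannot be written in a single pass and takes several polls to finish). When a channel is readable, it reads data into KafkaChannel.receive; once a complete NetworkReceive has been read, it is staged in stagedReceives, and after pollSelectionKeys() finishes, data is moved from stagedReceives to completedReceives. Finally, maybeCloseOldestConnection() closes connections that have been idle longer than the maximum idle time (tracked by idleExpiryManager in the code shown here; older versions used lruConnections and connectionsMaxIdleNanos).

Selector.java

/*

* Where network I/O actually happens. Main logic: call nioSelector.select() and process the selectedKeys.

* Polling strategy: if work is already pending (timeout = 0), call nioSelector.selectNow() directly; otherwise block in select() for up to the timeout.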

*/

@Override

public void poll(long timeout) throws IOException {

if (timeout < 0)

throw new IllegalArgumentException("timeout should be >= 0");

boolean madeReadProgressLastCall = madeReadProgressLastPoll;

clear(); // reset per-poll state

boolean dataInBuffers = !keysWithBufferedRead.isEmpty();

if (hasStagedReceives() || !immediatelyConnectedKeys.isEmpty() || (madeReadProgressLastCall && dataInBuffers))

timeout = 0;

if (!memoryPool.isOutOfMemory() && outOfMemory) {

//we have recovered from memory pressure. unmute any channel not explicitly muted for other reasons

log.trace("Broker no longer low on memory - unmuting incoming sockets");

for (KafkaChannel channel : channels.values()) {

if (channel.isInMutableState() && !explicitlyMutedChannels.contains(channel)) {

channel.maybeUnmute();

}

}

outOfMemory = false;

}

/* check ready keys */

long startSelect = time.nanoseconds();

// call nioSelector.select() to wait for I/O events; an earlier wakeup() makes it return without blocking

int numReadyKeys = select(timeout);

long endSelect = time.nanoseconds();

this.sensors.selectTime.record(endSelect - startSelect, time.milliseconds());

// if there are ready keys, immediatelyConnectedKeys is non-empty, or buffered data remains

if (numReadyKeys > 0 || !immediatelyConnectedKeys.isEmpty() || dataInBuffers) {

// get the ready keys

Set<SelectionKey> readyKeys = this.nioSelector.selectedKeys();

// Poll from channels that have buffered data (but nothing more from the underlying socket)

if (dataInBuffers) {

keysWithBufferedRead.removeAll(readyKeys); //so no channel gets polled twice

Set<SelectionKey> toPoll = keysWithBufferedRead;

keysWithBufferedRead = new HashSet<>(); //poll() calls will repopulate if needed

// core method that handles the I/O

pollSelectionKeys(toPoll, false, endSelect);

}

// Poll from channels where the underlying socket has more data

pollSelectionKeys(readyKeys, false, endSelect);

// Clear all selected keys so that they are included in the ready count for the next select

readyKeys.clear();

// handle immediatelyConnectedKeys

pollSelectionKeys(immediatelyConnectedKeys, true, endSelect);

immediatelyConnectedKeys.clear();

} else {

madeReadProgressLastPoll = true; //no work is also "progress"

}

long endIo = time.nanoseconds();

this.sensors.ioTime.record(endIo - endSelect, time.milliseconds());

// Close channels that were delayed and are now ready to be closed

completeDelayedChannelClose(endIo);

// we use the time at the end of select to ensure that we don't close any connections that

// have just been processed in pollSelectionKeys

maybeCloseOldestConnection(endSelect);

// Add to completedReceives after closing expired connections to avoid removing

// channels with completed receives until all staged receives are completed.

// data received earlier was added to stagedReceives; process that collection here

addToCompletedReceives();

}

The core method is pollSelectionKeys:

Selector.java

/**

* handle any ready I/O on a set of selection keys

* @param selectionKeys set of keys to handle

* @param isImmediatelyConnected true if running over a set of keys for just-connected sockets

* @param currentTimeNanos time at which set of keys was determined

*/

// package-private for testing

void pollSelectionKeys(Set<SelectionKey> selectionKeys,

boolean isImmediatelyConnected,

long currentTimeNanos) {

for (SelectionKey key : determineHandlingOrder(selectionKeys)) {

// the KafkaChannel was attached to the key when the connection was created; retrieve it here

KafkaChannel channel = channel(key);

long channelStartTimeNanos = recordTimePerConnection ? time.nanoseconds() : 0;

boolean sendFailed = false;

// register all per-connection metrics at once

sensors.maybeRegisterConnectionMetrics(channel.id());

if (idleExpiryManager != null)

idleExpiryManager.update(channel.id(), currentTimeNanos);

try {

/* complete any connections that have finished their handshake (either normally or immediately) */

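// handle channels whose TCP connection has just been established

if (isImmediatelyConnected || key.isConnectable()) {

// has the socketChannel finished connecting? (connect is asynchronous, so it may not have succeeded when connect() returned)

if (channel.finishConnect()) {

// add to the list of connected channels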

this.connected.add(channel.id());

this.sensors.connectionCreated.record();

SocketChannel socketChannel = (SocketChannel) key.channel();

log.debug("Created socket with SO_RCVBUF = {}, SO_SNDBUF = {}, SO_TIMEOUT = {} to node {}",

socketChannel.socket().getReceiveBufferSize(),

socketChannel.socket().getSendBufferSize(),

socketChannel.socket().getSoTimeout(),

channel.id());

} else { // not connected yet: skip further processing of this channel, since an established connection is a precondition

continue;

}

}

/* if channel is not ready finish prepare */

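// TCP is connected but the channel is not ready yet: perform the transport-layer handshake and authentication

if (channel.isConnected() && !channel.ready()) {

try { // call KafkaChannel.prepare() to handshake and authenticate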

channel.prepare();

} catch (AuthenticationException e) {

sensors.failedAuthentication.record();

throw e;

}

if (channel.ready())

sensors.successfulAuthentication.record();

}

// handle OP_READ

attemptRead(key, channel);

if (channel.hasBytesBuffered()) {

//this channel has bytes enqueued in intermediary buffers that we could not read

//(possibly because no memory). it may be the case that the underlying socket will

//not come up in the next poll() and so we need to remember this channel for the

//next poll call otherwise data may be stuck in said buffers forever. If we attempt

//to process buffered data and no progress is made, the channel buffered status is

//cleared to avoid the overhead of checking every time.

keysWithBufferedRead.add(key);

}

/* if channel is ready write to any sockets that have space in their buffer and for which we have data */

// handle OP_WRITE

if (channel.ready() && key.isWritable()) {

Send send;

try { // the actual network write

send = channel.write();

} catch (Exception e) {

sendFailed = true;

throw e;

}

// one Send may take several writes; a non-null return from write() means a Send has been completely written

if (send != null) {

// add the send to completedSends

this.completedSends.add(send);

this.sensors.recordBytesSent(channel.id(), send.size());

}

}

/* cancel any defunct sockets */

// close the channel if its key is no longer valid

if (!key.isValid())

close(channel, CloseMode.GRACEFUL);

} catch (Exception e) {

String desc = channel.socketDescription();

if (e instanceof IOException)

log.debug("Connection with {} disconnected", desc, e);

else if (e instanceof AuthenticationException) // will be logged later as error by clients

log.debug("Connection with {} disconnected due to authentication exception", desc, e);

else

log.warn("Unexpected error from {}; closing connection", desc, e);

if (e instanceof DelayedResponseAuthenticationException)

maybeDelayCloseOnAuthenticationFailure(channel);

else

close(channel, sendFailed ? CloseMode.NOTIFY_ONLY : CloseMode.GRACEFUL);

} finally {

maybeRecordTimePerConnection(channel, channelStartTimeNanos);

}

}

}

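About KafkaChannel.write()

The write path does not use a while loop to push a Send out; instead it deregisters OP_WRITE once the Send is complete. If a Send is not fully written in one write call, OP_WRITE stays registered and the key becomes writable again on a later poll, until the whole Send has been written. A complete Send is therefore driven by the outer loop over selection keys (while (iter.hasNext()) in older versions, the for loop in pollSelectionKeys above) across successive polls.

KafkaChannel.java

// calls send() to write the pending Send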

public Send write() throws IOException {

Send result = null;

// send(send) returns false when the Send has not been completely written yet

if (send != null && send(send)) {

result = send;

// once written, set send = null so that the next request sees send == null and can proceed

send = null;

}

return result;

}

// a write may take several calls

// once the Send is complete, deregister OP_WRITE

private boolean send(Send send) throws IOException {

// ultimately calls Send#writeTo to do the actual write (transportLayer wraps the SocketChannel)

send.writeTo(transportLayer);

if (send.completed())

// fully written: stop listening for OP_WRITE

transportLayer.removeInterestOps(SelectionKey.OP_WRITE);

// if the Send is not complete, the SelectionKey will keep reporting writable events

return send.completed();

}
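To see why one Send can span several polls, consider a channel that accepts only a few bytes per write call, as a socket with a nearly full send buffer would. The following is a minimal simulation under assumptions: PartialWriteDemo, ThrottledChannel, and pollsToSend are hypothetical names, and each loop iteration stands in for one poll in which the key was writable.

```java
import java.io.ByteArrayOutputStream;
import java.nio.ByteBuffer;
import java.nio.channels.WritableByteChannel;

// Hypothetical simulation, not Kafka code: a send that needs several write attempts.
final class PartialWriteDemo {

    // Accepts at most `limit` bytes per write call.
    static final class ThrottledChannel implements WritableByteChannel {
        private final int limit;
        final ByteArrayOutputStream sink = new ByteArrayOutputStream();

        ThrottledChannel(int limit) { this.limit = limit; }

        @Override public int write(ByteBuffer src) {
            int n = Math.min(limit, src.remaining());
            for (int i = 0; i < n; i++) sink.write(src.get());
            return n;
        }
        @Override public boolean isOpen() { return true; }
        @Override public void close() { }
    }

    // One write attempt per simulated poll; returns the number of polls needed.
    static int pollsToSend(ByteBuffer data, ThrottledChannel channel) {
        int polls = 0;
        while (data.hasRemaining()) {   // "send not completed": OP_WRITE would stay registered
            channel.write(data);
            polls++;
        }
        return polls;                   // here OP_WRITE would be deregistered
    }

    public static void main(String[] args) {
        ThrottledChannel channel = new ThrottledChannel(4);
        int polls = pollsToSend(ByteBuffer.wrap(new byte[10]), channel);
        System.out.println("polls needed: " + polls);
    }
}
```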

3.4 read()

A read has to produce a complete NetworkReceive. The calls involved are:

Selector.java

private void attemptRead(SelectionKey key, KafkaChannel channel) throws IOException {

//if channel is ready and has bytes to read from socket or buffer, and has no

//previous receive(s) already staged or otherwise in progress then read from it

if (channel.ready() && (key.isReadable() || channel.hasBytesBuffered()) && !hasStagedReceive(channel)

&& !explicitlyMutedChannels.contains(channel)) {

NetworkReceive networkReceive;

/*

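If channel.read() returns a complete NetworkReceive, it is added to stagedReceives.

If a complete NetworkReceive is not yet available, read() returns null, and reading resumes on the next OP_READ event until a complete NetworkReceive has been read.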

*/

while ((networkReceive = channel.read()) != null) {

madeReadProgressLastPoll = true;

// stage the receive in stagedReceives

addToStagedReceives(channel, networkReceive);

}

if (channel.isMute()) {

outOfMemory = true; //channel has muted itself due to memory pressure.

} else {

madeReadProgressLastPoll = true;

}

}

}

KafkaChannel.java

// reads toward a complete NetworkReceive; OP_READ is not deregistered after a read completes (more data may follow)

public NetworkReceive read() throws IOException {

NetworkReceive result = null;

if (receive == null) {

receive = new NetworkReceive(maxReceiveSize, id, memoryPool);

}

receive(receive);

if (receive.complete()) {

receive.payload().rewind();

result = receive; // complete: return the data

receive = null;

} else if (receive.requiredMemoryAmountKnown() && !receive.memoryAllocated() && isInMutableState()) {

//pool must be out of memory, mute ourselves.

mute();

}

return result; // null if the receive is not complete yet

}

private long receive(NetworkReceive receive) throws IOException {

return receive.readFrom(transportLayer);

}

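Compare how reads and writes are handled in poll. A read pass keeps calling read() for as long as complete NetworkReceive objects are available, and a partially read receive is resumed on the next OP_READ event; a write may likewise be spread over several polls. After a write completes (the Send was sent successfully), OP_WRITE is deregistered, because this write is done. After a read completes, OP_READ stays registered, because more data may arrive. This is the so-called message fragmentation.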

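Message boundaries: as seen above, the underlying communication on each channel consists of two continuous byte streams, one for sends and one for receives. How is a complete message delimited?

This is the job of NetworkReceive and NetworkSend described above. Every request and response starts with a fixed 4-byte header. On the receiving side, read is called at least twice: first to read these 4 bytes and obtain the length of the whole response, then to read the message body.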
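The two-step read (size header first, then body) can be written as a small state machine that tolerates data arriving in pieces. This is a simplified sketch in the spirit of NetworkReceive, not its actual code: SizeDelimitedReceive is a hypothetical class, and it omits the maximum-size check and memory pool that the real class uses.

```java
import java.io.EOFException;
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.channels.ReadableByteChannel;

// Hypothetical incremental reader for "4-byte size + N bytes of content".
final class SizeDelimitedReceive {
    private final ByteBuffer size = ByteBuffer.allocate(4);
    private ByteBuffer payload;   // allocated only once the size is known

    // Reads whatever is currently available; may need to be called several times.
    long readFrom(ReadableByteChannel channel) throws IOException {
        long read = 0;
        if (size.hasRemaining()) {
            int n = channel.read(size);
            if (n < 0) throw new EOFException();
            read += n;
            if (!size.hasRemaining()) {
                size.rewind();
                int length = size.getInt();   // big-endian, matching the sender
                if (length < 0) throw new IllegalStateException("invalid size " + length);
                payload = ByteBuffer.allocate(length);
            }
        }
        if (payload != null && payload.hasRemaining()) {
            int n = channel.read(payload);
            if (n < 0) throw new EOFException();
            read += n;
        }
        return read;
    }

    // True once both the header and the full body have been read.
    boolean complete() {
        return payload != null && !payload.hasRemaining();
    }

    // A read-only view of the body, positioned at its start.
    ByteBuffer payload() {
        ByteBuffer view = payload.asReadOnlyBuffer();
        view.rewind();
        return view;
    }
}
```

A caller keeps invoking readFrom on each readable event until complete() returns true, which mirrors how KafkaChannel.read() returns null until the receive is complete.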

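Completed sends and receives

A write adds the successfully sent Send to completedSends directly, because each channel has at most one send in progress. A read first adds to stagedReceives, and only after reading has finished are receives moved from stagedReceives to completedReceives. completedSends and completedReceives hold, respectively, what the Selector has finished sending and what it has finished receiving. After NetworkClient's poll call they are consumed by the various handleCompletedXXX methods (for example handleCompletedSends and handleCompletedReceives).

Selector.java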

/**

* adds a receive to staged receives

* each complete read produces one NetworkReceive, which is appended to the queue of its channel

*/

private void addToStagedReceives(KafkaChannel channel, NetworkReceive receive) {

if (!stagedReceives.containsKey(channel))

stagedReceives.put(channel, new ArrayDeque<>());

Deque<NetworkReceive> deque = stagedReceives.get(channel);

deque.add(receive);

}

/**

* checks if there are any staged receives and adds to completedReceives

* at the end of a poll, once this round's reads (and writes) are done, staged NetworkReceive objects are moved to completedReceives

*/

private void addToCompletedReceives() {

if (!this.stagedReceives.isEmpty()) {

Iterator<Map.Entry<KafkaChannel, Deque<NetworkReceive>>> iter = this.stagedReceives.entrySet().iterator();

while (iter.hasNext()) {

Map.Entry<KafkaChannel, Deque<NetworkReceive>> entry = iter.next();

KafkaChannel channel = entry.getKey();

// skip channels that are explicitly muted (on the server: a request from the channel is still being processed)

if (!explicitlyMutedChannels.contains(channel)) {

Deque<NetworkReceive> deque = entry.getValue();

addToCompletedReceives(channel, deque);

if (deque.isEmpty())

iter.remove();

}

}

}

}

private void addToCompletedReceives(KafkaChannel channel, Deque<NetworkReceive> stagedDeque) {

// move the first NetworkReceive of the channel to completedReceives

NetworkReceive networkReceive = stagedDeque.poll();

this.completedReceives.add(networkReceive);

this.sensors.recordBytesReceived(channel.id(), networkReceive.size());

}
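The staging logic above promotes at most one receive per channel per poll and skips channels that are explicitly muted. A minimal model of that behaviour follows; it is a sketch under assumptions, with StagedReceives as a hypothetical class in which channels and receives are plain strings and endOfPoll plays the role of addToCompletedReceives.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.HashSet;
import java.util.Iterator;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical model of stagedReceives / completedReceives with muting.
final class StagedReceives {
    private final Map<String, Deque<String>> staged = new LinkedHashMap<>();
    private final Set<String> muted = new HashSet<>();
    final List<String> completed = new ArrayList<>();

    void stage(String channelId, String receive) {
        staged.computeIfAbsent(channelId, k -> new ArrayDeque<>()).add(receive);
    }

    void mute(String channelId) { muted.add(channelId); }

    void unmute(String channelId) { muted.remove(channelId); }

    // End of one poll: promote at most one receive per channel that is not muted.
    void endOfPoll() {
        Iterator<Map.Entry<String, Deque<String>>> it = staged.entrySet().iterator();
        while (it.hasNext()) {
            Map.Entry<String, Deque<String>> entry = it.next();
            if (muted.contains(entry.getKey()))
                continue;                          // muted: leave its receives staged
            completed.add(entry.getValue().poll());
            if (entry.getValue().isEmpty())
                it.remove();                       // nothing left for this channel
        }
    }

    public static void main(String[] args) {
        StagedReceives s = new StagedReceives();
        s.stage("a", "r1");
        s.stage("a", "r2");
        s.endOfPoll();
        System.out.println(s.completed);           // only the first receive of "a" so far
    }
}
```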

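On message ordering guarantees:

About addToCompletedReceives: since the Selector class is used on both the client and the server, there are two cases.

On the server (covered in detail in a later post; briefly here): to preserve ordering, Selector provides two methods, mute(String id) and unmute(String id), which mark a KafkaChannel so that only one request from that channel is processed at a time (think of it as an exclusive lock). When the server receives a request, it first goes into stagedReceives; the channel is not muted at that point, so the receive is moved to completedReceives. When the server processes a request from completedReceives, it first mutes the channel, and unmutes it only after the response has been sent; only then can other requests from that channel be processed.

On the client: the client does not call Selector's mute() and unmute(). Ordering on the client is instead ensured by InFlightRequests and RecordAccumulator's mutePartition (guaranteed when max.in.flight.requests.per.connection is set to 1). For the client, therefore, every receive read here is moved to completedReceives to await further processing.

Recall that InFlightRequests holds every request that has been sent but whose response has not yet come back. A request is enqueued when it is sent and dequeued when its response returns; enqueueing and dequeueing is how the two are matched. For this to work, the server must preserve ordering: on a single socket, if the requests sent are 0, 1, 2, the responses must also come back in the order 0, 1, 2. On the server, all requests go into one requestQueue and are processed by multiple threads in parallel, so how is ordering preserved? Through the mute and unmute described above. This is why the method only makes sense when read together with the server-side calls.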
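The queue-based matching of responses to requests can be sketched as a FIFO per connection. This is an illustration only, not the InFlightRequests class: InFlightQueue, onSend, and onResponse are hypothetical names, and identifying requests by an integer correlation id is an assumption made for the example.

```java
import java.util.ArrayDeque;
import java.util.Deque;

// Hypothetical FIFO of requests awaiting a response on one connection.
final class InFlightQueue {
    private final Deque<Integer> sent = new ArrayDeque<>();

    // Enqueue when the request goes out.
    void onSend(int correlationId) {
        sent.addLast(correlationId);
    }

    // Dequeue when a response arrives; it must belong to the oldest request.
    int onResponse(int correlationId) {
        Integer oldest = sent.pollFirst();
        if (oldest == null || oldest != correlationId)
            throw new IllegalStateException(
                "response " + correlationId + " does not match oldest in-flight request " + oldest);
        return oldest;
    }

    int inFlight() {
        return sent.size();
    }

    public static void main(String[] args) {
        InFlightQueue queue = new InFlightQueue();
        queue.onSend(0);
        queue.onSend(1);
        queue.onResponse(0);
        System.out.println("still in flight: " + queue.inFlight());
    }
}
```

The scheme only works if the peer answers in order, which is exactly what the server-side mute and unmute are there to ensure.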

NetworkClient will be covered in the next post.

References:

《kafka 源码剖析》 (Kafka source code analysis), section 2.4.2

http://zqhxuyuan.github.io/2016/01/06/2016-01-06-Kafka_Producer/

https://liuzhengyang.github.io/2017/12/31/kafka-source-2-network/

http://matt33.com/2017/08/22/producer-nio

————————————————

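Copyright notice: this is an original article by CSDN blogger "bohu83", licensed under CC 4.0 BY-SA. Please include the original source link and this notice when reposting.

Original link: https://blog.csdn.net/bohu83/article/details/88853553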
