
1. HDFS operations: query

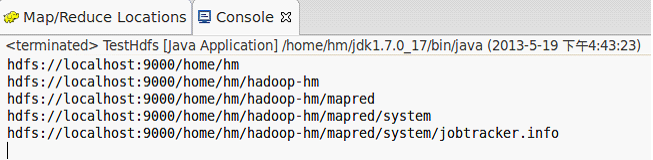

1) Traversing HDFS files, based on hadoop-0.20.2:

```java
package cn.cvu.hdfs;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileStatus;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

public class Query {
    private static FileSystem hdfs;

    public static void main(String[] args) throws Exception {
        // 1. Create the configuration
        Configuration conf = new Configuration();
        // 2. Create the file system
        hdfs = FileSystem.get(conf);
        // 3. Traverse the files and directories on HDFS
        FileStatus[] fs = hdfs.listStatus(new Path("/home"));
        if (fs.length > 0) {
            for (FileStatus f : fs) {
                showDir(f);
            }
        }
    }

    private static void showDir(FileStatus fs) throws Exception {
        Path path = fs.getPath();
        System.out.println(path);
        // If it is a directory, recurse into it
        if (fs.isDir()) {
            FileStatus[] f = hdfs.listStatus(path);
            if (f.length > 0) {
                for (FileStatus file : f) {
                    showDir(file);
                }
            }
        }
    }
}
```

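Fix:

Because this is a plain Java Project, the Hadoop jars are not imported automatically, even if the Hadoop installation directory has been configured under Window -> Preferences -> Hadoop Map/Reduce.

The following jars have to be added by hand (Build Path -> Add to Build Path):

hadoop-core-1.1.2.jar

commons-lang-2.4.jar

jackson-core-asl-1.8.8.jar

jackson-mapper-asl-1.8.8.jar

commons-logging-1.1.1.jar

commons-configuration-1.6.jar

2) Run on Hadoop:

Error 2:

The search actually runs against the local Ubuntu directory, and directory names deeper in the tree may contain special characters that cause URI errors.

Fix:

The code above was written for hadoop-0.20.2 and no longer works as-is with hadoop-1.1.2, so it has to be changed. When no cluster configuration is loaded, FileSystem.get(conf) falls back to the local file system, which is why the Ubuntu /home directory was listed.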
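The local-directory listing described above can be pictured with a small model. This is only a sketch using `java.net.URI`, not Hadoop's actual resolution code: the class `DefaultFsDemo`, the method `effectiveScheme`, and the constant `BUILT_IN_DEFAULT` are hypothetical names. It assumes that Hadoop's built-in default for `fs.default.name` is `file:///` when no site configuration is loaded, and that a path without a scheme is resolved against that default.

```java
import java.net.URI;

class DefaultFsDemo {
    // Assumed built-in default for fs.default.name when no core-site.xml is loaded.
    static final String BUILT_IN_DEFAULT = "file:///";

    /** Returns the scheme a path would end up with under the given default file system. */
    static String effectiveScheme(String path, String defaultFs) {
        String scheme = URI.create(path).getScheme();
        // A bare path such as "/home" has no scheme, so the default file system decides.
        return scheme != null ? scheme : URI.create(defaultFs).getScheme();
    }

    public static void main(String[] args) {
        // No cluster configuration: "/home" means the local /home.
        System.out.println(effectiveScheme("/home", BUILT_IN_DEFAULT));               // file
        // Cluster configuration loaded: the same path now means HDFS.
        System.out.println(effectiveScheme("/home", "hdfs://localhost:9000/"));       // hdfs
        // A fully qualified path is unambiguous either way.
        System.out.println(effectiveScheme("hdfs://localhost:9000/home", BUILT_IN_DEFAULT)); // hdfs
    }
}
```

The two usual remedies follow from this model: pass the HDFS URI explicitly, or load the cluster's core-site.xml so the default changes.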

3) Traversing HDFS files, based on Hadoop-1.1.2 (variant one):

```java
package cn.cvu.hdfs;

import java.net.URI;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileStatus;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

public class Query {
    private static FileSystem hdfs;

    public static void main(String[] args) throws Exception {
        // 1. Create the configuration
        Configuration conf = new Configuration();
        // 2. Create the file system, bound explicitly to the HDFS URI
        hdfs = FileSystem.get(URI.create("hdfs://localhost:9000/"), conf);
        // 3. Traverse the files and directories on HDFS
        FileStatus[] fs = hdfs.listStatus(new Path("/home"));
        if (fs.length > 0) {
            for (FileStatus f : fs) {
                showDir(f);
            }
        }
    }

    private static void showDir(FileStatus fs) throws Exception {
        // Recursion omitted; same as showDir in 1)
    }
}
```
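The recursive walk in `showDir` can also be written iteratively with an explicit stack, which avoids deep call chains on very nested trees. The sketch below uses `java.io.File` on the local file system purely so that it runs without a cluster; the class name `IterativeWalk` is hypothetical. With Hadoop, `listFiles()` would correspond to `hdfs.listStatus(path)` and `isDirectory()` to `FileStatus.isDir()`. Unlike the code above, it also reports the root itself.

```java
import java.io.File;
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

class IterativeWalk {
    /** Depth-first traversal without recursion; returns every path visited, root included. */
    static List<String> walk(File root) {
        List<String> seen = new ArrayList<String>();
        Deque<File> stack = new ArrayDeque<File>();
        stack.push(root);
        while (!stack.isEmpty()) {
            File current = stack.pop();
            seen.add(current.getPath());
            if (current.isDirectory()) {
                // listFiles() returns null if the directory cannot be read.
                File[] children = current.listFiles();
                if (children != null) {
                    for (File child : children) {
                        stack.push(child);
                    }
                }
            }
        }
        return seen;
    }

    public static void main(String[] args) {
        File root = new File(args.length > 0 ? args[0] : ".");
        for (String path : walk(root)) {
            System.out.println(path);
        }
    }
}
```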

4) Traversing HDFS files, based on Hadoop-1.1.2 (variant two):

```java
public static void main(String[] args) throws Exception {
    // 1. Create the configuration
    Configuration conf = new Configuration();
    // 2. Load the specified configuration file
    conf.addResource(new Path("/home/hm/hadoop-1.1.2/conf/core-site.xml"));
    // 3. Create the file system
    hdfs = FileSystem.get(conf);
    // 4. Traverse the files and directories on HDFS
    FileStatus[] fs = hdfs.listStatus(new Path("/home"));
    if (fs.length > 0) {
        for (FileStatus f : fs) {
            showDir(f);
        }
    }
}
```

5) Checking whether a given name on HDFS is a directory or a file:

```java
package cn.cvu.hdfs;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileStatus;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

public class Query {
    private static FileSystem hdfs;

    public static void main(String[] args) throws Exception {
        // 1. Configuration
        Configuration conf = new Configuration();
        conf.addResource(new Path("/home/hm/hadoop-1.1.2/conf/core-site.xml"));
        // 2. File system
        hdfs = FileSystem.get(conf);
        // 3. Traverse the files and directories currently on HDFS
        FileStatus[] fs = hdfs.listStatus(new Path("/home"));
        if (fs.length > 0) {
            for (FileStatus f : fs) {
                showDir(f);
            }
        }
    }

    private static void showDir(FileStatus fs) throws Exception {
        Path path = fs.getPath();
        // If it is a directory
        if (fs.isDir()) {
            if (path.getName().equals("system")) {
                System.out.println(path + " is a directory");
            }
            FileStatus[] f = hdfs.listStatus(path);
            if (f.length > 0) {
                for (FileStatus file : f) {
                    showDir(file);
                }
            }
        } else {
            if (path.getName().equals("system")) {
                System.out.println(path + " is a file");
            }
        }
    }
}
```

6) Showing the last modification time of HDFS files:

```java
package cn.cvu.hdfs;

import java.net.URI;
import java.util.Date;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileStatus;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

public class Query2 {
    private static FileSystem hdfs;

    public static void main(String[] args) throws Exception {
        // 1. Create the configuration
        Configuration conf = new Configuration();
        // 2. Create the file system, bound explicitly to the HDFS URI
        hdfs = FileSystem.get(URI.create("hdfs://localhost:9000/"), conf);
        // 3. List the files and directories currently on HDFS
        FileStatus[] fs = hdfs.listStatus(new Path("/home"));
        if (fs.length > 0) {
            for (FileStatus f : fs) {
                showDir(f);
            }
        }
    }

    private static void showDir(FileStatus fs) throws Exception {
        Path path = fs.getPath();
        // Get the last modification time (milliseconds since the epoch)
        long time = fs.getModificationTime();
        System.out.println("Last modification time of the HDFS file: " + new Date(time));
        System.out.println(path);
        if (fs.isDir()) {
            FileStatus[] f = hdfs.listStatus(path);
            if (f.length > 0) {
                for (FileStatus file : f) {
                    showDir(file);
                }
            }
        }
    }
}
```
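`getModificationTime()` returns milliseconds since the epoch, and printing it through `new Date(time)` uses the JVM's default locale and time zone. If a fixed, sortable format is preferred, something like the following sketch could be used. The class name `ModTimeFormat`, the pattern, and the choice of UTC are assumptions made for illustration, not part of the original code.

```java
import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.TimeZone;

class ModTimeFormat {
    /** Formats an epoch-millisecond timestamp as "yyyy-MM-dd HH:mm:ss" in UTC. */
    static String format(long epochMillis) {
        // SimpleDateFormat is not thread-safe, so a fresh instance is created per call.
        SimpleDateFormat fmt = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");
        fmt.setTimeZone(TimeZone.getTimeZone("UTC"));
        return fmt.format(new Date(epochMillis));
    }

    public static void main(String[] args) {
        System.out.println(format(0L)); // 1970-01-01 00:00:00
        System.out.println(format(System.currentTimeMillis()));
    }
}
```

In `showDir`, the value passed in would be `fs.getModificationTime()`.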

7) Inspecting the status of a given file on HDFS:

```java
package cn.cvu.hdfs;

import java.net.URI;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.BlockLocation;
import org.apache.hadoop.fs.FileStatus;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

public class Query {
    public static void main(String[] args) throws Exception {
        // 1. Configuration
        Configuration conf = new Configuration();
        conf.addResource(new Path("/home/hm/hadoop-1.1.2/conf/core-site.xml"));
        // 2. File system
        FileSystem fs = FileSystem.get(conf);
        // 3. An existing file
        Path path = new Path("/home/hm/hadoop-hm/mapred/system/jobtracker.info");
        // 4. File status
        FileStatus status = fs.getFileStatus(path);
        // 5. File blocks
        BlockLocation[] blockLocations = fs.getFileBlockLocations(status, 0, status.getLen());
        int blockLen = blockLocations.length;
        System.err.println("Number of blocks: " + blockLen);
        for (int i = 0; i < blockLen; i++) {
            // Host names holding a replica of this block
            String[] hosts = blockLocations[i].getHosts();
            for (String host : hosts) {
                System.err.println("Host: " + host);
            }
        }
    }
}
```
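The block count printed above follows from simple arithmetic: a file is split into fixed-size blocks, with the last one possibly shorter, so the count is the file length divided by the block size, rounded up. The sketch below assumes a 64 MB block size, which is believed to be the Hadoop 1.x default; the class `BlockCount` and its members are hypothetical names. On a real cluster the per-file value from `FileStatus.getBlockSize()` would be the one to use.

```java
class BlockCount {
    // Assumed default block size (64 MB); the real value is per file and configurable.
    static final long ASSUMED_BLOCK_SIZE = 64L * 1024 * 1024;

    /** Ceiling division: number of blocks needed to hold fileLength bytes. */
    static long expectedBlocks(long fileLength, long blockSize) {
        if (fileLength < 0 || blockSize <= 0) {
            throw new IllegalArgumentException("length must be >= 0 and block size > 0");
        }
        return (fileLength + blockSize - 1) / blockSize;
    }

    public static void main(String[] args) {
        System.out.println(expectedBlocks(0L, ASSUMED_BLOCK_SIZE));                      // 0
        System.out.println(expectedBlocks(1L, ASSUMED_BLOCK_SIZE));                      // 1
        System.out.println(expectedBlocks(ASSUMED_BLOCK_SIZE, ASSUMED_BLOCK_SIZE));      // 1
        System.out.println(expectedBlocks(ASSUMED_BLOCK_SIZE + 1, ASSUMED_BLOCK_SIZE));  // 2
    }
}
```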

8) Reading the contents of a txt file on HDFS:

```java
package cn.cvu.hdfs;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FileStatus;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

public class Query {
    public static void main(String[] args) throws Exception {
        Configuration conf = new Configuration();
        conf.addResource(new Path("/home/hm/hadoop-1.1.2/conf/core-site.xml"));
        FileSystem fs = FileSystem.get(conf);
        Path path = new Path("/test.txt");
        FSDataInputStream is = fs.open(path);
        FileStatus stat = fs.getFileStatus(path);
        // Size the buffer to the file length; parseInt throws if the
        // length does not fit in an int
        byte[] buffer = new byte[Integer.parseInt(String.valueOf(stat.getLen()))];
        // Read the whole file starting at offset 0
        is.readFully(0, buffer);
        is.close();
        fs.close();
        // Decode explicitly as UTF-8 (assumes the file was written as UTF-8)
        System.out.println(new String(buffer, "UTF-8"));
    }
}
```
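Two details of the read example are easy to get wrong: turning the `long` file length into an `int` buffer size, and decoding the bytes with a known charset instead of the platform default. The helper below is a minimal sketch of both, using only the JDK; `ReadHelpers`, `bufferSizeFor`, and `decode` are hypothetical names, and UTF-8 is assumed to be the file's encoding.

```java
import java.nio.charset.Charset;

class ReadHelpers {
    static final Charset UTF8 = Charset.forName("UTF-8");

    /** Converts a file length to a buffer size, rejecting lengths a byte[] cannot hold. */
    static int bufferSizeFor(long fileLength) {
        if (fileLength < 0 || fileLength > Integer.MAX_VALUE) {
            throw new IllegalArgumentException("cannot buffer " + fileLength + " bytes in one array");
        }
        return (int) fileLength;
    }

    /** Decodes bytes as UTF-8 regardless of the JVM's default charset. */
    static String decode(byte[] bytes) {
        return new String(bytes, UTF8);
    }

    public static void main(String[] args) {
        byte[] data = "Hello".getBytes(UTF8);
        byte[] buffer = new byte[bufferSizeFor(data.length)];
        System.arraycopy(data, 0, buffer, 0, data.length);
        System.out.println(decode(buffer)); // Hello
    }
}
```

For files too large for a single array, reading in fixed-size chunks would be the safer route.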

2. HDFS operations: add

1) Uploading a file to HDFS, based on hadoop-0.20.2:

```java
package cn.cvu.hdfs;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileStatus;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

public class TestHdfs {
    public static void main(String[] args) throws Exception {
        // 1. Create the configuration
        Configuration conf = new Configuration();
        // 2. Create the file system
        FileSystem hdfs = FileSystem.get(conf);
        // 3. Create file system paths that Hadoop can use
        Path src = new Path("/home/hm/test.txt"); // local directory/file
        Path dst = new Path("/home");             // target directory/file
        // 4. Upload by copying the local file (local file, target path)
        hdfs.copyFromLocalFile(src, dst);
        System.err.println("File uploaded to: " + conf.get("fs.default.name"));
        // 5. List the files on HDFS
        FileStatus[] fs = hdfs.listStatus(dst);
        for (FileStatus f : fs) {
            System.err.println(f.getPath());
        }
    }
}
```

Error:

Insufficient permission on the target path, because it is actually the local Ubuntu /home directory!

```
Exception in thread "main" java.io.FileNotFoundException: /home/test.txt (Permission denied)
	at java.io.FileOutputStream.open(Native Method)
	at java.io.FileOutputStream.<init>(FileOutputStream.java:212)
	at org.apache.hadoop.fs.RawLocalFileSystem$LocalFSFileOutputStream.<init>(RawLocalFileSystem.java:188)
	at org.apache.hadoop.fs.RawLocalFileSystem$LocalFSFileOutputStream.<init>(RawLocalFileSystem.java:184)
	at org.apache.hadoop.fs.RawLocalFileSystem.create(RawLocalFileSystem.java:255)
	at org.apache.hadoop.fs.RawLocalFileSystem.create(RawLocalFileSystem.java:236)
	at org.apache.hadoop.fs.ChecksumFileSystem$ChecksumFSOutputSummer.<init>(ChecksumFileSystem.java:335)
	at org.apache.hadoop.fs.ChecksumFileSystem.create(ChecksumFileSystem.java:381)
	at org.apache.hadoop.fs.ChecksumFileSystem.create(ChecksumFileSystem.java:364)
	at org.apache.hadoop.fs.FileSystem.create(FileSystem.java:555)
	at org.apache.hadoop.fs.FileSystem.create(FileSystem.java:536)
	at org.apache.hadoop.fs.FileSystem.create(FileSystem.java:443)
	at org.apache.hadoop.fs.FileUtil.copy(FileUtil.java:229)
	at org.apache.hadoop.fs.FileUtil.copy(FileUtil.java:163)
	at org.apache.hadoop.fs.LocalFileSystem.copyFromLocalFile(LocalFileSystem.java:67)
	at org.apache.hadoop.fs.FileSystem.copyFromLocalFile(FileSystem.java:1143)
```

Fix:

Upload to a directory you have write permission for (oops).

Result:

The file was actually uploaded to the local Ubuntu directory /home/hm/hadoop-hm!

2) Uploading a file to HDFS, based on hadoop-1.1.2 (variant one):

```java
package cn.cvu.hdfs;

import java.net.URI;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileStatus;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

public class TestHdfs1 {
    private static FileSystem hdfs;

    public static void main(String[] args) throws Exception {
        // 1. Create the configuration
        Configuration conf = new Configuration();
        // 2. Initialize the file system (HDFS URI, configuration)
        hdfs = FileSystem.get(URI.create("hdfs://localhost:9000/"), conf);
        // 3. Local directory/file path
        Path src = new Path("/home/hm/test.txt");
        // 4. HDFS target directory, qualified with the HDFS URI
        Path dst = new Path("hdfs://localhost:9000/home/hm/hadoop-hm");
        // 5. Upload
        hdfs.copyFromLocalFile(src, dst);
        System.out.println("File uploaded to: " + conf.get("fs.default.name"));
        // 6. Traverse the HDFS directory
        FileStatus[] list = hdfs.listStatus(dst);
        for (FileStatus f : list) {
            showDir(f);
        }
    }

    private static void showDir(FileStatus f) throws Exception {
        if (f.isDir()) {
            System.err.println("Directory: " + f.getPath());
            FileStatus[] listStatus = hdfs.listStatus(f.getPath());
            for (FileStatus fn : listStatus) {
                showDir(fn);
            }
        } else {
            System.out.println("File: " + f.getPath());
        }
    }
}
```

3) Uploading a file to HDFS, based on hadoop-1.1.2 (variant two):

```java
package cn.cvu.hdfs;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileStatus;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

public class TestHdfs2 {
    private static FileSystem hdfs;

    public static void main(String[] args) throws Exception {
        // 1. Create the configuration
        Configuration conf = new Configuration();
        // 2. Load the configuration file by hand
        conf.addResource(new Path("/home/hm/hadoop-1.1.2/conf/core-site.xml"));
        // 3. Create the file system
        hdfs = FileSystem.get(conf);
        // 4. Local file
        Path src = new Path("/home/hm/test.txt");
        // 5. Target path
        Path dst = new Path("/home");
        // 6. Upload the file unless it already exists
        if (!hdfs.exists(new Path("/home/test.txt"))) {
            hdfs.copyFromLocalFile(src, dst);
            System.err.println("File uploaded to: " + conf.get("fs.default.name"));
        } else {
            System.err.println(conf.get("fs.default.name") + " already contains test.txt");
        }
        // 7. Traverse the HDFS files
        System.out.println("\nDirectories and files in the HDFS file system:");
        FileStatus[] fs = hdfs.listStatus(dst);
        for (FileStatus f : fs) {
            showDir(f);
        }
    }

    private static void showDir(FileStatus f) throws Exception {
        if (f.isDir()) {
            System.err.println("Directory: " + f.getPath());
            FileStatus[] listStatus = hdfs.listStatus(f.getPath());
            for (FileStatus fn : listStatus) {
                showDir(fn);
            }
        } else {
            System.err.println("File: " + f.getPath());
        }
    }
}
```

4) Creating directories and files on HDFS:

```java
package cn.cvu.hdfs;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataOutputStream;
import org.apache.hadoop.fs.FileStatus;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

public class TestHdfs2 {
    public static void main(String[] args) throws Exception {
        Configuration conf = new Configuration();
        conf.addResource(new Path("/home/hm/hadoop-1.1.2/conf/core-site.xml"));
        FileSystem hdfs = FileSystem.get(conf);
        // Use an HDFS output stream to create a directory under the HDFS root
        // and a file inside it
        FSDataOutputStream out = hdfs.create(new Path("/eminem/hip-hop.txt"));
        // Write one line of data, encoded explicitly as UTF-8
        // out.writeUTF("Hell使用UTF-8"); // unsuitable for plain text: writeUTF
        //                                // prepends a 2-byte length and uses modified UTF-8
        out.write("痞子阿姆,Hello !".getBytes("UTF-8"));
        // Close the first stream before reusing the variable, otherwise it leaks
        out.close();
        out = hdfs.create(new Path("/alizee.txt"));
        out.write("艾莉婕,Hello !".getBytes("UTF-8"));
        out.close();
        FileStatus[] fs = hdfs.listStatus(new Path("/"));
        for (FileStatus f : fs) {
            System.out.println(f.getPath());
        }
    }
}
```
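The commented-out `writeUTF` call in the example above raises the question of why it does not produce a plain text file. `FSDataOutputStream` extends `java.io.DataOutputStream`, whose `writeUTF` writes a 2-byte length prefix followed by the string in modified UTF-8, whereas `write(s.getBytes("UTF-8"))` writes only the encoded characters. The sketch below shows the difference with an in-memory stream, so it runs without a cluster; the class and method names are hypothetical.

```java
import java.io.ByteArrayOutputStream;
import java.io.DataOutputStream;
import java.io.IOException;

class WriteUtfDemo {
    /** Bytes produced by DataOutputStream.writeUTF (length prefix + modified UTF-8). */
    static byte[] viaWriteUTF(String s) throws IOException {
        ByteArrayOutputStream buf = new ByteArrayOutputStream();
        DataOutputStream out = new DataOutputStream(buf);
        out.writeUTF(s);
        out.flush();
        return buf.toByteArray();
    }

    /** Bytes produced by writing the UTF-8 encoding directly. */
    static byte[] viaWrite(String s) throws IOException {
        ByteArrayOutputStream buf = new ByteArrayOutputStream();
        DataOutputStream out = new DataOutputStream(buf);
        out.write(s.getBytes("UTF-8"));
        out.flush();
        return buf.toByteArray();
    }

    public static void main(String[] args) throws IOException {
        String text = "Hello";
        System.out.println(viaWriteUTF(text).length); // 7: two length bytes + five characters
        System.out.println(viaWrite(text).length);    // 5: characters only
    }
}
```

A file written with `writeUTF` is meant to be read back with `DataInputStream.readUTF`, not opened as text.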

3. HDFS operations: modify

1) Renaming a file:

```java
public static void main(String[] args) throws Exception {
    Configuration conf = new Configuration();
    conf.addResource(new Path("/home/hm/hadoop-1.1.2/conf/core-site.xml"));
    FileSystem fs = FileSystem.get(conf);
    // Rename: fs.rename(source file, new file); returns true on success
    boolean rename = fs.rename(new Path("/test.txt"), new Path("/new_test.txt"));
    System.out.println(rename);
}
```

2) Deleting a file:

```java
public static void main(String[] args) throws Exception {
    Configuration conf = new Configuration();
    conf.addResource(new Path("/home/hm/hadoop-1.1.2/conf/core-site.xml"));
    FileSystem fs = FileSystem.get(conf);
    // Delete
    // fs.delete(new Path("/new_test.txt")); // deprecated
    // Mark the path for deletion when the program exits
    boolean exit = fs.deleteOnExit(new Path("/new_test.txt"));
    System.out.println("Delete on exit scheduled: " + exit);
    // delete(path, recursive): with recursive=false a non-empty directory is
    // not deleted and a RemoteException/IOException is thrown
    boolean delete = fs.delete(new Path("/test.txt"), true);
    System.out.println("Delete executed: " + delete);
}
```
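The `recursive` flag described in the comment above can be tried out locally. The following is only a local-file analogue built on `java.io.File`, not Hadoop code: `LocalDelete` is a hypothetical class that mirrors the described behaviour, namely that a non-empty directory is refused with an `IOException` unless `recursive` is true. Returning `false` for a missing path is an assumption about how the real call behaves.

```java
import java.io.File;
import java.io.IOException;

class LocalDelete {
    /** Deletes target; a non-empty directory requires recursive=true, otherwise IOException. */
    static boolean delete(File target, boolean recursive) throws IOException {
        if (!target.exists()) {
            return false; // nothing to delete
        }
        File[] children = target.isDirectory() ? target.listFiles() : null;
        if (children != null && children.length > 0) {
            if (!recursive) {
                throw new IOException(target + " is not empty");
            }
            for (File child : children) {
                delete(child, true);
            }
        }
        return target.delete();
    }

    public static void main(String[] args) throws IOException {
        File dir = new File(System.getProperty("java.io.tmpdir"), "local-delete-demo");
        dir.mkdirs();
        new File(dir, "a.txt").createNewFile();
        try {
            delete(dir, false);
        } catch (IOException e) {
            System.out.println("Refused: " + e.getMessage());
        }
        System.out.println("Recursive delete: " + delete(dir, true));
    }
}
```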

- end
