1. Endpoint priority

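A group of endpoints that belongs to one specific location is called a LocalityLbEndpoints group (sometimes abbreviated LLE or LLBE). All of its endpoints share the same locality, weight (load_balancing_weight) and priority (priority):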

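- locality: identifies the location hierarchically, from largest to smallest: region, zone and sub_zone

- load_balancing_weight: a weight that can be set for each priority/region/zone/sub_zone, with values in [1, n). A locality's share of traffic is normally its weight divided by the sum of the weights of all localities that have the same priority.

- priority: the priority of this LocalityLbEndpoints group; the default is 0, the highest priority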
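To make the weight rule concrete, here is a small Python sketch that turns load_balancing_weight values into per-locality traffic shares. The locality names and weights are hypothetical, every endpoint is assumed healthy, and Envoy only applies locality weights when locality weighted load balancing is enabled (see section 5).

```python
from collections import defaultdict


def locality_shares(localities):
    """Return {(priority, locality): share}.

    localities: list of (locality_name, priority, load_balancing_weight).
    A share is the locality's weight divided by the sum of the weights of
    all localities that have the same priority.
    """
    totals = defaultdict(int)
    for _name, priority, weight in localities:
        if weight < 1:
            raise ValueError("load_balancing_weight must be >= 1")
        totals[priority] += weight
    return {
        (priority, name): weight / totals[priority]
        for name, priority, weight in localities
    }


# Hypothetical layout: two zones at priority 0, one zone at priority 1.
example = [
    ("cn-north-1a", 0, 3),
    ("cn-north-1b", 0, 1),
    ("cn-north-2a", 1, 5),
]
for key, share in sorted(locality_shares(example).items()):
    print(key, f"{share:.0%}")
```

Shares are only compared within one priority, which is why the single priority 1 locality gets 100% of whatever traffic reaches priority 1.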

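Normally Envoy schedules only onto the highest-priority group of endpoints, and fails over to the endpoints of the next priority only when that level is no longer healthy enough. With the default overprovisioning factor of 1.4 this happens once fewer than about 71.4% of its endpoints are healthy, not only when all of them are down (see sections 2 and 4.4).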

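The same location can also be configured with several LbEndpoints groups, but this is normally only needed when those groups must have different load balancing weights or different priorities.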

2. Priority scheduling

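When scheduling, Envoy sends traffic only to the highest-priority group of endpoints (LocalityLbEndpoints):

- When the highest priority level becomes unhealthy, traffic shifts to the next priority level.

- An overprovisioning factor (overprovisioning_factor) can be set so that, when only some endpoints of a group fail, a larger share of traffic is still directed to that group.

Priority scheduling also supports a degraded (DEGRADED) state for endpoints within the same priority; traffic is shifted between healthy and degraded endpoints much like it is shifted between two different priority levels.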
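The failover behaviour can be sketched in Python following the priority-load formula published in Envoy's priority-level documentation: each level's health is its healthy fraction multiplied by the overprovisioning factor (1.4 by default, written as 140 in the config) and capped at 100%, and higher levels are filled first. This is an illustration, not Envoy's code; degraded hosts are left out, and the input format (a list of healthy/total pairs) is an assumption of this sketch.

```python
def priority_loads(priorities, overprovisioning_factor=1.4):
    """Percentage of traffic sent to each priority level.

    priorities: list of (healthy_hosts, total_hosts); index 0 is the highest
    priority. Degraded hosts are not modelled in this sketch.
    """
    # health(P) = min(100, factor * 100 * healthy / total)
    health = [
        min(100.0, overprovisioning_factor * 100.0 * healthy / total) if total else 0.0
        for healthy, total in priorities
    ]
    # If all levels together cannot reach 100, their loads are scaled up proportionally.
    normalized_total = min(100.0, sum(health))
    if normalized_total == 0.0:
        return [0.0] * len(priorities)  # nothing healthy anywhere
    loads, remaining = [], 100.0
    for level_health in health:
        # Higher priorities take their share first; lower ones get what is left.
        load = min(remaining, level_health * 100.0 / normalized_total)
        loads.append(load)
        remaining -= load
    return loads


# Priority 0 with 2 of 3 hosts healthy, priority 1 with 2 of 2 healthy.
print([round(x, 2) for x in priority_loads([(2, 3), (2, 2)])])  # [93.33, 6.67]
```

These inputs correspond to the failure injected in section 4.4, where the same 93.33%/6.67% split shows up.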

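3. Panic threshold

During scheduling Envoy only considers the available (healthy) endpoints in the upstream host list. However, when the percentage of available endpoints drops too low, Envoy ignores the health status of all endpoints and schedules traffic across all of them. This percentage is called the panic threshold.

- The default panic threshold is 50%.

- The panic threshold prevents a cascade of host failures as load grows: without it, the few remaining healthy hosts would receive all traffic and could be overloaded in turn.

For example: there are 10 endpoints in total. When 6 of them are unhealthy, only 40% are healthy, which is below the panic threshold (50%). If 700 requests now arrive, they are scheduled across all 10 endpoints: the 4 healthy endpoints handle 40% of the traffic (about 280 requests) and the 6 unhealthy endpoints 60% (about 420 requests). So 60% of the requests fail, but 40% still succeed, and the avalanche that would follow from pushing all traffic onto the 4 remaining endpoints is avoided.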
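The example can be reproduced with a Python sketch that picks the host pool the way the panic threshold describes and then spreads requests over it with plain round robin. The host names ep0 to ep9 are hypothetical, and real Envoy load balancers do not rotate perfectly evenly, so the counts are the idealized split.

```python
def pick_pool(hosts, panic_threshold=0.5):
    """Hosts the load balancer may pick from.

    hosts: dict of host name -> True if healthy. When the healthy fraction is
    below panic_threshold, health is ignored and every host is eligible.
    """
    healthy = [name for name, ok in hosts.items() if ok]
    if len(healthy) < panic_threshold * len(hosts):
        return list(hosts)  # panic mode: healthy and unhealthy hosts alike
    return healthy


def round_robin_counts(hosts, requests, panic_threshold=0.5):
    """Requests received by each host under plain round robin over the pool."""
    pool = pick_pool(hosts, panic_threshold)
    counts = dict.fromkeys(hosts, 0)
    if not pool:
        return counts  # nothing to send to
    for i in range(requests):
        counts[pool[i % len(pool)]] += 1
    return counts


# The example above: 10 endpoints, ep0..ep3 healthy, ep4..ep9 failing.
hosts = {f"ep{i}": i < 4 for i in range(10)}
counts = round_robin_counts(hosts, 700)
served_ok = sum(n for name, n in counts.items() if hosts[name])
print(served_ok, 700 - served_ok)  # 280 420
```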

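The panic threshold can be used together with priorities.

When the number of available endpoints in a given priority drops, Envoy shifts some traffic to lower-priority endpoints.

- If the priority levels together can absorb all the traffic after this shift, the panic threshold is ignored.

- Otherwise, Envoy distributes traffic across all priority levels, and for any priority whose availability is below the panic threshold, it sends that priority's share of traffic to all hosts in that priority.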
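A Python sketch of this rule, assuming each priority is given as a (healthy, total) pair and using the default overprovisioning factor of 1.4 and panic threshold of 50%. It only reports which levels would run in panic mode; it simplifies the documented behaviour and ignores degraded hosts.

```python
def panic_levels(priorities, overprovisioning_factor=1.4, panic_threshold=0.5):
    """Which priority levels would route to all of their hosts (panic mode).

    priorities: list of (healthy_hosts, total_hosts), index 0 = highest priority.
    """
    health = [
        min(100.0, overprovisioning_factor * 100.0 * healthy / total) if total else 0.0
        for healthy, total in priorities
    ]
    # Enough healthy capacity across all levels: panic thresholds are ignored.
    if sum(health) >= 100.0:
        return [False] * len(priorities)
    # Otherwise each level whose healthy fraction is below the threshold panics.
    return [total > 0 and healthy < panic_threshold * total
            for healthy, total in priorities]


print(panic_levels([(1, 3), (2, 2)]))  # [False, False]: priority 1 absorbs the rest
print(panic_levels([(2, 3), (0, 2)]))  # [False, True]
```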

# Cluster.CommonLbConfig
{
  "healthy_panic_threshold": "{...}",  # percentage that defines the panic threshold; default 50%
  "zone_aware_lb_config": "{...}",
  "locality_weighted_lb_config": "{...}",
  "update_merge_window": "{...}",
  "ignore_new_hosts_until_first_hc": "..."
}

4. Priority levels test

4.1 docker-compose

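11 containers in total:

- front-envoy: the front proxy, address 172.31.29.2

- webserver01: the first backend service

- webserver01-sidecar: sidecar proxy of the first backend service, address 172.31.29.11, aliases red and webservice1

- webserver02: the second backend service

- webserver02-sidecar: sidecar proxy of the second backend service, address 172.31.29.12, aliases blue and webservice1

- webserver03: the third backend service

- webserver03-sidecar: sidecar proxy of the third backend service, address 172.31.29.13, aliases green and webservice1

- webserver04: the fourth backend service

- webserver04-sidecar: sidecar proxy of the fourth backend service, address 172.31.29.14, aliases gray and webservice2

- webserver05: the fifth backend service

- webserver05-sidecar: sidecar proxy of the fifth backend service, address 172.31.29.15, aliases black and webservice2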

version: '3'

services:
  front-envoy:
    image: envoyproxy/envoy-alpine:v1.13-latest
    volumes:
      - ./front-envoy-v2.yaml:/etc/envoy/envoy.yaml
    networks:
      - envoymesh
    expose:
      # Expose ports 80 (for general traffic) and 9901 (for the admin server)
      - "80"
      - "9901"

  webserver01-sidecar:
    image: envoyproxy/envoy-alpine:v1.21.5
    volumes:
      - ./envoy-sidecar-proxy.yaml:/etc/envoy/envoy.yaml
    hostname: red
    networks:
      envoymesh:
        ipv4_address: 172.31.29.11
        aliases:
          - webservice1
          - red

  webserver01:
    image: ikubernetes/demoapp:v1.0
    environment:
      - PORT=8080
      - HOST=127.0.0.1
    network_mode: "service:webserver01-sidecar"
    depends_on:
      - webserver01-sidecar

  webserver02-sidecar:
    image: envoyproxy/envoy-alpine:v1.21.5
    volumes:
      - ./envoy-sidecar-proxy.yaml:/etc/envoy/envoy.yaml
    hostname: blue
    networks:
      envoymesh:
        ipv4_address: 172.31.29.12
        aliases:
          - webservice1
          - blue

  webserver02:
    image: ikubernetes/demoapp:v1.0
    environment:
      - PORT=8080
      - HOST=127.0.0.1
    network_mode: "service:webserver02-sidecar"
    depends_on:
      - webserver02-sidecar

  webserver03-sidecar:
    image: envoyproxy/envoy-alpine:v1.21.5
    volumes:
      - ./envoy-sidecar-proxy.yaml:/etc/envoy/envoy.yaml
    hostname: green
    networks:
      envoymesh:
        ipv4_address: 172.31.29.13
        aliases:
          - webservice1
          - green

  webserver03:
    image: ikubernetes/demoapp:v1.0
    environment:
      - PORT=8080
      - HOST=127.0.0.1
    network_mode: "service:webserver03-sidecar"
    depends_on:
      - webserver03-sidecar

  webserver04-sidecar:
    image: envoyproxy/envoy-alpine:v1.21.5
    volumes:
      - ./envoy-sidecar-proxy.yaml:/etc/envoy/envoy.yaml
    hostname: gray
    networks:
      envoymesh:
        ipv4_address: 172.31.29.14
        aliases:
          - webservice2
          - gray

  webserver04:
    image: ikubernetes/demoapp:v1.0
    environment:
      - PORT=8080
      - HOST=127.0.0.1
    network_mode: "service:webserver04-sidecar"
    depends_on:
      - webserver04-sidecar

  webserver05-sidecar:
    image: envoyproxy/envoy-alpine:v1.21.5
    volumes:
      - ./envoy-sidecar-proxy.yaml:/etc/envoy/envoy.yaml
    hostname: black
    networks:
      envoymesh:
        ipv4_address: 172.31.29.15
        aliases:
          - webservice2
          - black

  webserver05:
    image: ikubernetes/demoapp:v1.0
    environment:
      - PORT=8080
      - HOST=127.0.0.1
    network_mode: "service:webserver05-sidecar"
    depends_on:
      - webserver05-sidecar

networks:
  envoymesh:
    driver: bridge
    ipam:
      config:
        - subnet: 172.31.29.0/24

4.2 envoy.yaml

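- Every endpoint's /livez is health-checked; any response other than HTTP 200 counts as a failure.

- The single cluster webcluster1 has two localities: cn-north-1 with priority 0 and cn-north-2 with priority 1. While all cn-north-1 endpoints are healthy, cn-north-2 receives no traffic.

- Because the cluster type is STRICT_DNS, webservice1 resolves to the three cn-north-1 sidecars (172.31.29.11 to .13) and webservice2 to the two cn-north-2 sidecars (.14 and .15).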

admin:
  access_log_path: "/dev/null"
  address:
    socket_address:
      address: 0.0.0.0
      port_value: 9901

static_resources:
  listeners:
  - address:
      socket_address:
        address: 0.0.0.0
        port_value: 80
    name: listener_http
    filter_chains:
    - filters:
      - name: envoy.http_connection_manager
        typed_config:
          "@type": type.googleapis.com/envoy.config.filter.network.http_connection_manager.v2.HttpConnectionManager
          codec_type: auto
          stat_prefix: ingress_http
          route_config:
            name: local_route
            virtual_hosts:
            - name: backend
              domains:
              - "*"
              routes:
              - match:
                  prefix: "/"
                route:
                  cluster: webcluster1
          http_filters:
          - name: envoy.router

  clusters:
  - name: webcluster1
    connect_timeout: 0.5s
    type: STRICT_DNS
    lb_policy: ROUND_ROBIN
    http2_protocol_options: {}
    load_assignment:
      cluster_name: webcluster1
      policy:
        overprovisioning_factor: 140
      endpoints:
      - locality:
          region: cn-north-1
        priority: 0
        lb_endpoints:
        - endpoint:
            address:
              socket_address:
                address: webservice1
                port_value: 80
      - locality:
          region: cn-north-2
        priority: 1
        lb_endpoints:
        - endpoint:
            address:
              socket_address:
                address: webservice2
                port_value: 80
    health_checks:
    - timeout: 5s
      interval: 10s
      unhealthy_threshold: 2
      healthy_threshold: 1
      http_health_check:
        path: /livez

4.3 Deployment and test

All three servers in cn-north-1 are healthy, so the cn-north-1 servers answer every request.

# docker-compose

root@k8s-node-1:~# while true; do curl 172.31.29.2;sleep 0.5 ;done

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: green, ServerIP: 172.31.29.13!

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: blue, ServerIP: 172.31.29.12!

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: blue, ServerIP: 172.31.29.12!

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: red, ServerIP: 172.31.29.11!

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: blue, ServerIP: 172.31.29.12!

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: red, ServerIP: 172.31.29.11!

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: green, ServerIP: 172.31.29.13!

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: red, ServerIP: 172.31.29.11!

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: green, ServerIP: 172.31.29.13!

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: green, ServerIP: 172.31.29.13!

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: green, ServerIP: 172.31.29.13!

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: blue, ServerIP: 172.31.29.12!

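4.4 Triggering a failure

- Set /livez on 172.31.29.12 (blue, in cn-north-1) to a non-OK state; its health checks then fail with status 506.

- After unhealthy_threshold (2) consecutive 506 responses, the failed endpoint 172.31.29.12 is marked unhealthy and no longer receives traffic.

- cn-north-1 is now 66.67% available (2 of 3 endpoints). Multiplied by the overprovisioning factor of 1.4 (overprovisioning_factor: 140) this gives 93.33%, so cn-north-1 carries about 93.33% of the traffic and cn-north-2 the remaining 6.67%. This is why responses from 172.31.29.14 and 172.31.29.15 now appear.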
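For reference, the percentages in the last bullet follow from the priority-load formula in Envoy's priority-level documentation (degraded hosts ignored; the normalized total health is capped at 100, so no rescaling happens here):

```latex
\begin{aligned}
\mathrm{health}(P_0) &= \min\left(100,\ 1.4 \times 100 \times \tfrac{2}{3}\right) \approx 93.33 \\
\mathrm{health}(P_1) &= \min\left(100,\ 1.4 \times 100 \times \tfrac{2}{2}\right) = 100 \\
\mathrm{total} &= \min\left(100,\ 93.33 + 100\right) = 100 \\
\mathrm{load}(P_0) &= \min\left(100,\ 93.33 \times \tfrac{100}{100}\right) \approx 93.33\% \\
\mathrm{load}(P_1) &= \min\left(100 - 93.33,\ 100 \times \tfrac{100}{100}\right) \approx 6.67\%
\end{aligned}
```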

root@k8s-node-1:~# curl -XPOST -d "livez=FAIL" 172.31.29.12/livez

webserver01_1 | 127.0.0.1 - - [29/Sep/2022 03:59:13] "GET / HTTP/1.1" 200 -

webserver05_1 | 127.0.0.1 - - [29/Sep/2022 03:59:13] "GET /livez HTTP/1.1" 200 -

webserver04_1 | 127.0.0.1 - - [29/Sep/2022 03:59:13] "GET /livez HTTP/1.1" 200 -

webserver02_1 | 127.0.0.1 - - [29/Sep/2022 03:59:13] "GET /livez HTTP/1.1" 506 -

webserver01_1 | 127.0.0.1 - - [29/Sep/2022 03:59:13] "GET /livez HTTP/1.1" 200 -

webserver01_1 | 127.0.0.1 - - [29/Sep/2022 03:59:13] "GET / HTTP/1.1" 200 -

# After 2 consecutive 506 responses (unhealthy_threshold: 2), some requests start going to .14 and .15

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: red, ServerIP: 172.31.29.11!

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: green, ServerIP: 172.31.29.13!

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: green, ServerIP: 172.31.29.13!

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: green, ServerIP: 172.31.29.13!

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: green, ServerIP: 172.31.29.13!

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: red, ServerIP: 172.31.29.11!

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: gray, ServerIP: 172.31.29.14!

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: green, ServerIP: 172.31.29.13!

...

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: red, ServerIP: 172.31.29.11!

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: green, ServerIP: 172.31.29.13!

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: red, ServerIP: 172.31.29.11!

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: black, ServerIP: 172.31.29.15!

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: green, ServerIP: 172.31.29.13!

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: green, ServerIP: 172.31.29.13!

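4.5 Recovery

After /livez on 172.31.29.12 is set back to OK, a single passing health check (healthy_threshold: 1) marks it healthy again. cn-north-1 is back to 100%, so its servers answer all requests and cn-north-2 stops serving traffic.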

root@k8s-node-1:~# curl -XPOST -d "livez=OK" 172.31.29.12/livez

webserver02_1 | 127.0.0.1 - - [29/Sep/2022 04:01:46] "GET / HTTP/1.1" 200 -

webserver01_1 | 127.0.0.1 - - [29/Sep/2022 04:01:47] "GET / HTTP/1.1" 200 -

webserver03_1 | 127.0.0.1 - - [29/Sep/2022 04:01:47] "GET / HTTP/1.1" 200 -

webserver01_1 | 127.0.0.1 - - [29/Sep/2022 04:01:48] "GET / HTTP/1.1" 200 -

webserver02_1 | 127.0.0.1 - - [29/Sep/2022 04:01:48] "GET / HTTP/1.1" 200 -

webserver01_1 | 127.0.0.1 - - [29/Sep/2022 04:01:49] "GET / HTTP/1.1" 200 -

## Traffic is back on .11, .12 and .13

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: red, ServerIP: 172.31.29.11!

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: green, ServerIP: 172.31.29.13!

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: red, ServerIP: 172.31.29.11!

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: green, ServerIP: 172.31.29.13!

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: blue, ServerIP: 172.31.29.12!

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: green, ServerIP: 172.31.29.13!

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: red, ServerIP: 172.31.29.11!

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: green, ServerIP: 172.31.29.13!

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: blue, ServerIP: 172.31.29.12!

iKubernetes demoapp v1.0 !! ClientIP: 127.0.0.1, ServerName: blue, ServerIP: 172.31.29.12!

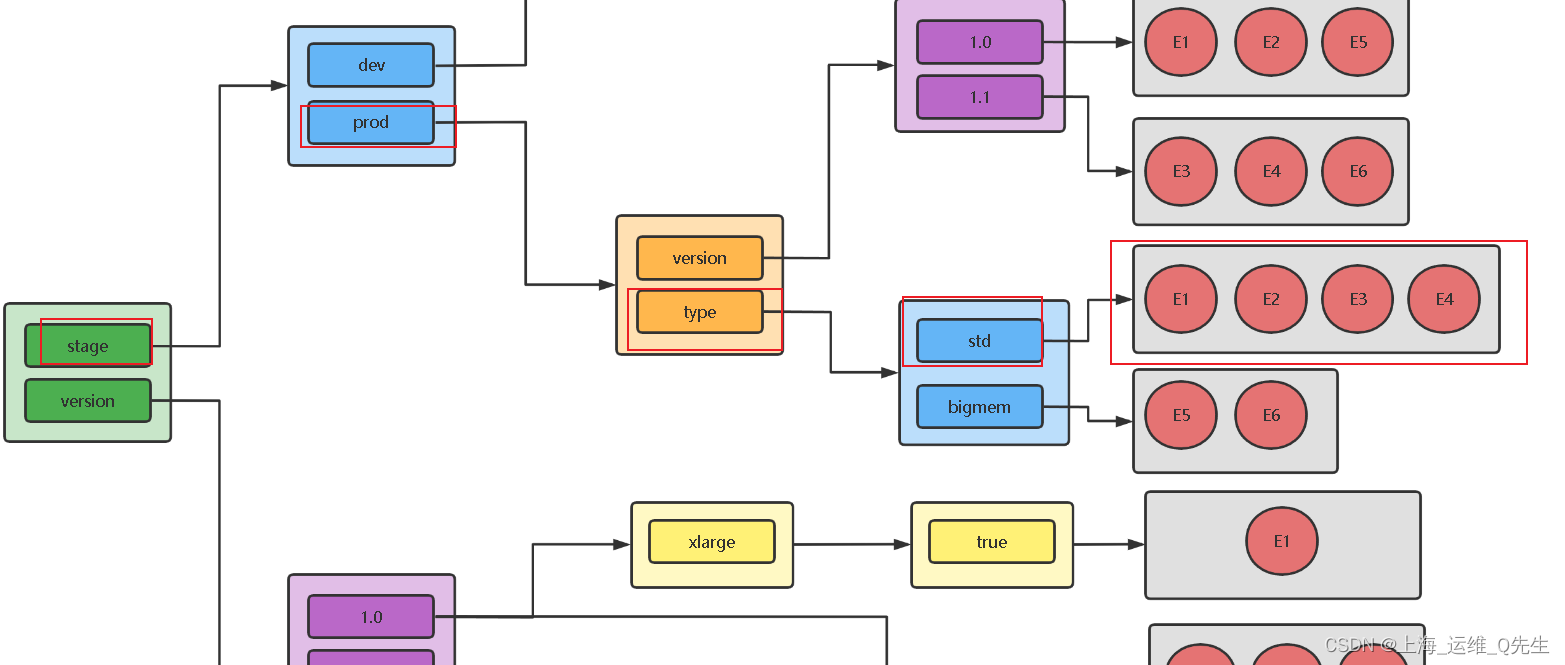

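5. Locality weighted load balancing

Locality weighted load balancing explicitly assigns a weight to a specific locality and its LbEndpoints group, and distributes traffic among localities in proportion to those weights.

- When all localities are available, traffic is spread across them by weighted round robin according to their locality weights.

- Locality weighted load balancing and zone aware load balancing are mutually exclusive, so at the cluster level only one of locality_weighted_lb_config or zone_aware_lb_config may be set, to state which policy is enabled.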

clusters:
- name: ...
  ...
  common_lb_config:
    locality_weighted_lb_config: {}   # enables locality weighted load balancing; it has no sub-parameters
  ...
  load_assignment:
    endpoints:
    - locality: "{...}"
      lb_endpoints: "[]"
      load_balancing_weight: "{}"     # integer weight of this locality or priority; minimum value is 1
      priority: "..."

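- When some endpoints in a locality become unavailable, Envoy dynamically scales down that locality's weight in proportion to its availability (taking the overprovisioning factor into account).

- If both priorities and weights are configured, the load balancer first selects a priority, then a locality within that priority, and finally an endpoint within that locality.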
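The weight adjustment in the first bullet can be sketched in Python. Following the formula in Envoy's locality weighted load balancing documentation as I understand it, a locality's availability is its healthy fraction multiplied by the overprovisioning factor (1.4) and capped at 1, and its effective weight is the configured weight times that availability. The zone names and host counts are hypothetical, and all localities are assumed to share one priority.

```python
def effective_locality_shares(localities, overprovisioning_factor=1.4):
    """Traffic share of each locality within one priority level.

    localities: dict of name -> (load_balancing_weight, healthy_hosts, total_hosts).
    availability = min(1, factor * healthy / total)
    effective weight = configured weight * availability
    shares = effective weights divided by their sum
    """
    effective = {}
    for name, (weight, healthy, total) in localities.items():
        availability = min(1.0, overprovisioning_factor * healthy / total) if total else 0.0
        effective[name] = weight * availability
    total_weight = sum(effective.values())
    if total_weight == 0:
        return {name: 0.0 for name in localities}
    return {name: w / total_weight for name, w in effective.items()}


# Hypothetical zones with equal weights; zone-b has lost half of its hosts.
shares = effective_locality_shares({"zone-a": (1, 4, 4), "zone-b": (1, 2, 4)})
print({name: round(s, 3) for name, s in shares.items()})  # {'zone-a': 0.588, 'zone-b': 0.412}
```

With all hosts healthy the split would be 50/50; with half of zone-b down, the 1.4 factor means zone-b keeps 70% of its weight rather than 50%.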

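6. Load balancer subsets

6.1 Subsets and metadata

Envoy can also distribute traffic at a finer granularity, based on subsets within a single cluster:

- First, add metadata (key/value labels) to the cluster's upstream hosts, and use subset selectors (which classify that metadata) to divide the upstream hosts into subsets.

- Then, in the route configuration, specify the metadata an upstream host must carry to be eligible for the load balancer, which routes the request to a specific subset.

- Load balancing among the hosts of a subset uses the policy defined for the cluster (lb_policy).

If subsets are configured but the route specifies no metadata, or no subset matches the specified metadata, the subset load balancer applies its fallback policy:

- NO_FALLBACK: the request fails, as if the cluster had no hosts; this is the default

- ANY_ENDPOINT: schedule across all hosts, ignoring host metadata

- DEFAULT_SUBSET: schedule to the default subset, which must be defined in advance

Subsets must be predefined before the subset load balancer can use them during scheduling.

Subset metadata is key/value data and must be defined under the envoy.lb filter name in filter_metadata.

Metadata example: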

load_assignment:
  cluster_name: webcluster1
  endpoints:
  - lb_endpoints:
    - endpoint:
        address:
          socket_address:
            protocol: TCP
            address: ep1
            port_value: 80
      metadata:
        filter_metadata:
          envoy.lb:
            version: '1.0'
            stage: 'prod'

6.2 Subsets

Subset configuration example:

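clusters:
- name: ...
  ...
  lb_subset_config:
    fallback_policy: "..."        # fallback policy; defaults to NO_FALLBACK
    default_subset: "{...}"       # default subset used by the DEFAULT_SUBSET fallback policy
    subset_selectors:             # list of subset selectors
    - keys: []                    # one selector: the list of metadata keys used to classify hosts
      fallback_policy: ...        # fallback policy specific to this selector
    locality_weight_aware: "..."  # whether to honor endpoint locality and locality weights when routing to a subset; has some known caveats
    scale_locality_weight: "..."  # whether to scale each locality weight by the ratio of hosts in the subset to hosts in that locality
    panic_mode_any: "..."         # whether to pick any host in the whole cluster when a fallback policy is configured but its subset has no hosts
    list_as_any: "..."            # whether a list-valued endpoint metadata entry matches when any element of the list matches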

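- For each selector, Envoy iterates over the hosts, checks their "envoy.lb" filter metadata, and creates one subset for each unique combination of key values.

- A host is added to a subset of a selector only if its metadata defines every key listed in that selector. This also means a host can satisfy several subset selectors at once, in which case it belongs to several subsets.

- If no host defines metadata, no subsets are created.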
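The selector rules can be modelled with a short Python sketch. It uses the endpoint metadata and subset_selectors of the demo in sections 6.4 and 6.5 and reproduces only the grouping step; how Envoy stores subsets internally and balances inside them is not modelled.

```python
from collections import defaultdict

# Endpoint metadata from the demo cluster (filter_metadata["envoy.lb"]).
HOSTS = {
    "e1": {"stage": "prod", "version": "1.0", "type": "std", "xlarge": True},
    "e2": {"stage": "prod", "version": "1.0", "type": "std"},
    "e3": {"stage": "prod", "version": "1.1", "type": "std"},
    "e4": {"stage": "prod", "version": "1.1", "type": "std"},
    "e5": {"stage": "prod", "version": "1.0", "type": "bigmem"},
    "e6": {"stage": "prod", "version": "1.1", "type": "bigmem"},
    "e7": {"stage": "dev", "version": "1.2-pre", "type": "std"},
}
SELECTORS = [["stage", "type"], ["stage", "version"], ["version"], ["xlarge", "version"]]


def build_subsets(hosts, selectors):
    """Map each unique (key, value) combination to the hosts that carry it.

    A host joins a selector's subset only if its metadata defines every key of
    that selector, so one host can end up in several subsets.
    """
    subsets = defaultdict(list)
    for keys in selectors:
        ordered = sorted(keys)
        for name, meta in hosts.items():
            if all(k in meta for k in ordered):
                subsets[tuple((k, meta[k]) for k in ordered)].append(name)
    return dict(subsets)


for subset, members in sorted(build_subsets(HOSTS, SELECTORS).items(), key=str):
    print(dict(subset), members)
```

Only e1 defines xlarge, so the ["xlarge", "version"] selector produces a single one-host subset, which is what test 4 in section 6.6.5 relies on.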

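6.3 Route metadata matching (metadata_match)

Using load balancer subsets also requires a metadata match condition (metadata_match) on the route entry; it is used to look up a specific subset during routing:

- Traffic can only be routed when the upstream cluster has a subset matching the metadata set in metadata_match.

- When the route target is defined with weighted_clusters, each target cluster inside it can also define its own metadata_match.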

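routes:
- name: ...
  match: {...}
  route:
    cluster: ...              # cluster and weighted_clusters are alternatives
    # metadata_match: endpoint metadata match criteria used by the subset load balancer. If weighted_clusters
    # is used and a cluster inside it also defines metadata_match, the two are merged and the value defined
    # in weighted_clusters takes precedence. The filter name must be envoy.lb
    metadata_match:
      filter_metadata:
        envoy.lb:
          key1: value1
          key2: value2
          ...
    weighted_clusters:
      clusters:
      - name: ...
        weight: ...
        metadata_match: {...}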

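If no subset matches the route's metadata, the fallback policy applies; with DEFAULT_SUBSET, as in the demo below, the request goes to the default subset instead of failing.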
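The lookup and fallback steps can be sketched in Python. Assumptions: the host metadata, selectors and default subset are copied from the demo in section 6.5; a subset is only found when the metadata_match keys are exactly the keys of one selector, which is how I understand the subset load balancer's lookup; and route-level metadata_match is merged with a weighted_clusters entry's, the latter winning on conflicts.

```python
HOSTS = {
    "e1": {"stage": "prod", "version": "1.0", "type": "std", "xlarge": True},
    "e2": {"stage": "prod", "version": "1.0", "type": "std"},
    "e3": {"stage": "prod", "version": "1.1", "type": "std"},
    "e4": {"stage": "prod", "version": "1.1", "type": "std"},
    "e5": {"stage": "prod", "version": "1.0", "type": "bigmem"},
    "e6": {"stage": "prod", "version": "1.1", "type": "bigmem"},
    "e7": {"stage": "dev", "version": "1.2-pre", "type": "std"},
}
SELECTORS = [["stage", "type"], ["stage", "version"], ["version"], ["xlarge", "version"]]
DEFAULT_SUBSET = {"stage": "prod", "version": "1.0", "type": "std"}


def merge_match(route_match, cluster_match=None):
    """Merge route-level metadata_match with a weighted_clusters entry's;
    the weighted_clusters value wins when both set the same key."""
    merged = dict(route_match or {})
    merged.update(cluster_match or {})
    return merged


def hosts_matching(hosts, criteria):
    """Hosts whose metadata equals every key/value pair in criteria."""
    return [name for name, meta in hosts.items()
            if all(k in meta and meta[k] == v for k, v in criteria.items())]


def select_hosts(criteria, hosts, selectors, fallback="NO_FALLBACK", default_subset=None):
    """Eligible hosts for a request carrying the given metadata_match criteria."""
    selector_keys = {frozenset(keys) for keys in selectors}
    if criteria and frozenset(criteria) in selector_keys:
        matched = hosts_matching(hosts, criteria)
        if matched:
            return matched
    # No metadata, or no subset for this metadata: apply the fallback policy.
    if fallback == "ANY_ENDPOINT":
        return list(hosts)
    if fallback == "DEFAULT_SUBSET":
        return hosts_matching(hosts, default_subset or {})
    return []  # NO_FALLBACK: fail as if the cluster had no hosts


# Catch-all route, 90% entry: stage=prod (route) merged with version=1.0 (entry).
print(select_hosts(merge_match({"stage": "prod"}, {"version": "1.0"}),
                   HOSTS, SELECTORS, "DEFAULT_SUBSET", DEFAULT_SUBSET))  # ['e1', 'e2', 'e5']
# No selector has exactly the key "type", so the default subset is used.
print(select_hosts({"type": "std"}, HOSTS, SELECTORS,
                   "DEFAULT_SUBSET", DEFAULT_SUBSET))  # ['e1', 'e2']
```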

6.4 docker-compose

8 containers in total:

- front-envoy: the front proxy, address 172.31.33.2

- e1: the first backend service, address 172.31.33.11, stage: "prod", version: "1.0", type: "std", xlarge: true

- e2: the second backend service, address 172.31.33.12, stage: "prod", version: "1.0", type: "std"

- e3: the third backend service, address 172.31.33.13, stage: "prod", version: "1.1", type: "std"

- e4: the fourth backend service, address 172.31.33.14, stage: "prod", version: "1.1", type: "std"

- e5: the fifth backend service, address 172.31.33.15, stage: "prod", version: "1.0", type: "bigmem"

- e6: the sixth backend service, address 172.31.33.16, stage: "prod", version: "1.1", type: "bigmem"

- e7: the seventh backend service, address 172.31.33.17, stage: "dev", version: "1.2-pre", type: "std"

version: '3'

services:
  front-envoy:
    image: envoyproxy/envoy-alpine:v1.21.5
    volumes:
      - ./front-envoy.yaml:/etc/envoy/envoy.yaml
    networks:
      envoymesh:
        ipv4_address: 172.31.33.2
    expose:
      # Expose ports 80 (for general traffic) and 9901 (for the admin server)
      - "80"
      - "9901"

  e1:
    image: ikubernetes/demoapp:v1.0
    hostname: e1
    networks:
      envoymesh:
        ipv4_address: 172.31.33.11
        aliases:
          - e1
    expose:
      - "80"

  e2:
    image: ikubernetes/demoapp:v1.0
    hostname: e2
    networks:
      envoymesh:
        ipv4_address: 172.31.33.12
        aliases:
          - e2
    expose:
      - "80"

  e3:
    image: ikubernetes/demoapp:v1.0
    hostname: e3
    networks:
      envoymesh:
        ipv4_address: 172.31.33.13
        aliases:
          - e3
    expose:
      - "80"

  e4:
    image: ikubernetes/demoapp:v1.0
    hostname: e4
    networks:
      envoymesh:
        ipv4_address: 172.31.33.14
        aliases:
          - e4
    expose:
      - "80"

  e5:
    image: ikubernetes/demoapp:v1.0
    hostname: e5
    networks:
      envoymesh:
        ipv4_address: 172.31.33.15
        aliases:
          - e5
    expose:
      - "80"

  e6:
    image: ikubernetes/demoapp:v1.0
    hostname: e6
    networks:
      envoymesh:
        ipv4_address: 172.31.33.16
        aliases:
          - e6
    expose:
      - "80"

  e7:
    image: ikubernetes/demoapp:v1.0
    hostname: e7
    networks:
      envoymesh:
        ipv4_address: 172.31.33.17
        aliases:
          - e7
    expose:
      - "80"

networks:
  envoymesh:
    driver: bridge
    ipam:
      config:
        - subnet: 172.31.33.0/24

6.5 envoy.yaml

admin:
  access_log_path: "/dev/null"
  address:
    socket_address: { address: 0.0.0.0, port_value: 9901 }

static_resources:
  listeners:
  - address:
      socket_address: { address: 0.0.0.0, port_value: 80 }
    name: listener_http
    filter_chains:
    - filters:
      - name: envoy.filters.network.http_connection_manager
        typed_config:
          "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
          codec_type: auto
          stat_prefix: ingress_http
          route_config:
            name: local_route
            virtual_hosts:
            - name: backend
              domains:
              - "*"
              routes:
              - match:
                  prefix: "/"
                  headers:
                  - name: x-custom-version
                    exact_match: pre-release
                route:
                  cluster: webcluster1
                  metadata_match:
                    filter_metadata:
                      envoy.lb:
                        version: "1.2-pre"
                        stage: "dev"
              - match:
                  prefix: "/"
                  headers:
                  - name: x-hardware-test
                    exact_match: memory
                route:
                  cluster: webcluster1
                  metadata_match:
                    filter_metadata:
                      envoy.lb:
                        type: "bigmem"
                        stage: "prod"
              - match:
                  prefix: "/"
                route:
                  weighted_clusters:
                    clusters:
                    - name: webcluster1
                      weight: 90
                      metadata_match:
                        filter_metadata:
                          envoy.lb:
                            version: "1.0"
                    - name: webcluster1
                      weight: 10
                      metadata_match:
                        filter_metadata:
                          envoy.lb:
                            version: "1.1"
                  metadata_match:
                    filter_metadata:
                      envoy.lb:
                        stage: "prod"
          http_filters:
          - name: envoy.filters.http.router

  clusters:
  - name: webcluster1
    connect_timeout: 0.5s
    type: STRICT_DNS
    lb_policy: ROUND_ROBIN
    load_assignment:
      cluster_name: webcluster1
      endpoints:
      - lb_endpoints:
        - endpoint:
            address:
              socket_address:
                address: e1
                port_value: 80
          metadata:
            filter_metadata:
              envoy.lb:
                stage: "prod"
                version: "1.0"
                type: "std"
                xlarge: true
        - endpoint:
            address:
              socket_address:
                address: e2
                port_value: 80
          metadata:
            filter_metadata:
              envoy.lb:
                stage: "prod"
                version: "1.0"
                type: "std"
        - endpoint:
            address:
              socket_address:
                address: e3
                port_value: 80
          metadata:
            filter_metadata:
              envoy.lb:
                stage: "prod"
                version: "1.1"
                type: "std"
        - endpoint:
            address:
              socket_address:
                address: e4
                port_value: 80
          metadata:
            filter_metadata:
              envoy.lb:
                stage: "prod"
                version: "1.1"
                type: "std"
        - endpoint:
            address:
              socket_address:
                address: e5
                port_value: 80
          metadata:
            filter_metadata:
              envoy.lb:
                stage: "prod"
                version: "1.0"
                type: "bigmem"
        - endpoint:
            address:
              socket_address:
                address: e6
                port_value: 80
          metadata:
            filter_metadata:
              envoy.lb:
                stage: "prod"
                version: "1.1"
                type: "bigmem"
        - endpoint:
            address:
              socket_address:
                address: e7
                port_value: 80
          metadata:
            filter_metadata:
              envoy.lb:
                stage: "dev"
                version: "1.2-pre"
                type: "std"
    lb_subset_config:
      fallback_policy: DEFAULT_SUBSET
      default_subset:
        stage: "prod"
        version: "1.0"
        type: "std"
      subset_selectors:
      - keys: ["stage", "type"]
      - keys: ["stage", "version"]
      - keys: ["version"]
      - keys: ["xlarge", "version"]
    health_checks:
    - timeout: 5s
      interval: 10s
      unhealthy_threshold: 2
      healthy_threshold: 1
      http_health_check:
        path: /livez
        expected_statuses:
          start: 200
          end: 399

6.6 Deployment and test

# docker-compose up

6.6.1 Weight test

envoy.yaml assigns weights to the version labels in the catch-all route:

- name: webcluster1
  weight: 90
  metadata_match:
    filter_metadata:
      envoy.lb:
        version: "1.0"
- name: webcluster1
  weight: 10
  metadata_match:
    filter_metadata:
      envoy.lb:
        version: "1.1"

so running the following script produces roughly a 9:1 ratio of requests:

# cat test.sh

#!/bin/bash
# Usage: ./test.sh <front-envoy address>
declare -i v10=0
declare -i v11=0

for ((counts=0; counts<200; counts++)); do
  # $1 is the host address of the front-envoy; e1, e2 and e5 carry version 1.0
  if curl -s "http://$1/hostname" | grep -qE "e[125]"; then
    ((v10++))
  else
    ((v11++))
  fi
done

echo "Requests: v1.0:v1.1 = $v10:$v11"

# ./test.sh 172.31.33.2

Requests: v1.0:v1.1 = 183:17

# ./test.sh 172.31.33.2

Requests: v1.0:v1.1 = 184:16

# ./test.sh 172.31.33.2

Requests: v1.0:v1.1 = 184:16

# ./test.sh 172.31.33.2

Requests: v1.0:v1.1 = 180:20
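Whether results such as 183:17 are consistent with a 90:10 weight can be checked with a short binomial calculation in Python, assuming each request independently picks a weighted_clusters entry at random in proportion to its weight. The observed v1.1 counts are the four test.sh results above.

```python
import math


def weighted_split_stats(requests, weight, total_weight):
    """Expected count and standard deviation for one weighted_clusters entry,
    treating each request as an independent weighted random pick (binomial)."""
    p = weight / total_weight
    mean = requests * p
    std = math.sqrt(requests * p * (1 - p))
    return mean, std


mean, std = weighted_split_stats(200, 10, 100)  # the v1.1 entry: weight 10 of 100
observed_v11 = [17, 16, 16, 20]                 # v1.1 counts from the four runs
for count in observed_v11:
    print(count, f"{(count - mean) / std:+.2f} std devs from {mean:.0f}")
```

All four runs land within about one standard deviation (roughly 4.2 requests) of the expected 20, so the observed ratios match the 90/10 configuration.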

6.6.2 Subset test 1

When a request carries the header x-hardware-test: memory, the following route in envoy.yaml selects the subset with type: "bigmem" and stage: "prod", i.e. e5 and e6:

- match:
    prefix: "/"
    headers:
    - name: x-hardware-test
      exact_match: memory
  route:
    cluster: webcluster1
    metadata_match:
      filter_metadata:
        envoy.lb:
          type: "bigmem"
          stage: "prod"

root@k8s-node-1:~# curl -H "x-hardware-test: memory" 172.31.33.2/hostname

ServerName: e5

root@k8s-node-1:~# curl -H "x-hardware-test: memory" 172.31.33.2/hostname

ServerName: e5

root@k8s-node-1:~# curl -H "x-hardware-test: memory" 172.31.33.2/hostname

ServerName: e6

root@k8s-node-1:~# curl -H "x-hardware-test: memory" 172.31.33.2/hostname

ServerName: e5

root@k8s-node-1:~# curl -H "x-hardware-test: memory" 172.31.33.2/hostname

ServerName: e6

root@k8s-node-1:~# curl -H "x-hardware-test: memory" 172.31.33.2/hostname

ServerName: e6

6.6.3 Subset test 2

When a request carries the header x-custom-version: pre-release, the route selects the backend subset labeled version: "1.2-pre" and stage: "dev", i.e. e7:

- match:
    prefix: "/"
    headers:
    - name: x-custom-version
      exact_match: pre-release
  route:
    cluster: webcluster1
    metadata_match:
      filter_metadata:
        envoy.lb:
          version: "1.2-pre"
          stage: "dev"

root@k8s-node-1:~# curl -H "x-custom-version: pre-release" 172.31.33.2/hostname

ServerName: e7

root@k8s-node-1:~# curl -H "x-custom-version: pre-release" 172.31.33.2/hostname

ServerName: e7

root@k8s-node-1:~# curl -H "x-custom-version: pre-release" 172.31.33.2/hostname

ServerName: e7

root@k8s-node-1:~# curl -H "x-custom-version: pre-release" 172.31.33.2/hostname

ServerName: e7

root@k8s-node-1:~# curl -H "x-custom-version: pre-release" 172.31.33.2/hostname

ServerName: e7

root@k8s-node-1:~# curl -H "x-custom-version: pre-release" 172.31.33.2/hostname

ServerName: e7

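6.6.4 Subset test 3

Suppose we want to route to the subset made up of e1, e2, e3 and e4.

That requires a new route in envoy.yaml that matches on the labels stage=prod, type=std (the existing ["stage", "type"] selector already produces this subset). Because routes are matched in order, place it before the final catch-all route: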

- match:
    prefix: "/"
    headers:
    - name: prod-type
      exact_match: std
  route:
    cluster: webcluster1
    metadata_match:
      filter_metadata:
        envoy.lb:
          stage: "prod"
          type: "std"

Result:

root@k8s-node-1:~# curl -H "prod-type: std" 172.31.33.2/hostname

ServerName: e1

root@k8s-node-1:~# curl -H "prod-type: std" 172.31.33.2/hostname

ServerName: e1

root@k8s-node-1:~# curl -H "prod-type: std" 172.31.33.2/hostname

ServerName: e2

root@k8s-node-1:~# curl -H "prod-type: std" 172.31.33.2/hostname

ServerName: e1

root@k8s-node-1:~# curl -H "prod-type: std" 172.31.33.2/hostname

ServerName: e2

root@k8s-node-1:~# curl -H "prod-type: std" 172.31.33.2/hostname

ServerName: e3

root@k8s-node-1:~# curl -H "prod-type: std" 172.31.33.2/hostname

ServerName: e2

root@k8s-node-1:~# curl -H "prod-type: std" 172.31.33.2/hostname

ServerName: e3

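6.6.5 Subset test 4

Select the subset that contains only e1 (version "1.0" and xlarge: true, covered by the ["xlarge", "version"] selector):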

- match:
    prefix: "/"
    headers:
    - name: subset
      exact_match: endpoint
  route:
    cluster: webcluster1
    metadata_match:
      filter_metadata:
        envoy.lb:
          version: "1.0"
          xlarge: true

Test result:

root@k8s-node-1:~# curl -H "subset: endpoint" 172.31.33.2/hostname

ServerName: e1

root@k8s-node-1:~# curl -H "subset: endpoint" 172.31.33.2/hostname

ServerName: e1

root@k8s-node-1:~# curl -H "subset: endpoint" 172.31.33.2/hostname

ServerName: e1

root@k8s-node-1:~# curl -H "subset: endpoint" 172.31.33.2/hostname

ServerName: e1

root@k8s-node-1:~# curl -H "subset: endpoint" 172.31.33.2/hostname

ServerName: e1
