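Early versions of Kubernetes relied on Heapster for complete performance data collection and monitoring. Starting with version 1.8, Kubernetes began exposing performance data through a standardized interface, the Metrics API, and starting with version 1.10 Heapster was replaced by Metrics Server. In the new Kubernetes monitoring architecture, Metrics Server provides the core metrics (Core Metrics), namely CPU and memory usage for Nodes and Pods. Monitoring of other, custom metrics (Custom Metrics) is handled by components such as Prometheus.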

Monitoring Pod and Node CPU and memory usage with Metrics Server

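Once deployed, Metrics Server serves Pod and Node monitoring data through the core Kubernetes API Server under the path "/apis/metrics.k8s.io/v1beta1". The Metrics Server source code and deployment manifests can be found in its GitHub repository (https://github.com/kubernetes-incubator/metrics-server).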

First, deploy a Metrics Server instance. The YAML configuration below defines a ServiceAccount, a Deployment and a Service:

```yaml
# Note: Metrics Server also needs RBAC permissions (a ClusterRole and its bindings),
# which are not shown here; the deploy manifests in the upstream repository include them.
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: metrics-server
  namespace: kube-system
---
apiVersion: apps/v1            # extensions/v1beta1 Deployments were removed in Kubernetes 1.16
kind: Deployment
metadata:
  name: metrics-server
  namespace: kube-system
  labels:
    k8s-app: metrics-server
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  template:
    metadata:
      name: metrics-server
      labels:
        k8s-app: metrics-server
    spec:
      serviceAccountName: metrics-server
      containers:
      - name: metrics-server
        image: k8s.gcr.io/metrics-server-amd64:v0.3.1   # the image can be pulled into a local registry first
        imagePullPolicy: IfNotPresent
        command:
        - /metrics-server
        - --kubelet-insecure-tls
        - --kubelet-preferred-address-types=InternalIP
        volumeMounts:
        - name: tmp-dir
          mountPath: /tmp
      volumes:
      - name: tmp-dir
        emptyDir: {}
---
apiVersion: v1
kind: Service
metadata:
  name: metrics-server
  namespace: kube-system
  labels:
    kubernetes.io/name: "Metrics-server"
spec:
  selector:
    k8s-app: metrics-server
  ports:
  - port: 443
    protocol: TCP
    targetPort: 443
```

Finally, create an APIService resource so that the monitoring data is served under the "/apis/metrics.k8s.io/v1beta1" path:

```yaml
apiVersion: apiregistration.k8s.io/v1   # use apiregistration.k8s.io/v1beta1 on clusters older than 1.10
kind: APIService
metadata:
  name: v1beta1.metrics.k8s.io
spec:
  service:
    name: metrics-server
    namespace: kube-system
  group: metrics.k8s.io
  version: v1beta1
  insecureSkipTLSVerify: true
  groupPriorityMinimum: 100
  versionPriority: 100
```

After deployment, make sure the metrics-server Pod has started successfully.

Use the `kubectl top nodes` and `kubectl top pods` commands to monitor CPU and memory usage.
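Both commands read from the Metrics API. The sketch below shows roughly what that data looks like and how its resource quantities convert to the familiar "m" (millicores) and "Mi" units. The JSON response is hypothetical, though its field layout follows the metrics.k8s.io/v1beta1 NodeMetricsList. You can fetch a real response with `kubectl get --raw /apis/metrics.k8s.io/v1beta1/nodes`. The quantity parser is a minimal assumption-based version; it does not handle exponent forms such as "1e3".

```python
import json
from decimal import Decimal

# Suffixes used by Kubernetes resource quantities (decimal SI and binary IEC).
DECIMAL_SUFFIXES = {
    "n": Decimal("1e-9"), "u": Decimal("1e-6"), "m": Decimal("1e-3"), "": Decimal(1),
    "k": Decimal(10**3), "M": Decimal(10**6), "G": Decimal(10**9), "T": Decimal(10**12),
}
BINARY_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def parse_quantity(q: str) -> Decimal:
    """Convert a quantity such as '250m', '123456789n' or '1024Ki' to base units
    (cores for CPU, bytes for memory). Exponent forms like '1e3' are not handled."""
    for suffix, factor in BINARY_SUFFIXES.items():
        if q.endswith(suffix):
            return Decimal(q[: -len(suffix)]) * factor
    suffix = q[-1] if q[-1].isalpha() else ""
    number = q[:-1] if suffix else q
    return Decimal(number) * DECIMAL_SUFFIXES[suffix]


def summarize(node_metrics_list: dict) -> dict:
    """Map node name -> (CPU in millicores, memory in MiB), truncated to integers."""
    result = {}
    for item in node_metrics_list["items"]:
        usage = item["usage"]
        cpu_millicores = parse_quantity(usage["cpu"]) * 1000
        memory_mib = parse_quantity(usage["memory"]) / 2**20
        result[item["metadata"]["name"]] = (int(cpu_millicores), int(memory_mib))
    return result


# Hypothetical response body shaped like a metrics.k8s.io/v1beta1 NodeMetricsList.
SAMPLE = json.loads("""
{"kind": "NodeMetricsList", "apiVersion": "metrics.k8s.io/v1beta1",
 "items": [{"metadata": {"name": "node-1"}, "timestamp": "2019-04-01T00:00:00Z",
            "window": "30s", "usage": {"cpu": "123456789n", "memory": "1048576Ki"}}]}
""")

if __name__ == "__main__":
    for name, (cpu, mem) in summarize(SAMPLE).items():
        print(f"{name}\t{cpu}m\t{mem}Mi")
```

For the sample, this prints `node-1`, `123m` and `1024Mi`. CPU is reported in nanocores ("n") and memory in KiB ("Ki"), which is why a conversion step is needed at all.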

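The data provided by Metrics Server can also be consumed by the HPA (Horizontal Pod Autoscaler) controller, which scales Pods automatically based on CPU utilization or memory usage.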
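The core rule HPA applies to these metrics is documented as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). Scaling is skipped when the ratio is within a tolerance of 1.0 (0.1 by default). The sketch below implements only that rule plus min/max clamping. The real controller also accounts for unready Pods, missing metrics and stabilization windows, so treat this as an approximation.

```python
import math


def desired_replicas(current_replicas: int, current_value: float, target_value: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Core HPA scaling rule: ceil(current * current_value / target_value),
    skipped when the ratio is within `tolerance` of 1.0, then clamped to [min, max]."""
    ratio = current_value / target_value
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas          # close enough to the target: do not scale
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # 3 Pods averaging 90% CPU utilization against a 50% target: scale out.
    print(desired_replicas(3, 90, 50))
```

With 3 replicas at 90% against a 50% target, the ratio is 1.8 and the result is ceil(5.4) = 6. At 52% the ratio of 1.04 falls inside the tolerance, so the replica count stays unchanged.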

Building a cluster performance monitoring platform with Prometheus + Grafana

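Prometheus is an open-source monitoring system developed by SoundCloud. It was the second CNCF project to graduate, after Kubernetes, and is widely used in the container and microservice space. Its main features are as follows.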

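◎ A multi-dimensional data model in which each time series is identified by a metric name and key-value label pairs.

◎ A flexible query language, PromQL.

◎ No dependency on distributed storage; each server node is autonomous.

◎ Monitoring data is collected by pulling it over HTTP.

◎ Time series can also be pushed through an intermediary gateway.

◎ Support for multiple graphing and dashboard front ends, such as Grafana.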
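The multi-dimensional data model in the first bullet can be illustrated with a toy store. A series is identified by its complete label set, and Prometheus stores the metric name itself as the `__name__` label. A selector then picks out every series whose labels match. This is only a model of the concept, not how the TSDB is implemented. The metric `http_requests_total` and its labels are hypothetical examples.

```python
from typing import Dict, FrozenSet, List, Tuple

# A series is identified by its full label set; the metric name is stored as __name__.
SeriesId = FrozenSet[Tuple[str, str]]


class TinySeriesStore:
    """Toy in-memory store illustrating the data model (not how the TSDB works)."""

    def __init__(self) -> None:
        self.series: Dict[SeriesId, List[Tuple[float, float]]] = {}

    def append(self, name: str, labels: Dict[str, str], ts: float, value: float) -> None:
        series_id = frozenset({**labels, "__name__": name}.items())
        self.series.setdefault(series_id, []).append((ts, value))

    def select(self, **matchers: str) -> Dict[SeriesId, List[Tuple[float, float]]]:
        """Equality matching only, like the selector http_requests_total{method="GET"}."""
        return {sid: samples for sid, samples in self.series.items()
                if all(dict(sid).get(k) == v for k, v in matchers.items())}


if __name__ == "__main__":
    store = TinySeriesStore()
    store.append("http_requests_total", {"method": "GET", "code": "200"}, 0, 10)
    store.append("http_requests_total", {"method": "POST", "code": "200"}, 0, 3)
    print(len(store.select(__name__="http_requests_total", method="GET")))
```

The two appends create two distinct series because their label sets differ, even though the metric name is the same.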

The Prometheus ecosystem consists of several components, each extending its functionality.

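◎ Prometheus Server: collects monitoring data, stores it as time series, and provides data querying.

◎ Client SDKs: development libraries for integrating applications with Prometheus.

◎ Push Gateway: a gateway component that accepts pushed data.

◎ Third-party Exporters: collectors for various external systems' metrics, whose data Prometheus can scrape.

◎ AlertManager: the alert manager.

◎ Other supporting tools.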
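The Push Gateway exists for jobs that cannot be scraped, such as short-lived batch jobs. They push metrics in the Prometheus text format to `/metrics/job/<job_name>` on the gateway, and Prometheus then scrapes the gateway. The sketch below only builds the HTTP request. The gateway address `http://pushgateway:9091` (9091 is the Pushgateway's default port) and the metric `backup_last_success_unixtime` are assumptions for illustration, since no Pushgateway is deployed in this article.

```python
import urllib.parse
import urllib.request


def build_push_request(gateway: str, job: str, metrics_text: str,
                       method: str = "PUT") -> urllib.request.Request:
    """Build (but do not send) a push to the Pushgateway grouping key job=<job>.
    PUT replaces every metric in the group; POST replaces only metrics with the same names."""
    if not metrics_text.endswith("\n"):
        metrics_text += "\n"  # the text format expects every line, including the last, to end in \n
    url = f"{gateway.rstrip('/')}/metrics/job/{urllib.parse.quote(job, safe='')}"
    return urllib.request.Request(url, data=metrics_text.encode("utf-8"), method=method,
                                  headers={"Content-Type": "text/plain; version=0.0.4"})


if __name__ == "__main__":
    # Hypothetical metric from a batch job; the gateway address is an assumption.
    req = build_push_request("http://pushgateway:9091", "nightly_backup",
                             "# TYPE backup_last_success_unixtime gauge\n"
                             "backup_last_success_unixtime 1554076800\n")
    print(req.get_method(), req.full_url)
    # urllib.request.urlopen(req)  # uncomment to actually push
```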

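The main functions of Prometheus Server, the core component, are:

- discovering the resources or services to monitor from the Kubernetes Master;
- pulling metric data from the various Exporters and storing it in a time series database (TSDB);
- offering an HTTP API that other systems can query;
- supporting data queries written in PromQL;
- pushing alerts to AlertManager.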
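As an example of the HTTP API, an instant PromQL query is sent as `GET /api/v1/query?query=...`. The JSON reply has a `status` field and a `data` object whose `result` holds (labels, [timestamp, value]) entries. The sketch below builds such a URL and parses a reply. It assumes the server is reachable at `http://prometheus:9090`, which matches the Service deployed later in this article. The response body is a hand-written sample, not real output.

```python
import json
import urllib.parse
from typing import Dict, List, Tuple


def instant_query_url(base_url: str, promql: str) -> str:
    """URL for Prometheus' instant-query endpoint GET /api/v1/query."""
    return f"{base_url.rstrip('/')}/api/v1/query?" + urllib.parse.urlencode({"query": promql})


def parse_vector(body: str) -> List[Tuple[Dict[str, str], float]]:
    """Turn an instant-vector response into (labels, value) pairs."""
    resp = json.loads(body)
    if resp.get("status") != "success":
        raise RuntimeError(resp.get("error", "query failed"))
    data = resp["data"]
    if data["resultType"] != "vector":
        raise ValueError(f"expected a vector, got {data['resultType']}")
    # Each sample value is [unix_timestamp, "value-as-string"].
    return [(r["metric"], float(r["value"][1])) for r in data["result"]]


# Hand-written sample reply for the query up{job="prometheus"}.
SAMPLE_BODY = json.dumps({
    "status": "success",
    "data": {"resultType": "vector", "result": [
        {"metric": {"__name__": "up", "job": "prometheus", "instance": "localhost:9090"},
         "value": [1554076800.0, "1"]}]}})

if __name__ == "__main__":
    print(instant_query_url("http://prometheus:9090", 'up{job="prometheus"}'))
    # Against a real server, read the body with urllib.request.urlopen(url) instead.
    for labels, value in parse_vector(SAMPLE_BODY):
        print(labels["instance"], value)
```

Values arrive as strings in the JSON reply so that special values like `NaN` and `+Inf` survive the round trip. This is why the parser converts them with `float()`.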

The following walks through deploying the Prometheus service.

First, create a ConfigMap to hold Prometheus' main configuration file, prometheus.yml. In it you configure the Kubernetes cluster resources or services to monitor, such as Services, Pods and Nodes:

```yaml
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-config
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: EnsureExists
data:
  prometheus.rules.yml: |
    groups:
    - name: recording_rules
      rules:
      - record: service_myweb:container_memory_working_set_bytes:sum
        expr: sum by (namespace, label_service_myweb) (sum(container_memory_working_set_bytes{image!=""}) by (pod_name, namespace) * on (namespace, pod_name) group_left(service_myweb, label_service_myweb) label_replace(kube_pod_labels, "pod_name", "$1", "pod", "(.*)"))
  prometheus.yml: |
    global:
      scrape_interval: 30s
    rule_files:
    - 'prometheus.rules.yml'
    scrape_configs:
    - job_name: prometheus
      static_configs:
      - targets:
        - localhost:9090
    - job_name: kubernetes-apiservers
      kubernetes_sd_configs:
      - role: endpoints
      relabel_configs:
      - action: keep
        regex: default;kubernetes;https
        source_labels:
        - __meta_kubernetes_namespace
        - __meta_kubernetes_service_name
        - __meta_kubernetes_endpoint_port_name
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        insecure_skip_verify: true
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
    - job_name: kubernetes-nodes-kubelet
      kubernetes_sd_configs:
      - role: node
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        insecure_skip_verify: true
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
    - job_name: kubernetes-nodes-cadvisor
      kubernetes_sd_configs:
      - role: node
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - target_label: __metrics_path__
        replacement: /metrics/cadvisor
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        insecure_skip_verify: true
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
    - job_name: kubernetes-service-endpoints
      kubernetes_sd_configs:
      - role: endpoints
      relabel_configs:
      - action: keep
        regex: true
        source_labels:
        - __meta_kubernetes_service_annotation_prometheus_io_scrape
      - action: replace
        regex: (https?)
        source_labels:
        - __meta_kubernetes_service_annotation_prometheus_io_scheme
        target_label: __scheme__
      - action: replace
        regex: (.+)
        source_labels:
        - __meta_kubernetes_service_annotation_prometheus_io_path
        target_label: __metrics_path__
      - action: replace
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
        source_labels:
        - __address__
        - __meta_kubernetes_service_annotation_prometheus_io_port
        target_label: __address__
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - action: replace
        source_labels:
        - __meta_kubernetes_namespace
        target_label: kubernetes_namespace
      - action: replace
        source_labels:
        - __meta_kubernetes_service_name
        target_label: kubernetes_name
    - job_name: kubernetes-services
      kubernetes_sd_configs:
      - role: service
      metrics_path: /probe
      params:
        module:
        - http_2xx
      relabel_configs:
      - action: keep
        regex: true
        source_labels:
        - __meta_kubernetes_service_annotation_prometheus_io_probe
      - source_labels:
        - __address__
        target_label: __param_target
      - replacement: blackbox
        target_label: __address__
      - source_labels:
        - __param_target
        target_label: instance
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - source_labels:
        - __meta_kubernetes_namespace
        target_label: kubernetes_namespace
      - source_labels:
        - __meta_kubernetes_service_name
        target_label: kubernetes_name
    - job_name: kubernetes-pods
      kubernetes_sd_configs:
      - role: pod
      relabel_configs:
      - action: keep
        regex: true
        source_labels:
        - __meta_kubernetes_pod_annotation_prometheus_io_scrape
      - action: replace
        regex: (.+)
        source_labels:
        - __meta_kubernetes_pod_annotation_prometheus_io_path
        target_label: __metrics_path__
      - action: replace
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
        source_labels:
        - __address__
        - __meta_kubernetes_pod_annotation_prometheus_io_port
        target_label: __address__
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - action: replace
        source_labels:
        - __meta_kubernetes_namespace
        target_label: kubernetes_namespace
      - action: replace
        source_labels:
        - __meta_kubernetes_pod_name
        target_label: kubernetes_pod_name
    alerting:
      alertmanagers:
      - kubernetes_sd_configs:
        - role: pod
        tls_config:
          ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
        bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
        relabel_configs:
        - source_labels: [__meta_kubernetes_namespace]
          regex: kube-system
          action: keep
        - source_labels: [__meta_kubernetes_pod_label_k8s_app]
          regex: alertmanager
          action: keep
        - source_labels: [__meta_kubernetes_pod_container_port_number]
          regex:
          action: drop
```
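The relabel_configs in jobs such as kubernetes-service-endpoints decide which discovered targets are kept and how their labels are rewritten. For example, a Service annotated with prometheus.io/scrape: "true" is kept, and a prometheus.io/port annotation replaces the port in `__address__`. The sketch below is a simplified re-implementation of the keep/drop/replace/labelmap semantics, written to show how those rules behave. It is not Prometheus' code. It assumes the documented defaults: source values joined with ";", regex `(.*)`, replacement `$1`, and fully anchored regexes. It does not support `${1}`-style references or the other relabel actions. The target labels in the usage example are hypothetical.

```python
import re
from typing import Dict, List, Optional


def _to_python_template(replacement: str) -> str:
    # Prometheus writes capture groups as $1; Python's Match.expand uses \1.
    return re.sub(r"\$(\d+)", r"\\\1", replacement)


def relabel(labels: Dict[str, str], rules: List[dict]) -> Optional[Dict[str, str]]:
    """Apply keep/drop/replace/labelmap rules; return None if the target is dropped."""
    labels = dict(labels)
    for rule in rules:
        action = rule.get("action", "replace")
        regex = re.compile(rule.get("regex", "(.*)"))
        template = _to_python_template(rule.get("replacement", "$1"))
        # Source label values are joined with ';' (the default separator); missing labels are ''.
        value = ";".join(labels.get(name, "") for name in rule.get("source_labels", []))
        match = regex.fullmatch(value)  # Prometheus regexes are fully anchored
        if action == "keep" and match is None:
            return None
        if action == "drop" and match is not None:
            return None
        if action == "replace" and match is not None:
            result = match.expand(template)
            if result:
                labels[rule["target_label"]] = result
            else:
                labels.pop(rule["target_label"], None)  # an empty result removes the label
        if action == "labelmap":
            for name, val in list(labels.items()):
                name_match = regex.fullmatch(name)
                if name_match is not None:
                    labels[name_match.expand(template)] = val
    return labels


# A subset of the kubernetes-service-endpoints rules from the ConfigMap above.
SERVICE_ENDPOINT_RULES = [
    {"action": "keep", "regex": "true",
     "source_labels": ["__meta_kubernetes_service_annotation_prometheus_io_scrape"]},
    {"action": "replace", "regex": r"(.+)", "target_label": "__metrics_path__",
     "source_labels": ["__meta_kubernetes_service_annotation_prometheus_io_path"]},
    {"action": "replace", "regex": r"([^:]+)(?::\d+)?;(\d+)", "replacement": "$1:$2",
     "source_labels": ["__address__", "__meta_kubernetes_service_annotation_prometheus_io_port"],
     "target_label": "__address__"},
    {"action": "labelmap", "regex": r"__meta_kubernetes_service_label_(.+)"},
    {"action": "replace", "source_labels": ["__meta_kubernetes_namespace"],
     "target_label": "kubernetes_namespace"},
]

# Hypothetical discovered endpoint of an annotated Service.
TARGET = {
    "__address__": "10.0.0.5:9100",
    "__metrics_path__": "/metrics",
    "__meta_kubernetes_namespace": "kube-system",
    "__meta_kubernetes_service_label_app": "demo",
    "__meta_kubernetes_service_annotation_prometheus_io_scrape": "true",
    "__meta_kubernetes_service_annotation_prometheus_io_port": "9102",
}

if __name__ == "__main__":
    print(relabel(TARGET, SERVICE_ENDPOINT_RULES))
```

For this target, `__address__` becomes `10.0.0.5:9102` and `__metrics_path__` stays `/metrics`, because the path annotation is absent and `(.+)` does not match an empty string. The Service label `app` is copied over by labelmap. A Service without the scrape annotation is dropped entirely.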

Next, define the Prometheus Deployment and a NodePort Service for it:

```yaml
---
# Kubernetes service discovery (kubernetes_sd_configs) needs a ServiceAccount with RBAC
# permissions to list/watch nodes, services, endpoints and pods; it is not shown here.
apiVersion: apps/v1            # extensions/v1beta1 Deployments were removed in Kubernetes 1.16
kind: Deployment
metadata:
  name: prometheus
  namespace: kube-system
  labels:
    k8s-app: prometheus
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: prometheus
  template:
    metadata:
      labels:
        k8s-app: prometheus
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
    spec:
      priorityClassName: system-cluster-critical
      initContainers:
      - name: "init-chown-data"
        image: "busybox:latest"
        imagePullPolicy: "IfNotPresent"
        command: ["chown", "-R", "65534:65534", "/data"]   # Prometheus runs as nobody (65534)
        volumeMounts:
        - name: storage-volume
          mountPath: /data
          subPath: ""
      containers:
      - name: prometheus-server-configmap-reload
        image: "jimmidyson/configmap-reload:v0.1"
        imagePullPolicy: "IfNotPresent"
        args:
        - --volume-dir=/etc/config
        - --webhook-url=http://localhost:9090/-/reload
        volumeMounts:
        - name: config-volume
          mountPath: /etc/config
          readOnly: true
      - name: prometheus-server
        image: "prom/prometheus:v2.8.0"
        imagePullPolicy: "IfNotPresent"
        args:
        - --config.file=/etc/config/prometheus.yml
        - --storage.tsdb.path=/data
        - --web.console.libraries=/etc/prometheus/console_libraries
        - --web.console.templates=/etc/prometheus/consoles
        - --web.enable-lifecycle
        ports:
        - containerPort: 9090
        readinessProbe:
          httpGet:
            path: /-/ready
            port: 9090
          initialDelaySeconds: 30
          timeoutSeconds: 30
        livenessProbe:
          httpGet:
            path: /-/healthy
            port: 9090
          initialDelaySeconds: 30
          timeoutSeconds: 30
        volumeMounts:
        - name: config-volume
          mountPath: /etc/config
        - name: storage-volume
          mountPath: /data
          subPath: ""
      terminationGracePeriodSeconds: 300
      volumes:
      - name: config-volume
        configMap:
          name: prometheus-config
      - name: storage-volume
        hostPath:
          path: /prometheus-data   # type Directory: must already exist on the node
          type: Directory
---
kind: Service
apiVersion: v1
metadata:
  name: prometheus
  namespace: kube-system
  labels:
    kubernetes.io/name: "Prometheus"
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  type: NodePort
  ports:
  - name: http
    port: 9090
    # 9090 is outside the default NodePort range (30000-32767); the API Server's
    # --service-node-port-range must include it, otherwise choose a port inside that range.
    nodePort: 9090
    protocol: TCP
    targetPort: 9090
  selector:
    k8s-app: prometheus
```

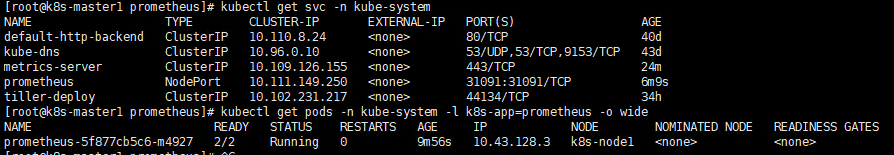

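Prometheus provides a simple web page for viewing the collected monitoring data. The Service above sets its NodePort to 9090, so the page can be reached on port 9090 of any Node. This requires the API Server's --service-node-port-range to include 9090, since the default range is 30000-32767.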

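On the Prometheus web page you can enter PromQL queries against the metric data, or select a metric to view. For example, select the container_network_receive_bytes_total metric to see how much network traffic the containers have received.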
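container_network_receive_bytes_total is a counter: it only ever grows, and it drops back to zero when a container restarts. Throughput is therefore usually viewed with a query such as `rate(container_network_receive_bytes_total[5m])`, which turns the counter into bytes per second. The sketch below shows the idea behind rate() on a list of (timestamp, value) samples, including reset handling. It is a simplification: PromQL's rate() also extrapolates to the edges of the range window, which this code does not do. The sample values are made up.

```python
from typing import List, Tuple


def simple_rate(samples: List[Tuple[float, float]]) -> float:
    """Per-second increase of a counter over (timestamp_seconds, value) samples.
    Simplified: unlike PromQL rate(), no extrapolation to the window boundaries."""
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    increase = 0.0
    for (_, prev), (_, cur) in zip(samples, samples[1:]):
        # A drop means the counter was reset (e.g. container restart), so it restarted from 0.
        increase += cur - prev if cur >= prev else cur
    elapsed = samples[-1][0] - samples[0][0]
    return increase / elapsed


if __name__ == "__main__":
    # Made-up samples scraped every 30s (the scrape_interval configured above).
    print(simple_rate([(0, 1000), (30, 4000), (60, 7000)]))
```

For the made-up samples, this prints `100.0` (6000 bytes over 60 seconds).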

Click the Graph tab to view the metric as a time series chart.

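Next, you can deploy Exporters for the various systems and services whose metrics you want to collect. Prometheus currently has Exporters for many open-source products, including databases, hardware, messaging systems, storage systems, HTTP servers and logging services. Information about the available Exporters is on the Prometheus website at https://prometheus.io/docs/instrumenting/exporters/.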

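The following uses the officially maintained node_exporter as an example. node_exporter collects host-level performance metrics, and its project page is https://github.com/prometheus/node_exporter. Its YAML configuration is as follows: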

```yaml
---
apiVersion: apps/v1            # extensions/v1beta1 DaemonSets were removed in Kubernetes 1.16
kind: DaemonSet
metadata:
  name: node-exporter
  namespace: kube-system
  labels:
    k8s-app: node-exporter
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    version: v0.17.0
spec:
  selector:                    # required by apps/v1
    matchLabels:
      k8s-app: node-exporter
  updateStrategy:
    type: OnDelete
  template:
    metadata:
      labels:
        k8s-app: node-exporter
        version: v0.17.0
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
    spec:
      priorityClassName: system-node-critical
      containers:
      - name: prometheus-node-exporter
        image: "prom/node-exporter:v0.17.0"
        imagePullPolicy: "IfNotPresent"
        args:
        - --path.procfs=/host/proc
        - --path.sysfs=/host/sys
        ports:
        - name: metrics
          containerPort: 9100
          hostPort: 9100
        volumeMounts:
        - name: proc
          mountPath: /host/proc
          readOnly: true
        - name: sys
          mountPath: /host/sys
          readOnly: true
        resources:
          limits:
            cpu: 1
            memory: 512Mi
          requests:
            cpu: 100m
            memory: 50Mi
      hostNetwork: true
      hostPID: true
      volumes:
      - name: proc
        hostPath:
          path: /proc
      - name: sys
        hostPath:
          path: /sys
---
apiVersion: v1
kind: Service
metadata:
  name: node-exporter
  namespace: kube-system
  annotations:
    prometheus.io/scrape: "true"   # picked up by the kubernetes-service-endpoints job
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "NodeExporter"
spec:
  clusterIP: None
  ports:
  - name: metrics
    port: 9100
    protocol: TCP
    targetPort: 9100
  selector:
    k8s-app: node-exporter
```

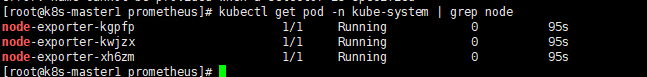

Prometheus的Web页面就可以查看node-exporter采集的Node指标数据了,如图:
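What Prometheus scrapes from node-exporter on port 9100 at /metrics is plain text in the Prometheus exposition format. Each line holds a metric name, optional {label="value"} pairs and a value, and lines starting with # are comments or HELP/TYPE metadata. The minimal parser below is an illustration of that format, not a complete implementation: it does not unescape label values and assumes no `}` inside them. The sample lines are hand-written in the style of node_exporter output.

```python
import re
from typing import Dict, List, Tuple

# name{labels} value [timestamp]
_LINE = re.compile(r'^([a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(.*)\})?\s+(\S+)(?:\s+-?\d+)?$')
_LABEL = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="((?:[^"\\]|\\.)*)"')


def parse_exposition(text: str) -> List[Tuple[str, Dict[str, str], float]]:
    """Return (metric_name, labels, value) for every sample line."""
    samples = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # blank line, comment, or HELP/TYPE metadata
        m = _LINE.match(line)
        if m is None:
            raise ValueError(f"cannot parse line: {line!r}")
        name, raw_labels, value = m.groups()
        labels = dict(_LABEL.findall(raw_labels or ""))
        samples.append((name, labels, float(value)))  # float() also accepts NaN and +Inf
    return samples


# Hand-written lines in the style of node_exporter output.
SAMPLE = """\
# HELP node_cpu_seconds_total Seconds the cpus spent in each mode.
# TYPE node_cpu_seconds_total counter
node_cpu_seconds_total{cpu="0",mode="idle"} 12345.67
node_cpu_seconds_total{cpu="0",mode="user"} 234.5
node_load1 0.42
"""

if __name__ == "__main__":
    for name, labels, value in parse_exposition(SAMPLE):
        print(name, labels, value)
```

To check a real Node's output directly, you can open http://<node-ip>:9100/metrics. This works because the DaemonSet uses hostNetwork with hostPort 9100.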

Finally, deploy Grafana to present professional monitoring dashboards. Its YAML configuration file is as follows:

```yaml
---
kind: Deployment
apiVersion: apps/v1            # extensions/v1beta1 Deployments were removed in Kubernetes 1.16
metadata:
  name: grafana
  namespace: kube-system
  labels:
    k8s-app: grafana
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: grafana
  template:
    metadata:
      labels:
        k8s-app: grafana
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
    spec:
      priorityClassName: system-cluster-critical
      tolerations:
      - key: node-role.kubernetes.io/master
        effect: NoSchedule
      - key: "CriticalAddonsOnly"
        operator: "Exists"
      containers:
      - name: grafana
        image: grafana/grafana:6.0.1
        imagePullPolicy: IfNotPresent
        resources:
          limits:
            cpu: 1
            memory: 1Gi
          requests:
            cpu: 100m
            memory: 100Mi
        env:
        # Anonymous access with the Admin role: convenient for a demo, unsafe in production.
        - name: GF_AUTH_BASIC_ENABLED
          value: "false"
        - name: GF_AUTH_ANONYMOUS_ENABLED
          value: "true"
        - name: GF_AUTH_ANONYMOUS_ORG_ROLE
          value: Admin
        # Grafana is served through the API Server proxy path below.
        - name: GF_SERVER_ROOT_URL
          value: /api/v1/namespaces/kube-system/services/grafana/proxy/
        ports:
        - name: ui
          containerPort: 3000
---
apiVersion: v1
kind: Service
metadata:
  name: grafana
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "Grafana"
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: ui
  selector:
    k8s-app: grafana
```

After deployment, open the Grafana page through the Kubernetes Master's URL, for example http://ip:8080/api/v1/namespaces/kube-system/services/grafana/proxy. Here ip is the Master's address and 8080 is the API Server's insecure HTTP port in this setup.

On Grafana's settings page, add a data source of type Prometheus, enter the URL of the Prometheus service (for example http://prometheus:9090) and save it.
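The same data source can also be created through Grafana's HTTP API with `POST /api/datasources`. This is handy when scripting the setup instead of clicking through the UI. The sketch below only builds the request. The Grafana base URL `http://grafana.kube-system` (in-cluster DNS for the Service above) is an assumption. With the anonymous Admin role configured in the Deployment above, the request should not need credentials, but that depends on your Grafana settings. `"access": "proxy"` means the Grafana server, not the browser, queries Prometheus.

```python
import json
import urllib.request


def build_datasource_request(grafana_base: str,
                             prometheus_url: str = "http://prometheus:9090") -> urllib.request.Request:
    """Build (but do not send) a Grafana API call that registers Prometheus as the default data source."""
    payload = {
        "name": "Prometheus",
        "type": "prometheus",
        "url": prometheus_url,
        "access": "proxy",      # the Grafana backend performs the queries
        "isDefault": True,
    }
    return urllib.request.Request(
        f"{grafana_base.rstrip('/')}/api/datasources",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_datasource_request("http://grafana.kube-system")  # assumed in-cluster address
    print(req.get_method(), req.full_url)
    # urllib.request.urlopen(req)  # uncomment to actually create the data source
```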

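In Grafana's Dashboard panel, import a predefined dashboard template to display various monitoring charts. The Grafana website (https://grafana.com/dashboards) offers many dashboard templates for monitoring Kubernetes clusters, which can be downloaded, imported and used. The figure below shows a dashboard that monitors cluster CPU, memory, filesystem and network throughput.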

Summary:

With that, the Prometheus + Grafana based Kubernetes cluster monitoring system is up and running.

Thanks for reading.
