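The New API: android.hardware.camera2

Starting with API level 21 (Android 5.0), Android adds a new android.hardware.camera2 package that replaces the original android.hardware.Camera class (Camera.java).

The Android platform supports taking photos and recording video, either through the android.hardware.camera2 APIs or through a camera Intent.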

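Compared with the android.hardware.Camera API, the differences are:

- Native support for RAW photo output and a burst capture mode.

- Capture speed is now limited by the hardware rather than the software. On a Nexus 5, for example, burst shooting at full resolution on Android L can reach 30 fps.

- Fully automatic control of shutter, sensitivity (ISO), focus, metering, hardware video stabilization, and other parameters is integrated into the new API.

- Manual controls added in the new API: sensitivity (ISO), manual focus / AF on-off, AE/AF/AWB modes, AE/AWB lock, hardware video stabilization, and continuous frames.

- Zooming with a single finger.

- QR code recognition.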

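Next, the classes in Camera2:

- CameraManager: the camera manager, a new system service dedicated to detecting and opening the system cameras. Calling CameraManager.getCameraCharacteristics(String) also returns the characteristics of the specified camera.

- CameraCharacteristics: the camera characteristics. This object is obtained from CameraManager and describes the features supported by a particular camera.

- CameraDevice: represents a system camera. It plays a role similar to the earlier Camera class.

- CameraCaptureSession: the class that represents a session with the camera. Before the program can preview or take a photo, it must first create a session (through CameraDevice.createCaptureSession()). Both preview and photo capture are then controlled through methods on this object: setRepeatingRequest() for preview and capture() for taking a photo.

  To monitor the creation of a CameraCaptureSession and the progress of its captures, the Camera2 API provides the nested classes CameraCaptureSession.StateCallback and CameraCaptureSession.CaptureCallback.

- CaptureRequest and CaptureRequest.Builder: whenever the program calls setRepeatingRequest() to preview or capture() to take a photo, it must pass in a CaptureRequest. A CaptureRequest represents a single capture request and describes the settings for capturing the image, such as the focus mode and exposure mode. Every control the program wants to apply to the photo is set through the CaptureRequest, so it can be thought of as a request parameter object. CaptureRequest.Builder is responsible for building CaptureRequest objects.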

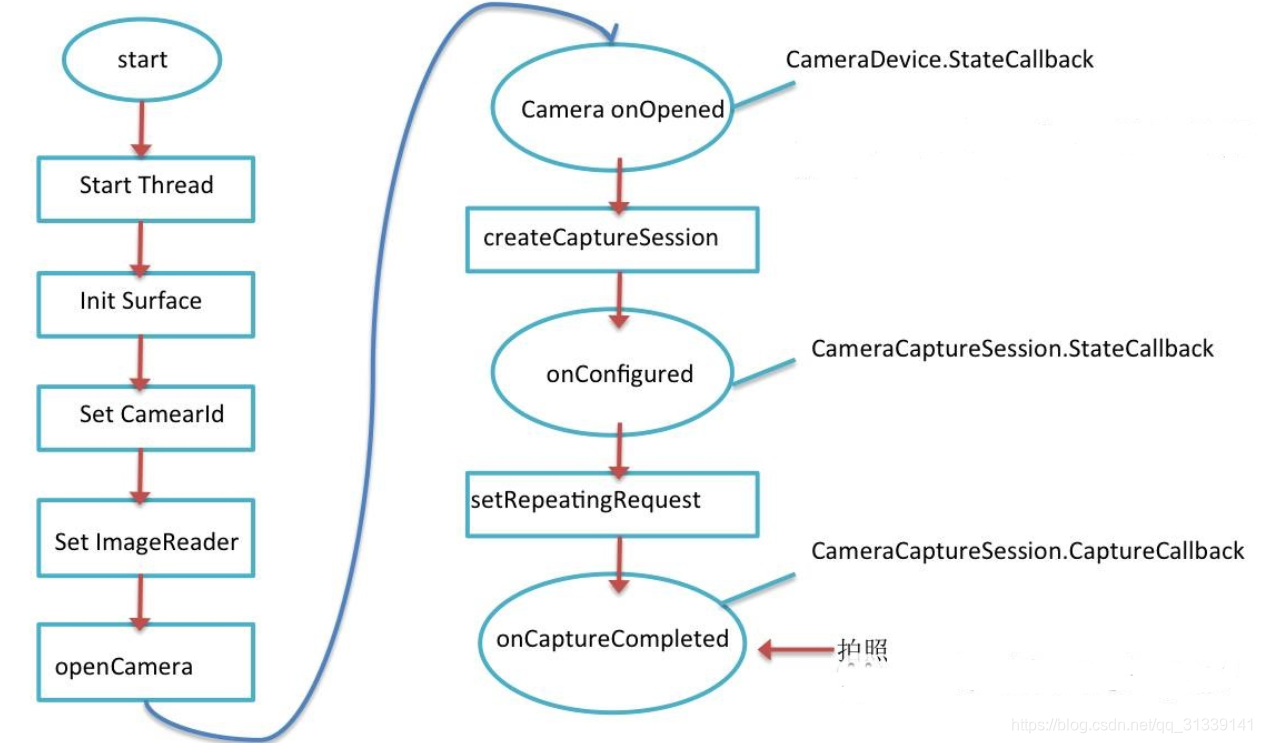

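Camera2 flow diagram:

Google adopts the concept of a pipeline to connect the camera device with the Android device. The Android device sends CaptureRequest objects to the camera device through the pipeline, and the camera device returns CameraMetadata through it. All of this takes place within a session called CameraCaptureSession.

Camera2 class diagram:

CameraManager manages all camera devices (CameraDevice), and each CameraDevice is responsible for creating its own CameraCaptureSession and CaptureRequest objects. CameraCharacteristics describes the properties of a CameraDevice. It is read-only, so use it to check which features the device supports and then apply the actual settings through a CaptureRequest (naturally, the device has to support a feature before you can use it). The class diagram contains three important callbacks. Of these, CameraCaptureSession.CaptureCallback receives the results of preview and photo capture requests and deserves the most attention.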

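Camera2 capture flow diagram:

- Calling openCamera() triggers the CameraDevice.StateCallback callback; override its onOpened() method.

- In onOpened(), call createCaptureSession(), which in turn triggers the CameraCaptureSession.StateCallback callback.

- In CameraCaptureSession.StateCallback, override onConfigured() and call setRepeatingRequest() there (this starts the preview).

- setRepeatingRequest() in turn triggers the CameraCaptureSession.CaptureCallback callback.

- Override onCaptureCompleted() in CameraCaptureSession.CaptureCallback. Its result parameter is the capture metadata (a TotalCaptureResult), not the image itself.

  Incidentally, onCaptureProgressed() is called while a capture is in progress, that is, before onCaptureCompleted(). It only delivers partial result metadata, so pixel-level processing such as beautification has to be applied to the image buffers themselves (for example, those delivered through an ImageReader) rather than in this callback.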
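The order of the callbacks in the list above can be hard to picture, so here is a minimal sketch in plain Java that only models the nesting. Every type in it (FakeManager, FakeDevice, FakeSession and the three callback interfaces) is a hypothetical stand-in, not the Android API, and the callbacks fire synchronously here, whereas the real Camera2 callbacks arrive asynchronously.

```java
import java.util.ArrayList;
import java.util.List;

/** Toy model (NOT the Android API) of the Camera2 callback chain. */
public class CallbackChainSketch {
    // Hypothetical stand-ins for the three Camera2 callback types.
    interface DeviceCallback { void onOpened(FakeDevice device); }
    interface SessionCallback { void onConfigured(FakeSession session); }
    interface CaptureCallback { void onCaptureCompleted(String metadata); }

    static final List<String> EVENTS = new ArrayList<>();

    static class FakeManager {
        void openCamera(String id, DeviceCallback cb) {
            EVENTS.add("openCamera");
            cb.onOpened(new FakeDevice());      // real API: delivered later
        }
    }

    static class FakeDevice {
        void createCaptureSession(SessionCallback cb) {
            EVENTS.add("createCaptureSession");
            cb.onConfigured(new FakeSession());
        }
    }

    static class FakeSession {
        void setRepeatingRequest(String request, CaptureCallback cb) {
            EVENTS.add("setRepeatingRequest");
            cb.onCaptureCompleted("metadata for " + request);
        }
    }

    /** Runs the chain once and returns the recorded event order. */
    public static List<String> run() {
        EVENTS.clear();
        new FakeManager().openCamera("0", device -> {
            EVENTS.add("onOpened");
            device.createCaptureSession(session -> {
                EVENTS.add("onConfigured");
                session.setRepeatingRequest("preview",
                        metadata -> EVENTS.add("onCaptureCompleted"));
            });
        });
        return EVENTS;
    }

    public static void main(String[] args) {
        System.out.println(run());
    }
}
```

Each step is started from inside the previous step's callback, which is the same shape the real activity code follows.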

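As you can see, the logic of using Camera2 is fairly simple: it comes down to three callbacks. One point first: setRepeatingRequest() and capture() both send the camera device a request for image data, but capture() requests a single frame while setRepeatingRequest() keeps requesting frames continuously. So capture() behaves like taking a photo and the image stops there, whereas setRepeatingRequest() keeps sending and receiving, which makes it the method to call for continuous shooting. The image data itself arrives on the request's target Surface (for example an ImageReader, where it can be saved), while onCaptureCompleted() reports the metadata for each frame. (Note that the preview also uses setRepeatingRequest(); you simply do not process the data.)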
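To make the difference between a one-shot and a repeating request concrete, the following plain-Java sketch simulates a request queue. It is a toy model under stated assumptions, not the Android API: requests are plain strings, nextFrame() is a hypothetical stand-in for the camera producing one frame, and a pending one-shot request is served before the repeating one, which matches the documented priority of capture() over the repeating request.

```java
import java.util.ArrayList;
import java.util.List;

/** Toy model (NOT the Android API) of one-shot vs. repeating capture requests. */
public class CaptureQueueSketch {
    private String repeating;                               // active repeating request, or null
    private final List<String> oneShots = new ArrayList<>(); // pending capture() requests
    private final List<String> processed = new ArrayList<>();

    public void setRepeatingRequest(String request) { repeating = request; }

    public void stopRepeating() { repeating = null; }

    public void capture(String request) { oneShots.add(request); }

    /** Simulates one frame slot: one-shot requests are served before the repeating one. */
    public void nextFrame() {
        if (!oneShots.isEmpty()) {
            processed.add(oneShots.remove(0));
        } else if (repeating != null) {
            processed.add(repeating);
        }
    }

    public List<String> processed() { return processed; }

    public static void main(String[] args) {
        CaptureQueueSketch session = new CaptureQueueSketch();
        session.setRepeatingRequest("preview");
        session.nextFrame();
        session.nextFrame();
        session.capture("still");   // one photo in the middle of the preview
        session.nextFrame();
        session.nextFrame();
        System.out.println(session.processed()); // prints [preview, preview, still, preview]
    }
}
```

The preview resumes by itself after the still frame, which is why the activity in this article does not need to restart the preview after taking a picture.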

<?xml version="1.0" encoding="utf-8"?>

<LinearLayout xmlns:android="http://schemas.android.com/apk/res/android"

android:layout_width="match_parent"

android:layout_height="match_parent"

xmlns:tools="http://schemas.android.com/tools"

android:orientation="vertical">

<!-- Use a TextureView for the preview -->

<TextureView

android:id="@+id/texture_view"

android:layout_width="match_parent"

android:layout_height="0dp"

android:layout_weight="1" />

<ImageView

android:id="@+id/iv_capture_picture"

android:layout_width="wrap_content"

android:layout_height="wrap_content"

android:scaleType="centerCrop"

tools:ignore="ContentDescription" />

<Button

android:id="@+id/btn_take_picture"

android:layout_width="match_parent"

android:layout_height="wrap_content"

android:text="Take picture" />

</LinearLayout>

package com.example.media.camera;

import android.Manifest;

import android.annotation.TargetApi;

import android.content.pm.PackageManager;

import android.graphics.Bitmap;

import android.graphics.BitmapFactory;

import android.graphics.ImageFormat;

import android.graphics.SurfaceTexture;

import android.hardware.camera2.CameraAccessException;

import android.hardware.camera2.CameraCaptureSession;

import android.hardware.camera2.CameraCharacteristics;

import android.hardware.camera2.CameraDevice;

import android.hardware.camera2.CameraManager;

import android.hardware.camera2.CameraMetadata;

import android.hardware.camera2.CaptureFailure;

import android.hardware.camera2.CaptureRequest;

import android.hardware.camera2.CaptureResult;

import android.hardware.camera2.TotalCaptureResult;

import android.hardware.camera2.params.StreamConfigurationMap;

import android.media.Image;

import android.media.ImageReader;

import android.os.Build;

import android.os.Bundle;

import android.os.Environment;

import android.os.Handler;

import android.os.HandlerThread;

import android.support.annotation.NonNull;

import android.support.annotation.Nullable;

import android.support.v4.app.ActivityCompat;

import android.support.v7.app.AppCompatActivity;

import android.util.Log;

import android.util.Size;

import android.util.SparseIntArray;

import android.util.TypedValue;

import android.view.Surface;

import android.view.TextureView;

import android.view.View;

import android.widget.ImageView;

import com.example.media.R;

import java.io.BufferedOutputStream;

import java.io.File;

import java.io.FileNotFoundException;

import java.io.FileOutputStream;

import java.io.IOException;

import java.nio.ByteBuffer;

import java.text.SimpleDateFormat;

import java.util.ArrayList;

import java.util.Arrays;

import java.util.Collections;

import java.util.Comparator;

import java.util.Date;

import java.util.List;

import java.util.Locale;

public class Camera2Activity extends AppCompatActivity implements TextureView.SurfaceTextureListener {

private static final String TAG = Camera2Activity.class.getSimpleName();

private ImageView mIvCapturePicture;

private static final SparseIntArray ORIENTATIONS = new SparseIntArray();

static {

ORIENTATIONS.append(Surface.ROTATION_0, 90);

ORIENTATIONS.append(Surface.ROTATION_90, 0);

ORIENTATIONS.append(Surface.ROTATION_180, 270);

ORIENTATIONS.append(Surface.ROTATION_270, 180);

}

private TextureView mTextureView;

// private Handler mHandler;

private int mWidth;

private int mHeight;

private ImageReader mImageReader;

private Size mPreviewSize;

private CameraDevice mCameraDevice;

private CaptureRequest.Builder mCaptureBuilder;

private CameraCaptureSession mCaptureSession;

@Override

protected void onCreate(@Nullable Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

setContentView(R.layout.activity_camera2);

// Camera preview: openCamera() ->

// CameraDevice.StateCallback onOpened() ->

// startPreview() ->

// CameraCaptureSession.StateCallback onConfigured() ->

// CameraCaptureSession.CaptureCallback onCaptureCompleted()

// Manual capture: takePicture() -> onImageAvailable()

mIvCapturePicture = findViewById(R.id.iv_capture_picture);

mTextureView = findViewById(R.id.texture_view);

// HandlerThread handlerThread = new HandlerThread("camera2");

// handlerThread.start();

// mHandler = new Handler(handlerThread.getLooper());

mTextureView.setSurfaceTextureListener(this);

findViewById(R.id.btn_take_picture).setOnClickListener(new View.OnClickListener() {

@Override

public void onClick(View v) {

takePicture();

}

});

}

@Override

protected void onResume() {

super.onResume();

startCamera();

}

@Override

protected void onPause() {

super.onPause();

if (mCameraDevice != null) {

stopCamera();

}

}

/**

* Takes a picture when the button is tapped

*/

@TargetApi(Build.VERSION_CODES.LOLLIPOP)

private void takePicture() {

try {

if (mCameraDevice == null || mCaptureSession == null) {

Log.v(TAG, "camera device or capture session is null");

return;

}

mCaptureBuilder = mCameraDevice.createCaptureRequest(CameraDevice.TEMPLATE_STILL_CAPTURE);

// Set the autofocus mode

mCaptureBuilder.set(CaptureRequest.CONTROL_AF_MODE,

CaptureRequest.CONTROL_AF_MODE_CONTINUOUS_PICTURE);

mCaptureBuilder.addTarget(mImageReader.getSurface());

// Set the JPEG orientation from the current display rotation

int rotation = getWindowManager().getDefaultDisplay().getRotation();

mCaptureBuilder.set(CaptureRequest.JPEG_ORIENTATION, ORIENTATIONS.get(rotation));

// // Stop the repeating preview request

// mCaptureSession.stopRepeating();

// Take the picture

// A null handler means callbacks are delivered on the current thread's looper

mCaptureSession.capture(mCaptureBuilder.build(), null, null);

} catch (CameraAccessException e) {

e.printStackTrace();

}

}

@Override

public void onSurfaceTextureAvailable(SurfaceTexture surface, int width, int height) {

mWidth = width;

mHeight = height;

openCamera();

}

@Override

public void onSurfaceTextureSizeChanged(SurfaceTexture surface, int width, int height) {

}

@Override

public boolean onSurfaceTextureDestroyed(SurfaceTexture surface) {

stopCamera();

return true;

}

@Override

public void onSurfaceTextureUpdated(SurfaceTexture surface) {

}

private void startCamera() {

if (mTextureView.isAvailable() && mCameraDevice == null) {

openCamera();

} else {

mTextureView.setSurfaceTextureListener(this);

}

}

@TargetApi(Build.VERSION_CODES.LOLLIPOP)

private void stopCamera() {

if (mCameraDevice != null) {

mCameraDevice.close();

mCameraDevice = null;

}

}

@TargetApi(Build.VERSION_CODES.LOLLIPOP)

private void openCamera() {

try {

CameraManager cameraManager = (CameraManager) getSystemService(CAMERA_SERVICE);

if (cameraManager == null) {

Log.v(TAG, "camera service is null");

return;

}

String[] cameraIds = cameraManager.getCameraIdList();

if (cameraIds.length == 0) {

Log.v(TAG, "no useful camera");

return;

}

// Configure camera parameters

setCameraCharacteristics(cameraManager, cameraIds[0]);

// Check the camera permission

if (ActivityCompat.checkSelfPermission(this, Manifest.permission.CAMERA)

!= PackageManager.PERMISSION_GRANTED) {

Log.v(TAG, "camera permission not granted");

return;

}

cameraManager.openCamera(cameraIds[0], mCameraDeviceStateCallback, null);

} catch (CameraAccessException e) {

e.printStackTrace();

}

}

/**

* Configures camera parameters

*/

@TargetApi(Build.VERSION_CODES.LOLLIPOP)

private void setCameraCharacteristics(CameraManager cameraManager, String cameraId) throws CameraAccessException {

CameraCharacteristics c = cameraManager.getCameraCharacteristics(cameraId);

// Get the stream configurations supported by the camera

StreamConfigurationMap map = c.get(CameraCharacteristics.SCALER_STREAM_CONFIGURATION_MAP);

if (map == null) {

Log.v(TAG, "map is null");

return;

}

// Get the largest JPEG output size supported by the camera

Size supportLargestSize = Collections.max(

Arrays.asList(map.getOutputSizes(ImageFormat.JPEG)), new CompareSizesByArea());

// Create an ImageReader to receive image data from the camera

mImageReader = ImageReader.newInstance(supportLargestSize.getWidth(),

supportLargestSize.getHeight(), ImageFormat.JPEG, 2);

mImageReader.setOnImageAvailableListener(mImageAvailableListener, null);

mPreviewSize = chooseOptimalSize(map.getOutputSizes(SurfaceTexture.class), mWidth, mHeight, supportLargestSize);

}

/**

* Handles the image data delivered after a capture

*/

private ImageReader.OnImageAvailableListener mImageAvailableListener = new ImageReader.OnImageAvailableListener() {

// Called when the photo data is available

@TargetApi(Build.VERSION_CODES.KITKAT)

@Override

public void onImageAvailable(ImageReader reader) {

// Acquire the captured image first so that it is always released

Image image = reader.acquireNextImage();

if (image == null) {

return;

}

if (!Environment.getExternalStorageState().equals(Environment.MEDIA_MOUNTED)) {

Log.v(TAG, "external storage is not mounted");

image.close();

return;

}

// Copy the JPEG bytes out of the image

ByteBuffer byteBuffer = image.getPlanes()[0].getBuffer();

byte[] buffer = new byte[byteBuffer.remaining()];

byteBuffer.get(buffer);

File imageDir = Environment.getExternalStoragePublicDirectory(Environment.DIRECTORY_PICTURES);

if (!imageDir.exists()) {

Log.v(TAG, "picture directory does not exist");

image.close();

return;

}

String imagePath = imageDir.getAbsolutePath() + File.separator + "image" +

new SimpleDateFormat("yyyyMMddHHmmss", Locale.CHINA).format(new Date()) + ".jpg";

BufferedOutputStream bos = null;

try {

bos = new BufferedOutputStream(new FileOutputStream(imagePath));

bos.write(buffer);

bos.flush();

Bitmap bitmap = createBitmap(imagePath);

if (bitmap != null) {

mIvCapturePicture.setImageBitmap(bitmap);

}

} catch (FileNotFoundException e) {

e.printStackTrace();

} catch (IOException e) {

e.printStackTrace();

} finally {

if (bos != null) {

try {

bos.close();

} catch (IOException e) {

e.printStackTrace();

}

}

image.close();

}

}

};

private Bitmap createBitmap(String imagePath) {

BitmapFactory.Options options = new BitmapFactory.Options();

options.inJustDecodeBounds = true;

BitmapFactory.decodeFile(imagePath, options);

options.inSampleSize = getThumbnailSampleSize(options);

options.inJustDecodeBounds = false;

return BitmapFactory.decodeFile(imagePath, options);

}

private int getThumbnailSampleSize(BitmapFactory.Options options) {

int inSampleSize = 1;

int imageWidth = options.outWidth;

int imageHeight = options.outHeight;

int reqWidth = (int) TypedValue.applyDimension(TypedValue.COMPLEX_UNIT_PX,

100, getResources().getDisplayMetrics());

int reqHeight = (int) TypedValue.applyDimension(TypedValue.COMPLEX_UNIT_PX,

100, getResources().getDisplayMetrics());

if (imageWidth > reqWidth || imageHeight > reqHeight) {

final int halfWidth = imageWidth / 2;

final int halfHeight = imageHeight / 2;

while ((halfWidth / inSampleSize) >= reqWidth && (halfHeight / inSampleSize) >= reqHeight) {

inSampleSize *= 2;

}

}

return inSampleSize;

}

/**

* Chooses a suitable preview size

*/

@TargetApi(Build.VERSION_CODES.LOLLIPOP)

private Size chooseOptimalSize(Size[] options, int width, int height, Size aspectRatio) {

List<Size> sizes = new ArrayList<>();

int w = aspectRatio.getWidth();

int h = aspectRatio.getHeight();

for (Size option : options) {

if (option.getHeight() == option.getWidth() * h / w &&

option.getWidth() >= width && option.getHeight() >= height) {

sizes.add(option);

}

}

// If several preview sizes qualify, pick the one with the smallest area

if (sizes.size() > 0) {

return Collections.min(sizes, new CompareSizesByArea());

}

// No suitable preview size was found

else {

return options[0];

}

}

/**

* Compares two Size objects by area

*/

private class CompareSizesByArea implements Comparator<Size> {

@TargetApi(Build.VERSION_CODES.LOLLIPOP)

@Override

public int compare(Size lhs, Size rhs) {

// Cast to long before multiplying so the area cannot overflow

return Long.signum((long) lhs.getWidth() * lhs.getHeight() - (long) rhs.getWidth() * rhs.getHeight());

}

}

/**

* CameraDevice.StateCallback

*/

private CameraDevice.StateCallback mCameraDeviceStateCallback = new CameraDevice.StateCallback() {

// Called when the camera has been opened

@Override

public void onOpened(@NonNull CameraDevice camera) {

Log.v(TAG, "onOpened");

mCameraDevice = camera;

// Start the preview

startPreview(camera);

}

// Called when the camera is disconnected

@TargetApi(Build.VERSION_CODES.LOLLIPOP)

@Override

public void onDisconnected(@NonNull CameraDevice camera) {

Log.v(TAG, "onDisconnected");

camera.close();

}

// Called when the camera reports an error

@TargetApi(Build.VERSION_CODES.LOLLIPOP)

@Override

public void onError(@NonNull CameraDevice camera, int error) {

Log.v(TAG, "onError");

camera.close();

}

};

/**

* Starts the preview

*/

@TargetApi(Build.VERSION_CODES.LOLLIPOP)

private void startPreview(CameraDevice camera) {

SurfaceTexture surfaceTexture = mTextureView.getSurfaceTexture();

// Set the buffer size of the TextureView's SurfaceTexture

surfaceTexture.setDefaultBufferSize(mPreviewSize.getWidth(), mPreviewSize.getHeight());

// Create the Surface that displays the preview data

Surface surface = new Surface(surfaceTexture);

// Create the CaptureRequest for the preview

try {

mCaptureBuilder = camera.createCaptureRequest(CameraDevice.TEMPLATE_PREVIEW);

// Set the autofocus mode

mCaptureBuilder.set(CaptureRequest.CONTROL_AF_MODE, CaptureRequest.CONTROL_AF_MODE_CONTINUOUS_PICTURE);

// Reset any pending autofocus trigger

mCaptureBuilder.set(CaptureRequest.CONTROL_AF_TRIGGER, CameraMetadata.CONTROL_AF_TRIGGER_CANCEL);

// Set the auto-exposure mode

mCaptureBuilder.set(CaptureRequest.CONTROL_AE_MODE, CaptureRequest.CONTROL_AE_MODE_ON_AUTO_FLASH);

// Use the Surface as the output target for the preview data

mCaptureBuilder.addTarget(surface);

camera.createCaptureSession(Arrays.asList(surface, mImageReader.getSurface()),

mCameraCaptureSessionStateCallback, null);

} catch (CameraAccessException e) {

e.printStackTrace();

}

}

/**

* CameraCaptureSession.StateCallback

*/

private CameraCaptureSession.StateCallback mCameraCaptureSessionStateCallback = new CameraCaptureSession.StateCallback() {

@TargetApi(Build.VERSION_CODES.LOLLIPOP)

@Override

public void onConfigured(@NonNull CameraCaptureSession session) {

Log.v(TAG, "onConfigured");

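// Preview through setRepeatingRequest()

// Take a photo through capture()

// Both setRepeatingRequest() and capture() send the device a request for image data

// The difference: capture() requests one frame, setRepeatingRequest() keeps requesting frames

// With setRepeatingRequest(), not processing the image data gives a plain preview;

// it can also drive burst shooting, with the frames saved as they arrive at the ImageReader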

try {

mCaptureSession = session;

session.setRepeatingRequest(mCaptureBuilder.build(), mCameraCaptureSessionCaptureCallback, null);

} catch (CameraAccessException e) {

e.printStackTrace();

}

}

@Override

public void onConfigureFailed(@NonNull CameraCaptureSession session) {

Log.v(TAG, "onConfiguredFailed");

}

};

/**

* CaptureCallback

*/

private CameraCaptureSession.CaptureCallback mCameraCaptureSessionCaptureCallback = new CameraCaptureSession.CaptureCallback() {

// Called while a capture is in progress, before onCaptureCompleted(); delivers partial result metadata only

@Override

public void onCaptureProgressed(@NonNull CameraCaptureSession session, @NonNull CaptureRequest request, @NonNull CaptureResult partialResult) {

super.onCaptureProgressed(session, request, partialResult);

Log.v(TAG, "onCaptureProgressed");

}

// Called when a capture completes

@TargetApi(Build.VERSION_CODES.LOLLIPOP)

@Override

public void onCaptureCompleted(@NonNull CameraCaptureSession session, @NonNull CaptureRequest request, @NonNull TotalCaptureResult result) {

super.onCaptureCompleted(session, request, result);

Log.v(TAG, "onCaptureCompleted");

}

// Called when a capture fails

@Override

public void onCaptureFailed(@NonNull CameraCaptureSession session, @NonNull CaptureRequest request, @NonNull CaptureFailure failure) {

super.onCaptureFailed(session, request, failure);

Log.v(TAG, "onCaptureFailed");

}

};

}
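Two pieces of pure logic in the activity above are easy to check outside Android: choosing the preview size and computing the JPEG orientation. The sketch below reimplements both in plain Java under stated assumptions. Size is a hypothetical stand-in for android.util.Size, and the rotation index 0 to 3 is assumed to correspond to Surface.ROTATION_0 through Surface.ROTATION_270. The jpegOrientation() helper is not part of the original activity; it adds a sensor-orientation adjustment of the kind used in Google's Camera2 samples, because the fixed ORIENTATIONS table alone is only correct when the sensor is mounted at 90 degrees.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.Comparator;
import java.util.List;

/** Plain-Java sketch of the size-selection and orientation logic (no Android classes). */
public class SizeSelectionSketch {
    /** Hypothetical stand-in for android.util.Size. */
    static final class Size {
        final int width;
        final int height;
        Size(int width, int height) { this.width = width; this.height = height; }
        @Override public String toString() { return width + "x" + height; }
    }

    /** Compares by area; widening to long before multiplying avoids int overflow. */
    static final Comparator<Size> BY_AREA = (a, b) ->
            Long.signum((long) a.width * a.height - (long) b.width * b.height);

    /** Smallest option that matches the aspect ratio and covers the view, else the first option. */
    static Size chooseOptimalSize(List<Size> options, int viewW, int viewH, Size aspect) {
        List<Size> bigEnough = new ArrayList<>();
        for (Size o : options) {
            boolean sameRatio = o.height == o.width * aspect.height / aspect.width;
            if (sameRatio && o.width >= viewW && o.height >= viewH) {
                bigEnough.add(o);
            }
        }
        return bigEnough.isEmpty() ? options.get(0) : Collections.min(bigEnough, BY_AREA);
    }

    // Same values as the activity's ORIENTATIONS table, indexed by rotation 0..3.
    private static final int[] TABLE = {90, 0, 270, 180};

    /** JPEG orientation adjusted for the sensor orientation in degrees (assumed helper). */
    static int jpegOrientation(int rotationIndex, int sensorOrientation) {
        return (TABLE[rotationIndex] + sensorOrientation + 270) % 360;
    }

    public static void main(String[] args) {
        List<Size> options = Arrays.asList(
                new Size(1920, 1080), new Size(1280, 720),
                new Size(640, 480), new Size(1600, 1200));
        System.out.println(chooseOptimalSize(options, 1000, 700, new Size(16, 9))); // prints 1280x720
        System.out.println(jpegOrientation(0, 90));  // prints 90
        System.out.println(jpegOrientation(0, 270)); // prints 270
    }
}
```

With a sensor orientation of 90 the helper returns exactly the values in the ORIENTATIONS table, so the activity's behavior is unchanged on such devices. In a real app the sensor orientation would be read from CameraCharacteristics.SENSOR_ORIENTATION.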
