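I. Environment preparation:

Prerequisite: a fully distributed Hadoop cluster is already deployed

Software packages: zookeeper-3.6.2, hadoop-2.7.3

II. Install ZooKeeper

1. Extract the archive and create a symbolic link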

tar -zxvf apache-zookeeper-3.6.2-bin.tar.gz

ln -s apache-zookeeper-3.6.2-bin zookeeper

2. Create a data directory under the zookeeper directory

mkdir -p data

3. Rename zoo_sample.cfg under zookeeper/conf to zoo.cfg

mv zoo_sample.cfg zoo.cfg

4. Configure zoo.cfg: point dataDir at the data directory created in step 2 and add one server entry per node

server.1=ch01:2888:3888

server.2=ch02:2888:3888

server.3=ch03:2888:3888

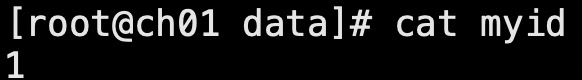
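The three server entries follow one pattern, so they can be generated rather than typed. A minimal sketch, assuming the host names ch01 to ch03 and the ports shown above; the function name `zk_server_lines` is hypothetical:

```shell
# Print the server.N entries for zoo.cfg.
# Assumes hosts ch01..ch03 and the ports used above:
# 2888 (follower-to-leader traffic) and 3888 (leader election).
zk_server_lines() {
  local id
  for id in 1 2 3; do
    echo "server.${id}=ch0${id}:2888:3888"
  done
}

# Usage (run inside zookeeper/conf, only if the entries are not there yet):
#   zk_server_lines >> zoo.cfg
```

The N in server.N must match the number stored in that host's myid file.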

5. Create the myid file in the zookeeper/data directory

touch myid

# Write the matching server number into myid: 1, 2, and 3 on the three machines respectively (the N in server.N)
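Writing the number can also be scripted. A minimal sketch, assuming the ch01 to ch03 host names from zoo.cfg and that the command is run from the zookeeper directory; `myid_for_host` is a hypothetical helper name:

```shell
# Map a host name to its ZooKeeper server id, mirroring the
# server.N=chNN entries in zoo.cfg (assumed mapping: ch01->1, ch02->2, ch03->3).
myid_for_host() {
  case "$1" in
    ch01) echo 1 ;;
    ch02) echo 2 ;;
    ch03) echo 3 ;;
    *) echo "unknown host: $1" >&2; return 1 ;;
  esac
}

# Usage on each machine (run inside the zookeeper directory):
#   myid_for_host "$(hostname)" > data/myid
```

This assumes `hostname` prints the short name (ch01, not a fully qualified name); adjust the patterns if it does not.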

6. Start ZooKeeper (on each of the three machines)

zkServer.sh start

# Check status

zkServer.sh status
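The status output includes a line such as "Mode: leader" or "Mode: follower"; in a healthy three-node ensemble one node should be the leader and the other two followers. A small sketch for pulling out just that role, where `zk_mode` is a hypothetical helper name:

```shell
# Extract the role from `zkServer.sh status` output, which contains a line
# such as "Mode: leader" or "Mode: follower". Reads the output on stdin.
zk_mode() {
  awk -F': ' '/^Mode:/ { print $2 }'
}

# Usage on each node:
#   zkServer.sh status 2>&1 | zk_mode
```

If nothing is printed, the server is probably not running or has not joined a quorum yet.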

III. Configure HDFS HA

1. Configure core-site.xml

<configuration>

<property>

<!-- Group the addresses of the two NameNodes into one nameservice, mycluster -->

<name>fs.defaultFS</name>

<value>hdfs://mycluster</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/opt/app/hadoop/data/tmp</value>

</property>

<property>

<name>ha.zookeeper.quorum</name>

<value>ch01:2181,ch02:2181,ch03:2181</value>

</property>

</configuration>

2. Configure hdfs-site.xml

<configuration>

<property>

<!-- Set the replication factor to 3 -->

<name>dfs.replication</name>

<value>3</value>

</property>

<!-- Name of the fully distributed cluster (the nameservice) -->

<property>

<name>dfs.nameservices</name>

<value>mycluster</value>

</property>

<!-- IDs of the NameNodes in the cluster -->

<property>

<name>dfs.ha.namenodes.mycluster</name>

<value>nn1,nn2</value>

</property>

<!-- RPC address of nn1 -->

<property>

<name>dfs.namenode.rpc-address.mycluster.nn1</name>

<value>ch01:8020</value>

</property>

<!-- RPC address of nn2 -->

<property>

<name>dfs.namenode.rpc-address.mycluster.nn2</name>

<value>ch02:8020</value>

</property>

<!-- HTTP address of nn1 -->

<property>

<name>dfs.namenode.http-address.mycluster.nn1</name>

<value>ch01:50070</value>

</property>

<!-- HTTP address of nn2 -->

<property>

<name>dfs.namenode.http-address.mycluster.nn2</name>

<value>ch02:50070</value>

</property>

<!-- Where the NameNode metadata (edit log) is stored on the JournalNodes -->

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://ch01:8485;ch02:8485;ch03:8485/mycluster</value>

</property>

<!-- Fencing methods: ensure that only one NameNode serves clients at any moment -->

<property>

<name>dfs.ha.fencing.methods</name>

<value>

sshfence

shell(/bin/true)

</value>

</property>

<!-- The sshfence method requires passwordless SSH login -->

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/root/.ssh/id_rsa</value>

</property>

<!-- Storage directory of the JournalNode servers -->

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/opt/app/hadoop/data/jn</value>

</property>

<!-- Disable permission checking -->

<property>

<name>dfs.permissions.enabled</name>

<value>false</value>

</property>

<!-- Proxy provider class that clients use to find the active NameNode of mycluster and fail over automatically -->

<property>

<name>dfs.client.failover.proxy.provider.mycluster</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

</configuration>
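Before starting any daemon, it can help to confirm that Hadoop actually reads the values set above. A minimal sketch using `hdfs getconf -confKey`; the helper name `check_conf` is hypothetical, and the expected values are the ones from this configuration:

```shell
# Compare a loaded configuration value against the expected one.
check_conf() {
  local key="$1" expected="$2" actual
  actual="$(hdfs getconf -confKey "$key" 2>/dev/null)" || actual=""
  if [ "$actual" = "$expected" ]; then
    echo "OK   $key=$actual"
  else
    echo "FAIL $key: expected '$expected', got '$actual'"
    return 1
  fi
}

# Usage:
#   check_conf dfs.nameservices mycluster
#   check_conf dfs.ha.namenodes.mycluster nn1,nn2
```

A FAIL line usually means a typo in the property name or that the edited file is not the one on Hadoop's configuration path.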

3. Distribute the configured files to the other machines (ch02 is shown; repeat for ch03)

scp hdfs-site.xml ch02:/opt/app/hadoop/etc/hadoop

scp core-site.xml ch02:/opt/app/hadoop/etc/hadoop
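The same copy can be done for all remaining nodes in one loop. A minimal sketch, assuming it is run on ch01 from the Hadoop configuration directory, that the path is identical on every node, and that passwordless SSH is set up; `distribute_conf` and the `DRY_RUN` switch are hypothetical names:

```shell
# Copy the HA config files to every other node.
# Assumptions: run from /opt/app/hadoop/etc/hadoop on ch01; targets are ch02 and ch03.
CONF_DIR=/opt/app/hadoop/etc/hadoop

distribute_conf() {
  local host file
  for host in ch02 ch03; do
    for file in core-site.xml hdfs-site.xml; do
      if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "scp $file $host:$CONF_DIR"   # only print the command
      else
        scp "$file" "$host:$CONF_DIR"
      fi
    done
  done
}

# Preview the commands first:
#   DRY_RUN=1 distribute_conf
```

Running with DRY_RUN=1 prints the four scp commands without copying anything.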

4. Start the cluster

4.1 Start the JournalNode on every node

hadoop-daemon.sh start journalnode

4.2 On nn1, format the NameNode and start it

hdfs namenode -format

hadoop-daemon.sh start namenode

4.3 On nn2, synchronize the metadata from nn1

hdfs namenode -bootstrapStandby

4.4 Start the NameNode on [nn2]

hadoop-daemon.sh start namenode

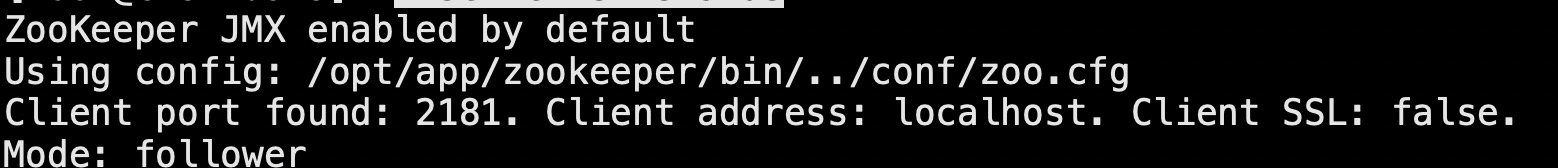

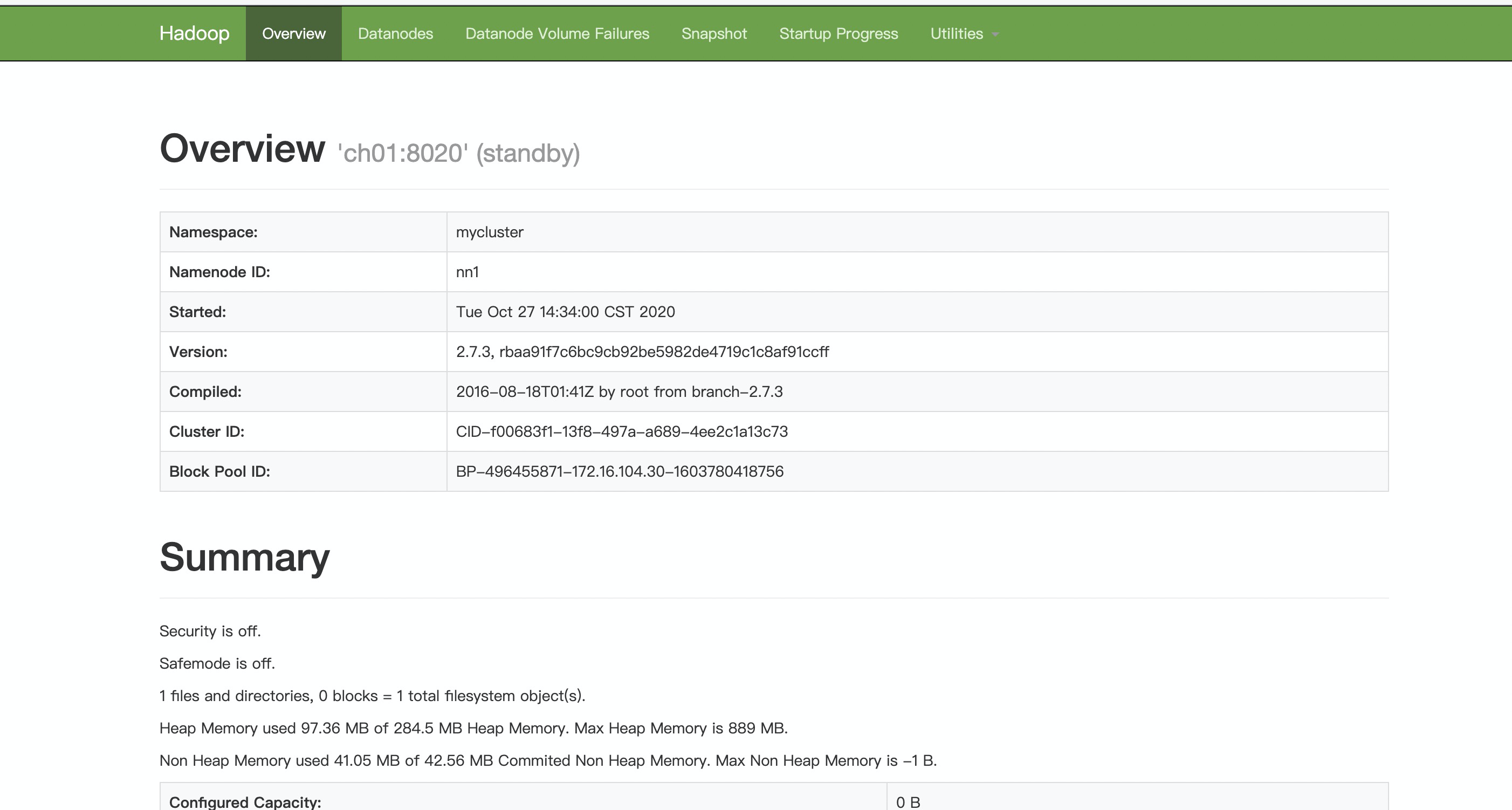

4.5 Check the web UI (port 50070 on ch01 and ch02)

4.6 Start the DataNodes

hadoop-daemons.sh start datanode

4.7 Switch [nn1] to Active

hdfs haadmin -transitionToActive nn1

4.8 Check whether nn1 is Active

hdfs haadmin -getServiceState nn1
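Checking both NameNodes at once makes it easier to spot a state where both are standby. A minimal sketch, assuming the ids nn1 and nn2 from hdfs-site.xml; `report_ha_state` is a hypothetical helper name:

```shell
# Print the HA state of both NameNodes (ids nn1 and nn2 from hdfs-site.xml).
report_ha_state() {
  local nn state
  for nn in nn1 nn2; do
    # getServiceState prints "active" or "standby"
    state="$(hdfs haadmin -getServiceState "$nn" 2>/dev/null)" || state="unreachable"
    echo "$nn: $state"
  done
}

# Usage:
#   report_ha_state
```

After the manual transition in 4.7, nn1 should be reported as active and nn2 as standby.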

5. Configure automatic failover

5.1 hdfs-site.xml

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

5.2 core-site.xml

<property>

<name>ha.zookeeper.quorum</name>

<value>ch01:2181,ch02:2181,ch03:2181</value>

</property>

6. Start

6.1 Start ZooKeeper

bin/zkServer.sh start

6.2 Initialize the HA state in ZooKeeper:

hdfs zkfc -formatZK

6.3 Start the DFSZKFailoverController on each NameNode node. Whichever machine starts it first, that machine's NameNode becomes the Active NameNode

hadoop-daemon.sh start zkfc

6.4 Verify

Kill the Active NameNode process; the standby should then take over as Active

kill -9 <PID of the NameNode process>
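The process id can be looked up with `jps` from the JDK. A minimal sketch of the verification, assuming nn1 is currently active; `namenode_pid` is a hypothetical helper name:

```shell
# Print the PID of the local NameNode process, using jps from the JDK.
# The exact match on "NameNode" skips other class names such as SecondaryNameNode.
namenode_pid() {
  jps | awk '$2 == "NameNode" { print $1 }'
}

# Usage on the host running the Active NameNode (assumed here to be nn1):
#   kill -9 "$(namenode_pid)"
#   hdfs haadmin -getServiceState nn2   # should report "active" once failover completes
```

If nn2 stays in standby, see the fencing note at the end of this post.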

IV. Configure YARN HA

1. yarn-site.xml

<configuration>

<!-- Site specific YARN configuration properties -->

<property>

<!-- Auxiliary service that provides the MapReduce shuffle -->

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<!-- Enable ResourceManager HA -->

<property>

<name>yarn.resourcemanager.ha.enabled</name>

<value>true</value>

</property>

<!-- Declare the cluster id and the two ResourceManagers -->

<property>

<name>yarn.resourcemanager.cluster-id</name>

<value>cluster-yarn1</value>

</property>

<property>

<name>yarn.resourcemanager.ha.rm-ids</name>

<value>rm1,rm2</value>

</property>

<property>

<name>yarn.resourcemanager.hostname.rm1</name>

<value>ch01</value>

</property>

<property>

<name>yarn.resourcemanager.hostname.rm2</name>

<value>ch03</value>

</property>

<!-- Address of the ZooKeeper ensemble -->

<property>

<name>yarn.resourcemanager.zk-address</name>

<value>ch01:2181,ch02:2181,ch03:2181</value>

</property>

<!-- Enable automatic recovery -->

<property>

<name>yarn.resourcemanager.recovery.enabled</name>

<value>true</value>

</property>

<!-- Store the ResourceManager state in the ZooKeeper ensemble -->

<property>

<name>yarn.resourcemanager.store.class</name>

<value>org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore</value>

</property>

</configuration>

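(2) Synchronize the updated configuration to the other nodes

2. Start HDFS (skip this if the HDFS steps above have already been performed)

(1) On each JournalNode node, run the following command to start the journalnode service: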

hadoop-daemon.sh start journalnode

(2) On [nn1], format the NameNode and start it:

hdfs namenode -format

hadoop-daemon.sh start namenode

(3) On [nn2], synchronize the metadata from nn1:

hdfs namenode -bootstrapStandby

(4) Start the NameNode on [nn2]:

hadoop-daemon.sh start namenode

(5) Start all DataNodes

hadoop-daemons.sh start datanode

(6) Switch [nn1] to Active

hdfs haadmin -transitionToActive nn1

3. Start YARN

(1) On ch01, run:

start-yarn.sh

(2) On ch03, run:

yarn-daemon.sh start resourcemanager

(3) Check the service state

yarn rmadmin -getServiceState rm1
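To find out which of the two ResourceManagers currently holds the active role, both ids can be queried in turn. A minimal sketch, assuming the ids rm1 and rm2 from yarn-site.xml; `active_rm` is a hypothetical helper name:

```shell
# Print the id of the ResourceManager that reports "active" (rm1 or rm2,
# the ids from yarn.resourcemanager.ha.rm-ids).
active_rm() {
  local rm state
  for rm in rm1 rm2; do
    state="$(yarn rmadmin -getServiceState "$rm" 2>/dev/null)" || state=""
    if [ "$state" = "active" ]; then
      echo "$rm"
      return 0
    fi
  done
  echo "no active ResourceManager found" >&2
  return 1
}

# Usage:
#   active_rm
```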

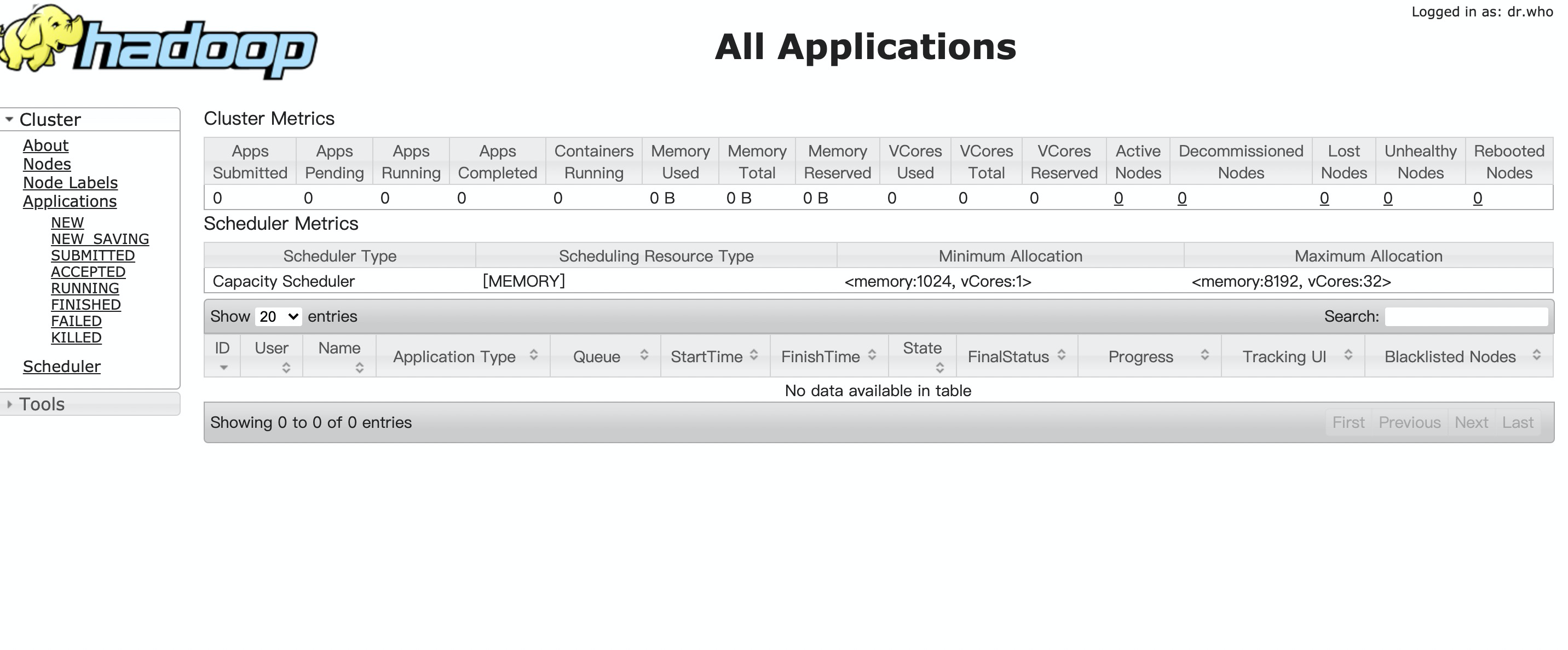

Open the ResourceManager web UI (port 8088 by default) to view the web page

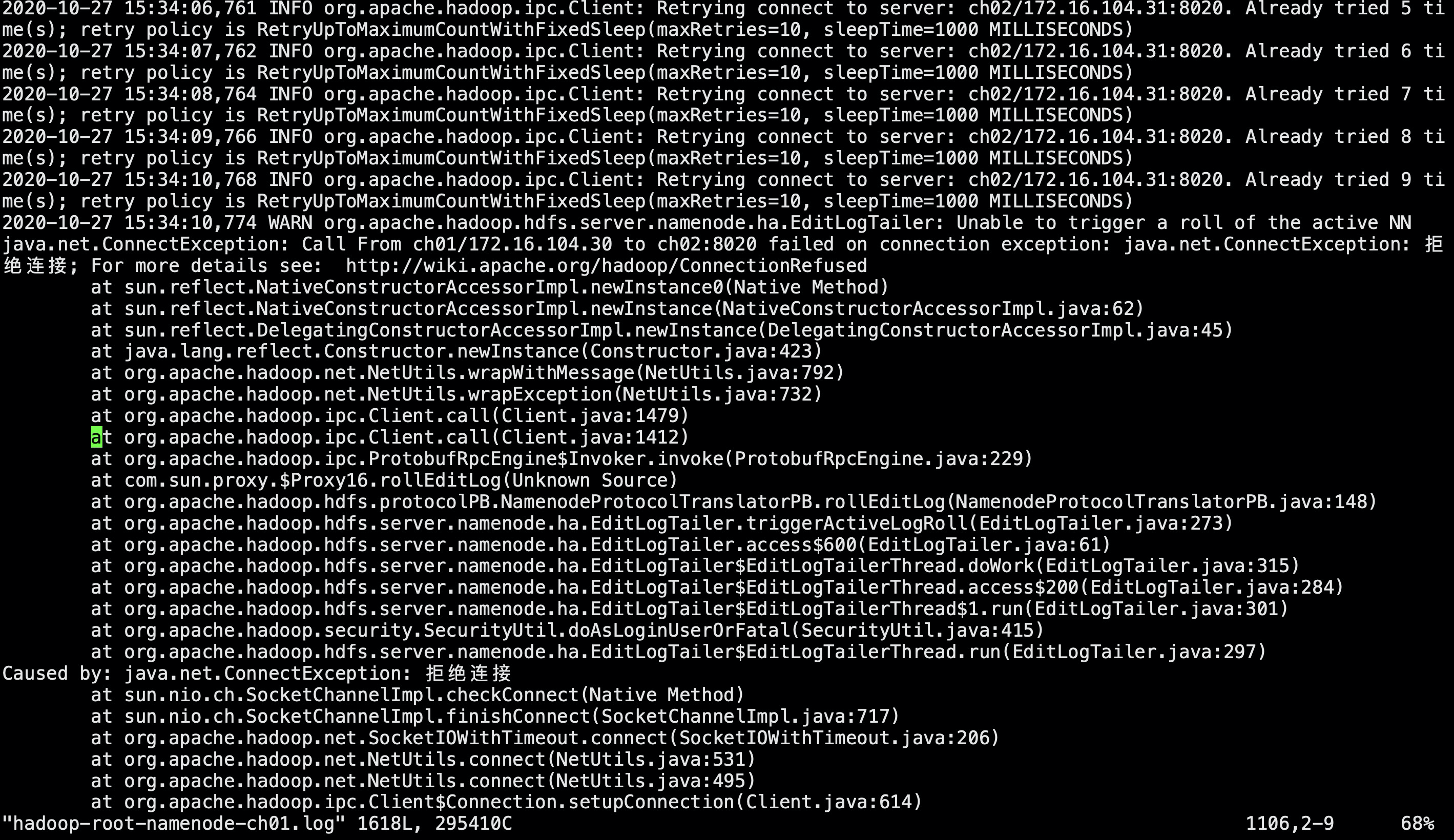

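P.S. Problem encountered: after the Active NameNode went down, the standby did not take over

Solution:

Modify hdfs-site.xml

Add the following property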

<property>

<name>dfs.ha.fencing.methods</name>

<value>

sshfence

shell(/bin/true)

</value>

</property>

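Analysis:

After the NameNode on the active node goes down, the standby node tries to connect to the failed NameNode's host (the sshfence step). If the connection fails, it retries up to the configured number of attempts and then gives up, so the failover never completes.

With shell(/bin/true) added as a second fencing method, a command that always succeeds runs when sshfence fails; fencing is then treated as successful and the standby NameNode can become active.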
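The ordering matters because the fencing methods are tried one after another until one succeeds. The sketch below is only a conceptual model of that rule, not Hadoop's implementation; `try_fencing` is a hypothetical name, `false` stands in for an sshfence attempt that cannot reach the host, and `true` stands in for shell(/bin/true):

```shell
# Conceptual model of how a fencing list is evaluated: methods are tried in
# order and fencing succeeds as soon as one method returns success.
# (Illustration only; this is not Hadoop's actual implementation.)
try_fencing() {
  local method
  for method in "$@"; do
    if $method; then
      echo "fenced by: $method"
      return 0
    fi
  done
  echo "fencing failed"
  return 1
}

# First method fails, second always succeeds, so fencing is reported as done.
try_fencing false true
```

The trade-off is that the always-succeeding fallback does not actually isolate the old active NameNode; it only lets the failover proceed.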
