Sending and receiving data with Kafka

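Add the dependencies to pom.xml. No `<version>` is given, which assumes the project inherits Spring Boot's dependency management. spring-kafka already pulls in kafka-clients transitively.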

~~~xml
<dependency>
    <groupId>org.springframework.kafka</groupId>
    <artifactId>spring-kafka</artifactId>
</dependency>
<dependency>
    <groupId>org.apache.kafka</groupId>
    <artifactId>kafka-clients</artifactId>
</dependency>
~~~

Add the configuration file (application.yml)

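~~~yaml
spring:
  kafka:
    # Kafka broker address(es); several may be listed, comma-separated
    bootstrap-servers: ip:port
    producer: # producer settings
      retries: 1 # a value greater than 0 makes the client resend records whose send failed
      # upper bound, in bytes, of one batch of records sent to a single partition
      batch-size: 16384
      # total memory (bytes, 32 MB) the producer may use to buffer records not yet sent
      buffer-memory: 33554432
      # serializers for the message key and value
      key-serializer: org.apache.kafka.common.serialization.StringSerializer
      value-serializer: org.apache.kafka.common.serialization.StringSerializer
      properties:
        # raise the maximum size of one request sent to Kafka (50 MB);
        # the broker/topic limits (message.max.bytes / max.message.bytes) must allow it as well
        max.request.size: 52428800
    consumer:
      # custom key, not a Spring Boot property; the listener below hard-codes the topic
      kafka:
        kafka-topic-names: testTopic
      # commit offsets manually so an offset is only committed after the record was handled
      enable-auto-commit: false
      # with no committed offset, start from the newest records (earliest = start from the beginning)
      auto-offset-reset: latest
      # consumer group
      group-id: dev
    # security settings shared by producer and consumer
    properties:
      security:
        protocol: SASL_PLAINTEXT
      sasl:
        mechanism: PLAIN
        jaas:
          # PLAIN requires PlainLoginModule; ScramLoginModule pairs with mechanism SCRAM-SHA-256 / SCRAM-SHA-512
          config: 'org.apache.kafka.common.security.plain.PlainLoginModule required username="producer" password="XXXX";'
~~~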
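Spring Boot turns the YAML above into ordinary Kafka client properties: `spring.kafka.producer.batch-size` becomes `batch.size`, and entries under `properties` are passed through unchanged. The following is a minimal sketch of the equivalent producer configuration as a plain `java.util.Properties`, which could be handed to `new KafkaProducer<>(props)` outside Spring. The class `ProducerConfigSketch`, the helper `jaasConfig` and the address `localhost:9092` are hypothetical. The sketch also shows that the JAAS login module has to match `sasl.mechanism`: `PlainLoginModule` for PLAIN, `ScramLoginModule` for SCRAM-SHA-256/512.

~~~java
import java.util.Properties;

// Hypothetical helper: raw Kafka producer properties equivalent to the YAML configuration.
public class ProducerConfigSketch {

    // Picks the JAAS login module that matches the SASL mechanism.
    static String jaasConfig(String mechanism, String user, String password) {
        String module;
        switch (mechanism) {
            case "PLAIN":
                module = "org.apache.kafka.common.security.plain.PlainLoginModule";
                break;
            case "SCRAM-SHA-256":
            case "SCRAM-SHA-512":
                module = "org.apache.kafka.common.security.scram.ScramLoginModule";
                break;
            default:
                throw new IllegalArgumentException("unsupported mechanism: " + mechanism);
        }
        return module + " required username=\"" + user + "\" password=\"" + password + "\";";
    }

    static Properties producerProps(String bootstrapServers, String user, String password) {
        Properties p = new Properties();
        p.put("bootstrap.servers", bootstrapServers);
        p.put("retries", "1");
        p.put("batch.size", String.valueOf(16 * 1024));                // 16384 bytes per partition batch
        p.put("buffer.memory", String.valueOf(32L * 1024 * 1024));     // 33554432 bytes
        p.put("max.request.size", String.valueOf(50 * 1024 * 1024));   // 52428800 bytes
        p.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        p.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        p.put("security.protocol", "SASL_PLAINTEXT");
        p.put("sasl.mechanism", "PLAIN");
        p.put("sasl.jaas.config", jaasConfig("PLAIN", user, password));
        return p;
    }

    public static void main(String[] args) {
        Properties p = producerProps("localhost:9092", "producer", "XXXX");
        p.forEach((k, v) -> System.out.println(k + "=" + v));
    }
}
~~~

The byte sizes are written as multiples of 1024 to make the units explicit. The YAML's 16384, 33554432 and 52428800 are 16 KB, 32 MB and 50 MB.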

Add the Kafka listener container factory bean to Application.java

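~~~java
import org.springframework.context.annotation.Bean;
import org.springframework.kafka.config.ConcurrentKafkaListenerContainerFactory;
import org.springframework.kafka.config.KafkaListenerContainerFactory;
import org.springframework.kafka.core.ConsumerFactory;
import org.springframework.kafka.listener.ContainerProperties;

/**
 * Configures manual offset commits for Kafka listeners.
 *
 * @param consumerFactory consumer factory (auto-configured by Spring Boot from spring.kafka.consumer.*)
 * @return listener container factory
 */
@Bean
public KafkaListenerContainerFactory<?> kafkaListenerContainerFactory(ConsumerFactory<String, String> consumerFactory) {
    // Keys and values are Strings: Spring Boot's default consumer deserializers are StringDeserializer
    ConcurrentKafkaListenerContainerFactory<String, String> factory =
            new ConcurrentKafkaListenerContainerFactory<>();
    factory.setConsumerFactory(consumerFactory);
    // Number of concurrent consumers; ideally equal to the topic's partition count.
    // Consumers beyond the partition count receive no partition and stay idle.
    //factory.setConcurrency(1);
    factory.getContainerProperties().setPollTimeout(1500);
    // Manual ack mode: the offset is committed as soon as the listener calls ack.acknowledge()
    factory.getContainerProperties().setAckMode(ContainerProperties.AckMode.MANUAL_IMMEDIATE);
    return factory;
}
~~~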
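The `setConcurrency` comment above follows from how a consumer group shares partitions. Each concurrent container is one consumer in the `dev` group, and a partition is read by at most one consumer of the group at a time, so consumers beyond the partition count get nothing. Below is a simplified model of one topic's assignment, in the spirit of Kafka's RangeAssignor. The class `ConcurrencySketch` is hypothetical; the real assignment is computed during a consumer-group rebalance and may use a different assignor.

~~~java
import java.util.ArrayList;
import java.util.List;

// Hypothetical model: spreads one topic's partitions over the consumers of a group.
public class ConcurrencySketch {

    // Returns, for each consumer index, the partition numbers it would own.
    static List<List<Integer>> assign(int partitions, int consumers) {
        List<List<Integer>> result = new ArrayList<>();
        int base = partitions / consumers;   // every consumer gets at least this many
        int extra = partitions % consumers;  // the first `extra` consumers get one more
        int next = 0;
        for (int c = 0; c < consumers; c++) {
            int count = base + (c < extra ? 1 : 0);
            List<Integer> owned = new ArrayList<>();
            for (int i = 0; i < count; i++) {
                owned.add(next++);
            }
            result.add(owned);
        }
        return result;
    }

    public static void main(String[] args) {
        // 3 partitions with concurrency 5: consumers 3 and 4 own nothing and stay idle
        System.out.println(assign(3, 5)); // [[0], [1], [2], [], []]
        // 5 partitions with concurrency 2: one consumer reads 3 partitions, the other 2
        System.out.println(assign(5, 2)); // [[0, 1, 2], [3, 4]]
    }
}
~~~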

Producer: send data to Kafka

~~~java
package com.gbm.emp.utils;

import lombok.extern.slf4j.Slf4j;
import org.apache.kafka.clients.producer.RecordMetadata;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.kafka.core.KafkaTemplate;
import org.springframework.kafka.support.SendResult;
import org.springframework.stereotype.Component;
import org.springframework.util.concurrent.ListenableFuture;

/**
 * Pushes data to Kafka.
 *
 * @author wangfenglei
 */
@Slf4j
@Component
public class KafkaDataProducer {

    @Autowired
    private KafkaTemplate<String, String> kafkaTemplate;

    /**
     * Pushes table data to Kafka so it can be synced locally.
     * The call is synchronous: it waits until the send has completed.
     *
     * @param msg   message
     * @param topic topic
     * @return metadata (topic, partition, offset) of the written record
     * @throws Exception if the send fails
     */
    public RecordMetadata sendMsg(String msg, String topic) throws Exception {
        try {
            // null unless spring.kafka.template.default-topic is configured
            log.debug("defaultTopic: {}", kafkaTemplate.getDefaultTopic());
            ListenableFuture<SendResult<String, String>> future = kafkaTemplate.send(topic, msg);
            // get() blocks the calling thread until the send succeeds or fails
            return future.get().getRecordMetadata();
        } catch (Exception e) {
            log.error("sendMsg to kafka failed!", e);
            throw e;
        }
    }
}
~~~
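`sendMsg` blocks the calling thread on `future.get()`. When the caller does not need the `RecordMetadata` right away, the result can be handled in a callback instead. The 2.x `ListenableFuture` used above offers `addCallback`, and in spring-kafka 3.x `KafkaTemplate.send` returns a `CompletableFuture`. The sketch below shows both styles using JDK types only (Java 9+ for `orTimeout`). `fakeSend` is a hypothetical stand-in for `kafkaTemplate.send`, not a Kafka call, and the 10-second bound is an assumption.

~~~java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.TimeUnit;

// Hypothetical illustration of two ways to consume a send future; no Kafka involved.
public class SendStyles {

    // Stand-in for kafkaTemplate.send(topic, msg); a real send completes with a SendResult
    // whose RecordMetadata carries topic, partition and offset.
    static CompletableFuture<String> fakeSend(String topic, String msg) {
        return CompletableFuture.supplyAsync(() -> "sent " + msg + " to " + topic);
    }

    // Blocking style, as in sendMsg, but with an upper bound on the wait (10 s is an assumption).
    // join() throws an unchecked CompletionException if the send fails or times out.
    static String sendBlocking(String topic, String msg) {
        return fakeSend(topic, msg).orTimeout(10, TimeUnit.SECONDS).join();
    }

    // Callback style: returns at once; the callback runs when the send completes.
    static CompletableFuture<String> sendWithCallback(String topic, String msg) {
        return fakeSend(topic, msg).whenComplete((result, ex) -> {
            if (ex != null) {
                System.err.println("send failed: " + ex);
            } else {
                System.out.println("callback: " + result);
            }
        });
    }

    public static void main(String[] args) {
        System.out.println(sendBlocking("testTopic", "test"));
        sendWithCallback("testTopic", "test").join(); // join only so main does not exit early
    }
}
~~~

The blocking style fits a job like the one below, which wants to log the offset it wrote. The callback style keeps request threads free when many messages are sent.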

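Consumer: receive data from Kafka

~~~java
package com.gbm.emp.utils;

import lombok.extern.slf4j.Slf4j;
import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.springframework.kafka.annotation.KafkaListener;
import org.springframework.kafka.support.Acknowledgment;
import org.springframework.stereotype.Component;

import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

/**
 * Consumes Kafka messages.
 *
 * @author wangfenglei
 */
@Component
@Slf4j
public class KafkaDataConsumer {

    // topic -> handling strategy. BaseConsumerStrategy is the author's own type (not shown);
    // when Spring injects a Map<String, BaseConsumerStrategy>, the keys are bean names,
    // so each strategy bean would have to be named after its topic.
    private final Map<String, Object> strategyMap = new ConcurrentHashMap<>();

    // public KafkaDataConsumer(Map<String, BaseConsumerStrategy> strategyMap) {
    //     this.strategyMap.clear();
    //     strategyMap.forEach((k, v) -> this.strategyMap.put(k, v));
    // }

    /**
     * @param record the received message. {@code topics} lists the subscribed topics;
     *               several are allowed, and they can also come from the configuration file
     * @param ack    handle for committing the offset manually
     */
    @KafkaListener(topics = {"testTopic"}, containerFactory = "kafkaListenerContainerFactory")
    public void receiveMessage(ConsumerRecord<String, String> record, Acknowledgment ack) {
        String message = record.value();
        // received message
        log.info("Receive from kafka topic[{}]: {}", record.topic(), message);
        try {
            // Dispatch to the topic's strategy (disabled until BaseConsumerStrategy exists):
            // BaseConsumerStrategy strategy = strategyMap.get(record.topic());
            // if (strategy != null) {
            //     strategy.consumer(message);
            // }
        } finally {
            // Manual commit: with auto-commit off and MANUAL_IMMEDIATE, the offset is only
            // committed here. Committing in finally also marks records whose handling failed as consumed.
            ack.acknowledge();
        }
    }
}
~~~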
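With `enable-auto-commit: false` and `AckMode.MANUAL_IMMEDIATE`, the group's offset moves only when the listener calls `ack.acknowledge()`. A restarted consumer resumes from the last committed offset, or falls back to `auto-offset-reset` if there is none. Below is a toy in-memory model of that rule. The class `ManualAckSketch` is hypothetical and talks to no broker. It ignores rebalances and the fact that a live consumer keeps its in-memory position between polls, and each `consume` call stands for one consumer (re)start.

~~~java
import java.util.ArrayList;
import java.util.List;

// Hypothetical in-memory model of one partition read by one consumer group; no Kafka involved.
public class ManualAckSketch {

    private final List<String> records = new ArrayList<>(); // index in the list = record offset
    private Long committed = null;                          // group's committed offset, null = none yet

    void produce(String value) {
        records.add(value);
    }

    // Start position of a (re)started consumer: the committed offset if one exists,
    // otherwise whatever auto-offset-reset says.
    long startOffset(String autoOffsetReset) {
        if (committed != null) {
            return committed;
        }
        return "earliest".equals(autoOffsetReset) ? 0 : records.size(); // latest = end of partition
    }

    // Reads every record from the start position; when ack is true it commits after each
    // record, as ack.acknowledge() does under AckMode.MANUAL_IMMEDIATE.
    List<String> consume(String autoOffsetReset, boolean ack) {
        List<String> seen = new ArrayList<>();
        for (long offset = startOffset(autoOffsetReset); offset < records.size(); offset++) {
            seen.add(records.get((int) offset));
            if (ack) {
                committed = offset + 1; // Kafka commits the offset of the NEXT record to read
            }
        }
        return seen;
    }

    public static void main(String[] args) {
        ManualAckSketch noAck = new ManualAckSketch();
        noAck.produce("a");
        noAck.produce("b");
        System.out.println(noAck.consume("earliest", false)); // [a, b]
        System.out.println(noAck.consume("earliest", false)); // [a, b] again: nothing was committed

        ManualAckSketch withAck = new ManualAckSketch();
        withAck.produce("a");
        withAck.produce("b");
        System.out.println(withAck.consume("earliest", true)); // [a, b]
        withAck.produce("c");
        System.out.println(withAck.consume("earliest", true)); // [c]: resumes from committed offset 2
    }
}
~~~

With `latest` and no committed offset, the model starts at the end of the partition, so records written while no offset had been committed are skipped. This is why the listener must actually call `ack.acknowledge()`.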

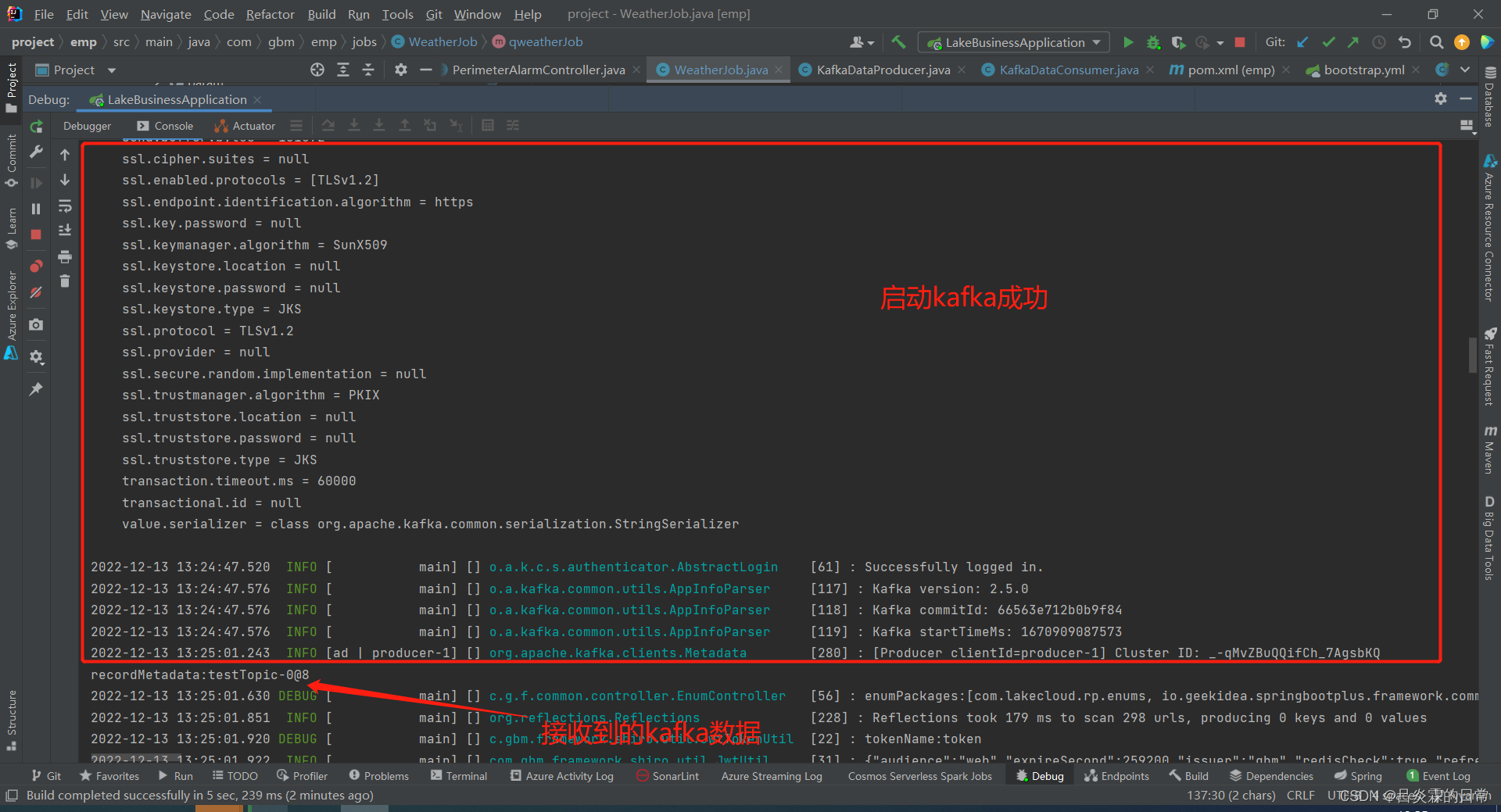

Test: send a message at startup

~~~java
// Runs once at startup (@PostConstruct), then whenever the XXL-JOB scheduler triggers "qweatherJob"
@PostConstruct
@XxlJob("qweatherJob")
public void qweatherJob() {
    log.info("====qweatherJob======>>>");
    try {
        RecordMetadata recordMetadata = kafkaDataProducer.sendMsg("test", "testTopic");
        log.info("recordMetadata: {}", recordMetadata);
    } catch (Exception e) {
        log.error("qweatherJob: sending the test message failed", e);
    }
    // weatherService.getQweather();
}
~~~
