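1 Introduction to OpenStack

OpenStack is an open-source cloud computing management platform. Several major components work together to provide scalable, elastic cloud computing services for private and public clouds. Its main task is to provide IaaS (Infrastructure as a Service) to users, and each service exposes an API for integration.

OpenStack controls large pools of compute, storage, and network resources in a data center. These resources are managed through a graphical interface or through the OpenStack APIs.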

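2 OpenStack architecture and components

1. OpenStack architecture

Three networks:

A. Management network: the network over which the OpenStack management node (or management services) manages the other nodes. It carries the API calls between the different components, as well as operations such as migrating virtual machines.

B. Storage network: the network over which compute nodes access storage services. All traffic that reads data from or writes data to storage devices goes over the storage network.

C. Service network: the network over which the virtual machines managed by OpenStack provide services to the outside.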

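Four node types:

A. Controller node (controller): the OpenStack management node. The database, the message queue (RabbitMQ), and the Keystone, Glance, and Neutron services all run on the controller node. It needs at least 2 network interfaces.

Nova and Neutron must be deployed in a distributed manner: nova-compute must run on the compute nodes, while the other Nova services should run on the controller node. The Neutron plug-ins or agents must be deployed on the network and compute nodes; the rest of Neutron can be deployed on the controller node.

B. Compute node (compute): the node that actually runs the virtual machines; that is, the VMs managed by OpenStack run on the compute nodes. It runs the Nova and Neutron services and needs at least 2 network interfaces.

C. Storage node (storage): can be a Cinder node providing block storage, a Swift node providing object storage, a proxy node of a Swift cluster, or a storage back end for other services. It needs at least 2 network interfaces.

D. Network node (network): runs only the Neutron service and has three network interfaces, used respectively for communication with the controller node, communication with the compute/storage nodes other than the controller, and traffic between virtual machines and external networks. It can usually be co-located with the controller node.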

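2. Basic components:

Compute: the compute service, Nova

Identity: the identity service, Keystone

Network: networking and address management, Neutron

Image Service: the image service, Glance

3. Functions of commonly used components:

Basic components:

Compute (Nova): manages the entire life cycle of virtual machines: creating, running, suspending, scheduling, shutting down, and destroying them. It is the component that actually does the work, receiving commands sent from the Dashboard and carrying out the corresponding actions. It relies on a hypervisor (KVM, Xen, Hyper-V).

Identity (Keystone): provides authentication, authorization, token management, and the service catalog for the other services, and manages domains, projects, users, groups, and roles. Every user of the cloud must first have an account and password created in Keystone, with permissions defined. The OpenStack services must also be registered in Keystone together with their API endpoints, and Keystone registers its own service and API as well.

Image Storage (Glance): stores and retrieves virtual machine disk images; the Compute service fetches the image file from Glance when it boots a virtual machine. This differs from Swift and Cinder, which provide storage that is used from inside the virtual machines.

Network (Neutron): provides network virtualization for the cloud and network connectivity for the other OpenStack services. It manages network resources and offers a set of APIs for defining networks, subnets, and routers and for configuring DHCP, DNS, load balancing, and L3 services; networks can use GRE or VLAN. Its plug-in architecture supports the current mainstream network equipment and newer networking technologies such as Open vSwitch.

Other commonly used components:

Block Storage (Cinder): manages block devices, i.e. manages SAN storage resources for virtual machines. Cinder is not itself a source of block devices; it needs a storage back end to provide the actual block devices (iSCSI, FC, etc.). Cinder acts as the manager: when a virtual machine needs a block device, Cinder tells it where to obtain it. Its plug-in driver architecture makes it easy to create and manage block devices, e.g. to create, delete, attach, and detach volumes.

Dashboard (Horizon): provides a graphical interface. After logging in, users can manage virtual machines, configure permissions, allocate IP addresses, and create tenants and users.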

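3 Deploying OpenStack

I. OpenStack deployment requirements:

- Minimum hardware requirements:

| Node type | Hardware | Network interfaces |
|---|---|---|
| Controller node | 1 CPU, 4 GB RAM, 5 GB storage | 2 |
| Compute node | 1 CPU, 2 GB RAM, 10 GB storage | 2 |

The storage node is co-located with the compute node.

Note: in practice the hardware should be better than this. For real use, roughly 16 GB of RAM for the controller and 8 GB for the compute node is a practical minimum; otherwise the deployment will be very slow even if it runs.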

II. Basic environment configuration:

1. Disable the firewall and SELinux

systemctl disable firewalld

systemctl stop firewalld

setenforce 0

vim /etc/selinux/config

SELINUX=disabled

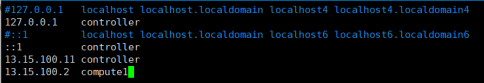
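The SELinux edit can also be made without opening an editor. This is a minimal sketch assuming GNU sed (the CentOS 7 default); the function name disable_selinux_config is hypothetical. It changes only the config file, which takes effect after the next reboot, so setenforce 0 is still what switches the running system to permissive mode.

```shell
#!/usr/bin/env bash
# Hypothetical helper: make SELINUX=disabled persistent in the SELinux config file.
# The path is a parameter (default /etc/selinux/config) so it can be tried on a copy.
disable_selinux_config() {
  local cfg="${1:-/etc/selinux/config}"
  if grep -q '^SELINUX=' "$cfg"; then
    # Rewrite the existing SELINUX=... line; SELINUXTYPE= is not matched.
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
  else
    # No SELINUX= line at all: append one.
    echo 'SELINUX=disabled' >> "$cfg"
  fi
}
```

Usage sketch (as root): `disable_selinux_config`, or `disable_selinux_config ./config.copy` to test it on a copy first.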

2.配置主机名称解析(所有节点都进行以下操作):

控制节点:vim /etc/hosts

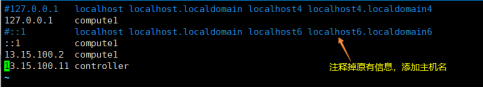

计算节点:vim /etc/hosts

注:如果还有其他节点也要同样配置。例如块存储节点:block1…对象存储节点:object 1…
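Since every node needs the same entries, the edit can be made repeatable. A minimal sketch with a hypothetical helper, add_hosts_entries: 13.15.100.11 is the controller's management address used elsewhere in this guide, while 13.15.100.31/compute1 in the usage line is an assumed example, because the real addresses only appear in the screenshot.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP hostname" pairs to a hosts file,
# skipping any hostname that is already listed, so it is safe to rerun.
add_hosts_entries() {
  local hosts_file="$1"; shift
  while [ "$#" -ge 2 ]; do
    local ip="$1" name="$2"; shift 2
    # awk exits 0 only if the name appears as a whole field after the IP column.
    if ! awk -v n="$name" '{for (i = 2; i <= NF; i++) if ($i == n) found = 1} END {exit !found}' "$hosts_file"; then
      printf '%s\t%s\n' "$ip" "$name" >> "$hosts_file"
    fi
  done
}
```

Usage sketch: `add_hosts_entries /etc/hosts 13.15.100.11 controller 13.15.100.31 compute1` (the compute1 address is hypothetical).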

3. Install a time synchronization service: chrony or NTP

A. Installing chrony

Controller node:

1)yum install chrony

2)vim /etc/chrony.conf

#server 0.centos.pool.ntp.org iburst

#server 1.centos.pool.ntp.org iburst

#server 2.centos.pool.ntp.org iburst

#server 3.centos.pool.ntp.org iburst

server NTP_SERVER iburst

allow 13.15.100.0/24

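Note: replace NTP_SERVER with the host name or IP address of the upstream NTP server. The allow line lets the other nodes in 13.15.100.0/24 synchronize their clocks from the controller.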

3)systemctl enable chronyd

systemctl start chronyd
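The controller-side chrony.conf edit can be sketched as a function, assuming GNU sed. The name configure_chrony_controller and the upstream server ntp.example.com in the usage line are hypothetical. It comments out the default CentOS pool servers and appends the server and allow lines only once, so running it twice does not duplicate them.

```shell
#!/usr/bin/env bash
# Hypothetical helper: apply the controller chrony.conf changes shown above.
# Arguments: config file, upstream NTP server, client subnet to allow.
configure_chrony_controller() {
  local conf="$1" upstream="$2" subnet="$3"
  # Comment out active "server N.centos.pool.ntp.org ..." lines.
  sed -i 's/^server \([0-9]\.centos\.pool\.ntp\.org.*\)/#server \1/' "$conf"
  # Append our own directives only if they are not present yet.
  grep -qx "server ${upstream} iburst" "$conf" || echo "server ${upstream} iburst" >> "$conf"
  grep -qx "allow ${subnet}" "$conf" || echo "allow ${subnet}" >> "$conf"
}
```

Usage sketch: `configure_chrony_controller /etc/chrony.conf ntp.example.com 13.15.100.0/24 && systemctl restart chronyd`.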

Other nodes:

1)yum install chrony

2)vim /etc/chrony.conf

server controller iburst

3)systemctl enable chronyd

systemctl start chronyd

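Verify: run chronyc sources on the controller node and on the other nodes.

B. Installing NTP

1)yum install ntp

2)vim /etc/ntp.conf

Follow the steps on the page below for configuration and verification: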

https://blog.csdn.net/willinge/article/details/79928726

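4. Configure the yum repository for the Newton release

Because the official Newton repository has been removed, do the following before installing any packages: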

vim /etc/yum.repos.d/OpenStack-Newton.repo

[OpenStack-Newton]

name=OpenStack-Newton

baseurl=http://vault.centos.org/7.2.1511/cloud/x86_64/openstack-newton/

gpgcheck=0

enabled=1
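The repository file can also be written in one step instead of with vim. A sketch with a hypothetical function name that reproduces exactly the contents above; note that gpgcheck=0 turns off package signature checking for this repository.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the OpenStack-Newton repo definition.
# Target path defaults to /etc/yum.repos.d/OpenStack-Newton.repo.
write_newton_repo() {
  local repo_file="${1:-/etc/yum.repos.d/OpenStack-Newton.repo}"
  # Quoted heredoc delimiter: nothing inside is expanded by the shell.
  cat > "$repo_file" <<'EOF'
[OpenStack-Newton]
name=OpenStack-Newton
baseurl=http://vault.centos.org/7.2.1511/cloud/x86_64/openstack-newton/
gpgcheck=0
enabled=1
EOF
}
```

Usage sketch: `write_newton_repo && yum repolist`, then check that OpenStack-Newton appears in the list.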

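5. Install the OpenStack packages

yum --disablerepo=* --enablerepo=OpenStack-Newton install centos-release-openstack-newton

yum upgrade (upgrades the installed packages; if the upgrade installs a new kernel, reboot the host to activate it)

Note: because the official repository has been removed, the upgrade step can be skipped.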

yum install python-openstackclient

yum install openstack-selinux

6. Install the database (MariaDB)

(1)yum install mariadb mariadb-server python2-PyMySQL

(2)vim /etc/my.cnf.d/openstack.cnf

[mysqld]

bind-address = 13.15.100.11

default-storage-engine = innodb

innodb_file_per_table

max_connections = 4096

collation-server = utf8_general_ci

character-set-server = utf8

(3) Enable MariaDB at boot, start it, and complete the initial setup

systemctl enable mariadb

systemctl start mariadb

mysql_secure_installation

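7. Install the RabbitMQ message queue (controller node only)

(1) yum install rabbitmq-server

(2) Start the service and enable it at boot

systemctl enable rabbitmq-server

systemctl start rabbitmq-server

(3) Add the openstack user

rabbitmqctl add_user openstack RABBIT_PASS

Note: replace RABBIT_PASS with a password for the RabbitMQ openstack user.

(4) Grant the user configure, write, and read permissions

rabbitmqctl set_permissions openstack ".*" ".*" ".*"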

8. Configure Memcached (controller node only)

(1)yum install memcached python-memcached

(2)vim /etc/sysconfig/memcached

PORT="11211"

USER="memcached"

MAXCONN="1024"

CACHESIZE="64"

OPTIONS="-l 13.15.100.11"

(3)systemctl enable memcached

systemctl start memcached

III. Service configuration:

1. Keystone service:

The following steps are performed on the controller node:

(1) Create the keystone database and grant proper access to it

mysql -uroot -p

CREATE DATABASE keystone;

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' IDENTIFIED BY 'KEYSTONE_DBPASS';

GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' IDENTIFIED BY 'KEYSTONE_DBPASS';
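Every service in this guide (Keystone, Glance, Nova, Neutron, Cinder) repeats the same pattern: one CREATE DATABASE plus two GRANT statements, one for 'localhost' and one for '%' (any host). A sketch of a hypothetical generator for those statements; unlike the listing above it uses CREATE DATABASE IF NOT EXISTS so the output can be rerun safely.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the SQL for one service database.
# Arguments: database name, database user, database password.
service_db_sql() {
  local db="$1" user="$2" pass="$3"
  printf "CREATE DATABASE IF NOT EXISTS %s;\n" "$db"
  # Local connections, then connections from any host ('%'; %% is a literal % in printf).
  printf "GRANT ALL PRIVILEGES ON %s.* TO '%s'@'localhost' IDENTIFIED BY '%s';\n" "$db" "$user" "$pass"
  printf "GRANT ALL PRIVILEGES ON %s.* TO '%s'@'%%' IDENTIFIED BY '%s';\n" "$db" "$user" "$pass"
}
```

Usage sketch: `service_db_sql keystone keystone KEYSTONE_DBPASS | mysql -u root -p`; for Glance the arguments would be `glance glance GLANCE_DBPASS`, and so on.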

(2) Install the components

yum install openstack-keystone httpd mod_wsgi

(3) vim /etc/keystone/keystone.conf

Add under [database]:

connection = mysql+pymysql://keystone:KEYSTONE_DBPASS@controller/keystone

Add under [token]:

provider = fernet

(4) Populate the Identity service database

su -s /bin/sh -c "keystone-manage db_sync" keystone

(5) Initialize the Fernet and credential key repositories

keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

(6) Bootstrap the Identity service

keystone-manage bootstrap --bootstrap-password ADMIN_PASS --bootstrap-admin-url http://controller:35357/v3/ --bootstrap-internal-url http://controller:35357/v3/ --bootstrap-public-url http://controller:5000/v3/ --bootstrap-region-id RegionOne

(7) Configure the Apache HTTP server

vim /etc/httpd/conf/httpd.conf

Add: ServerName controller

(8) Create a symbolic link: ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d

(9)systemctl enable httpd

systemctl start httpd

(10) Configure the administrative account

export OS_USERNAME=admin

export OS_PASSWORD=ADMIN_PASS

export OS_PROJECT_NAME=admin

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_DOMAIN_NAME=Default

export OS_AUTH_URL=http://controller:35357/v3

export OS_IDENTITY_API_VERSION=3

(11) Create a domain, projects, users, and roles

Create the service project and the demo project:

openstack project create --domain default --description "Service Project" service

openstack project create --domain default --description "Demo Project" demo

Create the demo user and the user role:

openstack user create --domain default --password-prompt demo

openstack role create user

Add the user role to the demo project and user:

openstack role add --project demo --user demo user

Request an authentication token as the admin user:

openstack --os-auth-url http://controller:35357/v3 --os-project-domain-name Default --os-user-domain-name Default --os-project-name admin --os-username admin token issue

Request an authentication token as the demo user:

openstack --os-auth-url http://controller:35357/v3 --os-project-domain-name Default --os-user-domain-name Default --os-project-name demo --os-username demo token issue

(12) Create the OpenStack client environment scripts

vim admin-openrc

export OS_PROJECT_DOMAIN_NAME=Default

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_NAME=admin

export OS_USERNAME=admin

export OS_PASSWORD=ADMIN_PASS

export OS_AUTH_URL=http://controller:35357/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

vim demo-openrc

export OS_PROJECT_DOMAIN_NAME=Default

export OS_USER_DOMAIN_NAME=Default

export OS_PROJECT_NAME=demo

export OS_USERNAME=demo

export OS_PASSWORD=DEMO_PASS

export OS_AUTH_URL=http://controller:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

Usage:

. admin-openrc

openstack token issue
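The two openrc files differ only in project, user, password, and auth URL, so they can be generated. A sketch with a hypothetical write_openrc helper: it quotes each value with printf %q so that passwords containing spaces or $ survive sourcing, and restricts the file to its owner because it stores a password in plain text.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write an openrc file like admin-openrc / demo-openrc.
# Arguments: output file, project, user, password, auth URL.
write_openrc() {
  local out="$1" project="$2" user="$3" password="$4" auth_url="$5"
  {
    echo "export OS_PROJECT_DOMAIN_NAME=Default"
    echo "export OS_USER_DOMAIN_NAME=Default"
    # %q escapes shell-special characters so sourcing restores the exact value.
    printf 'export OS_PROJECT_NAME=%q\n' "$project"
    printf 'export OS_USERNAME=%q\n' "$user"
    printf 'export OS_PASSWORD=%q\n' "$password"
    printf 'export OS_AUTH_URL=%q\n' "$auth_url"
    echo "export OS_IDENTITY_API_VERSION=3"
    echo "export OS_IMAGE_API_VERSION=2"
  } > "$out"
  # The file holds a plain-text password: owner read/write only.
  chmod 600 "$out"
}
```

Usage sketch: `write_openrc admin-openrc admin admin ADMIN_PASS http://controller:35357/v3` and `write_openrc demo-openrc demo demo DEMO_PASS http://controller:5000/v3`.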

2. Glance service

The following is configured on the controller node:

(1) Create the database and grant privileges, then exit

mysql -uroot -p

CREATE DATABASE glance;

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' IDENTIFIED BY 'GLANCE_DBPASS';

GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' IDENTIFIED BY 'GLANCE_DBPASS';

(2) Source the admin credentials: . admin-openrc

(3) Create the service credentials

Create the glance user: openstack user create --domain default --password-prompt glance

Add the admin role to the glance user and the service project:

openstack role add --project service --user glance admin

Create the glance service entity:

openstack service create --name glance --description "OpenStack Image" image

(4) Create the Image service API endpoints:

openstack endpoint create --region RegionOne image public http://controller:9292

openstack endpoint create --region RegionOne image internal http://controller:9292

openstack endpoint create --region RegionOne image admin http://controller:9292
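Every service needs the same three endpoints (public, internal, admin). A sketch of a hypothetical loop helper, create_endpoints, with a DRY_RUN switch (an assumption of this sketch) that prints the commands instead of running them. Printing with printf %q also shows how URLs such as the Nova and Cinder %(tenant_id)s URLs must be quoted, because unquoted parentheses are a syntax error in bash.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create the public, internal and admin endpoints of one service.
# Arguments: region, service type, URL. DRY_RUN=1 prints the commands instead.
create_endpoints() {
  local region="$1" service="$2" url="$3" iface
  for iface in public internal admin; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      # %q prints each argument in a form the shell can read back unchanged.
      printf 'openstack endpoint create --region %q %q %q %q\n' "$region" "$service" "$iface" "$url"
    else
      openstack endpoint create --region "$region" "$service" "$iface" "$url"
    fi
  done
}
```

Usage sketch (after `. admin-openrc`): `create_endpoints RegionOne image http://controller:9292`, or `DRY_RUN=1 create_endpoints RegionOne compute 'http://controller:8774/v2.1/%(tenant_id)s'` to preview the commands.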

(5) Install and configure

1)yum install openstack-glance

2)vim /etc/glance/glance-api.conf

[database]

connection = mysql+pymysql://glance:GLANCE_DBPASS@controller/glance

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = glance

password = GLANCE_PASS

[paste_deploy]

flavor = keystone

[glance_store]

stores = file,http

default_store = file

filesystem_store_datadir = /var/lib/glance/images/

3)vim /etc/glance/glance-registry.conf

[database]

connection = mysql+pymysql://glance:GLANCE_DBPASS@controller/glance

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = glance

password = GLANCE_PASS

[paste_deploy]

flavor = keystone

(6) Run the following steps

su -s /bin/sh -c "glance-manage db_sync" glance

systemctl enable openstack-glance-api openstack-glance-registry

systemctl start openstack-glance-api openstack-glance-registry

(7) Verify

. admin-openrc

wget http://download.cirros-cloud.net/0.3.4/cirros-0.3.4-x86_64-disk.img

openstack image create "cirros" --file cirros-0.3.4-x86_64-disk.img --disk-format qcow2 --container-format bare --public

openstack image list

3. Nova service

The following is configured on the controller node:

(1) Create the databases

mysql -uroot -p

CREATE DATABASE nova_api;

CREATE DATABASE nova;

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';

GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' IDENTIFIED BY 'NOVA_DBPASS';

GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY 'NOVA_DBPASS';

(2) Source the admin credentials: . admin-openrc

(3) Create the service credentials

Create the nova user: openstack user create --domain default --password-prompt nova

Add the admin role to the nova user: openstack role add --project service --user nova admin

Create the nova service entity: openstack service create --name nova --description "OpenStack Compute" compute

Create the Compute service API endpoints (the URLs are quoted because the shell would otherwise treat the parentheses as syntax):

openstack endpoint create --region RegionOne compute public 'http://controller:8774/v2.1/%(tenant_id)s'

openstack endpoint create --region RegionOne compute internal 'http://controller:8774/v2.1/%(tenant_id)s'

openstack endpoint create --region RegionOne compute admin 'http://controller:8774/v2.1/%(tenant_id)s'

(4) Install and configure

1)yum install openstack-nova-api openstack-nova-conductor openstack-nova-console openstack-nova-novncproxy openstack-nova-scheduler

2)vim /etc/nova/nova.conf

[DEFAULT]

enabled_apis = osapi_compute,metadata

transport_url = rabbit://openstack:RABBIT_PASS@controller

auth_strategy = keystone

my_ip = 13.15.100.11

use_neutron = True

firewall_driver = nova.virt.firewall.NoopFirewallDriver

[api_database]

connection = mysql+pymysql://nova:NOVA_DBPASS@controller/nova_api

[database]

connection = mysql+pymysql://nova:NOVA_DBPASS@controller/nova

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = NOVA_PASS

[glance]

api_servers = http://controller:9292

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

[vnc]

vncserver_listen = $my_ip

vncserver_proxyclient_address = $my_ip

3) Run the following

su -s /bin/sh -c "nova-manage api_db sync" nova

su -s /bin/sh -c "nova-manage db sync" nova

systemctl enable openstack-nova-api openstack-nova-consoleauth openstack-nova-scheduler openstack-nova-conductor openstack-nova-novncproxy

systemctl start openstack-nova-api openstack-nova-consoleauth openstack-nova-scheduler openstack-nova-conductor openstack-nova-novncproxy

The following is configured on the compute node:

(1)yum install openstack-nova-compute

(2)vim /etc/nova/nova.conf

[DEFAULT]

enabled_apis = osapi_compute,metadata

auth_strategy = keystone

transport_url = rabbit://openstack:RABBIT_PASS@controller

my_ip = MANAGEMENT_INTERFACE_IP_ADDRESS

use_neutron = True

firewall_driver = nova.virt.firewall.NoopFirewallDriver

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = nova

password = NOVA_PASS

[vnc]

enabled = True

vncserver_listen = 0.0.0.0

vncserver_proxyclient_address = $my_ip

novncproxy_base_url = http://controller:6080/vnc_auto.html

[glance]

api_servers = http://controller:9292

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

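(3) Run the following command

egrep -c '(vmx|svm)' /proc/cpuinfo

If it returns 1 or greater, the compute node supports hardware acceleration and needs no further configuration.

If it returns 0, the compute node does not support hardware acceleration, and libvirt must be configured to use QEMU: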

vim /etc/nova/nova.conf

[libvirt]

virt_type = qemu
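The decision above can be scripted. A minimal sketch with a hypothetical function name: kvm is already nova's default libvirt virt_type, so only a qemu result requires the [libvirt] change. The cpuinfo path is a parameter so the logic can be checked against sample files.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "kvm" if the CPU flags include vmx (Intel VT-x)
# or svm (AMD-V), otherwise "qemu" (no hardware acceleration).
choose_virt_type() {
  local cpuinfo="${1:-/proc/cpuinfo}" count
  # Same test as egrep -c above: number of lines mentioning vmx or svm.
  count=$(grep -E -c '(vmx|svm)' "$cpuinfo")
  if [ "$count" -ge 1 ]; then
    echo kvm
  else
    echo qemu
  fi
}
```

Usage sketch on the compute node: `choose_virt_type`; if it prints qemu, add virt_type = qemu under [libvirt] as shown above.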

(4)systemctl enable libvirtd openstack-nova-compute

systemctl start libvirtd openstack-nova-compute

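Possible problem:

If the nova-compute service fails to start, check /var/log/nova/nova-compute.log. The error message AMQP server on controller:5672 is unreachable probably indicates that a firewall on the controller node is blocking access to port 5672.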
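To confirm the firewall diagnosis from the compute node, the AMQP port can be probed directly. A sketch assuming bash's built-in /dev/tcp redirection and the coreutils timeout command; port_reachable is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: return 0 if a TCP connection to host:port opens within
# the given number of seconds (default 3), non-zero otherwise.
port_reachable() {
  local host="$1" port="$2" secs="${3:-3}"
  # bash opens a TCP socket when redirecting to /dev/tcp/HOST/PORT.
  timeout "$secs" bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}
```

Usage sketch: `port_reachable controller 5672 && echo reachable || echo "blocked or RabbitMQ not listening"`. If it is not reachable, check that firewalld on the controller was stopped and disabled as in step II.1.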

(5) Verify (on the controller node)

. admin-openrc

openstack compute service list

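4. Neutron service:

The following is configured on the controller node:

(1) Create the database and grant privileges, then exit

mysql -uroot -p

CREATE DATABASE neutron;

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY 'NEUTRON_DBPASS';

GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY 'NEUTRON_DBPASS';

(2) Create the neutron user

. admin-openrc

openstack user create --domain default --password-prompt neutron

(3) Add the admin role to the neutron user:

openstack role add --project service --user neutron admin

(4) Create the neutron service entity

openstack service create --name neutron --description "OpenStack Networking" network

(5) Create the Networking service API endpoints:

openstack endpoint create --region RegionOne network public http://controller:9696

openstack endpoint create --region RegionOne network internal http://controller:9696

openstack endpoint create --region RegionOne network admin http://controller:9696

Configure the networking option (provider networks):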

1)yum install openstack-neutron openstack-neutron-ml2 openstack-neutron-linuxbridge ebtables

2) Configure the server component

vim /etc/neutron/neutron.conf and modify the following sections:

[DEFAULT]

core_plugin = ml2

service_plugins =

transport_url = rabbit://openstack:RABBIT_PASS@controller

auth_strategy = keystone

notify_nova_on_port_status_changes = True

notify_nova_on_port_data_changes = True

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = neutron

password = NEUTRON_PASS

[nova]

auth_url = http://controller:35357

auth_type = password

project_domain_name = Default

user_domain_name = Default

region_name = RegionOne

project_name = service

username = nova

password = NOVA_PASS

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

3) Configure the ML2 plug-in

vim /etc/neutron/plugins/ml2/ml2_conf.ini

[ml2]

type_drivers = flat,vlan

tenant_network_types =

mechanism_drivers = linuxbridge

extension_drivers = port_security

[ml2_type_flat]

flat_networks = provider

[securitygroup]

enable_ipset = True

4) Configure the Linux bridge agent

vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[linux_bridge]

physical_interface_mappings = provider:PROVIDER_INTERFACE_NAME

[vxlan]

enable_vxlan = False

[securitygroup]

enable_security_group = True

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver
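PROVIDER_INTERFACE_NAME must be replaced with the name of the physical interface attached to the provider network (bond0 on the author's hosts, judging by the instance-launch section). A sketch of a hypothetical pre-check that the interface exists under /sys/class/net before the agent is started; it prints the mapping line to put in linuxbridge_agent.ini.

```shell
#!/usr/bin/env bash
# Hypothetical helper: verify the provider interface exists and print the
# physical_interface_mappings line. The sysfs directory is a parameter for testing.
provider_mapping_line() {
  local iface="$1" sysfs="${2:-/sys/class/net}"
  if [ ! -e "${sysfs}/${iface}" ]; then
    echo "interface ${iface} not found under ${sysfs}" >&2
    return 1
  fi
  echo "physical_interface_mappings = provider:${iface}"
}
```

Usage sketch: `provider_mapping_line bond0`; a non-zero exit means the name does not match any interface on this host.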

5) Configure the DHCP agent

vim /etc/neutron/dhcp_agent.ini

[DEFAULT]

interface_driver = neutron.agent.linux.interface.BridgeInterfaceDriver

dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

enable_isolated_metadata = True

(6) Configure the metadata agent

vim /etc/neutron/metadata_agent.ini

[DEFAULT]

nova_metadata_ip = controller

metadata_proxy_shared_secret = METADATA_SECRET

(7) Configure the Compute service to use the Networking service

vim /etc/nova/nova.conf

[neutron]

url = http://controller:9696

auth_url = http://controller:35357

auth_type = password

project_domain_name = Default

user_domain_name = Default

region_name = RegionOne

project_name = service

username = neutron

password = NEUTRON_PASS

service_metadata_proxy = True

metadata_proxy_shared_secret = METADATA_SECRET

(8) Create a symbolic link

ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

(9) Populate the database

su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

(10) Restart and start the services

systemctl restart openstack-nova-api

systemctl enable neutron-server neutron-linuxbridge-agent neutron-dhcp-agent neutron-metadata-agent

systemctl start neutron-server neutron-linuxbridge-agent neutron-dhcp-agent neutron-metadata-agent

The following is configured on the compute node:

(1)yum install openstack-neutron-linuxbridge ebtables ipset

(2) Configure the common component

vim /etc/neutron/neutron.conf

[DEFAULT]

transport_url = rabbit://openstack:RABBIT_PASS@controller

auth_strategy = keystone

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = neutron

password = NEUTRON_PASS

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

(3) Provider networks: configure the Linux bridge agent

vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[linux_bridge]

physical_interface_mappings = provider:PROVIDER_INTERFACE_NAME

[vxlan]

enable_vxlan = False

[securitygroup]

enable_security_group = True

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

(4) Configure the Compute service to use the Networking service

vim /etc/nova/nova.conf

[neutron]

url = http://controller:9696

auth_url = http://controller:35357

auth_type = password

project_domain_name = Default

user_domain_name = Default

region_name = RegionOne

project_name = service

username = neutron

password = NEUTRON_PASS

(5) Run the following

systemctl restart openstack-nova-compute

systemctl enable neutron-linuxbridge-agent

systemctl start neutron-linuxbridge-agent

(6) Verify the operation on the controller node

. admin-openrc

neutron ext-list

openstack network agent list

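Launching an instance:

(1) On the controller node, run . admin-openrc

(2) openstack network create --share --external --provider-physical-network provider --provider-network-type flat provider

The --share option allows all projects to use the virtual network.

The --external option defines the virtual network as external. To create an internal network, use --internal instead; the default is internal.

The --provider-physical-network provider and --provider-network-type flat options connect the flat virtual network to the flat (native/untagged) physical network through interface bond0 on the host, using the information in ml2_conf.ini (flat_networks = provider) and linuxbridge_agent.ini (physical_interface_mappings = provider:bond0).

(3) Create a subnet on the network:

openstack subnet create --network provider --allocation-pool start=13.15.100.150,end=13.15.100.160 --dns-nameserver 8.8.8.8 --gateway 13.15.11.254 --subnet-range 13.15.100.0/24 provider

Note: the gateway should be an address inside the --subnet-range (13.15.100.0/24); 13.15.11.254 lies outside it, so replace it with the actual gateway of your provider network.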
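A gateway outside the subnet range can be caught before running openstack subnet create. A sketch of hypothetical helpers that compare the network bits of two IPv4 addresses with plain bash arithmetic; it assumes dotted-quad input without leading zeros (a value like 08 would be read as invalid octal).

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Hypothetical helper: succeed if IP ($1) lies inside CIDR block ($2), e.g. 13.15.100.0/24.
ip_in_cidr() {
  local ip="$1" net="${2%/*}" bits="${2#*/}" mask
  # Netmask from the prefix length, e.g. /24 -> 0xFFFFFF00 (bash integers are 64-bit).
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  (( ( $(ip_to_int "$ip") & mask ) == ( $(ip_to_int "$net") & mask ) ))
}
```

Usage sketch: `ip_in_cidr 13.15.11.254 13.15.100.0/24 || echo "gateway outside subnet"`.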

openstack flavor create --id 0 --vcpus 1 --ram 64 --disk 1 m1.nano

ssh-keygen -q -N ""

openstack keypair create --public-key ~/.ssh/id_rsa.pub mykey

openstack keypair list

openstack security group rule create --proto icmp default

openstack security group rule create --proto tcp --dst-port 22 default

. demo-openrc

openstack flavor list

openstack image list

openstack network list

openstack security group list

openstack server create --flavor m1.nano --image cirros --security-group default --key-name mykey provider-instance

openstack server list

openstack console url show provider-instance

Ping the instance to verify network connectivity.

5. Horizon service (dashboard web interface)

(1)yum install openstack-dashboard

(2)vim /etc/openstack-dashboard/local_settings

OPENSTACK_HOST = "controller"

ALLOWED_HOSTS = ['*', ]

SESSION_ENGINE = 'django.contrib.sessions.backends.cache'

CACHES = {

'default': {

'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',

'LOCATION': 'controller:11211',

}

}

OPENSTACK_KEYSTONE_URL = "http://%s:5000/v3" % OPENSTACK_HOST

OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True

OPENSTACK_API_VERSIONS = {

"identity": 3,

"image": 2,

"volume": 2,

}

OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = "default"

OPENSTACK_KEYSTONE_DEFAULT_ROLE = "user"

OPENSTACK_NEUTRON_NETWORK = {

'enable_router': False,

'enable_quotas': False,

'enable_distributed_router': False,

'enable_ha_router': False,

'enable_lb': False,

'enable_firewall': False,

'enable_vpn': False,

'enable_fip_topology_check': False,

}

(3)systemctl restart httpd memcached

(4) Verify

Open http://controller/dashboard in a web browser.

Log in with the default domain and the admin or demo user.

6. Block Storage service (Cinder):

The following steps are performed on the controller node:

(1) Create the database

mysql -u root -p

CREATE DATABASE cinder;

GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'localhost' IDENTIFIED BY 'CINDER_DBPASS';

GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'%' IDENTIFIED BY 'CINDER_DBPASS';

(2) Create the service credentials

Create the cinder user:

openstack user create --domain default --password-prompt cinder

Add the admin role to the cinder user: openstack role add --project service --user cinder admin

Create the cinder and cinderv2 service entities:

openstack service create --name cinder --description "OpenStack Block Storage" volume

openstack service create --name cinderv2 --description "OpenStack Block Storage" volumev2

(3) Create the Block Storage service API endpoints (the URLs are quoted because the shell would otherwise treat the parentheses as syntax):

openstack endpoint create --region RegionOne volume public 'http://controller:8776/v1/%(tenant_id)s'

openstack endpoint create --region RegionOne volume internal 'http://controller:8776/v1/%(tenant_id)s'

openstack endpoint create --region RegionOne volume admin 'http://controller:8776/v1/%(tenant_id)s'

openstack endpoint create --region RegionOne volumev2 public 'http://controller:8776/v2/%(tenant_id)s'

openstack endpoint create --region RegionOne volumev2 internal 'http://controller:8776/v2/%(tenant_id)s'

openstack endpoint create --region RegionOne volumev2 admin 'http://controller:8776/v2/%(tenant_id)s'

(4) Install and configure

yum install openstack-cinder

vim /etc/cinder/cinder.conf

[database]

connection = mysql+pymysql://cinder:CINDER_DBPASS@controller/cinder

[keystone_authtoken]

auth_uri = http://controller:5000

auth_url = http://controller:35357

memcached_servers = controller:11211

auth_type = password

project_domain_name = Default

user_domain_name = Default

project_name = service

username = cinder

password = CINDER_PASS

[oslo_concurrency]

lock_path = /var/lib/cinder/tmp

[DEFAULT]

transport_url = rabbit://openstack:RABBIT_PASS@controller

auth_strategy = keystone

my_ip = 13.15.100.11

(5) Populate the database

su -s /bin/sh -c "cinder-manage db sync" cinder

(6) Configure Compute to use Block Storage

vim /etc/nova/nova.conf

[cinder]

os_region_name = RegionOne

(7) Run the following

systemctl restart openstack-nova-api

systemctl enable openstack-cinder-api openstack-cinder-scheduler

systemctl start openstack-cinder-api openstack-cinder-scheduler

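Storage node (co-located with the compute node):

Besides the official documentation, the Newton deployment steps can also be found at:

https://blog.csdn.net/rujianxuezha/article/details/80138115

Explanations of the basic architecture:

https://www.jianshu.com/p/14158ab0081a

https://www.cnblogs.com/klb561/p/8660264.html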
