Original article: TensorFlow Functions Explained (Part 1)


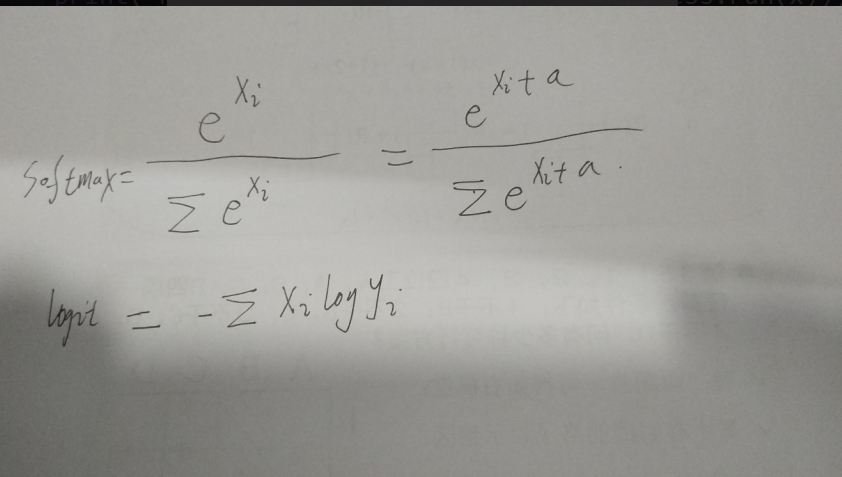

Cross-entropy

Softmax does not change when the same constant is added to every logit, so the resulting cross-entropy does not change either.
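A quick way to check this invariance is the minimal pure-Python sketch below (standard library only, not TensorFlow's implementation; the helper name `softmax` is our own). It shows that adding a constant to every logit leaves the softmax unchanged. The same property is why softmax is commonly computed after subtracting the maximum logit, which keeps `exp` from overflowing.

```python
import math


def softmax(logits):
    """Softmax of a list of floats, computed stably by subtracting the max."""
    m = max(logits)  # shifting by the max does not change the result
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


logits = [1.0, 2.0, 3.0]
shifted = [v + 10.0 for v in logits]  # add the same constant to every logit

p = softmax(logits)
q = softmax(shifted)
print(p)
print(all(math.isclose(a, b) for a, b in zip(p, q)))  # True

# A naive exp(1000.0) would overflow; the max-shifted version works fine
print(softmax([1000.0, 1001.0, 1002.0]))
```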

TensorFlow's `softmax_cross_entropy_with_logits` applies softmax once, to `logits` only (`labels` are used as given), and then computes the cross-entropy.

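```python
import tensorflow as tf  # TensorFlow 1.x API (tf.Session, tf.log)
import numpy as np


def softmax(x):
    # Plain NumPy softmax over a 1-D array
    return np.exp(x) / np.sum(np.exp(x))


sess = tf.Session()

x = tf.range(1, 10, dtype=tf.float32)
print(sess.run(x))  # [1. 2. 3. 4. 5. 6. 7. 8. 9.]

# Cross-entropy by hand in NumPy, using x as both labels and logits
print(-np.sum(sess.run(x) * np.log(softmax(sess.run(x)))))  # 140.63484

# The same computation with TensorFlow ops
y = -tf.reduce_sum(x * tf.log(tf.nn.softmax(x)))
print(sess.run(y))  # 140.63484

# Labels should normally be a probability distribution;
# x is used here only to compare the arithmetic.
y = tf.nn.softmax_cross_entropy_with_logits(logits=x, labels=x)
print(sess.run(y))  # 140.63484

# Shifting the labels changes the result (labels do not go through softmax)
y = tf.nn.softmax_cross_entropy_with_logits(logits=x, labels=x + 10)
print(sess.run(y))  # 541.9045

# Shifting the logits does not change the result (softmax is shift-invariant)
y = tf.nn.softmax_cross_entropy_with_logits(logits=x + 10, labels=x)
print(sess.run(y))  # 140.63484
```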
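To see what the TensorFlow call computes, here is a minimal pure-Python sketch (standard library only; `log_softmax` and `softmax_cross_entropy` are hypothetical helpers, not TensorFlow APIs). It assumes the op computes `-sum(labels * log(softmax(logits)))`, which agrees with the numbers printed above, and uses the log-sum-exp trick for numerical stability.

```python
import math


def log_softmax(logits):
    """log(softmax(logits)) via the log-sum-exp trick."""
    m = max(logits)
    lse = m + math.log(sum(math.exp(v - m) for v in logits))
    return [v - lse for v in logits]


def softmax_cross_entropy(logits, labels):
    """-sum(labels * log_softmax(logits)); labels are NOT passed through softmax."""
    return -sum(t * lp for t, lp in zip(labels, log_softmax(logits)))


x = [float(i) for i in range(1, 10)]  # 1.0 .. 9.0, like tf.range(1, 10)

print(softmax_cross_entropy(x, x))                     # ≈ 140.635
print(softmax_cross_entropy(x, [v + 10 for v in x]))   # ≈ 541.90
print(softmax_cross_entropy([v + 10 for v in x], x))   # ≈ 140.635 again
```

Because only the logits are passed through softmax, shifting the labels changes the result, while shifting the logits does not.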

Normalization

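The formula is:

$$y = \gamma \frac{x - \mu}{\sigma} + \beta$$

where x is the input, y is the output, μ is the mean, σ is the standard deviation (the square root of the variance σ²), and γ and β are the scale and offset coefficients.

In other words, subtract the mean from the data, divide by the standard deviation, then scale by γ and shift by β.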
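For concreteness, here is the formula applied by hand in plain Python (no TensorFlow) to the values [0, 1, 2, 3] used in the example further down, assuming γ = 1 and β = 0. The variance here divides by N, i.e. it is the population variance.

```python
import math

x = [0.0, 1.0, 2.0, 3.0]
mu = sum(x) / len(x)                          # mean: 1.5
var = sum((v - mu) ** 2 for v in x) / len(x)  # variance: 1.25
sigma = math.sqrt(var)                        # standard deviation

gamma, beta = 1.0, 0.0                        # scale and offset (assumed)
y = [gamma * (v - mu) / sigma + beta for v in x]
print(mu, var)
print(y)  # approximately [-1.3416, -0.4472, 0.4472, 1.3416]
```

After normalization the values have mean 0 and variance 1; γ and β then restore whatever scale and shift the model learns.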

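`tf.nn.moments` returns the mean and the variance (excerpt from the TensorFlow 1.x source):

```python
def moments(
    x,
    axes,
    shift=None,  # pylint: disable=unused-argument
    name=None,
    keep_dims=False):
  """...

  Returns:
    Two `Tensor` objects: `mean` and `variance`.
  """
```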
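The `axes` argument selects which dimensions are reduced. As a hedged illustration, the hypothetical helper `moments_2d` below mimics `tf.nn.moments` on a 2-D list: reducing over axis 0 gives one mean and variance per column (the usual choice for batch-normalizing a fully connected layer), while axis 1 gives per-row statistics. It also shows that the variance divides by N rather than N - 1, which matches the 1.25 printed in the example below.

```python
import statistics


def moments_2d(rows, axis):
    """Hypothetical helper mimicking tf.nn.moments on a 2-D list of lists.

    axis=0 reduces over rows (one mean/variance per column, as in batch norm);
    axis=1 reduces over columns (one mean/variance per row).
    The variance divides by N (population variance).
    """
    if axis == 0:
        groups = [list(col) for col in zip(*rows)]
    elif axis == 1:
        groups = [list(r) for r in rows]
    else:
        raise ValueError("axis must be 0 or 1")
    means = [statistics.mean(g) for g in groups]
    variances = [statistics.pvariance(g) for g in groups]
    return means, variances


batch = [[0.0, 10.0],
         [1.0, 20.0],
         [2.0, 30.0],
         [3.0, 40.0]]

print(moments_2d(batch, axis=0))  # ([1.5, 25.0], [1.25, 125.0])
print(moments_2d(batch, axis=1))  # per-row statistics

# Population vs. sample variance for [0, 1, 2, 3]
print(statistics.pvariance([0.0, 1.0, 2.0, 3.0]))  # 1.25 (divides by N)
print(statistics.variance([0.0, 1.0, 2.0, 3.0]))   # 1.6666666666666667 (divides by N - 1)
```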

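`tf.nn.batch_normalization` normalizes the data with the given parameters. Note that it takes the variance (not the standard deviation) as input, and adds a tiny value, `variance_epsilon`, to it to avoid division by zero:

```python
def batch_normalization(x,
                        mean,
                        variance,
                        offset,
                        scale,
                        variance_epsilon,
                        name=None):
  """...

  Returns:
    the normalized, scaled, offset tensor.
  """
```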
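The arithmetic behind this call can be sketched in plain Python as `(x - mean) * scale / sqrt(variance + variance_epsilon) + offset`. The function below is a hypothetical re-implementation for 1-D inputs with scalar statistics (an assumption for brevity), not TensorFlow's code; it reproduces the values printed in the example that follows.

```python
import math


def batch_normalization(x, mean, variance, offset, scale, variance_epsilon):
    """Pure-Python sketch of the tf.nn.batch_normalization arithmetic
    for a 1-D list with scalar mean/variance/offset/scale."""
    inv = scale / math.sqrt(variance + variance_epsilon)  # takes variance, not std
    return [(v - mean) * inv + offset for v in x]


x = [0.0, 1.0, 2.0, 3.0]
mean, variance = 1.5, 1.25  # what tf.nn.moments returns for x

print(batch_normalization(x, mean, variance, 0.0, 1.0, 1e-5))    # ≈ [-1.34164, -0.44721, 0.44721, 1.34164]
print(batch_normalization(x, mean, variance, 100.0, 2.0, 1e-5))  # ≈ [97.3167, 99.1056, 100.8944, 102.6833]
```

Since `variance_epsilon` is added to the variance, the normalized values are very slightly smaller in magnitude than `(x - mean) / sqrt(variance)`.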

```python
import tensorflow as tf  # TensorFlow 1.x API
import numpy as np

x = tf.range(0, 4, dtype=tf.float32)
sess = tf.Session()
print(sess.run(x))  # [0. 1. 2. 3.]

# Mean and variance over the last axis
y = tf.nn.moments(x, -1)
print(np.mean(sess.run(x)), np.std(sess.run(x)) ** 2)  # 1.5 1.250000036705515
print(sess.run(y))  # (1.5, 1.25)

# Manual normalization: (x - mean) / sqrt(variance)
print(sess.run((x - y[0]) / y[1] ** .5))  # [-1.3416407 -0.4472136 0.4472136 1.3416407]

# offset=0, scale=1, variance_epsilon=1e-5: same as above, up to epsilon
y2 = tf.nn.batch_normalization(x, *y, 0, 1, 0.00001)
print(sess.run(y2))  # [-1.3416355 -0.4472118 0.44721186 1.3416355 ]

# offset=100, scale=2: the result is 2 * normalized + 100
y3 = tf.nn.batch_normalization(x, *y, 100, 2, 0.00001)
print(sess.run(y3))  # [ 97.31673 99.105576 100.894424 102.683266]
print(sess.run(y3) - 100)  # [-2.6832733 -0.89442444 0.89442444 2.6832657 ]
```

Batch normalization

This works like the normalization above.
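The layer-level APIs add one thing to plain normalization: they keep moving averages of the mean and variance for use at inference time. Below is a hypothetical minimal layer (`SimpleBatchNorm` is our own illustration, not a TensorFlow class) that assumes TF 1.x-like defaults: moving mean initialized to 0, moving variance to 1, epsilon 0.001, and an exponential moving average controlled by `decay`.

```python
import math


class SimpleBatchNorm:
    """Hypothetical minimal batch-norm layer for scalar features (illustration only).

    Assumptions: moving mean starts at 0, moving variance at 1, epsilon = 0.001,
    and the moving statistics follow an exponential moving average with `decay`.
    """

    def __init__(self, decay=0.9, epsilon=1e-3, gamma=1.0, beta=0.0):
        self.decay = decay
        self.epsilon = epsilon
        self.gamma = gamma
        self.beta = beta
        self.moving_mean = 0.0
        self.moving_variance = 1.0

    def __call__(self, batch, training):
        if training:
            # Normalize with the statistics of this batch...
            mean = sum(batch) / len(batch)
            variance = sum((v - mean) ** 2 for v in batch) / len(batch)
            # ...and fold them into the moving averages for later inference
            self.moving_mean = self.decay * self.moving_mean + (1 - self.decay) * mean
            self.moving_variance = (self.decay * self.moving_variance
                                    + (1 - self.decay) * variance)
        else:
            # Inference: use the accumulated moving statistics instead
            mean, variance = self.moving_mean, self.moving_variance
        inv = self.gamma / math.sqrt(variance + self.epsilon)
        return [(v - mean) * inv + self.beta for v in batch]


bn = SimpleBatchNorm(decay=0.9)
x = [0.0, 1.0, 2.0, 3.0]
print(bn(x, training=False))  # moving stats still 0 and 1: each value times 1/sqrt(1.001)
print(bn(x, training=True))   # batch stats: about [-1.3411, -0.4470, 0.4470, 1.3411]
print(bn.moving_mean, bn.moving_variance)  # moved 10% of the way toward 1.5 and 1.25
```

In this sketch the moving averages are updated inside the call for simplicity. In TensorFlow 1.x the update is a separate op (placed in `tf.GraphKeys.UPDATE_OPS` by default) that must be run alongside the training op.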

```python
import tensorflow as tf  # TensorFlow 1.x API (tf.contrib was removed in 2.x)

sess = tf.Session()

# 4 samples, each of shape (1, 1)
x = tf.range(0, 4, dtype=tf.float32)
x = tf.reshape(x, (4, 1, 1))
print(sess.run(x))

# Training mode: normalize with the statistics of the current batch
y1 = tf.contrib.layers.batch_norm(x, decay=0.9, is_training=True)
# Inference mode: normalize with the moving mean/variance (initially 0 and 1)
y2 = tf.contrib.layers.batch_norm(x, decay=0.9, is_training=False)
y3 = tf.contrib.layers.batch_norm(x, decay=0.9, is_training=False)  # same settings as y2
# tf.layers.batch_normalization defaults to training=False (inference mode)
y4 = tf.layers.batch_normalization(x)
y5 = tf.layers.batch_normalization(x, training=True)

# The layers create variables (offset, moving statistics, ...) that must be initialized
sess.run(tf.global_variables_initializer())

print(sess.run(y1))
print(sess.run(y2))
print(sess.run(y3))
print(sess.run(y4))
print(sess.run(y5))
```

Output (`x`, then `y1` to `y5`):

```
[[[0.]]

 [[1.]]

 [[2.]]

 [[3.]]]

[[[-1.3411044 ]]

 [[-0.44703478]]

 [[ 0.44703484]]

 [[ 1.3411044 ]]]

[[[0. ]]

 [[0.9995004]]

 [[1.9990008]]

 [[2.9985013]]]

[[[0. ]]

 [[0.9995004]]

 [[1.9990008]]

 [[2.9985013]]]

[[[0. ]]

 [[0.9995004]]

 [[1.9990008]]

 [[2.9985013]]]

[[[-1.3411044 ]]

 [[-0.44703478]]

 [[ 0.44703484]]

 [[ 1.3411044 ]]]
```
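These numbers can be reproduced by hand, assuming the default epsilon of 0.001 used by both `tf.contrib.layers.batch_norm` and `tf.layers.batch_normalization`, with scale 1 and offset 0. In training mode the batch statistics (mean 1.5, variance 1.25) are used; in inference mode the freshly initialized moving statistics (mean 0, variance 1) are used, which is why `y2`, `y3` and `y4` are only slightly smaller than `x`.

```python
import math

EPSILON = 1e-3  # assumed default epsilon of both TF 1.x batch-norm APIs
x = [0.0, 1.0, 2.0, 3.0]

# Training mode (y1, y5): batch mean 1.5, batch variance 1.25
training_out = [(v - 1.5) / math.sqrt(1.25 + EPSILON) for v in x]

# Inference mode (y2, y3, y4): initial moving mean 0 and moving variance 1
inference_out = [(v - 0.0) / math.sqrt(1.0 + EPSILON) for v in x]

print(training_out)   # close to [-1.3411044, -0.44703478, 0.44703484, 1.3411044]
print(inference_out)  # close to [0.0, 0.9995004, 1.9990008, 2.9985013]
```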
