import pandas as pd

import numpy as np

from matplotlib import pyplot as plt

from sklearn.model_selection import train_test_split

def init():

df = pd.read_csv("./breast-cancer.csv")

df = df.drop("id",1)

df = df.drop("Unnamed: 32",1)

df['diagnosis'] = df['diagnosis'].map({

'M': 1,

'B': 0

})

train, test = train_test_split(df, test_size = 0.3, random_state=1)

train_x = train.loc[:, 'radius_mean': 'fractal_dimension_worst']

train_y = train.loc[:, ['diagnosis']]

test_x = test.loc[:, 'radius_mean': 'fractal_dimension_worst']

test_y = test.loc[:, ['diagnosis']]

train_x = np.asarray(train_x)

train_y = np.asarray(train_y)

test_x = np.asarray(test_x)

test_y = np.asarray(test_y)

d = model(train_x.T, train_y.T, num_of_iterations=10000, alpha=0.000001)

costs = d ["costs"]

w = d["w"]

b = d["b"]

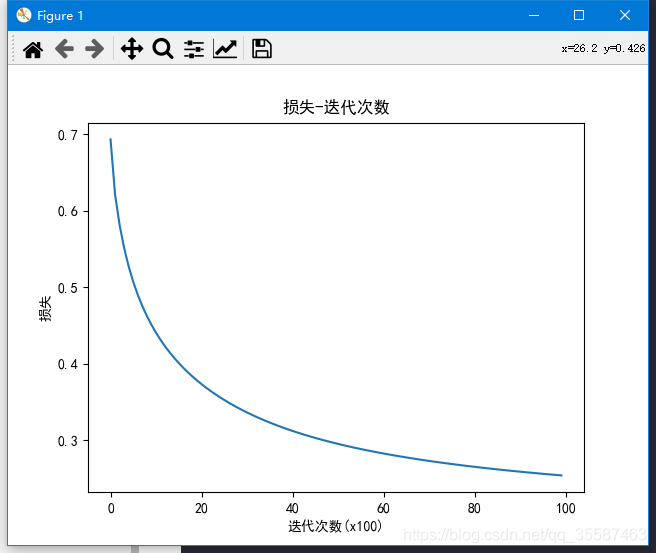

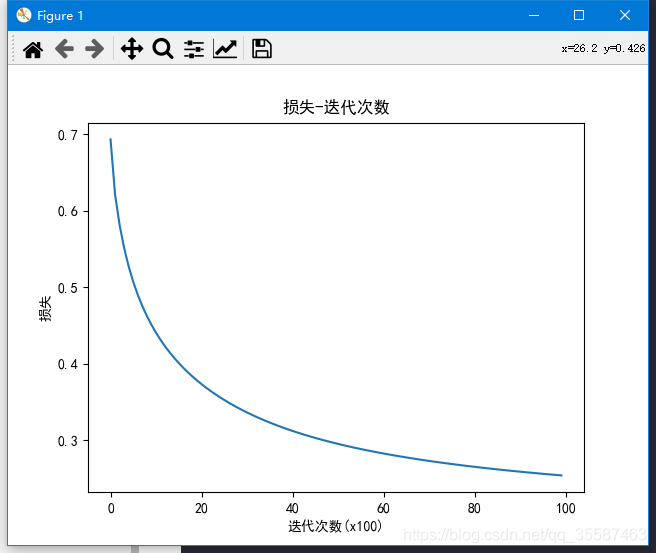

plt.plot(costs)

plt.title("损失-迭代次数")

plt.xlabel("迭代次数(x100)")

plt.ylabel("损失")

Y_prediction_train = predict(train_x.T, w, b)

Y_prediction_test = predict(test_x.T, w, b)

print("\n训练数据测试精确度: {}%".format(100 - np.mean(np.abs(Y_prediction_train - train_y.T)) * 100))

print("\n测试数据测试精确度: {}%".format(100 - np.mean(np.abs(Y_prediction_test - test_y.T)) * 100))

plt.show()

def initialize(m):

w = np.zeros((m,1))

b = 0

return w , b

def sigmoid(X):

return 1/(1 + np.exp(- X))

def propogate(X, Y, w, b):

m = X.shape[1]

Z = np.dot(w.T, X) + b;

A = sigmoid(Z)

cost= -(1/m) * np.sum(Y * np.log(A) + (1-Y) * np.log(1-A))

dw = (1/m)* np.dot(X, (A-Y).T)

db = (1/m)* np.sum(A-Y)

grads= {"dw": dw, "db": db}

return grads, cost

def optimize(X, Y, w, b, num_of_iterations, alpha):

costs=[]

for i in range(num_of_iterations):

grads, cost = propogate(X, Y, w, b)

dw = grads["dw"]

db = grads["db"]

w = w - alpha * dw

b = b - alpha * db

if i % 100 == 0:

costs.append(cost)

print("<%i>次迭代后的损失度: %f" % (i, cost))

parameters = {

"w": w,

"b": b

}

grads = {

"dw": dw,

"db": db

}

return parameters, grads, costs

def predict(X, w, b):

m = X.shape[1]

y_prediction = np.zeros((1,m))

w = w.reshape(X.shape[0], 1)

A=sigmoid(np.dot(w.T, X)+b)

for i in range(A.shape[1]):

if(A[0,i] < 0.5):

y_prediction[0,i] = 0

else:

y_prediction[0,i] = 1

return y_prediction

def model(Xtrain, Ytrain, num_of_iterations, alpha):

dim = Xtrain.shape[0]

w,b = initialize(dim)

parameters, grads, costs = optimize(Xtrain, Ytrain, w, b, num_of_iterations, alpha)

w = parameters["w"]

b = parameters["b"]

d = {

"w": w,

"b": b,

"costs": costs

}

return d

if __name__ == "__main__":

init()

1814

1814

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?