ERROR reactor.core.scheduler.Schedulers [] - Scheduler worker in group Flink Task Threads failed with an uncaught exception
io.netty.util.internal.OutOfDirectMemoryError: failed to allocate 16777216 byte(s) of direct memory (used: 671088640, max: 673605229)
    at io.netty.util.internal.PlatformDependent.incrementMemoryCounter(PlatformDependent.java:802) ~[netty-common-4.1.74.Final.jar:4.1.74.Final]
    at io.netty.util.internal.PlatformDependent.allocateDirectNoCleaner(PlatformDependent.java:731) ~[netty-common-4.1.74.Final.jar:4.1.74.Final]
    at io.netty.buffer.PoolArena$DirectArena.allocateDirect(PoolArena.java:648) ~[netty-buffer-4.1.74.Final.jar:4.1.74.Final]
    at io.netty.buffer.PoolArena$DirectArena.newChunk(PoolArena.java:623) ~[netty-buffer-4.1.74.Final.jar:4.1.74.Final]
    at io.netty.buffer.PoolArena.allocateNormal(PoolArena.java:202) ~[netty-buffer-4.1.74.Final.jar:4.1.74.Final]
    at io.netty.buffer.PoolArena.tcacheAllocateSmall(PoolArena.java:172) ~[netty-buffer-4.1.74.Final.jar:4.1.74.Final]
    at io.netty.buffer.PoolArena.allocate(PoolArena.java:134) ~[netty-buffer-4.1.74.Final.jar:4.1.74.Final]
    at io.netty.buffer.PoolArena.reallocate(PoolArena.java:286) ~[netty-buffer-4.1.74.Final.jar:4.1.74.Final]
    at io.netty.buffer.PooledByteBuf.capacity(PooledByteBuf.java:122) ~[netty-buffer-4.1.74.Final.jar:4.1.74.Final]
    at io.netty.buffer.AbstractByteBuf.ensureWritable0(AbstractByteBuf.java:305) ~[netty-buffer-4.1.74.Final.jar:4.1.74.Final]
    at io.netty.buffer.AbstractByteBuf.ensureWritable(AbstractByteBuf.java:280) ~[netty-buffer-4.1.74.Final.jar:4.1.74.Final]
    at io.netty.buffer.AbstractByteBuf.writeBytes(AbstractByteBuf.java:1073) ~[netty-buffer-4.1.74.Final.jar:4.1.74.Final]
    at io.netty.buffer.ByteBufOutputStream.write(ByteBufOutputStream.java:67) ~[netty-buffer-4.1.74.Final.jar:4.1.74.Final]
    at org.nustaq.serialization.util.FSTOutputStream.copyTo(FSTOutputStream.java:122) ~[fst-2.57.jar:?]
    at org.nustaq.serialization.util.FSTOutputStream.flush(FSTOutputStream.java:146) ~[fst-2.57.jar:?]
    at org.nustaq.serialization.coders.FSTStreamEncoder.flush(FSTStreamEncoder.java:530) ~[fst-2.57.jar:?]
    at org.nustaq.serialization.FSTObjectOutput.flush(FSTObjectOutput.java:156) ~[fst-2.57.jar:?]
    at org.nustaq.serialization.FSTObjectOutput.close(FSTObjectOutput.java:165) ~[fst-2.57.jar:?]
    at com.agioe.eventbus.codec.FSTMessageCodec.serialize(FSTMessageCodec.java:44) ~[blob_p-d047fb355ccbb70b608533b459bbefe0707f2b7c-5942ea43d238571bf61e191dfdc74ac9:?]
    at io.scalecube.transport.netty.TransportImpl.encodeMessage(TransportImpl.java:216) ~[scalecube-transport-netty-2.6.12.jar:?]
    at reactor.core.publisher.FluxMapFuseable$MapFuseableSubscriber.onNext(FluxMapFuseable.java:113) ~[reactor-core-3.4.17.jar:3.4.17]
    at reactor.core.publisher.Operators$ScalarSubscription.request(Operators.java:2398) ~[reactor-core-3.4.17.jar:3.4.17]
    at reactor.core.publisher.FluxMapFuseable$MapFuseableSubscriber.request(FluxMapFuseable.java:169) ~[reactor-core-3.4.17.jar:3.4.17]
    at reactor.core.publisher.MonoFlatMap$FlatMapMain.onSubscribe(MonoFlatMap.java:110) ~[reactor-core-3.4.17.jar:3.4.17]
    at reactor.core.publisher.FluxMapFuseable$MapFuseableSubscriber.onSubscribe(FluxMapFuseable.java:96) ~[reactor-core-3.4.17.jar:3.4.17]
    at reactor.core.publisher.MonoJust.subscribe(MonoJust.java:55) ~[reactor-core-3.4.17.jar:3.4.17]
    at reactor.core.publisher.InternalMonoOperator.subscribe(InternalMonoOperator.java:64) ~[reactor-core-3.4.17.jar:3.4.17]
    at reactor.core.publisher.MonoDeferContextual.subscribe(MonoDeferContextual.java:55) ~[reactor-core-3.4.17.jar:3.4.17]
    at reactor.core.publisher.MonoDeferContextual.subscribe(MonoDeferContextual.java:55) ~[reactor-core-3.4.17.jar:3.4.17]
    at reactor.core.publisher.InternalMonoOperator.subscribe(InternalMonoOperator.java:64) ~[reactor-core-3.4.17.jar:3.4.17]
    at reactor.core.publisher.MonoFlatMap$FlatMapMain.onNext(MonoFlatMap.java:157) ~[reactor-core-3.4.17.jar:3.4.17]
    at reactor.core.publisher.Operators$MonoSubscriber.complete(Operators.java:1816) ~[reactor-core-3.4.17.jar:3.4.17]
    at reactor.core.publisher.MonoCacheTime.subscribeOrReturn(MonoCacheTime.java:151) ~[reactor-core-3.4.17.jar:3.4.17]
    at reactor.core.publisher.InternalMonoOperator.subscribe(InternalMonoOperator.java:57) ~[reactor-core-3.4.17.jar:3.4.17]
    at reactor.core.publisher.MonoDeferContextual.subscribe(MonoDeferContextual.java:55) ~[reactor-core-3.4.17.jar:3.4.17]
    at reactor.core.publisher.InternalMonoOperator.subscribe(InternalMonoOperator.java:64) ~[reactor-core-3.4.17.jar:3.4.17]
    at reactor.core.publisher.MonoDefer.subscribe(MonoDefer.java:52) ~[reactor-core-3.4.17.jar:3.4.17]
    at reactor.core.publisher.Mono.subscribe(Mono.java:4400) ~[reactor-core-3.4.17.jar:3.4.17]
    at reactor.core.publisher.Mono.subscribeWith(Mono.java:4515) ~[reactor-core-3.4.17.jar:3.4.17]
    at reactor.core.publisher.Mono.subscribe(Mono.java:4371) ~[reactor-core-3.4.17.jar:3.4.17]
    at reactor.core.publisher.Mono.subscribe(Mono.java:4307) ~[reactor-core-3.4.17.jar:3.4.17]
    at reactor.core.publisher.Mono.subscribe(Mono.java:4279) ~[reactor-core-3.4.17.jar:3.4.17]
    at io.scalecube.cluster.gossip.GossipProtocolImpl.lambda$spreadGossipsTo$7(GossipProtocolImpl.java:296) ~[scalecube-cluster-2.6.12.jar:?]
    at java.util.stream.ForEachOps$ForEachOp$OfRef.accept(ForEachOps.java:183) ~[?:1.8.0_345]
    at java.util.stream.ReferencePipeline$3$1.accept(ReferencePipeline.java:193) ~[?:1.8.0_345]
    at java.util.ArrayList$ArrayListSpliterator.forEachRemaining(ArrayList.java:1384) ~[?:1.8.0_345]
    at java.util.stream.AbstractPipeline.copyInto(AbstractPipeline.java:482) ~[?:1.8.0_345]
    at java.util.stream.AbstractPipeline.wrapAndCopyInto(AbstractPipeline.java:472) ~[?:1.8.0_345]
    at java.util.stream.ForEachOps$ForEachOp.evaluateSequential(ForEachOps.java:150) ~[?:1.8.0_345]
    at java.util.stream.ForEachOps$ForEachOp$OfRef.evaluateSequential(ForEachOps.java:173) ~[?:1.8.0_345]
    at java.util.stream.AbstractPipeline.evaluate(AbstractPipeline.java:234) ~[?:1.8.0_345]
    at java.util.stream.ReferencePipeline.forEach(ReferencePipeline.java:485) ~[?:1.8.0_345]
    at io.scalecube.cluster.gossip.GossipProtocolImpl.spreadGossipsTo(GossipProtocolImpl.java:292) ~[scalecube-cluster-2.6.12.jar:?]
    at io.scalecube.cluster.gossip.GossipProtocolImpl.lambda$doSpreadGossip$4(GossipProtocolImpl.java:156) ~[scalecube-cluster-2.6.12.jar:?]
    at java.lang.Iterable.forEach(Iterable.java:75) ~[?:1.8.0_345]
    at io.scalecube.cluster.gossip.GossipProtocolImpl.doSpreadGossip(GossipProtocolImpl.java:156) ~[scalecube-cluster-2.6.12.jar:?]
    at reactor.core.scheduler.PeriodicSchedulerTask.call(PeriodicSchedulerTask.java:49) [reactor-core-3.4.17.jar:3.4.17]
    at reactor.core.scheduler.PeriodicSchedulerTask.run(PeriodicSchedulerTask.java:63) [reactor-core-3.4.17.jar:3.4.17]
    at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) [?:1.8.0_345]
    at java.util.concurrent.FutureTask.runAndReset(FutureTask.java:308) [?:1.8.0_345]
    at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$301(ScheduledThreadPoolExecutor.java:180) [?:1.8.0_345]
    at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:294) [?:1.8.0_345]
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) [?:1.8.0_345]
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) [?:1.8.0_345]
    at java.lang.Thread.run(Thread.java:750) [?:1.8.0_345]

A direct (off-heap) memory overflow error occurred. The exception message shows that the allocation failed because not enough direct memory was left: the request for 16777216 bytes came on top of 671088640 bytes already in use, against a maximum of 673605229. So I traced the problem to the code that performs the allocation.

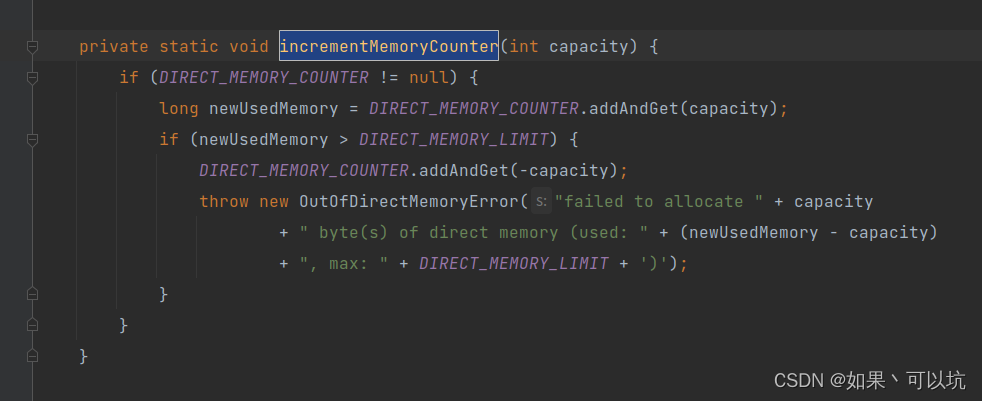

The PlatformDependent.incrementMemoryCounter method
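To make the numbers in the error message concrete, here is a minimal sketch of a counter-guarded reservation. It is a simplified illustration and not Netty's source: the class `DirectMemoryCounterSketch` and its `reserve` method are hypothetical names, and only the three byte values are taken from the log above.

```java
import java.util.concurrent.atomic.AtomicLong;

/** Simplified illustration of a counter-guarded allocation; NOT Netty's actual code. */
public class DirectMemoryCounterSketch {
    private final AtomicLong counter = new AtomicLong(); // bytes currently reserved
    private final long limit;                            // maximum bytes allowed

    public DirectMemoryCounterSketch(long limit) {
        this.limit = limit;
    }

    /** Reserves {@code capacity} bytes, or fails if that would exceed the limit. */
    public void reserve(long capacity) {
        long newUsed = counter.addAndGet(capacity);
        if (newUsed > limit) {
            counter.addAndGet(-capacity); // roll back the failed reservation
            throw new IllegalStateException("failed to allocate " + capacity
                    + " byte(s) (used: " + (newUsed - capacity) + ", max: " + limit + ")");
        }
    }

    public long used() {
        return counter.get();
    }

    public static void main(String[] args) {
        DirectMemoryCounterSketch mem = new DirectMemoryCounterSketch(673605229L);
        mem.reserve(671088640L);        // 640 MiB already in use, as in the log
        try {
            mem.reserve(16777216L);     // the 16 MiB request from the log
        } catch (IllegalStateException e) {
            System.out.println(e.getMessage());
        }
        System.out.println("used after rollback: " + mem.used());
    }
}
```

With the values from the log, 671088640 + 16777216 = 687865856, which is larger than 673605229, so the second reservation is rejected even though about 2.4 MiB is nominally still free.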

As the method shows, every allocation is counted through DIRECT_MEMORY_COUNTER, and that count decides whether there is enough room for the request. We can therefore use reflection to watch DIRECT_MEMORY_COUNTER and see when it grows.

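import io.netty.util.internal.PlatformDependent;
import lombok.extern.slf4j.Slf4j;
import org.apache.flink.configuration.Configuration;
import org.apache.flink.streaming.api.functions.ProcessFunction;
import org.apache.flink.util.Collector;
import org.springframework.util.ReflectionUtils;

import java.lang.reflect.Field;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicLong;

@Slf4j
public class DirectMemoryProcess extends ProcessFunction<String, String> {

    private static final int ONE_KB = 1024;
    private static final String BUSINESS_KEY = "netty_direct_memory";

    // transient: both are created in open() on the TaskManager, never serialized with the job
    private transient AtomicLong directMemory;
    private transient ScheduledExecutorService reporter;

    @Override
    public void open(Configuration parameters) throws Exception {
        super.open(parameters);
        Field field = ReflectionUtils.findField(PlatformDependent.class, "DIRECT_MEMORY_COUNTER");
        if (field == null) {
            throw new IllegalStateException("DIRECT_MEMORY_COUNTER not found in PlatformDependent");
        }
        field.setAccessible(true);
        // The field is static, so no instance is needed to read it
        directMemory = (AtomicLong) field.get(null);
        // Start the reporter once per task instead of on the first element
        reporter = Executors.newSingleThreadScheduledExecutor();
        reporter.scheduleAtFixedRate(this::doReport, 0, 1, TimeUnit.SECONDS);
    }

    private void doReport() {
        try {
            if (directMemory == null) {
                log.warn("{}: counter is null, Netty is not tracking direct memory", BUSINESS_KEY);
                return;
            }
            // long avoids overflow for large counters
            long memoryInKb = directMemory.get() / ONE_KB;
            log.info("{}:{}k", BUSINESS_KEY, memoryInKb);
        } catch (Exception e) {
            // An uncaught exception would silently cancel the periodic task
            log.warn("Failed to read DIRECT_MEMORY_COUNTER", e);
        }
    }

    @Override
    public void processElement(String s, Context context, Collector<String> collector) throws Exception {
        // Monitoring only: as in the original version, no records are emitted
    }

    @Override
    public void close() throws Exception {
        if (reporter != null) {
            reporter.shutdownNow(); // stop the reporter thread when the task ends
        }
        super.close();
    }
}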

Flink version and Spring version

Watching how the direct memory grows over time makes it possible to locate the cause of the growth.
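As one hedged way to read the logged values, the sketch below turns a series of samples into per-interval deltas and checks whether usage only ever goes up. The class `GrowthPattern`, its methods, and the sample numbers are all hypothetical and made up for illustration; they are not measurements from the job above.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Hypothetical helper for reading a series of counter samples (for example in KB). */
public class GrowthPattern {

    /** Differences between consecutive samples. */
    public static List<Long> deltas(List<Long> samples) {
        List<Long> out = new ArrayList<>();
        for (int i = 1; i < samples.size(); i++) {
            out.add(samples.get(i) - samples.get(i - 1));
        }
        return out;
    }

    /** True if usage grows at least once and never drops between samples. */
    public static boolean neverReleases(List<Long> samples) {
        boolean grew = false;
        for (long d : deltas(samples)) {
            if (d < 0) {
                return false;
            }
            if (d > 0) {
                grew = true;
            }
        }
        return grew;
    }

    public static void main(String[] args) {
        // Made-up sample series, one value per second
        List<Long> climbing = Arrays.asList(1000L, 1400L, 1800L, 2200L);
        List<Long> fluctuating = Arrays.asList(1000L, 1400L, 900L, 1300L);
        System.out.println(deltas(climbing));            // [400, 400, 400]
        System.out.println(neverReleases(climbing));     // true
        System.out.println(neverReleases(fluctuating));  // false
    }
}
```

A series that only climbs suggests buffers are being held or the limit is simply too small for the workload, while a series that rises and falls points to normal pooling behavior.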

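In Flink, a direct memory overflow like this is mainly caused by the TaskManager not having enough off-heap memory allocated. Setting taskmanager.memory.framework.off-heap.size: 1g in the configuration file resolves it.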

The general idea of using reflection to read how a variable changes in order to locate a problem is worth reusing elsewhere.
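The same idea works without Flink, Spring, or Netty on the classpath. Below is a minimal plain-JDK sketch: `StaticCounterProbe`, `HiddenStats`, and `BYTES_IN_USE` are hypothetical names standing in for a library class with a private static counter.

```java
import java.lang.reflect.Field;
import java.util.concurrent.atomic.AtomicLong;

public class StaticCounterProbe {

    /** Hypothetical stand-in for a library class that hides its counter. */
    public static class HiddenStats {
        private static final AtomicLong BYTES_IN_USE = new AtomicLong();

        public static void allocate(long n) {
            BYTES_IN_USE.addAndGet(n);
        }
    }

    /** Looks up a private static AtomicLong by name and returns the live object. */
    public static AtomicLong locate(Class<?> owner, String fieldName)
            throws ReflectiveOperationException {
        Field f = owner.getDeclaredField(fieldName);
        f.setAccessible(true);
        return (AtomicLong) f.get(null); // static field: no instance needed
    }

    public static void main(String[] args) throws Exception {
        AtomicLong counter = locate(HiddenStats.class, "BYTES_IN_USE");
        long before = counter.get();
        HiddenStats.allocate(4096);
        System.out.println("grew by " + (counter.get() - before) + " bytes");
    }
}
```

Because `locate` returns the live `AtomicLong` rather than a copy, it only needs to be called once; later reads of `counter.get()` reflect whatever the owning class has done since.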
