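This post summarizes the support vector machine (SVM) material from Andrew Ng's machine learning course. It is divided into the following parts:

1. SVM with a linear kernel

2. SVM with a Gaussian kernel

3. Spam detection with an SVM

The code for this part is quite lengthy, so only its functionality and how it runs are shown here; the implementation details are not examined in depth.

The theory behind SVMs is in my notebook.

1. SVM with a linear kernel

As before, we start by visualizing the data.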

fprintf('Loading and Visualizing Data ...\n')

load('ex6data1.mat');

plotData(X, y);

Define the linear kernel, which is simply the inner product of the sample being evaluated and a known (training) sample:

function sim = linearKernel(x1, x2)

x1 = x1(:); x2 = x2(:);

sim = x1' * x2;

end
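As a quick check of what the kernel returns, here is a minimal sketch. It re-declares the kernel as an anonymous function only so that the snippet runs on its own, and the two vectors are made-up illustrative values, not data from the exercise.

```matlab
% Anonymous-function version of linearKernel, so the snippet is self-contained
linearKernel = @(x1, x2) x1(:)' * x2(:);

x1 = [1 2 1];     % illustrative values only
x2 = [0 4 -1];

% Inner product: 1*0 + 2*4 + 1*(-1) = 7
sim = linearKernel(x1, x2);
fprintf('Linear kernel value: %d\n', sim);
```

Because both inputs are reshaped with (:), row and column vectors can be mixed freely.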

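Training is done in the main script, first with the constant C = 1 and then with C = 1000.

Comparing the two decision boundaries shows that the larger C is, the more sensitive the SVM becomes to outlying samples, i.e. it overfits.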
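For reference, the role of C can be read off the SVM objective as it is written in the course, where cost_1 and cost_0 are the hinge-style costs for positive and negative examples:

```latex
\min_{\theta}\; C\sum_{i=1}^{m}\Big[\,y^{(i)}\,\mathrm{cost}_1\big(\theta^{T}x^{(i)}\big)
  + \big(1-y^{(i)}\big)\,\mathrm{cost}_0\big(\theta^{T}x^{(i)}\big)\Big]
  + \frac{1}{2}\sum_{j=1}^{n}\theta_j^{2}
```

C multiplies the data-fit term, so it behaves like 1/λ in regularized logistic regression: a large C penalizes every misclassified point heavily, and the boundary bends to accommodate outliers.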

The training code is as follows:

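C = 1; % regularization constant

model = svmTrain(X, y, C, @linearKernel, 1e-3, 20); % training function; returns a struct

% In this struct, w holds the weight of each feature and b is the bias term

visualizeBoundaryLinear(X, y, model);

% Function that plots the SVM decision boundary

The two functions used above are listed here: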

function [model] = svmTrain(X, Y, C, kernelFunction, ...
                            tol, max_passes)

%SVMTRAIN Trains an SVM classifier using a simplified version of the SMO

%algorithm.

% [model] = SVMTRAIN(X, Y, C, kernelFunction, tol, max_passes) trains an

% SVM classifier and returns trained model. X is the matrix of training

% examples. Each row is a training example, and the jth column holds the

% jth feature. Y is a column matrix containing 1 for positive examples

% and 0 for negative examples. C is the standard SVM regularization

% parameter. tol is a tolerance value used for determining equality of

% floating point numbers. max_passes controls the number of iterations

% over the dataset (without changes to alpha) before the algorithm quits.

%

% Note: This is a simplified version of the SMO algorithm for training

% SVMs. In practice, if you want to train an SVM classifier, we

% recommend using an optimized package such as:

%

% LIBSVM (http://www.csie.ntu.edu.tw/~cjlin/libsvm/)

% SVMLight (http://svmlight.joachims.org/)

%

%

if ~exist('tol', 'var') || isempty(tol)

tol = 1e-3;

end

if ~exist('max_passes', 'var') || isempty(max_passes)

max_passes = 5;

end

% Data parameters

m = size(X, 1);

n = size(X, 2);

% Map 0 to -1

Y(Y==0) = -1;

% Variables

alphas = zeros(m, 1);

b = 0;

E = zeros(m, 1);

passes = 0;

eta = 0;

L = 0;

H = 0;

% Pre-compute the Kernel Matrix since our dataset is small

% (in practice, optimized SVM packages that handle large datasets

% gracefully will _not_ do this)

%

% We have implemented optimized vectorized version of the Kernels here so

% that the svm training will run faster.

if strcmp(func2str(kernelFunction), 'linearKernel')

% Vectorized computation for the Linear Kernel

% This is equivalent to computing the kernel on every pair of examples

K = X*X';

elseif strfind(func2str(kernelFunction), 'gaussianKernel')

% Vectorized RBF Kernel

% This is equivalent to computing the kernel on every pair of examples

X2 = sum(X.^2, 2);

K = bsxfun(@plus, X2, bsxfun(@plus, X2', - 2 * (X * X')));

K = kernelFunction(1, 0) .^ K;

else

% Pre-compute the Kernel Matrix

% The following can be slow due to the lack of vectorization

K = zeros(m);

for i = 1:m

for j = i:m

K(i,j) = kernelFunction(X(i,:)', X(j,:)');

K(j,i) = K(i,j); %the matrix is symmetric

end

end

end

% Train

fprintf('\nTraining ...');

dots = 12;

while passes < max_passes,

num_changed_alphas = 0;

for i = 1:m,

% Calculate Ei = f(x(i)) - y(i) using (2).

% E(i) = b + sum (X(i, :) * (repmat(alphas.*Y,1,n).*X)') - Y(i);

E(i) = b + sum (alphas.*Y.*K(:,i)) - Y(i);

if ((Y(i)*E(i) < -tol && alphas(i) < C) || (Y(i)*E(i) > tol && alphas(i) > 0)),

% In practice, there are many heuristics one can use to select

% the i and j. In this simplified code, we select them randomly.

j = ceil(m * rand());

while j == i, % Make sure i \neq j

j = ceil(m * rand());

end

% Calculate Ej = f(x(j)) - y(j) using (2).

E(j) = b + sum (alphas.*Y.*K(:,j)) - Y(j);

% Save old alphas

alpha_i_old = alphas(i);

alpha_j_old = alphas(j);

% Compute L and H by (10) or (11).

if (Y(i) == Y(j)),

L = max(0, alphas(j) + alphas(i) - C);

H = min(C, alphas(j) + alphas(i));

else

L = max(0, alphas(j) - alphas(i));

H = min(C, C + alphas(j) - alphas(i));

end

if (L == H),

% continue to next i.

continue;

end

% Compute eta by (14).

eta = 2 * K(i,j) - K(i,i) - K(j,j);

if (eta >= 0),

% continue to next i.

continue;

end

% Compute and clip new value for alpha j using (12) and (15).

alphas(j) = alphas(j) - (Y(j) * (E(i) - E(j))) / eta;

% Clip

alphas(j) = min (H, alphas(j));

alphas(j) = max (L, alphas(j));

% Check if change in alpha is significant

if (abs(alphas(j) - alpha_j_old) < tol),

% continue to next i.

% replace anyway

alphas(j) = alpha_j_old;

continue;

end

% Determine value for alpha i using (16).

alphas(i) = alphas(i) + Y(i)*Y(j)*(alpha_j_old - alphas(j));

% Compute b1 and b2 using (17) and (18) respectively.
% In (17) the alpha_i term of b1 is multiplied by K(i,i), not K(i,j).
b1 = b - E(i) ...
     - Y(i) * (alphas(i) - alpha_i_old) * K(i,i) ...
     - Y(j) * (alphas(j) - alpha_j_old) * K(i,j);

b2 = b - E(j) ...
     - Y(i) * (alphas(i) - alpha_i_old) * K(i,j) ...
     - Y(j) * (alphas(j) - alpha_j_old) * K(j,j);

% Compute b by (19).

if (0 < alphas(i) && alphas(i) < C),

b = b1;

elseif (0 < alphas(j) && alphas(j) < C),

b = b2;

else

b = (b1+b2)/2;

end

num_changed_alphas = num_changed_alphas + 1;

end

end

if (num_changed_alphas == 0),

passes = passes + 1;

else

passes = 0;

end

fprintf('.');

dots = dots + 1;

if dots > 78

dots = 0;

fprintf('\n');

end

if exist('OCTAVE_VERSION')

fflush(stdout);

end

end

fprintf(' Done! \n\n');

% Save the model

idx = alphas > 0;

model.X= X(idx,:);

model.y= Y(idx);

model.kernelFunction = kernelFunction;

model.b= b;

model.alphas= alphas(idx);

model.w = ((alphas.*Y)'*X)';

end
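The Gaussian branch of svmTrain relies on a small trick: kernelFunction(1, 0) evaluates to exp(-1/(2*sigma^2)), so raising it element-wise to the matrix of squared distances gives the whole kernel matrix at once. The sketch below checks this against an explicit double loop; the three points and sigma = 0.5 are arbitrary illustrative values.

```matlab
sigma = 0.5;                                   % illustrative bandwidth
gaussianKernel = @(x1, x2) exp(-sum((x1(:) - x2(:)).^2) / (2 * sigma^2));

X = [0 0; 1 0; 0 2];                           % three toy points, one per row
m = size(X, 1);

% Squared distances via ||a||^2 + ||b||^2 - 2*a'*b, as in svmTrain
X2 = sum(X.^2, 2);
D = bsxfun(@plus, X2, bsxfun(@plus, X2', -2 * (X * X')));

% gaussianKernel(1, 0) = exp(-1/(2*sigma^2)); the power D rescales the exponent
Kvec = gaussianKernel(1, 0) .^ D;

% Reference: evaluate the kernel on every pair explicitly
Kloop = zeros(m);
for i = 1:m
    for j = 1:m
        Kloop(i, j) = gaussianKernel(X(i, :), X(j, :));
    end
end

maxDiff = max(abs(Kvec(:) - Kloop(:)));
fprintf('Largest difference between the two versions: %g\n', maxDiff);
```

The two matrices agree up to floating-point rounding, which is why the vectorized form can replace the loop for this kernel.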

and the plotting function:

function visualizeBoundaryLinear(X, y, model)

%VISUALIZEBOUNDARYLINEAR plots a linear decision boundary learned by the

%SVM

% VISUALIZEBOUNDARYLINEAR(X, y, model) plots a linear decision boundary

% learned by the SVM and overlays the data on it

w = model.w;

b = model.b;

xp = linspace(min(X(:,1)), max(X(:,1)), 100);

yp = - (w(1)*xp + b)/w(2);

plotData(X, y);

hold on;

plot(xp, yp, '-b');

hold off

end

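2. SVM with a Gaussian kernel

Because the SVM optimization algorithm is very complicated, it is enough to understand its principle; on the programming side we only need to know how to define the kernel function.

Define the Gaussian kernel: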

function sim = gaussianKernel(x1, x2, sigma)

x1 = x1(:); x2 = x2(:);

sim=exp(-sum((x1-x2).^2)/(2*sigma^2));

end
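A small worked example makes the formula concrete. The kernel is written as an anonymous function here only so the snippet is self-contained, and the vectors and sigma = 2 are illustrative values.

```matlab
% Same formula as gaussianKernel above, as an anonymous function
gaussianKernel = @(x1, x2, sigma) exp(-sum((x1(:) - x2(:)).^2) / (2 * sigma^2));

x1 = [1 2 1];
x2 = [0 4 -1];
sigma = 2;

% Squared distance is 1 + 4 + 4 = 9, so sim = exp(-9/8), about 0.324652
sim = gaussianKernel(x1, x2, sigma);
fprintf('Gaussian kernel value: %f\n', sim);
```

The value is 1 when the two points coincide and decays towards 0 as they move apart; a smaller sigma makes that decay faster.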

Now take a dataset that is not linearly separable and see how the Gaussian kernel handles it.

The training code is as follows:

C = 1; sigma = 0.1;

model= svmTrain(X, y, C, @(x1, x2) gaussianKernel(x1, x2, sigma));

visualizeBoundary(X, y, model);

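The resulting decision boundary is plotted by visualizeBoundary.

Next we design a way to choose C and sigma automatically: set aside a validation set, evaluate each trained model on it, and keep the model with the smallest validation error. Suppose both C and sigma are drawn from the set

eg=[0.01; 0.03; 0.1; 0.3; 1; 3; 10; 30]

First define the prediction function: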

function pred = svmPredict(model, X)

%SVMPREDICT returns a vector of predictions using a trained SVM model

%(svmTrain).

% pred = SVMPREDICT(model, X) returns a vector of predictions using a

% trained SVM model (svmTrain). X is a m*n matrix where there each

% example is a row. model is a svm model returned from svmTrain.

% predictions pred is a m x 1 column of predictions of {0, 1} values.

%

% Check if we are getting a column vector, if so, then assume that we only

% need to do prediction for a single example

if (size(X, 2) == 1)

% Examples should be in rows

X = X';

end

% Dataset

m = size(X, 1);

p = zeros(m, 1);

pred = zeros(m, 1);

if strcmp(func2str(model.kernelFunction), 'linearKernel')

% We can use the weights and bias directly if working with the

% linear kernel

p = X * model.w + model.b;

elseif strfind(func2str(model.kernelFunction), 'gaussianKernel')

% Vectorized RBF Kernel

% This is equivalent to computing the kernel on every pair of examples

X1 = sum(X.^2, 2);

X2 = sum(model.X.^2, 2)';

K = bsxfun(@plus, X1, bsxfun(@plus, X2, - 2 * X * model.X'));

K = model.kernelFunction(1, 0) .^ K;

K = bsxfun(@times, model.y', K);

K = bsxfun(@times, model.alphas', K);

p = sum(K, 2) + model.b; % add the bias term, as the other two branches do

else

% Other Non-linear kernel

for i = 1:m

prediction = 0;

for j = 1:size(model.X, 1)

prediction = prediction + ...
    model.alphas(j) * model.y(j) * ...
    model.kernelFunction(X(i,:)', model.X(j,:)');

end

p(i) = prediction + model.b;

end

end

% Convert predictions into 0 / 1

pred(p >= 0) = 1;

pred(p < 0) = 0;

end

With this we can define the parameter-selection function:

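function [C, sigma] = dataset3Params(X, y, Xval, yval)

% Try every (C, sigma) pair from eg and keep the pair with the lowest

% misclassification rate on the validation set (Xval, yval).

C = 1;

sigma = 0.3;

eg=[0.01; 0.03; 0.1; 0.3; 1; 3; 10; 30];

bestError=1; % not called "error", which would shadow the built-in error()

for i=1:length(eg) % candidate sigma

for j=1:length(eg) % candidate C

model=svmTrain(X, y, eg(j), @(x1, x2) gaussianKernel(x1, x2, eg(i)));

predictions=svmPredict(model,Xval);

terror= mean(double(predictions ~= yval));

if terror<=bestError

C=eg(j);

sigma=eg(i);

bestError=terror;

end

end

end

end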
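The search itself is just two nested loops over the candidate values. The sketch below isolates that pattern; valError is a hypothetical stand-in for "train with svmTrain, then measure the error on the validation set" and is not a real SVM. It is constructed so that its minimum is known to lie at C = 1, sigma = 0.1.

```matlab
% Hypothetical stand-in for the validation error of a trained model.
% NOT a real SVM: it simply has its minimum at C = 1, sigma = 0.1.
valError = @(C, sigma) abs(log10(C)) + abs(log10(sigma) + 1);

eg = [0.01; 0.03; 0.1; 0.3; 1; 3; 10; 30];   % same candidate set as above

bestError = Inf;
for i = 1:length(eg)          % candidate sigma
    for j = 1:length(eg)      % candidate C
        e = valError(eg(j), eg(i));
        if e < bestError      % strict "<" keeps the first best pair found
            bestError = e;
            C = eg(j);
            sigma = eg(i);
        end
    end
end

fprintf('Best C = %g, best sigma = %g\n', C, sigma);
```

With 8 candidates for each parameter this trains 64 models, so the cost grows quickly if the candidate list is extended.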

The returned C and sigma are the pair with the lowest validation error among the candidates. Now let's see the effect:

model= svmTrain(X, y, C, @(x1, x2) gaussianKernel(x1, x2, sigma));

visualizeBoundary(X, y, model);

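3. Spam detection with an SVM

The idea is as follows. First build a dictionary of commonly used words and number each word, giving a column vector. The length of the dictionary is the length of the feature vector of an input sample, i.e. each word in the dictionary is one feature: if the word appears in an email the feature is 1, otherwise it is 0.

Before that, however, the email has to be preprocessed. URLs, numbers and currency symbols, for example, come in endless variations but can each be normalized into a single feature. Extra whitespace, odd symbols and punctuation are removed so that only the text content of the email is examined. The implementation of each step is explained below.

First, read the email contents: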

file_contents = readFile('emailSample1.txt');

The code of the readFile function is as follows:

function file_contents = readFile(filename)

%READFILE reads a file and returns its entire contents

% file_contents = READFILE(filename) reads a file and returns its entire

% contents in file_contents

%

% Load File

fid = fopen(filename);

if fid ~= -1 % fopen returns -1 on failure, which a bare "if fid" would treat as true

file_contents = fscanf(fid, '%c', inf);

fclose(fid);

else

file_contents = '';

fprintf('Unable to open %s\n', filename);

end

end

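The result is a raw, unprocessed string.

Next, preprocess the email, load the dictionary, and return the dictionary index of each word in the processed email:

word_indices = processEmail(file_contents)

This processing function is fairly complex, so I will explain it piece by piece. Here is the code first: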

function word_indices = processEmail(email_contents)

%PROCESSEMAIL preprocesses a the body of an email and

%returns a list of word_indices

% word_indices = PROCESSEMAIL(email_contents) preprocesses

% the body of an email and returns a list of indices of the

% words contained in the email.

%

% Load Vocabulary

vocabList = getVocabList();

% Init return value

word_indices = [];

% ========================== Preprocess Email ===========================

% Find the Headers ( \n\n and remove )

% Uncomment the following lines if you are working with raw emails with the

% full headers

% hdrstart = strfind(email_contents, ([char(10) char(10)]));

% email_contents = email_contents(hdrstart(1):end);

% Lower case

email_contents = lower(email_contents);

% Strip all HTML

% Looks for any expression that starts with < and ends with > and replace

% and does not have any < or > in the tag it with a space

email_contents = regexprep(email_contents, '<[^<>]+>', ' ');

% Handle Numbers

% Look for one or more characters between 0-9

email_contents = regexprep(email_contents, '[0-9]+', 'number');

% Handle URLS

% Look for strings starting with http:// or https://

email_contents = regexprep(email_contents, ...
    '(http|https)://[^\s]*', 'httpaddr');

% Handle Email Addresses

% Look for strings with @ in the middle

email_contents = regexprep(email_contents, '[^\s]+@[^\s]+', 'emailaddr');

% Handle $ sign

email_contents = regexprep(email_contents, '[$]+', 'dollar');

% ========================== Tokenize Email ===========================

% Output the email to screen as well

fprintf('\n==== Processed Email ====\n\n');

% Process file

l = 0;

while ~isempty(email_contents)

% Tokenize and also get rid of any punctuation

[str, email_contents] = ...
    strtok(email_contents, ...
    [' @$/#.-:&*+=[]?!(){},''">_<;%' char(10) char(13)]);

% Remove any non alphanumeric characters

str = regexprep(str, '[^a-zA-Z0-9]', '');

% Stem the word

% (the porterStemmer sometimes has issues, so we use a try catch block)

try str = porterStemmer(strtrim(str));

catch str = ''; continue;

end;

% Skip the word if it is too short

if length(str) < 1

continue;

end

% Look up the word in the dictionary and add to word_indices if

% found

% ====================== YOUR CODE HERE ======================

% Instructions: Fill in this function to add the index of str to

% word_indices if it is in the vocabulary. At this point

% of the code, you have a stemmed word from the email in

% the variable str. You should look up str in the

% vocabulary list (vocabList). If a match exists, you

% should add the index of the word to the word_indices

% vector. Concretely, if str = 'action', then you should

% look up the vocabulary list to find where in vocabList

% 'action' appears. For example, if vocabList{18} =

% 'action', then, you should add 18 to the word_indices

% vector (e.g., word_indices = [word_indices ; 18]; ).

%

% Note: vocabList{idx} returns a the word with index idx in the

% vocabulary list.

%

% Note: You can use strcmp(str1, str2) to compare two strings (str1 and

% str2). It will return 1 only if the two strings are equivalent.

%

for i=1:length(vocabList)

if strcmp(str, vocabList{i})==1

word_indices=[word_indices;i];

end

end

% =============================================================

% Print to screen, ensuring that the output lines are not too long

if (l + length(str) + 1) > 78

fprintf('\n');

l = 0;

end

fprintf('%s ', str);

l = l + length(str) + 1;

end

% Print footer

fprintf('\n\n=========================\n');

end
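The dictionary lookup in processEmail loops over every vocabulary entry for each word. As a sketch of an equivalent vectorized lookup, strcmp accepts a cell array and returns a logical mask, so find gives the index directly. The four-word vocabulary below is a toy stand-in for the real list returned by getVocabList.

```matlab
vocabList = {'aa'; 'ab'; 'action'; 'zip'};   % toy vocabulary, not the real vocab.txt

word_indices = [];

% strcmp against a cell array returns one logical value per entry
str = 'action';
idx = find(strcmp(str, vocabList));          % position of 'action' in the toy list
word_indices = [word_indices; idx];

% A word that is not in the vocabulary yields an empty result,
% so appending it leaves word_indices unchanged
missing = find(strcmp('unknown', vocabList));
word_indices = [word_indices; missing];

disp(word_indices);
```

This does the same work as the loop with strcmp(str, vocabList{i}), just without the explicit iteration.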

The more important parts are explained below.

1. Get the dictionary

vocabList = getVocabList();

The getVocabList function is:

function vocabList = getVocabList()

%GETVOCABLIST reads the fixed vocabulary list in vocab.txt and returns a

%cell array of the words

% vocabList = GETVOCABLIST() reads the fixed vocabulary list in vocab.txt

% and returns a cell array of the words in vocabList.

%% Read the fixed vocabulary list

fid = fopen('vocab.txt');

% Store all dictionary words in cell array vocab{}

n = 1899; % Total number of words in the dictionary

% For ease of implementation, we use a struct to map the strings => integers

% In practice, you'll want to use some form of hashmap

vocabList = cell(n, 1);

for i = 1:n

% Word Index (can ignore since it will be = i)

fscanf(fid, '%d', 1);

% Actual Word

vocabList{i} = fscanf(fid, '%s', 1);

end

fclose(fid);

end

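This gives the dictionary as a column cell array.

Next, convert the text to lower case, strip HTML tags, and normalize numbers, dollar signs, URLs and email addresses: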

% ========================== Preprocess Email ===========================

% Find the Headers ( \n\n and remove )

% Uncomment the following lines if you are working with raw emails with the

% full headers

% hdrstart = strfind(email_contents, ([char(10) char(10)]));

% email_contents = email_contents(hdrstart(1):end);

% Lower case

email_contents = lower(email_contents);

% Strip all HTML

% Looks for any expression that starts with < and ends with > and replace

% and does not have any < or > in the tag it with a space

email_contents = regexprep(email_contents, '<[^<>]+>', ' ');

% Handle Numbers

% Look for one or more characters between 0-9

email_contents = regexprep(email_contents, '[0-9]+', 'number');

% Handle URLS

% Look for strings starting with http:// or https://

email_contents = regexprep(email_contents, ...
    '(http|https)://[^\s]*', 'httpaddr');

% Handle Email Addresses

% Look for strings with @ in the middle

email_contents = regexprep(email_contents, '[^\s]+@[^\s]+', 'emailaddr');

% Handle $ sign

email_contents = regexprep(email_contents, '[$]+', 'dollar');
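To see what these substitutions do, here is a minimal sketch that applies the same regular expressions, in the same order, to a made-up one-line message. The sample text is an assumption for illustration and does not come from emailSample1.txt.

```matlab
s = 'Pay $100 at http://example.com or mail bob@example.com';  % made-up sample

s = lower(s);                                            % lower case
s = regexprep(s, '<[^<>]+>', ' ');                       % strip HTML tags (none here)
s = regexprep(s, '[0-9]+', 'number');                    % 100 -> number
s = regexprep(s, '(http|https)://[^\s]*', 'httpaddr');   % URL -> httpaddr
s = regexprep(s, '[^\s]+@[^\s]+', 'emailaddr');          % address -> emailaddr
s = regexprep(s, '[$]+', 'dollar');                      % $ -> dollar

disp(s);   % pay dollarnumber at httpaddr or mail emailaddr
```

Note that "$100" ends up as the single token "dollarnumber", because the digits are replaced before the dollar sign.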

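The email is then processed further: it is split into tokens at whitespace and punctuation, any remaining non-alphanumeric characters are removed, each word is reduced to its stem, and empty tokens are skipped. The loop ends once the whole email string has been consumed.

All of this happens in one loop. While the text is being cleaned, each word is taken from the email in turn and mapped to its position in the dictionary. A string of characters thus becomes a list of dictionary indices, which is one step closer to the input format we defined.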

l=0;

while ~isempty(email_contents)

% Tokenize and also get rid of any punctuation

[str, email_contents] = ...
    strtok(email_contents, ...
    [' @$/#.-:&*+=[]?!(){},''">_<;%' char(10) char(13)]);

% Remove any non alphanumeric characters

str = regexprep(str, '[^a-zA-Z0-9]', '');

% Stem the word

% (the porterStemmer sometimes has issues, so we use a try catch block)

try str = porterStemmer(strtrim(str));

catch str = ''; continue;

end;

% Skip the word if it is too short

if length(str) < 1

continue;

end

% Look up the word in the dictionary and add to word_indices if

% found

for i=1:length(vocabList)

if strcmp(str, vocabList{i})==1

word_indices=[word_indices;i];

end

end

% Print to screen, ensuring that the output lines are not too long

if (l + length(str) + 1) > 78

fprintf('\n');

l = 0;

end

fprintf('%s ', str);

l = l + length(str) + 1;

end

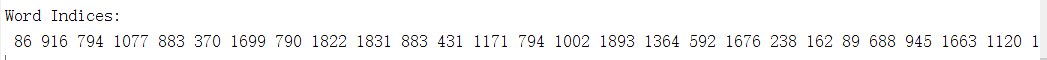

This gives us a set of indices:

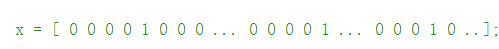

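As mentioned earlier, setting these positions of x to 1 and all other positions to 0 turns the email into an input sample. The following code implements this: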

function x = emailFeatures(word_indices)

n = 1899; % total number of words in the dictionary

x = zeros(n, 1);

% Mark every dictionary word that occurs in the email

for i=1:length(word_indices)

x(word_indices(i))=1;

end

end
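A tiny example shows the mapping from indices to a feature vector. It uses a 10-word toy dictionary instead of the real 1899 words, and the index list is made up; the loop is also replaced by a single indexed assignment, which has the same effect.

```matlab
n = 10;                        % toy dictionary size (the real one has 1899 words)
word_indices = [2; 5; 5; 7];   % made-up indices; word 5 occurs twice in the "email"

x = zeros(n, 1);
x(word_indices) = 1;           % repeated indices still just set the entry to 1

disp(x');                      % ones at positions 2, 5 and 7
```

The feature records only whether a word occurs, not how often, so the repeated index 5 contributes a single 1.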

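This gives the input vector for one email.

This is how an email is converted into an input sample. With 4000 emails labelled and encoded in this way, we train an SVM to obtain a spam classifier: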

load('spamTrain.mat');

C = 0.1;

model = svmTrain(X, y, C, @linearKernel);

p = svmPredict(model, X);

fprintf('Training Accuracy: %f\n', mean(double(p == y)) * 100);

Then write a short script to test its performance:

load('spamTest.mat');

p = svmPredict(model, Xtest);

fprintf('Test Accuracy: %f\n', mean(double(p == ytest)) * 100);

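In addition, we can list the word stems that the classifier treats as the strongest indicators of spam, i.e. those with the largest positive weights in model.w, and pay closer attention to them in the future. The code is as follows: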

[weight, idx] = sort(model.w, 'descend');

vocabList = getVocabList();

fprintf('\nTop predictors of spam: \n');

for i = 1:15

fprintf(' %-15s (%f) \n', vocabList{idx(i)}, weight(i));

end

That covers everything on SVMs.
