1. Build environment

Versions:

spark 2.4.5

hudi 0.11.0

> git clone https://github.com/apache/hudi.git && cd hudi

> vim pom.xml

> Configure the Aliyun Maven mirror repository

> mvn clean package -DskipTests -DskipITs

2. Quick start with spark-shell

> ./spark-shell --packages org.apache.spark:spark-avro_2.11:2.4.5 --conf 'spark.serializer=org.apache.spark.serializer.KryoSerializer' --jars /Users/xxx/cloudera/lib/hudi/packaging/hudi-spark-bundle/target/hudi-spark-bundle_2.11-0.11.0-SNAPSHOT.jar

2.1 Insert data

    Welcome to
          ____              __
         / __/__  ___ _____/ /__
        _\ \/ _ \/ _ `/ __/  '_/
       /___/ .__/\_,_/_/ /_/\_\   version 2.4.5
          /_/

Using Scala version 2.11.12 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_281)

Type in expressions to have them evaluated.

Type :help for more information.

scala> import org.apache.hudi.QuickstartUtils._

import org.apache.hudi.QuickstartUtils._

scala> import scala.collection.JavaConversions._

import scala.collection.JavaConversions._

scala> import org.apache.spark.sql.SaveMode._

import org.apache.spark.sql.SaveMode._

scala> import org.apache.hudi.DataSourceReadOptions._

import org.apache.hudi.DataSourceReadOptions._

scala> import org.apache.hudi.DataSourceWriteOptions._

import org.apache.hudi.DataSourceWriteOptions._

scala> import org.apache.hudi.config.HoodieWriteConfig._

import org.apache.hudi.config.HoodieWriteConfig._

-- Set the table name

scala> val tableName = "hudi_trips_cow"

tableName: String = hudi_trips_cow

-- Set the base path

scala> val basePath = "file:///tmp/hudi_trips_cow"

basePath: String = file:///tmp/hudi_trips_cow

-- Data generator

scala> val dataGen = new DataGenerator

dataGen: org.apache.hudi.QuickstartUtils.DataGenerator = org.apache.hudi.QuickstartUtils$DataGenerator@27103726

-- Insert data: generate some records, load them into a DataFrame, then write the DataFrame to the Hudi table

scala> val inserts = convertToStringList(dataGen.generateInserts(10))

inserts: java.util.List[String] = [{"ts": 1645671241937, "uuid": "b8799383-31c8-4a4f-9268-5155f1f1f262", "rider": "rider-213", "driver": "driver-213", "begin_lat": 0.4726905879569653, "begin_lon": 0.46157858450465483, "end_lat": 0.754803407008858, "end_lon": 0.9671159942018241, "fare": 34.158284716382845, "partitionpath": "americas/brazil/sao_paulo"}, {"ts": 1645725241890, "uuid": "aa974083-54ee-421e-9f99-a309f3d9226a", "rider": "rider-213", "driver": "driver-213", "begin_lat": 0.6100070562136587, "begin_lon": 0.8779402295427752, "end_lat": 0.3407870505929602, "end_lon": 0.5030798142293655, "fare": 43.4923811219014, "partitionpath": "americas/brazil/sao_paulo"}, {"ts": 1646141930102, "uuid": "37f684f9-9d33-44ac-9bcd-97160aabde34", "rider": "rider-213", "driver": "driver-213", "begin_lat...

scala> val df = spark.read.json(spark.sparkContext.parallelize(inserts, 2))

warning: there was one deprecation warning; re-run with -deprecation for details

df: org.apache.spark.sql.DataFrame = [begin_lat: double, begin_lon: double ... 8 more fields]

-- mode(Overwrite) drops and recreates the table if it already exists. Afterwards, check /tmp/hudi_trips_cow to confirm that data was written

scala> df.write.format("hudi").

     | options(getQuickstartWriteConfigs).

     | option(PRECOMBINE_FIELD_OPT_KEY, "ts").

     | option(RECORDKEY_FIELD_OPT_KEY, "uuid").

     | option(PARTITIONPATH_FIELD_OPT_KEY, "partitionpath").

     | option(TABLE_NAME, tableName).

     | mode(Overwrite).

     | save(basePath)

warning: there was one deprecation warning; re-run with -deprecation for details

22/03/03 10:12:48 WARN config.DFSPropertiesConfiguration: Cannot find HUDI_CONF_DIR, please set it as the dir of hudi-defaults.conf

22/03/03 10:12:48 WARN config.DFSPropertiesConfiguration: Properties file file:/etc/hudi/conf/hudi-defaults.conf not found. Ignoring to load props file

22/03/03 10:12:48 WARN hudi.HoodieSparkSqlWriter$: hoodie table at file:/tmp/hudi_trips_cow already exists. Deleting existing data & overwriting with new data.

22/03/03 10:12:49 WARN metadata.HoodieBackedTableMetadata: Metadata table was not found at path file:///tmp/hudi_trips_cow/.hoodie/metadata
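The `*_OPT_KEY` constants and `TABLE_NAME` used in the write above stand for plain string configuration keys. As a sketch, the same settings can be kept in an ordinary Scala `Map`. The key strings below are the ones I believe these constants resolve to in Hudi's configuration reference, so verify them against your Hudi version; the name `hudiWriteOptions` is my own.

```scala
// Assumed string equivalents of the constants used in the write call.
// Check them against the configuration reference for your Hudi version.
val hudiWriteOptions: Map[String, String] = Map(
  "hoodie.datasource.write.precombine.field"    -> "ts",            // PRECOMBINE_FIELD_OPT_KEY
  "hoodie.datasource.write.recordkey.field"     -> "uuid",          // RECORDKEY_FIELD_OPT_KEY
  "hoodie.datasource.write.partitionpath.field" -> "partitionpath", // PARTITIONPATH_FIELD_OPT_KEY
  "hoodie.table.name"                           -> "hudi_trips_cow" // TABLE_NAME
)
```

In spark-shell, this map could be passed with `.options(hudiWriteOptions)` in place of the four `option(...)` calls.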

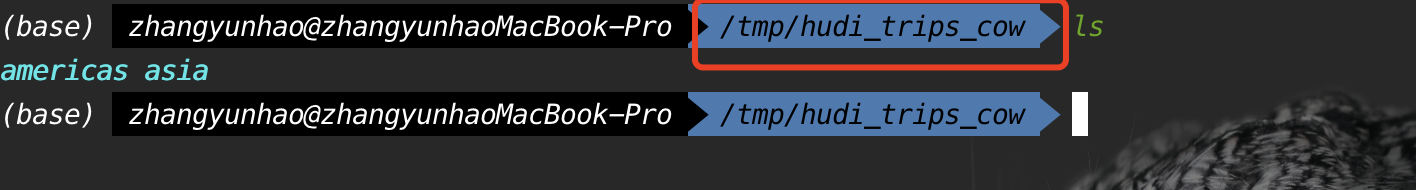

查看 /tmp/hudi_trips_cow 路径:

2.2 Query data

scala> val tripsSnapshotDF = spark.read.format("hudi").load(basePath + "/*/*/*/*")

tripsSnapshotDF: org.apache.spark.sql.DataFrame = [_hoodie_commit_time: string, _hoodie_commit_seqno: string ... 13 more fields]

scala> tripsSnapshotDF.createOrReplaceTempView("hudi_trips_snapshot")

scala> spark.sql("select fare, begin_lon, begin_lat, ts from hudi_trips_snapshot where fare > 20.0").show()

22/03/03 10:55:09 WARN General: Plugin (Bundle) "org.datanucleus.api.jdo" is already registered. Ensure you dont have multiple JAR versions of the same plugin in the classpath. The URL "file:/Users/zhangyunhao/cloudera/cdh5.7/spark2/jars/datanucleus-api-jdo-3.2.6.jar" is already registered, and you are trying to register an identical plugin located at URL "file:/Users/zhangyunhao/cloudera/lib/spark-2.4.5-bin-hadoop2.7/jars/datanucleus-api-jdo-3.2.6.jar."

22/03/03 10:55:09 WARN General: Plugin (Bundle) "org.datanucleus" is already registered. Ensure you dont have multiple JAR versions of the same plugin in the classpath. The URL "file:/Users/zhangyunhao/cloudera/lib/spark-2.4.5-bin-hadoop2.7/jars/datanucleus-core-3.2.10.jar" is already registered, and you are trying to register an identical plugin located at URL "file:/Users/zhangyunhao/cloudera/cdh5.7/spark2/jars/datanucleus-core-3.2.10.jar."

22/03/03 10:55:09 WARN General: Plugin (Bundle) "org.datanucleus.store.rdbms" is already registered. Ensure you dont have multiple JAR versions of the same plugin in the classpath. The URL "file:/Users/zhangyunhao/cloudera/lib/spark-2.4.5-bin-hadoop2.7/jars/datanucleus-rdbms-3.2.9.jar" is already registered, and you are trying to register an identical plugin located at URL "file:/Users/zhangyunhao/cloudera/cdh5.7/spark2/jars/datanucleus-rdbms-3.2.9.jar."

22/03/03 10:55:14 WARN ObjectStore: Failed to get database global_temp, returning NoSuchObjectException

+------------------+-------------------+-------------------+-------------+

| fare| begin_lon| begin_lat| ts|

+------------------+-------------------+-------------------+-------------+

| 41.06290929046368| 0.8192868687714224| 0.651058505660742|1646014632680|

| 43.4923811219014| 0.8779402295427752| 0.6100070562136587|1645725241890|

| 66.62084366450246|0.03844104444445928| 0.0750588760043035|1645837832234|

|34.158284716382845|0.46157858450465483| 0.4726905879569653|1645671241937|

| 64.27696295884016| 0.4923479652912024| 0.5731835407930634|1646141930102|

| 93.56018115236618|0.14285051259466197|0.21624150367601136|1646206560359|

| 27.79478688582596| 0.6273212202489661|0.11488393157088261|1646174737149|

| 33.92216483948643| 0.9694586417848392| 0.1856488085068272|1645820057227|

+------------------+-------------------+-------------------+-------------+

scala> spark.sql("select _hoodie_commit_time, _hoodie_record_key, _hoodie_partition_path, rider, driver, fare from hudi_trips_snapshot").show()

+-------------------+--------------------+----------------------+---------+----------+------------------+

|_hoodie_commit_time| _hoodie_record_key|_hoodie_partition_path| rider| driver| fare|

+-------------------+--------------------+----------------------+---------+----------+------------------+

| 20220303101248907|98f7d8ae-2e7e-4d6...| asia/india/chennai|rider-213|driver-213|17.851135255091155|

| 20220303101248907|0228210c-2660-42b...| asia/india/chennai|rider-213|driver-213| 41.06290929046368|

| 20220303101248907|aa974083-54ee-421...| americas/brazil/s...|rider-213|driver-213| 43.4923811219014|

| 20220303101248907|523141f5-f261-43f...| americas/brazil/s...|rider-213|driver-213| 66.62084366450246|

| 20220303101248907|b8799383-31c8-4a4...| americas/brazil/s...|rider-213|driver-213|34.158284716382845|

| 20220303101248907|7a3ce82e-0e12-477...| americas/united_s...|rider-213|driver-213|19.179139106643607|

| 20220303101248907|37f684f9-9d33-44a...| americas/united_s...|rider-213|driver-213| 64.27696295884016|

| 20220303101248907|701a4bdf-a134-4d8...| americas/united_s...|rider-213|driver-213| 93.56018115236618|

| 20220303101248907|f9cbea31-ea9d-4aa...| americas/united_s...|rider-213|driver-213| 27.79478688582596|

| 20220303101248907|9ef275d7-e4c3-47a...| americas/united_s...|rider-213|driver-213| 33.92216483948643|

+-------------------+--------------------+----------------------+---------+----------+------------------+

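Note: the test data is partitioned by partitionpath in the form region/country/city, so the table is loaded with load(basePath + "/*/*/*/*"): one wildcard per partition level plus one for the data files.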
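The glob depth follows directly from the partition depth. A minimal sketch in plain Scala, where `snapshotGlob` is a hypothetical helper name and not part of Hudi:

```scala
// Hypothetical helper: append one "/*" per partition level, plus one more
// for the data files inside the innermost partition directory.
def snapshotGlob(basePath: String, partitionDepth: Int): String =
  basePath + "/*" * (partitionDepth + 1)

// region/country/city gives a partition depth of 3
val glob = snapshotGlob("file:///tmp/hudi_trips_cow", 3)
```

With a different partitioning scheme, only the `partitionDepth` argument would change.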

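2.3 Update data

As with inserting new data, use the data generator to produce updates to existing records, load them into a DataFrame, and write the DataFrame to the Hudi table.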

scala> val dataGen = new DataGenerator

dataGen: org.apache.hudi.QuickstartUtils.DataGenerator = org.apache.hudi.QuickstartUtils$DataGenerator@6295b7e2

-- Side note: dataGen can only generate updates after it has generated inserts. I closed the shell window partway through, so here I insert data again before updating

scala> val updates = convertToStringList(dataGen.generateUpdates(10))

org.apache.hudi.exception.HoodieException: Data must have been written before performing the update operation

at org.apache.hudi.QuickstartUtils$DataGenerator.generateUpdates(QuickstartUtils.java:181)

... 61 elided

scala> val inserts = convertToStringList(dataGen.generateInserts(10))

inserts: java.util.List[String] = [{"ts": 1646047511967, "uuid": "30128b4d-e6ad-43e1-accf-05604870b0be", "rider": "rider-213", "driver": "driver-213", "begin_lat": 0.4726905879569653, "begin_lon": 0.46157858450465483, "end_lat": 0.754803407008858, "end_lon": 0.9671159942018241, "fare": 34.158284716382845, "partitionpath": "americas/brazil/sao_paulo"}, {"ts": 1645891834407, "uuid": "617c5804-a254-4457-906b-932d49218f18", "rider": "rider-213", "driver": "driver-213", "begin_lat": 0.6100070562136587, "begin_lon": 0.8779402295427752, "end_lat": 0.3407870505929602, "end_lon": 0.5030798142293655, "fare": 43.4923811219014, "partitionpath": "americas/brazil/sao_paulo"}, {"ts": 1646028554890, "uuid": "3e6a0931-0610-48e4-ac54-627c0e368f96", "rider": "rider-213", "driver": "driver-213", "begin_lat...

scala> val updates = convertToStringList(dataGen.generateUpdates(10))

updates: java.util.List[String] = [{"ts": 1645751883137, "uuid": "3e6a0931-0610-48e4-ac54-627c0e368f96", "rider": "rider-284", "driver": "driver-284", "begin_lat": 0.7340133901254792, "begin_lon": 0.5142184937933181, "end_lat": 0.7814655558162802, "end_lon": 0.6592596683641996, "fare": 49.527694252432056, "partitionpath": "americas/united_states/san_francisco"}, {"ts": 1645786301398, "uuid": "30128b4d-e6ad-43e1-accf-05604870b0be", "rider": "rider-284", "driver": "driver-284", "begin_lat": 0.1593867607188556, "begin_lon": 0.010872312870502165, "end_lat": 0.9808530350038475, "end_lon": 0.7963756520507014, "fare": 29.47661370147079, "partitionpath": "americas/brazil/sao_paulo"}, {"ts": 1646252344877, "uuid": "30128b4d-e6ad-43e1-accf-05604870b0be", "rider": "rider-284", "driver": "driver-28...

-- Note: the transcript does not show df being rebuilt from the new inserts. That step (val df = spark.read.json(spark.sparkContext.parallelize(inserts, 2))) has to run before the write below, because df is not defined in a fresh shell session

scala> val tableName = "hudi_trips_cow"

tableName: String = hudi_trips_cow

scala> df.write.format("hudi").

     | options(getQuickstartWriteConfigs).

     | option(PRECOMBINE_FIELD_OPT_KEY, "ts").

     | option(RECORDKEY_FIELD_OPT_KEY, "uuid").

     | option(PARTITIONPATH_FIELD_OPT_KEY, "partitionpath").

     | option(TABLE_NAME, tableName).

     | mode(Overwrite).

     | save(basePath)

warning: there was one deprecation warning; re-run with -deprecation for details

22/03/03 11:13:49 WARN DFSPropertiesConfiguration: Cannot find HUDI_CONF_DIR, please set it as the dir of hudi-defaults.conf

22/03/03 11:13:49 WARN DFSPropertiesConfiguration: Properties file file:/etc/hudi/conf/hudi-defaults.conf not found. Ignoring to load props file

22/03/03 11:13:49 WARN HoodieSparkSqlWriter$: hoodie table at file:/tmp/hudi_trips_cow already exists. Deleting existing data & overwriting with new data.

22/03/03 11:13:49 WARN HoodieBackedTableMetadata: Metadata table was not found at path file:///tmp/hudi_trips_cow/.hoodie/metadata

scala> spark.sql("select begin_lat,begin_lon,driver,end_lat,end_lon,fare,partitionpath,rider,ts,uuid from hudi_trips_snapshot").show()

22/03/03 11:15:52 WARN InMemoryFileIndex: The directory file:/tmp/hudi_trips_cow/asia/india/chennai/3ef49959-87c7-480b-8b5d-28542372fb52-0_2-39-41_20220303101248907.parquet was not found. Was it deleted very recently?

22/03/03 11:15:52 WARN InMemoryFileIndex: The directory file:/tmp/hudi_trips_cow/americas/brazil/sao_paulo/44e4557a-8e70-4ff0-90f9-f4825d70d904-0_0-34-39_20220303101248907.parquet was not found. Was it deleted very recently?

22/03/03 11:15:52 WARN InMemoryFileIndex: The directory file:/tmp/hudi_trips_cow/americas/united_states/san_francisco/5dd0b57b-c110-4b83-851c-b89b521b0950-0_1-39-40_20220303101248907.parquet was not found. Was it deleted very recently?

+---------+---------+------+-------+-------+----+-------------+-----+---+----+

|begin_lat|begin_lon|driver|end_lat|end_lon|fare|partitionpath|rider| ts|uuid|

+---------+---------+------+-------+-------+----+-------------+-----+---+----+

+---------+---------+------+-------+-------+----+-------------+-----+---+----+

-- As shown above, after the Overwrite the previously created view no longer returns data, so recreate it here

scala> val tripsSnapshotDF = spark.read.format("hudi").load(basePath + "/*/*/*/*")

tripsSnapshotDF: org.apache.spark.sql.DataFrame = [_hoodie_commit_time: string, _hoodie_commit_seqno: string ... 13 more fields]

scala> tripsSnapshotDF.createOrReplaceTempView("hudi_trips_snapshot")

scala> spark.sql("select uuid,rider,driver,fare,ts from hudi_trips_snapshot").show()

+--------------------+---------+----------+------------------+-------------+

| uuid| rider| driver| fare| ts|

+--------------------+---------+----------+------------------+-------------+

|85204893-1244-40f...|rider-213|driver-213| 41.06290929046368|1646094497489|

|bef3f285-5830-441...|rider-213|driver-213|17.851135255091155|1646099025106|

|30128b4d-e6ad-43e...|rider-213|driver-213|34.158284716382845|1646047511967|

|790bbde3-c1d1-4e4...|rider-213|driver-213| 66.62084366450246|1646168530398|

|617c5804-a254-445...|rider-213|driver-213| 43.4923811219014|1645891834407|

|f6c6fa76-8e58-444...|rider-213|driver-213|19.179139106643607|1646031985303|

|442b9617-8cf4-4c2...|rider-213|driver-213| 27.79478688582596|1646234638857|

|b57f74c5-d42b-4f0...|rider-213|driver-213| 33.92216483948643|1646019971381|

|3e6a0931-0610-48e...|rider-213|driver-213| 64.27696295884016|1646028554890|

|a00ac06e-fa48-481...|rider-213|driver-213| 93.56018115236618|1646088804580|

+--------------------+---------+----------+------------------+-------------+

-- Update data

scala> val updates = convertToStringList(dataGen.generateUpdates(10))

updates: java.util.List[String] = [{"ts": 1645941233171, "uuid": "bef3f285-5830-4410-8bce-958aca75a699", "rider": "rider-563", "driver": "driver-563", "begin_lat": 0.16172715555352513, "begin_lon": 0.6286940931025506, "end_lat": 0.7559063825441225, "end_lon": 0.39828516291900906, "fare": 16.098476392187365, "partitionpath": "asia/india/chennai"}, {"ts": 1646129230287, "uuid": "85204893-1244-40f4-bd6e-6775d98fa332", "rider": "rider-563", "driver": "driver-563", "begin_lat": 0.9312237784651692, "begin_lon": 0.67243450582925, "end_lat": 0.28393433672984614, "end_lon": 0.2725166210142148, "fare": 27.603571822228822, "partitionpath": "asia/india/chennai"}, {"ts": 1645684884426, "uuid": "442b9617-8cf4-4c25-b1c5-4b2eb5e4b16d", "rider": "rider-563", "driver": "driver-563", "begin_lat": 0.992715...

scala> val df = spark.read.json(spark.sparkContext.parallelize(updates, 2))

warning: there was one deprecation warning; re-run with -deprecation for details

df: org.apache.spark.sql.DataFrame = [begin_lat: double, begin_lon: double ... 8 more fields]

scala> df.write.format("hudi").

     | options(getQuickstartWriteConfigs).

     | option(PRECOMBINE_FIELD_OPT_KEY, "ts").

     | option(RECORDKEY_FIELD_OPT_KEY, "uuid").

     | option(PARTITIONPATH_FIELD_OPT_KEY, "partitionpath").

     | option(TABLE_NAME, tableName).

     | mode(Append).

     | save(basePath)

warning: there was one deprecation warning; re-run with -deprecation for details

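-- After the update, the snapshot view must also be reloaded before querying; the first query below still uses the stale view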

scala> spark.sql("select uuid,rider,driver,fare,ts from hudi_trips_snapshot").show()

+----+-----+------+----+---+

|uuid|rider|driver|fare| ts|

+----+-----+------+----+---+

+----+-----+------+----+---+

scala> val tripsSnapshotDF = spark.read.format("hudi").load(basePath + "/*/*/*/*")

tripsSnapshotDF: org.apache.spark.sql.DataFrame = [_hoodie_commit_time: string, _hoodie_commit_seqno: string ... 13 more fields]

scala> tripsSnapshotDF.createOrReplaceTempView("hudi_trips_snapshot")

scala> spark.sql("select uuid,rider,driver,fare,ts from hudi_trips_snapshot").show()

+--------------------+---------+----------+------------------+-------------+

| uuid| rider| driver| fare| ts|

+--------------------+---------+----------+------------------+-------------+

|85204893-1244-40f...|rider-563|driver-563|27.603571822228822|1646129230287|

|bef3f285-5830-441...|rider-563|driver-563|16.098476392187365|1645941233171|

|30128b4d-e6ad-43e...|rider-213|driver-213|34.158284716382845|1646047511967|

|790bbde3-c1d1-4e4...|rider-563|driver-563| 55.31092276192561|1646192576878|

|617c5804-a254-445...|rider-563|driver-563| 38.4828225162323|1646111096349|

|f6c6fa76-8e58-444...|rider-563|driver-563| 19.48472236694032|1646219634123|

|442b9617-8cf4-4c2...|rider-563|driver-563| 84.66949742559657|1645855735564|

|b57f74c5-d42b-4f0...|rider-563|driver-563| 54.16944371261484|1645927926744|

|3e6a0931-0610-48e...|rider-213|driver-213| 64.27696295884016|1646028554890|

|a00ac06e-fa48-481...|rider-563|driver-563| 37.35848234860164|1645897531641|

+--------------------+---------+----------+------------------+-------------+
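
Two things are visible in the transcript above: a generated `updates` batch can contain the same `uuid` more than once, and an updated row may end up with a smaller `ts` than it had before (for example `442b9617...`). The plain-Scala sketch below models one way to read that behaviour: duplicates inside the incoming batch are collapsed by keeping the largest precombine value, and the surviving record then replaces the stored record with the same key. This is a simplified model for illustration, not Hudi's implementation; `Trip` and `upsert` are hypothetical names.

```scala
// Simplified model of an upsert keyed on uuid; not Hudi's actual code.
final case class Trip(uuid: String, ts: Long, fare: Double)

def upsert(existing: Seq[Trip], incoming: Seq[Trip]): Seq[Trip] = {
  // Step 1: deduplicate the incoming batch per key, keeping the largest ts
  // (the role played by the precombine field in this model).
  val deduped: Map[String, Trip] =
    incoming.groupBy(_.uuid).map { case (key, rows) => key -> rows.maxBy(_.ts) }
  // Step 2: incoming records replace stored records with the same key,
  // regardless of the stored ts; records with new keys are added.
  val untouched = existing.filterNot(t => deduped.contains(t.uuid))
  untouched ++ deduped.values
}

val stored = Seq(Trip("a", 100L, 10.0), Trip("b", 200L, 20.0))
val batch  = Seq(Trip("a", 50L, 11.0), Trip("a", 60L, 12.0), Trip("c", 300L, 30.0))
val result = upsert(stored, batch).sortBy(_.uuid)
```

In this model, record `a` ends up with `ts = 60` even though the stored version had `ts = 100`, which mirrors what the query output shows for some rows.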

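2.4 Incremental query

Hudi can also return the stream of records that changed since a given commit timestamp. Use an incremental query and supply the begin time from which changes should be streamed.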

scala> val commits = spark.sql("select distinct(_hoodie_commit_time) as commitTime from hudi_trips_snapshot order by commitTime").map(k => k.getString(0)).take(50)

commits: Array[String] = Array(20220303111349066, 20220303112140957)

scala> val beginTime = commits(commits.length - 2)

beginTime: String = 20220303111349066

scala> val tripsIncrementalDF = spark.read.format("hudi").

     | option(QUERY_TYPE_OPT_KEY, QUERY_TYPE_INCREMENTAL_OPT_VAL).

     | option(BEGIN_INSTANTTIME_OPT_KEY, beginTime).

     | load(basePath)

tripsIncrementalDF: org.apache.spark.sql.DataFrame = [_hoodie_commit_time: string, _hoodie_commit_seqno: string ... 13 more fields]

scala> tripsIncrementalDF.createOrReplaceTempView("hudi_trips_incremental")

scala> spark.sql("select `_hoodie_commit_time`, fare, begin_lon, begin_lat, ts from hudi_trips_incremental where fare > 20.0").show()

+-------------------+------------------+-------------------+------------------+-------------+

|_hoodie_commit_time| fare| begin_lon| begin_lat| ts|

+-------------------+------------------+-------------------+------------------+-------------+

| 20220303112140957| 84.66949742559657|0.31331111382522836|0.8573834026158349|1645855735564|

| 20220303112140957| 54.16944371261484| 0.7548086309564753|0.5535762898838785|1645927926744|

| 20220303112140957| 37.35848234860164| 0.9084944020139248|0.6330100459693088|1645897531641|

| 20220303112140957|27.603571822228822| 0.67243450582925|0.9312237784651692|1646129230287|

| 20220303112140957| 55.31092276192561| 0.826183030502974| 0.391583018565109|1646192576878|

| 20220303112140957| 38.4828225162323|0.20404106962358204|0.1450793330198833|1646111096349|

+-------------------+------------------+-------------------+------------------+-------------+

Note: this returns the records committed after beginTime that also satisfy fare > 20.
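
The lookup `commits(commits.length - 2)` works here because the table has exactly two commits. With a single commit the index would be -1 and the lookup would throw. A defensive variant, sketched in plain Scala with a hypothetical helper name:

```scala
// Hypothetical helper: return the second-to-last commit, if there is one.
def secondToLastCommit(commits: Seq[String]): Option[String] =
  if (commits.length >= 2) Some(commits(commits.length - 2)) else None

// Commit times taken from the transcript above.
val twoCommits = Seq("20220303111349066", "20220303112140957")

// Fall back to "000" (the earliest possible time) when there is only one commit.
val safeBeginTime = secondToLastCommit(twoCommits).getOrElse("000")
val fallbackBeginTime = secondToLastCommit(twoCommits.take(1)).getOrElse("000")
```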

2.5 Point-in-time query

To query as of a specific point in time, set endTime to that time and set beginTime to 000 (which denotes the earliest possible time).

scala> val beginTime = "000"

beginTime: String = 000

scala> val endTime = commits(commits.length - 2)

endTime: String = 20220303111349066

scala> val tripsPointInTimeDF = spark.read.format("hudi").

     | option(QUERY_TYPE_OPT_KEY, QUERY_TYPE_INCREMENTAL_OPT_VAL).

     | option(BEGIN_INSTANTTIME_OPT_KEY, beginTime).

     | option(END_INSTANTTIME_OPT_KEY, endTime).

     | load(basePath)

tripsPointInTimeDF: org.apache.spark.sql.DataFrame = [_hoodie_commit_time: string, _hoodie_commit_seqno: string ... 13 more fields]

scala> tripsPointInTimeDF.createOrReplaceTempView("hudi_trips_point_in_time")

scala> spark.sql("select `_hoodie_commit_time`, fare, begin_lon, begin_lat, ts from hudi_trips_point_in_time where fare > 20.0").show()

+-------------------+------------------+-------------------+-------------------+-------------+

|_hoodie_commit_time| fare| begin_lon| begin_lat| ts|

+-------------------+------------------+-------------------+-------------------+-------------+

| 20220303111349066| 27.79478688582596| 0.6273212202489661|0.11488393157088261|1646234638857|

| 20220303111349066| 33.92216483948643| 0.9694586417848392| 0.1856488085068272|1646019971381|

| 20220303111349066| 64.27696295884016| 0.4923479652912024| 0.5731835407930634|1646028554890|

| 20220303111349066| 93.56018115236618|0.14285051259466197|0.21624150367601136|1646088804580|

| 20220303111349066|34.158284716382845|0.46157858450465483| 0.4726905879569653|1646047511967|

| 20220303111349066| 66.62084366450246|0.03844104444445928| 0.0750588760043035|1646168530398|

| 20220303111349066| 43.4923811219014| 0.8779402295427752| 0.6100070562136587|1645891834407|

| 20220303111349066| 41.06290929046368| 0.8192868687714224| 0.651058505660742|1646094497489|

+-------------------+------------------+-------------------+-------------------+-------------+

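Note: the result is not necessarily the table's current data; it is every matching record committed within the time range, which may include historical versions of records.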
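
Judging from the two results above, the begin time behaves as an exclusive bound and the end time as an inclusive one: beginTime = 20220303111349066 returned only rows from the later commit, while endTime = 20220303111349066 returned rows from that commit itself. A plain-Scala sketch of that window, with a hypothetical function name and under the assumption that commit times are fixed-width digit strings (so string comparison matches chronological order):

```scala
// Model of the commit window inferred from the outputs: (begin, end].
// Not Hudi code; commit times are compared as strings.
def commitsInWindow(commits: Seq[String], begin: String, end: Option[String] = None): Seq[String] =
  commits.filter(c => c > begin && end.forall(c <= _))

val allCommits = Seq("20220303111349066", "20220303112140957")

// Incremental query: everything after the first commit.
val incremental = commitsInWindow(allCommits, "20220303111349066")

// Point-in-time query: from the earliest time up to and including the first commit.
val pointInTime = commitsInWindow(allCommits, "000", Some("20220303111349066"))
```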

2.6 Delete data

scala> val ds = spark.sql("select uuid, partitionpath from hudi_trips_snapshot").limit(2)

ds: org.apache.spark.sql.Dataset[org.apache.spark.sql.Row] = [uuid: string, partitionpath: string]

scala> val deletes = dataGen.generateDeletes(ds.collectAsList())

deletes: java.util.List[String] = [{"ts": "0.0","uuid": "85204893-1244-40f4-bd6e-6775d98fa332","partitionpath": "asia/india/chennai"}, {"ts": "0.0","uuid": "bef3f285-5830-4410-8bce-958aca75a699","partitionpath": "asia/india/chennai"}]

scala> val df = spark.read.json(spark.sparkContext.parallelize(deletes, 2))

warning: there was one deprecation warning; re-run with -deprecation for details

df: org.apache.spark.sql.DataFrame = [partitionpath: string, ts: string ... 1 more field]

scala> df.write.format("hudi").

     | options(getQuickstartWriteConfigs).

     | option(OPERATION_OPT_KEY,"delete").

     | option(PRECOMBINE_FIELD_OPT_KEY, "ts").

     | option(RECORDKEY_FIELD_OPT_KEY, "uuid").

     | option(PARTITIONPATH_FIELD_OPT_KEY, "partitionpath").

     | option(TABLE_NAME, tableName).

     | mode(Append).

     | save(basePath)

warning: there was one deprecation warning; re-run with -deprecation for details

scala> val roAfterDeleteViewDF = spark.read.format("hudi").load(basePath + "/*/*/*/*")

roAfterDeleteViewDF: org.apache.spark.sql.DataFrame = [_hoodie_commit_time: string, _hoodie_commit_seqno: string ... 13 more fields]

scala> roAfterDeleteViewDF.registerTempTable("hudi_trips_snapshot")

warning: there was one deprecation warning; re-run with -deprecation for details

scala> spark.sql("select uuid, partitionPath from hudi_trips_snapshot").count()

res27: Long = 8
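
The generated delete records above carry only `uuid`, `partitionpath` and a placeholder `ts`, which suggests that rows are identified for deletion by record key and partition path alone. A plain-Scala sketch of that idea, using hypothetical names and made-up keys, showing why the count drops from 10 to 8:

```scala
// Simplified model: a row is identified by its record key and partition path.
final case class RecordKey(uuid: String, partitionpath: String)

def applyDeletes(stored: Seq[RecordKey], deletes: Seq[RecordKey]): Seq[RecordKey] = {
  val toDelete = deletes.toSet
  stored.filterNot(toDelete.contains)
}

// Ten illustrative rows; the uuids here are placeholders, not real data.
val storedKeys = (1 to 10).map { i =>
  RecordKey(s"uuid-$i", if (i <= 2) "asia/india/chennai" else "americas/brazil/sao_paulo")
}

// Delete the first two rows, as limit(2) does in the transcript.
val remaining = applyDeletes(storedKeys, storedKeys.take(2))
```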
