This article was first published on my personal blog.

Getting started

urllib is Python's built-in HTTP request library. It contains the following four modules:

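- request: the HTTP request module

- error: the exception handling module

- parse: a utility module for splitting, parsing, and joining URLs

- robotparser: parses a site's robots.txt file to determine which pages may be crawled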

Sending requests

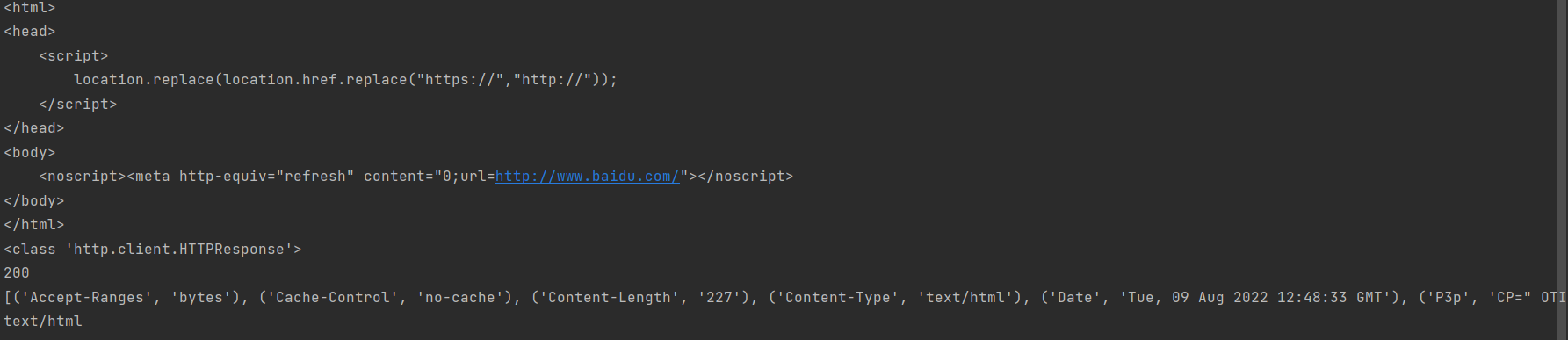

import urllib.request

response=urllib.request.urlopen('https://www.baidu.com')

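print(response.read().decode('utf-8'))  # read() returns the page content as bytes; the page is UTF-8 encoded, so decode() it, otherwise escape sequences are printed

print(type(response))  # type of the response object

print(response.status)  # response status code

print(response.getheaders())  # response headers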


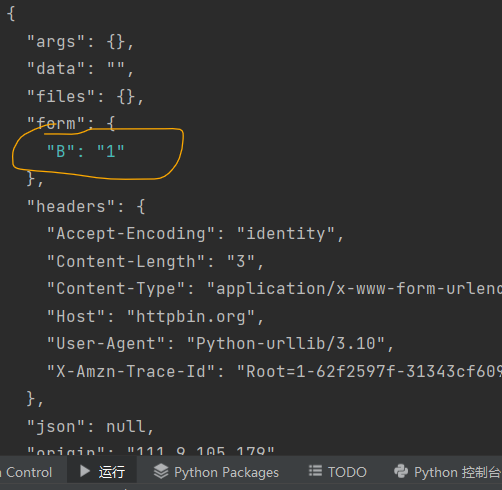

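The data parameter

The data parameter is optional. If you pass it, it must be bytes, so convert it with bytes() first. When data is present, the request is sent with the POST method.

import urllib.request

import urllib.parse

data=bytes(urllib.parse.urlencode({'B':'1'}),encoding='utf-8')

response=urllib.request.urlopen('http://httpbin.org/post',data=data)

print(response.read().decode('utf-8'))

The data we sent shows up in the form field of the response, which confirms that it was transmitted with the POST method.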
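
As a small offline sketch of what happens to data before it is sent: urlencode() turns the dictionary into a query string, bytes() encodes it, and a Request that carries data defaults to POST. Nothing goes over the network here; the Request objects are only built and inspected, and the /get URL is an assumption added for contrast.

```python
import urllib.parse
import urllib.request

# Encode a form dictionary the same way as in the example above.
form = {'B': '1'}
encoded = urllib.parse.urlencode(form)    # 'B=1'
data = bytes(encoded, encoding='utf-8')   # b'B=1'

# Build the requests without sending them.
with_data = urllib.request.Request('http://httpbin.org/post', data=data)
without_data = urllib.request.Request('http://httpbin.org/get')

print(data)                        # b'B=1'
print(with_data.get_method())      # POST
print(without_data.get_method())   # GET
```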


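The timeout parameter

The timeout parameter sets a timeout in seconds. If the server does not respond in time, an exception is raised.

import socket

import urllib.error

import urllib.request

try:

    response=urllib.request.urlopen('http://www.google.com',timeout=1)

    print(response.read().decode('utf-8'))

except urllib.error.URLError as e:

    if isinstance(e.reason,socket.timeout):

        print('TIME OUT')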
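
One detail worth sketching: a timeout during connection is typically wrapped in URLError, while a timeout while waiting for or reading the response can surface as socket.timeout directly. The helper names is_timeout and fetch below are hypothetical, not part of urllib; this is only one possible way to cover both cases.

```python
import socket
import urllib.error
import urllib.request


def is_timeout(exc):
    """Return True if exc represents a timed-out request."""
    # A timeout while reading the response can be raised directly.
    if isinstance(exc, socket.timeout):
        return True
    # A timeout while connecting is wrapped in URLError.reason.
    return (isinstance(exc, urllib.error.URLError)
            and isinstance(exc.reason, socket.timeout))


def fetch(url, timeout=1.0):
    """Hypothetical helper: return the decoded body, or None on timeout."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.read().decode('utf-8')
    except OSError as e:  # URLError and socket.timeout are both OSError subclasses
        if is_timeout(e):
            print('TIME OUT')
            return None
        raise
```

Calling fetch('http://www.google.com', timeout=1) would then print TIME OUT and return None whenever the request times out, and re-raise any other error.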

urllib.request — Extensible library for opening URLs — Python 3.10.6 documentation

The Request class lets you build requests with more parameters:

def __init__(self, url, data=None, headers={}, origin_req_host=None, unverifiable=False, method=None):

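url: the URL to request

data: must be bytes; a dictionary can be encoded first with urllib.parse.urlencode()

headers: a dictionary of request headers

origin_req_host: the host name or IP address of the party making the request

unverifiable: whether the request is unverifiable, that is, one the user had no option to approve (default False)

method: the request method, such as GET, POST, or PUT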

from urllib import request,parse

url='http://httpbin.org/post'

headers={

    'Host':'httpbin.org',

    'User-Agent':'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/104.0.5112.81 Safari/537.36 Edg/104.0.1293.47'

}

form={'B':'1'}

data=bytes(parse.urlencode(form),encoding='utf-8')

req=request.Request(url,data=data,headers=headers,method='POST')

# Another way to add request headers:

# req=request.Request(url=url,data=data,method='POST')

# req.add_header('Host','httpbin.org')

response=request.urlopen(req)

print(response.read().decode('utf-8'))
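
To see what a Request actually carries before it is sent, it can be inspected offline. The sketch below uses a made-up User-Agent value ('demo-agent/0.1') purely for illustration. Note that urllib stores header names capitalized, so the lookup key is 'User-agent'.

```python
from urllib import parse, request

url = 'http://httpbin.org/post'
data = bytes(parse.urlencode({'B': '1'}), encoding='utf-8')

# Headers passed to the constructor and via add_header() end up in the same place.
req = request.Request(url, data=data,
                      headers={'User-Agent': 'demo-agent/0.1'},  # hypothetical value
                      method='POST')
req.add_header('Host', 'httpbin.org')

print(req.full_url)                  # http://httpbin.org/post
print(req.get_method())              # POST
print(req.get_header('User-agent'))  # demo-agent/0.1
print(req.has_header('Host'))        # True
print(req.data)                      # b'B=1'
```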

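Advanced usage

Handler

Now let's look at Handlers. Each Handler class takes care of one aspect of a request.

For example:

HTTPDefaultErrorHandler: handles HTTP error responses; errors are raised as HTTPError exceptions

HTTPRedirectHandler: handles redirects

HTTPCookieProcessor: handles cookies

ProxyHandler: sets proxies; if none are passed, proxy settings are read from the environment

HTTPPasswordMgr: manages passwords; it keeps a mapping of usernames and passwords

HTTPBasicAuthHandler: handles authentication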
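
Handlers are combined into an opener with build_opener(). The following is a minimal offline sketch: the proxy address, the protected URL, and the credentials are all hypothetical, and HTTPPasswordMgrWithDefaultRealm (a subclass of HTTPPasswordMgr) is used here so that no realm has to be specified.

```python
import urllib.request

# Hypothetical values, for illustration only.
proxy_url = 'http://127.0.0.1:8080'
protected_url = 'http://example.com/'

proxy_handler = urllib.request.ProxyHandler({'http': proxy_url})

password_mgr = urllib.request.HTTPPasswordMgrWithDefaultRealm()
password_mgr.add_password(None, protected_url, 'alice', 'secret')
auth_handler = urllib.request.HTTPBasicAuthHandler(password_mgr)

# build_opener() chains the handlers; opener.open(url) would then use them.
opener = urllib.request.build_opener(proxy_handler, auth_handler)

print(password_mgr.find_user_password(None, protected_url))  # ('alice', 'secret')
print([type(h).__name__ for h in opener.handlers])
```

Nothing is requested here; calling opener.open(protected_url) is what would actually route the request through the proxy and answer a Basic authentication challenge.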

Cookies

import http.cookiejar,urllib.request

filename='cookies.txt'

cookie=http.cookiejar.MozillaCookieJar(filename)

handler=urllib.request.HTTPCookieProcessor(cookie)

opener=urllib.request.build_opener(handler)

response=opener.open('http://www.baidu.com')

cookie.save(ignore_discard=True,ignore_expires=True)

import http.cookiejar,urllib.request

cookie=http.cookiejar.CookieJar()

handler=urllib.request.HTTPCookieProcessor(cookie)

opener=urllib.request.build_opener(handler)

response=opener.open('http://www.baidu.com')

for item in cookie:

    print(item.

import http.cookiejar,urllib.request

cookie=http.cookiejar.MozillaCookieJar()  # must match the format used to save cookies.txt above

cookie.load('cookies.txt',ignore_expires=True,ignore_discard=True)

handler=urllib.request.HTTPCookieProcessor(cookie)

opener=urllib.request.build_opener(handler)

response=opener.open('http://www.baidu.com')

print(response.read().decode('utf-8'))
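
The save and load steps only work together when the same jar class is used on both sides; loading a Mozilla-format file with LWPCookieJar raises LoadError. Here is an offline round-trip sketch. The make_cookie helper, the cookie values, and the temporary file location are assumptions for the demo; in real use the cookies come from a server response.

```python
import http.cookiejar
import os
import tempfile


def make_cookie(name, value, domain):
    """Build a session cookie by hand (hypothetical helper for this offline demo)."""
    return http.cookiejar.Cookie(
        version=0, name=name, value=value,
        port=None, port_specified=False,
        domain=domain, domain_specified=False, domain_initial_dot=False,
        path='/', path_specified=True,
        secure=False, expires=None, discard=True,
        comment=None, comment_url=None, rest={},
    )


path = os.path.join(tempfile.mkdtemp(), 'cookies.txt')

# Save in Mozilla (Netscape) format ...
jar = http.cookiejar.MozillaCookieJar(path)
jar.set_cookie(make_cookie('session', 'abc123', 'example.com'))
jar.save(ignore_discard=True, ignore_expires=True)

# ... and load it back with the same class.
loaded = http.cookiejar.MozillaCookieJar()
loaded.load(path, ignore_discard=True, ignore_expires=True)

for item in loaded:
    print(item.name, '=', item.value)
```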

Exception handling

URLError

from urllib import request,error

try:

    response=request.urlopen('http://mrharsh.top/index.htm')

except error.URLError as e:

    print(e.reason)

HTTPError

from urllib import request,error

try:

    response=request.urlopen('http://mrharsh.top/index.htm')

except error.HTTPError as e:

    print(e.reason)

    print(e.code)

URLError is the parent class of HTTPError, so catch HTTPError first and then fall back to URLError:

from urllib import request,error

try:

    response=request.urlopen('http://mrharsh.top/index.htm')

except error.HTTPError as e:

    print(e.reason)

    print(e.code)

except error.URLError as e:

    print(e.reason)

else:

    print('SUCCESS!!')
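
The ordering of the except clauses can be checked without any network access. In this sketch the exceptions are constructed by hand; the describe function, the URL, and the error messages are hypothetical.

```python
import io
from urllib import error


def describe(exc):
    """Re-raise exc and report which except clause handles it."""
    try:
        raise exc
    except error.HTTPError as e:   # the subclass is tried first
        return f'HTTPError {e.code}: {e.reason}'
    except error.URLError as e:    # then the parent class
        return f'URLError: {e.reason}'


# Exceptions built by hand so the sketch runs offline.
not_found = error.HTTPError('http://example.com/missing', 404, 'Not Found', {}, io.BytesIO())
no_route = error.URLError('no route to host')

print(issubclass(error.HTTPError, error.URLError))  # True
print(describe(not_found))   # HTTPError 404: Not Found
print(describe(no_route))    # URLError: no route to host
```

If the two except clauses were swapped, the URLError clause would also catch every HTTPError and the status code would never be reported.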

Parsing URLs

urlparse

from urllib.parse import urlparse

result=urlparse('https://www.baidu.com/s?wd=%E7%89%B9%E6%9C%97%E6%99%AE%E6%88%96%E5%B0%86%E9%9D%A2%E4%B8%B4%E6%9C%80%E9%AB%9830%E5%B9%B4%E5%88%91%E6%9C%9F&tn=baiduhome_pg&usm=1&rsv_idx=2&ie=utf-8&rsv_pq=beff06110014fb0c&oq=%E9%BB%91%E5%B1%B1%E5%8F%91%E7%94%9F%E6%9E%AA%E5%87%BB%E4%BA%8B%E4%BB%B6%E8%87%B411%E6%AD%BB6%E4%BC%A4&rsv_t=203cnvqhWV3frPIJkl4SxrljZIVFGNv6ZJkChXgM4YBU2qiBwG0dd0rTfPGYEJdqPkDg&rqid=beff06110014fb0c&rsf=a7183c00b74706d91bca215ee108b466_1_15_2&rsv_dl=0_right_fyb_pchot_20811&sa=0_right_fyb_pchot_20811')

print(result)
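
urlparse() returns a ParseResult with six parts: scheme, netloc, path, params, query, and fragment. A shorter URL, chosen here only for illustration, makes each part easier to see:

```python
from urllib.parse import urlparse

result = urlparse('https://www.baidu.com/index.html;user?id=5#comment')

print(type(result).__name__)  # ParseResult
print(result.scheme)    # https
print(result.netloc)    # www.baidu.com
print(result.path)      # /index.html
print(result.params)    # user
print(result.query)     # id=5
print(result.fragment)  # comment
print(result[1])        # www.baidu.com (the result is also a tuple)
```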

urlunparse()

from urllib.parse import urlunparse

data=['https','www.baidu.com','index.html','user','a=6','comment']

print(urlunparse(data))

urlsplit

from urllib.parse import urlsplit

result=urlsplit('https://www.baidu.com/s?wd=python&pn=10&oq=python&tn=baiduhome_pg&ie=utf-8&usm=4&rsv_idx=2&rsv_pq=cb482fb3002cef17&rsv_t=6415j2DcN1b3Ov7Yj0M6vjanBErrN6Meq3YKeNQ%2BhsGLzD1xnsUAYddpkN%2FlYnFdsQ07')

for i in result:

    print(i)

urlunsplit()

from urllib.parse import urlunsplit

data=['http','www.baidu.com','index.html','a=0','c']

print(urlunsplit(data))
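
The difference from urlparse() is that urlsplit() returns five parts: the params component is not split off and stays inside path. A short round-trip sketch, using an illustrative URL:

```python
from urllib.parse import urlsplit, urlunsplit

url = 'https://www.baidu.com/index.html;user?id=5#comment'
parts = urlsplit(url)

print(len(parts))          # 5
print(parts.path)          # /index.html;user
print(urlunsplit(parts))   # https://www.baidu.com/index.html;user?id=5#comment

# urlunsplit() also accepts a plain list of five items.
rebuilt = urlunsplit(['http', 'www.baidu.com', 'index.html', 'a=0', 'c'])
print(rebuilt)             # http://www.baidu.com/index.html?a=0#c
```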

urljoin()

from urllib.parse import urljoin

print(urljoin('http://www.baidu.com','index.html'))
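
urljoin() resolves its second argument against the first, much like a browser resolves a link on a page. A few cases, with URLs picked only for illustration:

```python
from urllib.parse import urljoin

# A bare file name is appended to the base.
a = urljoin('http://www.baidu.com', 'index.html')
# A relative path replaces the last path segment of the base.
b = urljoin('http://www.baidu.com/docs/a.html', 'b.html')
# A path starting with '/' replaces the whole path.
c = urljoin('http://www.baidu.com/docs/a.html', '/b.html')
# An absolute URL wins over the base entirely.
d = urljoin('http://www.baidu.com/about.html', 'https://example.com/faq.html')

print(a)  # http://www.baidu.com/index.html
print(b)  # http://www.baidu.com/docs/b.html
print(c)  # http://www.baidu.com/b.html
print(d)  # https://example.com/faq.html
```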

urlencode

Adding parameters to a GET request

from urllib.parse import urlencode

params={

    'name':'s',

    "1":"2"

}

base_url='http://www.baidu.com?'

url=base_url+urlencode(params)

print(url)
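
urlencode() also percent-encodes values and turns spaces into '+', which matters once parameters contain non-ASCII text. A small sketch; the parameter names and the /s path are assumptions for illustration:

```python
from urllib.parse import urlencode

params = {'name': 's', 'wd': '你好 python'}
base_url = 'http://www.baidu.com/s'

# The '?' separates the path from the query string.
url = base_url + '?' + urlencode(params)
print(url)  # http://www.baidu.com/s?name=s&wd=%E4%BD%A0%E5%A5%BD+python

# With doseq=True a list value becomes repeated parameters.
tags = urlencode({'tag': ['a', 'b']}, doseq=True)
print(tags)  # tag=a&tag=b
```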

parse_qs()

Deserialization: converts a query string back into a dictionary.

from urllib.parse import urlsplit,parse_qs

result=urlsplit('https://www.baidu.com/s?wd=python&pn=10&oq=python&tn=baiduhome_pg&ie=utf-8&usm=4&rsv_idx=2&rsv_pq=cb482fb3002cef17&rsv_t=6415j2DcN1b3Ov7Yj0M6vjanBErrN6Meq3YKeNQ%2BhsGLzD1xnsUAYddpkN%2FlYnFdsQ07')

print(parse_qs(result.query))
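
Because a parameter may appear more than once, parse_qs() maps each name to a list of values. Its sibling parse_qsl() returns a list of (name, value) pairs instead. A short sketch with an illustrative query string:

```python
from urllib.parse import parse_qs, parse_qsl

query = 'wd=python&pn=10&tag=a&tag=b'

as_dict = parse_qs(query)
as_pairs = parse_qsl(query)

print(as_dict)   # {'wd': ['python'], 'pn': ['10'], 'tag': ['a', 'b']}
print(as_pairs)  # [('wd', 'python'), ('pn', '10'), ('tag', 'a'), ('tag', 'b')]
```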

quote()

Converts content to URL-encoded (percent-encoded) form.

from urllib.parse import quote,urljoin

keyword='python'

url='http://www.baidu.com/s?wd='+quote(keyword)

print(url)

unquote()

from urllib.parse import quote,urljoin,unquote

keyword='你好'

url='https://www.baidu.com/s?wd='+quote(keyword)

print(url)

print(unquote(url))
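
A few more cases show how quote() treats reserved characters. By default '/' is considered safe and left alone; the safe parameter changes that, and quote_plus() encodes spaces as '+'. The input strings are arbitrary examples.

```python
from urllib.parse import quote, quote_plus, unquote

print(quote('你好'))                   # %E4%BD%A0%E5%A5%BD
print(quote('a b/c'))                  # a%20b/c
print(quote('a b/c', safe=''))         # a%20b%2Fc
print(quote_plus('a b'))               # a+b
print(unquote('%E4%BD%A0%E5%A5%BD'))   # 你好
```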

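The Robots protocol

robotparser()

The RobotFileParser class in this module provides the following methods:

- set_url(): sets the URL of the robots.txt file

- read(): fetches robots.txt and parses it

- parse(): parses the lines of a robots.txt file

- can_fetch(): takes a User-Agent and a URL and returns whether that agent may crawl the URL

- mtime(): returns the time robots.txt was last fetched

- modified(): sets the time robots.txt was last fetched to the current time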

from urllib.robotparser import RobotFileParser

rp=RobotFileParser()

rp.set_url('https://www.bilibili.com/robots.txt')

rp.read()

print(rp.can_fetch('Yisouspider','https://www.bilibili.com/video/BV1fN4y1u7s1?spm_id_from=333.1007.tianma.2-3-6.click'))

print(rp.can_fetch('*','https://www.bilibili.com/video/BV1fN4y1u7s1?spm_id_from=333.1007.tianma.2-3-6.click'))
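
The parse() method makes it possible to try the rules without downloading anything. The robots.txt content, the BadBot agent name, and the example.com URLs below are made up for this sketch:

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, supplied as text instead of being fetched.
robots_txt = """\
User-agent: BadBot
Disallow: /

User-agent: *
Disallow: /private/
"""

rp = RobotFileParser()
rp.parse(robots_txt.splitlines())

print(rp.can_fetch('BadBot', 'https://example.com/index.html'))     # False
print(rp.can_fetch('*', 'https://example.com/index.html'))          # True
print(rp.can_fetch('*', 'https://example.com/private/data.html'))   # False
```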
