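Introduction

This is a Jsoup-based Java project that crawls images from web pages and downloads them to the local disk.

The full project is available at https://github.com/AsajuHuishi/CrawlByJsoup

The executable is getImageByPixivPainterId.exe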

Environment

- JDK 1.8

- IntelliJ IDEA 2020

- Jsoup 1.13.1

Directory structure

    ├─saveImage
    │  ├─喵咕君QAQ(KH3)
    │  └─小逝lullaby
    └─src
       └─indi
          └─huishi
             ├─service   // Crawl.java
             └─utils     // CrawlUtils.java
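The tree above shows one folder per painter under saveImage. A minimal sketch of how a downloaded image stream could be written into that layout follows. `ImageSaver`, `save`, and the `painterA` folder name are hypothetical names chosen for illustration and are not taken from the project; only the Java standard library is used.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.StandardCopyOption;

// Hypothetical helper, not part of the original project.
class ImageSaver {

    /** Copies the stream to root/painterName/fileName, creating folders as needed. */
    static Path save(Path root, String painterName, String fileName, InputStream in)
            throws IOException {
        Path dir = root.resolve(painterName);
        Files.createDirectories(dir);   // does nothing if the folder already exists
        Path target = dir.resolve(fileName);
        Files.copy(in, target, StandardCopyOption.REPLACE_EXISTING);
        return target;
    }

    public static void main(String[] args) throws IOException {
        // Placeholder bytes standing in for a downloaded image.
        byte[] fakeImage = {(byte) 0x89, 'P', 'N', 'G'};
        Path saved = save(Paths.get("saveImage"), "painterA", "demo.png",
                new ByteArrayInputStream(fakeImage));
        System.out.println("Saved to " + saved);
    }
}
```

In a real crawler the `InputStream` would come from the HTTP response for the image URL. Painter names containing characters that are invalid in file names would need sanitizing first, which this sketch does not attempt.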

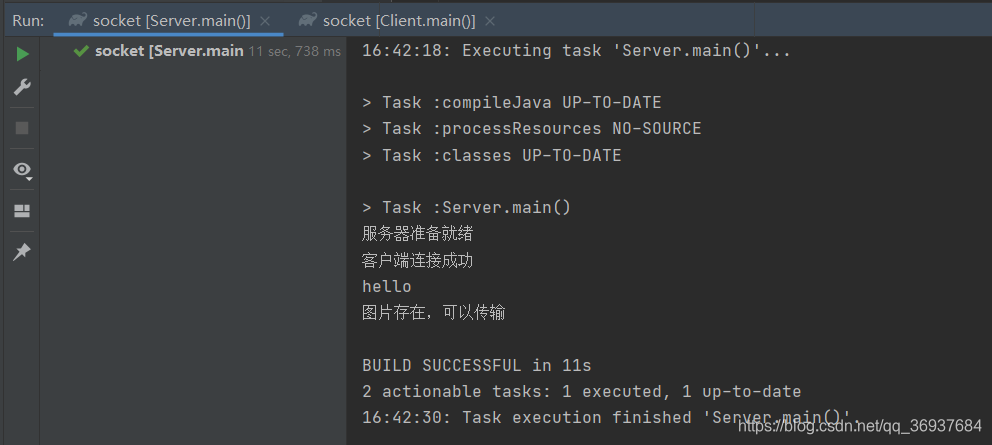

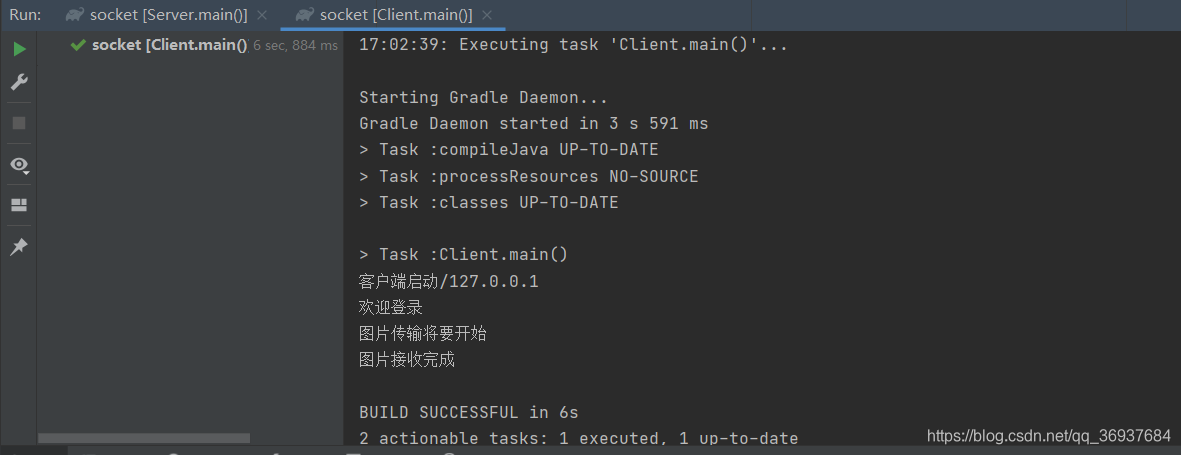

Results

![Client](https://i-blog.csdnimg.cn/blog_migrate/9ef14aa4c2b410b00ff7c293ef66cb52.png)

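The Crawl class

    package indi.huishi.service;

    import indi.huishi.utils.CrawlUtils;
    import org.jsoup.nodes.Document;

    import java.io.IOException;
    import java.util.List;
    import java.util.Map;

    public class Crawl {

        // URL scheme prefix
        public static final String Proto = "https://";

        // The rest of the class is cut off in the source; see the full project for the remaining code.
    }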
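The listing defines a `Proto` constant holding the `https://` scheme. How the project uses it is not visible in this excerpt. One plausible use, shown here purely as an assumption, is turning protocol-relative or scheme-less image links found in a page into absolute URLs before downloading. `UrlNormalizer` and `toAbsolute` are hypothetical names, and `example.com` is a placeholder host.

```java
// Hypothetical helper; this is an assumed use of the scheme constant,
// not code from the original project.
class UrlNormalizer {

    // Mirrors the value of Crawl.Proto.
    static final String PROTO = "https://";

    /** Returns an absolute URL for links such as "//host/a.png" or "host/a.png". */
    static String toAbsolute(String link) {
        String trimmed = link.trim();
        if (trimmed.startsWith("http://") || trimmed.startsWith("https://")) {
            return trimmed;                        // already absolute
        }
        if (trimmed.startsWith("//")) {
            return PROTO + trimmed.substring(2);   // protocol-relative link
        }
        return PROTO + trimmed;                    // bare host and path
    }

    public static void main(String[] args) {
        System.out.println(toAbsolute("//example.com/img/1.png"));       // https://example.com/img/1.png
        System.out.println(toAbsolute("example.com/img/2.png"));         // https://example.com/img/2.png
        System.out.println(toAbsolute("https://example.com/img/3.png")); // https://example.com/img/3.png
    }
}
```

When the page is parsed with Jsoup and a base URI is available, Jsoup's own `Element.absUrl("src")` resolves relative links and may make a helper like this unnecessary.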
