Background

1. Kettle 9.4

2. JDK 8

Required JAR files

jedis-2.9.0.jar

commons-pool2-2.4.2.jar (Kettle itself ships only commons-pool-1.5.7.jar)
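Jedis's JedisPoolConfig extends GenericObjectPoolConfig from commons-pool2, so that class has to be loadable wherever the script is compiled. As a rough way to confirm that the JAR was picked up, the following minimal sketch checks whether a class name resolves on the current classpath. ClasspathCheck and isClassAvailable are hypothetical names used only for this illustration; they are not part of Kettle or Jedis.

```java
// Sketch: check whether a class can be loaded from the current classpath.
// ClasspathCheck is a hypothetical helper, not a Kettle or Jedis API.
final class ClasspathCheck {

    private ClasspathCheck() {}

    /** Returns true if the named class can be found; static initialisers are not run. */
    static boolean isClassAvailable(String className) {
        try {
            Class.forName(className, false, ClasspathCheck.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // The class named in the compile error when commons-pool2 is missing.
        String poolConfig = "org.apache.commons.pool2.impl.GenericObjectPoolConfig";
        System.out.println(poolConfig + " available: " + isClassAvailable(poolConfig));
    }
}
```

Run with the same classpath as Kettle, this should report whether commons-pool2 is visible; the old commons-pool 1.x JAR uses a different package (org.apache.commons.pool) and does not satisfy the check.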

Without commons-pool2 on the classpath, the step fails to compile with the following error:

ERROR (version 9.4.0.0-343, build 0.0 from 2022-11-08 07.50.27 by buildguy) : org.codehaus.commons.compiler.CompileException: org/apache/commons/pool2/impl/GenericObjectPoolConfig

Complete test demo

import redis.clients.jedis.HostAndPort;
import redis.clients.jedis.JedisCluster;
import redis.clients.jedis.JedisPoolConfig;
import java.io.IOException;
import java.util.LinkedHashSet;
import java.util.Set;

public boolean processRow(StepMetaInterface smi, StepDataInterface sdi) throws KettleException {
    Object[] r = getRow();
    if (r == null) {
        setOutputDone();
        return false;
    }
    r = createOutputRow(r, data.outputRowMeta.size());

    // 1. Connect to the Redis cluster (this demo creates a new client for every row)
    JedisPoolConfig poolConfig = new JedisPoolConfig();
    poolConfig.setMaxTotal(5);
    poolConfig.setMaxIdle(1);
    poolConfig.setMaxWaitMillis(1000);
    Set<HostAndPort> nodes = new LinkedHashSet<HostAndPort>();
    nodes.add(new HostAndPort("11.11.1.40", 7001));
    nodes.add(new HostAndPort("11.11.1.41", 7001));
    nodes.add(new HostAndPort("11.11.1.42", 7001));
    JedisCluster jedis = new JedisCluster(nodes, poolConfig);

    // 2. Read the key from the input field "name"
    String name = get(Fields.In, "name").getString(r);
    boolean exists = jedis.exists(name);
    logBasic("Redis key: " + name + ", exists: " + exists);

    // 2.1 Read the previous machine meter length (vsxzl), initialising it to "0" if absent
    String lastVsxzl = "0";
    if (!exists) {
        jedis.hset(name, "vsxzl", "0");
    } else {
        if (!jedis.hexists(name, "vsxzl")) {
            jedis.hset(name, "vsxzl", "0");
        }
        lastVsxzl = jedis.hget(name, "vsxzl");
    }

    // 2.2 Read the status, initialising it to "0" if absent
    String status = "0";
    if (!jedis.hexists(name, "status")) {
        jedis.hset(name, "status", "0");
    } else {
        status = jedis.hget(name, "status");
    }

    // 2.3 Read vsxg, initialising it to "0" if absent
    String vsxg = "0";
    if (!jedis.hexists(name, "vsxg")) {
        jedis.hset(name, "vsxg", "0");
    } else {
        vsxg = jedis.hget(name, "vsxg");
    }
    logBasic("last length: " + lastVsxzl + ", last status: " + status + ", last vsxg: " + vsxg);

    // 3. Write the values to the output fields
    get(Fields.Out, "lastVsxzl").setValue(r, lastVsxzl);
    get(Fields.Out, "lastStatus").setValue(r, status);
    get(Fields.Out, "lastVsxg").setValue(r, vsxg);

    // Close the connection
    try {
        jedis.close();
    } catch (IOException e) {
        e.printStackTrace();
    }

    putRow(data.outputRowMeta, r);
    return true;
}
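The three lookups in the demo (vsxzl, status, vsxg) all follow the same "read the hash field, or write a default if it is missing" pattern. A minimal sketch of that pattern as a single helper is shown here. To keep it runnable without a cluster, an in-memory map stands in for the Redis hashes; HashDefaults, getOrInit and set are hypothetical names for this illustration, not Jedis methods.

```java
import java.util.HashMap;
import java.util.Map;

// Sketch only: an in-memory stand-in for Redis hashes (key -> field -> value).
final class HashDefaults {

    private final Map<String, Map<String, String>> store =
            new HashMap<String, Map<String, String>>();

    /** Returns the hash for a key, creating an empty one if the key does not exist yet. */
    private Map<String, String> hashFor(String key) {
        Map<String, String> hash = store.get(key);
        if (hash == null) {
            hash = new HashMap<String, String>();
            store.put(key, hash);
        }
        return hash;
    }

    /** Returns the stored field value; if the field is absent, stores and returns defaultValue. */
    String getOrInit(String key, String field, String defaultValue) {
        Map<String, String> hash = hashFor(key);
        String value = hash.get(field);
        if (value == null) {
            hash.put(field, defaultValue);
            value = defaultValue;
        }
        return value;
    }

    /** Unconditionally sets a field, like HSET. */
    void set(String key, String field, String value) {
        hashFor(key).put(field, value);
    }

    public static void main(String[] args) {
        HashDefaults redis = new HashDefaults();
        String key = "T1-1-running";
        // Same three fields as the demo, each defaulting to "0".
        String lastVsxzl = redis.getOrInit(key, "vsxzl", "0");
        String status = redis.getOrInit(key, "status", "0");
        String vsxg = redis.getOrInit(key, "vsxg", "0");
        System.out.println(lastVsxzl + " " + status + " " + vsxg);
    }
}
```

Against a real cluster, the body of getOrInit would presumably be replaced by the hexists/hset/hget calls already used in the demo; Redis also offers HSETNX, which sets a field only when it is missing and could shorten the check, though that variant is not tested here.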

<?xml version="1.0" encoding="UTF-8"?>

<transformation>

<info>

<name>redisCuster</name>

<description/>

<extended_description/>

<trans_version/>

<trans_type>Normal</trans_type>

<trans_status>0</trans_status>

<directory>/</directory>

<parameters>

</parameters>

<log>

<trans-log-table>

<connection/>

<schema/>

<table/>

<size_limit_lines/>

<interval/>

<timeout_days/>

<field>

<id>ID_BATCH</id>

<enabled>Y</enabled>

<name>ID_BATCH</name>

</field>

<field>

<id>CHANNEL_ID</id>

<enabled>Y</enabled>

<name>CHANNEL_ID</name>

</field>

<field>

<id>TRANSNAME</id>

<enabled>Y</enabled>

<name>TRANSNAME</name>

</field>

<field>

<id>STATUS</id>

<enabled>Y</enabled>

<name>STATUS</name>

</field>

<field>

<id>LINES_READ</id>

<enabled>Y</enabled>

<name>LINES_READ</name>

<subject/>

</field>

<field>

<id>LINES_WRITTEN</id>

<enabled>Y</enabled>

<name>LINES_WRITTEN</name>

<subject/>

</field>

<field>

<id>LINES_UPDATED</id>

<enabled>Y</enabled>

<name>LINES_UPDATED</name>

<subject/>

</field>

<field>

<id>LINES_INPUT</id>

<enabled>Y</enabled>

<name>LINES_INPUT</name>

<subject/>

</field>

<field>

<id>LINES_OUTPUT</id>

<enabled>Y</enabled>

<name>LINES_OUTPUT</name>

<subject/>

</field>

<field>

<id>LINES_REJECTED</id>

<enabled>Y</enabled>

<name>LINES_REJECTED</name>

<subject/>

</field>

<field>

<id>ERRORS</id>

<enabled>Y</enabled>

<name>ERRORS</name>

</field>

<field>

<id>STARTDATE</id>

<enabled>Y</enabled>

<name>STARTDATE</name>

</field>

<field>

<id>ENDDATE</id>

<enabled>Y</enabled>

<name>ENDDATE</name>

</field>

<field>

<id>LOGDATE</id>

<enabled>Y</enabled>

<name>LOGDATE</name>

</field>

<field>

<id>DEPDATE</id>

<enabled>Y</enabled>

<name>DEPDATE</name>

</field>

<field>

<id>REPLAYDATE</id>

<enabled>Y</enabled>

<name>REPLAYDATE</name>

</field>

<field>

<id>LOG_FIELD</id>

<enabled>Y</enabled>

<name>LOG_FIELD</name>

</field>

<field>

<id>EXECUTING_SERVER</id>

<enabled>N</enabled>

<name>EXECUTING_SERVER</name>

</field>

<field>

<id>EXECUTING_USER</id>

<enabled>N</enabled>

<name>EXECUTING_USER</name>

</field>

<field>

<id>CLIENT</id>

<enabled>N</enabled>

<name>CLIENT</name>

</field>

</trans-log-table>

<perf-log-table>

<connection/>

<schema/>

<table/>

<interval/>

<timeout_days/>

<field>

<id>ID_BATCH</id>

<enabled>Y</enabled>

<name>ID_BATCH</name>

</field>

<field>

<id>SEQ_NR</id>

<enabled>Y</enabled>

<name>SEQ_NR</name>

</field>

<field>

<id>LOGDATE</id>

<enabled>Y</enabled>

<name>LOGDATE</name>

</field>

<field>

<id>TRANSNAME</id>

<enabled>Y</enabled>

<name>TRANSNAME</name>

</field>

<field>

<id>STEPNAME</id>

<enabled>Y</enabled>

<name>STEPNAME</name>

</field>

<field>

<id>STEP_COPY</id>

<enabled>Y</enabled>

<name>STEP_COPY</name>

</field>

<field>

<id>LINES_READ</id>

<enabled>Y</enabled>

<name>LINES_READ</name>

</field>

<field>

<id>LINES_WRITTEN</id>

<enabled>Y</enabled>

<name>LINES_WRITTEN</name>

</field>

<field>

<id>LINES_UPDATED</id>

<enabled>Y</enabled>

<name>LINES_UPDATED</name>

</field>

<field>

<id>LINES_INPUT</id>

<enabled>Y</enabled>

<name>LINES_INPUT</name>

</field>

<field>

<id>LINES_OUTPUT</id>

<enabled>Y</enabled>

<name>LINES_OUTPUT</name>

</field>

<field>

<id>LINES_REJECTED</id>

<enabled>Y</enabled>

<name>LINES_REJECTED</name>

</field>

<field>

<id>ERRORS</id>

<enabled>Y</enabled>

<name>ERRORS</name>

</field>

<field>

<id>INPUT_BUFFER_ROWS</id>

<enabled>Y</enabled>

<name>INPUT_BUFFER_ROWS</name>

</field>

<field>

<id>OUTPUT_BUFFER_ROWS</id>

<enabled>Y</enabled>

<name>OUTPUT_BUFFER_ROWS</name>

</field>

</perf-log-table>

<channel-log-table>

<connection/>

<schema/>

<table/>

<timeout_days/>

<field>

<id>ID_BATCH</id>

<enabled>Y</enabled>

<name>ID_BATCH</name>

</field>

<field>

<id>CHANNEL_ID</id>

<enabled>Y</enabled>

<name>CHANNEL_ID</name>

</field>

<field>

<id>LOG_DATE</id>

<enabled>Y</enabled>

<name>LOG_DATE</name>

</field>

<field>

<id>LOGGING_OBJECT_TYPE</id>

<enabled>Y</enabled>

<name>LOGGING_OBJECT_TYPE</name>

</field>

<field>

<id>OBJECT_NAME</id>

<enabled>Y</enabled>

<name>OBJECT_NAME</name>

</field>

<field>

<id>OBJECT_COPY</id>

<enabled>Y</enabled>

<name>OBJECT_COPY</name>

</field>

<field>

<id>REPOSITORY_DIRECTORY</id>

<enabled>Y</enabled>

<name>REPOSITORY_DIRECTORY</name>

</field>

<field>

<id>FILENAME</id>

<enabled>Y</enabled>

<name>FILENAME</name>

</field>

<field>

<id>OBJECT_ID</id>

<enabled>Y</enabled>

<name>OBJECT_ID</name>

</field>

<field>

<id>OBJECT_REVISION</id>

<enabled>Y</enabled>

<name>OBJECT_REVISION</name>

</field>

<field>

<id>PARENT_CHANNEL_ID</id>

<enabled>Y</enabled>

<name>PARENT_CHANNEL_ID</name>

</field>

<field>

<id>ROOT_CHANNEL_ID</id>

<enabled>Y</enabled>

<name>ROOT_CHANNEL_ID</name>

</field>

</channel-log-table>

<step-log-table>

<connection/>

<schema/>

<table/>

<timeout_days/>

<field>

<id>ID_BATCH</id>

<enabled>Y</enabled>

<name>ID_BATCH</name>

</field>

<field>

<id>CHANNEL_ID</id>

<enabled>Y</enabled>

<name>CHANNEL_ID</name>

</field>

<field>

<id>LOG_DATE</id>

<enabled>Y</enabled>

<name>LOG_DATE</name>

</field>

<field>

<id>TRANSNAME</id>

<enabled>Y</enabled>

<name>TRANSNAME</name>

</field>

<field>

<id>STEPNAME</id>

<enabled>Y</enabled>

<name>STEPNAME</name>

</field>

<field>

<id>STEP_COPY</id>

<enabled>Y</enabled>

<name>STEP_COPY</name>

</field>

<field>

<id>LINES_READ</id>

<enabled>Y</enabled>

<name>LINES_READ</name>

</field>

<field>

<id>LINES_WRITTEN</id>

<enabled>Y</enabled>

<name>LINES_WRITTEN</name>

</field>

<field>

<id>LINES_UPDATED</id>

<enabled>Y</enabled>

<name>LINES_UPDATED</name>

</field>

<field>

<id>LINES_INPUT</id>

<enabled>Y</enabled>

<name>LINES_INPUT</name>

</field>

<field>

<id>LINES_OUTPUT</id>

<enabled>Y</enabled>

<name>LINES_OUTPUT</name>

</field>

<field>

<id>LINES_REJECTED</id>

<enabled>Y</enabled>

<name>LINES_REJECTED</name>

</field>

<field>

<id>ERRORS</id>

<enabled>Y</enabled>

<name>ERRORS</name>

</field>

<field>

<id>LOG_FIELD</id>

<enabled>N</enabled>

<name>LOG_FIELD</name>

</field>

</step-log-table>

<metrics-log-table>

<connection/>

<schema/>

<table/>

<timeout_days/>

<field>

<id>ID_BATCH</id>

<enabled>Y</enabled>

<name>ID_BATCH</name>

</field>

<field>

<id>CHANNEL_ID</id>

<enabled>Y</enabled>

<name>CHANNEL_ID</name>

</field>

<field>

<id>LOG_DATE</id>

<enabled>Y</enabled>

<name>LOG_DATE</name>

</field>

<field>

<id>METRICS_DATE</id>

<enabled>Y</enabled>

<name>METRICS_DATE</name>

</field>

<field>

<id>METRICS_CODE</id>

<enabled>Y</enabled>

<name>METRICS_CODE</name>

</field>

<field>

<id>METRICS_DESCRIPTION</id>

<enabled>Y</enabled>

<name>METRICS_DESCRIPTION</name>

</field>

<field>

<id>METRICS_SUBJECT</id>

<enabled>Y</enabled>

<name>METRICS_SUBJECT</name>

</field>

<field>

<id>METRICS_TYPE</id>

<enabled>Y</enabled>

<name>METRICS_TYPE</name>

</field>

<field>

<id>METRICS_VALUE</id>

<enabled>Y</enabled>

<name>METRICS_VALUE</name>

</field>

</metrics-log-table>

</log>

<maxdate>

<connection/>

<table/>

<field/>

<offset>0.0</offset>

<maxdiff>0.0</maxdiff>

</maxdate>

<size_rowset>10000</size_rowset>

<sleep_time_empty>50</sleep_time_empty>

<sleep_time_full>50</sleep_time_full>

<unique_connections>N</unique_connections>

<feedback_shown>Y</feedback_shown>

<feedback_size>50000</feedback_size>

<using_thread_priorities>Y</using_thread_priorities>

<shared_objects_file/>

<capture_step_performance>N</capture_step_performance>

<step_performance_capturing_delay>1000</step_performance_capturing_delay>

<step_performance_capturing_size_limit>100</step_performance_capturing_size_limit>

<dependencies>

</dependencies>

<partitionschemas>

</partitionschemas>

<slaveservers>

</slaveservers>

<clusterschemas>

</clusterschemas>

<created_user>-</created_user>

<created_date>2024/06/03 08:48:46.329</created_date>

<modified_user>-</modified_user>

<modified_date>2024/06/03 08:48:46.329</modified_date>

<key_for_session_key>H4sIAAAAAAAAAAMAAAAAAAAAAAA=</key_for_session_key>

<is_key_private>N</is_key_private>

</info>

<notepads>

</notepads>

<order>

<hop>

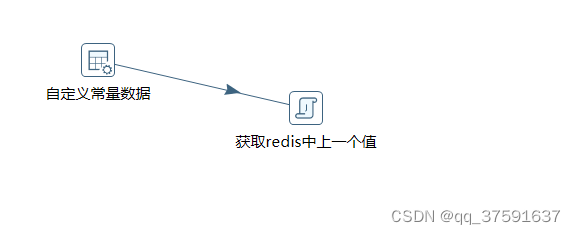

<from>自定义常量数据</from>

<to>获取redis中上一个值</to>

<enabled>Y</enabled>

</hop>

</order>

<step>

<name>自定义常量数据</name>

<type>DataGrid</type>

<description/>

<distribute>Y</distribute>

<custom_distribution/>

<copies>1</copies>

<partitioning>

<method>none</method>

<schema_name/>

</partitioning>

<fields>

<field>

<name>name</name>

<type>String</type>

<format/>

<currency/>

<decimal/>

<group/>

<length>-1</length>

<precision>-1</precision>

<set_empty_string>N</set_empty_string>

<field_null_if/>

</field>

</fields>

<data>

<line>

<item>T1-1-running</item>

</line>

</data>

<attributes/>

<cluster_schema/>

<remotesteps>

<input>

</input>

<output>

</output>

</remotesteps>

<GUI>

<xloc>256</xloc>

<yloc>112</yloc>

<draw>Y</draw>

</GUI>

</step>

<step>

<name>获取redis中上一个值</name>

<type>UserDefinedJavaClass</type>

<description/>

<distribute>Y</distribute>

<custom_distribution/>

<copies>1</copies>

<partitioning>

<method>none</method>

<schema_name/>

</partitioning>

<definitions>

<definition>

<class_type>TRANSFORM_CLASS</class_type>

<class_name>Processor</class_name>

<class_source>import redis.clients.jedis.HostAndPort;
import redis.clients.jedis.JedisCluster;
import redis.clients.jedis.JedisPoolConfig;
import java.io.IOException;
import java.util.LinkedHashSet;
import java.util.Set;

public boolean processRow(StepMetaInterface smi, StepDataInterface sdi) throws KettleException {
    Object[] r = getRow();
    if (r == null) {
        setOutputDone();
        return false;
    }
    r = createOutputRow(r, data.outputRowMeta.size());

    // 1. Connect to the Redis cluster (this demo creates a new client for every row)
    JedisPoolConfig poolConfig = new JedisPoolConfig();
    poolConfig.setMaxTotal(5);
    poolConfig.setMaxIdle(1);
    poolConfig.setMaxWaitMillis(1000);
    Set&lt;HostAndPort&gt; nodes = new LinkedHashSet&lt;HostAndPort&gt;();
    nodes.add(new HostAndPort("11.11.1.40", 7001));
    nodes.add(new HostAndPort("11.11.1.41", 7001));
    nodes.add(new HostAndPort("11.11.1.42", 7001));
    JedisCluster jedis = new JedisCluster(nodes, poolConfig);

    // 2. Read the key from the input field "name"
    String name = get(Fields.In, "name").getString(r);
    boolean exists = jedis.exists(name);
    logBasic("Redis key: " + name + ", exists: " + exists);

    // 2.1 Read the previous machine meter length (vsxzl), initialising it to "0" if absent
    String lastVsxzl = "0";
    if (!exists) {
        jedis.hset(name, "vsxzl", "0");
    } else {
        if (!jedis.hexists(name, "vsxzl")) {
            jedis.hset(name, "vsxzl", "0");
        }
        lastVsxzl = jedis.hget(name, "vsxzl");
    }

    // 2.2 Read the status, initialising it to "0" if absent
    String status = "0";
    if (!jedis.hexists(name, "status")) {
        jedis.hset(name, "status", "0");
    } else {
        status = jedis.hget(name, "status");
    }

    // 2.3 Read vsxg, initialising it to "0" if absent
    String vsxg = "0";
    if (!jedis.hexists(name, "vsxg")) {
        jedis.hset(name, "vsxg", "0");
    } else {
        vsxg = jedis.hget(name, "vsxg");
    }
    logBasic("last length: " + lastVsxzl + ", last status: " + status + ", last vsxg: " + vsxg);

    // 3. Write the values to the output fields
    get(Fields.Out, "lastVsxzl").setValue(r, lastVsxzl);
    get(Fields.Out, "lastStatus").setValue(r, status);
    get(Fields.Out, "lastVsxg").setValue(r, vsxg);

    // Close the connection
    try {
        jedis.close();
    } catch (IOException e) {
        e.printStackTrace();
    }

    putRow(data.outputRowMeta, r);
    return true;
}

</class_source>

</definition>

</definitions>

<fields>

<field>

<field_name>lastVsxzl</field_name>

<field_type>String</field_type>

<field_length>-1</field_length>

<field_precision>-1</field_precision>

</field>

<field>

<field_name>lastStatus</field_name>

<field_type>String</field_type>

<field_length>-1</field_length>

<field_precision>-1</field_precision>

</field>

<field>

<field_name>lastVsxg</field_name>

<field_type>String</field_type>

<field_length>-1</field_length>

<field_precision>-1</field_precision>

</field>

</fields>

<clear_result_fields>N</clear_result_fields>

<info_steps/>

<target_steps/>

<usage_parameters/>

<attributes/>

<cluster_schema/>

<remotesteps>

<input>

</input>

<output>

</output>

</remotesteps>

<GUI>

<xloc>464</xloc>

<yloc>160</yloc>

<draw>Y</draw>

</GUI>

</step>

<step_error_handling>

</step_error_handling>

<slave-step-copy-partition-distribution>

</slave-step-copy-partition-distribution>

<slave_transformation>N</slave_transformation>

<attributes/>

</transformation>
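One thing to keep in mind with this transformation: the User Defined Java Class step builds and closes a JedisCluster inside processRow, so a new client is created for every incoming row. That is fine for the single test row used here, but for larger inputs it is usually cheaper to create the client once and reuse it. The following is a minimal plain-Java sketch of the create-once idea; Lazy and Factory are hypothetical names, and the string "client" merely stands in for the real JedisCluster. Adapting it to the step (for example a class-level field that is initialised on the first row and closed when getRow() returns null) is an assumption that has not been tested in Kettle.

```java
// Sketch: create an expensive resource once and hand out the same instance afterwards.
final class Lazy<T> {

    /** Hypothetical factory callback; written as an interface so no lambdas are needed. */
    interface Factory<T> {
        T create();
    }

    private final Factory<T> factory;
    private T instance;
    private boolean created;

    Lazy(Factory<T> factory) {
        this.factory = factory;
    }

    /** Creates the instance on the first call and returns the cached one on later calls. */
    synchronized T get() {
        if (!created) {
            instance = factory.create();
            created = true;
        }
        return instance;
    }

    public static void main(String[] args) {
        final int[] creations = {0};
        Lazy<String> client = new Lazy<String>(new Factory<String>() {
            public String create() {
                creations[0]++;          // count how often the "connection" is built
                return "client";         // stand-in for new JedisCluster(nodes, poolConfig)
            }
        });
        // Simulate three rows: each row asks for the client, but it is built only once.
        for (int row = 0; row < 3; row++) {
            client.get();
        }
        System.out.println("creations: " + creations[0]);
    }
}
```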
