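System Installation

Installation guides for different Linux distributions

VirtualBox

Software that may conflict with VirtualBox includes 红蜘蛛 (Red Spider), 360, 净网大师 and the Perfect World (完美) CSGO platform. Uninstall them before installing VirtualBox.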

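Installing CentOS 7

Install the latest versions of both VirtualBox and Vagrant, otherwise installing CentOS 7 fails with errors. Do not use the course versions listed below.

Installation steps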

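- Course version: download VirtualBox-6.0.12 from https://www.virtualbox.org/wiki/Download_Old_Builds and install it.
- Course version: download vagrant-2.2.5 from https://developer.hashicorp.com/vagrant/install and install it.

Install the latest VirtualBox and Vagrant instead, otherwise CentOS will not install. I installed VirtualBox 7.0.41 and Vagrant 2.4.1.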

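- Press Win+R and enter `cmd` to open a Command Prompt, then run `vagrant init centos/7` to initialize a CentOS box. This creates a file named Vagrantfile in the current directory, which is C:\Users\Earl by default. Open the Command Prompt in D:\centos7vbox instead and run the initialization and installation there, so the project is created on the D: drive rather than on C:. With the latest VirtualBox and Vagrant, CentOS 7 installed without problems. The initial user is vagrant, and the initial root password is also vagrant.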

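- Run `vagrant up` to install and start CentOS 7. The initial root password is vagrant.
  - For everyday use, avoid starting the VM with `vagrant up`. Start it from VirtualBox instead, and choose headless start if you don't need the GUI or only connect remotely. `vagrant up` may overwrite network settings you have changed. This is why Docker kept failing to pull images after the network service or the system was restarted: after changing the DNS server in /etc/resolv.conf (`vim /etc/resolv.conf`), restarting the network or the VM overwrote the file again, so it had to be edited before every image pull. Use `vagrant up` only to create the VM quickly.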


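- Run `vagrant ssh` in the CMD window to connect to the VM. After logging in, `whoami` shows that the current user is vagrant.
- In VirtualBox, right-click the VM to shut it down or start it. You can also start it from a cmd window with `vagrant up`.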

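- By default VirtualBox gives the VM NAT networking with port forwarding, similar to Docker's port mapping. However, every piece of software you install then needs its own forwarding rule in VirtualBox, which is inconvenient. Give the VM a fixed IP address instead: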

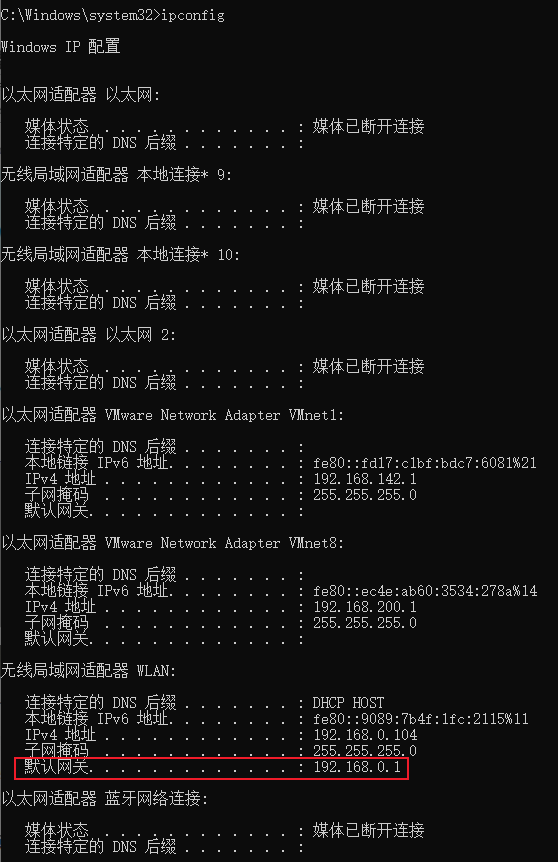

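  - In D:\centos7vbox\Vagrantfile, uncomment `config.vm.network "private_network", ip: "192.168.33.10"` and change the IP to another address in the same subnet as the Windows adapter "VirtualBox Host-only Ethernet Adapter". I used 192.168.56.10. The adapter may appear as "Ethernet #" instead, so if you can't find it by name, run `ipconfig /all` and look for "VirtualBox Host-only Ethernet Adapter" in the Description field. On my machine it is Ethernet 3, with IPv4 address 192.168.56.1.
  - After the change, run `vagrant reload` in a cmd window in D:\centos7vbox to restart the VM.
  - After the restart, run `ip addr` in the VM and check that eth1 has the configured address 192.168.56.10. Then ping between the host and the VM in both directions; on Windows, use the IP of the wireless LAN (WLAN) adapter. Both pings should succeed.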
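The address rule above is to pick another IP in the same subnet as the host-only adapter's 192.168.56.1. It can be checked with a small bash sketch. The sketch assumes the /24 netmask 255.255.255.0 that Vagrant writes into ifcfg-eth1. The function names `ip_to_int` and `same_subnet` are made up for this example.

```bash
#!/usr/bin/env bash
# ip_to_int: turn a dotted-quad IPv4 address into a 32-bit integer.
# Octets are assumed to have no leading zeros (bash would read "08" as octal).
ip_to_int() {
  local IFS=.
  local -a o
  read -r -a o <<< "$1"
  echo $(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))
}

# same_subnet IP1 IP2 [NETMASK]
# Exit status 0 if both addresses are in the same network; the netmask defaults to 255.255.255.0.
same_subnet() {
  local mask a b
  mask=$(ip_to_int "${3:-255.255.255.0}")
  a=$(ip_to_int "$1")
  b=$(ip_to_int "$2")
  (( (a & mask) == (b & mask) ))
}
```

For example, `same_subnet 192.168.56.10 192.168.56.1 && echo ok` prints ok. The Vagrantfile's commented default, 192.168.33.10, fails the check against 192.168.56.1.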


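- Enable remote password login on CentOS. By default, a CentOS VM installed through VirtualBox only allows key-based (passwordless) SSH login, so you can only connect with `vagrant ssh` from a CMD window. For convenience and for file uploads, allow account/password login so that remote tools such as Xshell or Xftp can connect to CentOS:
  - Open the file with `vi /etc/ssh/sshd_config`.
  - Search with `/PasswordAuthentication no` and change `PasswordAuthentication no` to `PasswordAuthentication yes`.
  - Restart the SSH service with `service sshd restart`. SSH tools can now log in remotely. Note that the root password for remote login is also vagrant.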
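If you prefer not to edit the file interactively, sed can make the same change. This is a sketch that assumes GNU sed and the stock CentOS 7 sshd_config, where the active line reads exactly `PasswordAuthentication no`. The function name `enable_password_auth` is hypothetical.

```bash
#!/usr/bin/env bash
# enable_password_auth FILE
# Turns an active "PasswordAuthentication no" line into "PasswordAuthentication yes".
# Commented-out lines (starting with #) are left alone. Requires GNU sed for -i.
enable_password_auth() {
  local file=$1
  cp -p "$file" "${file}.bak"   # keep a backup next to the original
  sed -i -E 's/^[[:space:]]*PasswordAuthentication[[:space:]]+no[[:space:]]*$/PasswordAuthentication yes/' "$file"
}
```

Usage as root: `enable_password_auth /etc/ssh/sshd_config && service sshd restart`.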


VirtualBox安装的CentOS7可能无法ping通外网【也可能能ping通,因为我这儿配置没改过,但是能ping通外网,而且yum之类的命令都能正常使用】,即

ping baidu.com失败,解决办法如下-

使用命令

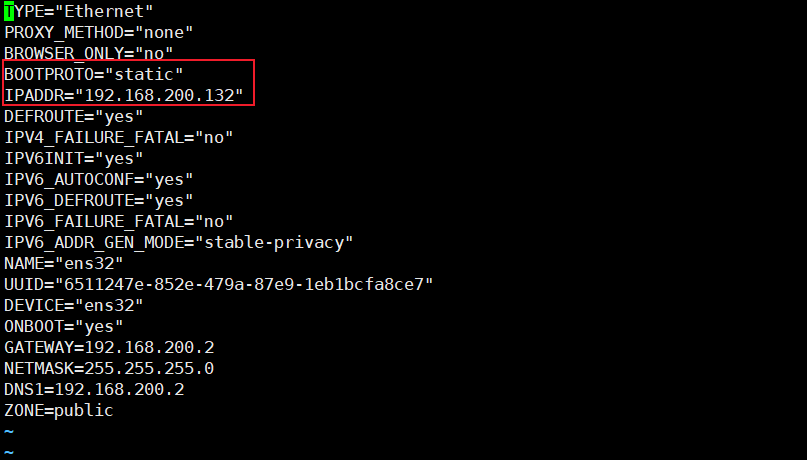

ip addr找到当前ip对应的网卡如eth1,对应的配置文件在/etc/sysconfig/network-scripts/ifcfg-eth1 -

  - Edit the adapter configuration with `vi /etc/sysconfig/network-scripts/ifcfg-eth1`. The default configuration generated by Vagrant is:

        #VAGRANT-BEGIN
        # The contents below are automatically generated by Vagrant. Do not modify.
        NM_CONTROLLED=yes
        BOOTPROTO=none
        ONBOOT=yes
        IPADDR=192.168.56.10
        NETMASK=255.255.255.0
        DEVICE=eth1
        PEERDNS=no
        #VAGRANT-END

  - Add the gateway address `GATEWAY=192.168.56.1`. The gateway is usually the IP with its last octet set to 1.
  - Configure DNS. You can set two DNS servers: `DNS1=114.114.114.114` and `DNS2=8.8.8.8`.

    The modified configuration:

        #VAGRANT-BEGIN
        # The contents below are automatically generated by Vagrant. Do not modify.
        NM_CONTROLLED=yes
        BOOTPROTO=none
        ONBOOT=yes
        IPADDR=192.168.56.10
        NETMASK=255.255.255.0
        GATEWAY=192.168.56.1
        DNS1=114.114.114.114
        DNS2=8.8.8.8
        DEVICE=eth1
        PEERDNS=no
        #VAGRANT-END

  - Restart the network with `service network restart`.
  - Run `ping baidu.com` to check that Baidu can now be reached.
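The GATEWAY/DNS edits can also be scripted. The sketch below defines a hypothetical helper `set_ifcfg_key` and assumes GNU sed plus the Vagrant-generated file shown above. It overwrites a key that already exists; otherwise it inserts the key just before `#VAGRANT-END`, or appends it if there is no such marker. Keep in mind that Vagrant marks this block "Do not modify" and may regenerate it, which fits the overwriting problem described for `vagrant up`.

```bash
#!/usr/bin/env bash
# set_ifcfg_key FILE KEY VALUE
# Set KEY=VALUE in an ifcfg-style file, without creating duplicate keys.
set_ifcfg_key() {
  local file=$1 key=$2 value=$3
  if grep -q "^${key}=" "$file"; then
    # Key already present: overwrite its value in place.
    sed -i "s|^${key}=.*|${key}=${value}|" "$file"
  elif grep -q '^#VAGRANT-END' "$file"; then
    # Insert just before Vagrant's end marker (GNU sed one-line "i" form).
    sed -i "/^#VAGRANT-END/i ${key}=${value}" "$file"
  else
    echo "${key}=${value}" >> "$file"
  fi
}
```

Usage as root: run `set_ifcfg_key /etc/sysconfig/network-scripts/ifcfg-eth1 GATEWAY 192.168.56.1`, repeat it for DNS1 and DNS2, then run `service network restart`. The order of the keys inside the file does not matter.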


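- Switch the yum repositories. By default, a CentOS VM installed through VirtualBox still uses yum repositories located abroad. Switching to a domestic mirror is faster:
  - Back up the original repo file: `mv /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.backup`
  - Download the new repo file: `curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.163.com/.help/CentOS7-Base-163.repo`
  - Rebuild the cache: `yum makecache`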

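Software Installation

Single-node and cluster installation guides for common software on Linux. Applications that must be reachable remotely need their ports allowed in the security group (i.e. opened), or the firewall disabled on an internal network.

Installing the JDK

Installation steps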

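- Take the jdk1.8.0_261 package (jdk-8u261-linux-x64.tar.gz) from the dev/linux folder on the Windows machine.
- Create the directory with `mkdir /opt/jdk` and upload the archive to /opt/jdk with Xftp 6. (In Linux setups, such archives are called "media".)
- Enter the directory with `cd /opt/jdk` and extract the archive there with `tar -zxvf jdk-8u261-linux-x64.tar.gz`.
- Create the install directory with `mkdir /usr/local/java`, then move the extracted directory into it with `mv /opt/jdk/jdk1.8.0_261 /usr/local/java`.
- Configure the Java environment variables with `vim /etc/profile`:
  - Press Shift+G to jump to the end of the file, then press o to open a new line.
  - Add the following at the top level of the file. Remember to set CLASSPATH and include the current directory (`.`) in it:

        export JAVA_HOME=/usr/local/java/jdk1.8.0_261
        export JRE_HOME=$JAVA_HOME/jre
        export CLASSPATH=.:$JAVA_HOME/lib/tools.jar:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib
        export PATH=$JAVA_HOME/bin:$PATH:$JRE_HOME/bin

- Run `source /etc/profile` to apply the new environment variables.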
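The /etc/profile edit can be scripted instead of done in vim, which makes it repeatable when setting up several VMs. This is a minimal sketch that assumes the JDK path used above. `add_java_env` is a hypothetical helper that does nothing if a JAVA_HOME export already exists, so running it twice doesn't duplicate the block.

```bash
#!/usr/bin/env bash
# add_java_env PROFILE JAVA_HOME_DIR
# Append the four Java exports shown above to PROFILE, once.
add_java_env() {
  local profile=$1 java_home=$2
  # Already configured: leave the file untouched.
  grep -q '^export JAVA_HOME=' "$profile" && return 0
  # Single quotes keep $JAVA_HOME, $PATH, ... literal so they expand when the profile is sourced.
  printf '%s\n' \
    "export JAVA_HOME=${java_home}" \
    'export JRE_HOME=$JAVA_HOME/jre' \
    'export CLASSPATH=.:$JAVA_HOME/lib/tools.jar:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib' \
    'export PATH=$JAVA_HOME/bin:$PATH:$JRE_HOME/bin' >> "$profile"
}
```

Usage as root: `add_java_env /etc/profile /usr/local/java/jdk1.8.0_261 && source /etc/profile`.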

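Verifying the installation

- Write a Java file in any directory, then compile and run it there with `javac` and `java`. If the console prints the expected text, the installation succeeded.
- `java -version` printing the version also confirms it.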

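Installing Vim

Installation steps

- Vim needs the following four packages. `rpm -qa | grep vim` shows which are installed and which are missing:

      [root@localhost /]# rpm -qa |grep vim
      vim-enhanced-7.4.629-8.el7_9.x86_64
      vim-common-7.4.629-8.el7_9.x86_64
      vim-minimal-7.4.629-8.el7_9.x86_64
      vim-filesystem-7.4.629-8.el7_9.x86_64

- Install each missing package, e.g. `yum -y install vim-enhanced`, until all four are listed.
- If none of them is installed, `yum -y install "vim*"` installs them all. Quote the pattern so the shell does not expand it.

Verifying the installation

- Open a file with vim. If it opens normally, the installation succeeded.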
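Working out which of the four packages are missing can be automated. This sketch reads `rpm -qa` output and prints the missing package names, ready to pass to yum. `missing_vim_pkgs` is a hypothetical helper. It assumes the usual NAME-VERSION-RELEASE.ARCH format of `rpm -qa`.

```bash
#!/usr/bin/env bash
# missing_vim_pkgs: read `rpm -qa` output on stdin and print the vim packages
# from the list above that are not installed, one per line.
missing_vim_pkgs() {
  local installed pkg
  installed=$(cat)
  for pkg in vim-enhanced vim-common vim-minimal vim-filesystem; do
    # Require "-<digit>" right after the name so vim-common doesn't match vim-common-foo.
    grep -q "^${pkg}-[0-9]" <<< "$installed" || echo "$pkg"
  done
}
```

Usage as root: `missing=$(rpm -qa | missing_vim_pkgs); [ -n "$missing" ] && yum -y install $missing`. `$missing` is left unquoted on purpose so that each name becomes a separate argument.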

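Installing Canal

Canal needs a JDK on Linux.

Note: canal reads data from the database through the MySQL port 3306, so the firewall must allow port 3306. Canal clients connect to the canal server itself on its own port, 11111 by default.

Installation steps

- Download from GitHub: https://github.com/alibaba/canal/releases . This project uses version 1.1.4. Entering the URL of canal.deployer-1.1.4.tar.gz in a browser downloads it directly.
- Upload the archive to Linux. You can upload it directly to /usr/local/canal. Alternatively, upload it to /opt/canal and copy it over with `cp canal.deployer-1.1.4.tar.gz /usr/local/canal`.
- Extract it with `tar zxvf canal.deployer-1.1.4.tar.gz`. It is ready to use right after extraction, so you can simply extract it inside /usr/local/canal.
- Edit the canal configuration file with `vi conf/example/instance.properties` and fill in your own database information:

      # Change to your own database: the IP (verified) and port of the MySQL on Linux; use 127.0.0.1 if it runs on the same machine
      canal.instance.master.address=192.168.44.132:3306
      # Change to your own database user name and password
      canal.instance.dbUsername=canal
      canal.instance.dbPassword=canal
      # Change to the matching rules for the tables to sync, e.g. sync only specific tables; rules are Perl regular expressions
      #canal.instance.filter.regex=.*\\..*
      canal.instance.filter.regex=guli_ucenter.ucenter_member
      # Separate multiple regexes with commas (,); escape characters need a double backslash (\\)
      # Common examples:
      # 1. All tables in all databases: .* or .*\\..*
      # 2. All tables in the canal database: canal\\..*
      # 3. Tables in the canal database whose names start with canal: canal\\.canal.*
      # 4. One specific table in the canal database: canal.test1
      # 5. Several rules combined: canal\\..*,mysql.test1,mysql.test2 (comma-separated)
      # Note: this filter only applies to row-format data (mixed/statement formats don't parse the SQL, so tableName cannot be extracted reliably for filtering)

- From the canal directory, start canal with `sh bin/startup.sh`. The startup script is in canal's bin directory.
  - Stop it with the stop.sh script: `sh bin/stop.sh`.

Verifying the installation

- After starting canal, check its process with `ps -ef | grep canal`.
- Use a local database tool such as Navicat to connect remotely to the database on the Linux host, with the canal user name and password configured there. If the connection succeeds, the setup works.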

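Installing Maven

Historical Maven 3 versions are on the Maven download page (Maven – Download Apache Maven): under Other Releases, click Maven 3 archives.

Installation steps

- Upload the archive to /opt/maven, create /usr/local/maven, and copy the archive there with `cp apache-maven-3.6.3-bin.tar.gz /usr/local/maven/apache-maven-3.6.3-bin.tar.gz`. Make sure you take the binary archive, apache-maven-3.6.3-bin.tar.gz; apache-maven-3.6.3-src.tar.gz is the source code, not the Maven software.
- In /usr/local/maven, extract the archive with `tar -zxvf apache-maven-3.6.3-bin.tar.gz`. This creates the directory apache-maven-3.6.3.
- Edit the environment variables with `vim /etc/profile`, then run `source /etc/profile` to apply them immediately. MAVEN_HOME must point to the extracted directory, because that is where bin/mvn lives:

      export MAVEN_HOME=/usr/local/maven/apache-maven-3.6.3
      export PATH=$PATH:$MAVEN_HOME/bin

Verifying the installation

`mvn -v` prints the Maven version.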
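Because `tar -zxvf` creates an apache-maven-3.6.3 subdirectory, MAVEN_HOME has to include it. The sketch below derives the path from the archive name. It assumes the standard apache-maven-<version>-bin.tar.gz naming; you can confirm the top-level directory with `tar -tzf apache-maven-3.6.3-bin.tar.gz | head -n 1`. `maven_home_for` is a hypothetical name.

```bash
#!/usr/bin/env bash
# maven_home_for TARBALL [INSTALL_DIR]
# Print the directory that extracting a Maven binary tarball creates under INSTALL_DIR
# (default /usr/local/maven). Assumes the name follows apache-maven-<version>-bin.tar.gz.
maven_home_for() {
  local name
  name=$(basename "$1")
  echo "${2:-/usr/local/maven}/${name%-bin.tar.gz}"
}
```

Usage as root: `printf 'export MAVEN_HOME=%s\nexport PATH=$PATH:$MAVEN_HOME/bin\n' "$(maven_home_for apache-maven-3.6.3-bin.tar.gz)" >> /etc/profile`. Then run `source /etc/profile`.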

Installing Git

- Install it with `yum -y install git`.

Installing Docker

Method 1

Reference: https://help.aliyun.com/document_detail/60742.html?spm=a2c4g.11174283.6.548.24c14541ssYFIZ

Installation steps

- Install the required system tools: `yum install -y yum-utils device-mapper-persistent-data lvm2`
- Add the Docker CE repository: `yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo`
- Update the cache and install Docker CE: `yum makecache fast`, then `yum -y install docker-ce`
- Start the Docker service: `service docker start`
- Docker installed this way must be uninstalled with `yum remove docker-ce`. `yum remove docker` does not remove it.

Verifying the installation

- If the installation succeeded, `docker -v` prints the Docker version.

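Official installation steps

This is the method the official docs recommend and most people use. It turns out to be almost the same as method 1.

Installation docs: https://docs.docker.com/engine/install/centos/

Installation steps

- Install Docker's dependencies: `yum install -y gcc` and `yum install -y gcc-c++`
- Install yum-utils: `yum install -y yum-utils`.
  - Older Docker versions also ran `yum install -y device-mapper-persistent-data` and `yum install -y lvm2` to install further dependencies.
  - Neither Yang-ge (the course instructor) nor the official docs require them. For the Gulimall project I ran both steps anyway, to stay consistent with the course.
- Set up the stable Docker repository: `yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo`. Don't use the repository recommended on the official site, because downloads from it very likely fail from within China. Use Alibaba Cloud's mirror instead.
- Update the yum package index with `yum makecache fast`. It builds the yum metadata cache, storing package information locally in advance so that later searches and installs are faster.
- Install Docker CE: `yum -y install docker-ce docker-ce-cli containerd.io`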

Verifying the installation

- Start Docker: `systemctl start docker`
- Check that Docker is running: `ps -ef | grep docker`

      [root@localhost ~]# ps -ef| grep docker
      root 20238 1 0 12:11 ? 00:00:00 /usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock
      root 20432 17454 0 12:13 pts/1 00:00:00 grep --color=auto docker

- `docker --help` lists Docker's common commands and their syntax:

      [root@localhost ~]# docker --help
      Usage:  docker [OPTIONS] COMMAND

      A self-sufficient runtime for containers

      Common Commands:
        run         Create and run a new container from an image
        exec        Execute a command in a running container
        ps          List containers
        build       Build an image from a Dockerfile
        pull        Download an image from a registry
        push        Upload an image to a registry
        images      List images
        login       Log in to a registry
        logout      Log out from a registry
        search      Search Docker Hub for images
        version     Show the Docker version information
        info        Display system-wide information

      Management Commands:
        builder     Manage builds
        buildx*     Docker Buildx (Docker Inc., v0.11.2)
        compose*    Docker Compose (Docker Inc., v2.21.0)
        container   Manage containers
        context     Manage contexts
        image       Manage images
        manifest    Manage Docker image manifests and manifest lists
        network     Manage networks
        plugin      Manage plugins
        system      Manage Docker
        trust       Manage trust on Docker images
        volume      Manage volumes

      Swarm Commands:
        swarm       Manage Swarm

      Commands:
        attach      Attach local standard input, output, and error streams to a running container
        commit      Create a new image from a container's changes
        cp          Copy files/folders between a container and the local filesystem
        create      Create a new container
        diff        Inspect changes to files or directories on a container's filesystem
        events      Get real time events from the server
        export      Export a container's filesystem as a tar archive
        history     Show the history of an image
        import      Import the contents from a tarball to create a filesystem image
        inspect     Return low-level information on Docker objects
        kill        Kill one or more running containers
        load        Load an image from a tar archive or STDIN
        logs        Fetch the logs of a container
        pause       Pause all processes within one or more containers
        port        List port mappings or a specific mapping for the container
        rename      Rename a container
        restart     Restart one or more containers
        rm          Remove one or more containers
        rmi         Remove one or more images
        save        Save one or more images to a tar archive (streamed to STDOUT by default)
        start       Start one or more stopped containers
        stats       Display a live stream of container(s) resource usage statistics
        stop        Stop one or more running containers
        tag         Create a tag TARGET_IMAGE that refers to SOURCE_IMAGE
        top         Display the running processes of a container
        unpause     Unpause all processes within one or more containers
        update      Update configuration of one or more containers
        wait        Block until one or more containers stop, then print their exit codes

      Global Options:
            --config string      Location of client config files (default "/root/.docker")
        -c, --context string     Name of the context to use to connect to the daemon (overrides DOCKER_HOST env var and default context set with "docker context use")
        -D, --debug              Enable debug mode
        -H, --host list          Daemon socket to connect to
        -l, --log-level string   Set the logging level ("debug", "info", "warn", "error", "fatal") (default "info")
            --tls                Use TLS; implied by --tlsverify
            --tlscacert string   Trust certs signed only by this CA (default "/root/.docker/ca.pem")
            --tlscert string     Path to TLS certificate file (default "/root/.docker/cert.pem")
            --tlskey string      Path to TLS key file (default "/root/.docker/key.pem")
            --tlsverify          Use TLS and verify the remote
        -v, --version            Print version information and quit

      Run 'docker COMMAND --help' for more information on a command.

      For more help on how to use Docker, head to https://docs.docker.com/go/guides/

- `docker cp --help` shows the detailed syntax of the cp command. Use `docker --help` to find the command for a feature, then run that command with `--help` to see its detailed usage:

      [root@localhost ~]# docker cp --help
      # SRC_PATH is the source path, DEST_PATH the destination path
      Usage:  docker cp [OPTIONS] CONTAINER:SRC_PATH DEST_PATH|-
              docker cp [OPTIONS] SRC_PATH|- CONTAINER:DEST_PATH

      # Copies files or directories between a container and the local filesystem
      Copy files/folders between a container and the local filesystem

      Use '-' as the source to read a tar archive from stdin
      and extract it to a directory destination in a container.
      Use '-' as the destination to stream a tar archive of a
      container source to stdout.

      Aliases:
        docker container cp, docker cp

      Options:
        -a, --archive       Archive mode (copy all uid/gid information)
        -L, --follow-link   Always follow symbol link in SRC_PATH
        -q, --quiet         Suppress progress output during copy. Progress output is automatically suppressed if no terminal is attached

- `docker version` shows the version information of the installed Docker client and server. `docker info` shows a more detailed Docker summary.

      [root@localhost ~]# docker version
      # Client side
      Client: Docker Engine - Community
       Version:           24.0.7
       API version:       1.43
       Go version:        go1.20.10
       Git commit:        afdd53b
       Built:             Thu Oct 26 09:11:35 2023
       OS/Arch:           linux/amd64
       Context:           default

      # Server side; the server is the background daemon
      Server: Docker Engine - Community
       Engine:
        Version:          24.0.7
        API version:      1.43 (minimum version 1.12)
        Go version:       go1.20.10
        Git commit:       311b9ff
        Built:            Thu Oct 26 09:10:36 2023
        OS/Arch:          linux/amd64
        Experimental:     false
       containerd:
        Version:          1.6.26
        GitCommit:        3dd1e886e55dd695541fdcd67420c2888645a495
       runc:
        Version:          1.1.10
        GitCommit:        v1.1.10-0-g18a0cb0
       docker-init:
        Version:          0.19.0
        GitCommit:        de40ad0

- `docker run hello-world` runs the hello-world image. The first message says that the image 'hello-world:latest' cannot be found locally. Docker looks for an image locally first; if it isn't there, Docker pulls it from the remote registry and then runs it.

      [root@localhost ~]# docker run hello-world
      Unable to find image 'hello-world:latest' locally
      # (after a short wait, the hello-world image is pulled from the remote registry)
      latest: Pulling from library/hello-world
      c1ec31eb5944: Pull complete
      Digest: sha256:4bd78111b6914a99dbc560e6a20eab57ff6655aea4a80c50b0c5491968cbc2e6
      Status: Downloaded newer image for hello-world:latest
      # The message below means Docker is working correctly
      Hello from Docker!
      This message shows that your installation appears to be working correctly.

      To generate this message, Docker took the following steps:
       1. The Docker client contacted the Docker daemon.
       2. The Docker daemon pulled the "hello-world" image from the Docker Hub.
          (amd64)
       3. The Docker daemon created a new container from that image which runs the
          executable that produces the output you are currently reading.
       4. The Docker daemon streamed that output to the Docker client, which sent it
          to your terminal.

      To try something more ambitious, you can run an Ubuntu container with:
       $ docker run -it ubuntu bash

      Share images, automate workflows, and more with a free Docker ID:
       https://hub.docker.com/

      For more examples and ideas, visit:
       https://docs.docker.com/get-started/

- Enable Docker at boot: `systemctl enable docker`

Uninstalling Docker

Run the following commands in order to uninstall Docker:

    systemctl stop docker
    yum remove docker-ce docker-ce-cli containerd.io
    rm -rf /var/lib/docker
    rm -rf /var/lib/containerd

Troubleshooting

- Docker images cannot be pulled

After installing Docker in the VM, image downloads were still very slow, even with the Alibaba Cloud registry mirror configured. Pulling the redis image failed dozens of times with this error:

    [root@localhost ~]# docker pull redis:6.0.8
    Error response from daemon: Head "https://registry-1.docker.io/v2/library/redis/manifests/6.0.8": dial tcp [2600:1f18:2148:bc00:41e1:f57f:e2e2:5e54]:443: connect: network is unreachable

Cause

- A DNS problem: the registry name resolved to an IPv6 address that the VM cannot reach. Switching to Alibaba Cloud's DNS fixes it.

Fix

- Edit the file with `vi /etc/resolv.conf`.
- Change the IP after `nameserver` to 223.5.5.5:

      # Generated by NetworkManager
      search localdomain
      nameserver 223.5.5.5

Result

    [root@localhost ~]# docker pull redis:6.0.8
    6.0.8: Pulling from library/redis
    bb79b6b2107f: Pull complete
    1ed3521a5dcb: Pull complete
    5999b99cee8f: Pull complete
    3f806f5245c9: Pull complete
    f8a4497572b2: Pull complete
    eafe3b6b8d06: Pull complete
    Digest: sha256:21db12e5ab3cc343e9376d655e8eabbdbe5516801373e95a8a9e66010c5b8819
    Status: Downloaded newer image for redis:6.0.8
    docker.io/library/redis:6.0.8
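The resolv.conf change can also be scripted. This is a minimal sketch that assumes GNU tools. `set_nameserver` is a hypothetical helper: it removes the existing nameserver lines and writes a single new one. The file's header says it is generated by NetworkManager, so the change can be overwritten again after a network or VM restart, as described in the CentOS section.

```bash
#!/usr/bin/env bash
# set_nameserver FILE IP
# Replace every "nameserver" line in a resolv.conf-style FILE with a single one.
# Other lines (comments, "search ...") are kept in their original order.
set_nameserver() {
  local file=$1 ns=$2 tmp
  tmp=$(mktemp)
  grep -v '^nameserver[[:space:]]' "$file" > "$tmp" || true   # grep exits 1 if no line is left
  echo "nameserver ${ns}" >> "$tmp"
  cat "$tmp" > "$file"   # write back in place, keeping the file's owner and mode
  rm -f "$tmp"
}
```

Usage as root: `set_nameserver /etc/resolv.conf 223.5.5.5`, then retry `docker pull`.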

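Installing Jenkins

Jenkins can be installed in many ways; the most convenient is the war package. A war package is a web application that you access through a browser. It can run directly in Tomcat, or on its own with `java -jar` as shown below.

Note that the latest Jenkins versions no longer support Java 8.

Download: official site → Download → Stable → Past Releases, then choose the war package of the version you need.

Installation steps

- Download the Jenkins war package and upload it to /usr/local/jenkins.
- Start it with `nohup java -jar /usr/local/jenkins/jenkins.war >/usr/local/jenkins/jenkins.out &`:
  - `java -jar /usr/local/jenkins/jenkins.war` is the core of the command. The war path must match where the file actually is.
  - `>/usr/local/jenkins/jenkins.out` sets the log directory and file name, so the output is written to jenkins.out.
  - `nohup` is a command prefix that keeps the process running after you log out by ignoring the hangup signal. Nothing is shown in the foreground; the log goes to the log file.
  - `&` is a command suffix that runs the process in the background.
  - After starting this way, the shell prints `nohup: ignoring input and redirecting stderr to stdout`, and the console shows no log output. Press Enter once more to get the prompt back; Jenkins is already running.

Verifying the installation

- Open http://ip:8080, where ip is the Linux host's IP address. If the Jenkins page loads, the installation succeeded. Port 8080 must be allowed through the firewall.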
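The nohup/redirect/& pattern above can be wrapped in a small function. This is a sketch, not part of Jenkins, and `start_bg` is a hypothetical name. It additionally redirects stderr (`2>&1`) and stdin (`< /dev/null`). With every stream redirected, nohup prints no "ignoring input" message. The function echoes the PID of the background process so you can stop it later with `kill`.

```bash
#!/usr/bin/env bash
# start_bg LOGFILE COMMAND [ARGS...]
# Run COMMAND in the background, immune to hangups, with all output in LOGFILE.
start_bg() {
  local log=$1
  shift
  # nohup: ignore SIGHUP so the process survives logout.
  # stdout and stderr both go to $log; stdin comes from /dev/null.
  nohup "$@" > "$log" 2>&1 < /dev/null &
  echo "$!"   # PID of the background process
}
```

Usage: `start_bg /usr/local/jenkins/jenkins.out java -jar /usr/local/jenkins/jenkins.war`.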

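Installing ZooKeeper

- ZooKeeper can be installed standalone or as a cluster. A JDK must be installed first.

Standalone installation

Installation steps

- On the Apache ZooKeeper website, click Download to go to the Apache ZooKeeper download page.
  - Usually download a stable release. Companies also avoid the newest version, so that they don't run into bugs for which no references exist yet.
  - Older versions are at Index of /dist/zookeeper (apache.org).
  - Download the archive whose name ends in bin.tar.gz.
- Copy the archive to /opt/zookeeper.
- Extract it to /usr/local/zookeeper with `tar -zxvf apache-zookeeper-3.5.7-bin.tar.gz -C /usr/local/zookeeper`. The target directory must already exist.
- Rename the extracted directory to zookeeper-3.5.7 with `mv apache-zookeeper-3.5.7-bin/ zookeeper-3.5.7`.
- In the conf directory, rename the file to zoo.cfg with `mv zoo_sample.cfg zoo.cfg`.
- Create a zkData directory under /usr/local/zookeeper/ to store ZooKeeper's data snapshots.
- Edit the file with `vim zoo.cfg` and change `dataDir=/tmp/zookeeper` to the zkData directory.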
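The last three steps can be combined into one function. This sketch copies zoo_sample.cfg instead of renaming it, which follows the advice in the directory notes to keep the template. `init_zoo_cfg` is a hypothetical name. It assumes GNU sed and a data directory path without `|` characters.

```bash
#!/usr/bin/env bash
# init_zoo_cfg CONF_DIR DATA_DIR
# Create zoo.cfg from zoo_sample.cfg and point dataDir at DATA_DIR.
init_zoo_cfg() {
  local conf_dir=$1 data_dir=$2
  mkdir -p "$data_dir"
  # Keep zoo_sample.cfg as the template; work on a copy.
  cp "$conf_dir/zoo_sample.cfg" "$conf_dir/zoo.cfg"
  sed -i "s|^dataDir=.*|dataDir=${data_dir}|" "$conf_dir/zoo.cfg"
}
```

Usage: `init_zoo_cfg /usr/local/zookeeper/zookeeper-3.5.7/conf /usr/local/zookeeper/zkData`.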

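Directory layout

- bin: ZooKeeper's command scripts, such as zkServer.sh and zkCli.sh.
- conf: the configuration directory.
  - In conf, only zoo_sample.cfg matters. ZooKeeper loads the configuration file zoo.cfg at runtime, and zoo_sample.cfg is the template ZooKeeper provides for it. Create zoo.cfg from the template and edit it to configure the running ZooKeeper instance. It is best to keep zoo_sample.cfg for later reference.
- docs: documentation.
- lib: third-party dependencies.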

配置文件参数

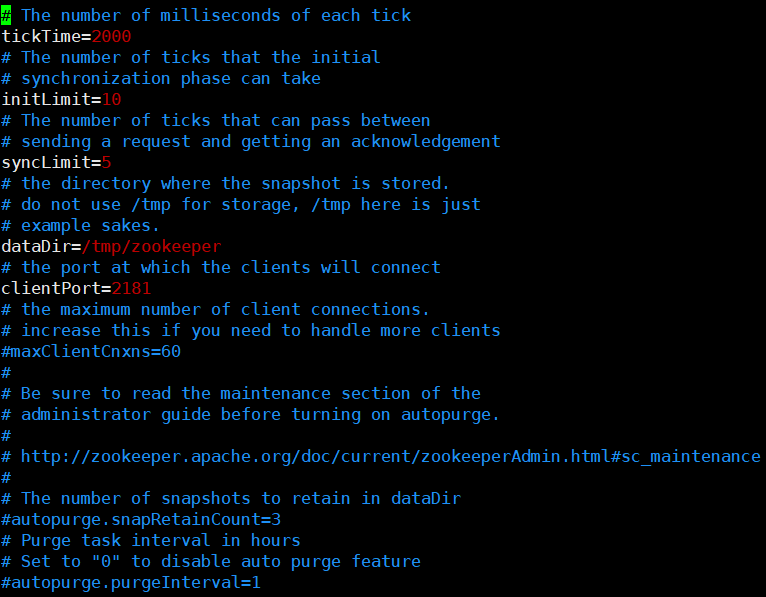

配置文件是zoo.cfg

-

配置文件示例

-

tickTime=2000【通信心跳时间】- 配置通信心跳时间为2000ms,该心跳客户端与服务端间可以互相发送,服务端与服务端间也可以互相发送,目的是检测目标服务是否仍然存活

-

initLimit=10【LF初始通信时限】- Leader和Follower建立初始连接时能容忍的最大心跳数,如果10个心跳时间内还没有成功建立Leader和Follower之间的连接,就认为本次初始通信失败,Leader就是主,Follower就是从

-

syncLimit=5【LF同步通信时限】- 初始化通信给的时间相较于同步通信时限长一些,后续通信如果超过5个心跳还没有建立连接,Leader认为Follower服务器已经挂了,会从服务器列表中删除Follower

-

dataDir=/tmp/zookeeper【Zookeeper数据保持路径】- 注意:dataDir=/tmp/zookeeper意思是快照数据会存在/tmp/zookeeper目录下,默认的tmp目录,不要使用/tmp目录存储数据,因为/tmp目录存储的临时数据,容易被Linux系统定期删除,一般需要自定义目录,这里的/tmp/zookeeper只是模板示例,通常会在Zookeeper的安装目录下创建zkData目录存储数据

-

clientPort=2181【客户端连接服务端的端口】- 客户端通过端口2181与Zookeeper服务器通讯,这个通常不做修改,就使用默认的

-

maxClientCnxns=60【客户端连接Zookeeper的最大连接数】- 客户端连接Zookeeper的最大连接数,默认是60,一般使用默认配置
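initLimit and syncLimit are counted in ticks, so the real time limits are the tick count multiplied by tickTime. With the example values that is 10 × 2000 ms = 20 s for the initial connection, and 5 × 2000 ms = 10 s for synchronization. The small sketch below reads these values from a zoo.cfg. `zk_timeouts` is a hypothetical helper, and it assumes all three keys are present and uncommented.

```bash
#!/usr/bin/env bash
# zk_timeouts CFG
# initLimit and syncLimit are in ticks, so the effective limits are limit * tickTime (ms).
# Assumes tickTime, initLimit and syncLimit are all set (uncommented) in CFG.
zk_timeouts() {
  local cfg=$1 tick init sync
  tick=$(sed -n 's/^tickTime=//p' "$cfg")
  init=$(sed -n 's/^initLimit=//p' "$cfg")
  sync=$(sed -n 's/^syncLimit=//p' "$cfg")
  echo "init=$(( tick * init ))ms sync=$(( tick * sync ))ms"
}
```

Usage: `zk_timeouts /usr/local/zookeeper/zookeeper-3.5.7/conf/zoo.cfg`.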

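Verifying the installation

ZooKeeper includes both a server and a client.

- Start the ZooKeeper server with `bin/zkServer.sh start`.
  - Note: a ZooKeeper cluster becomes unavailable when more than half of its servers are down. When starting a cluster, more than half of the servers must be running before ZooKeeper can be accessed. Standalone mode is not affected.
  - 🔎: after a standalone start, the last line printed by `bin/zkServer.sh status` is `Mode: standalone`.
- Check the ZooKeeper process with `jps`. `jps -l` shows the fully qualified class name of the ZooKeeper process (jps is covered in the JVM course).
- Start and enter the ZooKeeper client with `bin/zkCli.sh`. Note that this command takes no `start` argument.
  - The `ls` command also works in the ZooKeeper client.
  - Exit the ZooKeeper client with `quit`.
- Stop the ZooKeeper server with `bin/zkServer.sh stop`.
- Restart the ZooKeeper server with `bin/zkServer.sh restart`.
- Check the server's status with `bin/zkServer.sh status`.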

Basic commands

- ZooKeeper nodes are organized like the Linux directory tree, starting from the root node `/`. The root may have several children, which may have their own children, and so on. A node's full path is written like a Linux file path: /parent/child/...
- These commands are used in the ZooKeeper client started with `bin/zkCli.sh`.
- In ZooKeeper, the `-w` flag always means watching for events, e.g. config changes, node creation or node deletion.

- Any unknown string (e.g. `yyy`)

What it does: when you enter any string that is not a command, ZooKeeper lists all commands available in the client.

Example:

    [zk: localhost:2181(CONNECTED) 2] yyy
    ZooKeeper -server host:port cmd args
        addauth scheme auth
        close
        config [-c] [-w] [-s]
        connect host:port
        create [-s] [-e] [-c] [-t ttl] path [data] [acl]
        delete [-v version] path
        deleteall path
        delquota [-n|-b] path
        get [-s] [-w] path
        getAcl [-s] path
        history
        listquota path
        ls [-s] [-w] [-R] path
        ls2 path [watch]
        printwatches on|off
        quit
        reconfig [-s] [-v version] [[-file path] | [-members serverID=host:port1:port2;port3[,...]*]] | [-add serverId=host:port1:port2;port3[,...]]* [-remove serverId[,...]*]
        redo cmdno
        removewatches path [-c|-d|-a] [-l]
        rmr path
        set [-s] [-v version] path data
        setAcl [-s] [-v version] [-R] path acl
        setquota -n|-b val path
        stat [-w] path
        sync path
    Command not found: Command not found yyy

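- `ls [-s] [-w] [-R] <path>`

What it does: lists all children of the node at `path`.

Example:

    # Running ls without a path prints its usage
    [zk: localhost:2181(CONNECTED) 0] ls
    ls [-s] [-w] [-R] path
    # 1. List the children of the root; by default the root has one child, zookeeper
    [zk: localhost:2181(CONNECTED) 1] ls /
    [zookeeper]
    # 2. List the children of /zookeeper; by default it has two, config and quota. The path must be a full path
    [zk: localhost:2181(CONNECTED) 2] ls /zookeeper
    [config, quota]
    # 3. List the children of /zookeeper/config; a node without children returns an empty array
    [zk: localhost:2181(CONNECTED) 0] ls /zookeeper/config
    []

Notes:

- Node paths must be full paths. The result is an array of child names, or an empty array if there are no children.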

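- `get [-s] [-w] <path>`

What it does: shows the data of the node at the full path `path`.

Example:

    # 1. Show the data of /zookeeper. By default this node has no data: a built-in node without data prints an empty line, while a node you created prints null
    [zk: localhost:2181(CONNECTED) 2] get /zookeeper

    # Create a node named aa under the root
    [zk: localhost:2181(CONNECTED) 4] create /aa
    Created /aa
    # 2. Show the data of /aa; a newly created node has no data
    [zk: localhost:2181(CONNECTED) 5] get /aa
    null

Notes:

- For a newly created node without data, the command returns null. ZooKeeper's built-in nodes have no data either, but for them it prints an empty line.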


- `create [-s] [-e] [-c] [-t ttl] <path> [data] [acl]`

What it does: creates a node at the given path.

- `[data]` sets the node's data.
- `-e` creates an ephemeral node.
- `-s` creates a sequential node, which is persistent unless combined with `-e`.

Example:

    # 1. Create a node named aa under the root
    [zk: localhost:2181(CONNECTED) 4] create /aa
    Created /aa
    # 2. Creating a node that already exists fails with "Node already exists". To set data at creation time, the node must not exist yet
    [zk: localhost:2181(CONNECTED) 6] create /aa test
    Node already exists: /aa
    [zk: localhost:2181(CONNECTED) 8] delete /aa
    # 3. Create /aa with the data test
    [zk: localhost:2181(CONNECTED) 9] create /aa test
    Created /aa
    [zk: localhost:2181(CONNECTED) 10] get /aa
    test
    # 4. If the data contains spaces, wrap it in double quotes; otherwise the part after the space is parsed as the acl argument and may be rejected as invalid. Data without spaces needs no quotes
    [zk: localhost:2181(CONNECTED) 11] create /aa/cc hello world
    world does not have the form scheme:id:perm
    KeeperErrorCode = InvalidACL
    Acl is not valid : null
    [zk: localhost:2181(CONNECTED) 12] create /aa/cc "hello world"
    Created /aa/cc
    [zk: localhost:2181(CONNECTED) 13] get /aa/cc
    hello world
    # 5. Create an ephemeral node. After the client that created it disconnects, the server deletes it after a while. The instructor says this delay is a quirk of the CLI client: with the Java client the node is deleted as soon as the client disconnects, and it is gone however quickly you reconnect
    [zk: localhost:2181(CONNECTED) 13] create -e /zz "hello"
    Created /zz
    # 6. Create persistent sequential nodes. The parent must exist, otherwise creating the child fails. A sequence number starting at 0 is appended to the name, and queries must include the number, otherwise the node is reported as missing
    [zk: localhost:2181(CONNECTED) 20] create -s /earl/ll "hello earl"
    Node does not exist: /earl/ll
    [zk: localhost:2181(CONNECTED) 21] create /earl
    Created /earl
    [zk: localhost:2181(CONNECTED) 22] create -s /earl/ll "hello earl"
    Created /earl/ll0000000000
    [zk: localhost:2181(CONNECTED) 23]
    [zk: localhost:2181(CONNECTED) 23] create -s /earl/ll "hello earl"
    Created /earl/ll0000000001
    [zk: localhost:2181(CONNECTED) 24] create -s /earl/ll "hello earl"
    Created /earl/ll0000000002
    [zk: localhost:2181(CONNECTED) 25] get /earl/ll
    org.apache.zookeeper.KeeperException$NoNodeException: KeeperErrorCode = NoNode for /earl/ll
    [zk: localhost:2181(CONNECTED) 27] ls /earl
    [ll0000000000, ll0000000001, ll0000000002]
    [zk: localhost:2181(CONNECTED) 28] get /earl/ll0000000000
    hello earl

Notes:

- Creating a node at a location where a node already exists fails.
- When you set data while creating a node, wrap data that contains spaces in double quotes. Otherwise the text after the space is parsed as the acl argument.
- The parent must exist before a child can be created; otherwise creating the child fails.
- For persistent sequential nodes, a sequence number starting at 0 is appended to the node name. Queries must include the number, otherwise the node is reported as not existing.
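In example 6, each name is the parent path plus a counter zero-padded to 10 digits (ll0000000000, ll0000000001, ...). Because of the padding, sorting the names as text gives the same order as the numbers, so the oldest sequential child is simply the first one after sorting. The sketch below reproduces the naming and picks the smallest child from a list such as the `ls /earl` output. Both function names are hypothetical.

```bash
#!/usr/bin/env bash
# seq_node_name PATH N: the name of the N-th sequential child,
# i.e. PATH followed by N zero-padded to 10 digits.
seq_node_name() {
  printf '%s%010d\n' "$1" "$2"
}

# lowest_seq_child: read child names (one per line) and print the smallest one.
# The zero padding makes plain byte-order sorting equal to numeric order.
lowest_seq_child() {
  LC_ALL=C sort | head -n 1
}
```

For example, `seq_node_name /earl/ll 2` prints /earl/ll0000000002. `printf 'll0000000002\nll0000000000\n' | lowest_seq_child` prints ll0000000000.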

- `delete [-v version] <path>`

What it does: deletes the node at the given path.

Example:

    # Create a node named aa under the root
    [zk: localhost:2181(CONNECTED) 4] create /aa
    Created /aa
    # Show the data of /aa; a newly created node has no data
    [zk: localhost:2181(CONNECTED) 5] get /aa
    null
    # 1. A successful delete prints nothing
    [zk: localhost:2181(CONNECTED) 8] delete /aa

Notes:

- A successful delete prints nothing.

- `set [-s] [-v version] <path> <data>`

What it does: changes the data of the given node.

Example:

    # 1. Change the data of /aa/cc to hello Earl
    [zk: localhost:2181(CONNECTED) 15] set /aa/cc "hello Earl"
    [zk: localhost:2181(CONNECTED) 16] get /aa/cc
    hello Earl

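- `stat [-w] path`

What it does: shows the status of the node at the given path. `-w` watches the node.

Example:

    # 1. Show the status of a node
    [zk: localhost:2181(CONNECTED) 5] stat /
    # zxid of the transaction that created the node
    cZxid = 0x0
    # creation time
    ctime = Thu Jan 01 08:00:00 CST 1970
    mZxid = 0x0
    mtime = Thu Jan 01 08:00:00 CST 1970
    pZxid = 0x5
    cversion = 3
    dataVersion = 0
    aclVersion = 0
    ephemeralOwner = 0x0
    dataLength = 0
    numChildren = 5
    [zk: localhost:2181(CONNECTED) 5] stat -w /bb
    Node does not exist: /bb
    [zk: localhost:2181(CONNECTED) 6]
    # 2. Although the command reports that the node does not exist, the watch is still registered. When the watched node changes, the event is printed in the client that set the watch: type is the event type and path the node path
    WATCHER::

    WatchedEvent state:SyncConnected type:NodeCreated path:/bb

Notes:

- cZxid: the zxid of the transaction that created the node.
  - Every change to ZooKeeper's state is stamped with a zxid, the ZooKeeper transaction ID.
  - zxids define the order of all changes, and each change has a unique zxid. If zxid1 is less than zxid2, then zxid1 happened before zxid2.
- ctime: the znode's creation time, in milliseconds since 1970.
- mZxid: the zxid of the znode's last modification.
- mtime: the time of the znode's last modification, in milliseconds since 1970.
- pZxid: the zxid of the last change to the znode's children.
- cversion: the znode's child version, incremented by 1 on every change to its children.
- dataVersion: the znode's data version, incremented by 1 every time its data changes. Since each update reads the latest value, it can be used to implement optimistic locking, similar to a database.
- aclVersion: the version of the znode's access control list.
- ephemeralOwner: for an ephemeral node, the session id of the znode's owner; 0 for non-ephemeral nodes.
- dataLength: the length of the znode's data.
- numChildren: the number of the znode's children.
- A watch on a missing node still takes effect, even though the watch command reports an error. Changes to the watched node are printed in the client that ran the watch command.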
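stat prints zxids in hexadecimal. A zxid is a 64-bit number: its high 32 bits are the leader epoch and its low 32 bits are a counter within that epoch. That is why comparing two zxids as numbers gives their order. The sketch below splits a zxid into those two parts. `zxid_parts` is a hypothetical helper that relies on bash's 64-bit signed arithmetic.

```bash
#!/usr/bin/env bash
# zxid_parts ZXID: split a zxid such as 0x100000005 into epoch and counter.
# High 32 bits = leader epoch, low 32 bits = counter within the epoch.
zxid_parts() {
  local z=$(( $1 ))   # bash arithmetic accepts the 0x prefix
  echo "epoch=$(( z >> 32 )) counter=$(( z & 0xFFFFFFFF ))"
}
```

For example, `zxid_parts 0x5`, applied to the pZxid shown above, prints `epoch=0 counter=5`.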

Node types

- ZooKeeper has the following four node types. Each combines two properties: persistent or ephemeral, and sequential or non-sequential.
  - Persistent means the node is not deleted when the client disconnects.
  - Sequential means a sequence number is automatically appended to the node name.

- Persistent node PERSISTENT
  - Concept: a node that stays on the server after the client disconnects from the ZooKeeper server.
  - 🔎: nodes are persistent by default. Their data is kept even if the ZooKeeper server is shut down.
- Ephemeral node EPHEMERAL
  - Concept: a node that the ZooKeeper server deletes after the client disconnects.
  - 🔎: an ephemeral node is deleted only when the client that created it disconnects. When another client disconnects, only the ephemeral nodes that client created are deleted. For a few seconds after the disconnect, all clients can still see the node and its data.
  - 🔎: the instructor says this delay is a quirk of the local CLI client. With the Java client, the node is deleted as soon as the client disconnects, and it is gone however quickly you reconnect.
  - How to create: add the `-e` flag to the create command. For example, `create -e /zz "hello"` creates an ephemeral node at /zz with the data hello.
- Persistent sequential node PERSISTENT_SEQUENTIAL
  - Concept: a node that stays on the server after the client disconnects. A node with the same name can be created repeatedly, and ZooKeeper appends a sequence number starting at 0 to the node name.
  - 🔎: this has nothing to do with serialization in the Java sense. "Sequential" only means the node names are numbered in order.
  - How to create: add the `-s` flag to the create command, e.g. `create -s /ss "hello"`.
  - Example: see example 6 under `create` above.
- Ephemeral sequential node EPHEMERAL_SEQUENTIAL
  - Concept: a node that the ZooKeeper server deletes after the client disconnects, and to whose name ZooKeeper appends a sequence number starting at 0. It simply combines sequential numbering with deletion when the creating client closes.
  - Deleting ephemeral sequential nodes does not affect the numbering of persistent sequential nodes.
  - How to create: combine the `-s` and `-e` flags, e.g. `create -s -e /ss "hello"`.

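Node event watches

- ZooKeeper can watch four kinds of events:
  - a node being created
  - a node being deleted
  - a node's data changing
  - a node's children changing
- All watches are one-shot: once a watch has fired, later changes are no longer reported. This design saves resources. To keep watching, register the watch again when the event fires.

- Node created
  - Event type: NodeCreated
  - Watch for the creation of a node with `stat -w <path>`. If the node doesn't exist, the command reports an error, but the watch still works.
- Node deleted
  - Event type: NodeDeleted
  - Watch for the deletion of a node with `stat -w <path>`.
- Node data changed
  - Event type: NodeDataChanged
  - Watch for changes to a node's data with `get -w <path>`.
- Children changed
  - Event type: NodeChildrenChanged
  - Watch for changes to a node's children with `ls -w <path>`.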

Cluster installation

A ZooKeeper cluster needs at least 3 servers.

Installation steps

Verifying the installation

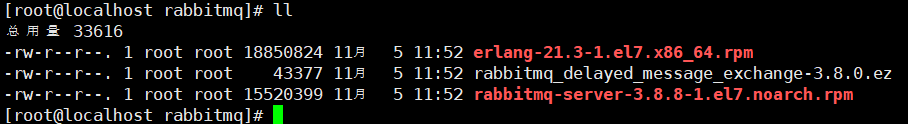

安装RabbitMQ

官网:https://www.rabbitmq.com/download.html

RabbitMQ的运行需要Erlang语言的运行环境,RabbitMQ用的最多的是linux系统的,RabbitMQ的版本需要对应linux系统的版本,使用命令

uname -a查看当前linux系统的版本。el7表示linux7

安装步骤

-

将以下文件上传至

/opt/rabbitmq目录下

-

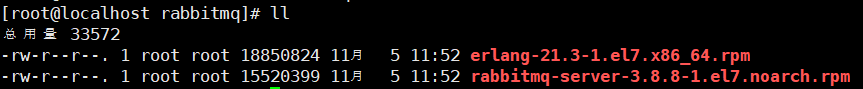

将以下两个文件移动到/usr/local/rabbitmq目录下

-

使用以下命令安装对应软件

-

使用命令

rpm -ivh erlang-21.3-1.el7.x86_64.rpm安装erlang环境【i表示安装,v表示显示安装进度】 -

使用命令

yum install socat -y【安装rabbitmq需要安装rabbitmq的依赖包socat】yum命令需要去互联网联网下载安装包

-

使用命令

rpm -ivh rabbitmq-server-3.8.8-1.el7.noarch.rpm安装rabbitmq

-

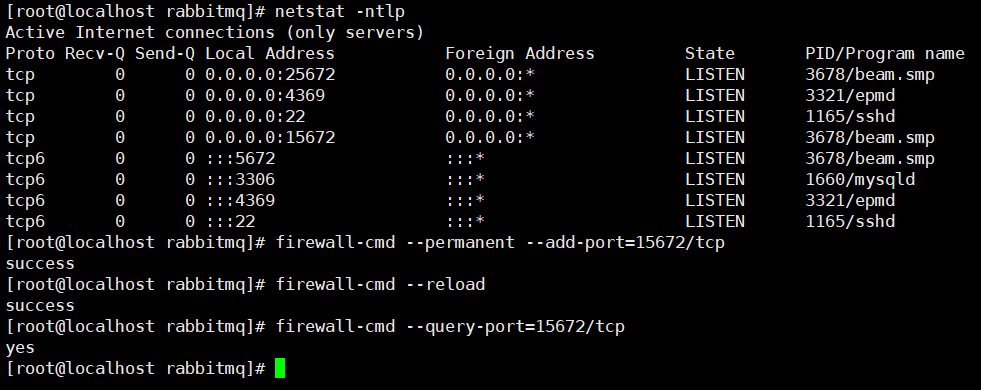
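The el7 tag mentioned above appears both in the package file names and in the kernel release, i.e. the part of `uname -a` that `uname -r` prints, such as 3.10.0-1160.el7.x86_64. The sketch below compares the two before installing. `el_tag` and `rpm_matches_os` are hypothetical helpers. They assume GNU grep and that both strings carry an elN tag.

```bash
#!/usr/bin/env bash
# el_tag STRING: print the first "elN" tag found in STRING (e.g. el7), or nothing.
el_tag() {
  { grep -o 'el[0-9]\+' <<< "$1" || true; } | head -n 1
}

# rpm_matches_os RPM_FILE [KERNEL_RELEASE]
# Exit status 0 if the package's elN tag equals the kernel's (default: uname -r).
rpm_matches_os() {
  local pkg os
  pkg=$(el_tag "$1")
  os=$(el_tag "${2:-$(uname -r)}")
  [ -n "$pkg" ] && [ "$pkg" = "$os" ]
}
```

Usage: `rpm_matches_os erlang-21.3-1.el7.x86_64.rpm && rpm -ivh erlang-21.3-1.el7.x86_64.rpm`.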

安装成功测试

-

使用命令

chkconfig rabbitmq-server on设置rab bitmq服务开机启动 -

使用命令

/sbin/service rabbitmq-server start手动启动rabbitmq服务 -

使用命令

/sbin/service rabbitmq-server status查看rabbitmq服务状态【如果服务是启动状态active会显示running,正在启动会显示activing,inactive表示服务已经关闭】 -

使用命令

/sbin/service rabbitmq-server stop停止rabbitmq服务 -

在rabbitmq服务关闭的状态下使用命令

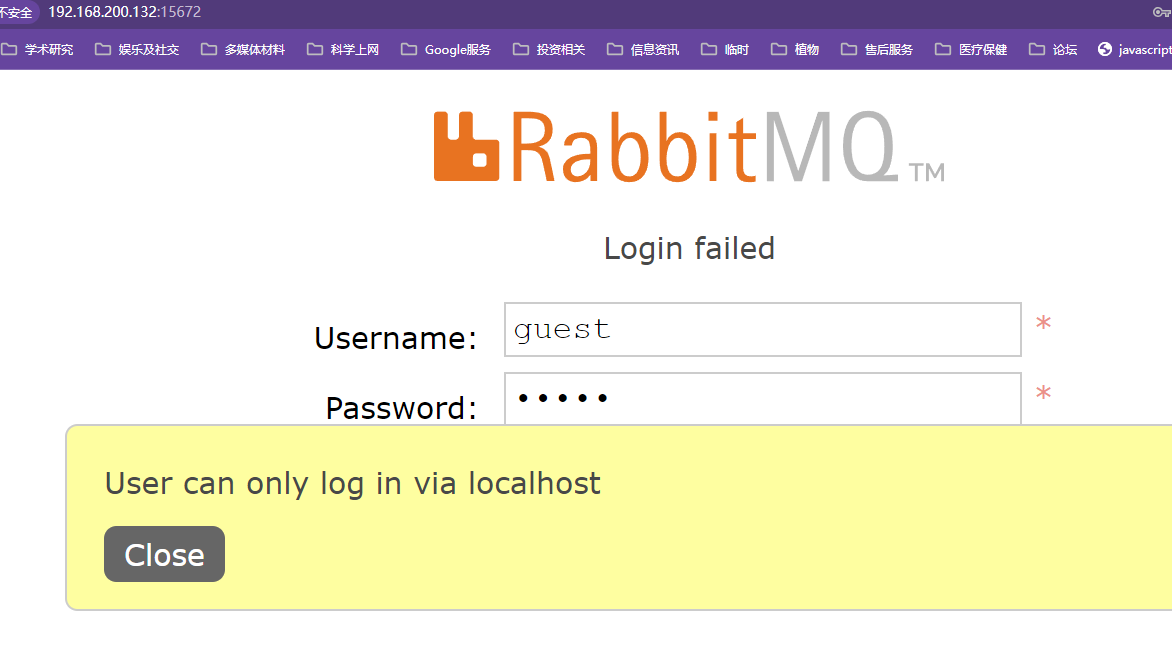

rabbitmq-plugins enable rabbitmq_management安装rabbitmq的web管理插件【执行了该命令才能通过浏览器输入地址http://主机地址:rabbitmq端口号15672访问rabbitmq,访问rabbitmq需要开启防火墙端口通讯】初始账号和密码默认都是guest,第一次登录会显示没有用户只能通过本地登录,此时需要添加一个账户进行远程登录

【开放rabbitmq防火墙端口通讯】

【web控制台】

-

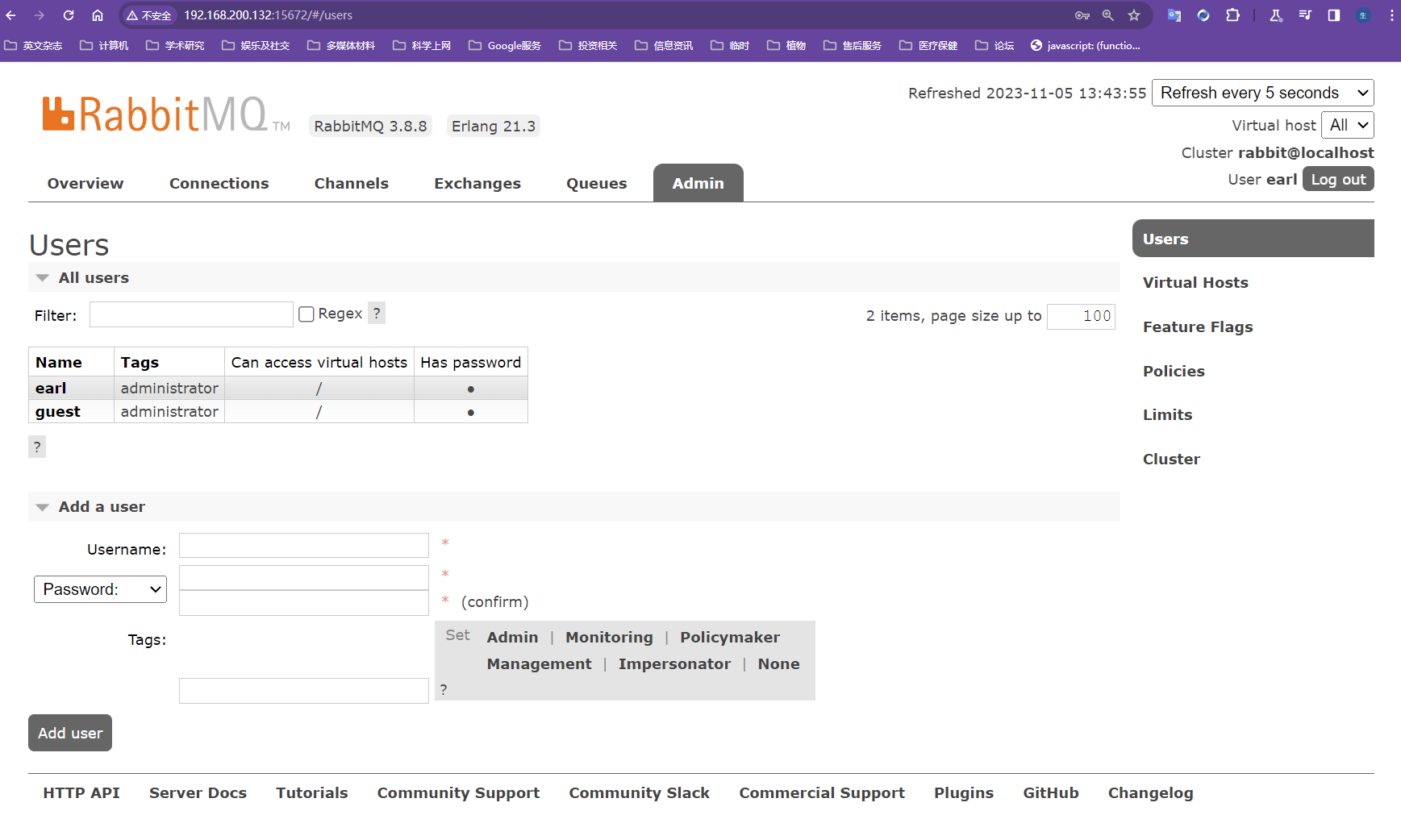

添加用户并设置超级管理员权限以登录web控制台

-

使用命令

rabbitmqctl add_user earl 123456创建账户,账户名earl,密码123456 -

使用命令

rabbitmqctl set_user_tags earl administrator设置用户earl的角色为超级管理员 -

使用命令

rabbitmqctl set_permissions -p "/" earl ".*" ".*" ".*"设置用户权限[-p <vhostpath>] <user> <conf> <write> <read>;-p<vhostpath>表示设置vhost的路径,conf表示可以配置哪些资源,user表示用户,write表示写权限、read表示读权限上个命令的意思表示对于用户earl设置具有对/vhost1这个virtual host中的所有资源的配置、写、读权限;每个vhost代表一个库,不同vhost中的交换机和队列是不同的

guest访问不了就是因为没有设置"/"vhost的路径

-

使用命令

rabbitmqctl list_users查看当前rabbitmq server有哪些用户

【MQ的后台管理界面】

admin路由中就可以显示增删改用户

-

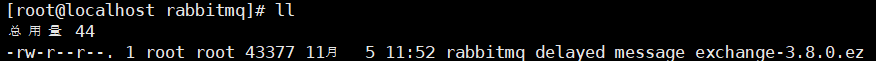

安装解决延迟队列缺陷的插件

安装RabbitMQ插件解决延迟队列缺陷

-

官网下载插件rabbitmq_delayed_message_exchange,放在RabbitMQ的插件目录

/usr/lib/rabbitmq/lib/rabbitmq_server-3.8.8/plugins这个插件不会实时更新,一直会维持放进去时候的情况

-

执行命令

systemctl restart rabbitmq-server重启RabbitMQ安装不需要写插件的版本号

-

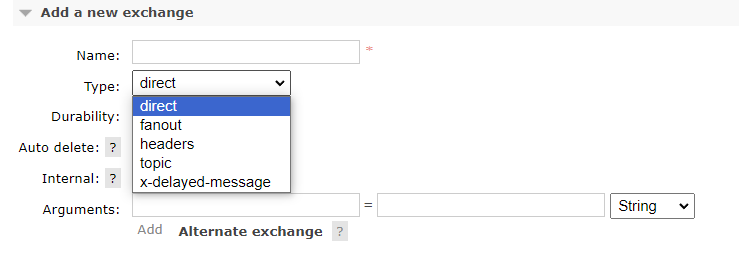

弄好之后在前端控制台的exchange列表中点击添加交换机多出来一个

x-delayed-message类型的交换机,同时也意味着延迟消息不由队列控制,由交换机来控制

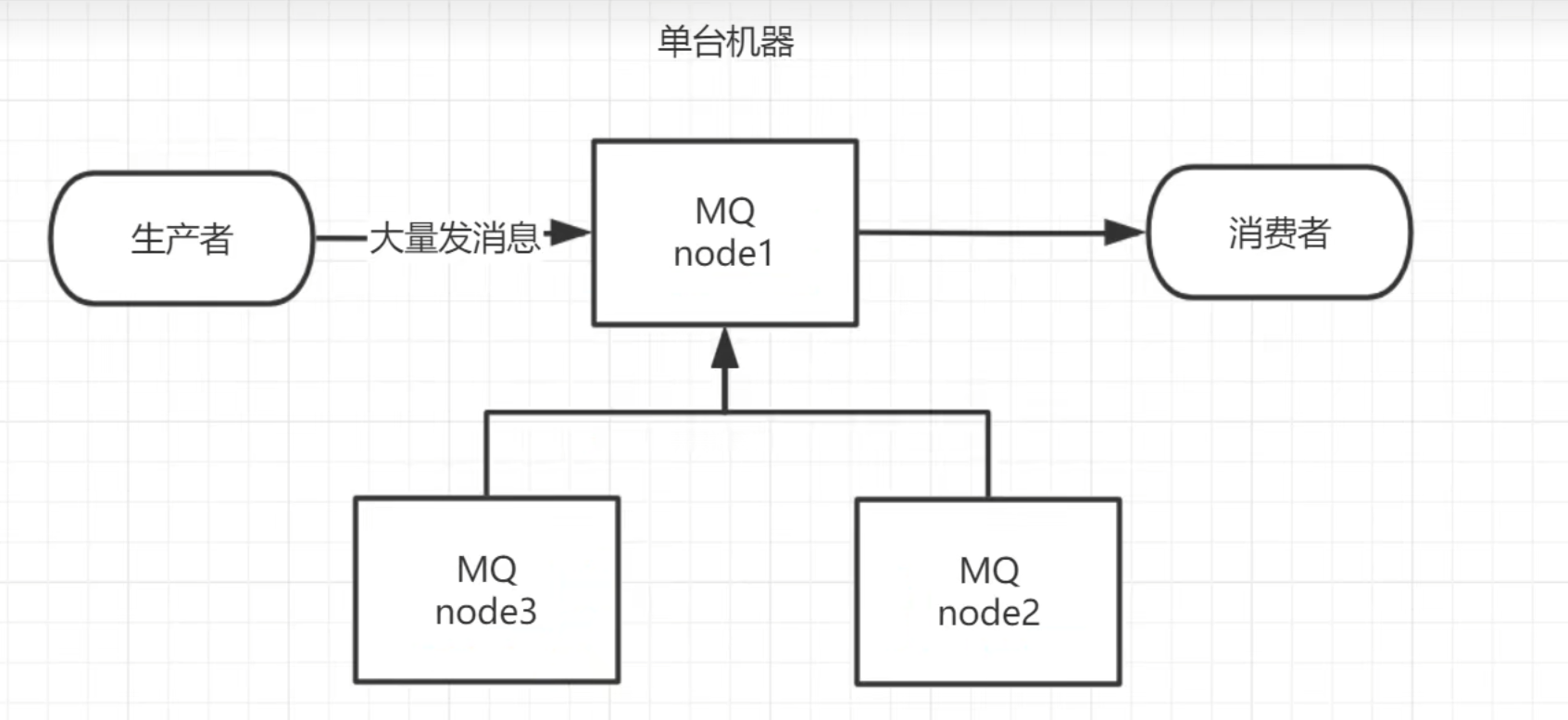

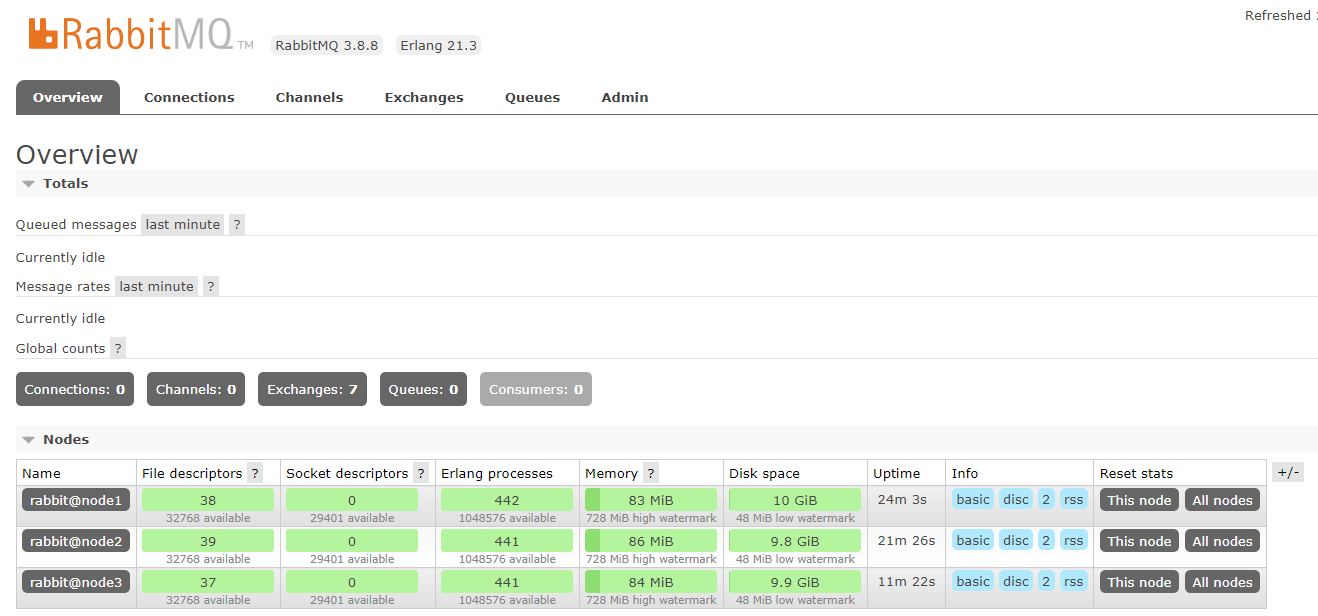

RabbitMQ集群搭建

添加其他RabbitMQ服务器,将其加入1号节点服务器就可以形成集群,比如2加入1号,4加入2号和4加入1号效果是一样的,类似于redis集群

-

集群架构

添加两台新机器,都加入RabbitMQ节点1号

-

集群搭建实操

-

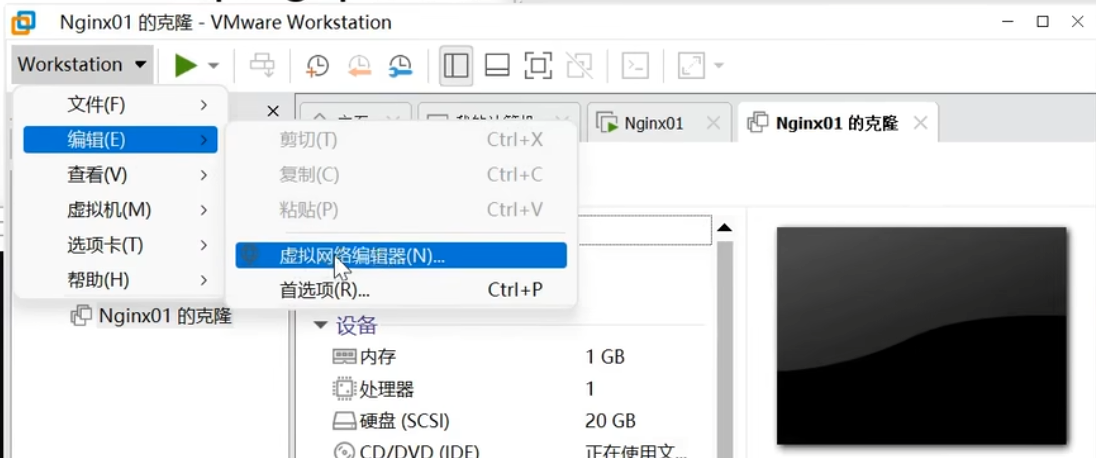

将当前机器克隆三份并修改三台机器的ip地址,不要使其冲突【电脑好,扛得住】,使用xshell对三台机器进行远程连接

-

使用命令

vim /etc/hostname修改3台机器的主机名称为目标名称node1、node2、node3并使用命令shutdown -r now重启机器,使用命令hostname查看当前机器的主机名 -

使用命令

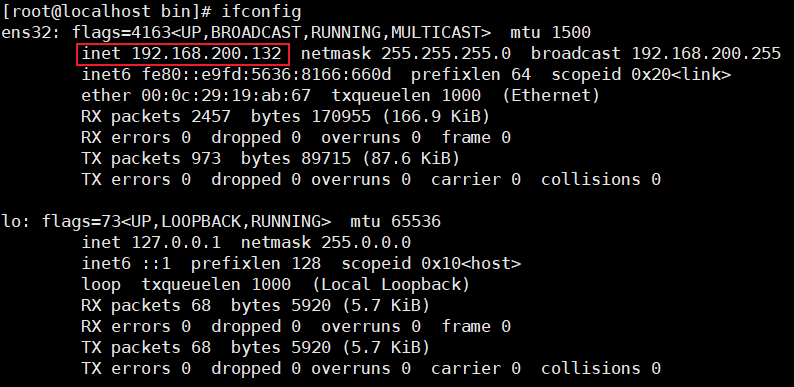

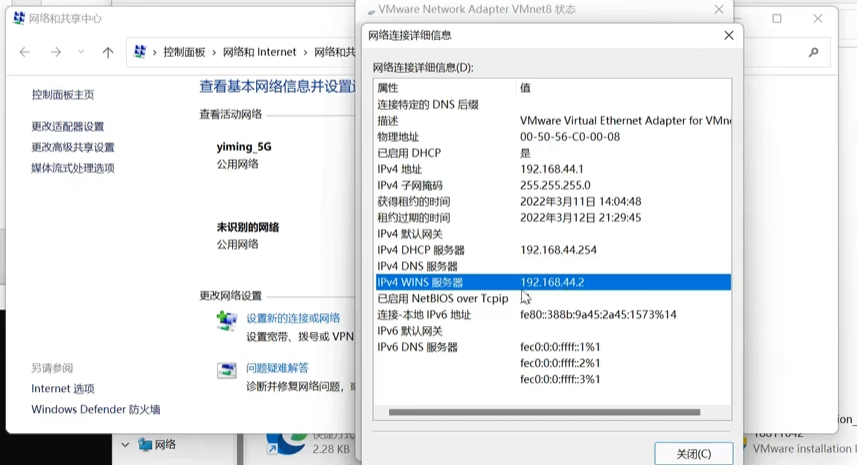

vim /etc/hosts添加各机器节点的ip和hostname配置各个虚拟机节点并重启机器,让各个节点能识别对方192.168.200.132 node1 192.168.200.133 node2 192.168.200.134 node3 -

要确保各个节点的cookie文件使用的是同一个值,在node1节点上执行远程操作命令

scp /var/lib/rabbitmq/.erlang.cookie root@node2:/var/lib/rabbitmq/.erlang.cookie和scp /var/lib/rabbitmq/.erlang.cookie root@node3:/var/lib/rabbitmq/.erlang.cookie将第一台机器的cookie复制给第二台和第三台机器 -

三台机器使用命令

rabbitmq-server -detached重启RabbitMQ服务、顺带重启Erlang虚拟机和RabbitMQ的应用服务 -

以node1为集群将node2和node3加入进去,分别在node2和node3节点执行以下命令

关闭RabbitMQ服务,将rabbitmq重置,将node2和node3节点分别加入node1节点【这里将node2节点加入node3节点观察后续移除node2节点后node3的效果,凉了手速过快,一起连上了】

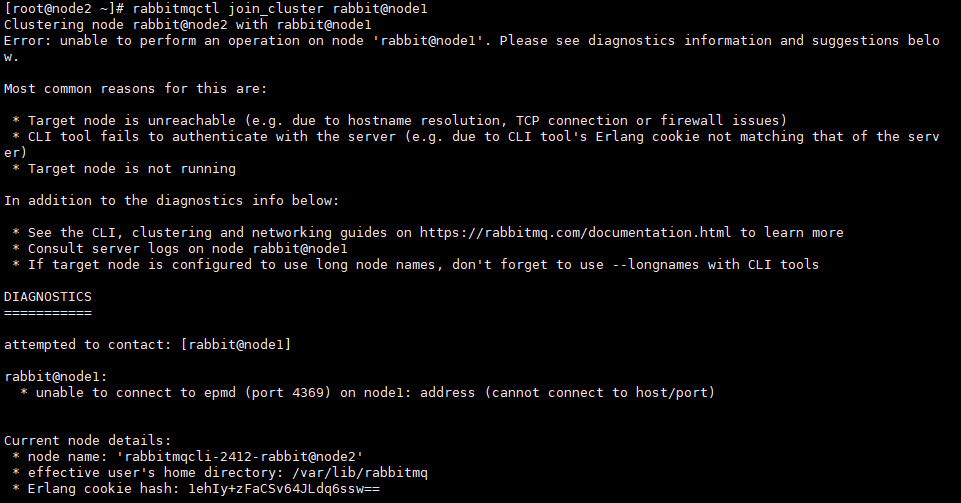

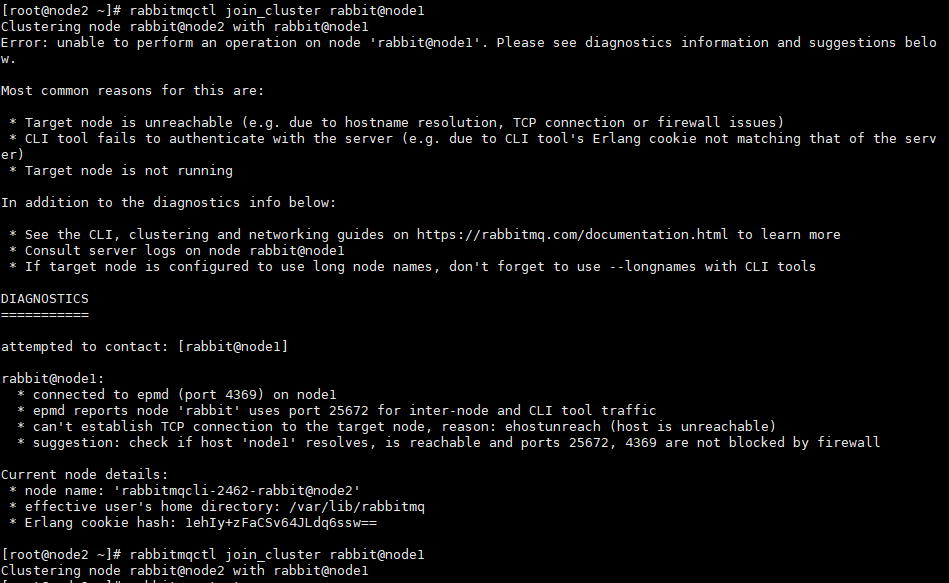

rabbitmqctl stop_app #(rabbitmqctl stop 会将 Erlang 虚拟机关闭, rabbitmqctl stop_app 只关闭 RabbitMQ 服务,就是rabbitmq本身) rabbitmqctl reset rabbitmqctl join_cluster rabbit@node1 rabbitmqctl start_app #(只启动应用服务)执行命令

rabbitmqctl join_cluster rabbit@node1必须开放node1的4369和25672端口,否则会报错;网上一堆操作猛如虎,没一个讲到点上的;克隆的系统相关端口也是开放的我靠,血泪教训,最多只能有一个机器不开放4369和25672端口,其他所有机器都必须开放这俩接口,否则严重点会直接导致所有的RabbitMQ没有一台机器能启动,一直显示正在启动中,启动命令一直卡在运行中,其他的rabbitmq命令报错消息还很傻逼,只会提醒应用没启动,网上还没啥解决方案【fuck】,最后只启动node1发现突然能启动,且能进后台,然后启动node2突然能启动了,node3死活启动不了,终于开放node2的两个端口后node3就能自动启动了,为了方便以后不出问题,建议所有机器节点都开放这俩端口,连带5672端口和15672端口

【没开放端口的情况】

【开放4369端口的情况和开放了25672端口的情况】

-

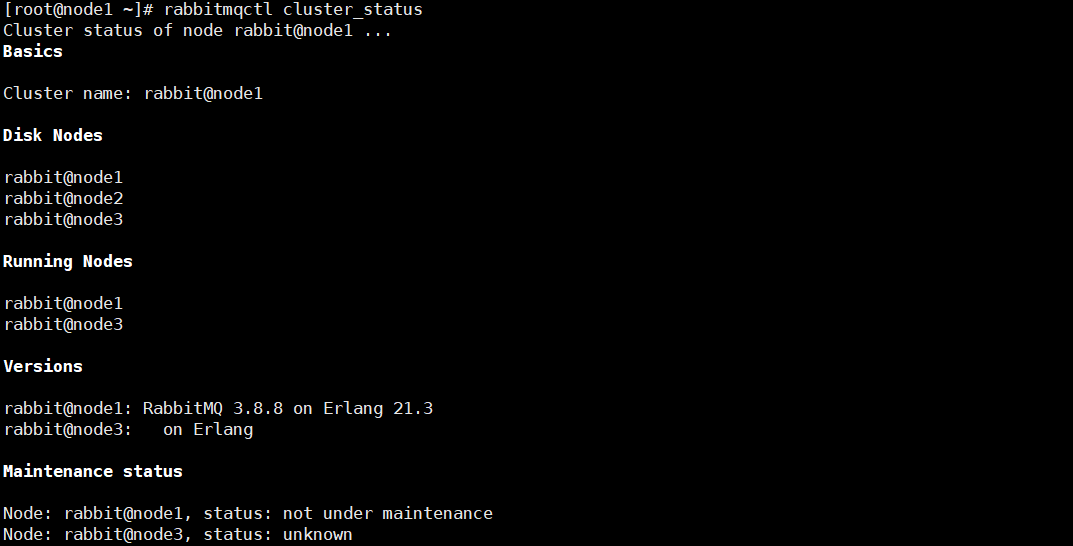

使用命令

rabbitmqctl cluster_status查看集群状态2号节点一直在启动,不知道为啥

-

只需要在一台机器上使用以下命令重新设置用户

rabbitmqctl add_user earl 123456 #创建账户,账户名earl,密码123456 rabbitmqctl set_user_tags earl administrator #设置用户earl的角色为超级管理员 rabbitmqctl set_permissions -p "/" earl ".*" ".*" ".*" #设置用户权限
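The ports discussed above (4369, 25672, 5672 and 15672) can be opened with firewalld, the default firewall on CentOS 7. This sketch assumes firewalld is running and the default zone is used. `open_tcp_ports` is a hypothetical helper. The `FIREWALL_CMD` variable is an override hook added only so the function can be tried without touching the firewall.

```bash
#!/usr/bin/env bash
# open_tcp_ports PORT...
# Permanently open each TCP port in firewalld's default zone, then reload the rules.
open_tcp_ports() {
  local fw=${FIREWALL_CMD:-firewall-cmd} p
  for p in "$@"; do
    "$fw" --permanent --add-port="${p}/tcp"
  done
  # Permanent rules only take effect after a reload.
  "$fw" --reload
}
```

Usage as root on every node: `open_tcp_ports 4369 25672 5672 15672`, then check with `firewall-cmd --list-ports`.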


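Sign of a successful setup

- The web console shows 3 RabbitMQ nodes. If all of them are green, they are healthy.

Commands for removing nodes from the cluster. node2 and node3 each run them to leave the cluster. (I wanted to test how node3, joined through node2, behaves when node2 leaves, but I had joined everything to node1.)

(On the node that leaves, node2 or node3)

    rabbitmqctl stop_app
    rabbitmqctl reset
    rabbitmqctl start_app
    rabbitmqctl cluster_status

(On node1, forget the node that left)

    rabbitmqctl forget_cluster_node rabbit@node2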

安装Nacos

安装步骤

- 从地址

https://github.com/alibaba/nacos/tags下载linux系统下的nacos安装包nacos-server-1.3.1.tar.gz - 将

nacos-server-1.3.1.tar.gz安装包拷贝到/usr/local/nacos目录下 - 使用命令

tar -zxvf nacos-server-1.3.1.tar.gz解压安装包到当前目录 - 进入bin目录使用命令

startup 8848启动nacos

安装成功测试

-

启动nacos,使用浏览器访问

http://localhost:8848/nacos出现nacos可视化页面即安装成功 -

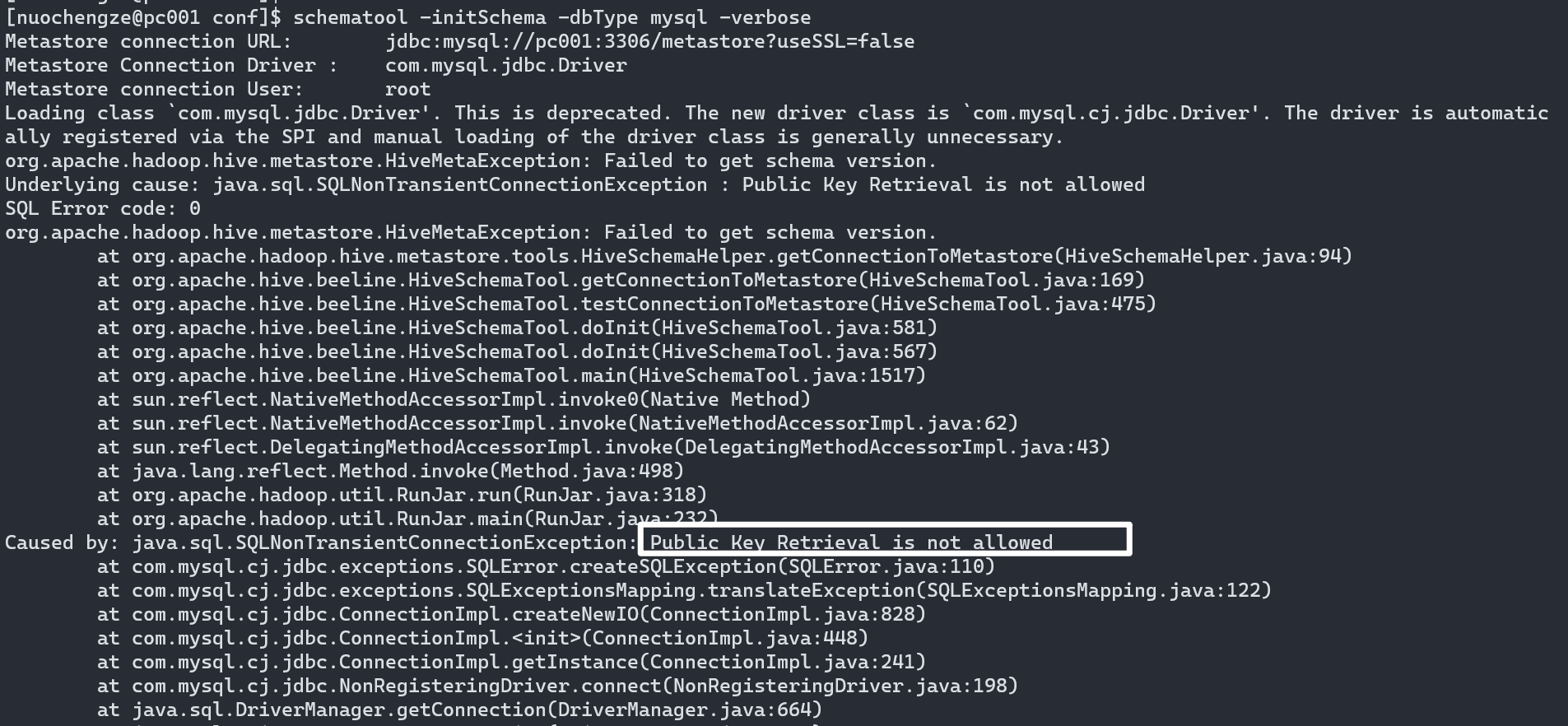

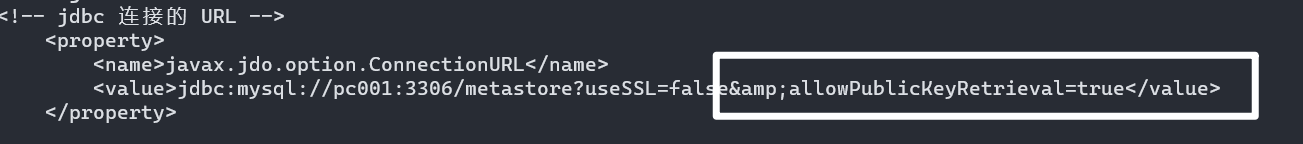

启动nacos时提示

Public Key Retrieval is not allowed错误解决方法-

背景

在使用hive元数据服务方式访问hive时,使用jdbc连接到mysql时提示错误:

java.sql.SQLNonTransientConnectionException: Public Key Retrieval is not allowed

-

原因分析

如果用户使用了 sha256_password 认证,密码在传输过程中必须使用 TLS 协议保护,但是如果 RSA 公钥不可用,可以使用服务器提供的公钥;可以在连接中通过 ServerRSAPublicKeyFile 指定服务器的 RSA 公钥,或者AllowPublicKeyRetrieval=True参数以允许客户端从服务器获取公钥;但是需要注意的是 AllowPublicKeyRetrieval=True可能会导致恶意的代理通过中间人攻击(MITM)获取到明文密码,所以默认是关闭的,必须显式开启。

-

解决措施

在请求的url后面添加参数allowPublicKeyRetrieval=true&useSSL=false

亲测加了以后不报错正常启动

- 如果是xml配置注意&符号的转义

注意:Xml文件中不能使用&,要使用他的转义&来代替。

-

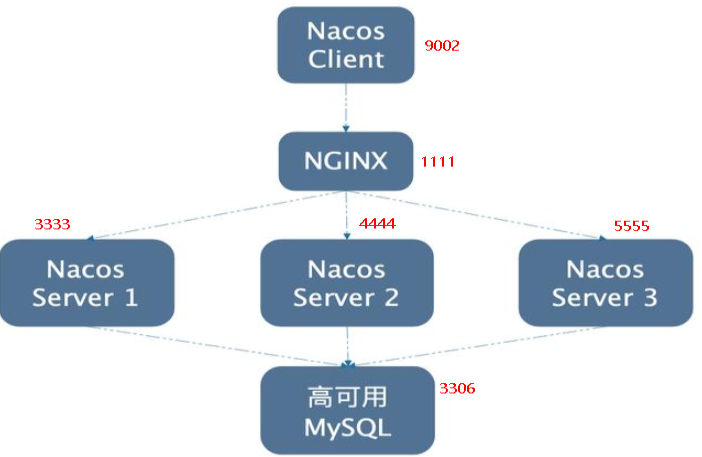

Nacos集群配置

【集群架构图】

)

)

nacos1.3.1能连上mysql8,且配置mysql友好,最好在根目录下创建plugins/mysql目录,把对应mysql的驱动jar包放进去,linux和windows用的驱动jar包是一样的

一台nginx+三台nacos+一台mysql实现注册配置中心集群化配置【自带消息总线】

-

nacos启动命令

startup 8848默认使用8848,单机版以集群的方式启动,需要修改startup.sh添加startup -p 8848,statup -p 8849,startup -p 8850以多端口的方式启动nacos -

配置nacos使用mysql数据库进行持久化

凡是修改配置文件的

-

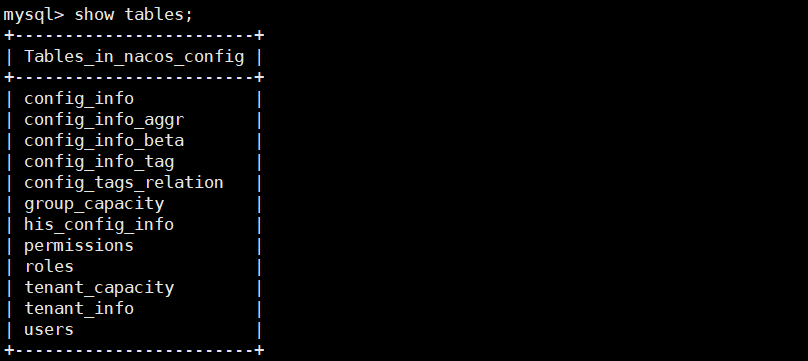

在linux系统下的mysql中创建数据库nacos_config,在该数据库下使用命令

source /usr/local/nacos/nacos/conf/nacos-mysql.sql执行nacos的confg目录下的nacos-mysql.sql中的SQL语句

-

修改配置文件application.properties,让nacos使用外置数据库mysql

#*************** Config Module Related Configurations ***************# ### If use MySQL as datasource: spring.datasource.platform=mysql ### Count of DB: db.num=1 ### Connect URL of DB: db.url.0=jdbc:mysql://127.0.0.1:3306/nacos_config?characterEncoding=utf8&connectTimeout=1000&socketTimeout=3000&autoReconnect=true&useUnicode=true&useSSL=false&serverTimezone=UTC db.user=root db.password=Haworthia0715 -

linux服务器上nacos的集群配置cluster.conf

定出3台nacos服务的端口号,默认出厂没有cluster.conf文件,只有一个cluster.conf.example

-

使用命令

|拷贝cluster.conf.example文件并重命名cluster.conf -

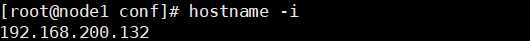

使用命令

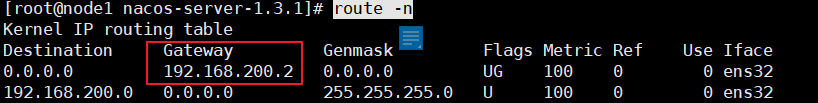

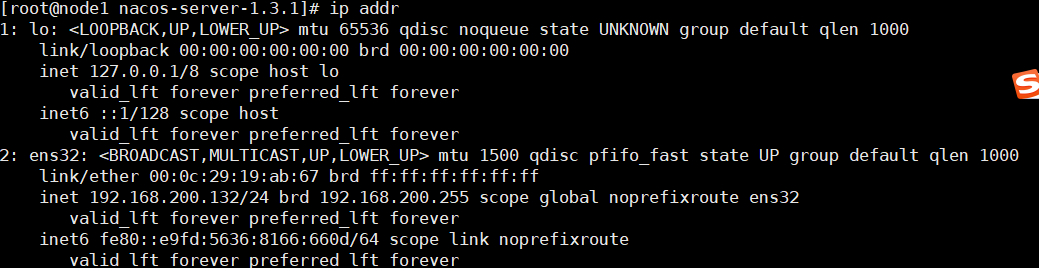

hostname -i查看本机ens33的ip地址

-

配置cluster.conf集群配置

集群配置一定要用上述的ip地址

#cluster server 192.168.200.132:8849 192.168.200.132:8850 192.168.200.132:8851 -

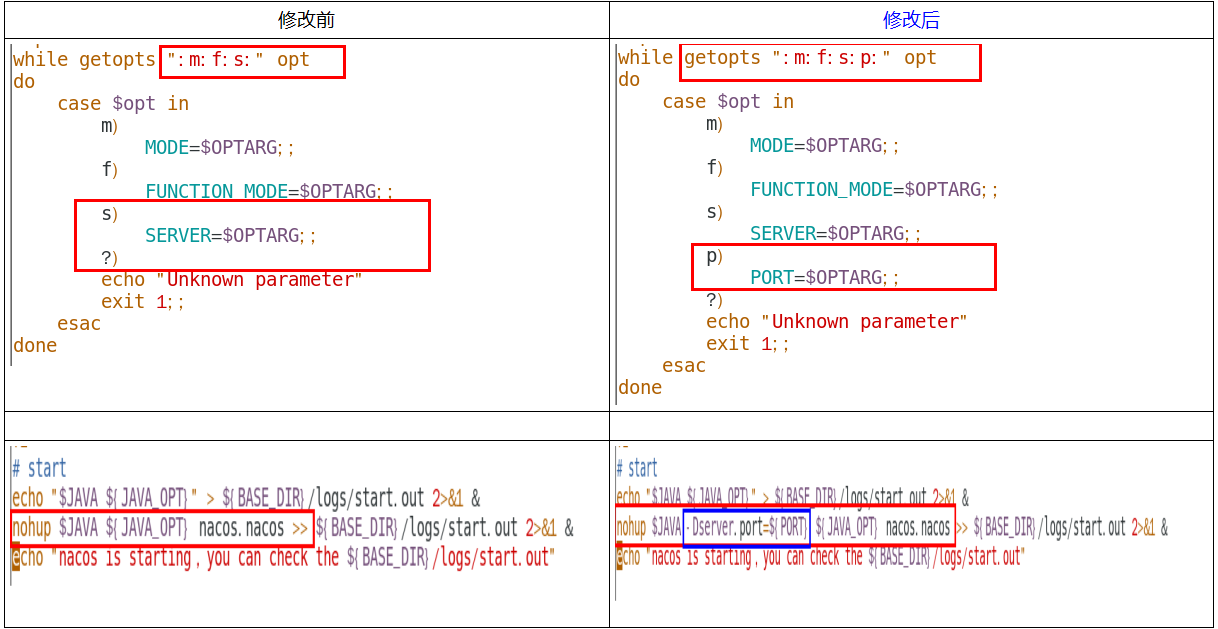

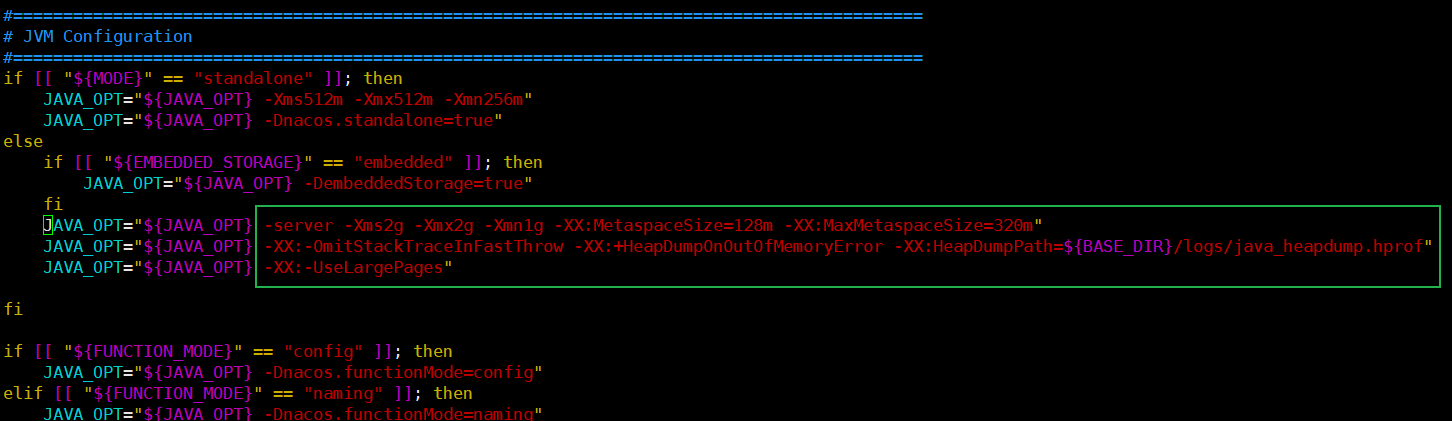

修改nacos启动脚本startup.sh,使其能接受不同的启动端口

就是在启动脚本中写入命令

startup -p 8849【端口号一定要是在cluster.conf中配置过的端口】使用命令

set number或set nu在vim中显示行号,使用命令set nonu[mber]取消显示行号,使用命令set nu!或者set invnu[mber]反转行号【反转行号显示的效果是有行号变成不显示行号,没有行号的变成显示行号】,使用命令set relativenumber设置相对于某一行的行号将反转行号绑定到按键将这行代码

nnoremap <C-N><C-N> :set invnumber<CR>放入vimrc文件中,意思是连按两下<Ctrl-N>便可以反转行号显示【<Ctrl-N>就是CTRL+n的意思,CTRL+N也可以用】,如果要在【insert模式】下反转行号显示,可以使用代码:inoremap <C-N><C-N> <C-O>:set invnumber<CR>【修改启动脚本】

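- Before: if the startup command is passed `-m`, the MODE branch runs; `-f` runs the FUNCTION_MODE branch; `-s` runs the SERVER branch.
- After: `p:` is added to the option string, so passing `-p` runs the new PORT branch, which assigns the option value `$OPTARG` to the variable PORT.
- After: `-Dserver.port=${PORT}` is inserted between `$JAVA` and `${JAVA_OPT}` in the launch line, so the port passed on the command line (previously assigned to PORT) reaches the JVM as `$JAVA -Dserver.port=${PORT}`.
- Nacos itself probably does not provide this because multiple instances on one machine are only for learning; in production the cluster runs on separate servers.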
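To make the option handling above concrete, here is a Python model of the edited script's parsing: Python's `getopt` follows the same POSIX option-string convention as the bash `getopts` builtin used in startup.sh. It is a sketch only; the default values for MODE, FUNCTION_MODE and SERVER and the `java_opt` placeholder are assumptions, not copied from the real script.

```python
import getopt

# Option string of the modified startup.sh: the original "m:f:s:" plus the new "p:".
# The real script parses options with the bash builtin getopts; this only models that logic.
OPTSTRING = "m:f:s:p:"


def build_java_cmd(argv, java="java", java_opt="-Xms512m"):
    """Return (mode, function_mode, server, cmd) for startup.sh-style arguments.

    `java` and `java_opt` stand in for $JAVA and ${JAVA_OPT}; the defaults for
    MODE/FUNCTION_MODE/SERVER below are assumptions of this sketch.
    """
    mode, function_mode, server, port = "cluster", "all", "nacos-server", ""
    opts, _ = getopt.getopt(argv, OPTSTRING)
    for opt, value in opts:
        if opt == "-m":
            mode = value
        elif opt == "-f":
            function_mode = value
        elif opt == "-s":
            server = value
        elif opt == "-p":  # new PORT branch: PORT=$OPTARG
            port = value
    # Like the edited launch line, -Dserver.port=${PORT} is always placed between
    # $JAVA and ${JAVA_OPT}, so starting without -p would pass an empty port.
    cmd = [java, f"-Dserver.port={port}", *java_opt.split()]
    return mode, function_mode, server, cmd


print(build_java_cmd(["-p", "8849"])[3])
```

Because the port flag is inserted unconditionally, `-p` effectively becomes mandatory once the script is edited this way.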

-

-

在nacos根目录下创建plugins/mysql目录,将对应mysql数据库的驱动引入其中,windows下用的jar包就行,经过测试,能正常启动,因为第一次的startup.sh的

-Dserver.port写到上一行去了,所以启动不起来才考虑加入mysql驱动插件的,然后发现startup.sh写错了,改了以后启动正常了,但是是否要加驱动jar包就不知道了,反正加了不会报错 -

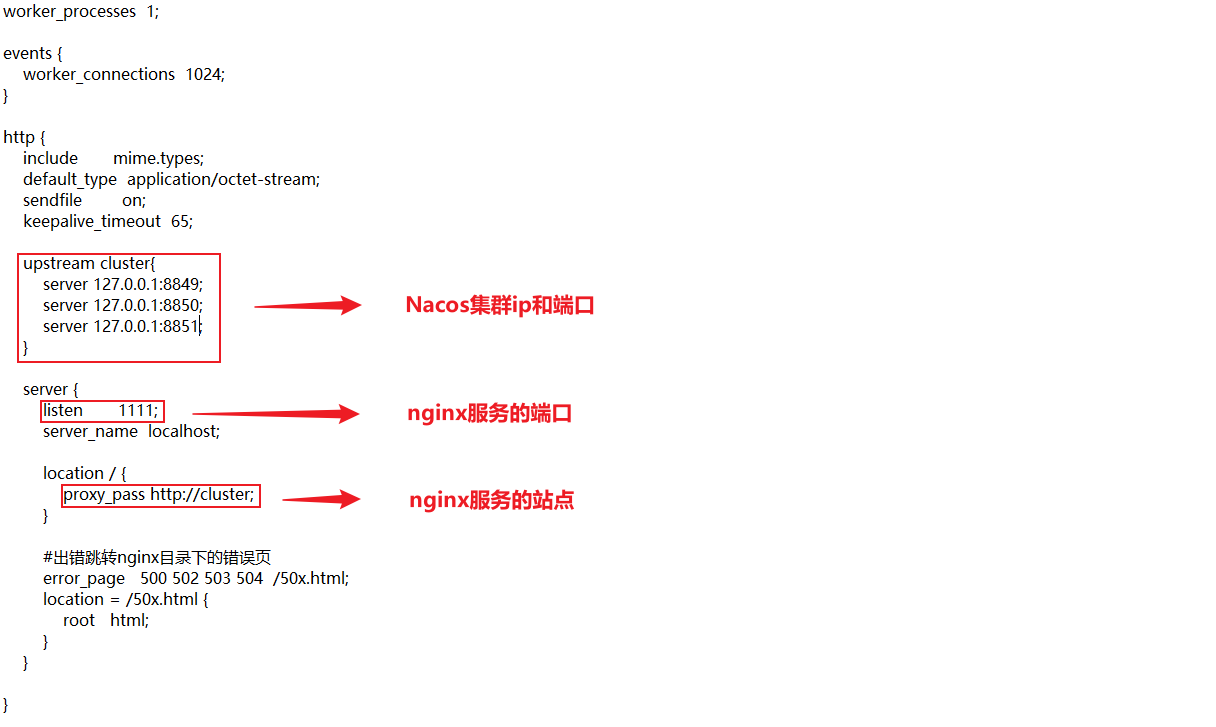

Modify the Nginx configuration so it acts as the load balancer

- Nginx configuration
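The Nginx configuration itself is not included in these notes. As an illustration only, the sketch below renders a minimal upstream/server block from the cluster.conf entries. The listen port 1111 comes from the access URL used in the test below; the upstream name `nacos_cluster` and the block layout are hypothetical.

```python
def parse_cluster_conf(text: str) -> list:
    """Return the ip:port members from cluster.conf, skipping comments and blank lines."""
    members = []
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()
        if line:
            members.append(line)
    return members


def render_upstream(members, listen_port=1111, upstream="nacos_cluster"):
    """Render an http-context snippet that round-robins requests across members.

    proxy_pass without a URI part forwards the original path, so /nacos reaches the backends.
    """
    servers = "\n".join(f"        server {m};" for m in members)
    return (
        f"    upstream {upstream} {{\n{servers}\n    }}\n"
        f"    server {{\n"
        f"        listen {listen_port};\n"
        f"        location / {{\n"
        f"            proxy_pass http://{upstream};\n"
        f"        }}\n"
        f"    }}\n"
    )


CLUSTER_CONF = """#cluster server
192.168.200.132:8849
192.168.200.132:8850
192.168.200.132:8851
"""

print(render_upstream(parse_cluster_conf(CLUSTER_CONF)))
```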

测试

-

启动mysql服务

-

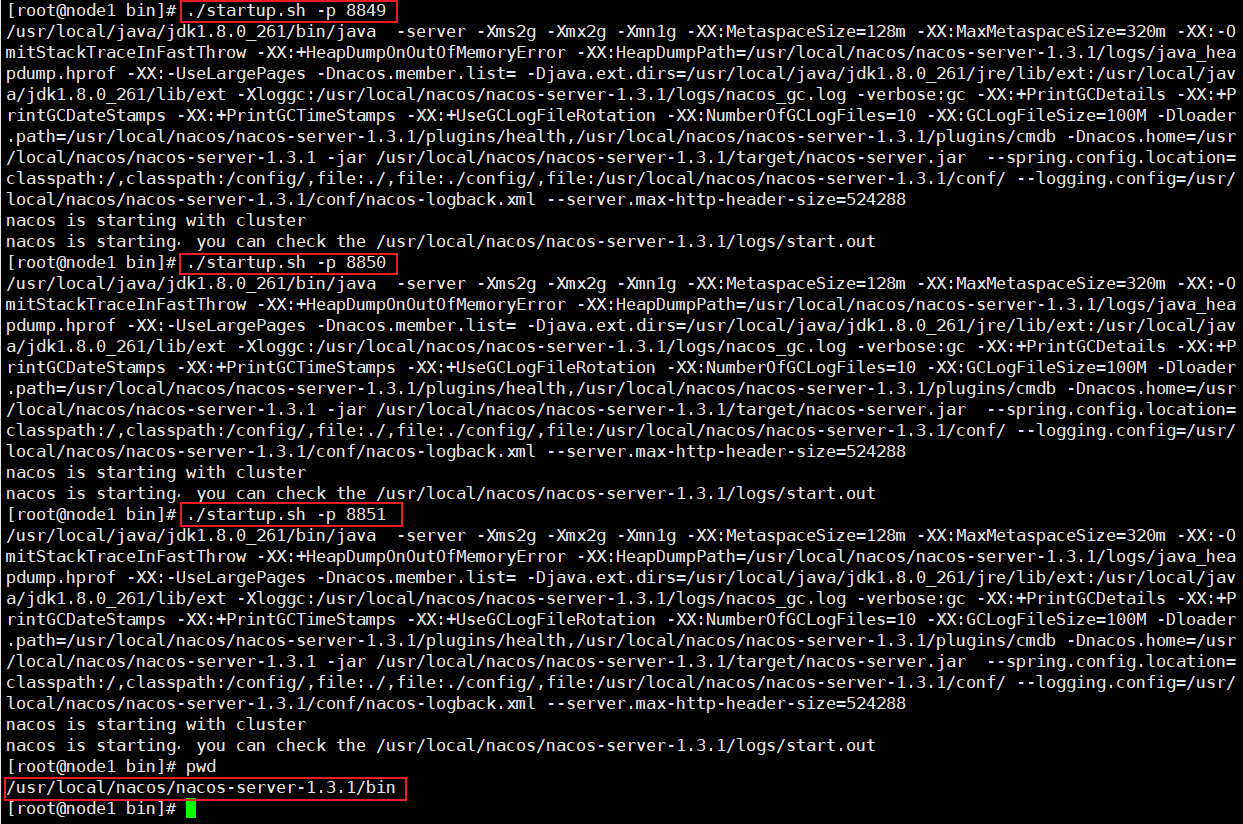

在nacos的bin目录下使用命令

./startup.sh -p 8849和./startup.sh -p 8850和./startup.sh -p 8851在不同端口启动3台nacos服务器启动单台一定要测试是否启动成功,直接在浏览器访问对应端口的服务,启动不了nginx是访问不了的

使用ps命令确认过三台nacos服务都启动了

使用命令

ps -ef|grep nacos|grep -v grep|wc -l可以查看nacos服务器启动的台数

-

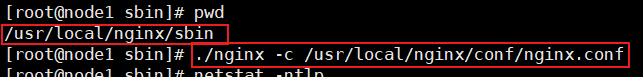

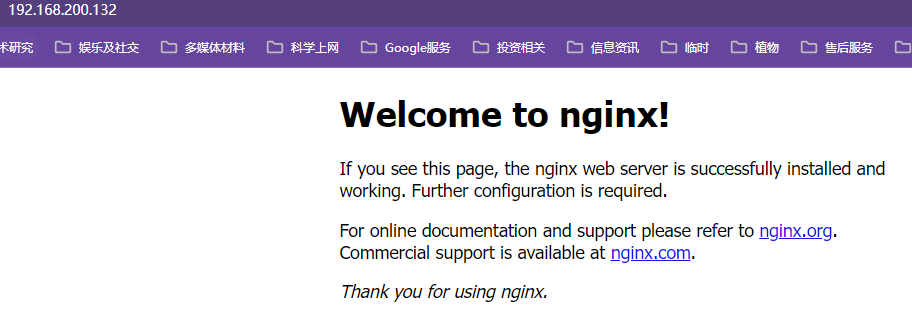

在nginx的sbin目录下使用命令

./nginx -c /usr/local/nginx/conf/nginx.conf启动nginx使用ps命令确认过nginx服务已经启动

-

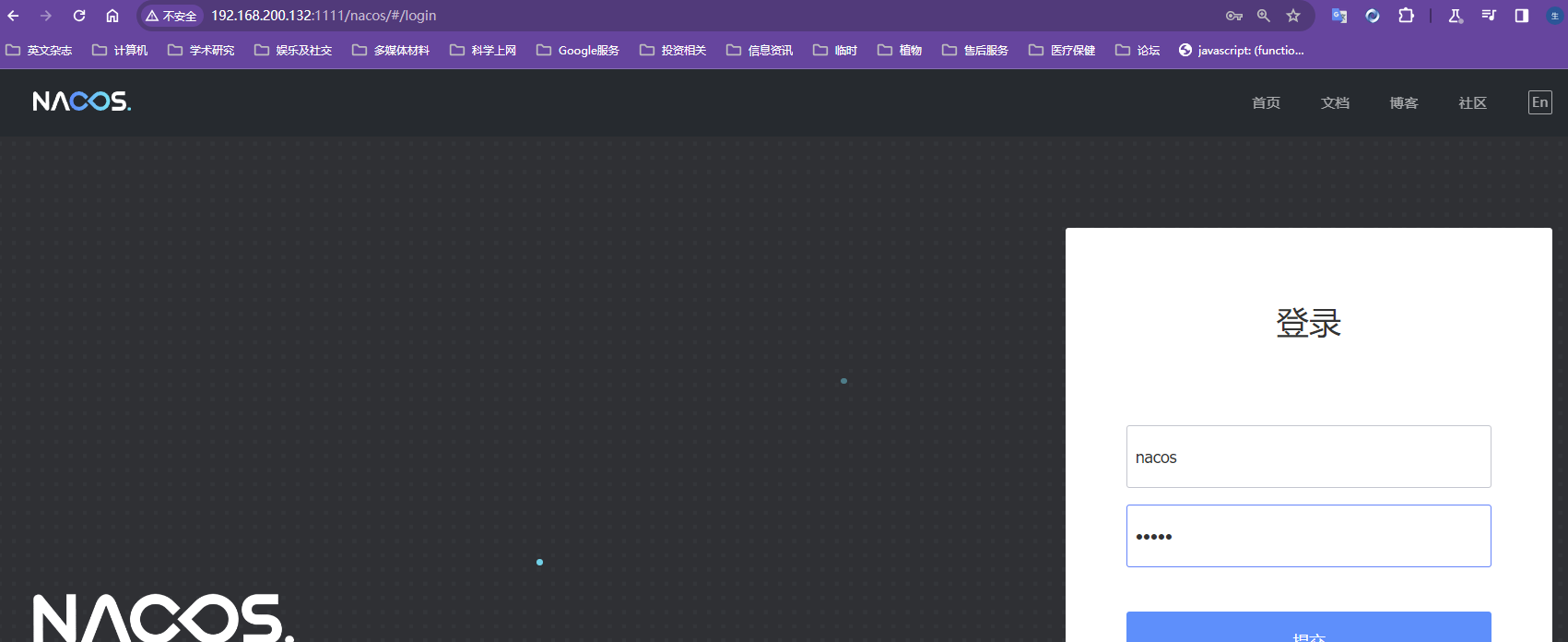

使用请求路径

192.168.200.132:1111/nacos访问nacos集群

-

测试集群是否搭建成功

-

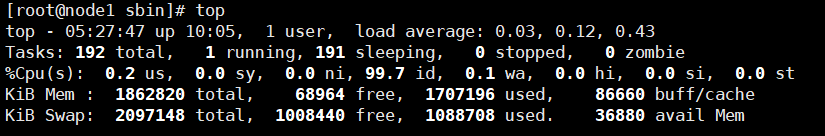

启动后发现只有两台nacos启动了,第三台无法访问

原因是虚拟机内存用完了

【nacos服务状态】

第三台因为内存不够没启动成功,这里有第一个8848端口的nacos是因为配置文件写错了,后来改了,最后一个是因为虚拟机内存不够了,启动不起来

-

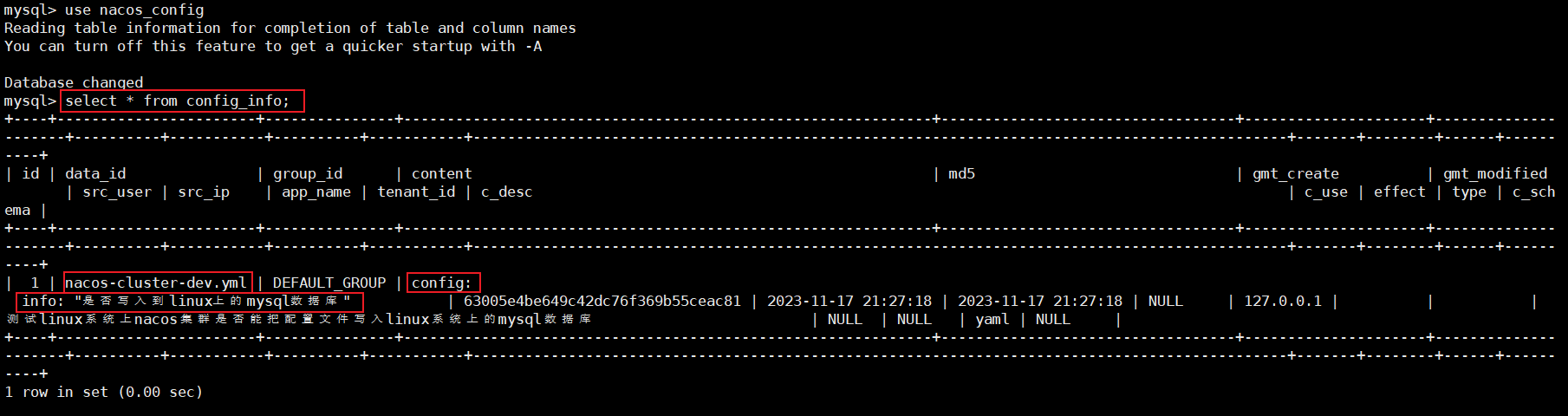

新建配置文件

配置文件会创建在数据库nacos_config的config_info表下,存在服务器没起来也能新建配置文件写入数据库,但是存在nacos服务器起不来,无法向nacos集群注册服务,服务列表是空的

【数据库配置文件存储实况】

文件名、配置文件信息都和新建配置一模一样

-

-

将SpringCloud学习项目的模块23的注册中心迁移到linux系统上由nginx负责负载均衡的nacos集群上来

此时读取的应该是linux上mysql数据库的配置文件

-

23模块配置文件切换注册中心为nacos集群

server: port: 8014 spring: application: name: nacos-provider-payment cloud: nacos: discovery: #server-addr: localhost:8848 #配置Nacos地址 server-addr: 192.168.200.132::1111 #配置Nacos地址 management: endpoints: web: exposure: include: '*' #暴露要监控的所有端点,actuator中的,雷神springboot最后有讲 -

如果nacos注册中心中服务列表显示该模块则证明服务成功注册到nacos集群中

几个要点,

- nacos中的集群管理的节点列表中会显示写在配置文件的所有nacos服务器,只要这个列表中有一台服务器起不起来,比如虚拟机内存不够了服务就启动不起来,此时服务就无法注册到nacos集群中,服务列表不会显示启动的服务【错误配置8848端口的nacos没有上线其他三台正常服务无法注册;更正8848后故意只启动两台,服务无法注册,报错拒绝连接;此时启动第三台,服务成功注册】只要有一台nacos服务器宕机,服务就无法注册到注册中心,这不是违背高可用和配置集群的原则吗?

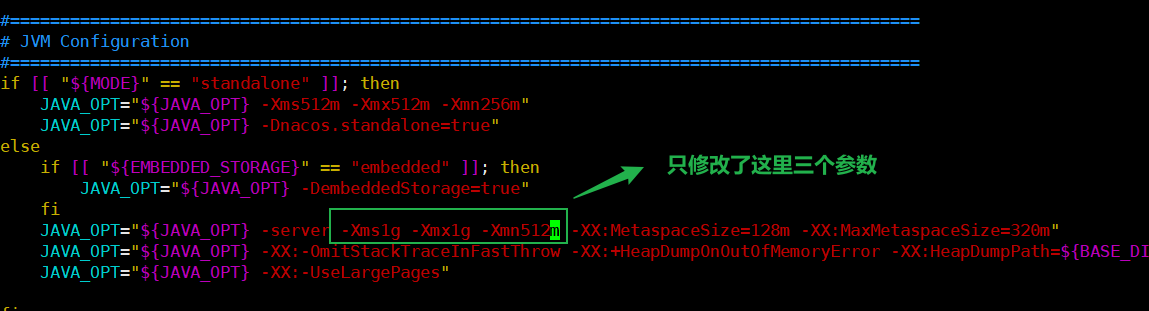

- 可以修改nacos的配置文件来限制nacos的运行内存大小,达到增加集群中服务器数量的目的

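Limit the memory used by Nacos

[Default memory settings]

They are in the JVM options in startup.sh.

[Modified memory settings]

[After the change, all three instances run normally]

The 8848 entry at the top was purely a mistake in the Nacos cluster configuration file (an extra 8848 line); it disappeared once fixed.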

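Installing Nginx

Using nginx 1.20.2.

Installation steps

For learning the basics, the plain open-source release is used.

- Upload the package nginx-1.20.2.tar.gz to /opt/nginx on Linux.
- Run `mkdir /usr/local/nginx` to create /usr/local/nginx.
- Run `tar -zxvf nginx-1.20.2.tar.gz` to extract it in /opt/nginx.
- Enter the extracted nginx source directory and run `./configure [--prefix=/usr/local/nginx]` (`--prefix` is optional and sets the install directory) to check whether the build requirements are met. It reports any missing dependency; the check passes when there are no errors. Required dependencies:
  - `yum install -y gcc` installs the C compiler gcc (`-y` answers yes to every prompt)
  - `yum install -y pcre pcre-devel` installs PCRE (Perl Compatible Regular Expressions), which nginx needs for regular expressions, e.g. in the rewrite module
  - `yum install -y zlib zlib-devel` installs zlib
- Once the check passes, run `make` to compile.
- Run `make install` to install nginx.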

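Verifying the installation

- Run `cd /usr/local/nginx` to enter the install directory and check that the files are there.
- Enter the sbin directory and run `./nginx` to start nginx. It starts several processes (one master process and worker processes).
- Run `systemctl stop firewalld.service` to stop the firewall. The VM is on an internal network that outside traffic cannot reach, so turning the firewall off is not necessarily insecure, although opening only port 80 would be better. It is not needed while learning, and often not in production either, unless the machine is directly exposed to the internet, or the company is large and wants to guard against both outsiders and its own developers, possibly with internal monitoring and logging. Small and medium-sized companies generally do not run firewalls on the internal network, because they have hardware firewalls or cloud security group rules.
- Access nginx at http://192.168.200.132:80 (80 is nginx's default port). Be sure to turn off any VPN/proxy client first, otherwise the page does not load.
- `./nginx -s stop` stops nginx immediately.
- `./nginx -s quit` stops gracefully: nginx first finishes the connections it has already accepted (for example a user's ongoing download) and accepts no new requests in the meantime.
- `./nginx -s reload` reloads the configuration so changes take effect without restarting the whole server. The master process starts new worker processes with the new configuration and shuts the old workers down gracefully: they stop accepting new connections, finish their current requests and exit, while the new workers take over.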
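The `-s` actions above are delivered to the master process as Unix signals (TERM for stop, QUIT for quit, HUP for reload, USR1 for reopening logs), using the PID stored in the pid file. The sketch below sends them from Python; the pid file path is the one used in the service file of these notes, and `dry_run` is only there so the mapping can be checked without touching a running nginx. Unix only.

```python
import os
import signal

# Signals behind `nginx -s <action>`
NGINX_SIGNALS = {
    "stop": signal.SIGTERM,    # fast shutdown
    "quit": signal.SIGQUIT,    # graceful shutdown
    "reload": signal.SIGHUP,   # re-read config, start new workers, retire old ones
    "reopen": signal.SIGUSR1,  # reopen log files
}


def signal_nginx(action: str, pid_file: str = "/usr/local/nginx/logs/nginx.pid",
                 dry_run: bool = False):
    """Send the signal for `nginx -s <action>` to the master process.

    Returns (pid, signal); with dry_run=True nothing is actually sent.
    """
    sig = NGINX_SIGNALS[action]
    with open(pid_file) as f:
        pid = int(f.read().strip())
    if not dry_run:
        os.kill(pid, sig)
    return pid, sig
```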

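- Starting nginx this way is inconvenient: you have to run the executable directly, and after an unexpected reboot you must log in to the console and start it by hand. Installing nginx as a system service makes this much simpler.

Run `vi /usr/lib/systemd/system/nginx.service` to create the service file and paste the text below. Paste in insert mode; pasting in normal mode may drop characters.

`WantedBy=multi-user.target` belongs to the [Install] section. This is a systemd unit file, not a shell script: systemd only treats lines that start with `#` or `;` as comments, so a comment after a value (for example after `WantedBy=`) breaks it and autostart cannot be enabled.

    [Unit]
    Description=nginx - web server
    After=network.target remote-fs.target nss-lookup.target

    [Service]
    Type=forking
    PIDFile=/usr/local/nginx/logs/nginx.pid
    ExecStartPre=/usr/local/nginx/sbin/nginx -t -c /usr/local/nginx/conf/nginx.conf
    ExecStart=/usr/local/nginx/sbin/nginx -c /usr/local/nginx/conf/nginx.conf
    ExecReload=/usr/local/nginx/sbin/nginx -s reload
    ExecStop=/usr/local/nginx/sbin/nginx -s stop
    ExecQuit=/usr/local/nginx/sbin/nginx -s quit
    PrivateTmp=true

    [Install]
    WantedBy=multi-user.target

`ExecQuit` is not a systemd directive; systemd logs an "Unknown lvalue" warning for it and ignores it.

- Run `systemctl daemon-reload` to reload the unit files, then stop the manually started nginx.
- Run `systemctl start nginx.service` to start nginx through the service (stop the manually started nginx first to avoid a conflict).
- Run `systemctl status nginx` to check the service status.
- Run `systemctl enable nginx.service` to start nginx at boot. `WantedBy=multi-user.target` in the [Install] section must be spelled correctly and must not carry a comment, otherwise autostart cannot be enabled.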
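Because a stray trailing comment is exactly what broke `systemctl enable` here, a tiny lint pass over a unit file can catch it before reloading systemd. This is a heuristic sketch, not a systemd tool: it flags any `#` inside a value (a legitimate value containing `#` would also be flagged) and any section name other than the three used in nginx.service above.

```python
import re

# Sections used by the nginx.service file above
KNOWN_SECTIONS = {"Unit", "Service", "Install"}


def lint_unit(text: str) -> list:
    """Return warnings for a systemd unit file.

    systemd treats only lines that start with '#' or ';' as comments, so a '#'
    later in a key=value line becomes part of the value.
    """
    warnings = []
    for no, raw in enumerate(text.splitlines(), start=1):
        line = raw.strip()
        if not line or line[0] in "#;":
            continue
        m = re.fullmatch(r"\[(\w+)\]", line)
        if m:
            if m.group(1) not in KNOWN_SECTIONS:
                warnings.append(f"line {no}: unexpected section [{m.group(1)}]")
            continue
        if "=" not in line:
            warnings.append(f"line {no}: not a key=value line")
        elif "#" in line.split("=", 1)[1]:
            warnings.append(f"line {no}: possible trailing comment in value")
    return warnings
```

Usage: `lint_unit(open("/usr/lib/systemd/system/nginx.service").read())` should return an empty list before running `systemctl daemon-reload`.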

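Installing Sticky for Nginx

Sticky is a third-party module open-sourced by Google.

Sticky usage on nginx: http://nginx.org/en/docs/http/ngx_http_upstream_module.html#sticky (this page documents the `sticky` directive of the commercial NGINX Plus; the third-party module is documented in its own repository).

Tengine already ships a sticky module, which only needs to be compiled in; other builds still need it installed separately. The sticky module is widely used.

Download: the BitBucket repository, which also explains how to use sticky, or GitHub. The GitHub copy belongs to a third-party author; Google's main open-source home for it is BitBucket.

Download Sticky from BitBucket: it hosts the version migrated by Google and is the one most people use. Click Downloads → Tags and choose a version (it has not been updated for many years, but its features are fairly complete; several archive formats are offered, choose .gz for Linux), then click .gz to download. Sticky is installed on the Nginx load balancer, i.e. machine 131.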

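- Upload nginx-goodies-nginx-sticky-module-ng-c78b7dd79d0d.tar.gz to /opt/nginx on Linux.
- Run `tar -zxvf nginx-goodies-nginx-sticky-module-ng-c78b7dd79d0d.tar.gz` to extract it into the current directory.

In the nginx source directory /opt/nginx/nginx-1.20.2, run `./configure --prefix=/usr/local/nginx/ --add-module=/opt/nginx/nginx-goodies-nginx-sticky-module-ng-c78b7dd79d0d` to check the environment and configure a build that installs into /usr/local/nginx. Recompiling produces a completely new nginx; if the old nginx has a lot of configuration, back it all up and swap it in after compiling.

`--add-module` adds a third-party module. Modules that ship with nginx are enabled with `--with-...` options (for example `--with-http_gzip_static_module`), because they are already part of the official source package.

In the nginx source directory /opt/nginx/nginx-1.20.2, run `make` to compile. If the build fails inside sticky, it is because sticky is old and its source needs a patch: in the extracted sticky directory, add the following lines at line 12 of `ngx_http_sticky_misc.h`, then rerun `./configure` and `make`:

    #include <openssl/sha.h>
    #include <openssl/md5.h>

The sticky module needs OpenSSL. Without it, `make` also fails because `openssl/sha.h` is missing; install it with `yum install -y openssl-devel`, then rerun `./configure` and `make`. If there are no errors, the build succeeded.

- In the nginx source directory I ran `make upgrade` to check the newly compiled build (trying the new nginx without replacing the old one) instead of replacing the old nginx right away. Note that in the stock nginx Makefile, the `upgrade` target tests the configuration with the installed binary and then hot-swaps the running master process (USR2, then QUIT to the old master), so it only does something useful after the new binary has been copied into place. The `objs` directory in the source tree holds the build output, and `objs/nginx` is the newly compiled executable. To upgrade smoothly without breaking the existing configuration, replace the executable in the install directory with `objs/nginx`, but always back up the original first.
- Run `mv /usr/local/nginx/sbin/nginx /usr/local/nginx/sbin/nginx.old` to back up the old executable, then in the objs directory run `cp nginx /usr/local/nginx/sbin/` to copy the new `objs/nginx` into /usr/local/nginx/sbin/.
- Run `./nginx -V` to show the nginx version and its configure arguments.
- Run `systemctl start nginx` and open nginx in a browser; if it responds normally, the upgrade with the third-party module succeeded.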

Nginx安装Brolti

安装ngx_brotli和brotli稍微麻烦一点,因为有子项目依赖

-

从下载地址

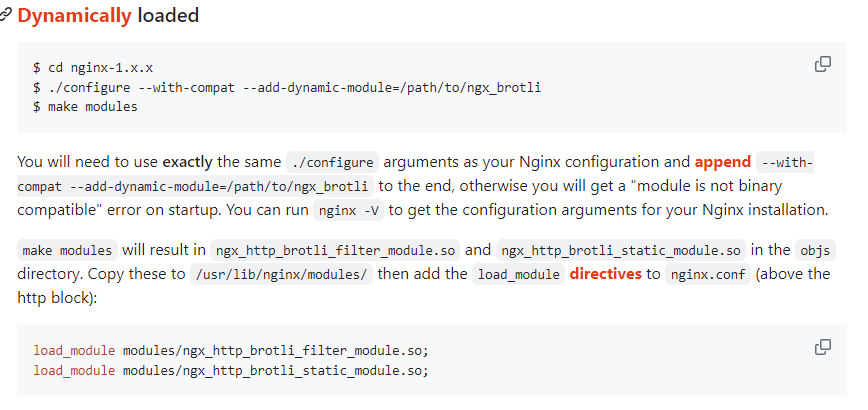

https://github.com/google/ngx_brotli下载brolti在nginx上的插件ngx_brotlingx_brotli下载地址有文档,配置使用官方推荐的动态模块的方式,动态加载模块是nginx在1.9版本以后才支持的,禁用模块无需重新进行编译,直接在配置文件中进行配置要使用的模块就可以直接使用了【就是安装是安装,需要使用要在配置文件进行配置,不配置对应的模块就会禁用】

ngx_brotli下载点击release下载,只能下载到源码,需要自己在本地进行编译和安装;或者从git直接拉取到本地进行编译和安装;稳定版本现在只有1.0.0,linux系统下载tar.gz版本

-

从下载地址

https://codeload.github.com/google/brotli/tar.gz/refs/tags/v1.0.9下载brotli单独的算法仓库地址:https://github.com/google/brotli,也可以直接从对应仓库的release下载tar.gz的源码压缩包

-

使用命令

tar -zxvf ngx_brotli-1.0.0rc.tar.gz解压ngx_brotli的压缩包 -

使用命令

tar -zxvf brotli-1.0.9.tar.gz解压brolti的压缩包 -

进入brolti的解压目录,使用命令

mv ./* /opt/nginx/ngx_brotli-1.0.0rc/deps/brotli将brotli算法的压缩包移动到ngx_brotli解压目录下的ngx_brotli-1.0.0rc/deps/brolti目录【注意不要 把解压目录brotli-1.0.9也拷贝过去了,只拷贝该目录下的文件,目录层级不对预编译检查会报错】 -

在nginx的解压目录使用命令

./configure --with-compat --add-dynamic-module=/opt/nginx/ngx_brotli-1.0.0rc --prefix=/usr/local/nginx/ --add-module=/opt/nginx/nginx-goodies-nginx-sticky-module-ng-c78b7dd79d0d --with-http_gzip_static_module --with-http_gunzip_module以动态化模块的方式【传统的方式模块无法禁用】对ngx_brolti进行编译,传统的编译可以在./configure中使用--add-dynamic-module=brotli目录 -

在nginx的解压目录使用命令

make进行编译没报错就是成功的,经测试没问题

-

使用命令

mkdir /usr/local/nginx/modules在nginx安装目录下创建一个modules模块专门来放置第三方模块,默认是没有的 -

在nginx解压目录进入

objs目录,使用命令cp ngx_http_brotli_filter_module.so /usr/local/nginx/modules/以及cp ngx_http_brotli_static_module.so /usr/local/nginx/modules/将模块ngx_http_brotli_filter_module.so和ngx_http_brotli_static_module.so拷贝到nginx安装目录的modules目录下,以后就可以在nginx配置文件动态的加载brotli模块了 -

停止nginx的运行,使用命令

mv /usr/local/nginx/sbin/nginx /usr/local/nginx/sbin/nginx.old3,使用命令cp nginx /usr/local/nginx/sbin/将objs/nginx.sh复制到nginx的sbin目录只要进行了编译就要将新的主程序nginx拷贝到nginx的安装目录,否则即使之前的步骤都没问题模块也是用不了的

每次预编译都要带上之前添加的完整的模块,否则可能由于对某些模块有配置但是没有安装相应的模块,会导致服务启动不起来

可以在拷贝完成后再重启nginx服务

能成功重启nginx就是正常的
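Copying the .so files is only half of the dynamic-module setup: nginx loads them through `load_module` directives at the top level (main context) of nginx.conf, before the `http {}` block, with paths relative to the install prefix. The sketch below generates those lines for every module in a directory; it does not add the `brotli` directives that actually enable compression, which belong in the `http`/`server` blocks.

```python
from pathlib import Path


def load_module_lines(modules_dir: str, prefix: str = "modules") -> list:
    """Build `load_module` directives for every .so file in modules_dir.

    Paths are relative to the nginx prefix (/usr/local/nginx here),
    e.g. load_module modules/ngx_http_brotli_filter_module.so;
    """
    return [f"load_module {prefix}/{p.name};"
            for p in sorted(Path(modules_dir).glob("*.so"))]
```

Usage: `print("\n".join(load_module_lines("/usr/local/nginx/modules")))` prints the lines to paste at the top of nginx.conf.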

Installing Concat for Nginx

Project page: https://github.com/alibaba/nginx-http-concat

Introduction to Concat on the nginx site: https://www.nginx.com/resources/wiki/modules/concat/

The Tengine site also describes it; the module was first released with Tengine. Tengine has been donated to the Apache open-source organization. Tengine is based on open-source nginx but modifies it heavily at the source level, adding more than just modules and features, so most Tengine modules cannot be used directly in nginx; Concat, however, can. Concat has not been updated for a long time, but it is simple, a single C source file. There are no release builds, so install it with `git clone`, or click Code → Download ZIP to download nginx-http-concat-master.zip.

- Copy nginx-http-concat-master.zip to /opt/nginx. Pitfall: the files from git and from the direct download are named differently; the git-cloned directory has no `-master` suffix.

      [root@nginx1 nginx]# unzip nginx-http-concat-master.zip
      Archive:  nginx-http-concat-master.zip
      b8d3e7ec511724a6900ba3915df6b504337891a9
         creating: nginx-http-concat-master/
        inflating: nginx-http-concat-master/README.md
        inflating: nginx-http-concat-master/config
        inflating: nginx-http-concat-master/ngx_http_concat_module.c

In the nginx source directory, run the following to configure, then `make` to compile. For continuity with the nginx course it adds many other modules; choose what you need, the configure command is not fixed.

    ./configure --with-compat --add-dynamic-module=/opt/nginx/ngx_brotli-1.0.0rc --prefix=/usr/local/nginx/ --add-module=/opt/nginx/nginx-goodies-nginx-sticky-module-ng-c78b7dd79d0d --with-http_gzip_static_module --with-http_gunzip_module --add-module=/opt/nginx/nginx-http-concat-master

Running `./nginx -V` on `objs/nginx` shows the nginx version, the gcc version and the configured modules:

    [root@nginx1 objs]# ./nginx -V
    nginx version: nginx/1.20.2
    built by gcc 8.3.1 20190311 (Red Hat 8.3.1-3) (GCC)
    configure arguments: --with-compat --add-dynamic-module=/opt/nginx/ngx_brotli-1.0.0rc --prefix=/usr/local/nginx/ --add-module=/opt/nginx/nginx-goodies-nginx-sticky-module-ng-c78b7dd79d0d --with-http_gzip_static_module --with-http_gunzip_module --add-module=/opt/nginx/nginx-http-concat-master

- Run `systemctl stop nginx`, then in the objs directory run `cp nginx /usr/local/nginx/sbin/` to replace the old executable with the newly compiled one, and restart nginx. Generally the modules, third-party ones included, do not conflict with each other.

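Installing GeoIP2 for Nginx

GeoIP is a component developed by a commercial company (MaxMind) and is now at version 2. Besides nginx it can be used from Java, Python and other languages, and the company offers a cloud API. It also publishes its IP-to-region database for free; the free edition is slightly less accurate than the commercial one. GeoIP used to be widely used when DNS-based routing was less capable, but today every major cloud platform offers powerful DNS resolution, and building your own DNS resolution system is complicated to configure and deploy, so it is now provided by cloud vendors. As a result GeoIP has few use cases today, mostly blocking certain requests: a site not open to a given country, a site open to only one city, or different sites shown to users from different regions (also rare now). The most common use is restricting some resources to users from a particular region.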
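Conceptually, a GeoIP database maps IP ranges (CIDR prefixes) to regions, and blocking means looking up the client's TCP source address and refusing certain regions. The sketch below illustrates that lookup with Python's `ipaddress` module and a tiny, made-up table built from documentation-only ranges (RFC 5737) and placeholder country codes; it is not MaxMind's API or file format (real .mmdb files are binary databases read through libmaxminddb).

```python
import ipaddress

# Hypothetical IP-range -> country table (documentation ranges, placeholder codes)
TABLE = {
    ipaddress.ip_network("192.0.2.0/24"): "AA",
    ipaddress.ip_network("198.51.100.0/24"): "BB",
    ipaddress.ip_network("198.51.100.128/25"): "CC",  # more specific range wins
}


def country_of(ip: str):
    """Longest-prefix match of ip against TABLE; None if the IP is unknown."""
    addr = ipaddress.ip_address(ip)
    matches = [net for net in TABLE if addr in net]
    if not matches:
        return None
    return TABLE[max(matches, key=lambda n: n.prefixlen)]


def allowed(ip: str, blocked=frozenset({"BB"})) -> bool:
    """Reject clients whose country code is in `blocked` (e.g. answer 403 in nginx)."""
    return country_of(ip) not in blocked
```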

-

GEOIP2的安装

tcp连接IP是无法造假的,因为tcp造假服务端数据就无法正常响应给用户

GEOIP的安装比较麻烦,需要下载3个资源

GEOIP的Nginx模块配置官方文档:http://nginx.org/en/docs/http/ngx_http_geoip_module.html#geoip_proxy

-

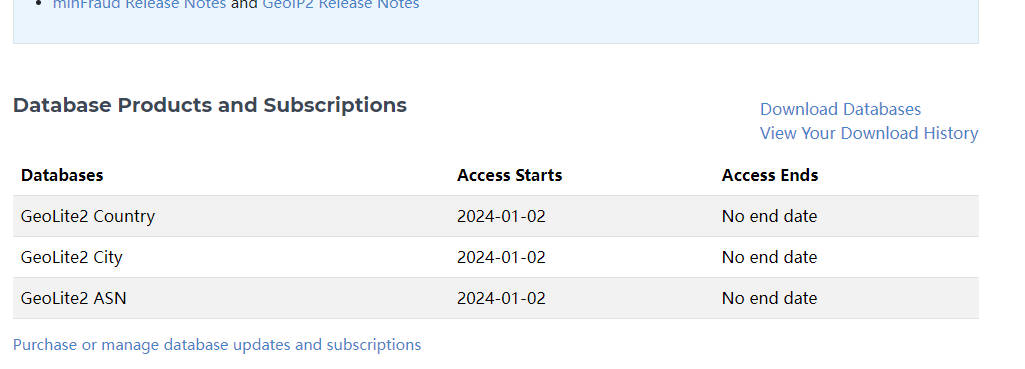

需要前往GEOIP的官网下载ip数据库

要下载需要注册并登录,GEOLite2是免费版的库,GEO2是付费版的库,而且他的库更新频率特别高,线上使用也要频繁的去更新

登录使用邮箱和密码

【下载选项】

country只能检索到国家,City能够检索到城市,ASN能检索到更详细一点的区域;测试下载country版本的就行,数据库越大,检索的效率太低,下载gzip版本;csv版本可以直接导入数据库如mysql或者oracle

测试导入云服务器上,这样能解析我们自己的ip,在自己电脑上部署无法进行访问,导入

/opt/nginx目录下

-

前往GEOIP的官方git下载GEOIP模块的相关依赖:https://github.com/maxmind/libmaxminddb

下载1.6.0版本libmaxminddb-1.6.0.tar.gz

安装需要gcc,使用命令

yum install -y gcc安装gcc,解压后在安装目录执行./confugure,使用make进行编译,使用make install进行安装;使用命令echo /usr/local/lib >> /etc/ld.so.conf.d/local.conf配置动态连接库【相当于在系统的动态连接库下额外增加一个目录】,使用命令ldconfig刷新系统的动态链接库 -

前往GEOIP的官方git下载GEOIP的对应Nginx上的模块:https://github.com/leev/ngx_http_geoip2_module

下载3.3版本ngx_http_geoip2_module-3.3.tar.gz

安装GEOIP需要pcre-devel,使用命令

yum install -y pcre pcre-devel安装;安装GEOIP需要zlib,使用命令

yum install -y zlib-devel安装;解压缩GEOIP模块的压缩包,进入nginx的解压目录使用命令

./configure --prefix=/usr/local/nginx/ --add-module=/opt/nginx/ngx_http_geoip2_module进行静态安装【动态安装更好,静态安装只是安装更省事】,使用make进行编译,使用make install进行安装【此时已经安装完毕,只需要在配置文件中进行配置,我云服务器上的nginx是通过oneinstack.com安装的,不知道安装包在哪儿,且那个nginx正在跑博客,这里就不实验了,后续记录老师的演示】

Installing Purger (ngx_cache_purge) for Nginx

- Upload ngx_cache_purge-2.3.tar.gz to /opt/nginx and run `tar -zxvf ngx_cache_purge-2.3.tar.gz` to extract it.

In the nginx source directory run the following to configure; if there are no errors, run `make` to compile. For convenience it includes many other modules; add or remove modules as needed.

    ./configure --with-compat --add-dynamic-module=/opt/nginx/ngx_brotli-1.0.0rc --prefix=/usr/local/nginx/ --add-module=/opt/nginx/nginx-goodies-nginx-sticky-module-ng-c78b7dd79d0d --add-module=/opt/nginx/ngx_cache_purge-2.3 --with-http_gzip_static_module --with-http_gunzip_module --add-module=/opt/nginx/nginx-http-concat-master

- Run `mv /usr/local/nginx/sbin/nginx /usr/local/nginx/sbin/nginx.old4` to back up the old executable, and `cp /opt/nginx/nginx-1.20.2/objs/nginx /usr/local/nginx/sbin/nginx` to copy the new one into the install directory.

Configure purging in nginx

A custom `proxy_cache_key $uri;` is used here. The default is `$scheme$proxy_host$request_uri`, which produces a key such as `httpstickytest/page.css` (the variables are simply concatenated); `$proxy_host` is the upstream name, so effectively the host part of proxy_pass. This key must match what the purge location passes to `proxy_cache_purge` (here `$1`, the first capture group of the location regex), otherwise the cached file cannot be found and deleted.

The benefit of `proxy_cache_key $uri;` is that it does not distinguish whether the resource was requested over http or https, or through which domain name; only the resource itself distinguishes keys. With the default key, the same response reached via different protocols or domains can be cached as several files under different keys; using the URI as the key avoids that, so one response produces one cache file. Many other variables can be used in proxy_cache_key, and the module's GitHub page gives examples (which variables are available was not covered in the course), e.g. `proxy_cache_key $host$uri$is_args$args;`, where `$is_args` is `?` if the request has arguments and empty otherwise. Whenever you change proxy_cache_key, the last argument of proxy_cache_purge must take the same form.

    worker_processes  1;
    events {
        worker_connections  1024;
    }
    http {
        include       mime.types;
        default_type  application/octet-stream;
        sendfile      on;

        upstream stickytest {
            server 192.168.200.135:8080;
        }

        proxy_cache_path /ngx_tmp levels=1:2 keys_zone=test_cache:100m inactive=1d max_size=10g;

        server {
            listen       80;
            server_name  localhost;

            # Extra location doing the reverse of proxy_cache: requesting a resource URL prefixed
            # with /purge deletes the cached file of that resource
            location ~/purge(/.*) {
                # Purge from cache zone test_cache (keys_zone in proxy_cache_path);
                # $1 is the request URI without the /purge prefix
                proxy_cache_purge test_cache $1;
            }

            location / {
                proxy_cache test_cache;
                add_header Nginx-Cache "$upstream_cache_status";
                proxy_cache_valid 23h;
                # Custom cache key: the request URI
                proxy_cache_key $uri;
                proxy_pass http://stickytest;
            }

            error_page   500 502 503 504  /50x.html;
            location = /50x.html {
                root   html;
            }
        }
    }
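To see why the purge key must match `proxy_cache_key`, it helps to know where nginx puts a cached response: the file is named after the MD5 of the key, and with `levels=1:2` the first directory level is the last hex character and the second level the two characters before it. The sketch below computes that path for the `/ngx_tmp` zone configured above; the two keys compared are the `$uri` key and the default-style key from the explanation.

```python
import hashlib
from pathlib import PurePosixPath


def cache_file_path(cache_root: str, key: str, levels=(1, 2)) -> PurePosixPath:
    """Path where nginx stores the cached response for `key`.

    The file name is the MD5 hex digest of the key; each level takes the next
    `width` characters from the end of the digest (levels=1:2 -> c/29/...).
    """
    digest = hashlib.md5(key.encode()).hexdigest()
    parts, end = [], len(digest)
    for width in levels:
        parts.append(digest[end - width:end])
        end -= width
    return PurePosixPath(cache_root, *parts, digest)


print(cache_file_path("/ngx_tmp", "/page.css"))                # key from proxy_cache_key $uri
print(cache_file_path("/ngx_tmp", "httpstickytest/page.css"))  # default-style key
```

The two keys land in different files, so a purge request computing one key cannot delete a file cached under the other.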

Installing the Redis connection module

- Download redis2-nginx-module from https://github.com/openresty/redis2-nginx-module/tags; version 0.15 is used here to match the course. Upload it to /opt/nginx on Linux.
- Run `tar -zxvf redis2-nginx-module-0.15.tar.gz` to extract it.

In the nginx source directory run `./configure --prefix=/usr/local/nginx/ --add-module=/opt/nginx/redis2-nginx-module-0.15` to configure, then `make`. Do not use this command as-is: adapt it to the modules you have actually added. What I actually used was:

    ./configure --with-compat --add-dynamic-module=/opt/nginx/ngx_brotli-1.0.0rc --prefix=/usr/local/nginx/ --add-module=/opt/nginx/redis2-nginx-module-0.15 --add-module=/opt/nginx/nginx-goodies-nginx-sticky-module-ng-c78b7dd79d0d --add-module=/opt/nginx/ngx_cache_purge-2.3 --with-http_gzip_static_module --with-http_gunzip_module --add-module=/opt/nginx/nginx-http-concat-master

- Run `mv /usr/local/nginx/sbin/nginx /usr/local/nginx/sbin/nginx.old5` to back up the old executable, then in the nginx source directory run `cp objs/nginx /usr/local/nginx/sbin/` to copy the newly compiled nginx into the install directory.

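Installing Nginx with Docker

- Run `docker run -p 80:80 --name nginx -d nginx:1.10` to start a throwaway nginx instance; this step only serves to copy out nginx's configuration. There is no need to pull the image separately: if it is not present, docker pulls it automatically first.
- In /malldata run `docker container cp nginx:/etc/nginx .` to copy the configuration files out of the container into the current directory. The copied directory is named nginx; /etc/nginx is effectively nginx's conf directory.
- Run `docker stop nginx` and `docker rm <container-id>` to stop the container and remove it.
- In /malldata run `mv nginx conf` to rename the nginx directory to conf, `mkdir nginx` to create an nginx directory, and `mv conf nginx/` to move conf into it.
- Run `mkdir /malldata/nginx/logs` and `mkdir /malldata/nginx/html` to create the mount directories for logs and static files, and `chmod -R 777 /malldata/nginx` to make the nginx directory readable, writable and executable. This is not strictly necessary, because running a container with mounts creates missing mount directories automatically; they were created in advance just to be safe.

Create the new nginx container with the command below, which mounts all static files to /malldata/nginx/html, all log files to /malldata/nginx/logs, and all configuration files to /malldata/nginx/conf:

    docker run -p 80:80 --privileged=true --name nginx \
      -v /malldata/nginx/html:/usr/share/nginx/html \
      -v /malldata/nginx/logs:/var/log/nginx \
      -v /malldata/nginx/conf:/etc/nginx \
      -d nginx:1.10

- Run `docker update --restart=always nginx` so the container starts automatically with docker at boot.
- Put an index page in the html mount directory and open the server's IP in a browser to verify that the container works.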

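Installing keepalived

Building from source

Download: https://www.keepalived.org/download.html#

- Extract the package and run `./configure` in the extracted directory to check whether the build environment is complete. If it reports the error below, install the OpenSSL development package with `yum install openssl-devel`:

      configure: error:
        !!! OpenSSL is not properly installed on your system. !!!
        !!! Can not include OpenSSL headers files.            !!!

- I have built it from source before; the details, including the install-location option for the command above, will be added later.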

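Installation steps

- Run `yum install -y keepalived` to install keepalived. It must be installed on every machine that takes part in the health monitoring.
- If the installation reports the missing dependency `Requires: libmysqlclient.so.18()(64bit)`, run `wget https://dev.mysql.com/get/Downloads/MySQL-5.7/mysql-community-libs-compat-5.7.25-1.el7.x86_64.rpm` and `rpm -ivh mysql-community-libs-compat-5.7.25-1.el7.x86_64.rpm` to install it (it may need to match the MySQL version on the machine; mine matched and worked), then run `yum install -y keepalived` again.
- The keepalived configuration file is /etc/keepalived/keepalived.conf; edit it with `vim /etc/keepalived/keepalived.conf`. Below is the keepalived configuration used to monitor whether the nginx servers are alive.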

[Default keepalived configuration]

    ! Configuration File for keepalived

    global_defs {
       # This block sends e-mail notifications after a machine goes down; not very useful
       notification_email {
         acassen@firewall.loc
         failover@firewall.loc
         sysadmin@firewall.loc
       }
       notification_email_from Alexandre.Cassen@firewall.loc
       smtp_server 192.168.200.1
       smtp_connect_timeout 30
       # In global_defs only router_id matters; everything else can be deleted
       router_id LVS_DEVEL
       vrrp_skip_check_adv_addr
       vrrp_strict
       vrrp_garp_interval 0
       vrrp_gna_interval 0
    }

    vrrp_instance VI_1 {
        state MASTER
        interface eth0
        virtual_router_id 51
        priority 100
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            192.168.200.16
            192.168.200.17
            192.168.200.18
        }
    }

    # To only check whether the nginx servers are alive, ignore everything from here on: all virtual_server blocks can be deleted
    virtual_server 192.168.200.100 443 {
        delay_loop 6
        lb_algo rr
        lb_kind NAT
        persistence_timeout 50
        protocol TCP

        real_server 192.168.201.100 443 {
            weight 1
            SSL_GET {
                url {
                  path /
                  digest ff20ad2481f97b1754ef3e12ecd3a9cc
                }
                url {
                  path /mrtg/
                  digest 9b3a0c85a887a256d6939da88aabd8cd
                }
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
            }
        }
    }

    virtual_server 10.10.10.2 1358 {
        delay_loop 6
        lb_algo rr
        lb_kind NAT
        persistence_timeout 50
        protocol TCP

        sorry_server 192.168.200.200 1358

        real_server 192.168.200.2 1358 {
            weight 1
            HTTP_GET {
                url {
                  path /testurl/test.jsp
                  digest 640205b7b0fc66c1ea91c463fac6334d
                }
                url {
                  path /testurl2/test.jsp
                  digest 640205b7b0fc66c1ea91c463fac6334d
                }
                url {
                  path /testurl3/test.jsp
                  digest 640205b7b0fc66c1ea91c463fac6334d
                }
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
            }
        }

        real_server 192.168.200.3 1358 {
            weight 1
            HTTP_GET {
                url {
                  path /testurl/test.jsp
                  digest 640205b7b0fc66c1ea91c463fac6334c
                }
                url {
                  path /testurl2/test.jsp
                  digest 640205b7b0fc66c1ea91c463fac6334c
                }
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
            }
        }
    }

    virtual_server 10.10.10.3 1358 {
        delay_loop 3
        lb_algo rr
        lb_kind NAT
        persistence_timeout 50
        protocol TCP

        real_server 192.168.200.4 1358 {
            weight 1
            HTTP_GET {
                url {
                  path /testurl/test.jsp
                  digest 640205b7b0fc66c1ea91c463fac6334d
                }
                url {
                  path /testurl2/test.jsp
                  digest 640205b7b0fc66c1ea91c463fac6334d
                }
                url {
                  path /testurl3/test.jsp
                  digest 640205b7b0fc66c1ea91c463fac6334d
                }
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
            }
        }

        real_server 192.168.200.5 1358 {
            weight 1
            HTTP_GET {
                url {
                  path /testurl/test.jsp
                  digest 640205b7b0fc66c1ea91c463fac6334d
                }
                url {
                  path /testurl2/test.jsp
                  digest 640205b7b0fc66c1ea91c463fac6334d
                }
                url {
                  path /testurl3/test.jsp
                  digest 640205b7b0fc66c1ea91c463fac6334d
                }
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
            }
        }
    }

[keepalived configuration for the nginx proxy server (master)]

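    ! Configuration File for keepalived

    global_defs {
       # In global_defs only router_id matters, the rest can be deleted.
       # It is a custom name for this machine (any name works); this machine's IP ends in 131
       router_id nginx131
    }

    # VRRP (Virtual Router Redundancy Protocol) is the protocol keepalived uses on the LAN;
    # atlisheng is the instance name, also custom
    vrrp_instance atlisheng {
        # State of this machine: this one is the master
        state MASTER
        # Must match the name of this machine's network interface, here ens32
        interface ens32
        # Can stay at the default, but must be the same within one group
        virtual_router_id 51
        # Election priority: the highest priority becomes master. Keepalived elects preemptively:
        # as soon as a node with a higher priority joins, it becomes master
        priority 100
        # Advertisement (check) interval, in seconds
        advert_int 1
        # Credentials of one keepalived group on the LAN. Several groups of machines may run keepalived
        # on the same LAN, so this proves that machines belong to one group. Keep it identical within a group,
        # so that when one machine fails the virtual IP only floats within its own group, never to another group
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        # Virtual IPs: several can be listed, but one is usually enough. Here it is 192.168.200.200;
        # users access the virtual IP instead of a real IP address
        virtual_ipaddress {
            192.168.200.200
            #192.168.200.17
            #192.168.200.18
        }
    }

[keepalived configuration of the backup machine]

Note: within one group, the instance name, virtual_router_id and authentication must be identical.

Note: the backup's priority must be lower than the master's, and its state must be BACKUP.

On the backup machine, `ip addr` does not show the virtual IP.

    ! Configuration File for keepalived

    global_defs {
       router_id nginx132
    }

    vrrp_instance atlisheng {
        state BACKUP
        interface ens32
        virtual_router_id 51
        priority 50
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            192.168.200.200
        }
    }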
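The master/backup behaviour configured above boils down to "the live node with the highest priority holds the virtual IP", with preemption when a higher-priority node returns. The sketch below models just that rule for the two machines in these notes. It is a simplification: real VRRP also breaks priority ties by the higher primary IP address, and only nodes with the same virtual_router_id and authentication take part in one election.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    priority: int
    alive: bool = True


def elect_master(nodes):
    """Return the node that should hold the virtual IP, or None if all are down.

    Simplified VRRP: highest priority among live nodes wins (no IP tie-break).
    """
    candidates = [n for n in nodes if n.alive]
    if not candidates:
        return None
    return max(candidates, key=lambda n: n.priority)


n131 = Node("nginx131", 100)  # state MASTER, priority 100
n132 = Node("nginx132", 50)   # state BACKUP, priority 50
print(elect_master([n131, n132]).name)
```

Marking `n131.alive = False` (the `init 0` test below) moves the VIP to nginx132; setting it back to `True` moves it back, which is the preemptive behaviour described in the comments.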

使用命令

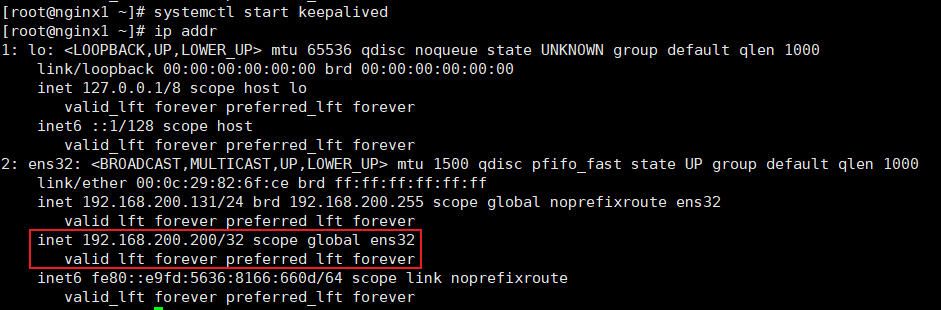

systemctl start keepalived运行keepalived

-

安装成功测试

-

启动keepalived后使用命令

ip addr查询ip信息在ens32下在原来真实的ip下会多出来一个inet虚拟ip:

192.168.200.200

-

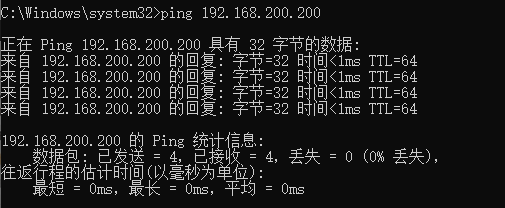

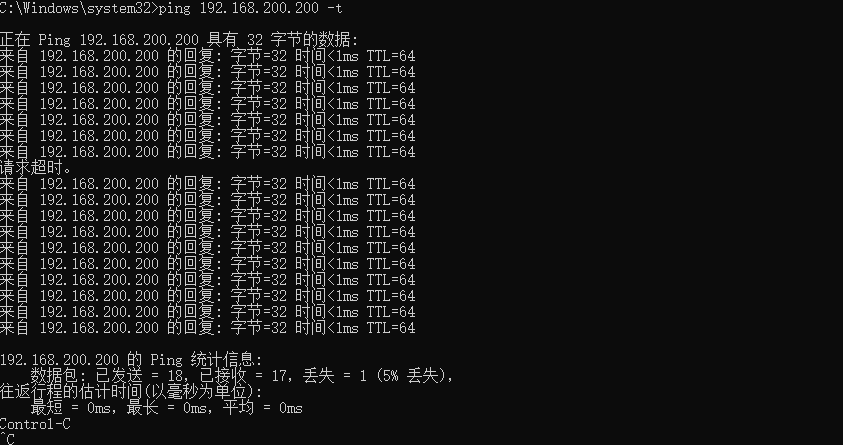

在windows命令窗口使用命令

ping 192.168.200.200 -t在windows系统ping一下这个虚拟IP,观察该ip是否能ping通没有-t只会ping4次,像下图这种情况

-

在linux系统下使用命令

init 0关掉master机器模拟nginx服务器宕机,在windows命令窗口观察网络通信情况windows通信切换时丢包一次,然后再次ping通,在备用机132上使用命令

ip addr能够观察到虚拟ip漂移到132机器上

安装Tomcat

tomcat的运行需要jdk

安装步骤

-

将tomcat的安装包文件拷贝到linux系统下的

/opt/tomcat目录下 -

使用命令

tar zxvf apache-tomcat-9.0.62.tar.gz -C /usr/local/将压缩包解压到/usr/local目录 -

tomcat的目录结构

- bin --启动命令目录

- conf --配置文件目录 *重点

- lib --库文件目录

- logs --日志文件目录 *重点

- temp --临时缓存文件

- webapps --web应用家目录 *重点

- work --工作缓存目录

-

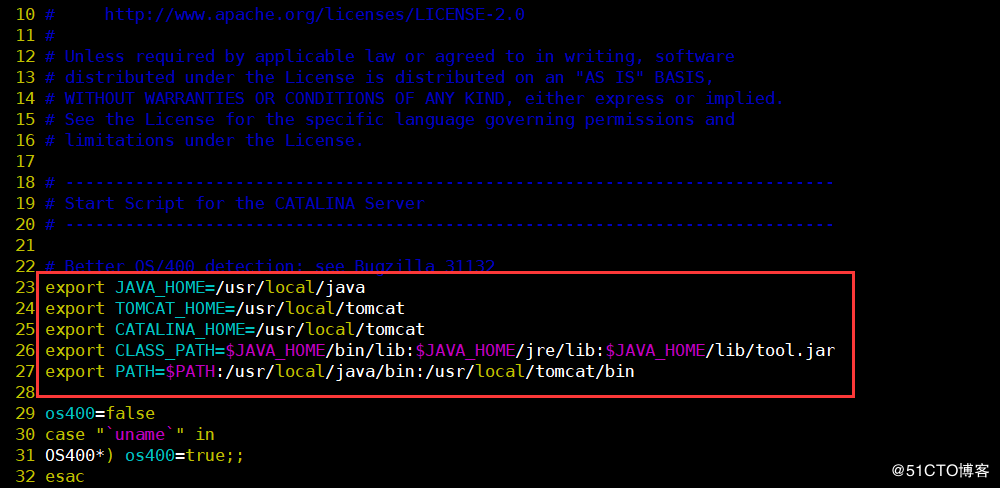

修改tomcat环境变量

解压后需要再tomcat中配置jdk的目录,修改tomcat环境变量有三种方法

- **第一种:**定义在全局里;如果装有多个JDK的话,定义全局会冲突,不建议

[root@Tomcat ~]# vim /etc/profile-

**第二种:**写用户家目录下的环境变量文件.bash_profile

-

**第三种:**是定义在单个tomcat的启动和关闭程序里,建议使用这种

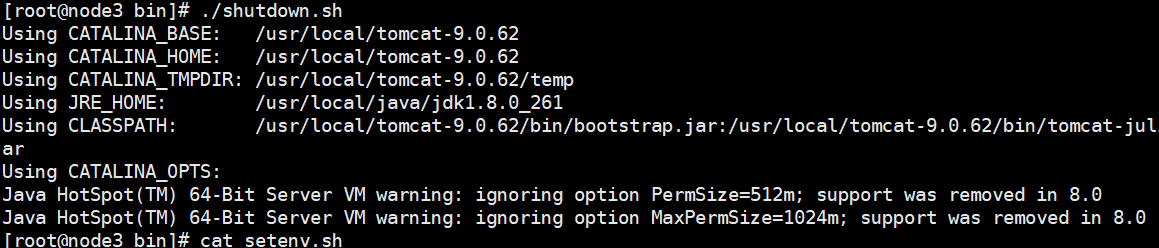

[root@Tomcat ~]# vim /usr/local/tomcat-9.0.62/bin/startup.sh --tomcat的启动程序 [root@Tomcat ~]# vim /usr/local/tomcat-9.0.62/bin/shutdown.sh --tomcat的关闭程序把startup.sh和shutdown.sh这两个脚本里的最前面加上下面一段:

export JAVA_HOME=/usr/local/java/jdk1.8.0_261 export TOMCAT_HOME=/usr/local/tomcat-9.0.62 export CATALINA_HOME=/usr/local/tomcat-9.0.62 export CLASS_PATH=$JAVA_HOME/bin/lib:$JAVA_HOME/jre/lib:$JAVA_HOME/lib/tool.jar export PATH=$PATH:/usr/local/java/jdk1.8.0_261/bin:/usr/local/tomcat-9.0.62/bin【配置详情】

classpath是指定你在程序中所使用的类(.class)文件所在的位置

path是系统用来指定可执行文件的完整路径

-

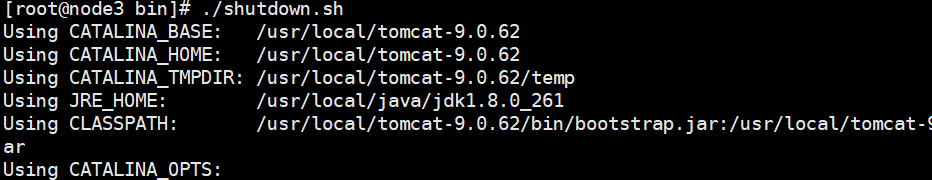

使用命令

/usr/local/tomcat/bin/startup.sh启动tomcat -

使用命令

/usr/local/tomcat/bin/shutdown.sh可以关闭tomcat -

使用命令

vim /usr/local/tomcat/conf/server.xml更改配置文件的port=80可以将tomcat的端口由8080改为8069 <Connector port="80" protocol="HTTP/1.1" ----把8080改成80的话,重启后就监听80端口 70 connectionTimeout="20000" 71 redirectPort="8443" /> -

注意这种方式安装的tomcat存在无法关闭的现象

-

提示信息

-

目前的解决方式是在bin目录下创建setenv.sh文件,写入以下配置【来自于stackOverflow】

目前测试能关闭,能起来;使用shutdown.sh进行关闭,网上有执行流程的帖子,虽然使用shutdown.sh关闭,但是实际还是用catalina.sh关闭的帖子,catalina.sh中提示缺少的参数JAVA_OPTS要写在setenv.sh文件中

export JAVA_OPTS="${JAVA_OPTS} -Xms1200m -Xmx1200m -Xss1024K -XX:PermSize=512m -XX:MaxPermSize=1024m" -

关闭效果

-

安装成功测试

-

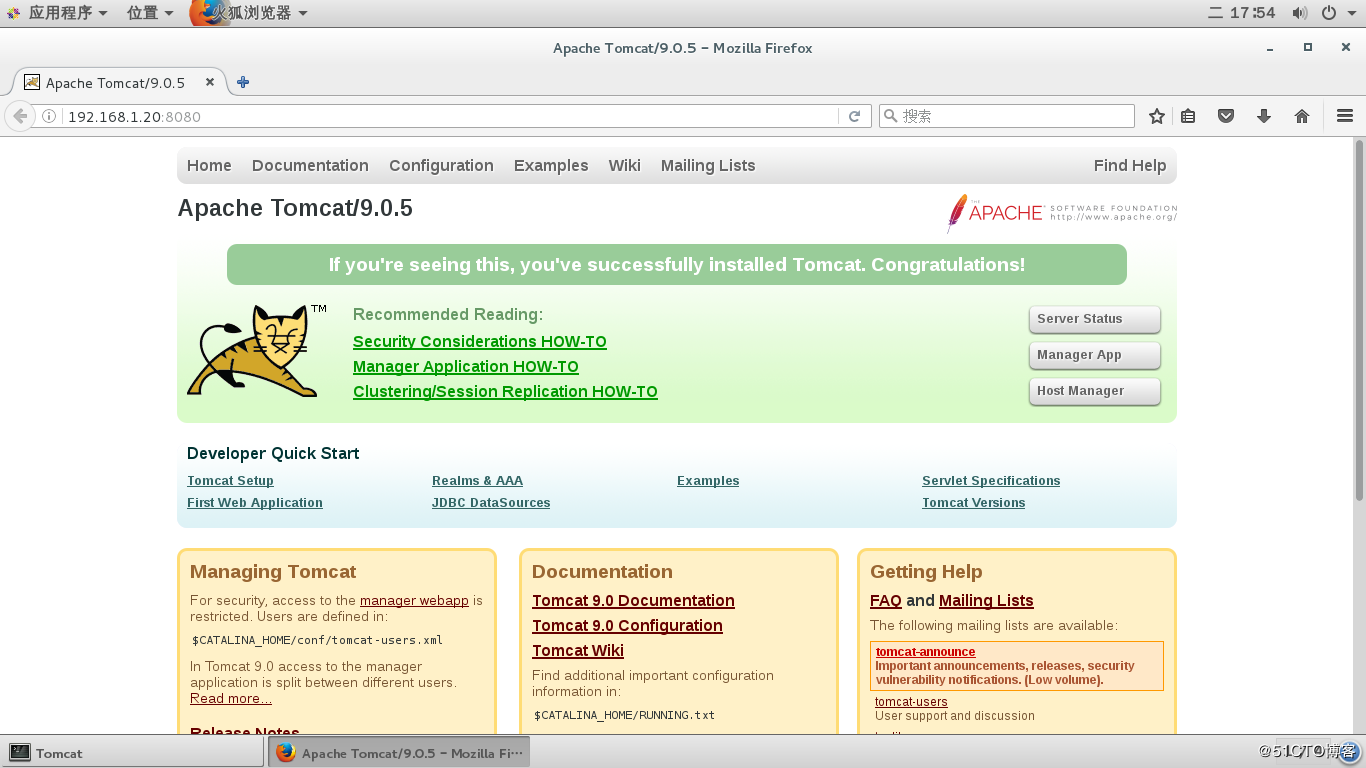

浏览器输入URL``访问机器上的tomcat,出现如下界面即安装成功

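Installing Gzip

Compresses and decompresses files with the .gz extension.

- Run `yum install gzip` to install gzip.

Installing Unzip

Extracts files with the .zip extension.

- Run `yum install -y unzip` to install unzip.

Installing Tree

Shows a directory structure as a tree.

- Run `yum install -y tree` to install tree.

Installing Strace

Traces what a process is doing (the system calls it makes).

- Run `yum install -y strace` to install strace.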

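Installing Redis

Redis is more popular than memcache, powerful and fast: an excellent caching middleware.

Nginx can connect to Redis through the third-party module redis2-nginx-module. The "2" in redis2 refers to the Redis 2.0 protocol, not to version 2.0: redis2-nginx-module is an nginx upstream module that speaks the Redis 2.0 protocol, letting nginx access a remote Redis server directly and without blocking. It supports both TCP and Unix domain sockets and can use a powerful Redis connection pool.

- Run `yum install epel-release` to add the EPEL repository (yum repositories were not covered in the course; to study later).
- Run `yum install -y redis` to install Redis. The drawback of installing with yum is that the version may be old, although installation is easy; the Redis protocol has hardly changed, so it does not affect the result.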
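The "Redis 2.0 protocol" mentioned above is Redis's unified request format: every command is an array of bulk strings, written as `*<count>` followed by `$<length>` and the data for each argument, each line ending in CRLF. This is the wire format redis2-nginx-module produces for commands such as those given to its `redis2_query` directive. A minimal encoder, as a sketch:

```python
def encode_command(*args) -> bytes:
    """Encode a Redis command as an array of bulk strings (unified request protocol).

    Layout: *<number of args> CRLF, then for each arg: $<byte length> CRLF <bytes> CRLF.
    """
    out = [b"*%d\r\n" % len(args)]
    for arg in args:
        data = arg if isinstance(arg, bytes) else str(arg).encode()
        out.append(b"$%d\r\n%s\r\n" % (len(data), data))
    return b"".join(out)


print(encode_command("SET", "foo", "bar"))
```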

Installing Elasticsearch

Installation steps

- From https://www.elastic.co/cn/downloads/past-releases/elasticsearch-7-8-0 choose LINUX_X86_64 to download the Linux package elasticsearch-7.8.0-linux-x86_64.tar.gz, and upload it to Linux.
- In the upload directory run `tar -zxvf elasticsearch-7.8.0-linux-x86_64.tar.gz` to extract it. The extracted name is long; run `mv elasticsearch-7.8.0 es-7.8.0` to rename it to es-7.8.0.
- As root, create a new user for running ES. For security reasons ES refuses to run as root, so create another user:
  - `useradd es` creates the Linux user es
  - `passwd es` sets the password of user es at the prompt
  - `userdel -r es` deletes user es if it was created by mistake
  - `chown -R es:es /opt/elasticsearch/es-7.8.0` makes es the owner of the extracted files

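Edit config/elasticsearch.yml under the ES root directory and add:

    # Add the following
    # Cluster name; the default is also elasticsearch
    cluster.name: elasticsearch
    # Node name
    node.name: node-1
    # Not explained in the course; configure as shown
    network.host: 0.0.0.0
    # HTTP port
    http.port: 9200
    # Make this node the initial master; the name in brackets must match this node's name
    cluster.initial_master_nodes: ["node-1"]

Modify the Linux system configuration

ES creates a lot of data and files, and the system defaults can cause problems when it creates files, so adjust the system settings:

- Run `vim /etc/security/limits.conf` and append the per-process open-file limits at the end:

      # Append at the end of the file
      # Maximum number of files a process can open
      es soft nofile 65536
      es hard nofile 65536

- Run `vim /etc/security/limits.d/20-nproc.conf` and append:

      # Append at the end of the file
      # Maximum number of files a process can open
      es soft nofile 65536
      es hard nofile 65536
      # OS-level limit on the number of processes each user can create
      * hard nproc 4096
      # Note: * means all Linux users

- Run `vim /etc/sysctl.conf` and append the maximum number of virtual memory areas a process may have:

      # Maximum number of VMAs (virtual memory areas) a process can have; the default is 65530
      vm.max_map_count=655360

- Run `sysctl -p` to reload the system configuration.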
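After `sysctl -p`, the live value can be read back from /proc. Elasticsearch's production-mode bootstrap checks (enforced when it binds to a non-loopback address, as `network.host: 0.0.0.0` does) refuse to start if `vm.max_map_count` is below 262144, so 655360 passes. A small check, as a sketch:

```python
from pathlib import Path

# Minimum required by the Elasticsearch bootstrap check
ES_MIN_MAP_COUNT = 262144


def read_max_map_count(path: str = "/proc/sys/vm/max_map_count") -> int:
    """Read the current vm.max_map_count value."""
    return int(Path(path).read_text().strip())


def check_max_map_count(path: str = "/proc/sys/vm/max_map_count") -> bool:
    """True if vm.max_map_count is high enough for Elasticsearch."""
    return read_max_map_count(path) >= ES_MIN_MAP_COUNT
```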

Starting ES

- Running `bin/elasticsearch` directly in the ES root directory fails, because ES does not allow running as root. Switch to the es user (or your own user) with `su es`, then run `bin/elasticsearch`. If you skipped `chown -R es:es /opt/elasticsearch/es-7.8.0`, creating files at startup fails.

Verifying the installation

A normal start looks the same as on Windows: as long as the console shows a log line such as

    [2024-04-13T17:59:19,809][INFO ][o.e.n.Node] [node-1] node name [node-1], node ID [7aDVXWxuRMirgLOTBBgUPw], cluster name [my-application]

without errors, ES has started. A more precise test is to send a request to http://192.168.200.136:9200/_cluster/health to query the node status; it responds with the following (for this request the firewall must be off or the port opened):

    {
      "cluster_name": "elasticsearch",
      "status": "green",
      "timed_out": false,
      "number_of_nodes": 1,
      "number_of_data_nodes": 1,
      "active_primary_shards": 0,
      "active_shards": 0,
      "relocating_shards": 0,
      "initializing_shards": 0,
      "unassigned_shards": 0,
      "delayed_unassigned_shards": 0,
      "number_of_pending_tasks": 0,
      "number_of_in_flight_fetch": 0,
      "task_max_waiting_in_queue_millis": 0,
      "active_shards_percent_as_number": 100.0
    }
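The health check can also be scripted. In the response, `status` is green when all shards are allocated, yellow when all primaries are allocated but some replicas are not, and red when some primary shards are unallocated. The sketch below uses only the standard library; the default base URL is the node from these notes, and `fetch_health` needs the port to be reachable.

```python
import json
from urllib.request import urlopen


def parse_health(body: str) -> dict:
    """Pick the key fields out of a _cluster/health JSON response."""
    h = json.loads(body)
    return {"status": h["status"], "nodes": h["number_of_nodes"],
            "unassigned": h["unassigned_shards"]}


def fetch_health(base_url: str = "http://192.168.200.136:9200", timeout: float = 5.0) -> dict:
    """GET <base_url>/_cluster/health (firewall off or port 9200 open)."""
    with urlopen(f"{base_url}/_cluster/health", timeout=timeout) as resp:
        return parse_health(resp.read().decode("utf-8"))
```

Usage: `fetch_health()["status"] == "green"` is the same check as reading the JSON by eye.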

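Cluster deployment

- From https://www.elastic.co/cn/downloads/past-releases/elasticsearch-7-8-0 choose LINUX_X86_64 to download the Linux package elasticsearch-7.8.0-linux-x86_64.tar.gz, and upload it to Linux.
- In the upload directory run `tar -zxvf elasticsearch-7.8.0-linux-x86_64.tar.gz` to extract it. The extracted name is long; run `mv elasticsearch-7.8.0 es-7.8.0-cluster` to rename it to es-7.8.0-cluster.
- Repeat the extraction on the VMs with IPs 131 and 135. Files could be distributed to the other machines with a command (file distribution is covered in Atguigu's Hadoop course; to be added later); here they are extracted manually.
- On every machine create the user that runs ES, give it permissions on the corresponding directories, and set custom hostnames for the three hosts.

  How to set the hostname is described under hostnames in the system operations part of this document's command reference. Machines 136, 131 and 135 are named elasticsearch1, nginx1 and elasticsearch3.

- Edit the configuration file on machine 136:

  /opt/elasticsearch/es-7.8.0-cluster/config/elasticsearch.yml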

Configuration of 136

The default file contains only comments; just append the following at the end:

    # Add the following
    # Cluster name
    cluster.name: cluster-es
    # Node name; must be unique per node
    node.name: node-1
    # IP address/hostname; must be unique per node
    network.host: elasticsearch1
    # Whether the node is master-eligible
    node.master: true
    node.data: true
    http.port: 9200
    # Required by the head plugin
    http.cors.allow-origin: "*"
    http.cors.enabled: true
    http.max_content_length: 200mb
    # New in ES 7.x: used to elect the master when bootstrapping a new cluster
    cluster.initial_master_nodes: ["node-1"]
    # New in ES 7.x: node discovery; 9300 is the default port for communication between ES nodes
    discovery.seed_hosts: ["elasticsearch1:9300","nginx1:9300","elasticsearch3:9300"]
    gateway.recover_after_nodes: 2
    network.tcp.keep_alive: true
    network.tcp.no_delay: true
    transport.tcp.compress: true
    # Number of concurrent shard rebalances in the cluster; default 2
    cluster.routing.allocation.cluster_concurrent_rebalance: 16
    # Concurrent recoveries per node when adding/removing nodes or rebalancing; default 2
    cluster.routing.allocation.node_concurrent_recoveries: 16
    # Concurrent primary recoveries per node during initial recovery; default 4
    cluster.routing.allocation.node_initial_primaries_recoveries: 16

Configuration of 131

Compared with 136, only the node name and the hostname change.

    # Add the following
    # Cluster name
    cluster.name: cluster-es
    # Node name; must be unique per node
    node.name: node-2
    # IP address/hostname; must be unique per node
    network.host: nginx1
    # Whether the node is master-eligible
    node.master: true
    node.data: true
    http.port: 9200
    # Required by the head plugin
    http.cors.allow-origin: "*"
    http.cors.enabled: true
    http.max_content_length: 200mb
    # New in ES 7.x: used to elect the master when bootstrapping a new cluster
    cluster.initial_master_nodes: ["node-1"]
    # New in ES 7.x: node discovery; 9300 is the default port for communication between ES nodes
    discovery.seed_hosts: ["elasticsearch1:9300","nginx1:9300","elasticsearch3:9300"]
    gateway.recover_after_nodes: 2
    network.tcp.keep_alive: true
    network.tcp.no_delay: true
    transport.tcp.compress: true
    # Number of concurrent shard rebalances in the cluster; default 2
    cluster.routing.allocation.cluster_concurrent_rebalance: 16
    # Concurrent recoveries per node when adding/removing nodes or rebalancing; default 2
    cluster.routing.allocation.node_concurrent_recoveries: 16
    # Concurrent primary recoveries per node during initial recovery; default 4
    cluster.routing.allocation.node_initial_primaries_recoveries: 16

Configuration of 135

Again, compared with 136 only the node name and the hostname change.

    # Add the following
    # Cluster name
    cluster.name: cluster-es
    # Node name; must be unique per node
    node.name: node-3
    # IP address/hostname; must be unique per node
    network.host: elasticsearch3
    # Whether the node is master-eligible
    node.master: true
    node.data: true
    http.port: 9200
    # Required by the head plugin
    http.cors.allow-origin: "*"
    http.cors.enabled: true
    http.max_content_length: 200mb
    # New in ES 7.x: used to elect the master when bootstrapping a new cluster
    cluster.initial_master_nodes: ["node-1"]
    # New in ES 7.x: node discovery; 9300 is the default port for communication between ES nodes
    discovery.seed_hosts: ["elasticsearch1:9300","nginx1:9300","elasticsearch3:9300"]
    gateway.recover_after_nodes: 2
    network.tcp.keep_alive: true
    network.tcp.no_delay: true
    transport.tcp.compress: true
    # Number of concurrent shard rebalances in the cluster; default 2
    cluster.routing.allocation.cluster_concurrent_rebalance: 16
    # Concurrent recoveries per node when adding/removing nodes or rebalancing; default 2
    cluster.routing.allocation.node_concurrent_recoveries: 16
    # Concurrent primary recoveries per node during initial recovery; default 4
    cluster.routing.allocation.node_initial_primaries_recoveries: 16

On every host, modify the system configuration

ES creates a lot of data and files, and the system defaults can cause problems when it creates files, so on each host apply the same system changes as in the single-node installation:

- /etc/security/limits.conf: append `es soft nofile 65536` and `es hard nofile 65536` (maximum number of files a process can open)
- /etc/security/limits.d/20-nproc.conf: append the same two nofile lines plus `* hard nproc 4096` (OS-level limit on the number of processes per user; `*` means all Linux users)
- /etc/sysctl.conf: append `vm.max_map_count=655360` (maximum number of VMAs a process can have; the default is 65530)
- Run `sysctl -p` to reload the system configuration.

-

-

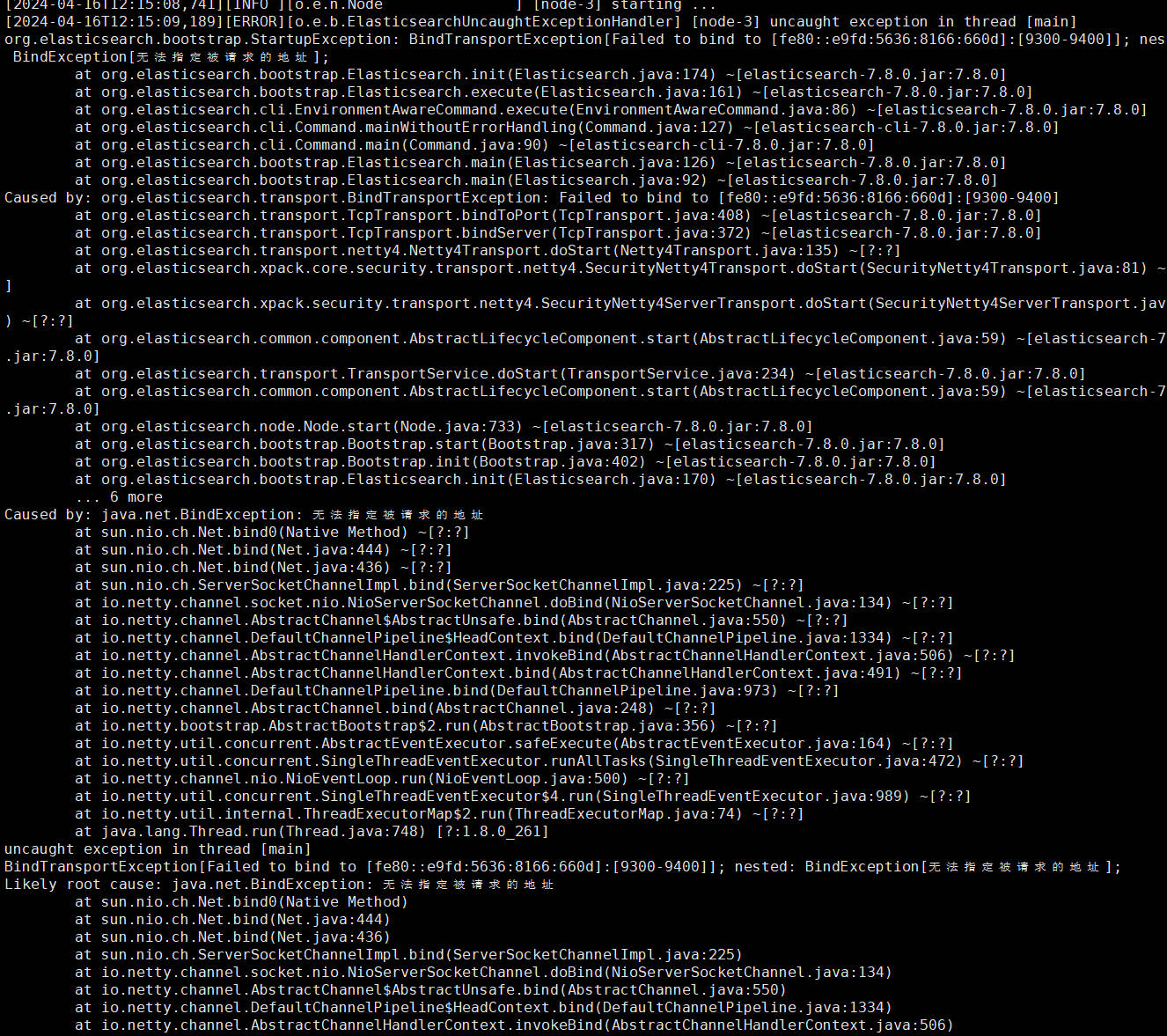

修改每个节点所在主机的

/etc/hosts文件这个必须把集群节点所在的所有主机名和ip的映射关系在每一台主机上都要全部写上,如果不写当前主机和对应的ip映射关系,ES节点中

network.host写当前主机的主机名非master的ES节点会直接启动报错,提示以下信息【以前的其他软件集群部署都是在所有节点主机的/etc/hosts文件中写上包括本机在内的完整的节点映射信息】

-

/etc/hosts example: the hosts file on every node's host must contain the complete host-name/IP mappings of all cluster nodes.

If the current host is missing, a non-master ES node whose network.host uses the current host name fails at startup. Setting network.host to 0.0.0.0 lets it start, but this is not recommended.

Also, never map the host name to 127.0.0.1 in /etc/hosts. That makes every ES server, including the master node, unreachable, and even the HTTP port cannot be accessed.
[es@nginx1 es-7.8.0-cluster]$ cat /etc/hosts
127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
192.168.200.132 node1
192.168.200.133 node2
192.168.200.134 node3
192.168.200.136 elasticsearch1
192.168.200.135 elasticsearch3
192.168.200.131 nginx1

-

-

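On each machine, switch to the user that runs ES and start the three nodes in order, then send a request with Postman:
GET http://192.168.200.136:9200/_cat/nodes lists all nodes of the cluster that the node belongs to
-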

Response when only node 136 is running:

172.17.0.1 28 96 2 0.06 0.07 0.06 dilmrt * node-1 -

Response when both 136 and 131 are running:

192.168.200.136 55 44 0 0.21 0.12 0.08 dilmrt * node-1
192.168.200.131 21 94 4 0.09 0.08 0.07 dilmrt - node-2
-

Response when 136, 131 and 135 are all running:

192.168.200.136 55 44 0 0.21 0.12 0.08 dilmrt * node-1
192.168.200.131 21 94 4 0.09 0.08 0.07 dilmrt - node-2
192.168.200.135 21 96 2 0.04 0.07 0.06 dilmrt - node-3
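When checking the cluster from a shell instead of Postman, the master can be read from the _cat/nodes output, where the ninth column is * for the elected master. This is a sketch built on assumptions:

- It assumes the default ES 7.x column order: ip, heap.percent, ram.percent, cpu, load_1m, load_5m, load_15m, node.role, master, name.
- It assumes no ?v header line.
- es_master and es_node_count are hypothetical helper names.
- The sample is the three-node response above.

```shell
#!/usr/bin/env bash
# Print the name (10th column) of the node whose master column (9th) is "*".
es_master()     { awk '$9 == "*" { print $10 }'; }
# Count node lines (each has at least 10 columns).
es_node_count() { awk 'NF >= 10 { n++ } END { print n + 0 }'; }

# Possible real use:
#   curl -s http://192.168.200.136:9200/_cat/nodes | es_master
sample='192.168.200.136 55 44 0 0.21 0.12 0.08 dilmrt * node-1
192.168.200.131 21 94 4 0.09 0.08 0.07 dilmrt - node-2
192.168.200.135 21 96 2 0.04 0.07 0.06 dilmrt - node-3'
```

With ?v the header line is counted by es_node_count, so leave it off (or skip the first line) when counting.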

-

Installing ES with Docker

-

Run sudo docker pull elasticsearch:7.4.2 to pull the ES Docker image
-

Create the Elasticsearch instance

-

Run mkdir -p /malldata/elasticsearch/config to create the mount directory for the ES configuration
-

Run mkdir -p /malldata/elasticsearch/data to create the mount directory for ES data
-

Run mkdir -p /malldata/elasticsearch/plugins to create the mount directory for ES plugins, where the IK analyzer or NLP-related plugins can be placed
-

Run echo "http.host: 0.0.0.0" >> /malldata/elasticsearch/config/elasticsearch.yml to write the setting http.host: 0.0.0.0 into elasticsearch.yml. This setting makes ES accessible from any remote machine
-

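Run chmod -R 777 /malldata/elasticsearch/ so the container can read, write and execute in the mount directories. 777 grants read, write and execute permission to every user and group; it is used here only for tutorial convenience. Without this permission the container fails to run
-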

Create and run the Docker container with the following command

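- Creates an ES container named elasticsearch from the image elasticsearch:7.4.2, mapping the HTTP port 9200 and the inter-node transport port 9300
\ continues the command on the next line
-e "discovery.type=single-node" runs the instance as a single node
-e ES_JAVA_OPTS="-Xms64m -Xmx512m" sets the ES JVM heap. This is essential: the default ES heap is 1G, while the whole VM has only 1G of memory, so without lowering it ES freezes the VM as soon as it starts. In production, a single ES node typically uses around 32G of memory
-v /malldata/elasticsearch/config/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml mounts the container's elasticsearch.yml from the custom mount directory
-v /malldata/elasticsearch/data:/usr/share/elasticsearch/data mounts the ES data directory onto the custom data directory
-v /malldata/elasticsearch/plugins:/usr/share/elasticsearch/plugins mounts the ES plugins directory onto the custom plugins directory, so plugins can be managed directly from outside the container
-d elasticsearch:7.4.2 specifies the image to run and starts the container in the background
- Note: the startup command in the original course material does not add --privileged=true after the volumes.
  - The container starts, but it cannot be reached from a browser; it only became reachable after adding --privileged=true.
  - The underlying problem is Docker failing to access the mounted host directory ("cannot open directory .: Permission denied"), and adding --privileged=true fixes it.
  - My guess is that without it the container cannot read the mounted configuration file containing http.host: 0.0.0.0, so it cannot be reached.
  - --privileged=true is a container-wide option, so a single occurrence anywhere before the image name has the same effect.
  - [The Docker ES setup differs from Windows and Linux: on those two, browsers and other tools can access ES even without http.host: 0.0.0.0, and for a single node the Docker setup is clearly simpler.]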

docker run --name elasticsearch -p 9200:9200 -p 9300:9300 \
  -e "discovery.type=single-node" \
  -e ES_JAVA_OPTS="-Xms64m -Xmx512m" \
  -v /malldata/elasticsearch/config/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml --privileged=true \
  -v /malldata/elasticsearch/data:/usr/share/elasticsearch/data --privileged=true \
  -v /malldata/elasticsearch/plugins:/usr/share/elasticsearch/plugins --privileged=true \
  -d elasticsearch:7.4.2
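The directory preparation steps above can be collected into one function. Below is a sketch with a hypothetical name, prepare_es_dirs. The base path is a parameter, so it can be tried on a scratch directory first. Unlike the echo ... >> step, it writes elasticsearch.yml with >. Running it twice therefore does not duplicate the setting, but it also replaces any existing content of that file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create the config/data/plugins mount directories for the ES container.
# $1 = base directory (defaults to /malldata/elasticsearch)
prepare_es_dirs() {
  local base=${1:-/malldata/elasticsearch}
  mkdir -p "$base/config" "$base/data" "$base/plugins"
  # Overwrite rather than append, so re-running leaves exactly one http.host line.
  echo "http.host: 0.0.0.0" > "$base/config/elasticsearch.yml"
  # 777 mirrors the tutorial; convenient for a test VM but far too open for production.
  chmod -R 777 "$base"
}

# Possible real use (as root), before the docker run command:
#   prepare_es_dirs
```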

-

Open http://192.168.56.10:9200 in a browser; output like the following means the installation succeeded:
{
  "name": "ecb880026b14",
  "cluster_name": "elasticsearch",
  "cluster_uuid": "zxPeJYB9SraGL0p_R4dT0g",
  "version": {
    "number": "7.4.2",
    "build_flavor": "default",
    "build_type": "docker",
    "build_hash": "2f90bbf7b93631e52bafb59b3b049cb44ec25e96",
    "build_date": "2019-10-28T20:40:44.881551Z",
    "build_snapshot": false,
    "lucene_version": "8.2.0",
    "minimum_wire_compatibility_version": "6.8.0",
    "minimum_index_compatibility_version": "6.0.0-beta1"
  },
  "tagline": "You Know, for Search"
}

-

-

Installing the IK analyzer in the ES container

-

Run docker exec -it <container-id> /bin/bash to open a shell in the container. pwd shows the current path, which defaults to /usr/share/elasticsearch, the Elasticsearch home directory
-

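Run cd plugins to enter the container's plugins directory. Then run wget https://github.com/medcl/elasticsearch-analysis-ik/releases/download/v7.4.2/elasticsearch-analysis-ik-7.4.2.zip to download the IK analyzer archive. Because plugins is mounted to the malldata directory, this can also be done directly in the malldata directory
- Note: the base container image does not include wget, so wget cannot be used inside the container. It is better to download and handle the plugin in the mounted directory on the host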

- CentOS installed through VirtualBox does not ship wget or unzip either; install them first with yum install wget and yum install unzip

-

Run unzip elasticsearch-analysis-ik-7.4.2.zip -d ik to extract the IK analyzer archive into the ik directory
-

Run rm -rf *.zip to delete the IK analyzer archive
-

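In the container, go to the Elasticsearch bin directory (/usr/share/elasticsearch/bin) and run elasticsearch-plugin list. It lists the installed plugins; check that ik is among them
-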

Exit the container and run docker restart elasticsearch to restart the container instance

-

Installing Kibana

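- Kibana is used to search and visualize data, much as Navicat or SQLyog provide a GUI for database data. Without such a tool, data has to be queried and displayed from the command line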

Docker installation

-

Installing Kibana 7.4.2 with Docker

- Run sudo docker pull kibana:7.4.2 to pull the Kibana image
- Run the Kibana container with:
sudo docker run --name kibana -e ELASTICSEARCH_HOSTS=http://192.168.56.10:9200 -p 5601:5601 \
  -d kibana:7.4.2
ELASTICSEARCH_HOSTS=http://192.168.56.10:9200 specifies the address of the ES server that Kibana connects to, and -p 5601:5601 publishes Kibana's HTTP port 5601


Command reference

man <command> shows the manual page of a Linux command

yum operations

Switching the Linux yum repository

- Run mv /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.backup to back up the original repository file
- Run curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.163.com/.help/CentOS7-Base-163.repo to switch to the new repository
- Run yum makecache to build the metadata cache

System operations

-

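uname -a prints all system information: kernel name, host name, kernel release and version, and hardware architecture -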

uname -r prints only the Linux kernel release -

reboot restarts the Linux system -

top shows the system's CPU and memory usage -

free -h shows memory and swap usage in human-readable units (it does not report CPU usage) -

cat /etc/redhat-release shows the distribution release -

df -h shows disk space usage on Linux
[root@localhost ~]# df -h
Filesystem               Size  Used Avail Use% Mounted on
/dev/mapper/centos-root   17G  2.1G   15G  13% /
devtmpfs                 898M     0  898M   0% /dev
tmpfs                    910M     0  910M   0% /dev/shm
tmpfs                    910M  9.5M  901M   2% /run
tmpfs                    910M     0  910M   0% /sys/fs/cgroup
/dev/sda1               1014M  146M  869M  15% /boot
tmpfs                    182M     0  182M   0% /run/user/0
-

su root switches the current user to root -

whoami prints the current user name -

free -m shows the system's memory usage in MiB; available is the memory still available to new programs

[vagrant@localhost network-scripts]$ free -m
              total        used        free      shared  buff/cache   available
Mem:            990         423          91           6         475         381
Swap:          2047         122        1925
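For scripts, the same figures can be pulled out of free -m and df -h with awk. Below is a sketch with hypothetical helper names. The column positions assume the layouts shown above:

- free: the 7th field of the Mem: row is available.
- df: the 5th and 6th fields are Use% and the mount point.

df -hP keeps each filesystem on one line, which protects the column positions when device names are long.

```shell
#!/usr/bin/env bash
# Print the "available" column (7th field) of the Mem: row of `free -m`.
mem_available_mb() { awk '$1 == "Mem:" { print $7 }'; }
# Print mount points whose Use% is at or above the threshold $1 (default 80), skipping the header.
df_over() { awk -v limit="${1:-80}" 'NR > 1 && $5 + 0 >= limit + 0 { print $6 }'; }

# Possible real use:
#   free -m | mem_available_mb
#   df -hP | df_over 90
```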

Remote access

-

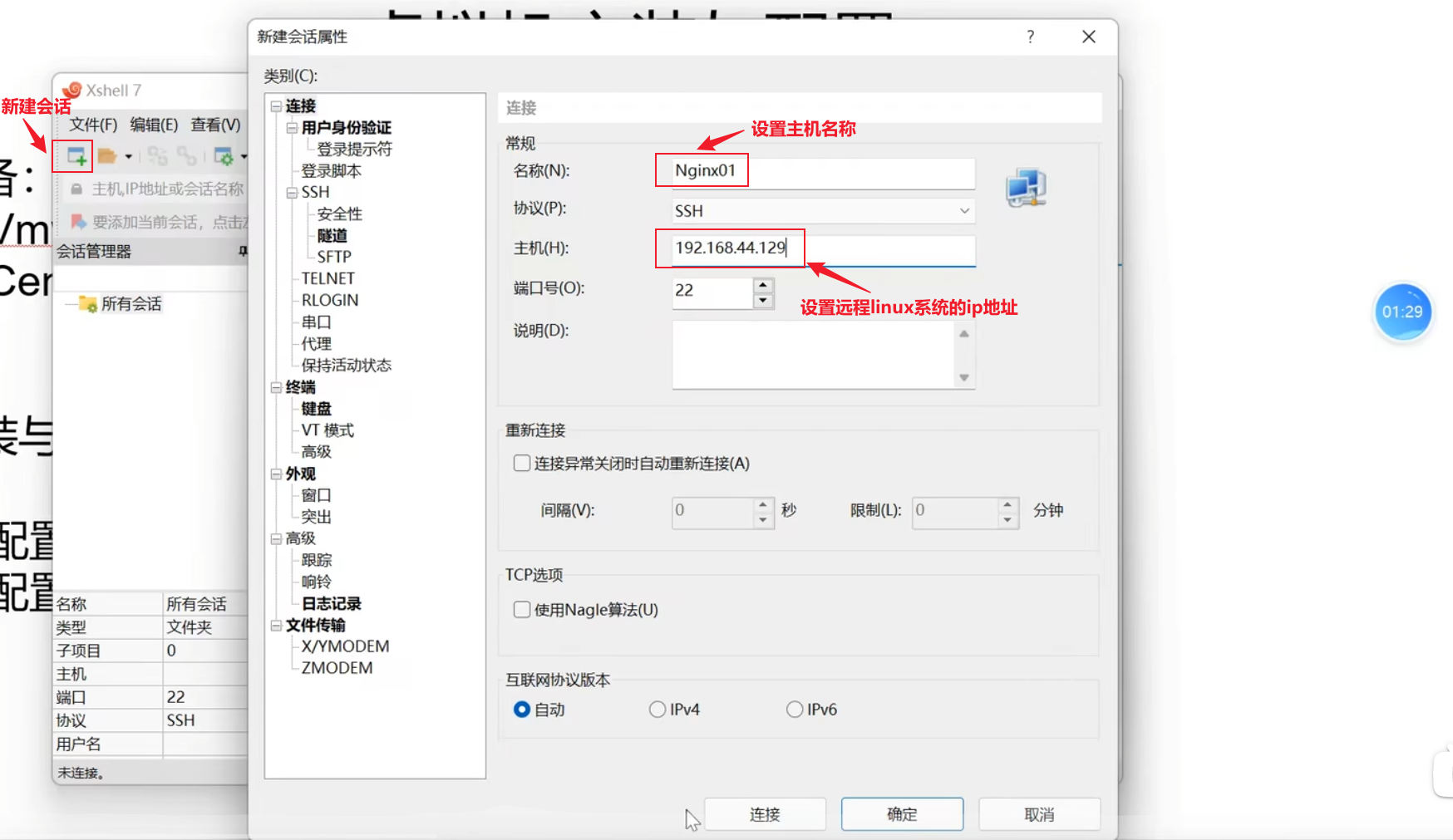

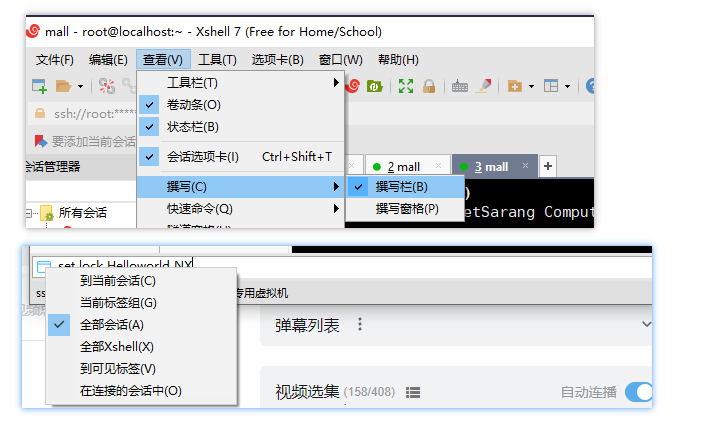

Connecting to Linux remotely with Xshell

With several Linux VMs, multiple clients and frequent config-file edits, the VM's own console window is inconvenient

-

Run ip addr to see the local IP address (the inet entry) -

In Xshell, click New Session and set the session name and the address of the remote Linux host

-

Host name

-

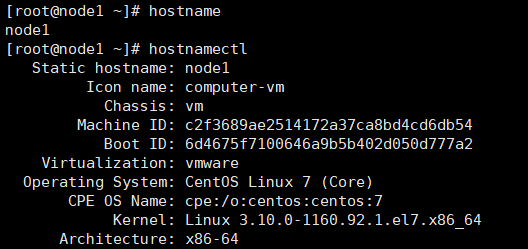

Run hostname or hostnamectl to see the local host name

-

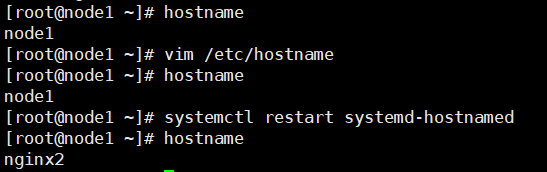

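Run vim /etc/hostname to change the host name, then reboot or run systemctl restart systemd-hostnamed to reload it. Alternatively, hostnamectl set-hostname <new-name> changes it without editing the file by hand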

File operations

Creating files

mkdir /usr/local/zookeeper - creates the zookeeper directory; the path may be relative or absolute

echo "www" >> /usr/local/nginx/1 - appends the content www to the file /usr/local/nginx/1 (the file is created if it does not exist; > would overwrite it instead)
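The difference between >> and > can be seen on a scratch file. This is a minimal sketch; the temporary directory stands in for /usr/local/nginx.

```shell
#!/usr/bin/env bash
demo_dir=$(mktemp -d)
echo "www" >> "$demo_dir/1"                 # creates the file with one line
echo "www" >> "$demo_dir/1"                 # appends a second line
lines_after_append=$(wc -l < "$demo_dir/1") # 2
echo "new" >  "$demo_dir/1"                 # truncates first: only "new" remains
```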

Copying and moving files

-

cp canal.deployer-1.1.4.tar.gz /usr/local/canal/ - copies canal.deployer-1.1.4.tar.gz from the current directory into /usr/local/canal. If a file with the same name already exists in the target directory, its content is overwritten

-

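cp ngx_http_brotli_filter_module.so /usr/local/nginx/modules - copies ngx_http_brotli_filter_module.so from the current directory. The result depends on the target:

- If /usr/local/nginx/modules is an existing directory (even an empty one), the file is copied into it.
- If no such directory exists, the file is copied into /usr/local/nginx and renamed modules.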

-

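mv /opt/jdk/jdk1.8.0_261 /usr/local/java/jdk1.8.0_261 -

moves /opt/jdk/jdk1.8.0_261 into the /usr/local/java directory, which must already exist. By contrast, mv /opt/jdk/jdk1.8.0_261 /usr/local/java behaves in one of two ways:

- If /usr/local/java does not exist yet, the directory is moved into /usr/local and renamed java.
- If /usr/local/java already exists as a directory, jdk1.8.0_261 is moved inside it.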

-

-

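mv ./* /opt/nginx/ngx_brotli-1.0.0rc/deps/brotli moves all files in the current directory into /opt/nginx/ngx_brotli-1.0.0rc/deps/brotli; the originals are not kept. Note that ./* does not match hidden files whose names start with a dot -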

tail -f /usr/local/nginx/logs/access.log shows new lines of the file in the terminal in real time as they are written
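Whether mv and cp rename or move into depends only on whether the target directory already exists. This is a minimal sketch on scratch paths that mirror the jdk and modules examples above; the directory names are illustrative.

```shell
#!/usr/bin/env bash
demo=$(mktemp -d)
mkdir -p "$demo/opt/jdk1.8.0_261" "$demo/local"
touch "$demo/opt/jdk1.8.0_261/release"

# Target does not exist: the directory is moved AND renamed to "java".
mv "$demo/opt/jdk1.8.0_261" "$demo/local/java"

# Target exists: the directory is moved INTO it and keeps its name.
mkdir -p "$demo/opt/jdk1.8.0_261" "$demo/local/java2"
mv "$demo/opt/jdk1.8.0_261" "$demo/local/java2"

# cp follows the same rule.
touch "$demo/module.so"
cp "$demo/module.so" "$demo/modules"        # no such directory: a copy named "modules"
mkdir "$demo/modules_dir"
cp "$demo/module.so" "$demo/modules_dir"    # existing directory: modules_dir/module.so
```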

Renaming files

-

mv apache-zookeeper-3.5.7-bin zookeeper-3.5.7 - renames apache-zookeeper-3.5.7-bin to zookeeper-3.5.7 (names usually keep the version number)

Deleting files

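rm -rf conf/ - deletes the conf directory together with everything in it; to empty conf but keep the directory, use rm -rf conf/*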
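The difference between deleting conf/ and emptying it can be checked on scratch directories. This is a minimal sketch; note that the glob conf/* skips hidden files.

```shell
#!/usr/bin/env bash
rm_demo=$(mktemp -d)
mkdir -p "$rm_demo/a/conf" "$rm_demo/b/conf"
touch "$rm_demo/a/conf/zoo.cfg" "$rm_demo/b/conf/zoo.cfg"

rm -rf "$rm_demo/a/conf/"     # removes the conf directory itself
rm -rf "$rm_demo/b/conf/"*    # removes only the (non-hidden) entries inside conf
```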

Compressing files

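gzip is a powerful file compression tool that can markedly reduce file size. It is commonly used for backups, data transfer and distributing software packages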

Gzip

-

Options

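Option | Long form | Description
-c | --stdout | write compressed data to standard output and keep the original file
-d | --decompress | decompress files
-f | --force | force compression and overwrite an existing compressed file
-r | --recursive | recursively compress a directory and its contents
-t | --test | test whether a compressed file is damaged
-v | --verbose | show the name and compression ratio of each file
-# | --fast (-1), --best (-9) | # is a digit from 1 to 9 setting the compression level, default 6
-h | --help | show help
-k | --keep | keep the original file after compression
-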

Common examples

-

gzip example.txt compresses example.txt in the current directory into example.txt.gz; the original example.txt is deleted.

-

gzip -d example.txt.gz decompresses example.txt.gz into example.txt -

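gzip -r my_directory recursively compresses every file in the my_directory directory under the current directory. Each file becomes its own .gz; the directory itself is not archived -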

gzip -cd example.txt.gz prints the decompressed content to the terminal without decompressing the file on disk

-

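gzip -t example.txt.gz tests whether the compressed file is intact or damaged. On success it prints nothing and returns exit status 0. Add -v (gzip -tv example.txt.gz) to see "example.txt.gz: OK". An error message means the file may be damaged.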

-

gzip -f example.txt forces compression and overwrites an existing compressed file with the same name, which gzip does not overwrite by default

-

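gzip -9 example.txt adjusts the compression level to trade compression ratio against speed. The default level is 6 and can be set from 1 to 9. Lower levels (e.g. 1) compress faster with a lower ratio; higher levels (e.g. 9) give a better ratio but are slower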

-

ls -l | gzip > file_list.gz compresses the file listing of the current directory into a file named file_list.gz

-

gzip file1.txt file2.txt file3.txt compresses the three files file1.txt, file2.txt and file3.txt at once

-

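gzip -k example.txt compresses example.txt and keeps the original file. -k requires gzip 1.6 or later; on older versions use gzip -c example.txt > example.txt.gz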

-
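Below is a compress, verify and read-back cycle on a scratch file. The sketch uses gzip -c with redirection instead of -k, so it also works on gzip versions older than 1.6. The file names are illustrative.

```shell
#!/usr/bin/env bash
gz_dir=$(mktemp -d)
printf 'line %s\n' 1 2 3 > "$gz_dir/example.txt"

gzip -c "$gz_dir/example.txt" > "$gz_dir/example.txt.gz"    # compress, original kept
gzip -t "$gz_dir/example.txt.gz" && echo "archive OK"       # -t is silent; check the exit status
gzip -cd "$gz_dir/example.txt.gz" > "$gz_dir/roundtrip.txt" # decompress to stdout, .gz stays
```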

Decompressing files

Gunzip

-

gunzip -r ./ decompresses all gzip-compressed files in the current directory and its subdirectories; files in other formats are skipped

Unzip

- CentOS 7 installed with VirtualBox needs yum install unzip to install unzip

unzip elasticsearch-analysis-ik-7.4.2.zip -d ik - extracts elasticsearch-analysis-ik-7.4.2.zip into the directory ik

unzip elasticsearch-analysis-ik-7.4.2.zip - extracts elasticsearch-analysis-ik-7.4.2.zip into the current directory, so many files appear there

Tar

tar zxvf canal.deployer-1.1.4.tar.gz - extracts canal.deployer-1.1.4.tar.gz into the current directory

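tar zxvf canal.deployer-1.1.4.tar.gz -C /usr/local/canal

- extracts canal.deployer-1.1.4.tar.gz into /usr/local/canal. The target directory must already exist. The extracted entries keep the names stored in the archive, so any renaming has to be done separately in the target directory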
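If an archive wraps everything in one top-level directory, GNU tar's --strip-components can place the contents directly into a directory whose name you choose. This sketch uses a hypothetical archive built on the spot. The real canal.deployer archive may not have a top-level directory; in that case -C alone already does this.

```shell
#!/usr/bin/env bash
tar_dir=$(mktemp -d)
mkdir -p "$tar_dir/src/canal/conf" "$tar_dir/canal-1.1.4"
echo "canal.port=11111" > "$tar_dir/src/canal/conf/canal.properties"
# Build a demo archive whose entries all start with "canal/".
tar czf "$tar_dir/canal.tar.gz" -C "$tar_dir/src" canal

# -C needs an existing directory; --strip-components=1 drops the leading "canal/"
# so conf/ lands directly in canal-1.1.4.
tar xzf "$tar_dir/canal.tar.gz" -C "$tar_dir/canal-1.1.4" --strip-components=1
```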

Finding a file's absolute path

find / -name "hudson.model.UpdateCenter.xml" - finds the absolute path of hudson.model.UpdateCenter.xml; matching paths are printed, and no output means there is no such file

Viewing file contents

tail -n 20 Dockerfile - shows the last 20 lines of the file

Directory operations

-

pwd prints the absolute path of the current directory

-

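rm -rf brotli-1.0.9 forcibly and recursively deletes the brotli-1.0.9 directory in the current directory. The options behave as follows:

- Without options, rm refuses and reports that the target is a directory.
- With only -r, it asks for confirmation before deleting each file (for root on CentOS, rm is aliased to rm -i).
- With -rf, it deletes the whole directory without prompting -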

cd with no arguments goes to the home directory

-

tree /usr/local/nginx/ shows the structure of a directory as a tree

[root@nginx1 ngx_tmp]# tree /ngx_tmp/
/ngx_tmp/
├── 3
│   └── 25
├── 5
│   └── 13
│       └── 4cb14647de7a8b8654a4ae94f15a0135
└── 9
    └── 7d
        └── 6666cd76f96956469e7be39d750cc7d9
-

mkdir -p /usr/local/nginx/ngx_logs creates /usr/local/nginx/ngx_logs together with any missing parent directories -

whereis mysql lists the locations of the mysql binary, source and manual page files on this machine

Finding where software is installed

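which java prints the full path of the java command found in PATH. which looks up executable commands, so pass a command name such as java rather than jdk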

Process operations

Checking ports

-

netstat -ntlp - lists all listening TCP services with their ports and process IDs; filter the output with grep

-

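Run netstat -anp | grep 80 - shows the service using port 80. This also matches other lines containing 80, such as 8080; grep ':80 ' narrows it down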

-

Run lsof -i:8080 to see what is running on port 8080 (webcache is the /etc/services name for port 8080)
[root@Tomcat ~]# lsof -i:8080
COMMAND   PID USER   FD   TYPE DEVICE SIZE/OFF NODE NAME
java    31259 root   49u  IPv6 465528      0t0  TCP *:webcache (LISTEN)
-

ps -ef - lists all processes; pipe it to grep to filter out everything but the lines you need, e.g. ps -ef|grep java

-

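ps -Tf -p <PID> - shows all threads of the given process (the SPID column is the thread ID), including the user running it, the PID and the path of the process executable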

-

kill <PID> - terminates the process with the given PID (sends SIGTERM)

-

kill -9 <PID> - forcibly kills the process with the given PID (sends SIGKILL, which cannot be caught or ignored)

-

top -

shows, in real time, the name, PID, CPU and memory usage of every process running on the system

-

Press Shift+H to toggle the display of threads

-

Press q (or Ctrl+C) to quit the monitor

-

-

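top -H -p <PID> - shows the given process and all of its threads, including each thread's CPU and memory usage and its thread ID

- Common threads in Java programs include:
  - Finalizer: runs finalize() on objects awaiting finalization. Garbage collection itself is done by separate GC threads.
  - C1 CompilerThre and C2 CompilerThre: the JDK's just-in-time compiler threads C1 CompilerThread and C2 CompilerThread. Linux truncates thread names to 15 characters.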
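When top -H points at a busy thread, its decimal thread ID can be matched against a jstack thread dump, which prints native thread IDs in hex as nid=0x.... Below is a sketch with a hypothetical helper, tid_to_nid. The PID and thread ID in the comment are made-up examples.

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert a decimal thread ID from `top -H -p <PID>` to the hex form used by jstack.
tid_to_nid() { printf '0x%x\n' "$1"; }

# Possible real use (example IDs):
#   jstack 31259 | grep -A 10 "nid=$(tid_to_nid 31260)"
```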

Firewall operations

-

systemctl start firewalld - starts the firewall

-

systemctl restart firewalld - restarts the firewall

-

firewall-cmd --list-all - lists the rules configured in the firewall (for the default zone)

-

systemctl stop firewalld - stops the firewall

-