Inverted Index in Practice (Chaining Multiple Jobs)

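0) Requirement: given a large volume of text (documents, web pages), build a search index. The sample input consists of three files: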

a.txt:

atguigu pingping

atguigu ss

atguigu ss

b.txt:

atguigu pingping

atguigu pingping

pingping ss

c.txt:

atguigu ss

atguigu pingping

Final output:

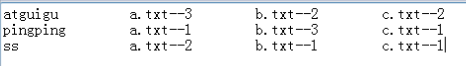

(1) Expected output of the first pass

atguigu--a.txt 3

atguigu--b.txt 2

atguigu--c.txt 2

pingping--a.txt 1

pingping--b.txt 3

pingping--c.txt 1

ss--a.txt 2

ss--b.txt 1

ss--c.txt 1

(2) Expected output of the second pass

atguigu c.txt-->2 b.txt-->2 a.txt-->3

pingping c.txt-->1 b.txt-->3 a.txt-->1

ss c.txt-->1 b.txt-->1 a.txt-->2
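The two passes can be traced on the sample data without a cluster. The following is a minimal plain-Java sketch, assuming in-memory maps stand in for the MapReduce shuffle. The class `InvertedIndexSketch` and its methods are hypothetical names used only for illustration; they are not part of the Hadoop code in this post.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical illustration class: simulates both passes in memory.
public class InvertedIndexSketch {

    // Pass 1: count occurrences per composite key "word--file".
    static Map<String, Integer> firstPass(Map<String, List<String>> files) {
        Map<String, Integer> counts = new TreeMap<>(); // sorted keys, like reducer input
        for (Map.Entry<String, List<String>> file : files.entrySet()) {
            for (String line : file.getValue()) {
                for (String word : line.split(" ")) {
                    counts.merge(word + "--" + file.getKey(), 1, Integer::sum);
                }
            }
        }
        return counts;
    }

    // Pass 2: regroup by word and collect "file-->count" postings.
    static Map<String, List<String>> secondPass(Map<String, Integer> counts) {
        Map<String, List<String>> index = new TreeMap<>();
        for (Map.Entry<String, Integer> entry : counts.entrySet()) {
            String[] fields = entry.getKey().split("--");
            index.computeIfAbsent(fields[0], w -> new ArrayList<>())
                 .add(fields[1] + "-->" + entry.getValue());
        }
        return index;
    }

    // The three sample files from the requirement above.
    static Map<String, List<String>> sampleFiles() {
        Map<String, List<String>> files = new LinkedHashMap<>();
        files.put("a.txt", Arrays.asList("atguigu pingping", "atguigu ss", "atguigu ss"));
        files.put("b.txt", Arrays.asList("atguigu pingping", "atguigu pingping", "pingping ss"));
        files.put("c.txt", Arrays.asList("atguigu ss", "atguigu pingping"));
        return files;
    }

    public static void main(String[] args) {
        Map<String, Integer> counts = firstPass(sampleFiles());
        secondPass(counts).forEach((word, postings) ->
                System.out.println(word + "\t" + String.join("\t", postings)));
    }
}
```

In this sketch the postings for each word come out in file-name order (a.txt, b.txt, c.txt) because of the sorted map. A real MapReduce job does not guarantee the order of values within one reduce call, so the order shown in the expected output may differ from run to run.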

1) First pass

(1) First pass: write OneIndexMapper

import java.io.IOException;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.lib.input.FileSplit;

public class OneIndexMapper extends Mapper<LongWritable, Text, Text, IntWritable> {

    // Reused across calls to avoid allocating a new object per record
    Text k = new Text();
    IntWritable v = new IntWritable(1);

    @Override
    protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
        // 1 Read one line
        String line = value.toString();

        // 2 Split the line into words
        String[] fields = line.split(" ");

        // 3 Get the name of the file this split belongs to
        FileSplit inputSplit = (FileSplit) context.getInputSplit();
        String name = inputSplit.getPath().getName();

        // 4 Build the composite key "word--fileName"
        for (int i = 0; i < fields.length; i++) {
            k.set(fields[i] + "--" + name);

            // 5 Emit (word--fileName, 1)
            context.write(k, v);
        }
    }
}

(2) First pass: write OneIndexReducer

import java.io.IOException;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

public class OneIndexReducer extends Reducer<Text, IntWritable, Text, IntWritable> {

    @Override
    protected void reduce(Text key, Iterable<IntWritable> values,
            Context context) throws IOException, InterruptedException {
        // Sum the counts for this "word--fileName" key
        int count = 0;
        for (IntWritable value : values) {
            count += value.get();
        }

        // Emit (word--fileName, total)
        context.write(key, new IntWritable(count));
    }
}

(3) First pass: write OneIndexDriver

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class OneIndexDriver {

    public static void main(String[] args) throws Exception {
        Configuration conf = new Configuration();
        Job job = Job.getInstance(conf);
        job.setJarByClass(OneIndexDriver.class);

        job.setMapperClass(OneIndexMapper.class);
        job.setReducerClass(OneIndexReducer.class);

        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(IntWritable.class);

        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(IntWritable.class);

        // args[0]: input directory, args[1]: output directory (must not exist yet)
        FileInputFormat.setInputPaths(job, new Path(args[0]));
        FileOutputFormat.setOutputPath(job, new Path(args[1]));

        // Return a non-zero exit code on failure so the second job is not run on bad data
        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}

(4) Check the output of the first pass

atguigu--a.txt 3

atguigu--b.txt 2

atguigu--c.txt 2

pingping--a.txt 1

pingping--b.txt 3

pingping--c.txt 1

ss--a.txt 2

ss--b.txt 1

ss--c.txt 1

2) Second pass

(1) Second pass: write TwoIndexMapper

import java.io.IOException;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

public class TwoIndexMapper extends Mapper<LongWritable, Text, Text, Text> {

    Text k = new Text();
    Text v = new Text();

    @Override
    protected void map(LongWritable key, Text value, Context context)
            throws IOException, InterruptedException {
        // 1) Read one line of first-pass output
        String line = value.toString();

        // 2) Split on "--"
        String[] fields = line.split("--");

        // 3) The word becomes the key; the file name and count become the value
        k.set(fields[0]);
        v.set(fields[1]);

        // 4) Emit
        context.write(k, v);
    }
}
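To make the split concrete: with the default text output format, each line written by the first job has the form `word--file<TAB>count`, so splitting on `--` leaves the file name and the count together in the value. The following is a small plain-Java sketch of that step; `FirstPassLineSketch` and `toKeyValue` are hypothetical names introduced only for this illustration.

```java
// Hypothetical illustration class: mirrors the split done in the second mapper.
public class FirstPassLineSketch {

    // Splits one line of first-pass output into {key, value}.
    static String[] toKeyValue(String line) {
        String[] fields = line.split("--");
        return new String[] { fields[0], fields[1] };
    }

    public static void main(String[] args) {
        String[] kv = toKeyValue("atguigu--a.txt\t3");
        // Show the tab as a visible escape so the value's structure is clear
        System.out.println(kv[0] + " | " + kv[1].replace("\t", "\\t"));
    }
}
```

The value still contains the tab between file name and count, which is why the expected `file-->count` form needs one more formatting step before the final line is written.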

(2) Second pass: write TwoIndexReducer

import java.io.IOException;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

public class TwoIndexReducer extends Reducer<Text, Text, Text, Text> {

    Text v = new Text();

    @Override
    protected void reduce(Text key, Iterable<Text> values, Context context)
            throws IOException, InterruptedException {
        StringBuilder sb = new StringBuilder();

        // Concatenate the postings. Each value arrives as "file<TAB>count";
        // rewrite it as "file-->count" to match the expected output.
        for (Text text : values) {
            sb.append(text.toString().replace("\t", "-->")).append("\t");
        }

        // Set the output value
        v.set(sb.toString());

        // Emit (word, posting list)
        context.write(key, v);
    }
}
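The expected output shows each posting as `file-->count`, separated by tabs. As a minimal plain-Java sketch of that formatting step, assuming the incoming values look like `a.txt<TAB>3`, a `StringJoiner` also avoids the trailing tab that appending a separator after every value leaves behind. `PostingListSketch` and `join` are hypothetical names, not part of the reducer itself.

```java
import java.util.Arrays;
import java.util.List;
import java.util.StringJoiner;

// Hypothetical illustration class: formats the values of one reduce call.
public class PostingListSketch {

    // Joins values such as "a.txt<TAB>3" into "a.txt-->3<TAB>b.txt-->2 ..."
    static String join(List<String> values) {
        StringJoiner joiner = new StringJoiner("\t");
        for (String value : values) {
            joiner.add(value.replace("\t", "-->"));
        }
        return joiner.toString();
    }

    public static void main(String[] args) {
        System.out.println(join(Arrays.asList("c.txt\t2", "b.txt\t2", "a.txt\t3")));
    }
}
```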

(3) Second pass: write TwoIndexDriver

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class TwoIndexDriver {

    public static void main(String[] args) throws Exception {
        Configuration config = new Configuration();
        Job job = Job.getInstance(config);
        job.setJarByClass(TwoIndexDriver.class);

        job.setMapperClass(TwoIndexMapper.class);
        job.setReducerClass(TwoIndexReducer.class);

        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(Text.class);

        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(Text.class);

        // args[0]: output directory of the first pass, args[1]: final output directory
        FileInputFormat.setInputPaths(job, new Path(args[0]));
        FileOutputFormat.setOutputPath(job, new Path(args[1]));

        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}

(4) Check the final output of the second pass

atguigu c.txt-->2 b.txt-->2 a.txt-->3

pingping c.txt-->1 b.txt-->3 a.txt-->1

ss c.txt-->1 b.txt-->1 a.txt-->2
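The two drivers above are launched separately, with the output directory of the first pass passed by hand as the input of the second. One way to chain them in a single program is sketched below. This is an assumption-laden sketch rather than part of the original walkthrough: `ChainedIndexDriver`, the job names, and the three-argument layout (input, intermediate directory, final output) are hypothetical; the mapper and reducer classes are the ones defined above, and the block needs the Hadoop client libraries on the classpath.

```java
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

// Hypothetical driver: runs the first and second pass back to back.
public class ChainedIndexDriver {

    public static void main(String[] args) throws Exception {
        // Assumed arguments: raw input, intermediate directory, final output
        Path input = new Path(args[0]);
        Path intermediate = new Path(args[1]);
        Path output = new Path(args[2]);

        Configuration conf = new Configuration();

        // Job 1: count occurrences per "word--fileName"
        Job first = Job.getInstance(conf, "one-index");
        first.setJarByClass(ChainedIndexDriver.class);
        first.setMapperClass(OneIndexMapper.class);
        first.setReducerClass(OneIndexReducer.class);
        first.setMapOutputKeyClass(Text.class);
        first.setMapOutputValueClass(IntWritable.class);
        first.setOutputKeyClass(Text.class);
        first.setOutputValueClass(IntWritable.class);
        FileInputFormat.setInputPaths(first, input);
        FileOutputFormat.setOutputPath(first, intermediate);

        // Stop here if the first job fails; the second would read incomplete data
        if (!first.waitForCompletion(true)) {
            System.exit(1);
        }

        // Job 2: regroup by word, reading the first job's output directory
        Job second = Job.getInstance(conf, "two-index");
        second.setJarByClass(ChainedIndexDriver.class);
        second.setMapperClass(TwoIndexMapper.class);
        second.setReducerClass(TwoIndexReducer.class);
        second.setMapOutputKeyClass(Text.class);
        second.setMapOutputValueClass(Text.class);
        second.setOutputKeyClass(Text.class);
        second.setOutputValueClass(Text.class);
        FileInputFormat.setInputPaths(second, intermediate);
        FileOutputFormat.setOutputPath(second, output);

        System.exit(second.waitForCompletion(true) ? 0 : 1);
    }
}
```

Running the jobs sequentially from one `main` keeps the dependency explicit: the second job is only submitted after the first reports success. Both the intermediate and the final output directory must not exist before the run.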
