
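1. Installing Open MPI

Open MPI is the framework used later to launch and schedule distributed training jobs. Download the source from the official site, then compile and install it. Version 4.1.5, a relatively stable release, is used here; the newer 5.0 is also available.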

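# Installing directly on this machine fails with an error that /usr/lib64/libstdc++.so.6 does not provide GLIBCXX3...., so the library path of the newly installed GCC must be added

echo 'export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/usr/local/lib64' >> ~/.bashrc

# Specify CC and CXX here, otherwise the older gcc may be used for the build; the Java MPI bindings are also enabled

./configure CC=/usr/local/bin/gcc CXX=/usr/local/bin/g++ --enable-mpi-java && make -j4 && make install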
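After the install finishes, a quick check can confirm that an mpirun is on PATH and which version it reports. This is a minimal sketch, not part of the original steps; the function name check_openmpi is hypothetical.

```shell
#!/usr/bin/env bash
# Report which mpirun is first on PATH and the first line of its version output.
# check_openmpi is a hypothetical helper name, not an Open MPI command.
check_openmpi() {
    if ! command -v mpirun >/dev/null 2>&1; then
        echo "mpirun not found on PATH"
        return 1
    fi
    echo "mpirun found at: $(command -v mpirun)"
    mpirun --version | head -n 1
}

check_openmpi || echo "check that the install prefix's bin directory is on PATH"
```

If the reported path is not under the install prefix, an older system package is probably shadowing the new build.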

2. Basic mpirun commands

# Avoid having to add --allow-run-as-root to every command

echo 'export OMPI_ALLOW_RUN_AS_ROOT_CONFIRM=1' >> ~/.bashrc

echo 'export OMPI_ALLOW_RUN_AS_ROOT=1' >> ~/.bashrc

# mpirun has several equivalent commands, all linked to the same executable. Its help is unusual: --help takes an argument that selects a second-level help topic

mpirun --help general/mapping/launch/output.....


3. mpirun: multiple processes on a single host

[root@node-1 ~]# mpirun -np 2 python3 --version

Python 3.9.17

Python 3.9.17

[root@node-1 ~]# mpirun -x ENV1=111 -x ENV2=222 -np 2 env|grep ENV

ENV1=111

ENV2=222

ENV1=111

ENV2=222
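Besides the variables passed with -x, Open MPI sets a few variables of its own in every process it launches, including OMPI_COMM_WORLD_RANK and OMPI_COMM_WORLD_SIZE. The sketch below reads them from a wrapper script. The script name rank_info.sh and the function describe_rank are hypothetical, and the fallback values 0 and 1 are an assumption for runs outside mpirun.

```shell
#!/usr/bin/env bash
# rank_info.sh (hypothetical name): print this process's rank as set by Open MPI.
describe_rank() {
    # Fall back to "0 of 1" when run without mpirun (assumed default, not an Open MPI rule).
    rank="${OMPI_COMM_WORLD_RANK:-0}"
    size="${OMPI_COMM_WORLD_SIZE:-1}"
    echo "rank ${rank} of ${size}"
}

describe_rank
```

Launched as mpirun -np 2 bash rank_info.sh, each process should print its own line, which gives a script a simple way to behave differently per rank.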

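4. mpirun: multiple processes across multiple hosts

Three hosts are used here (126, 127, 128). The node that runs the mpirun command is the head node (126 here). It launches the application processes on the other hosts (127, 128) through remote ssh commands, so it needs passwordless SSH access to them.

# On 126, generate an RSA key pair and copy the public key to 127 and 128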

ssh-keygen -t rsa

ssh-copy-id -i ~/.ssh/id_rsa.pub 192.168.72.127

ssh-copy-id -i ~/.ssh/id_rsa.pub 192.168.72.128
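Before running mpirun across hosts, it can help to confirm that the key copy worked, since a password prompt would stall the remote launch. This is a minimal sketch using standard OpenSSH options; the helper name check_passwordless is hypothetical.

```shell
#!/usr/bin/env bash
# Verify that the head node can log in to each worker over ssh without a password prompt.
# check_passwordless is a hypothetical helper; pass the worker hosts as arguments.
check_passwordless() {
    rc=0
    for host in "$@"; do
        # BatchMode=yes makes ssh fail instead of prompting for a password.
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true 2>/dev/null; then
            echo "$host: ok"
        else
            echo "$host: passwordless login failed"
            rc=1
        fi
    done
    return "$rc"
}

# Example call on the head node (commented out so the file can be sourced safely):
# check_passwordless 192.168.72.127 192.168.72.128
```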

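Use -host to specify the nodes and the number of processes to start on each. Hostnames can replace the IPs, provided /etc/hosts resolves them. Alternatively, -hostfile can supply the nodes and process counts.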

[root@node-1 ~]# mpirun -host 192.168.72.126:2,192.168.72.127:2,192.168.72.128:2 ip addr|grep eth0

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UNKNOWN group default qlen 1000

inet 192.168.72.126/24 brd 192.168.72.255 scope global noprefixroute eth0

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UNKNOWN group default qlen 1000

inet 192.168.72.126/24 brd 192.168.72.255 scope global noprefixroute eth0

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UNKNOWN group default qlen 1000

inet 192.168.72.127/24 brd 192.168.72.255 scope global noprefixroute eth0

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UNKNOWN group default qlen 1000

inet 192.168.72.128/24 brd 192.168.72.255 scope global noprefixroute eth0

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UNKNOWN group default qlen 1000

inet 192.168.72.127/24 brd 192.168.72.255 scope global noprefixroute eth0

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UNKNOWN group default qlen 1000

inet 192.168.72.128/24 brd 192.168.72.255 scope global noprefixroute eth0
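The -hostfile alternative mentioned above takes a file with one node per line and a slots=N count for the number of processes on that node. The sketch below generates such a file for the three hosts in this article. The helper name write_hostfile and the file name hosts.txt are hypothetical.

```shell
#!/usr/bin/env bash
# Write an Open MPI hostfile: one line per node, "slots" = processes to start there.
# write_hostfile is a hypothetical helper; arguments are <output file> <slots> <host>...
write_hostfile() {
    out="$1"; slots="$2"; shift 2
    : > "$out"                               # truncate or create the file
    for host in "$@"; do
        echo "$host slots=$slots" >> "$out"
    done
}

write_hostfile ./hosts.txt 2 192.168.72.126 192.168.72.127 192.168.72.128
cat ./hosts.txt

# Should behave like the -host form used above (run on the head node):
# mpirun -hostfile ./hosts.txt ip addr | grep eth0
```

A hostfile is easier to keep under version control than a long -host argument once the node list grows.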

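5. Process and port details of a multi-host MPI job

From 126, start two Python infinite-loop processes on each of 127 and 128 that print the time; the head node 126 runs no application processes
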

mpirun -host 192.168.72.127:2,192.168.72.128:2 python3 -c 'while True: import time; print(time.localtime());time.sleep(3);'

On 126: the mpirun main process and the two ssh processes it spawned for the remote launch

[root@node-1 ~]# ps -ef|grep -v grep|egrep 'mpirun|orte|python3'

root 27661 29396 4 17:39 pts/3 00:00:00 mpirun -host 192.168.72.127:2,192.168.72.128:2 python3 -c while True: import time; print(time.localtime());time.sleep(3);

root 27684 27661 1 17:39 pts/3 00:00:00 /usr/bin/ssh -x 192.168.72.127 orted -mca ess "env" -mca ess_base_jobid "4102619136" -mca ess_base_vpid 1 -mca ess_base_num_procs "3" -mca orte_node_regex "node-[1:1],[3:192].168.72.127,[3:192].168.72.128@0(3)" -mca orte_hnp_uri "4102619136.0;tcp://192.168.72.126,172.17.0.1,172.18.0.1:45543" -mca plm "rsh" --tree-spawn -mca routed "radix" -mca orte_parent_uri "4102619136.0;tcp://192.168.72.126,172.17.0.1,172.18.0.1:45543" -mca pmix "^s1,s2,cray,isolated"

root 27685 27661 1 17:39 pts/3 00:00:00 /usr/bin/ssh -x 192.168.72.128 orted -mca ess "env" -mca ess_base_jobid "4102619136" -mca ess_base_vpid 2 -mca ess_base_num_procs "3" -mca orte_node_regex "node-[1:1],[3:192].168.72.127,[3:192].168.72.128@0(3)" -mca orte_hnp_uri "4102619136.0;tcp://192.168.72.126,172.17.0.1,172.18.0.1:45543" -mca plm "rsh" --tree-spawn -mca routed "radix" -mca orte_parent_uri "4102619136.0;tcp://192.168.72.126,172.17.0.1,172.18.0.1:45543" -mca pmix "^s1,s2,cray,isolated"

[root@node-1 ~]# netstat -ntlp|egrep 'mpirun|orte|python3'

tcp 0 0 127.0.0.1:34834 0.0.0.0:* LISTEN 27661/mpirun

tcp 0 0 0.0.0.0:45543 0.0.0.0:* LISTEN 27661/mpirun

127 and 128 each run the orted process that 126 launched over ssh, plus the two application processes spawned by that orted

[root@node-1 ~]# ps -ef|grep -v grep|egrep 'mpirun|orte|python3'

root 8633 8631 0 17:39 ? 00:00:00 orted -mca ess env -mca ess_base_jobid 4102619136 -mca ess_base_vpid 2 -mca ess_base_num_procs 3 -mca orte_node_regex node-[1:1],[3:192].168.72.127,[3:192].168.72.128@0(3) -mca orte_hnp_uri 4102619136.0;tcp://192.168.72.126,172.17.0.1,172.18.0.1:45543 -mca plm rsh --tree-spawn -mca routed radix -mca orte_parent_uri 4102619136.0;tcp://192.168.72.126,172.17.0.1,172.18.0.1:45543 -mca pmix ^s1,s2,cray,isolated

root 8640 8633 0 17:39 ? 00:00:00 python3 -c while True: import time; print(time.localtime());time.sleep(3);

root 8641 8633 0 17:39 ? 00:00:00 python3 -c while True: import time; print(time.localtime());time.sleep(3);

6. Testing hello_c.c from the Open MPI examples

Compile hello_c.c with the wrapper compiler and run it on a single host

[root@node-1 examples]# mpicc -o helloc hello_c.c

[root@node-1 examples]# ls

connectivity_c.c Hello.java hello_oshmemfh.f90 Makefile.include oshmem_strided_puts.c ring_cxx.cc ring_oshmemfh.f90

helloc hello_mpifh.f hello_usempif08.f90 oshmem_circular_shift.c oshmem_symmetric_data.c Ring.java ring_usempif08.f90

hello_c.c hello_oshmem_c.c hello_usempi.f90 oshmem_max_reduction.c README ring_mpifh.f ring_usempi.f90

hello_cxx.cc hello_oshmem_cxx.cc Makefile oshmem_shmalloc.c ring_c.c ring_oshmem_c.c spc_example.c

[root@node-1 examples]# ./helloc

Hello, world, I am 0 of 1, (Open MPI v4.1.5, package: Open MPI root@node-1 Distribution, ident: 4.1.5, repo rev: v4.1.5, Feb 23, 2023, 106)

[root@node-1 examples]# mpicc --showme

/usr/local/bin/gcc -I/usr/local/include -pthread -L/usr/local/lib -Wl,-rpath -Wl,/usr/local/lib -Wl,--enable-new-dtags -lmpi

Compile and run the hello_c.c example from the Open MPI examples directory in an IDE

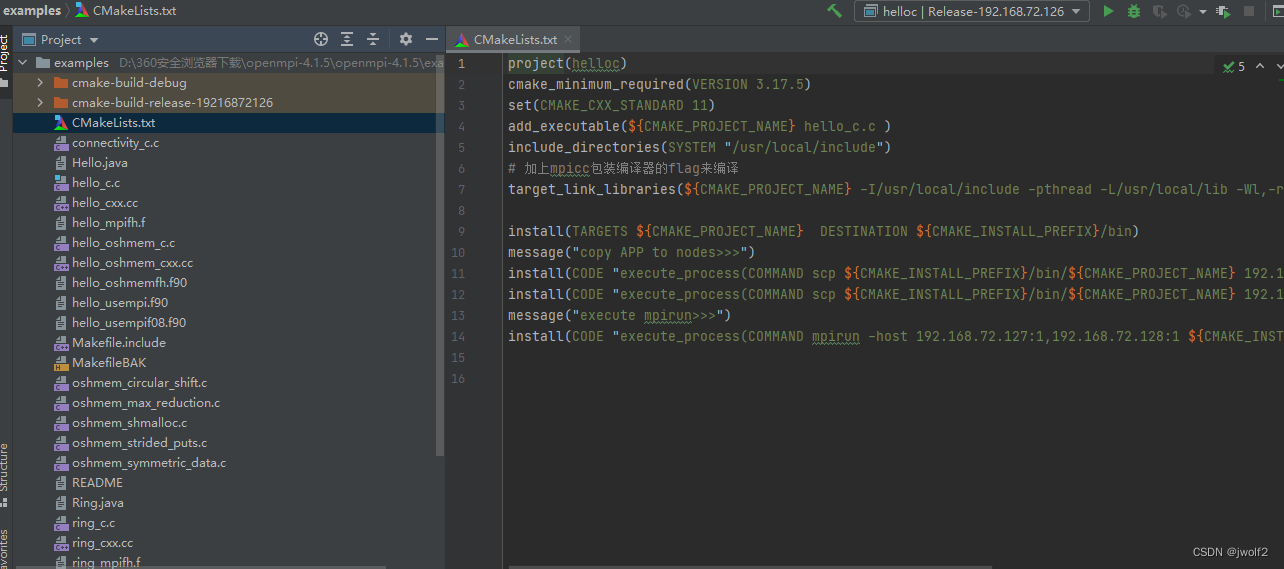

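Extract 4.1.5.tar.gz, delete the Makefile, and add a CMakeLists.txt (CMake syntax is simpler). Open the examples directory in CLion and edit CMakeLists.txt as follows (after install, it runs commands to copy the newly built target to each node, then runs mpirun to launch it)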

cmake_minimum_required(VERSION 3.17.5)

project(helloc)

set(CMAKE_CXX_STANDARD 11)

add_executable(${CMAKE_PROJECT_NAME} hello_c.c )

include_directories(SYSTEM "/usr/local/include")

# Build with the flags reported by the mpicc wrapper compiler (mpicc --showme)

target_link_libraries(${CMAKE_PROJECT_NAME} -I/usr/local/include -pthread -L/usr/local/lib -Wl,-rpath -Wl,/usr/local/lib -Wl,--enable-new-dtags -lmpi)

install(TARGETS ${CMAKE_PROJECT_NAME} DESTINATION ${CMAKE_INSTALL_PREFIX}/bin)

message("copy APP to nodes>>>")

install(CODE "execute_process(COMMAND scp ${CMAKE_INSTALL_PREFIX}/bin/${CMAKE_PROJECT_NAME} 192.168.72.127:${CMAKE_INSTALL_PREFIX}/bin)")

install(CODE "execute_process(COMMAND scp ${CMAKE_INSTALL_PREFIX}/bin/${CMAKE_PROJECT_NAME} 192.168.72.128:${CMAKE_INSTALL_PREFIX}/bin)")

message("execute mpirun>>>")

install(CODE "execute_process(COMMAND mpirun -host 192.168.72.127:1,192.168.72.128:1 ${CMAKE_INSTALL_PREFIX}/bin/${CMAKE_PROJECT_NAME} 10)")

Modify hello_c.c to print in a loop so that the process does not exit too quickly

#include <stdio.h>

#include <stdlib.h>

#include <unistd.h> /* declares sleep() */

#include "mpi.h"

int main(int argc, char* argv[])

{

int rank, size, len;

char version[MPI_MAX_LIBRARY_VERSION_STRING];

MPI_Init(&argc, &argv);

MPI_Comm_rank(MPI_COMM_WORLD, &rank);

MPI_Comm_size(MPI_COMM_WORLD, &size);

MPI_Get_library_version(version, &len);

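/* The iteration count comes from argv[1]; default to 1 when it is missing */

int limit = (argc > 1) ? atoi(argv[1]) : 1;

int count=0;

while (count<limit){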

printf("Hello, world, I am %d of %d, (%s, %d)\n",rank, size, version, len);

sleep(3);

count++;

}

MPI_Finalize();

return 0;

}

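The new application process helloc listens on one more port than the earlier python3 process did. This is the port used for communication between nodes in a distributed computation; bringing in the MPI framework adds such a listening port. In this example the helloc processes do not actually communicate with each other; the more complex example ring_c.c does call the MPI communication API.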

[root@node-1 ~]# netstat -ntlp|egrep 'mpirun|orte|helloc'

tcp 0 0 0.0.0.0:1024 0.0.0.0:* LISTEN 18314/helloc

tcp 0 0 127.0.0.1:50885 0.0.0.0:* LISTEN 18306/orted

tcp 0 0 0.0.0.0:58889 0.0.0.0:* LISTEN 18306/orted
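The listening port 1024 on helloc is plausibly opened by Open MPI's TCP transport (the tcp BTL component) during MPI_Init. That attribution is an assumption; the output above does not prove it. One way to look into it is to list the port-related parameters of that component with ompi_info. This is a minimal sketch, and the helper name show_tcp_port_params is hypothetical.

```shell
#!/usr/bin/env bash
# List the TCP BTL port parameters known to this Open MPI build, if ompi_info is available.
# show_tcp_port_params is a hypothetical helper name.
show_tcp_port_params() {
    if ! command -v ompi_info >/dev/null 2>&1; then
        echo "ompi_info not found on PATH"
        return 1
    fi
    # --level 9 shows all parameters; keep only the port-related ones.
    ompi_info --param btl tcp --level 9 | grep -i port
}

show_tcp_port_params || true
```

Parameters listed there can be overridden per run with mpirun's --mca option, which is useful when a firewall only allows a specific port range.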
