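● Difficulty: Beginner ⭐

● Language: Python 3, PyTorch

🍺 Requirements:

1. Save the best-performing model parameters during training.

2. Use the official VGG-16 network architecture.

🍻 Stretch goals (optional):

1. Reach 60% accuracy on the test set.

2. Build the VGG-16 network architecture by hand.

Note: this exercise is fairly important. First, it is a multi-class classification problem; second, compared with the previous tasks, it gives a much better feel for how much certain parameters matter.

🍨 This post is a study log from the 🔗365天深度学习训练营 (365-Day Deep Learning Training Camp)

🍖 Original author: K同学啊 | tutoring and custom projects available

365天深度学习训练营 check-in

Week 6: Hollywood star recognition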

I. Problem analysis

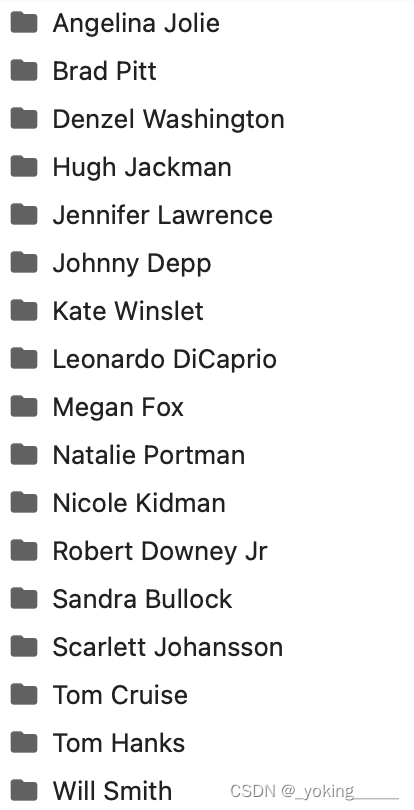

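The data contains 17 classes, so the goal is to train a multi-class classifier that distinguishes all 17.

The classes are therefore labeled with the integers 0 to 16.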
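As a minimal sketch of how folder names can end up as labels 0 to 16: torchvision's `ImageFolder` assigns indices to class folders in sorted order. The helper `build_class_index` and two of the three star names below are hypothetical and only serve as an illustration.

```python
def build_class_index(folder_names):
    """Map class folder names to integer labels 0..N-1 in sorted order."""
    return {name: idx for idx, name in enumerate(sorted(folder_names))}


# Hypothetical subset of class folders (the real dataset has 17 of them)
example_folders = ["Tom Cruise", "Angelina Jolie", "Jennifer Lawrence"]
class_to_idx = build_class_index(example_folders)
print(class_to_idx)
```

With 17 folders, the same mapping yields exactly the labels 0 through 16.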

1. Set up the GPU

import torch
import torch.nn as nn
from torchvision import datasets, transforms

device = "cuda" if torch.cuda.is_available() else 'cpu'

2. Load the data

# Load the data
import PIL, random, pathlib

data_dir = './data/'
data_dir = pathlib.Path(data_dir)
print(data_dir)

data_paths = list(data_dir.glob('*'))
print(data_paths)

# path.name gives the folder name on any OS (splitting on "\\" only works on Windows)
classNames = [path.name for path in data_paths]
classNames

3. Split the dataset

image_count = len(list(data_dir.glob('*/*.jpg')))
print("Sum of pictures:", image_count)

from PIL import Image

# Open one sample image to check that the data loads
jennifer = list(data_dir.glob('Jennifer Lawrence/*.jpg'))
Image.open(jennifer[0])

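Following the reference, the input image size is changed to 256x256 here.

train_transforms = transforms.Compose([
    transforms.Resize([256, 256]),   # resize every image to 256x256
    transforms.ToTensor(),           # PIL image -> float tensor in [0, 1]
    transforms.Normalize(            # per-channel standardization
        mean=[0.485, 0.456, 0.406],
        std=[0.229, 0.224, 0.225]
    )
])

total_data = datasets.ImageFolder("./data/", transform=train_transforms)
total_data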
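To make the `Normalize` step concrete, here is a small dependency-free sketch of the arithmetic it applies to each channel, `(value - mean) / std`, using the mean and std values from the transform above. The helper name `normalize_pixel` is hypothetical.

```python
MEAN = [0.485, 0.456, 0.406]
STD = [0.229, 0.224, 0.225]


def normalize_pixel(rgb):
    """Standardize one RGB pixel (already scaled to [0, 1]) channel by channel."""
    return [(value - m) / s for value, m, s in zip(rgb, MEAN, STD)]


mid_gray = normalize_pixel([0.5, 0.5, 0.5])
print([round(v, 3) for v in mid_gray])
```

A pixel equal to the channel means maps to zero in every channel, which is the point of the standardization.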

Split into a training set and a test set

train_size = int(0.8 * len(total_data))
test_size = len(total_data) - train_size

train_dataset, test_dataset = torch.utils.data.random_split(total_data, [train_size, test_size])
train_dataset, test_dataset
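The 80/20 split can be sketched without PyTorch as a seeded shuffle of sample indices. This is only an illustration of the idea, not how `random_split` is implemented; the helper `split_indices`, the seed, and the total of 1800 images are assumptions.

```python
import random


def split_indices(n_samples, train_fraction=0.8, seed=42):
    """Shuffle sample indices with a fixed seed and cut them into train/test parts."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    train_size = int(train_fraction * n_samples)
    return indices[:train_size], indices[train_size:]


# Assumed dataset size of 1800 images
train_idx, test_idx = split_indices(1800)
print(len(train_idx), len(test_idx))
```

Fixing the seed keeps the split identical across runs, which makes accuracy numbers comparable between experiments. `random_split` also accepts a `generator` argument that can be seeded for the same purpose.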

Wrap the datasets in DataLoaders

batch_size = 32

train_dl = torch.utils.data.DataLoader(train_dataset,
                                       batch_size=batch_size,
                                       shuffle=True,
                                       num_workers=1)

test_dl = torch.utils.data.DataLoader(test_dataset,
                                      batch_size=batch_size,
                                      shuffle=False,
                                      num_workers=1)

II. Build a simple CNN

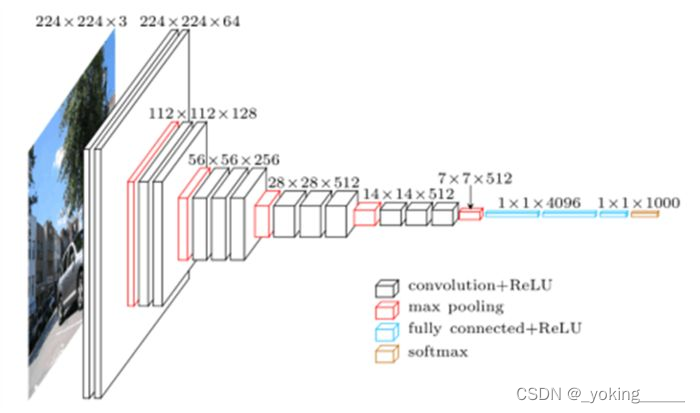

VGG-16 network architecture:

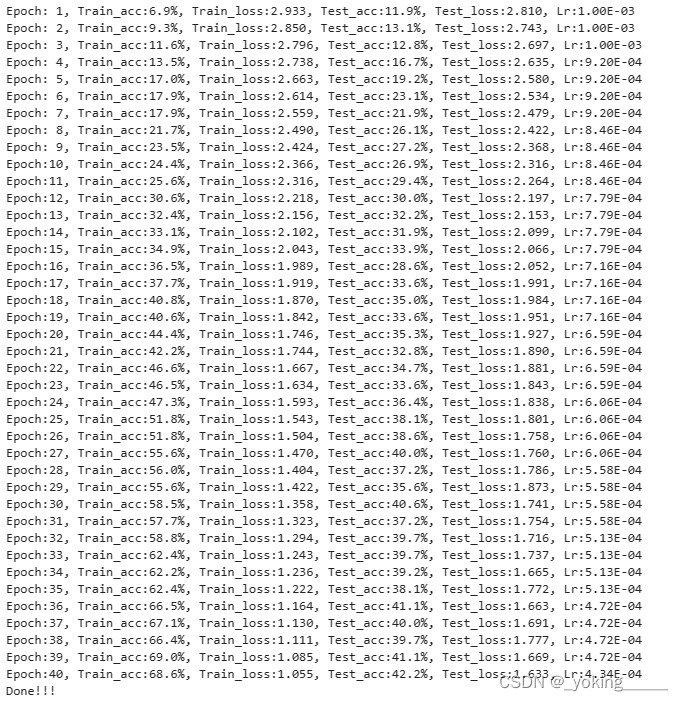

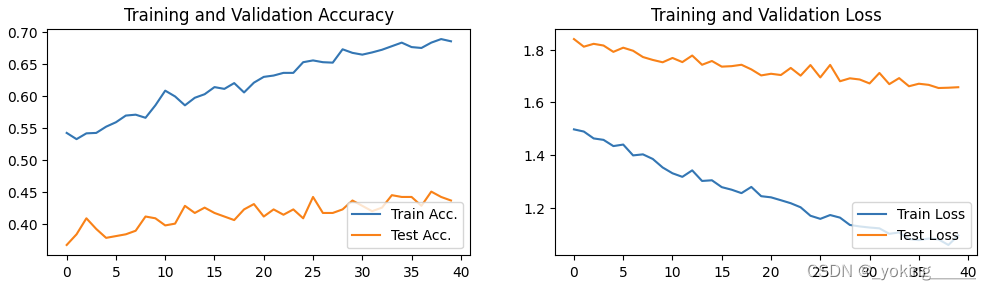

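- Using the VGG-16 architecture as-is fits the data poorly and shows fairly obvious overfitting. The figure below shows the loss and accuracy when training with the original architecture:

- The loss stays around 2, while the accuracy is only about 20%.

There are only 1,800 training images, which is probably not enough data for VGG-16, so the network needs to be adjusted to prevent overfitting!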

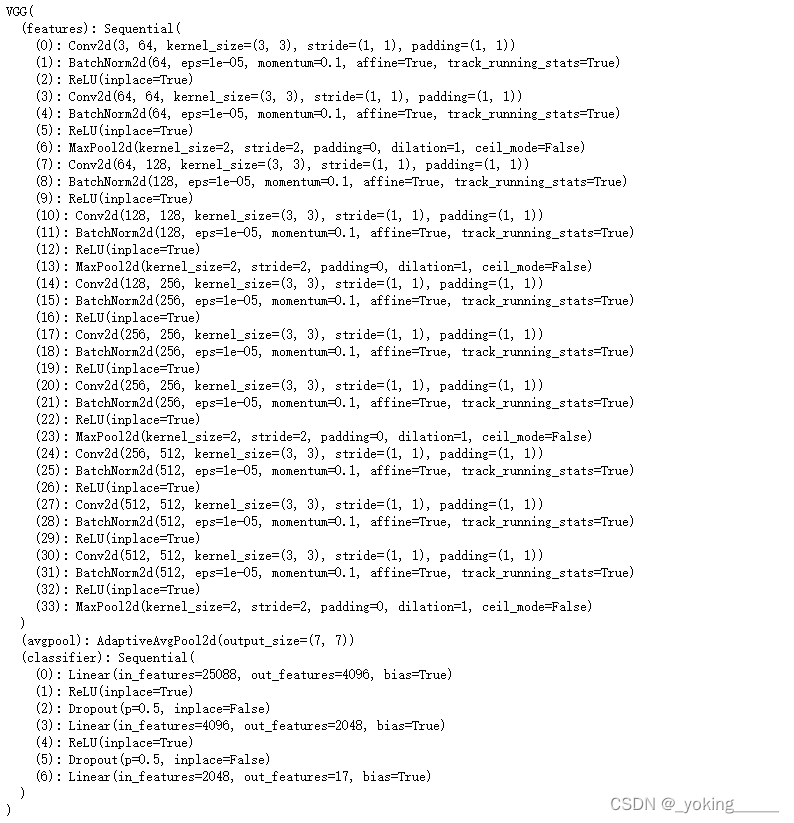

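The network is improved as follows:

- Add BN layers: the role of Batch_Norm

- Resize the images from 224x224 to 256x256

- Reduce the number of neurons in the fully connected layers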
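To illustrate what a BN layer does to its inputs, here is a minimal pure-Python sketch of the training-time normalization for a single channel: subtract the batch mean, divide by the square root of the batch variance plus `eps`, then apply the learnable scale `gamma` and shift `beta`. The function name `batch_norm_1d` and the sample activations are hypothetical. A real `nn.BatchNorm2d` does this per channel over the batch and spatial dimensions and also tracks running statistics.

```python
import math


def batch_norm_1d(values, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize a batch of scalars to roughly zero mean and unit variance."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)  # biased variance
    return [gamma * (v - mean) / math.sqrt(var + eps) + beta for v in values]


activations = [2.0, 4.0, 6.0, 8.0]
normalized = batch_norm_1d(activations)
print([round(v, 3) for v in normalized])
```

Keeping activations in a stable range like this tends to make deep networks easier to train on small datasets.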

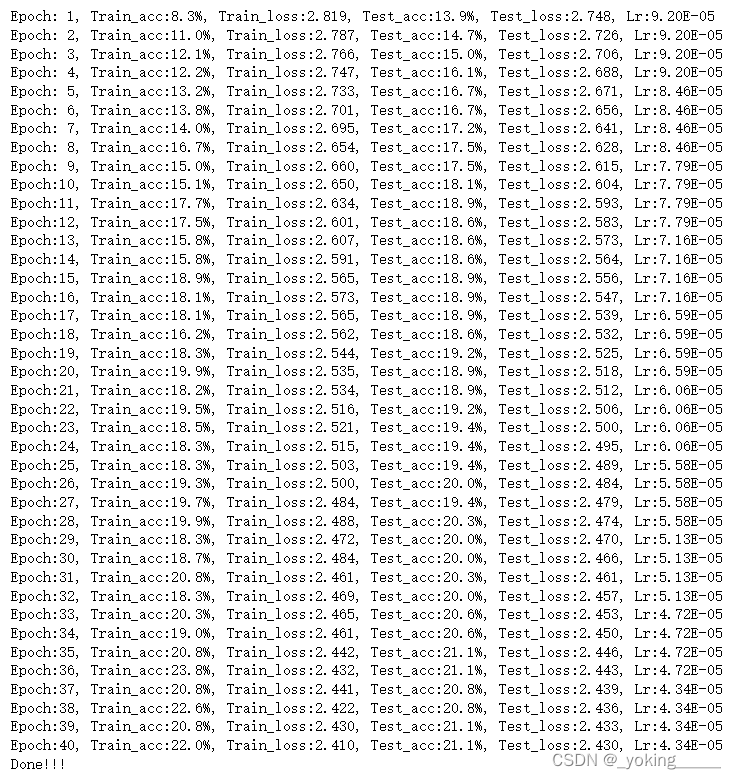

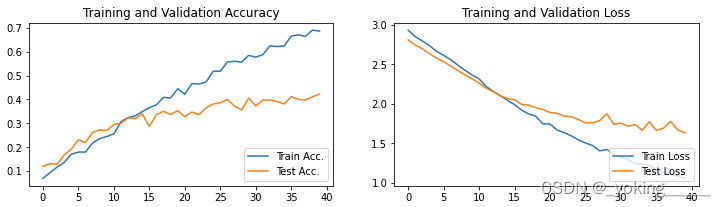

First training run: results with 13 convolutional layers

Loss and Acc.

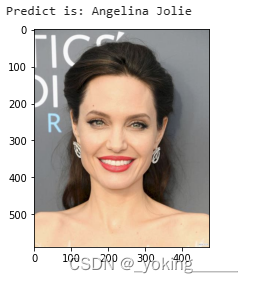

Prediction:

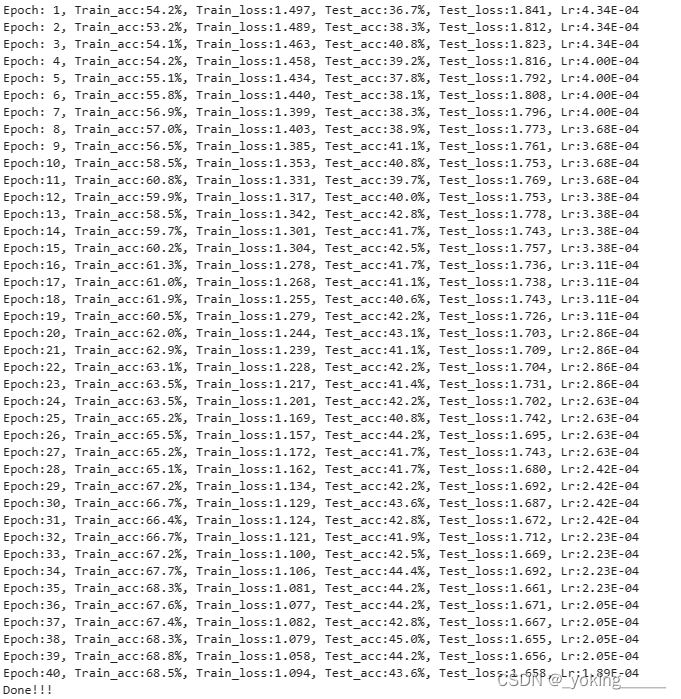

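Second training run: results with 9 convolutional layers

(trained a few more times :))

Loss and Acc.

Prediction:

The modified network structure is as follows:

Full code for adjusting the VGG-16 network structure (possibly a somewhat clumsy way to do it):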

# Build VGG-16
from torchvision.models import vgg16

# Randomly initialized weights; newer torchvision versions spell this vgg16(weights=None)
model = vgg16(pretrained=False).to(device)
for param in model.parameters():
    param.requires_grad = True

# Add BatchNorm layers
model.features._modules['1'] = nn.BatchNorm2d(64, eps=1e-05, momentum=0.1,
                                              affine=True, track_running_stats=True)
model.features._modules['2'] = nn.ReLU(inplace=True)
model.features._modules['3'] = nn.Conv2d(64, 64, kernel_size=(3, 3),
                                         stride=(1, 1), padding=(1, 1))
model.features._modules['4'] = nn.BatchNorm2d(64, eps=1e-05, momentum=0.1,
                                              affine=True, track_running_stats=True)
model.features._modules['5'] = nn.ReLU(inplace=True)
model.features._modules['6'] = nn.MaxPool2d(kernel_size=2, stride=2,
                                            padding=0, dilation=1, ceil_mode=False)

model.features._modules['7'] = nn.Conv2d(64, 128, kernel_size=(3, 3),
                                         stride=(1, 1), padding=(1, 1))
model.features._modules['8'] = nn.BatchNorm2d(128, eps=1e-05, momentum=0.1,
                                              affine=True, track_running_stats=True)
model.features._modules['9'] = nn.ReLU(inplace=True)
model.features._modules['10'] = nn.Conv2d(128, 128, kernel_size=(3, 3),
                                          stride=(1, 1), padding=(1, 1))
model.features._modules['11'] = nn.BatchNorm2d(128, eps=1e-05, momentum=0.1,
                                               affine=True, track_running_stats=True)
model.features._modules['12'] = nn.ReLU(inplace=True)
model.features._modules['13'] = nn.MaxPool2d(kernel_size=2, stride=2,
                                             padding=0, dilation=1, ceil_mode=False)

model.features._modules['14'] = nn.Conv2d(128, 256, kernel_size=(3, 3),
                                          stride=(1, 1), padding=(1, 1))
model.features._modules['15'] = nn.BatchNorm2d(256, eps=1e-05, momentum=0.1,
                                               affine=True, track_running_stats=True)
model.features._modules['16'] = nn.ReLU(inplace=True)
model.features._modules['17'] = nn.Conv2d(256, 256, kernel_size=(3, 3),
                                          stride=(1, 1), padding=(1, 1))
model.features._modules['18'] = nn.BatchNorm2d(256, eps=1e-05, momentum=0.1,
                                               affine=True, track_running_stats=True)
model.features._modules['19'] = nn.ReLU(inplace=True)
model.features._modules['20'] = nn.Conv2d(256, 256, kernel_size=(3, 3),
                                          stride=(1, 1), padding=(1, 1))
model.features._modules['21'] = nn.BatchNorm2d(256, eps=1e-05, momentum=0.1,
                                               affine=True, track_running_stats=True)
model.features._modules['22'] = nn.ReLU(inplace=True)
model.features._modules['23'] = nn.MaxPool2d(kernel_size=2, stride=2,
                                             padding=0, dilation=1, ceil_mode=False)

model.features._modules['24'] = nn.Conv2d(256, 512, kernel_size=(3, 3),
                                          stride=(1, 1), padding=(1, 1))
model.features._modules['25'] = nn.BatchNorm2d(512, eps=1e-05, momentum=0.1,
                                               affine=True, track_running_stats=True)
model.features._modules['26'] = nn.ReLU(inplace=True)
model.features._modules['27'] = nn.Conv2d(512, 512, kernel_size=(3, 3),
                                          stride=(1, 1), padding=(1, 1))
model.features._modules['28'] = nn.BatchNorm2d(512, eps=1e-05, momentum=0.1,
                                               affine=True, track_running_stats=True)
model.features._modules['29'] = nn.ReLU(inplace=True)
model.features._modules['30'] = nn.Conv2d(512, 512, kernel_size=(3, 3),
                                          stride=(1, 1), padding=(1, 1))
model.features.add_module('31', nn.BatchNorm2d(512, eps=1e-05, momentum=0.1,
                                               affine=True, track_running_stats=True))
model.features.add_module('32', nn.ReLU(inplace=True))
model.features.add_module('33', nn.MaxPool2d(kernel_size=2, stride=2,
                                             padding=0, dilation=1, ceil_mode=False))

# model.features.add_module('34', nn.Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)))
# model.features.add_module('35', nn.BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True))
# model.features.add_module('36', nn.ReLU(inplace=True))
# model.features.add_module('37', nn.Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)))
# model.features.add_module('38', nn.BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True))
# model.features.add_module('39', nn.ReLU(inplace=True))
# model.features.add_module('40', nn.Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)))
# model.features.add_module('41', nn.BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True))
# model.features.add_module('42', nn.ReLU(inplace=True))
# model.features.add_module('43', nn.MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False))

# Reduce the number of neurons in the fully connected layers
model.classifier._modules['3'] = nn.Linear(4096, 4096//2)
model.classifier._modules['6'] = nn.Linear(4096//2, len(classNames))

# Move the newly created layers to the device as well
model.to(device)
model
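As a sanity check on the layer sizes, the sketch below traces the spatial size of a 256x256 input through four conv blocks (3x3 convolutions with padding 1, each block ending in a 2x2 max-pool) and counts the parameters of the three fully connected layers. It assumes torchvision's VGG keeps its `AdaptiveAvgPool2d((7, 7))` before the classifier, so the first linear layer still sees 512 * 7 * 7 features; the helper names are hypothetical.

```python
def conv_out(size, kernel=3, stride=1, padding=1):
    """Output size of a convolution along one spatial dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, kernel=2, stride=2):
    """Output size of a max-pool along one spatial dimension."""
    return (size - kernel) // stride + 1


size = 256
for convs_in_block in [2, 2, 3, 3]:  # conv layers per block in the code above
    for _ in range(convs_in_block):
        size = conv_out(size)        # 3x3 conv with padding 1 keeps the size
    size = pool_out(size)            # each max-pool halves it
print("feature map size before avgpool:", size)

flat_features = 512 * 7 * 7          # assumed: adaptive avgpool to 7x7
num_classes = 17
fc_shapes = [(flat_features, 4096), (4096, 4096 // 2), (4096 // 2, num_classes)]
fc_params = [n_in * n_out + n_out for n_in, n_out in fc_shapes]  # weights + biases
print("classifier parameters per layer:", fc_params)
```

Even after halving the second layer, the first fully connected layer dominates the parameter count, which is one reason a small dataset struggles with this model.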

III. Train the model

1. Set hyperparameters

lr = 1e-3
lambda1 = lambda epoch: 0.92 ** (epoch // 4)   # multiply the lr by 0.92 every 4 epochs
optimizer = torch.optim.SGD(model.parameters(), lr=lr)
scheduler = torch.optim.lr_scheduler.LambdaLR(optimizer, lr_lambda=lambda1)
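`LambdaLR` multiplies the initial learning rate by the value the lambda returns for the current epoch. The sketch below reproduces that arithmetic in plain Python for the settings above, assuming the scheduler is stepped once per epoch; `lr_at_epoch` is a hypothetical helper.

```python
def lr_at_epoch(epoch, base_lr=1e-3, gamma=0.92, step=4):
    """Learning rate in effect during a given (0-based) epoch."""
    return base_lr * gamma ** (epoch // step)


schedule = [lr_at_epoch(e) for e in range(40)]
for e in (0, 4, 8, 39):
    print(f"epoch {e:2d}: lr = {schedule[e]:.2E}")
```

Over 40 epochs the rate is reduced nine times, so it ends at a bit under half of its starting value.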

2. Write the training function

def train(dataloader, model, loss_fn, optimizer):
    size = len(dataloader.dataset)   # number of training samples
    num_batches = len(dataloader)    # number of batches per epoch

    train_loss, train_acc = 0, 0

    for X, y in dataloader:
        X, y = X.to(device), y.to(device)

        # forward pass and loss
        pred = model(X)
        loss = loss_fn(pred, y)

        # backpropagation
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        # accumulate loss and number of correct predictions
        train_loss += loss.item()
        train_acc += (pred.argmax(1) == y).type(torch.float).sum().item()

    train_acc /= size
    train_loss /= num_batches

    return train_acc, train_loss

3. Write the test function

def test(dataloader, model, loss_fn):
    size = len(dataloader.dataset)   # number of test samples
    num_batches = len(dataloader)    # number of batches

    test_loss, test_acc = 0, 0

    # no gradients are needed for evaluation
    with torch.no_grad():
        for imgs, target in dataloader:
            imgs, target = imgs.to(device), target.to(device)

            # forward pass and loss
            target_pred = model(imgs)
            loss = loss_fn(target_pred, target)

            test_loss += loss.item()
            test_acc += (target_pred.argmax(1) == target).type(torch.float).sum().item()

    test_acc /= size
    test_loss /= num_batches

    return test_acc, test_loss
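Both functions average the two metrics differently: accuracy is the number of correct predictions divided by the number of samples, while loss is the sum of per-batch mean losses divided by the number of batches. A small sketch with made-up numbers (the helper `summarize_epoch` and all values are hypothetical):

```python
def summarize_epoch(batch_losses, batch_correct, dataset_size):
    """Return (accuracy over samples, mean of the per-batch losses)."""
    avg_loss = sum(batch_losses) / len(batch_losses)
    accuracy = sum(batch_correct) / dataset_size
    return accuracy, avg_loss


# Hypothetical epoch: 3 batches covering 80 samples (32 + 32 + 16)
acc, loss = summarize_epoch(batch_losses=[2.0, 1.5, 1.0],
                            batch_correct=[16, 20, 12],
                            dataset_size=80)
print(f"acc = {acc:.1%}, loss = {loss:.3f}")
```

Because the last batch is usually smaller, the mean of batch losses can differ slightly from the true per-sample mean; for tracking trends across epochs this is normally fine.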

4. Run the training

import copy

loss_fn = nn.CrossEntropyLoss()
epochs = 40

train_loss, train_acc = [], []
test_loss, test_acc = [], []
best_acc = 0

for epoch in range(epochs):
    model.train()
    epoch_train_acc, epoch_train_loss = train(train_dl, model, loss_fn, optimizer)
    scheduler.step()

    model.eval()
    epoch_test_acc, epoch_test_loss = test(test_dl, model, loss_fn)

    # keep a copy of the model with the best test accuracy so far
    if epoch_test_acc > best_acc:
        best_acc = epoch_test_acc
        best_model = copy.deepcopy(model)

    train_acc.append(epoch_train_acc)
    train_loss.append(epoch_train_loss)
    test_acc.append(epoch_test_acc)
    test_loss.append(epoch_test_loss)

    # current learning rate
    lr = optimizer.state_dict()['param_groups'][0]['lr']

    template = "Epoch:{:2d}, Train_acc:{:.1f}%, Train_loss:{:.3f}, Test_acc:{:.1f}%, Test_loss:{:.3f}, Lr:{:.2E}"
    print(template.format(epoch+1,
                          epoch_train_acc*100, epoch_train_loss,
                          epoch_test_acc*100, epoch_test_loss,
                          lr))

# save the parameters of the best model, not those of the last epoch
PATH = './best_model.pth'
torch.save(best_model.state_dict(), PATH)

print('Done!!!')
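The "keep the best model" logic can be sketched without PyTorch. Here a plain dict stands in for the model weights and is updated in place on every epoch, the way an optimizer updates parameters; `track_best` and `fake_training` are hypothetical stand-ins, and the accuracies are made up.

```python
import copy


def track_best(epoch_results):
    """epoch_results yields (test_acc, weights) once per epoch; return the best snapshot."""
    best_epoch, best_acc, best_weights = None, 0.0, None
    for epoch, (acc, weights) in enumerate(epoch_results, start=1):
        if acc > best_acc:
            best_epoch, best_acc = epoch, acc
            # deep copy: the live weights keep changing in later epochs
            best_weights = copy.deepcopy(weights)
    return best_epoch, best_acc, best_weights


def fake_training(accuracies):
    """Stand-in for a training loop that mutates the same weights object each epoch."""
    weights = {"w": [0.0]}
    for acc in accuracies:
        weights["w"][0] += 1.0
        yield acc, weights


best_epoch, best_acc, best_weights = track_best(fake_training([0.20, 0.55, 0.48]))
print(best_epoch, best_acc, best_weights)
```

Without the deep copy, the "best" snapshot would silently follow the live weights to the final epoch. In PyTorch the same idea is often applied to `model.state_dict()` rather than to the whole model.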

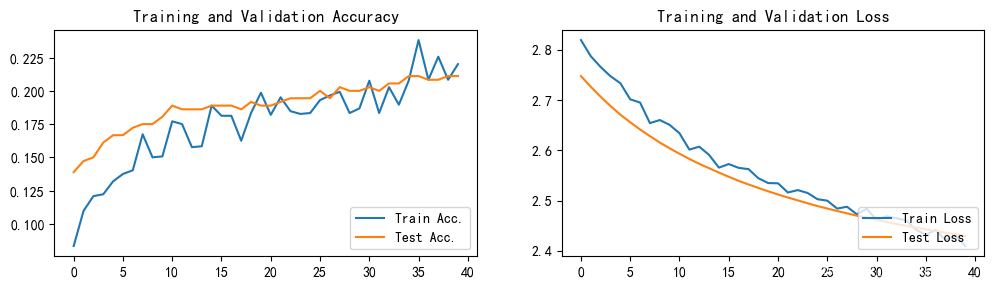

IV. Visualize the results

1. Loss and accuracy plots

import matplotlib.pyplot as plt
import warnings

warnings.filterwarnings("ignore")
plt.rcParams['font.sans-serif'] = ['SimHei']   # font that can render Chinese labels
plt.rcParams['axes.unicode_minus'] = False     # render minus signs correctly
plt.rcParams['figure.dpi'] = 100

epochs_range = range(epochs)

plt.figure(figsize=(12, 3))

plt.subplot(1, 2, 1)
plt.plot(epochs_range, train_acc, label='Train Acc.')
plt.plot(epochs_range, test_acc, label='Test Acc.')
plt.legend(loc='lower right')
plt.title('Training and Validation Accuracy')

plt.subplot(1, 2, 2)
plt.plot(epochs_range, train_loss, label='Train Loss')
plt.plot(epochs_range, test_loss, label='Test Loss')
plt.legend(loc='lower right')
plt.title('Training and Validation Loss')

plt.show()

2. Predict on a specified image

from PIL import Image

classes = list(total_data.class_to_idx)

def predict_one_image(image_path, model, transform, classes):
    test_img = Image.open(image_path).convert('RGB')
    plt.imshow(test_img)  # show the image being predicted

    test_img = transform(test_img)
    img = test_img.to(device).unsqueeze(0)  # add the batch dimension

    model.eval()
    output = model(img)

    _, pred = torch.max(output, 1)
    pred_class = classes[pred]
    print(f'Predict is: {pred_class}')

predict_one_image(image_path='./data/Jennifer Lawrence/009_bcd380a7.jpg',
                  model=model,
                  transform=train_transforms,
                  classes=classes)
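The prediction step picks the class with the largest raw output. If a confidence value is also wanted, the outputs can be passed through a softmax first. The sketch below shows that arithmetic in plain Python; the logits, the helper names, and two of the three class names are hypothetical.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    top = max(logits)
    exps = [math.exp(x - top) for x in logits]  # subtract the max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]


def predict_label(logits, classes):
    """Return the most likely class name and its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return classes[best], probs[best]


label, confidence = predict_label(
    [0.2, 2.5, -1.0],
    ["Angelina Jolie", "Jennifer Lawrence", "Tom Cruise"],
)
print(f"Predict is: {label} ({confidence:.1%})")
```

Softmax does not change which class wins, so the predicted label is the same as with a plain argmax over the raw outputs.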

Reference:

VGG16_BN network architecture
