Notes part 1: https://blog.csdn.net/qq_41059755/article/details/118807910

Notes part 2: https://blog.csdn.net/qq_41059755/article/details/118971872

The video course I followed is by the Bilibili uploader 遇见狂神说.

1. Docker Networking

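Docker networking provides communication between containers, communication between containers and external networks, and access to containers across hosts.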

1.1 Understanding docker0

Remove all containers and images first, then start learning Docker networking:

```shell
docker rm -f $(docker ps -aq)
docker rmi -f $(docker images -aq)
```

```
[root@centos ~]# docker images
REPOSITORY TAG IMAGE ID CREATED SIZE
[root@centos ~]# docker ps -a
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
```

Check the network interfaces with ip addr:

```
[root@centos ~]# ip addr
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eno16777736: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
    link/ether 00:0c:29:fe:74:e3 brd ff:ff:ff:ff:ff:ff
    inet 192.168.78.128/24 brd 192.168.78.255 scope global noprefixroute dynamic eno16777736
       valid_lft 1044sec preferred_lft 1044sec
    inet6 fe80::20c:29ff:fefe:74e3/64 scope link noprefixroute
       valid_lft forever preferred_lft forever
3: virbr0: <BROADCAST,MULTICAST> mtu 1500 qdisc noqueue state DOWN group default qlen 1000
    link/ether 52:54:00:af:03:b9 brd ff:ff:ff:ff:ff:ff
4: virbr0-nic: <BROADCAST,MULTICAST> mtu 1500 qdisc pfifo_fast master virbr0 state DOWN group default qlen 1000
    link/ether 52:54:00:af:03:b9 brd ff:ff:ff:ff:ff:ff
5: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
    link/ether 02:42:74:a3:5b:73 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
       valid_lft forever preferred_lft forever
    inet6 fe80::42:74ff:fea3:5b73/64 scope link
       valid_lft forever preferred_lft forever
```

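The last entry is docker0, with the address 172.17.0.1. docker0 is a virtual bridge that acts like a router for the containers, and 172.17.0.1 is their default gateway. Each time a container starts, Docker assigns it an IP on this network (172.17.0.2, 172.17.0.3, and so on). The containers are then on the same subnet as the host's docker0 interface, so the host and the containers can ping each other.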
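Two addresses are on the same subnet when they agree on the first N bits of a /N prefix. The minimal Java sketch below makes that check concrete for docker0's 172.17.0.0/16. The class name Ipv4Cidr is made up for this note and is not part of any Docker tooling.

```java
// Checks whether an IPv4 address lies inside a CIDR block such as 172.17.0.0/16.
class Ipv4Cidr {
    // Dotted-quad string -> unsigned 32-bit value stored in a long.
    static long toLong(String ip) {
        String[] parts = ip.split("\\.");
        if (parts.length != 4) throw new IllegalArgumentException("not IPv4: " + ip);
        long value = 0;
        for (String part : parts) {
            int octet = Integer.parseInt(part);
            if (octet < 0 || octet > 255) throw new IllegalArgumentException("bad octet: " + part);
            value = (value << 8) | octet;
        }
        return value;
    }

    // Same subnet <=> identical values in the first `prefix` bits.
    static boolean contains(String cidr, String ip) {
        String[] parts = cidr.split("/");
        int prefix = Integer.parseInt(parts[1]);
        long mask = (0xFFFFFFFFL << (32 - prefix)) & 0xFFFFFFFFL;
        return (toLong(parts[0]) & mask) == (toLong(ip) & mask);
    }

    public static void main(String[] args) {
        String docker0 = "172.17.0.0/16"; // from "inet 172.17.0.1/16 ... docker0"
        String[] ips = {"172.17.0.1", "172.17.0.2", "172.17.0.3", "192.168.78.128"};
        for (String ip : ips) {
            System.out.println(ip + " in " + docker0 + ": " + contains(docker0, ip));
        }
    }
}
```

In this sketch the three 172.17.0.x addresses report true. The host's LAN address 192.168.78.128 reports false, which is why the host reaches containers through its docker0 address rather than through eno16777736.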

Start a tomcat container:

```shell
docker run -d -P --name tomcat01 tomcat
```

Check the network addresses inside the container:

```
[root@centos ~]# docker exec -it tomcat01 ip addr
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
72: eth0@if73: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0
       valid_lft forever preferred_lft forever
```

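The container's address is 172.17.0.2, and the host can ping it.

Run ip addr on the host again. docker0 is now UP, and a new interface has appeared: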

```
5: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:74:a3:5b:73 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
       valid_lft forever preferred_lft forever
    inet6 fe80::42:74ff:fea3:5b73/64 scope link
       valid_lft forever preferred_lft forever
73: veth8085884@if72: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default
    link/ether 0a:7e:93:7c:b9:2a brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet6 fe80::87e:93ff:fe7c:b92a/64 scope link
       valid_lft forever preferred_lft forever
```

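Start tomcat02 and run ip addr again. Another interface has appeared (75: veth8ea3216@if74). Each container gets a veth pair. One end is eth0 inside the container. The other end is a vethXXX interface on the host, attached to docker0 (note "master docker0"). The @ifN suffixes point at each other: tomcat01's eth0 is interface 72 with peer 73, and the host's veth8085884 is interface 73 with peer 72.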

```
5: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:74:a3:5b:73 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
       valid_lft forever preferred_lft forever
    inet6 fe80::42:74ff:fea3:5b73/64 scope link
       valid_lft forever preferred_lft forever
73: veth8085884@if72: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default
    link/ether 0a:7e:93:7c:b9:2a brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet6 fe80::87e:93ff:fe7c:b92a/64 scope link
       valid_lft forever preferred_lft forever
75: veth8ea3216@if74: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default
    link/ether 62:0a:a3:2c:b0:59 brd ff:ff:ff:ff:ff:ff link-netnsid 1
    inet6 fe80::600a:a3ff:fe2c:b059/64 scope link
       valid_lft forever preferred_lft forever
```

Check the network addresses inside tomcat02:

```
[root@centos ~]# docker exec -it tomcat02 ip addr
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
74: eth0@if75: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:ac:11:00:03 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 172.17.0.3/16 brd 172.17.255.255 scope global eth0
       valid_lft forever preferred_lft forever
```
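The @ifN suffixes in these listings can be matched mechanically. The Java sketch below parses the header line of an ip addr entry. It treats two interfaces as the two ends of one veth pair when each one's peer index equals the other's own index. VethLink is a hypothetical name. This is only a heuristic over the printed numbers: interface indexes belong to different network namespaces, so it is meant for comparing a container-side line with host-side lines.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Header line of an `ip addr` entry, e.g. "72: eth0@if73: <BROADCAST,...>".
class VethLink {
    private static final Pattern HEADER =
            Pattern.compile("^(\\d+):\\s+([^:@\\s]+)(?:@if(\\d+))?:");

    final int index;     // this interface's index (number before the first colon)
    final String name;   // interface name without the @ifN suffix
    final int peerIndex; // index of the other end of a veth pair, or -1 if absent

    VethLink(int index, String name, int peerIndex) {
        this.index = index;
        this.name = name;
        this.peerIndex = peerIndex;
    }

    static VethLink parse(String line) {
        Matcher m = HEADER.matcher(line.trim());
        if (!m.find()) throw new IllegalArgumentException("not an ip addr header: " + line);
        int peer = m.group(3) == null ? -1 : Integer.parseInt(m.group(3));
        return new VethLink(Integer.parseInt(m.group(1)), m.group(2), peer);
    }

    // Two ends form a pair when each one's peer index is the other's own index.
    static boolean isPair(VethLink a, VethLink b) {
        return a.peerIndex == b.index && b.peerIndex == a.index;
    }

    public static void main(String[] args) {
        VethLink tomcat01Eth0 = parse("72: eth0@if73: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500");
        VethLink tomcat02Eth0 = parse("74: eth0@if75: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500");
        VethLink hostA = parse("73: veth8085884@if72: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500");
        VethLink hostB = parse("75: veth8ea3216@if74: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500");
        for (VethLink c : new VethLink[] {tomcat01Eth0, tomcat02Eth0}) {
            for (VethLink h : new VethLink[] {hostA, hostB}) {
                if (isPair(c, h)) System.out.println(c.index + ":" + c.name + " <-> " + h.index + ":" + h.name);
            }
        }
    }
}
```

Run against the four lines above, it pairs tomcat01's eth0 with veth8085884 and tomcat02's eth0 with veth8ea3216.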

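The host can ping every container, but can the containers ping each other? Yes. They are not connected directly, though: traffic between them is forwarded through docker0.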

Ping tomcat01 (172.17.0.2) from tomcat02 (pinging in the other direction works too):

```
[root@centos ~]# docker exec -it tomcat02 ping 172.17.0.2
PING 172.17.0.2 (172.17.0.2) 56(84) bytes of data.
64 bytes from 172.17.0.2: icmp_seq=1 ttl=64 time=0.120 ms
64 bytes from 172.17.0.2: icmp_seq=2 ttl=64 time=0.100 ms
```

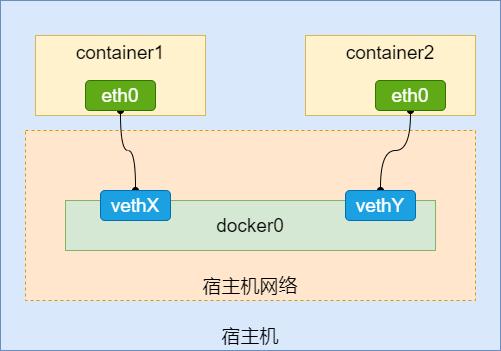

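Diagram of how Docker containers communicate:

All of these network interfaces in Docker are virtual, and forwarding between virtual interfaces is efficient.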

1.2 --link

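Consider this scenario. A container may get a different IP each time it starts. Whenever the IP changes, anything that connects to the container (for example, a Spring Boot application connecting to MySQL) has to be reconfigured, which is very inconvenient. We would rather connect by container name. --link is one way to do this. It is a legacy feature that is no longer recommended, but it is still worth understanding.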

First, try to ping by container name:

```
[root@centos ~]# docker exec -it tomcat02 ping tomcat01
ping: tomcat01: Name or service not known
```

Connect with --link (start tomcat03 linked to tomcat01):

```shell
docker run -d -P --name tomcat03 --link tomcat01 tomcat
```

Ping again:

```
[root@centos ~]# docker exec -it tomcat03 ping tomcat01
PING tomcat01 (172.17.0.2) 56(84) bytes of data.
64 bytes from tomcat01 (172.17.0.2): icmp_seq=1 ttl=64 time=0.211 ms
64 bytes from tomcat01 (172.17.0.2): icmp_seq=2 ttl=64 time=0.048 ms
```

--link can be repeated to link to several containers (start tomcat04 linked to both tomcat01 and tomcat02):

```shell
docker run -d -P --name tomcat04 --link tomcat01 --link tomcat02 tomcat
```

--link only works in one direction: pinging by name in the reverse direction fails.

Let's look at how --link achieves this one-way name resolution.

View tomcat03's hosts file:

```
[root@centos ~]# docker exec -it tomcat03 cat /etc/hosts
127.0.0.1 localhost
::1 localhost ip6-localhost ip6-loopback
fe00::0 ip6-localnet
ff00::0 ip6-mcastprefix
ff02::1 ip6-allnodes
ff02::2 ip6-allrouters
172.17.0.2 tomcat01 df72155812e1
172.17.0.4 ba10cd4d5c60
```

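The mapping for tomcat01 is written directly into this file. That is all --link does: it adds a mapping for the linked container to /etc/hosts.

tomcat01's hosts file, on the other hand, has no mapping for tomcat03, so the reverse ping fails.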
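Because --link only writes /etc/hosts entries, its one-way behaviour can be reproduced with a toy resolver. The Java sketch below (HostsFile is a hypothetical class) maps names to IPs the way a hosts file lists them. tomcat03's content is taken from the output above. tomcat01's file is an assumption: only a localhost line and its own container ID, with nothing about tomcat03.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// Toy /etc/hosts resolver: each line is "IP name [alias ...]", '#' starts a comment.
class HostsFile {
    private final Map<String, String> nameToIp = new HashMap<>();

    HostsFile(String content) {
        for (String raw : content.split("\n")) {
            String line = raw;
            int hash = line.indexOf('#');
            if (hash >= 0) line = line.substring(0, hash);
            line = line.trim();
            if (line.isEmpty()) continue;
            String[] fields = line.split("\\s+");
            // Every field after the IP is a name for that IP; keep the first entry seen for a name.
            for (int i = 1; i < fields.length; i++) {
                nameToIp.putIfAbsent(fields[i], fields[0]);
            }
        }
    }

    Optional<String> resolve(String name) {
        return Optional.ofNullable(nameToIp.get(name));
    }

    public static void main(String[] args) {
        // tomcat03's /etc/hosts as printed above (IPv6 lines omitted).
        HostsFile tomcat03 = new HostsFile(
                "127.0.0.1 localhost\n"
                + "172.17.0.2 tomcat01 df72155812e1\n"
                + "172.17.0.4 ba10cd4d5c60\n");
        // Assumed /etc/hosts of tomcat01: no line mentions tomcat03.
        HostsFile tomcat01 = new HostsFile(
                "127.0.0.1 localhost\n"
                + "172.17.0.2 df72155812e1\n");
        System.out.println("tomcat03 -> tomcat01: " + tomcat03.resolve("tomcat01").orElse("unknown"));
        System.out.println("tomcat01 -> tomcat03: " + tomcat01.resolve("tomcat03").orElse("unknown"));
    }
}
```

The first lookup prints 172.17.0.2. The second prints unknown, which mirrors the "Name or service not known" error in the reverse direction.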

1.3 Creating a custom network

Take ES and Kibana as an example. Previously we ran these two containers like this:

```shell
docker run --name elasticsearch -d -e ES_JAVA_OPTS="-Xms512m -Xmx512m" -e "discovery.type=single-node" -p 9200:9200 -p 9300:9300 elasticsearch:7.6.2
docker run --link elasticsearch:elasticsearch -p 5601:5601 -d kibana:7.6.2
```

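When running Kibana, --link elasticsearch:elasticsearch (container name:alias) lets it reach the ES container by name. The Kibana image connects to http://elasticsearch:9200 by default. This approach is no longer recommended; create a custom network instead.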

Before creating a custom network, list the default networks Docker has already created:

```
[root@centos ~]# docker network ls
NETWORK ID NAME DRIVER SCOPE
520a51cb280e bridge bridge local
8f34eca18acb host host local
0856a7ff8bb1 none null local
```

By default (that is, without --net), containers use the bridge network above (the default is --net bridge).

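The default bridge network has several limitations, so we can create our own bridge networks instead. The default bridge network and a user-defined bridge network differ as follows:

- Ports are not published automatically; they must be published with -p to be reachable from outside. On a user-defined bridge network, a container's ports can be reached directly by the other containers on the same network.
- On the default bridge, containers need --link to reach each other by name. On a user-defined bridge network, containers can reach each other by name automatically.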

Next, create the custom network:

```shell
docker network create --driver bridge --subnet 192.168.0.0/24 --gateway 192.168.0.1 mynet
```

- --driver bridge can be omitted, since bridge is the default driver.
- --subnet 192.168.0.0/24 declares the subnet.
- --gateway 192.168.0.1 declares the gateway.
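A /24 leaves 8 host bits, so 192.168.0.0/24 spans 256 addresses. Minus the network and broadcast addresses, 254 remain, and one of them is the gateway 192.168.0.1. The Java sketch below (SubnetInfo is a hypothetical helper with minimal input validation) computes these values for any IPv4 CIDR block.

```java
// Summarizes an IPv4 subnet given in CIDR notation, e.g. 192.168.0.0/24.
class SubnetInfo {
    final long network;   // lowest address (all host bits 0)
    final long broadcast; // highest address (all host bits 1)

    SubnetInfo(String cidr) {
        String[] parts = cidr.split("/");
        int prefix = Integer.parseInt(parts[1]);
        if (prefix < 0 || prefix > 32) throw new IllegalArgumentException("bad prefix: " + prefix);
        long mask = (0xFFFFFFFFL << (32 - prefix)) & 0xFFFFFFFFL;
        network = toLong(parts[0]) & mask;
        broadcast = network | (~mask & 0xFFFFFFFFL);
    }

    static long toLong(String ip) {
        long value = 0;
        for (String octet : ip.split("\\.")) value = (value << 8) | Integer.parseInt(octet);
        return value;
    }

    static String toDotted(long v) {
        return ((v >> 24) & 255) + "." + ((v >> 16) & 255) + "." + ((v >> 8) & 255) + "." + (v & 255);
    }

    // Addresses strictly between network and broadcast.
    long usableHosts() { return Math.max(0, broadcast - network - 1); }
    String firstHost() { return toDotted(network + 1); }
    String lastHost() { return toDotted(broadcast - 1); }

    boolean isUsableHost(String ip) {
        long v = toLong(ip);
        return v > network && v < broadcast;
    }

    public static void main(String[] args) {
        SubnetInfo mynet = new SubnetInfo("192.168.0.0/24");
        System.out.println("hosts " + mynet.firstHost() + " - " + mynet.lastHost()
                + " (" + mynet.usableHosts() + " addresses), broadcast " + toDotted(mynet.broadcast));
        System.out.println("gateway 192.168.0.1 usable: " + mynet.isUsableHost("192.168.0.1"));
    }
}
```

For mynet this gives hosts 192.168.0.1 to 192.168.0.254 (254 addresses). Since Docker keeps the gateway address for itself, containers get addresses from the remaining 253. The same helper applied to 172.17.0.0/16 reproduces docker0's broadcast address 172.17.255.255.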

After it is created, list the networks again (mynet is now there):

```
[root@centos ~]# docker network ls
NETWORK ID NAME DRIVER SCOPE
520a51cb280e bridge bridge local
8f34eca18acb host host local
3148958b18be mynet bridge local
0856a7ff8bb1 none null local
```

Run ES and Kibana on the custom network:

```shell
docker run --name elasticsearch --net mynet -d -e ES_JAVA_OPTS="-Xms512m -Xmx512m" -e "discovery.type=single-node" -p 9200:9200 -p 9300:9300 elasticsearch:7.6.2
docker run --name kibana --net mynet -d -p 5601:5601 kibana:7.6.2
```

On a custom network, containers can ping each other by name in both directions.

Run tomcat01 and tomcat02:

```shell
docker run -d -P --name tomcat01 --net mynet tomcat
docker run -d -P --name tomcat02 --net mynet tomcat
```

Test pinging in both directions:

```
[root@centos ~]# docker exec -it tomcat01 ping tomcat02
PING tomcat02 (192.168.0.5) 56(84) bytes of data.
64 bytes from tomcat02.mynet (192.168.0.5): icmp_seq=1 ttl=64 time=0.079 ms
64 bytes from tomcat02.mynet (192.168.0.5): icmp_seq=2 ttl=64 time=0.054 ms
64 bytes from tomcat02.mynet (192.168.0.5): icmp_seq=3 ttl=64 time=0.054 ms
^C
--- tomcat02 ping statistics ---
3 packets transmitted, 3 received, 0% packet loss, time 5ms
rtt min/avg/max/mdev = 0.054/0.062/0.079/0.013 ms
[root@centos ~]# docker exec -it tomcat02 ping tomcat01
PING tomcat01 (192.168.0.4) 56(84) bytes of data.
64 bytes from tomcat01.mynet (192.168.0.4): icmp_seq=1 ttl=64 time=0.042 ms
64 bytes from tomcat01.mynet (192.168.0.4): icmp_seq=2 ttl=64 time=0.158 ms
64 bytes from tomcat01.mynet (192.168.0.4): icmp_seq=3 ttl=64 time=0.155 ms
^C
--- tomcat01 ping statistics ---
3 packets transmitted, 3 received, 0% packet loss, time 3ms
rtt min/avg/max/mdev = 0.042/0.118/0.158/0.054 ms
```

1.4 Connecting networks

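Connecting networks means letting containers on different networks communicate. For example, tomcat01 and tomcat02 were created on the mynet network, while tomcat03 and tomcat04 were created on the docker0 network.

First, test whether tomcat03 can ping tomcat01:

```
[root@centos ~]# docker exec -it tomcat03 ping tomcat01
ping: tomcat01: Name or service not known
```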

Recreate tomcat03 with --link tomcat01:

```
[root@centos ~]# docker run -d -P --name tomcat03 --link tomcat01 tomcat
c5a25abca2a88021791b81d50414637b29ab4dd2d11c30e1d15479071cae802f
docker: Error response from daemon: Cannot link to /tomcat01, as it does not belong to the default network.
```

The link fails. --link only works between containers on the default bridge network, and tomcat01 is on mynet.

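Now let's connect the networks. This does not join the two networks to each other directly. Instead, individual containers from one network are also attached to the other network, which connects them indirectly. The command is docker network connect:

```
[root@centos ~]# docker network connect --help
Usage: docker network connect [OPTIONS] NETWORK CONTAINER
Connect a container to a network
```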

Connect tomcat03 and tomcat04 to mynet:

```
[root@centos ~]# docker network connect mynet tomcat03
[root@centos ~]# docker network connect mynet tomcat04
[root@centos ~]# docker exec -it tomcat03 ping tomcat01
PING tomcat01 (192.168.0.4) 56(84) bytes of data.
64 bytes from tomcat01.mynet (192.168.0.4): icmp_seq=1 ttl=64 time=0.112 ms
64 bytes from tomcat01.mynet (192.168.0.4): icmp_seq=2 ttl=64 time=0.046 ms
^C
--- tomcat01 ping statistics ---
2 packets transmitted, 2 received, 0% packet loss, time 2ms
rtt min/avg/max/mdev = 0.046/0.079/0.112/0.033 ms
```

tomcat03 can now ping tomcat01. Next, test the reverse direction:

```
[root@centos ~]# docker exec -it tomcat01 ping tomcat03
PING tomcat03 (192.168.0.7) 56(84) bytes of data.
64 bytes from tomcat03.mynet (192.168.0.7): icmp_seq=1 ttl=64 time=0.157 ms
64 bytes from tomcat03.mynet (192.168.0.7): icmp_seq=2 ttl=64 time=0.053 ms
^C
--- tomcat03 ping statistics ---
2 packets transmitted, 2 received, 0% packet loss, time 2ms
rtt min/avg/max/mdev = 0.053/0.105/0.157/0.052 ms
```

So connecting networks this way works in both directions.

To test whether connecting networks is transitive, we create a custom network mynet2 with a new container tomcat05 on it. We then connect tomcat05 to mynet and test whether it can ping tomcat03, which was created on docker0.

```
[root@centos ~]# docker network create --driver bridge --subnet 192.168.1.0/24 --gateway 192.168.1.1 mynet2
33c94a401aced8219627555ff709c8caebe0b78637fe665e9364243a435b0d21
[root@centos ~]# docker run -d -P --name tomcat05 --net mynet2 tomcat
8646b44bab57861e63653b569d682e43acb6eb95aba9fdaeebb1a8a345c4e468
[root@centos ~]# docker network connect mynet tomcat05
[root@centos ~]# docker exec -it tomcat05 ping tomcat03
PING tomcat03 (192.168.0.7) 56(84) bytes of data.
64 bytes from tomcat03.mynet (192.168.0.7): icmp_seq=1 ttl=64 time=0.073 ms
64 bytes from tomcat03.mynet (192.168.0.7): icmp_seq=2 ttl=64 time=0.049 ms
^C
--- tomcat03 ping statistics ---
2 packets transmitted, 2 received, 0% packet loss, time 3ms
rtt min/avg/max/mdev = 0.049/0.061/0.073/0.012 ms
```

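The ping succeeds, but this does not actually show transitivity. tomcat03 had already been connected to mynet, and the reply comes from tomcat03.mynet (192.168.0.7). Both containers simply share mynet. The networks themselves are not chained together, so a container that is only on docker0 would still be unreachable from tomcat05.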
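A simplified way to reason about this is to model each network as a set of containers. Two containers can reach each other directly only if they share at least one network. The Java sketch below (NetworkMembership is a hypothetical class, and tomcat06 is a hypothetical container on docker0 only) encodes the connections made in this section.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

// Simplified model: containers reach each other directly only if they share a network.
class NetworkMembership {
    private final Map<String, Set<String>> networksOf = new HashMap<>();

    // Equivalent of `docker run --net NET` or `docker network connect NET CONTAINER`.
    void connect(String network, String container) {
        networksOf.computeIfAbsent(container, c -> new TreeSet<>()).add(network);
    }

    Set<String> sharedNetworks(String a, String b) {
        Set<String> shared = new TreeSet<>(networksOf.getOrDefault(a, Set.of()));
        shared.retainAll(networksOf.getOrDefault(b, Set.of()));
        return shared;
    }

    boolean canReach(String a, String b) {
        return !sharedNetworks(a, b).isEmpty();
    }

    public static void main(String[] args) {
        NetworkMembership net = new NetworkMembership();
        net.connect("mynet", "tomcat01");
        net.connect("mynet", "tomcat02");
        net.connect("bridge", "tomcat03");
        net.connect("mynet", "tomcat03");   // docker network connect mynet tomcat03
        net.connect("mynet2", "tomcat05");
        net.connect("mynet", "tomcat05");   // docker network connect mynet tomcat05
        net.connect("bridge", "tomcat06");  // hypothetical container on docker0 only
        System.out.println(net.sharedNetworks("tomcat05", "tomcat03")); // [mynet]
        System.out.println(net.canReach("tomcat05", "tomcat06"));       // false
    }
}
```

The model deliberately ignores published ports, which let traffic reach a container through the host from any network.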

1.5 Hands-on: deploying a Redis cluster (LOL, I have never learned Redis, so I couldn't follow the explanation and just copied the steps)

Create a network dedicated to Redis:

```shell
docker network create --subnet 172.38.0.0/24 redis-net
```

Use a script, run.sh, to create six Redis configuration files and start six Redis containers:

```shell
# Create the six Redis configuration files
for port in $(seq 1 6); \
do \
mkdir -p /mydata/redis/node-${port}/conf
touch /mydata/redis/node-${port}/conf/redis.conf
cat << EOF >> /mydata/redis/node-${port}/conf/redis.conf
port 6379
bind 0.0.0.0
cluster-enabled yes
cluster-config-file nodes.conf
cluster-node-timeout 5000
cluster-announce-ip 172.38.0.1${port}
cluster-announce-port 6379
cluster-announce-bus-port 16379
appendonly yes
EOF
done

# Start the six Redis containers
for port in $(seq 1 6); \
do \
docker run -p 637${port}:6379 -p 1667${port}:16379 --name redis-${port} \
-v /mydata/redis/node-${port}/data:/data \
-v /mydata/redis/node-${port}/conf/redis.conf:/etc/redis/redis.conf \
-d --net redis-net --ip 172.38.0.1${port} redis:5.0.9-alpine3.11 redis-server /etc/redis/redis.conf
done
```
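The script builds each node's IP and host ports by gluing the loop number onto fixed prefixes. The Java sketch below mirrors that scheme. RedisNodePlan is a hypothetical class. Because it copies the string concatenation, it is only meaningful for node numbers 1 to 9. Inside every container, Redis listens on 6379, and the cluster bus uses 16379 (client port + 10000).

```java
// Mirrors the per-node values that run.sh builds from the loop variable ${port}.
class RedisNodePlan {
    // The script appends the node number to fixed prefixes, so only 1..9 make sense.
    private static void check(int node) {
        if (node < 1 || node > 9) throw new IllegalArgumentException("node must be 1..9: " + node);
    }

    static String ip(int node) {              // --ip 172.38.0.1${port}
        check(node);
        return "172.38.0.1" + node;
    }

    static int hostClientPort(int node) {     // -p 637${port}:6379
        check(node);
        return Integer.parseInt("637" + node);
    }

    static int hostBusPort(int node) {        // -p 1667${port}:16379
        check(node);
        return Integer.parseInt("1667" + node);
    }

    // Same directives the heredoc writes into node-N/conf/redis.conf.
    static String redisConf(int node) {
        return String.join("\n",
                "port 6379",
                "bind 0.0.0.0",
                "cluster-enabled yes",
                "cluster-config-file nodes.conf",
                "cluster-node-timeout 5000",
                "cluster-announce-ip " + ip(node),
                "cluster-announce-port 6379",
                "cluster-announce-bus-port 16379",
                "appendonly yes") + "\n";
    }

    public static void main(String[] args) {
        for (int node = 1; node <= 6; node++) {
            System.out.printf("redis-%d  ip=%s  host ports %d->6379, %d->16379%n",
                    node, ip(node), hostClientPort(node), hostBusPort(node));
        }
    }
}
```

The printed mapping should match the PORTS column of the docker ps output after running run.sh, for example 6371->6379 and 16671->16379 for redis-1.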

Run run.sh:

```
[root@centos ~]# sh run.sh
[root@centos ~]# docker ps
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
a518313a127b redis:5.0.9-alpine3.11 "docker-entrypoint.s…" 10 seconds ago Up 9 seconds 0.0.0.0:6376->6379/tcp, :::6376->6379/tcp, 0.0.0.0:16676->16379/tcp, :::16676->16379/tcp redis-6
22130a66b33d redis:5.0.9-alpine3.11 "docker-entrypoint.s…" 12 seconds ago Up 11 seconds 0.0.0.0:6375->6379/tcp, :::6375->6379/tcp, 0.0.0.0:16675->16379/tcp, :::16675->16379/tcp redis-5
09fff3ed38d8 redis:5.0.9-alpine3.11 "docker-entrypoint.s…" 14 seconds ago Up 13 seconds 0.0.0.0:6374->6379/tcp, :::6374->6379/tcp, 0.0.0.0:16674->16379/tcp, :::16674->16379/tcp redis-4
33d10eb91b19 redis:5.0.9-alpine3.11 "docker-entrypoint.s…" 16 seconds ago Up 15 seconds 0.0.0.0:6373->6379/tcp, :::6373->6379/tcp, 0.0.0.0:16673->16379/tcp, :::16673->16379/tcp redis-3
d7a4cadcc84e redis:5.0.9-alpine3.11 "docker-entrypoint.s…" 49 seconds ago Up 48 seconds 0.0.0.0:6372->6379/tcp, :::6372->6379/tcp, 0.0.0.0:16672->16379/tcp, :::16672->16379/tcp redis-2
d40a7b6a473d redis:5.0.9-alpine3.11 "docker-entrypoint.s…" 2 minutes ago Up 2 minutes 0.0.0.0:6371->6379/tcp, :::6371->6379/tcp, 0.0.0.0:16671->16379/tcp, :::16671->16379/tcp redis-1
```

Create the cluster:

```shell
docker exec -it redis-1 /bin/sh   # the redis alpine image has no bash
# then, inside the container:
redis-cli --cluster create 172.38.0.11:6379 172.38.0.12:6379 172.38.0.13:6379 172.38.0.14:6379 172.38.0.15:6379 172.38.0.16:6379 --cluster-replicas 1
```

```
[root@centos ~]# docker exec -it redis-1 /bin/sh
/data # redis-cli --cluster create 172.38.0.11:6379 172.38.0.12:6379 172.38.0.13:6379 172.38.0.14:6379 172.38.0.15:6379 172.38.0.16:6379 --cluster-replicas 1
>>> Performing hash slots allocation on 6 nodes...
Master[0] -> Slots 0 - 5460
Master[1] -> Slots 5461 - 10922
Master[2] -> Slots 10923 - 16383
Adding replica 172.38.0.15:6379 to 172.38.0.11:6379
Adding replica 172.38.0.16:6379 to 172.38.0.12:6379
Adding replica 172.38.0.14:6379 to 172.38.0.13:6379
M: 5456bb041e6a156e4ed30e2df200934a600f1ece 172.38.0.11:6379
   slots:[0-5460] (5461 slots) master
M: 9cdcc95449837fb8565312c7cfabe04869c61678 172.38.0.12:6379
   slots:[5461-10922] (5462 slots) master
M: 5a750d7ba3e0d072786918d02a1e637887cb7356 172.38.0.13:6379
   slots:[10923-16383] (5461 slots) master
S: d3da0971a9767ecb9d0652f87a87f447ed119265 172.38.0.14:6379
   replicates 5a750d7ba3e0d072786918d02a1e637887cb7356
S: 6b12e8fdf6fb1794231ae424cfcf751c020398eb 172.38.0.15:6379
   replicates 5456bb041e6a156e4ed30e2df200934a600f1ece
S: ed871b3006d8f148e879f8f893f218686da8f9ff 172.38.0.16:6379
   replicates 9cdcc95449837fb8565312c7cfabe04869c61678
Can I set the above configuration? (type 'yes' to accept): yes
>>> Nodes configuration updated
>>> Assign a different config epoch to each node
>>> Sending CLUSTER MEET messages to join the cluster
Waiting for the cluster to join
...
>>> Performing Cluster Check (using node 172.38.0.11:6379)
M: 5456bb041e6a156e4ed30e2df200934a600f1ece 172.38.0.11:6379
   slots:[0-5460] (5461 slots) master
   1 additional replica(s)
M: 9cdcc95449837fb8565312c7cfabe04869c61678 172.38.0.12:6379
   slots:[5461-10922] (5462 slots) master
   1 additional replica(s)
M: 5a750d7ba3e0d072786918d02a1e637887cb7356 172.38.0.13:6379
   slots:[10923-16383] (5461 slots) master
   1 additional replica(s)
S: 6b12e8fdf6fb1794231ae424cfcf751c020398eb 172.38.0.15:6379
   slots: (0 slots) slave
   replicates 5456bb041e6a156e4ed30e2df200934a600f1ece
S: d3da0971a9767ecb9d0652f87a87f447ed119265 172.38.0.14:6379
   slots: (0 slots) slave
   replicates 5a750d7ba3e0d072786918d02a1e637887cb7356
S: ed871b3006d8f148e879f8f893f218686da8f9ff 172.38.0.16:6379
   slots: (0 slots) slave
   replicates 9cdcc95449837fb8565312c7cfabe04869c61678
[OK] All nodes agree about slots configuration.
>>> Check for open slots...
>>> Check slots coverage...
[OK] All 16384 slots covered.
/data #
```
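The Master[0..2] lines above split the cluster's 16384 hash slots three ways. The Java sketch below reproduces that split. Its rounding rule is my assumption about how redis-cli divides the slots, but it yields exactly 0-5460, 5461-10922 and 10923-16383 for three masters. The sketch also maps a key to a slot as described in the Redis Cluster specification: CRC16 (XMODEM variant) of the key, or of its non-empty {hash tag}, modulo 16384. RedisSlots is a hypothetical class.

```java
import java.nio.charset.StandardCharsets;

// Hash-slot arithmetic behind the cluster output above.
class RedisSlots {
    static final int SLOTS = 16384;

    // Split 0..16383 into contiguous ranges, one per master (assumed rounding rule).
    static long[][] allocate(int masters) {
        long[][] ranges = new long[masters][2];
        double perNode = SLOTS / (double) masters;
        double cursor = 0.0;
        long first = 0;
        for (int i = 0; i < masters; i++) {
            long last = Math.round(cursor + perNode - 1);
            if (last > SLOTS - 1 || i == masters - 1) last = SLOTS - 1; // last master takes the remainder
            if (last < first) last = first;
            ranges[i][0] = first;
            ranges[i][1] = last;
            first = last + 1;
            cursor += perNode;
        }
        return ranges;
    }

    // CRC16-CCITT (XMODEM): polynomial 0x1021, initial value 0, most significant bit first.
    static int crc16(byte[] data) {
        int crc = 0;
        for (byte b : data) {
            crc ^= (b & 0xFF) << 8;
            for (int bit = 0; bit < 8; bit++) {
                crc = (crc & 0x8000) != 0 ? (crc << 1) ^ 0x1021 : crc << 1;
                crc &= 0xFFFF;
            }
        }
        return crc;
    }

    // Slot of a key; if the key has a non-empty {hash tag}, only the tag is hashed.
    static int keySlot(String key) {
        int open = key.indexOf('{');
        if (open >= 0) {
            int close = key.indexOf('}', open + 1);
            if (close > open + 1) key = key.substring(open + 1, close);
        }
        return crc16(key.getBytes(StandardCharsets.UTF_8)) & (SLOTS - 1);
    }

    public static void main(String[] args) {
        long[][] ranges = allocate(3);
        for (int i = 0; i < ranges.length; i++) {
            System.out.printf("Master[%d] -> Slots %d - %d%n", i, ranges[i][0], ranges[i][1]);
        }
        System.out.println("slot of \"views\": " + keySlot("views"));
    }
}
```

Since each master owns one contiguous range, the slot number alone tells you which node stores a key. Keys that share a hash tag, such as {user1000}.following and {user1000}.followers, always land in the same slot.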

1.6 Packaging a Spring Boot project as a Docker image

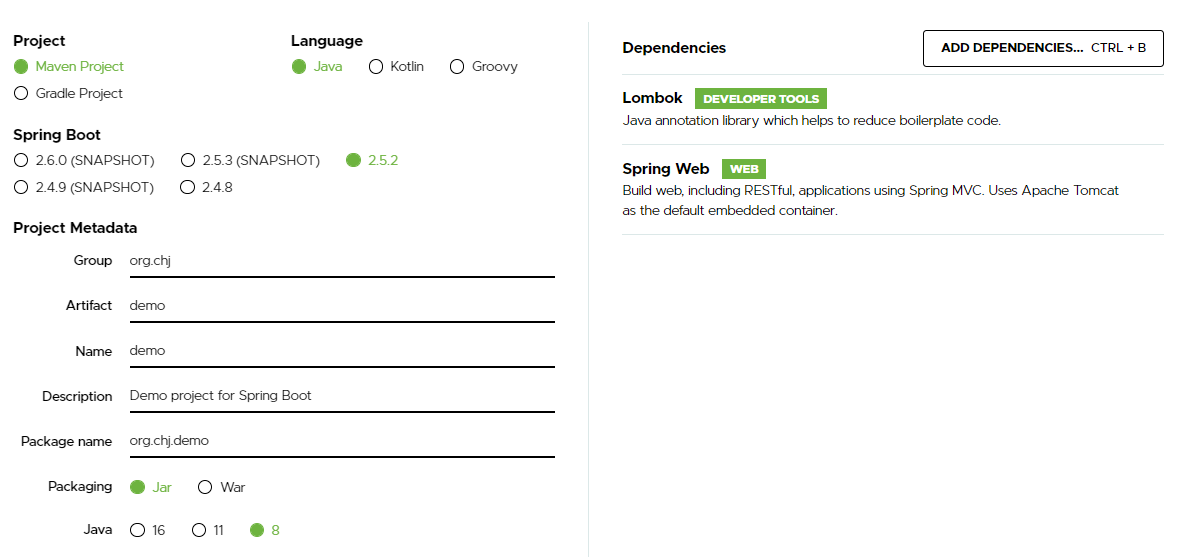

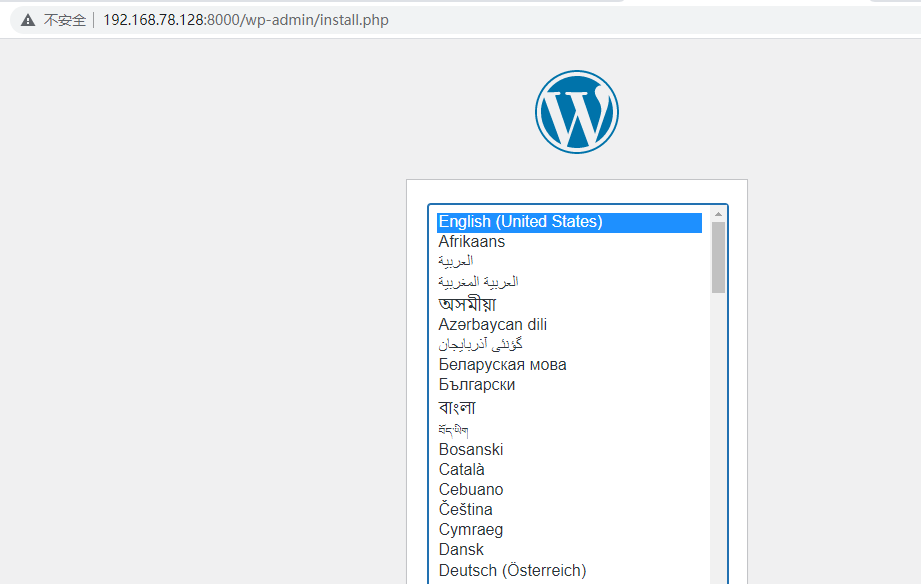

Create a Spring Boot project with https://start.spring.io/

Then write an endpoint:

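```java
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
public class HelloController {

    // GET /hello returns a fixed greeting
    @RequestMapping("/hello")
    public String hello() {
        return "hello,world";
    }
}
```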

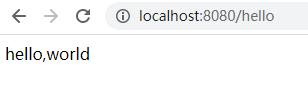

Start it locally and visit it:

Package it with mvn clean package.

Create a Dockerfile:

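```dockerfile
# Base image with Java 8
FROM java:8
# Copy the packaged Spring Boot jar into the image
COPY *.jar /app.jar
# Default arguments appended to ENTRYPOINT (no spaces around "=")
CMD ["--server.port=8080"]
EXPOSE 8080
ENTRYPOINT ["java","-jar","/app.jar"]
```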
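With the exec (JSON array) forms used in this Dockerfile, Docker appends CMD to ENTRYPOINT. Any arguments given after the image name in docker run replace CMD. The Java sketch below models just that rule. EffectiveCommand is a hypothetical class, and it does not cover shell-form instructions or the --entrypoint flag.

```java
import java.util.ArrayList;
import java.util.List;

// How exec-form ENTRYPOINT and CMD combine into the container's command line.
class EffectiveCommand {
    static List<String> resolve(List<String> entrypoint, List<String> cmd, List<String> runArgs) {
        // Arguments after the image name in `docker run` replace CMD completely.
        List<String> args = runArgs.isEmpty() ? cmd : runArgs;
        // CMD (or its replacement) is appended to ENTRYPOINT as extra arguments.
        List<String> command = new ArrayList<>(entrypoint);
        command.addAll(args);
        return command;
    }

    public static void main(String[] args) {
        List<String> entrypoint = List.of("java", "-jar", "/app.jar");
        List<String> cmd = List.of("--server.port=8080");
        // docker run -d -p 9090:8080 hello-demo
        System.out.println(resolve(entrypoint, cmd, List.of()));
        // docker run -d hello-demo --server.port=9000 (hypothetical override)
        System.out.println(resolve(entrypoint, cmd, List.of("--server.port=9000")));
    }
}
```

The first call yields java -jar /app.jar --server.port=8080. Each CMD array element becomes exactly one argument, so a value written as "--server.port = 8080" would reach Spring Boot as a single argument with embedded spaces.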

Upload the jar and the Dockerfile to the Linux host:

```
[root@centos springboot]# ls
demo-0.0.1-SNAPSHOT.jar Dockerfile
```

Build it directly:

```
[root@centos springboot]# docker build -t hello-demo .
```

Run it, mapping host port 9090 to container port 8080:

```
[root@centos springboot]# docker run -d -p 9090:8080 hello-demo
```

Visit it:

2. Docker Compose

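- Earlier, we wrote a Dockerfile and used commands such as docker build and docker run to manage containers. A microservice system, however, usually consists of several microservices, each typically deployed as multiple instances. Starting and stopping each one by hand would be very inefficient and a lot of maintenance work.
- Docker Compose makes managing containers easy and efficient. It is a tool for defining and running multi-container Docker applications.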

To install Docker Compose, the first command below downloads the Compose binary that matches your OS and architecture. The second makes it executable.

```shell
curl -L https://github.com/docker/compose/releases/download/1.21.2/docker-compose-$(uname -s)-$(uname -m) -o /usr/local/bin/docker-compose
chmod +x /usr/local/bin/docker-compose
```

Check that the installation succeeded:

```
[root@centos springboot]# docker-compose -v
docker-compose version 1.21.2, build a133471
```

How does Docker Compose orchestrate many containers at once? Through a configuration file, docker-compose.yml. After that, a single docker-compose up does the rest.

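- Docker Compose organizes the containers it manages into three layers: project, service, and container.
- The files in the directory where Compose runs (docker-compose.yml) form a project, which is named after the directory by default. A project contains multiple services. Each service defines the image, parameters, and dependencies its containers run with, and a service can have multiple container instances.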

2.1 First try

Follow the official documentation step by step: https://docs.docker.com/compose/gettingstarted/

```
[root@centos ~]# mkdir composetest
[root@centos ~]# cd composetest/
[root@centos composetest]# vim app.py
[root@centos composetest]# vim requirements.txt
[root@centos composetest]# vim Dockerfile
[root@centos composetest]# vim docker-compose.yml
[root@centos composetest]# docker-compose up
```

If the last command reports an error, edit docker-compose.yml as the error message suggests.

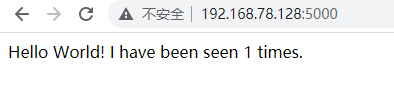

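Visit it:

The number increases by 1 every time you refresh the browser.

Even after a restart, the number does not go back to 1, because the count is stored in Redis.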

List the containers; there are two:

```
[root@centos ~]# docker ps
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
f288cb48a6a9 composetest_web "flask run" 28 minutes ago Up 19 seconds 0.0.0.0:5000->5000/tcp, :::5000->5000/tcp composetest_web_1
8e854c48d9e1 redis:alpine "docker-entrypoint.s…" 28 minutes ago Up 18 seconds 6379/tcp composetest_redis_1
```

List the images; there are three new ones (composetest_web, redis, python):

```
[root@centos composetest]# docker image ls
REPOSITORY TAG IMAGE ID CREATED SIZE
composetest_web latest 2f15527ffe48 10 minutes ago 184MB
hello-demo latest 91e6ced01669 About an hour ago 660MB
tomcat latest 921ef208ab56 13 hours ago 668MB
redis alpine 500703a12fa4 2 weeks ago 32.3MB
python 3.7-alpine 93ac4b41defe 3 weeks ago 41.9MB
redis 5.0.9-alpine3.11 3661c84ee9d0 15 months ago 29.8MB
kibana 7.6.2 f70986bc5191 16 months ago 1.01GB
elasticsearch 7.6.2 f29a1ee41030 16 months ago 791MB
java 8 d23bdf5b1b1b 4 years ago 643MB
```

There is also one new network, composetest_default, created automatically by docker-compose up:

```
[root@centos composetest]# docker network ls
NETWORK ID NAME DRIVER SCOPE
520a51cb280e bridge bridge local
06cfc3e8f961 composetest_default bridge local
8f34eca18acb host host local
0856a7ff8bb1 none null local
fd57af6cb86a redis-net bridge local
```
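The names in these listings all derive from the project name, which defaults to the directory name (composetest). The Java sketch below captures the Compose v1 (docker-compose 1.x) naming pattern visible above. ComposeNames is a hypothetical class. It only lowercases the directory name, while Compose applies further normalization. Newer Compose v2 releases use hyphens instead of underscores in container names.

```java
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Locale;

// Names that Compose v1 derives from the project name, as seen in the output above.
class ComposeNames {
    // Project name defaults to the working directory's name (simplified: lowercase only).
    static String projectName(Path workingDir) {
        return workingDir.getFileName().toString().toLowerCase(Locale.ROOT);
    }

    static String containerName(String project, String service, int index) {
        return project + "_" + service + "_" + index;   // e.g. composetest_web_1
    }

    static String builtImage(String project, String service) {
        return project + "_" + service;                  // image for a service with build:
    }

    static String defaultNetwork(String project) {
        return project + "_default";                     // network created by docker-compose up
    }

    public static void main(String[] args) {
        String project = projectName(Paths.get("/root/composetest"));
        System.out.println(containerName(project, "web", 1));
        System.out.println(containerName(project, "redis", 1));
        System.out.println(builtImage(project, "web"));
        System.out.println(defaultNetwork(project));
    }
}
```

The redis service uses the prebuilt redis:alpine image, so only web gets a composetest_-prefixed image.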

2.2 Rules for writing the Compose file

A detailed explanation of writing docker-compose.yml: https://blog.csdn.net/qq_36148847/article/details/79427878

Official documentation: https://docs.docker.com/compose/compose-file/compose-file-v3/

2.3 Deploying a WordPress blog in one step with Compose

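Follow the documentation step by step: https://docs.docker.com/samples/wordpress/

Visit it:

WordPress is an open-source project, and deploying it takes only about a minute. That is the benefit of Compose.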

2.4 Hands-on: writing and deploying your own microservice

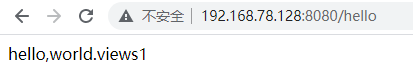

Microservice feature: a counter

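```java
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.data.redis.core.StringRedisTemplate;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
public class HelloController {

    @Autowired
    StringRedisTemplate redisTemplate;

    @RequestMapping("/hello")
    public String hello() {
        // Atomically increment the "views" key in Redis and return the new value
        Long views = redisTemplate.opsForValue().increment("views", 1);
        return "hello,world.views" + views;
    }
}
```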

Add the dependency to the pom:

```xml
<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-data-redis</artifactId>
    <version>2.5.2</version>
</dependency>
```

application.properties:

```properties
server.port = 8080
spring.redis.host=localhost
```

Start it locally to check (make sure Redis is running locally):

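Then change application.properties so that the Redis host is redis, the service name defined in docker-compose.yml. Compose makes service names resolvable on the project's network.

```properties
server.port = 8080
spring.redis.host=redis
```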

Write the Dockerfile:

```dockerfile
FROM java:8
COPY *.jar /app.jar
CMD ["--server.port=8080"]
EXPOSE 8080
ENTRYPOINT ["java","-jar","/app.jar"]
```

Write docker-compose.yml:

```yaml
version: '3.3'
services:
  demoapp:
    build: .
    image: xiaojie1423/demoapp
    depends_on:
      - redis
    ports:
      - "8080:8080"
  redis:
    image: "library/redis:alpine"
```
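depends_on tells Compose to start redis before demoapp. Conceptually this is a topological sort of the services. The Java sketch below orders services that way and rejects cycles. StartOrder is a hypothetical class, not Compose's own code. With this short depends_on syntax, Compose only waits for the redis container to start, not for Redis to be ready to accept connections.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

// Orders services so that each one comes after everything in its depends_on list.
class StartOrder {
    static List<String> order(Map<String, List<String>> dependsOn) {
        List<String> result = new ArrayList<>();
        Set<String> done = new HashSet<>();
        Set<String> inProgress = new HashSet<>();
        // Visit services in name order so the output is deterministic.
        for (String service : new TreeSet<>(dependsOn.keySet())) {
            visit(service, dependsOn, done, inProgress, result);
        }
        return result;
    }

    // Depth-first search: emit a service only after all of its dependencies.
    private static void visit(String service, Map<String, List<String>> dependsOn,
                              Set<String> done, Set<String> inProgress, List<String> out) {
        if (done.contains(service)) return;
        if (!inProgress.add(service)) {
            throw new IllegalStateException("circular depends_on involving " + service);
        }
        for (String dep : dependsOn.getOrDefault(service, List.of())) {
            visit(dep, dependsOn, done, inProgress, out);
        }
        inProgress.remove(service);
        done.add(service);
        out.add(service);
    }

    public static void main(String[] args) {
        // depends_on from the docker-compose.yml above
        Map<String, List<String>> dependsOn = Map.of(
                "demoapp", List.of("redis"),
                "redis", List.of());
        System.out.println(order(dependsOn)); // [redis, demoapp]
    }
}
```

Because demoapp may start before Redis is accepting connections, the first requests to /hello can fail briefly.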

Package with mvn clean package, then put the required files on the Linux host:

```
[root@centos demo]# ls
demo-0.0.1-SNAPSHOT.jar docker-compose.yml Dockerfile
[root@centos demo]# docker-compose up
```

Check it:

That's the end of my Docker study notes.
