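Server OS: CentOS 7.2

Prerequisites: JDK 1.8, Maven, MySQL and the other basic components are already installed.

I. Flink 1.16.2

Use the standalone (single-node) setup directly.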

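// Extract the archive

tar -zxvf flink-1.16.2-bin-scala_2.12.tgz

// Go to the bin directory and run the start script (it starts the JobManager and TaskManager in the background by itself)

./start-cluster.sh

jps

// If the following processes are listed, Flink has started successfully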

StandaloneSessionClusterEntrypoint

TaskManagerRunner

II. StreamPark (apache-streampark_2.12-2.1.1-incubating-bin)

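There are only two main installation steps:

1. Download the release package

2. Initialize the tables that StreamPark uses

Note: this setup does not use Hadoop. If you initialize the tables in MySQL, you have to download the MySQL JDBC driver jar yourself and put it in StreamPark's lib directory; see the official StreamPark deployment guide for details.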

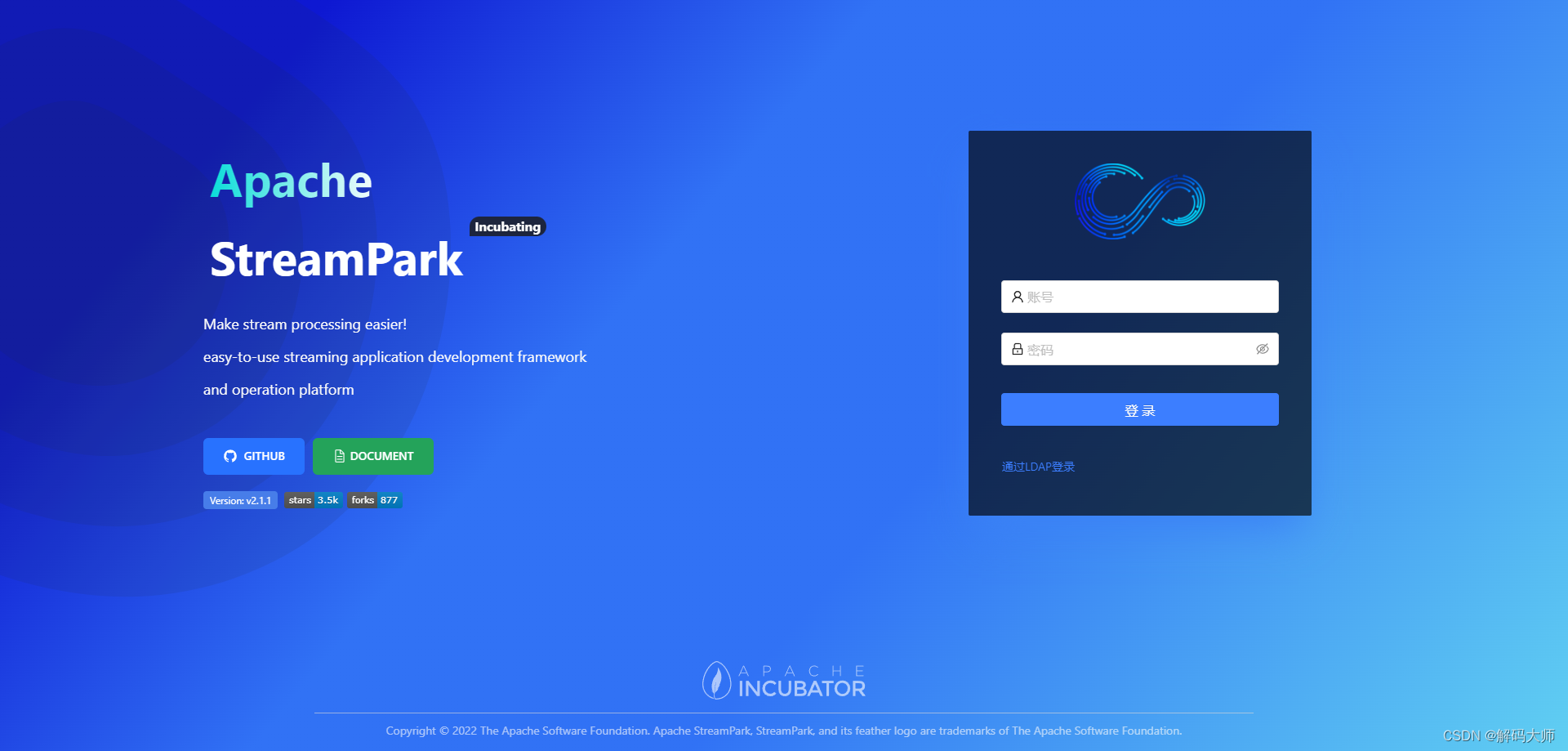

Once deployed with the default port, open ip:10000 in a browser to reach the StreamPark login page. The account is admin and the password is streampark.

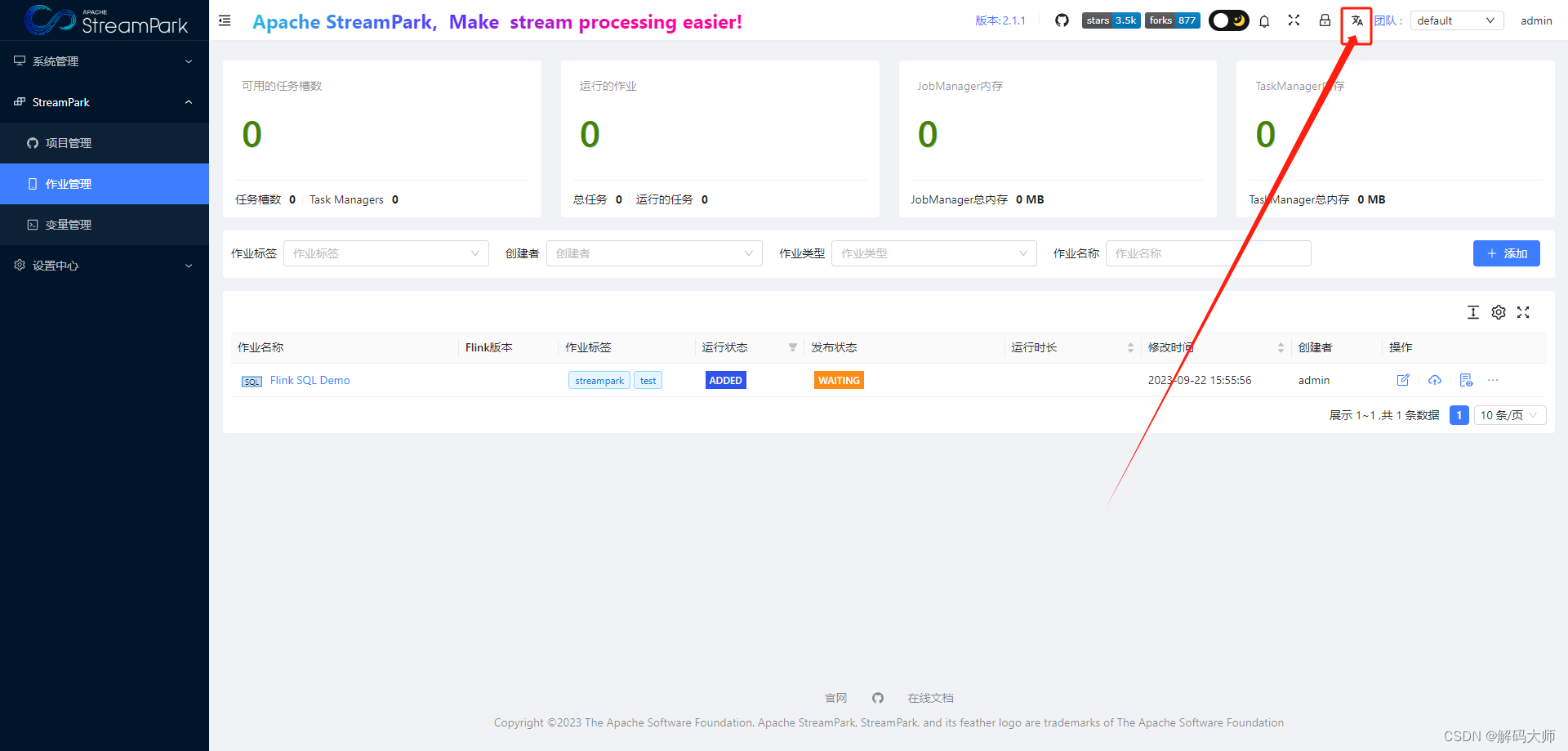

The UI language can be switched here.

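III. Configure Flink in StreamPark

The official StreamPark quick start describes the exact steps. Essentially you register the Flink version and the Flink cluster, so this is not repeated here.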

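IV. Install and deploy Doris (apache-doris-2.0.1.1-bin-x64)

One machine running one FE and one BE.

1. Extract the tarball and create the doris-meta and storage directories, which are used later in the FE and BE configuration respectively.

tar -zxvf apache-doris-2.0.1.1-bin-x64.tar.gz && mkdir doris-meta && mkdir storage

2. Edit fe.conf under fe/conf and be.conf under be/conf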

Edit fe.conf

########### Item 1: meta_dir ###########

meta_dir = path of the doris-meta directory created in step 1

########### Item 2: set priority_networks to this host's IP with a /24 mask ###########

priority_networks = 172.16.10.240/24;

JAVA_HOME=path of the local JDK
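priority_networks tells a Doris node which local address to use on a host with several network interfaces: the value is a CIDR range (several ranges can be separated by ';'), and at most one of the host's IPs should fall inside it. 172.16.10.240/24 therefore covers any 172.16.10.x address, not just .240. The sketch below reproduces that IPv4 matching so you can see which local address a given value would select. The class name is hypothetical and this is an illustration, not Doris's own matching code.

```java
import java.net.Inet4Address;
import java.net.InetAddress;
import java.net.NetworkInterface;
import java.net.SocketException;
import java.util.Collections;
import java.util.Enumeration;

/** Illustrative IPv4 CIDR matching for a priority_networks value (not Doris's own code). */
public class PriorityNetworksCheck {

    // Converts a dotted IPv4 address such as "172.16.10.240" to an unsigned 32-bit value.
    static long toLong(String ipv4) {
        String[] parts = ipv4.trim().split("\\.");
        if (parts.length != 4) {
            throw new IllegalArgumentException("Not an IPv4 address: " + ipv4);
        }
        long value = 0;
        for (String part : parts) {
            int octet = Integer.parseInt(part);
            if (octet < 0 || octet > 255) {
                throw new IllegalArgumentException("Bad octet in " + ipv4);
            }
            value = (value << 8) | octet;
        }
        return value;
    }

    // True if ip lies inside cidr, e.g. inCidr("172.16.10.7", "172.16.10.240/24").
    public static boolean inCidr(String ip, String cidr) {
        String[] parts = cidr.trim().split("/");
        int prefix = parts.length == 2 ? Integer.parseInt(parts[1].trim()) : 32;
        if (prefix < 0 || prefix > 32) {
            throw new IllegalArgumentException("Bad prefix length in " + cidr);
        }
        // A /24 keeps the top 24 bits (0xFFFFFF00); a /0 yields mask 0 and matches everything.
        long mask = (0xFFFFFFFFL << (32 - prefix)) & 0xFFFFFFFFL;
        return (toLong(ip) & mask) == (toLong(parts[0]) & mask);
    }

    // Several CIDRs may be separated by ';'; empty entries (e.g. a trailing ';') are ignored.
    public static boolean matchesAny(String ip, String priorityNetworks) {
        for (String cidr : priorityNetworks.split(";")) {
            if (!cidr.trim().isEmpty() && inCidr(ip, cidr)) {
                return true;
            }
        }
        return false;
    }

    // Prints every local IPv4 address that falls inside the given value.
    public static void main(String[] args) throws SocketException {
        String priorityNetworks = args.length > 0 ? args[0] : "172.16.10.240/24";
        Enumeration<NetworkInterface> nics = NetworkInterface.getNetworkInterfaces();
        if (nics == null) {
            return;
        }
        for (NetworkInterface nic : Collections.list(nics)) {
            for (InetAddress addr : Collections.list(nic.getInetAddresses())) {
                if (addr instanceof Inet4Address && matchesAny(addr.getHostAddress(), priorityNetworks)) {
                    System.out.println(nic.getName() + " -> " + addr.getHostAddress());
                }
            }
        }
    }
}
```

Run it on the Doris host with the configured value as the argument, e.g. java PriorityNetworksCheck "172.16.10.240/24;". Ideally it prints exactly one address.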

Edit be.conf

########### Item 1: storage_root_path ###########

storage_root_path = path of the storage directory created in step 1

########### Item 2: set priority_networks to this host's IP with a /24 mask ###########

priority_networks = 172.16.10.240/24

# Additional BE settings

# Logging

sys_log_dir = ${DORIS_HOME}/log

sys_log_roll_mode = SIZE-MB-1024

sys_log_roll_num = 1

# Trash cleanup interval: 24 h (86400 s)

trash_file_expire_time_sec = 86400

3. Start the FE from the fe/bin directory

./start_fe.sh --daemon

4. Check the FE

mysql -uroot -P9030 -h127.0.0.1

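-- Check the FE status: if IsMaster, Join and Alive are all true, the node is healthy (the output below is a sample; IDs, IPs and the version will differ).

mysql> show frontends\G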

*************************** 1. row ***************************
Name: 172.21.32.5_9010_1660549353220
IP: 172.21.32.5
EditLogPort: 9010
HttpPort: 8030
QueryPort: 9030
RpcPort: 9020
Role: FOLLOWER
IsMaster: true
ClusterId: 1685821635
Join: true
Alive: true
ReplayedJournalId: 49292
LastHeartbeat: 2022-08-17 13:00:45
IsHelper: true
ErrMsg:
Version: 1.1.2-rc03-ca55ac2
CurrentConnected: Yes
1 row in set (0.03 sec)

5. Start the BE from the be/bin directory

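./start_be.sh --daemon

On the first start the script may exit and ask you to change some system settings; run the command it prints and start it again. Note that sysctl -w only lasts until the next reboot and ulimit -n only applies to the current shell. To make them permanent, add vm.max_map_count=2000000 to /etc/sysctl.conf and raise the nofile limit in /etc/security/limits.conf.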
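The vm.max_map_count requirement can also be checked before starting the BE. The sketch below reads /proc/sys/vm/max_map_count and compares it with the 2000000 the script asks for; the class and method names are hypothetical. The open-file limit is deliberately left out: it belongs to the shell that launches start_be.sh, and a JVM may report its own, possibly raised, limit instead, so check it with ulimit -n in that shell.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

/** Checks vm.max_map_count against the value start_be.sh asks for (illustrative sketch). */
public class MaxMapCountCheck {

    static final long REQUIRED = 2_000_000L;

    // A /proc/sys file holds a single number followed by a newline.
    public static long parseProcValue(String content) {
        return Long.parseLong(content.trim());
    }

    public static boolean isSufficient(long actual) {
        return actual >= REQUIRED;
    }

    public static void main(String[] args) throws IOException {
        Path file = Paths.get("/proc/sys/vm/max_map_count");
        if (!Files.exists(file)) {
            System.out.println(file + " not found (not a Linux host?)");
            return;
        }
        long actual = parseProcValue(new String(Files.readAllBytes(file), StandardCharsets.UTF_8));
        System.out.println("vm.max_map_count = " + actual
                + (isSufficient(actual) ? " (OK)" : " (too low, run: sysctl -w vm.max_map_count=" + REQUIRED + ")"));
    }
}
```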

[root@localhost bin]# ./start_be.sh --daemon
Please set vm.max_map_count to be 2000000 under root using 'sysctl -w vm.max_map_count=2000000'.
[root@localhost bin]# sysctl -w vm.max_map_count=2000000
vm.max_map_count = 2000000
[root@localhost bin]# ./start_be.sh --daemon
Please set the maximum number of open file descriptors to be 65536 using 'ulimit -n 65536'.
[root@localhost bin]# ulimit -n 65536
[root@localhost bin]# ./start_be.sh --daemon

6. Add the BE to the cluster

mysql -uroot -P9030 -h127.0.0.1

-- Add the BE to the cluster
-- be_host_ip: the IP address of your BE; it must match priority_networks in be.conf
-- heartbeat_service_port: the BE heartbeat port; it must match heartbeat_service_port in be.conf (default 9050)

mysql> ALTER SYSTEM ADD BACKEND "be_host_ip:heartbeat_service_port";

-- Check the BE status: Alive: true means the node is running normally (sample output below)

mysql> SHOW BACKENDS\G

*************************** 1. row ***************************
BackendId: 10003
Cluster: default_cluster
IP: 172.21.32.5
HeartbeatPort: 9050
BePort: 9060
HttpPort: 8040
BrpcPort: 8060
LastStartTime: 2022-08-16 15:31:37
LastHeartbeat: 2022-08-17 13:33:17
Alive: true
SystemDecommissioned: false
ClusterDecommissioned: false
TabletNum: 170
DataUsedCapacity: 985.787 KB
AvailCapacity: 782.729 GB
TotalCapacity: 984.180 GB
UsedPct: 20.47 %
MaxDiskUsedPct: 20.47 %
Tag: {"location" : "default"}
ErrMsg:
Version: 1.1.2-rc03-ca55ac2
Status: {"lastSuccessReportTabletsTime":"2022-08-17 13:33:05","lastStreamLoadTime":-1,"isQueryDisabled":false,"isLoadDisabled":false}

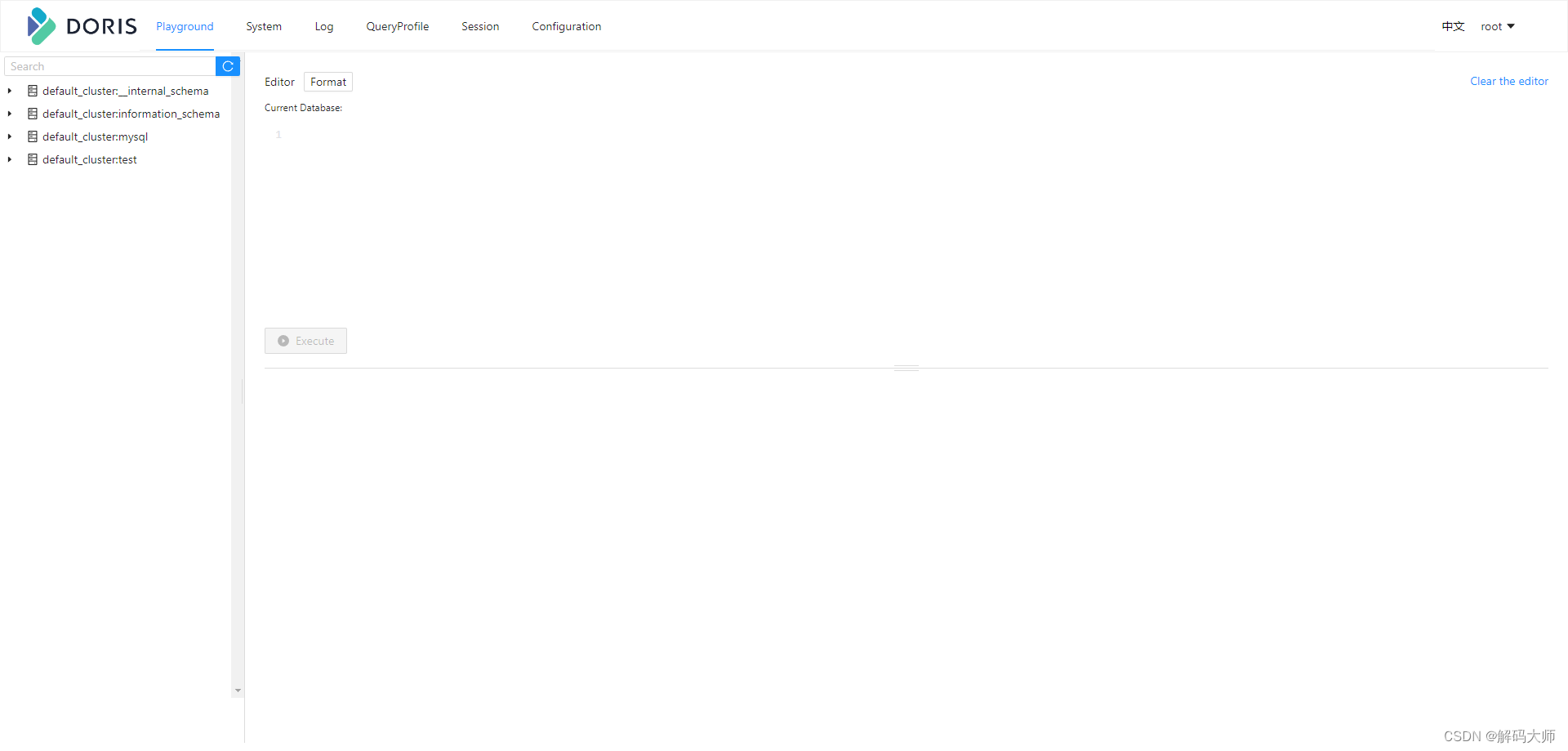
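Both status checks above come down to reading a few columns from the \G (vertical) output of the mysql client. The sketch below parses such text, for example copied or redirected from a mysql -uroot -P9030 -h127.0.0.1 session, into one map per row and checks that the given columns are all true. The class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Parses mysql "\G" (vertical) output and checks boolean status columns. */
public class VerticalStatusCheck {

    // One map per "*** N. row ***" block; keys and values are split at the first ':'.
    public static List<Map<String, String>> parseRows(String text) {
        List<Map<String, String>> rows = new ArrayList<>();
        Map<String, String> current = null;
        for (String rawLine : text.split("\\R")) {
            String line = rawLine.trim();
            if (line.startsWith("***")) {
                current = new LinkedHashMap<>();
                rows.add(current);
                continue;
            }
            int colon = line.indexOf(':');
            // Skip lines outside a row block and lines without "key:" (e.g. "1 row in set").
            if (current == null || colon <= 0) {
                continue;
            }
            current.put(line.substring(0, colon).trim(), line.substring(colon + 1).trim());
        }
        return rows;
    }

    // True only if there is at least one row and every listed column is "true" in every row.
    public static boolean allTrue(List<Map<String, String>> rows, String... columns) {
        if (rows.isEmpty()) {
            return false;
        }
        for (Map<String, String> row : rows) {
            for (String column : columns) {
                if (!"true".equalsIgnoreCase(row.get(column))) {
                    return false;
                }
            }
        }
        return true;
    }

    public static void main(String[] args) {
        String frontends = String.join("\n",
                "*************************** 1. row ***************************",
                "Name: 172.21.32.5_9010_1660549353220",
                "IsMaster: true",
                "Join: true",
                "Alive: true",
                "ErrMsg:",
                "1 row in set (0.03 sec)");
        System.out.println("FE healthy: " + allTrue(parseRows(frontends), "IsMaster", "Join", "Alive"));
    }
}
```

For SHOW BACKENDS\G output the same parser applies; check allTrue(rows, "Alive") instead.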

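7. Log in to Doris

Open ip:8030/login in a browser and log in as root with an empty password.

From here you can create the tables to be synchronized, in preparation for the sync below. Alternatively, create them with a GUI client such as DataGrip.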
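Two details matter when creating the target tables. First, the job marks deletes with the hidden __DORIS_DELETE_SIGN__ column, which Doris supports only on Unique Key model tables. Second, with a single BE, replication_num has to be 1, because the default of 3 replicas cannot be placed. The sketch below only assembles such a CREATE TABLE statement as a string. The class name, the columns (id, name, age, borrowed from the sample record in the job's comments) and the bucket count are placeholders, not the author's actual schema, and only a single-column key is handled.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.StringJoiner;

/** Builds a CREATE TABLE statement for a Doris Unique Key table (illustrative sketch). */
public class UniqueKeyDdl {

    public static String buildDdl(String db, String table, Map<String, String> columns,
                                  String keyColumn, int buckets, int replicationNum) {
        // Doris expects key columns to come first in the column list.
        if (columns.isEmpty() || !columns.keySet().iterator().next().equals(keyColumn)) {
            throw new IllegalArgumentException("Key column must be the first column: " + keyColumn);
        }
        StringJoiner cols = new StringJoiner(",\n", "(\n", "\n)");
        for (Map.Entry<String, String> column : columns.entrySet()) {
            cols.add("  `" + column.getKey() + "` " + column.getValue());
        }
        return "CREATE TABLE IF NOT EXISTS `" + db + "`.`" + table + "` " + cols
                + "\nUNIQUE KEY(`" + keyColumn + "`)"
                + "\nDISTRIBUTED BY HASH(`" + keyColumn + "`) BUCKETS " + buckets
                + "\nPROPERTIES (\"replication_num\" = \"" + replicationNum + "\");";
    }

    public static void main(String[] args) {
        // Placeholder schema: replace with the columns of the MySQL table being synced.
        Map<String, String> columns = new LinkedHashMap<>();
        columns.put("id", "BIGINT NOT NULL");
        columns.put("name", "VARCHAR(64)");
        columns.put("age", "INT");
        // One BE in this setup, so a single replica.
        System.out.println(buildDdl("account", "account", columns, "id", 1, 1));
    }
}
```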

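Everything above is the environment to deploy on the server; what follows is the code. StreamPark also supports synchronization with Flink SQL, but here the sync runs as a Flink CDC jar job.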
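The Doris sink in the job writes through Doris's HTTP Stream Load interface: a PUT to /api/{db}/{table}/_stream_load on the FE HTTP port (8030 here), which the FE redirects to a BE. The properties the job passes to setStreamLoadProp (format, read_json_by_line) travel as request headers, and every load carries a label that must be unique within the database. The sketch below only assembles the URL and headers and sends nothing. The class name is hypothetical. hidden_columns is a documented Stream Load header for __DORIS_DELETE_SIGN__, but whether the connector sets exactly this header for setDeletable(true) is an assumption here.

```java
import java.nio.charset.StandardCharsets;
import java.util.Base64;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Properties;

/** Assembles (but does not send) a Doris Stream Load request, for illustration. */
public class StreamLoadRequest {

    // Stream Load endpoint on the FE HTTP port: PUT /api/{db}/{table}/_stream_load
    public static String url(String feHostPort, String db, String table) {
        return "http://" + feHostPort + "/api/" + db + "/" + table + "/_stream_load";
    }

    public static Map<String, String> headers(String user, String password, String label, Properties loadProps) {
        Map<String, String> headers = new LinkedHashMap<>();
        String credentials = user + ":" + password;
        headers.put("Authorization", "Basic "
                + Base64.getEncoder().encodeToString(credentials.getBytes(StandardCharsets.UTF_8)));
        headers.put("Expect", "100-continue");
        headers.put("label", label); // must be unique within the database
        // Stream Load properties such as format and read_json_by_line are sent as headers.
        for (String key : loadProps.stringPropertyNames()) {
            headers.put(key, loadProps.getProperty(key));
        }
        return headers;
    }

    public static void main(String[] args) {
        Properties props = new Properties();
        props.setProperty("format", "json");
        props.setProperty("read_json_by_line", "true");
        // Assumption: deletes are flagged through this hidden column.
        props.setProperty("hidden_columns", "__DORIS_DELETE_SIGN__");
        System.out.println("PUT " + url("172.18.116.211:8030", "account", "account"));
        headers("root", "", "label-account-demo", props)
                .forEach((name, value) -> System.out.println(name + ": " + value));
    }
}
```

The same request can be sent by hand with curl --location-trusted (so the credentials follow the FE redirect), which is a quick way to check that a target table accepts the JSON rows before running the Flink job.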

V. pom.xml

<?xml version="1.0" encoding="UTF-8"?>
<project xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns="http://maven.apache.org/POM/4.0.0"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <groupId>com.flink</groupId>
    <artifactId>mysql-doris</artifactId>
    <version>1.0-SNAPSHOT</version>
    <name>flink-mysql-doris</name>

    <properties>
        <scala.version>2.12</scala.version>
        <java.version>1.8</java.version>
        <flink.version>1.16.2</flink.version>
        <fastjson.version>1.2.62</fastjson.version>
        <scope.mode>compile</scope.mode>
        <slf4j.version>1.7.30</slf4j.version>
    </properties>

    <dependencies>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-table-api-scala-bridge_${scala.version}</artifactId>
            <version>${flink.version}</version>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-table-planner_${scala.version}</artifactId>
            <version>${flink.version}</version>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-streaming-scala_${scala.version}</artifactId>
            <version>${flink.version}</version>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-clients</artifactId>
            <version>${flink.version}</version>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-connector-jdbc</artifactId>
            <version>${flink.version}</version>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-connector-kafka</artifactId>
            <version>${flink.version}</version>
        </dependency>
        <dependency>
            <groupId>com.alibaba</groupId>
            <artifactId>fastjson</artifactId>
            <version>${fastjson.version}</version>
        </dependency>
        <!-- Add log dependencies when debugging locally -->
        <dependency>
            <groupId>org.slf4j</groupId>
            <artifactId>slf4j-api</artifactId>
            <version>${slf4j.version}</version>
        </dependency>
        <dependency>
            <groupId>org.slf4j</groupId>
            <artifactId>slf4j-log4j12</artifactId>
            <version>${slf4j.version}</version>
        </dependency>
        <!-- flink-doris-connector -->
        <dependency>
            <groupId>org.apache.doris</groupId>
            <artifactId>flink-doris-connector-1.16</artifactId>
            <version>1.4.0</version>
        </dependency>
        <dependency>
            <groupId>com.ververica</groupId>
            <artifactId>flink-connector-mysql-cdc</artifactId>
            <version>2.3.0</version>
            <exclusions>
                <exclusion>
                    <artifactId>flink-shaded-guava</artifactId>
                    <groupId>org.apache.flink</groupId>
                </exclusion>
            </exclusions>
        </dependency>
        <dependency>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-runtime-web</artifactId>
            <version>${flink.version}</version>
        </dependency>
    </dependencies>

    <build>
        <plugins>
            <plugin>
                <groupId>net.alchim31.maven</groupId>
                <artifactId>scala-maven-plugin</artifactId>
                <version>3.2.1</version>
                <executions>
                    <execution>
                        <id>scala-compile-first</id>
                        <phase>process-resources</phase>
                        <goals>
                            <goal>compile</goal>
                        </goals>
                    </execution>
                    <execution>
                        <id>scala-test-compile</id>
                        <phase>process-test-resources</phase>
                        <goals>
                            <goal>testCompile</goal>
                        </goals>
                    </execution>
                </executions>
                <configuration>
                    <args>
                        <arg>-feature</arg>
                    </args>
                </configuration>
            </plugin>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-compiler-plugin</artifactId>
                <version>3.8.1</version>
                <configuration>
                    <source>8</source>
                    <target>8</target>
                </configuration>
            </plugin>
        </plugins>
    </build>
</project>

VI. Code
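The job below registers a custom Debezium converter, com.flink.mysql.DateToStringConverter, whose source is not included in this post. Such a converter is usually needed because, with Debezium's default temporal handling, a MySQL DATE arrives as the number of days since 1970-01-01, a DATETIME with up to 3 fractional digits as epoch milliseconds that encode the wall-clock value as if it were UTC (higher precisions arrive as microseconds), and a TIMESTAMP as an ISO-8601 string in UTC, rather than the yyyy-MM-dd HH:mm:ss strings one would normally load into Doris. The sketch below shows only the value conversions such a converter would perform, using java.time. It is not a Debezium CustomConverter implementation, the class name is hypothetical, and Asia/Shanghai is an assumed server time zone.

```java
import java.time.Instant;
import java.time.LocalDate;
import java.time.LocalDateTime;
import java.time.ZoneId;
import java.time.ZoneOffset;
import java.time.ZonedDateTime;
import java.time.format.DateTimeFormatter;

/** Value conversions a date-to-string converter would apply (sketch, not a Debezium CustomConverter). */
public class TemporalToString {

    private static final DateTimeFormatter DATE = DateTimeFormatter.ofPattern("yyyy-MM-dd");
    private static final DateTimeFormatter DATETIME = DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss");

    // MySQL DATE: days since 1970-01-01.
    public static String fromEpochDays(long epochDays) {
        return LocalDate.ofEpochDay(epochDays).format(DATE);
    }

    // MySQL DATETIME(0..3): epoch millis of the wall-clock value read as UTC, so format in UTC.
    public static String fromEpochMillis(long epochMillis) {
        return LocalDateTime.ofInstant(Instant.ofEpochMilli(epochMillis), ZoneOffset.UTC).format(DATETIME);
    }

    // MySQL TIMESTAMP: ISO-8601 string in UTC; shift it to the zone the data should be shown in.
    public static String fromZonedTimestamp(String isoUtc, ZoneId targetZone) {
        return ZonedDateTime.parse(isoUtc).withZoneSameInstant(targetZone).format(DATETIME);
    }

    public static void main(String[] args) {
        ZoneId serverZone = ZoneId.of("Asia/Shanghai"); // assumption: the MySQL server's time zone
        System.out.println(fromEpochDays(LocalDate.of(2023, 9, 1).toEpochDay()));
        System.out.println(fromEpochMillis(0L));
        System.out.println(fromZonedTimestamp("2023-09-01T02:00:00Z", serverZone));
    }
}
```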

package com.flink.mysql;

import com.alibaba.fastjson.JSON;

import com.alibaba.fastjson.JSONObject;

import com.ververica.cdc.connectors.mysql.source.MySqlSource;

import com.ververica.cdc.connectors.mysql.table.StartupOptions;

import com.ververica.cdc.debezium.JsonDebeziumDeserializationSchema;

import org.apache.doris.flink.cfg.DorisExecutionOptions;

import org.apache.doris.flink.cfg.DorisOptions;

import org.apache.doris.flink.cfg.DorisReadOptions;

import org.apache.doris.flink.sink.DorisSink;

import org.apache.doris.flink.sink.writer.SimpleStringSerializer;

import org.apache.flink.api.common.eventtime.WatermarkStrategy;

import org.apache.flink.api.common.functions.FilterFunction;

import org.apache.flink.api.common.functions.FlatMapFunction;

import org.apache.flink.api.common.restartstrategy.RestartStrategies;

import org.apache.flink.streaming.api.CheckpointingMode;

import org.apache.flink.streaming.api.datastream.DataStreamSource;

import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;

import org.apache.flink.streaming.api.environment.CheckpointConfig;

import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import org.apache.flink.util.Collector;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import java.util.Arrays;

import java.util.List;

import java.util.Properties;

import java.util.UUID;

import java.util.stream.Collectors;

public class DatabaseFullSyncV2 {

private static final Logger LOG = LoggerFactory.getLogger(DatabaseFullSyncV2.class);

private final static String MYSQL_HOST = "172.18.116.98";

private final static int MYSQL_PORT = 33061;

private final static String MYSQL_USER = "rldbuser";

private final static String MYSQL_PASSWD = "XT7dmBy98KdWuYCe";

private final static String SYNC_DB = "account";

private final static String SYNC_TBLS = "account.account,account.account_copy";

private final static String DORIS_HOST = "172.18.116.211";

private final static int DORIS_PORT = 8030;

private final static String DORIS_USER = "root";

private final static String DORIS_PWD = "";

private final static String TARGET_DORIS_DB = "account";

public static void main(String[] args) throws Exception {

Properties debeziumProperties = new Properties();

debeziumProperties.setProperty("converters", "dateConverters");

debeziumProperties.setProperty("dateConverters.type", "com.flink.mysql.DateToStringConverter"); // 日期格式处理

MySqlSource<String> mySqlSource = MySqlSource.<String>builder()

.hostname(MYSQL_HOST)

.port(MYSQL_PORT)

.databaseList(SYNC_DB) // set captured database

.tableList(SYNC_TBLS) // set captured table

.username(MYSQL_USER)

.password(MYSQL_PASSWD)

.scanNewlyAddedTableEnabled(true)

.debeziumProperties(debeziumProperties)

.deserializer(new JsonDebeziumDeserializationSchema()) // converts SourceRecord to JSON String

.includeSchemaChanges(true)

.startupOptions(StartupOptions.initial())

.build();

StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

env.enableCheckpointing(5000L, CheckpointingMode.EXACTLY_ONCE);//5秒执行一次,模式:精准一次性

env.getCheckpointConfig().setTolerableCheckpointFailureNumber(2);

//2.2 设置检查点超时时间

env.getCheckpointConfig().setCheckpointTimeout(60*1000);

//2.3 设置重启策略

env.setRestartStrategy(RestartStrategies.fixedDelayRestart(2, 2*1000));//两次,两秒执行一次

//2.4 设置job取消后检查点是否保留

env.getCheckpointConfig().enableExternalizedCheckpoints(

CheckpointConfig.ExternalizedCheckpointCleanup.RETAIN_ON_CANCELLATION);//保留

DataStreamSource<String> cdcSource = env.fromSource(mySqlSource, WatermarkStrategy.noWatermarks(), "MySQL CDC JI");

//get table list

List<String> tableList = Arrays.stream(SYNC_TBLS.split(",")).map(a->a.split("\\.")[1]).collect(Collectors.toList());

for(String tbl : tableList){

SingleOutputStreamOperator<String> filterStream = filterTableData(cdcSource, tbl);

SingleOutputStreamOperator<String> cleanStream = clean(filterStream);

DorisSink<String> dorisSink = buildDorisSink(tbl);

cleanStream.sinkTo(dorisSink).name("sink " + tbl);

}

env.execute("DORIS TABLES Sync JI");

}

/**

* Get real data

* {

* "before":null,

* "after":{

* "id":1,

* "name":"zhangsan-1",

* "age":18

* },

* "source":{

* "db":"test",

* "table":"test_1",

* ...

* },

* "op":"c",

* ...

* }

* */

private static SingleOutputStreamOperator<String> clean(SingleOutputStreamOperator<String> source) {

return source.flatMap(new FlatMapFunction<String,String>(){

@Override

public void flatMap(String row, Collector<String> out) throws Exception {

try{

JSONObject rowJson = JSON.parseObject(row);

String op = rowJson.getString("op");

if (Arrays.asList("c", "r", "u").contains(op)) {

JSONObject after = rowJson.getJSONObject("after");

after.put("__DORIS_DELETE_SIGN__", 0);

out.collect(after.toJSONString());

} else if ("d".equals(op)) {

JSONObject before = rowJson.getJSONObject("before");

before.put("__DORIS_DELETE_SIGN__", 1);

out.collect(before.toJSONString());

} else {

LOG.info("filter other op:{}", op);

}

}catch (Exception ex){

LOG.warn("filter other format binlog:{}",row);

}

}

});

}

/**

* Divide according to tablename

* */

private static SingleOutputStreamOperator<String> filterTableData(DataStreamSource<String> source, String table) {

return source.filter(new FilterFunction<String>() {

@Override

public boolean filter(String row) throws Exception {

try {

JSONObject rowJson = JSON.parseObject(row);

JSONObject source = rowJson.getJSONObject("source");

String tbl = source.getString("table");

return table.equals(tbl);

}catch (Exception ex){

ex.printStackTrace();

return false;

}

}

});

}

/**

* create doris sink

* */

public static DorisSink<String> buildDorisSink(String table){

DorisSink.Builder<String> builder = DorisSink.builder();

DorisOptions.Builder dorisBuilder = DorisOptions.builder();

dorisBuilder.setFenodes(DORIS_HOST + ":" + DORIS_PORT)

.setTableIdentifier(TARGET_DORIS_DB + "." + table)

.setUsername(DORIS_USER)

.setPassword(DORIS_PWD);

Properties pro = new Properties();

//json data format

pro.setProperty("format", "json");

pro.setProperty("read_json_by_line", "true");

DorisExecutionOptions executionOptions = DorisExecutionOptions.builder()

.setLabelPrefix("label-" + table + UUID.randomUUID()) //streamload label prefix,

.setStreamLoadProp(pro)

.setDeletable(true)

.build();

builder.setDorisReadOptions(DorisReadOptions.builder().build())

.setDorisExecutionOptions(executionOptions)

.setSerializer(new SimpleStringSerializer()) //serialize according to string

.setDorisOptions(dorisBuilder.build());

return builder.build();

}

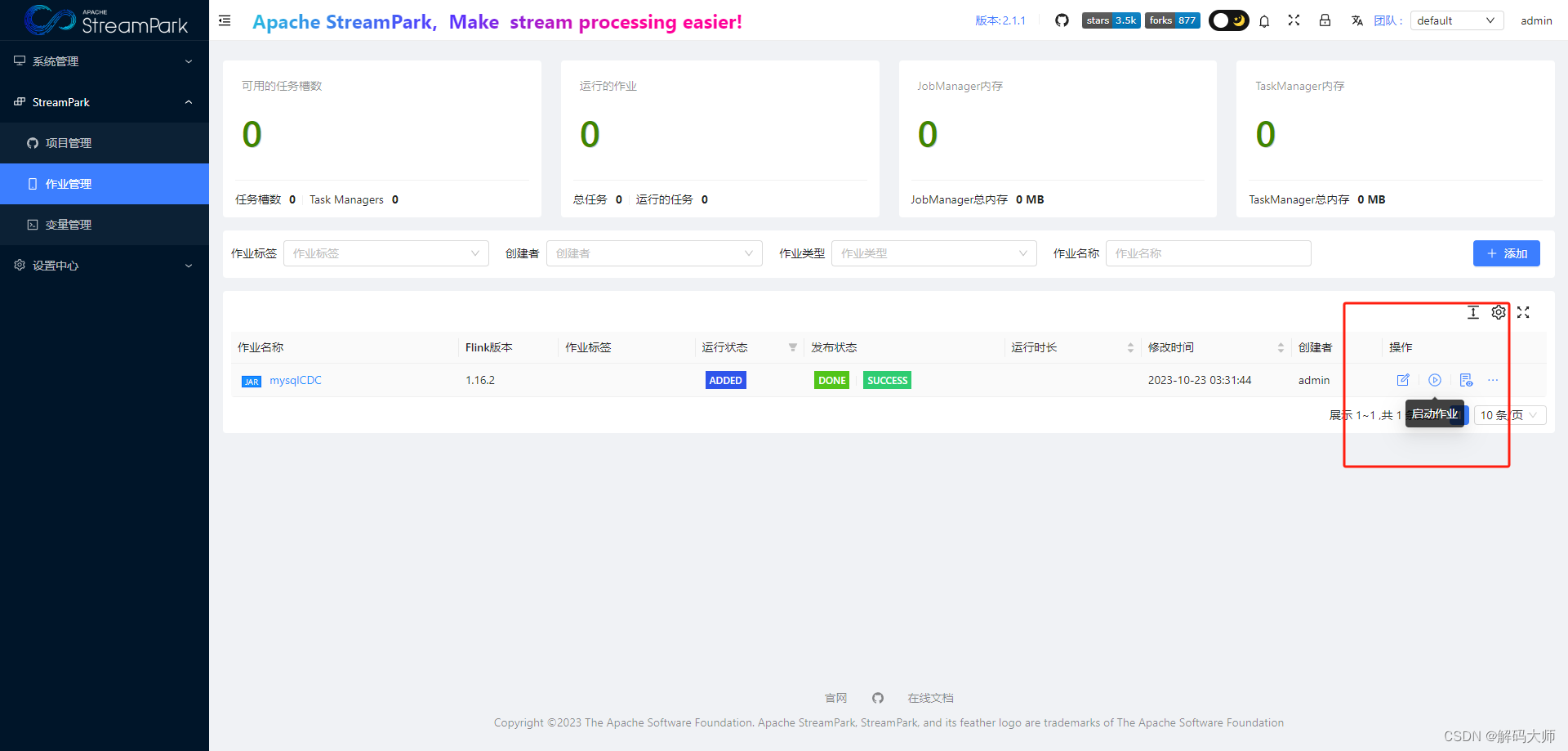

}七、代码打包并上传streampark的新作业,发布并启动
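Before packaging, the op handling in clean() is easy to check in isolation. The sketch below restates that logic on plain java.util.Map objects instead of fastjson's JSONObject, so it runs without any dependency: c (insert), r (snapshot read) and u (update) keep the after image with __DORIS_DELETE_SIGN__ = 0, d (delete) keeps the before image with the sign set to 1, and any other event is dropped. The class and method names are hypothetical, and unlike the original it copies the row instead of mutating it.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Restates the op handling of clean() on plain maps so it can be tested without fastjson. */
public class DeleteSignMapping {

    static final String DELETE_SIGN = "__DORIS_DELETE_SIGN__";
    private static final List<String> UPSERT_OPS = Arrays.asList("c", "r", "u");

    // Returns the row to write to Doris, or null when the event should be dropped.
    public static Map<String, Object> toDorisRow(String op, Map<String, Object> before, Map<String, Object> after) {
        Map<String, Object> image;
        int sign;
        if (UPSERT_OPS.contains(op)) {   // insert, snapshot read, update: keep the new values
            image = after;
            sign = 0;
        } else if ("d".equals(op)) {     // delete: the old values identify the row to remove
            image = before;
            sign = 1;
        } else {                         // schema changes and any other events
            return null;
        }
        if (image == null) {
            return null;
        }
        Map<String, Object> row = new HashMap<>(image);
        row.put(DELETE_SIGN, sign);
        return row;
    }

    public static void main(String[] args) {
        Map<String, Object> after = new HashMap<>();
        after.put("id", 1);
        after.put("name", "zhangsan-1");
        after.put("age", 18);
        System.out.println(toDorisRow("c", null, after));
        System.out.println(toDorisRow("d", after, null));
    }
}
```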
