Installing and deploying ZooKeeper

1. Extract the archive

tar -zxvf apache-zookeeper-3.6.0-bin.tar.gz

2. Edit the configuration file

Change into the apache-zookeeper-3.6.0-bin/conf/ directory and copy zoo_sample.cfg to zoo.cfg:

cp zoo_sample.cfg zoo.cfg

Edit zoo.cfg (vi zoo.cfg) and add the following settings:

dataDir=/apache-zookeeper-3.6.0-bin/data

dataLogDir=/apache-zookeeper-3.6.0-bin/log

clientPort=2181

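server.1=10.159.1.1:2888:3888

3. Create the data and log folders under the apache-zookeeper-3.6.0-bin directory (the dataDir and dataLogDir settings from step 2 point to them)

4. Create a file named myid in the dataDir directory, i.e. /apache-zookeeper-3.6.0-bin/data

Run touch myid, then edit it with vi myid so that its content is: 1 (the same number as in server.1)

5. Start ZooKeeper from the bin directory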

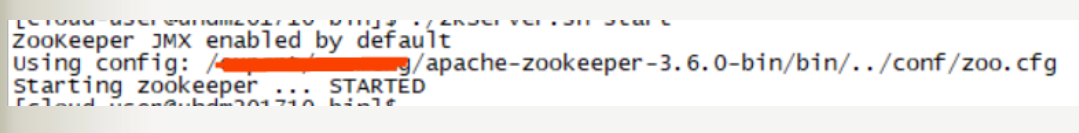

Run: ./zkServer.sh start
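
Steps 3 and 4 can also be scripted. The following is only a minimal sketch: zk_prepare and zk_check_myid are hypothetical helper names, not ZooKeeper commands, and the paths in the usage comment are the ones assumed in this article. The check relies on the rule that the number stored in myid has to match the N of a server.N line in zoo.cfg.

```shell
#!/bin/sh
# Hypothetical helpers (illustrative names, not part of ZooKeeper).

# zk_prepare ZK_HOME SERVER_ID
# Creates ZK_HOME/data and ZK_HOME/log and writes SERVER_ID into data/myid.
zk_prepare() {
    zk_home="$1"
    server_id="$2"
    mkdir -p "$zk_home/data" "$zk_home/log" || return 1
    # myid contains nothing but the numeric server id
    printf '%s\n' "$server_id" > "$zk_home/data/myid"
}

# zk_check_myid ZOO_CFG MYID_FILE
# Succeeds only if ZOO_CFG has a "server.<id>=" line for the id in MYID_FILE.
zk_check_myid() {
    cfg="$1"
    id=$(cat "$2") || return 1
    grep -q "^server\.${id}=" "$cfg"
}

# Usage, assuming the install path from this article:
#   zk_prepare /apache-zookeeper-3.6.0-bin 1
#   zk_check_myid /apache-zookeeper-3.6.0-bin/conf/zoo.cfg \
#                 /apache-zookeeper-3.6.0-bin/data/myid
```

Running the check before ./zkServer.sh start catches a myid that does not correspond to any server entry.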

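Installing and deploying Kafka

1. Download Kafka and extract it into the /kafka directory

tar -zxvf kafka_2.11-2.3.1.tgz

2. Configure Kafka

Create the Kafka log directory: mkdir -p /kafka/log/kafka

In the Kafka config directory, edit server.properties (vi server.properties) and change the following settings: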

port=9092

host.name=10.159.1.1

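# Log storage path, i.e. the directory created above. Keep comments on their own line: in a .properties file a trailing "#..." is read as part of the value.

log.dirs=/kafka/log/kafka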

zookeeper.connect=localhost:2181
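
Instead of editing server.properties by hand in vi, the same changes can be applied from a script. This is a minimal sketch under assumptions: set_prop is a hypothetical helper, and the config path in the usage comment assumes Kafka's config directory ends up at /kafka/config.

```shell
#!/bin/sh
# set_prop FILE KEY VALUE  (hypothetical helper)
# Removes any existing uncommented "KEY=" line from FILE, then appends KEY=VALUE.
set_prop() {
    file="$1"
    key="$2"
    value="$3"
    tmp="$file.tmp.$$"
    [ -f "$file" ] || : > "$file"
    # index() is a literal prefix match, so the dots in keys such as
    # host.name are not treated as regular-expression wildcards.
    awk -v k="$key=" 'index($0, k) != 1' "$file" > "$tmp" || return 1
    printf '%s=%s\n' "$key" "$value" >> "$tmp"
    mv "$tmp" "$file"
}

# Usage, assuming the config path /kafka/config/server.properties:
#   set_prop /kafka/config/server.properties port 9092
#   set_prop /kafka/config/server.properties host.name 10.159.1.1
#   set_prop /kafka/config/server.properties log.dirs /kafka/log/kafka
#   set_prop /kafka/config/server.properties zookeeper.connect localhost:2181
```

Commented-out defaults in the file are left untouched; only an active line for the same key is replaced.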

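Create the ZooKeeper directories as follows:

mkdir -p /kafka/zookeeper # create the ZooKeeper data directory

mkdir -p /kafka/log/zookeeper # create the ZooKeeper log directory

In the Kafka config directory, edit zookeeper.properties (vi zookeeper.properties) and change the following settings:

# ZooKeeper data directory

dataDir=/kafka/zookeeper

# ZooKeeper log directory

dataLogDir=/kafka/log/zookeeper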

clientPort=2181

maxClientCnxns=100

tickTime=2000

initLimit=10

syncLimit=5

3. Start Kafka

bin/kafka-server-start.sh config/server.properties
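
The broker needs a running ZooKeeper at the address given in zookeeper.connect, and it reads server.properties as a Java properties file, where text after a value is not a comment. A minimal sketch of a start wrapper that checks for such trailing comments first; props_inline_comments and start_kafka are hypothetical helper names, and -daemon is the kafka-server-start.sh option for running in the background.

```shell
#!/bin/sh
# Hypothetical helpers for a safer start; the function names are illustrative.

# props_inline_comments FILE
# Prints (with line numbers) every setting line that has " #..." after its value.
# In a .properties file that text is read as part of the value, not as a comment.
props_inline_comments() {
    grep -n '^[[:space:]]*[^#![:space:]][^=]*=.*[[:space:]]#' "$1"
}

# start_kafka KAFKA_HOME
# Refuses to start when server.properties has trailing comments,
# otherwise launches the broker in the background with -daemon.
start_kafka() {
    kafka_home="$1"
    conf="$kafka_home/config/server.properties"
    [ -f "$conf" ] || { echo "missing $conf" >&2; return 1; }
    if props_inline_comments "$conf" >&2; then
        echo "move the trailing comments listed above to their own lines" >&2
        return 1
    fi
    "$kafka_home/bin/kafka-server-start.sh" -daemon "$conf"
}

# Usage, assuming Kafka lives in /kafka:
#   start_kafka /kafka
```

The check is a heuristic: a value that legitimately contains a space followed by # would also be reported.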

Installing and deploying Flume

1. Download and extract the installation package

tar -zxvf apache-flume-1.9.0-bin.tar.gz

2. Configure

mv apache-flume-1.9.0-bin flume

cd flume

cp conf/flume-conf.properties.template conf/flume-conf.properties

==================================================================

vi conf/flume-conf.properties

# The configuration file needs to define the sources,

# the channels and the sinks.

# Sources, channels and sinks are defined per agent,

# in this case called 'agent'

agent.sources = r1

agent.channels = c1

agent.sinks = s1

# For each one of the sources, the type is defined

agent.sources.r1.type = netcat

agent.sources.r1.bind = localhost

agent.sources.r1.port = 8888

# The channel can be defined as follows.

agent.sources.r1.channels = c1

# Each sink's type must be defined

agent.sinks.s1.type = file_roll

agent.sinks.s1.sink.directory = /tmp/log/flume

#Specify the channel the sink should use

agent.sinks.s1.channel = c1

# Each channel's type is defined.

agent.channels.c1.type = memory

# Other config values specific to each type of channel(sink or source)

# can be defined as well

# In this case, it specifies the capacity of the memory channel

agent.channels.c1.capacity = 100

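Integration with Kafka

Flume integrates flexibly with Kafka: Flume focuses on data collection, Kafka on data distribution.

Kafka can be configured as a Flume source or as a Flume sink. An example of a Kafka sink configuration: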

agent.sinks.s1.type = org.apache.flume.sink.kafka.KafkaSink

agent.sinks.s1.topic = mytopic

agent.sinks.s1.brokerList = localhost:9092

agent.sinks.s1.requiredAcks = 1

agent.sinks.s1.batchSize = 20

agent.sinks.s1.channel = c1

Data collected by Flume is then distributed through Kafka to other big data platforms for further processing.
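
The sink properties topic, brokerList and requiredAcks are older names. As far as the Flume 1.9.0 user guide describes, they are deprecated in favor of kafka.topic, kafka.bootstrap.servers and kafka.producer.acks. A minimal sketch that appends an equivalent sink definition with the newer names; write_kafka_sink is a hypothetical helper, and the agent, sink and channel names are parameters that have to match the rest of the file.

```shell
#!/bin/sh
# write_kafka_sink CONF AGENT SINK CHANNEL TOPIC BROKERS  (hypothetical helper)
# Appends a Kafka sink definition to CONF using the kafka.* property names.
write_kafka_sink() {
    conf="$1"; agent="$2"; sink="$3"; channel="$4"; topic="$5"; brokers="$6"
    cat >> "$conf" <<EOF
$agent.sinks.$sink.type = org.apache.flume.sink.kafka.KafkaSink
$agent.sinks.$sink.kafka.topic = $topic
$agent.sinks.$sink.kafka.bootstrap.servers = $brokers
$agent.sinks.$sink.kafka.producer.acks = 1
$agent.sinks.$sink.channel = $channel
EOF
}

# Usage with the names from this article:
#   write_kafka_sink conf/flume-conf.properties agent s1 c1 mytopic localhost:9092
```

Remove the existing file_roll lines for the same sink before appending. If the broker is bound to a specific address through host.name, that address is probably the one to pass instead of localhost.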

3. Create the log output directory

mkdir -p /tmp/log/flume

4. Start the service

[root@zhongkeyuan flume]# bin/flume-ng agent --conf conf --conf-file conf/flume-conf.properties --name agent -Dflume.root.logger=INFO,console
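
The value passed to --name has to be the agent name used as the prefix in the properties file (agent in the configuration listed earlier); with a different name Flume finds no components to start. A minimal sketch for checking this before launching; flume_agent_names and flume_has_agent are hypothetical helpers, not Flume commands.

```shell
#!/bin/sh
# flume_agent_names CONF  (hypothetical helper)
# Prints each agent name that has a "<name>.sources = ..." line in CONF.
flume_agent_names() {
    sed -n 's/^[[:space:]]*\([A-Za-z0-9_-]*\)\.sources[[:space:]]*=.*/\1/p' "$1" | sort -u
}

# flume_has_agent CONF NAME
# Succeeds if NAME is one of the agents defined in CONF.
flume_has_agent() {
    flume_agent_names "$1" | grep -qxF "$2"
}

# Usage from the flume directory:
#   flume_has_agent conf/flume-conf.properties agent && \
#       bin/flume-ng agent --conf conf --conf-file conf/flume-conf.properties \
#           --name agent -Dflume.root.logger=INFO,console
```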

5. Simulate sending data

telnet localhost 8888

Type:

hello world!

hello Flume!

cat /tmp/log/flume/1447671188760-2

hello world!

hello Flume!
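
The file name under /tmp/log/flume differs on every run, since the file_roll sink names its files with a timestamp and a counter and periodically rolls to a new file. A minimal sketch for locating the most recent one; latest_roll_file is a hypothetical helper.

```shell
#!/bin/sh
# latest_roll_file DIR  (hypothetical helper)
# Prints the path of the most recently modified file in DIR,
# or returns non-zero if DIR is empty or missing.
latest_roll_file() {
    dir="$1"
    newest=$(ls -t "$dir" 2>/dev/null | head -n 1)
    [ -n "$newest" ] || return 1
    printf '%s/%s\n' "$dir" "$newest"
}

# Usage:
#   cat "$(latest_roll_file /tmp/log/flume)"
```

If the newest file is still empty, the events may have been written to the previous file just before a roll, so checking the second newest file is a reasonable next step.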
