1.2 torch.nn.BCELoss

nn.BCELoss measures the binary cross entropy between the target and the input probabilities.

(1) Formula

Compute the loss for each of the N labels:

$$
\ell(x, y) = L = \{l_1,\dots,l_N\}^\top, \quad l_n = - w_n \left[ y_n \cdot \log x_n + (1 - y_n) \cdot \log (1 - x_n) \right]
$$
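To make the per-label formula concrete, here is a minimal pure-Python sketch that evaluates l_n term by term. The function name `bce_per_element` and the sample values are made up for illustration, and the sketch assumes every x_n lies strictly between 0 and 1; it mirrors the formula only and is not how PyTorch implements the loss.

```python
import math

def bce_per_element(x, y, w=None):
    """Return [l_1, ..., l_N] with l_n = -w_n * [y_n*log(x_n) + (1-y_n)*log(1-x_n)].

    x: predicted probabilities, each assumed to be strictly inside (0, 1)
    y: target labels (0.0 or 1.0)
    w: optional per-element weights; defaults to 1.0 for every element
    """
    if w is None:
        w = [1.0] * len(x)
    return [
        -w_n * (y_n * math.log(x_n) + (1.0 - y_n) * math.log(1.0 - x_n))
        for x_n, y_n, w_n in zip(x, y, w)
    ]

# Hypothetical predictions and labels, chosen only to illustrate the formula.
losses = bce_per_element([0.9, 0.2, 0.5], [1.0, 0.0, 1.0])
weighted = bce_per_element([0.9, 0.2, 0.5], [1.0, 0.0, 1.0], w=[2.0, 1.0, 1.0])
print(losses)
print(weighted)
```

A confident correct prediction (0.9 for label 1) gets a small loss, while an uncertain one (0.5) gets a larger loss. The weight w_n simply scales the corresponding term.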

Then sum or average the N per-label losses (the default is the mean):

$$
\ell(x, y) = \begin{cases} \operatorname{mean}(L), & \text{if reduction} = \text{'mean'} \\ \operatorname{sum}(L), & \text{if reduction} = \text{'sum'} \end{cases}
$$
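The summing or averaging step can be sketched in a few lines of plain Python. The helper `reduce_losses` and the three loss values are hypothetical. The option names follow the `reduction` argument of `nn.BCELoss`, where `'none'` returns the per-label losses unchanged.

```python
def reduce_losses(losses, reduction="mean"):
    """Collapse per-label losses the way the `reduction` option describes."""
    if reduction == "none":
        return list(losses)              # keep one loss per label
    if reduction == "sum":
        return sum(losses)               # total loss over all labels
    if reduction == "mean":
        return sum(losses) / len(losses)  # average loss (the default)
    raise ValueError(f"unknown reduction: {reduction!r}")

# Hypothetical per-label losses, used only to show the three modes.
per_label = [0.1, 0.2, 0.6]
mean_loss = reduce_losses(per_label)            # default: mean
sum_loss = reduce_losses(per_label, "sum")
raw_losses = reduce_losses(per_label, "none")
print(mean_loss, sum_loss, raw_losses)
```

With the mean, the loss scale does not depend on the batch size, which is one reason it is a convenient default when comparing runs with different batch sizes.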

(2) Inputs

1) The model's predicted probabilities after a sigmoid, with every value in [0, 1]: shape=(n_samples), dtype=torch.float

2) The ground-truth labels: shape=(n_samples), dtype=torch.float

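(3) Example 1

>>> import torch
>>> from torch import nn
>>> m = nn.Sigmoid()  # maps raw scores to probabilities in (0, 1)
>>> loss = nn.BCELoss()
>>> input = torch.randn(3, requires_grad=True)
>>> target = torch.empty(3).random_(2)  # random 0/1 labels stored as floats
>>> output = loss(m(input), target)
>>> output.backward()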

In [66]: input

Out[66]: tensor([-1.2269, 1.3667, 0.0922], requires_grad=True)

In [67]: target

Out[67]: tensor([1., 1., 0.])
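As a sanity check, the loss for the `input` and `target` printed above can be recomputed by hand. This is a pure-Python sketch that copies the printed (rounded) values, applies a sigmoid, and averages the per-element losses; the helper `sigmoid` is written out here rather than taken from PyTorch, so expect small rounding differences from the real `output`.

```python
import math

def sigmoid(z):
    """Logistic function: maps a raw score to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

# Values copied from the Example 1 session above (rounded to 4 decimals).
inputs = [-1.2269, 1.3667, 0.0922]
targets = [1.0, 1.0, 0.0]

probs = [sigmoid(z) for z in inputs]

# Per-element BCE with unit weights: -[y*log(x) + (1-y)*log(1-x)]
losses = [
    -(y * math.log(x) + (1.0 - y) * math.log(1.0 - x))
    for x, y in zip(probs, targets)
]

# Default reduction is the mean over all elements.
mean_loss = sum(losses) / len(losses)

print([round(l, 4) for l in losses])
print(round(mean_loss, 4))
```

The first element dominates the result: its raw score is negative while its label is 1, so the model is confidently wrong there. The mean should come out near 0.82.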

(4) Example 2

data

from sklearn.datasets import load_breast_cancer
data = load_breast_cancer()
x = data['data']
y = data['target']

# Standardize each feature to zero mean and unit variance
from sklearn.preprocessing import StandardScaler
sc = StandardScaler()
x = sc.fit_transform(x)

from sklearn.model_selection import train_test_split
x_train, x_test, y_train, y_test = train_test_split(x, y, test_size=0.2)

import torch
from torch.utils.data import TensorDataset, DataLoader

# Convert the NumPy arrays to float32 torch tensors
x_train = torch.Tensor(x_train)
x_test = torch.Tensor(x_test)
y_train = torch.Tensor(y_train)
y_test = torch.Tensor(y_test)

train_dataset = TensorDataset(x_train, y_train)  # create the dataset
#test_dataset = TensorDataset(x_test, y_test)
train_loader = DataLoader(train_dataset, batch_size=32, shuffle=True)  # create the dataloader
#test_loader = DataLoader(test_dataset)
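For readers unfamiliar with the scaling step, the sketch below shows what standardizing a single feature column amounts to: subtract the column mean and divide by the population standard deviation. The function `standardize` and the sample column are made up for illustration; it is a simplified stand-in for `StandardScaler`, not its implementation, and it assumes the column is not constant.

```python
import statistics

def standardize(column):
    """Rescale one feature column to zero mean and unit variance."""
    mean = statistics.fmean(column)
    std = statistics.pstdev(column)  # population std, i.e. divide by N
    return [(v - mean) / std for v in column]

# Hypothetical feature values: mean is 5.0 and population std is 2.0.
column = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]
scaled = standardize(column)
print(scaled)
```

Putting all features on a comparable scale this way tends to make gradient-based training with a single learning rate better behaved.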

model

import torch
from torch import nn
from torch.nn import functional as F

class Net(nn.Module):
    def __init__(self, input_shape):
        super(Net, self).__init__()
        self.fc1 = nn.Linear(input_shape, 128)
        self.fc2 = nn.Linear(128, 128)
        self.fc3 = nn.Linear(128, 1)

    def forward(self, x):
        x = torch.relu(self.fc1(x))
        x = torch.relu(self.fc2(x))
        x = torch.sigmoid(self.fc3(x))  # probability in (0, 1), as BCELoss expects
        return x

model = Net(x_train.shape[1])
optimizer = torch.optim.SGD(model.parameters(), lr=0.001)
#optimizer = torch.optim.Adam(model.parameters(), lr=0.001)
loss_fn = nn.BCELoss()

training

train_acc_list = []
val_acc_list = []
train_loss_list = []
val_loss_list = []

epochs = 1000
for i in range(epochs):
    for j, (input, target) in enumerate(train_loader):
        # calculate output
        output = model(input)  # shape (n_samples, 1)
        # calculate loss; reshape target to (n_samples, 1) to match the output
        loss = loss_fn(output, target.reshape(-1, 1))
        # backprop
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

    # evaluate once per epoch without tracking gradients
    with torch.no_grad():
        y_pred = model(x_test).reshape(-1)
        # labels are already 0/1, so only the predictions need rounding
        val_acc = (torch.round(y_pred) == y_test).type(torch.float).mean()
        val_loss = loss_fn(y_pred.reshape(-1, 1), y_test.reshape(-1, 1))
        y_pred = model(x_train).reshape(-1)
        train_acc = (torch.round(y_pred) == y_train).type(torch.float).mean()
        train_loss = loss_fn(y_pred.reshape(-1, 1), y_train.reshape(-1, 1))

    print(f'epoch:{i} train_acc:{train_acc:.3f} train_loss: {train_loss:.3f} val_acc:{val_acc:.3f} val_loss: {val_loss:.3f}')

    # store plain Python floats so the lists can be plotted directly
    train_acc_list.append(train_acc.item())
    train_loss_list.append(train_loss.item())
    val_acc_list.append(val_acc.item())
    val_loss_list.append(val_loss.item())

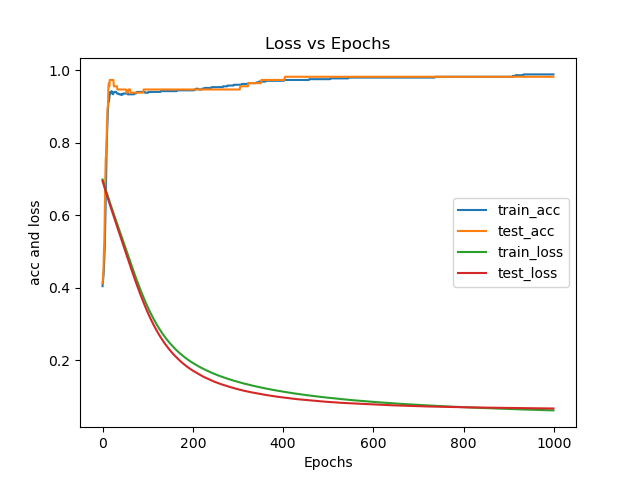
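The accuracy tracked in the training loop comes from rounding each predicted probability to 0 or 1 and comparing it with the label. A minimal pure-Python sketch of that step is below; `binary_accuracy` and the sample values are hypothetical and stand in for the tensor operations used in the loop.

```python
def binary_accuracy(probs, labels):
    """Fraction of samples whose rounded probability equals the 0/1 label."""
    preds = [float(round(p)) for p in probs]  # threshold at 0.5
    correct = sum(1 for p, l in zip(preds, labels) if p == l)
    return correct / len(labels)

# Hypothetical predicted probabilities and true labels.
probs = [0.91, 0.35, 0.62, 0.08]
labels = [1.0, 0.0, 0.0, 0.0]
acc = binary_accuracy(probs, labels)
print(acc)
```

Accuracy only looks at which side of 0.5 a prediction falls on, whereas the BCE loss also penalizes how far the probability is from the label, so the two curves can move differently during training.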

analysis of the model

import matplotlib.pyplot as plt

plt.title('Accuracy and Loss vs Epochs')
plt.xlabel('Epochs')
plt.ylabel('acc and loss')
plt.plot(train_acc_list, label='train_acc')
plt.plot(val_acc_list, label='test_acc')
plt.plot(train_loss_list, label='train_loss')
plt.plot(val_loss_list, label='test_loss')
plt.legend()
plt.show()
