一、hive的安装:

1)直接解压,然后在conf配置文件中找到hive-default.xml.template 改名为 hive-site-xml

2)修改其配置为:

<configuration>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://namenode:3306/hive?createDatabaseIfNotExist=true</value>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>123</value>

</property>

</configuration>

3)在lib中导入mysql jar 包 如报错,检查mysql jar包版本信息是否对应。

4)按中成功之后再bin目录中直接./hive 打开hive

报错集合

错误1

Wed May 29 13:56:54 EDT 2019 WARN: Establishing SSL connection without server’s identity verification is not recommended. According to MySQL 5.5.45+,

5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn’t set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to ‘false’. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

解决方法:

在此添加useSSL=false即可

错误2

解决方法:

错误3

Exception in thread "main" java.lang.RuntimeException: org.apache.hadoop.hive.ql.metadata.HiveException: java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient

at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:591)

at org.apache.hadoop.hive.ql.session.SessionState.beginStart(SessionState.java:531)

at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:705)

at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:641)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:606)

at org.apache.hadoop.util.RunJar.run(RunJar.java:221)

at org.apache.hadoop.util.RunJar.main(RunJar.java:136)

Caused by: org.apache.hadoop.hive.ql.metadata.HiveException: java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient

at org.apache.hadoop.hive.ql.metadata.Hive.registerAllFunctionsOnce(Hive.java:226)

at org.apache.hadoop.hive.ql.metadata.Hive.<init>(Hive.java:366)

at org.apache.hadoop.hive.ql.metadata.Hive.create(Hive.java:310)

at org.apache.hadoop.hive.ql.metadata.Hive.getInternal(Hive.java:290)

at org.apache.hadoop.hive.ql.metadata.Hive.get(Hive.java:266)

at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:558)

... 9 more

Caused by: java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient

at org.apache.hadoop.hive.metastore.MetaStoreUtils.newInstance(MetaStoreUtils.java:1654)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.<init>(RetryingMetaStoreClient.java:80)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:130)

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:101)

at org.apache.hadoop.hive.ql.metadata.Hive.createMetaStoreClient(Hive.java:3367)

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:3406)

at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:3386)

at org.apache.hadoop.hive.ql.metadata.Hive.getAllFunctions(Hive.java:3640)

at org.apache.hadoop.hive.ql.metadata.Hive.reloadFunctions(Hive.java:236)

at org.apache.hadoop.hive.ql.metadata.Hive.registerAllFunctionsOnce(Hive.java:221)

... 14 more

Caused by: java.lang.reflect.InvocationTargetException

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:57)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:526)

at org.apache.hadoop.hive.metastore.MetaStoreUtils.newInstance(MetaStoreUtils.java:1652)

... 23 more

Caused by: MetaException(message:Version information not found in metastore. )

at org.apache.hadoop.hive.metastore.ObjectStore.checkSchema(ObjectStore.java:7753)

at org.apache.hadoop.hive.metastore.ObjectStore.verifySchema(ObjectStore.java:7731)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:606)

at org.apache.hadoop.hive.metastore.RawStoreProxy.invoke(RawStoreProxy.java:101)

at com.sun.proxy.$Proxy20.verifySchema(Unknown Source)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.getMS(HiveMetaStore.java:565)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.createDefaultDB(HiveMetaStore.java:626)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.init(HiveMetaStore.java:416)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.<init>(RetryingHMSHandler.java:78)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.getProxy(RetryingHMSHandler.java:84)

at org.apache.hadoop.hive.metastore.HiveMetaStore.newRetryingHMSHandler(HiveMetaStore.java:6490)

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.<init>(HiveMetaStoreClient.java:238)

at org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient.<init>(SessionHiveMetaStoreClient.java:70)

... 28 more

解决方法:

出错原因:重新安装Hive和MySQL,导致版本、配置不一致。在终端执行如下命令:

schematool -dbType mysql -initSchema

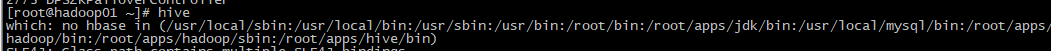

异常1

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/root/apps/hive/lib/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/root/apps/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory

解决方法:

Root Issue; slf4j在两处找到了jar包。分别是在Hadoop和hive的安装目录。 删除一个就好。

[root@hadoop01 lib]# mv /root/apps/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar /root/apps/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar.complate

二、hive的基本语法

1、创建表:

创建表1

create table table1 ( id int,name string,price double)

//以‘行’为一条数据

row format delimited

以‘tab’为字段的分界

fields terminated by ‘\t’

创建表2

create external table table2 ( id int,name string,price double)

row format delimited

fields terminated by ‘\t’

//location 将文件中的数据加载到表中

//path:所需数据文件路径

location ‘/path’

注意:创建表默认创建为MANAGED类型

表类型:

MANAGED:当load data时将指定的该文件移动到当前在HDFS目>录下

EXTERNAL:将其加载的文件路径指定到其表中

//insert from select 用于向临时表中追加数据

create table table3 like table2;insert overwrite table3

select * from table2;

//CATS 用于创建一些临时表存储中间结果

//根据别的表的的查询结果中建表

create table table4

as

select id new_id,name,ip,new_ip

from table1

sort by new_id;

注意:

如果表中有四个字段,别的表在加载过程中如有缺少字段(3个字段)则字段将自动向前迁移,最后一个字段为null。

如果表中有四个字段,别的表在加载过程中如有缺少字段(5个字段)则字段将自动向前迁移,最后一个字段不加载进来。

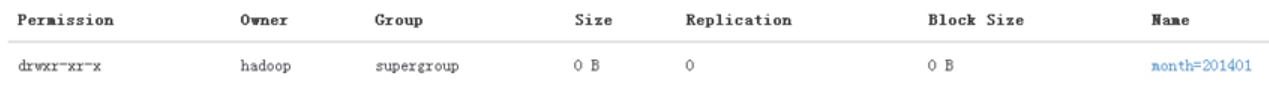

Managed如何存储

在HDFS目录下自动生成文件

partitioned 分区

将数据以区域划分储存

语法

create table table1 ( id int,name string,price double)

partitioned by (month string)

row format delimited fields terminated by ‘\t’

向分区中load data

load data local inpath '/homehadoop/yyy.data' into table table5 partition (month='201401');

储存方式:

在HDFS上的该目录下创建以分区标识为文件名的文件夹,文件夹下再放数据文件;

查询使用方式

select count(*) from table5 where month='201401';

select count(*) from table5 where month=‘201401’; 查询报错

报错内容:

SemanticException MetaException(message:You have an error in your SQL syntax; check the manual that corresponds to

your MySQL server version for the right syntax to use near 'OPTION SQL_SELECT_LIMIT=DEFAULT' at line 1)

问题原因:

mysql jar包版本不符,

mysql版本为5.7.24,jar包版本为5.1.7

更换jar版本为5.1.47之后报错消失

原因:

5.1.7中无“OPTION SQL_SELECT_LIMIT=DEFAULT”;

向表中添加分区

ALTER TABLE tab_cts ADD PARTITION (partCol = 'dt')

location '/externaml/hive/dt';

查看表中的分区情况

show partitions tab_ip_part;

write to hdfs 把运行结果写道文件中

//向本地写入

insert overwrite local directory '路径/文件类型.txt'

select * from 表名 where 条件

向HDFS写入文件

insert overwrite directory '文件'

select * from 表名 where 条件

array 建表字段类型

create table tab_array(a array<int>, b array<string>)

row format delimited fields terminated by '/t'

collection items terminated by ',';

map建表字段类型

create table tab_array(name string,

info map<string,string>)

row format delimited fields terminated by '/t'

collection items terminated by ','

map keys terminated by ':';

示例:

load data local inpatch '/home/hadoop/hivetemp/tab_map.txt' overwrite into table tab_map;

insert into table tab_map sekect name,map('name',name,'ip',ip) from tab_ext;

shell脚本执行

hive -S -e 'select country,count(*) from 指定数据库.tab_ext' > /home/hadoop/hivetemp/e.txt;

自定义函数

hive> add jar /root/hiveDemo.jar;

Added [/root/hiveDemo.jar] to class path

Added resources: [/root/hiveDemo.jar]

hive> create temporary function aabbcc as 'com.cjp.FuckYou';

OK

Time taken: 0.006 seconds

hive> select aabbcc(num),name,sex from b1;

OK

15224992222 河南周口 张三 男

18339115522 河南焦作 李四 女

15122223312 河南驻马店 小晚报 男

22114141241 五大 女

Time taken: 0.098 seconds, Fetched: 4 row(s)

jar包中的类:

package com.cjp;

import java.util.HashMap;

import java.util.Map;

import org.apache.hadoop.hive.ql.exec.UDF;

public class FuckYou extends UDF {

private static Map<String, String> map = new HashMap<>() ;

static {

map.put("1522", "河南周口");

map.put("1512", "河南驻马店");

map.put("1833", "河南焦作");

}

public String evaluate(String a) {

String result = map.get(a.substring(0,4))==null?(a+" "):(a+" "+map.get(a.substring(0, 4)));

return result;

}

}

数据:

[root@namenode ~]# cat data1.data

15224992222 张三 男

18339115522 李四 女

15122223312 小晚报 男

22114141241 五大 女

在hive中查询hadoop中所有文件:

hive>dfs -ls -R /

//加-R是显示所有文件路径

3206

3206

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?