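Table of contents

- I. Installing k8s with kubeadm

- II. Uninstalling k8s

- III. Installing other components

- 1. Installing NFS

- 2. Installing NFS dynamic provisioning

- 3. Installing Helm

- 4. Installing Prometheus and Grafana with charts (not recommended because it is hard to control; see my separate write-up on the plain installation method)

- 5. Installing Harbor

- 6. Installing Jenkins

- 7. Installing Kuboard with Docker

- 8. Installing GitLab on k8s

- 9. Installing standalone MySQL

- 10. Installing MinIO

- 11. Installing RabbitMQ

- 12. Installing Redis

- 13. Installing Nacos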

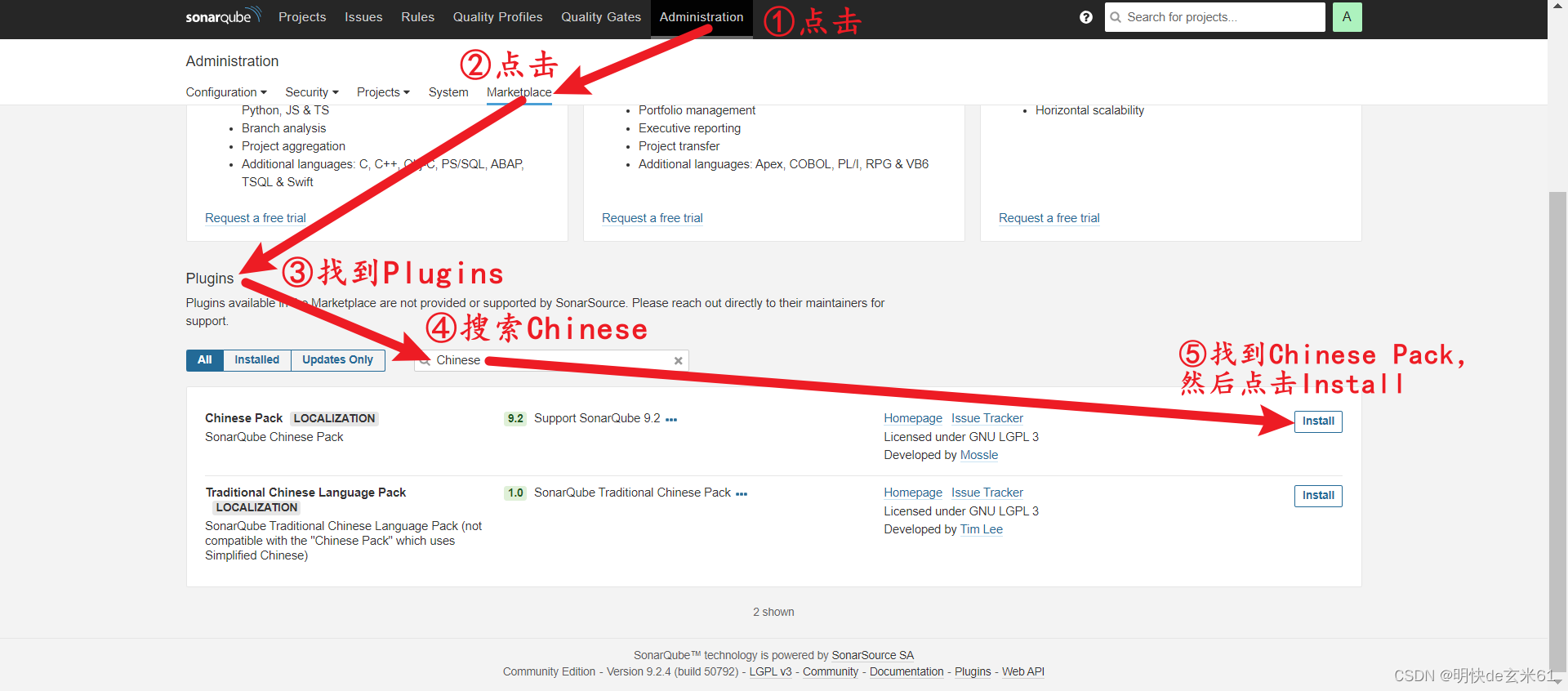

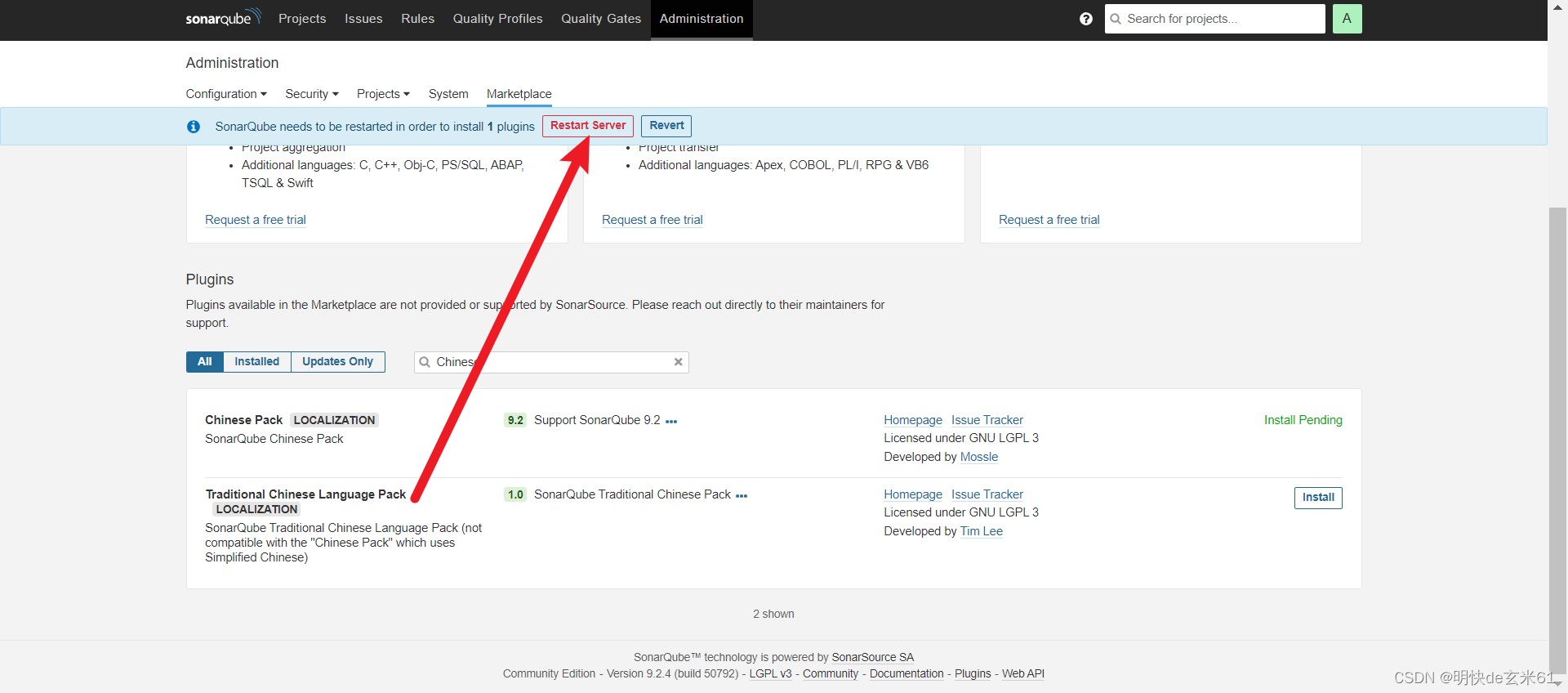

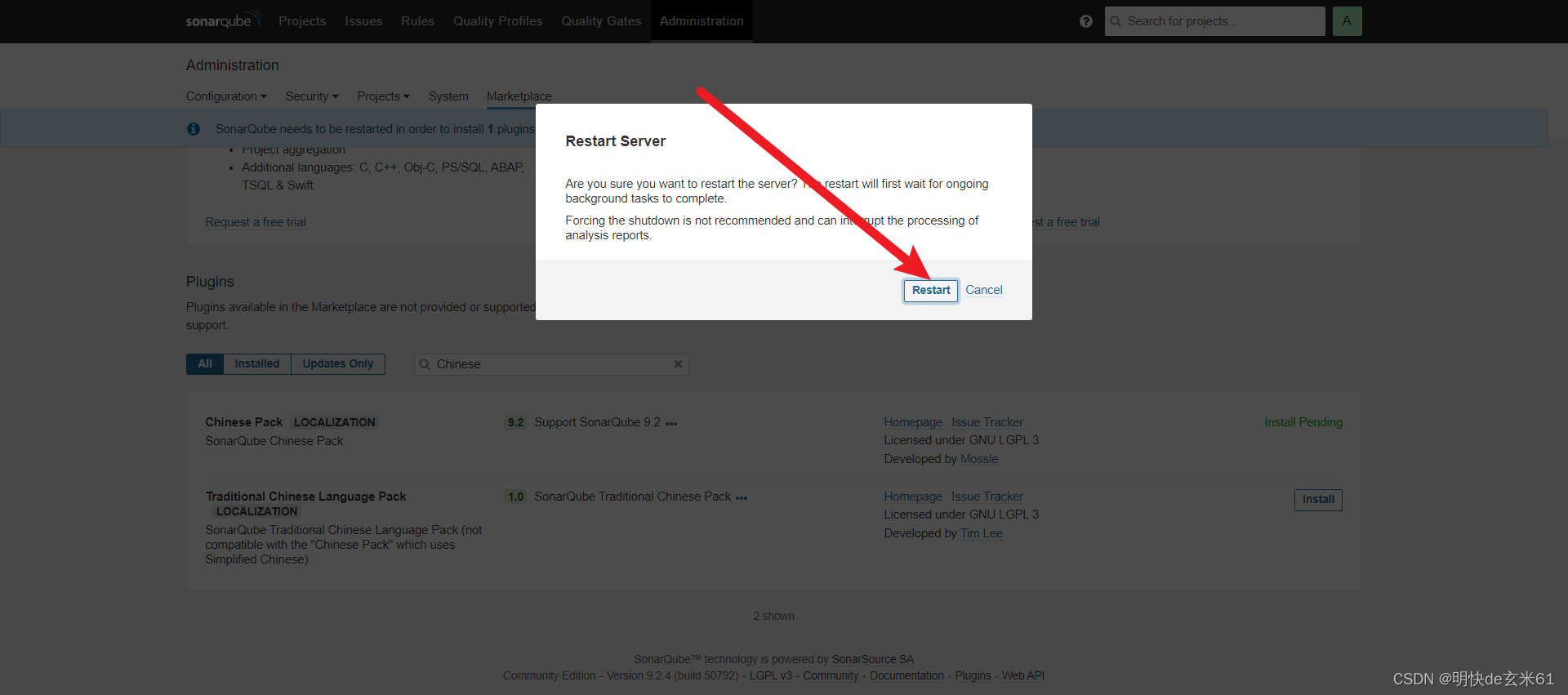

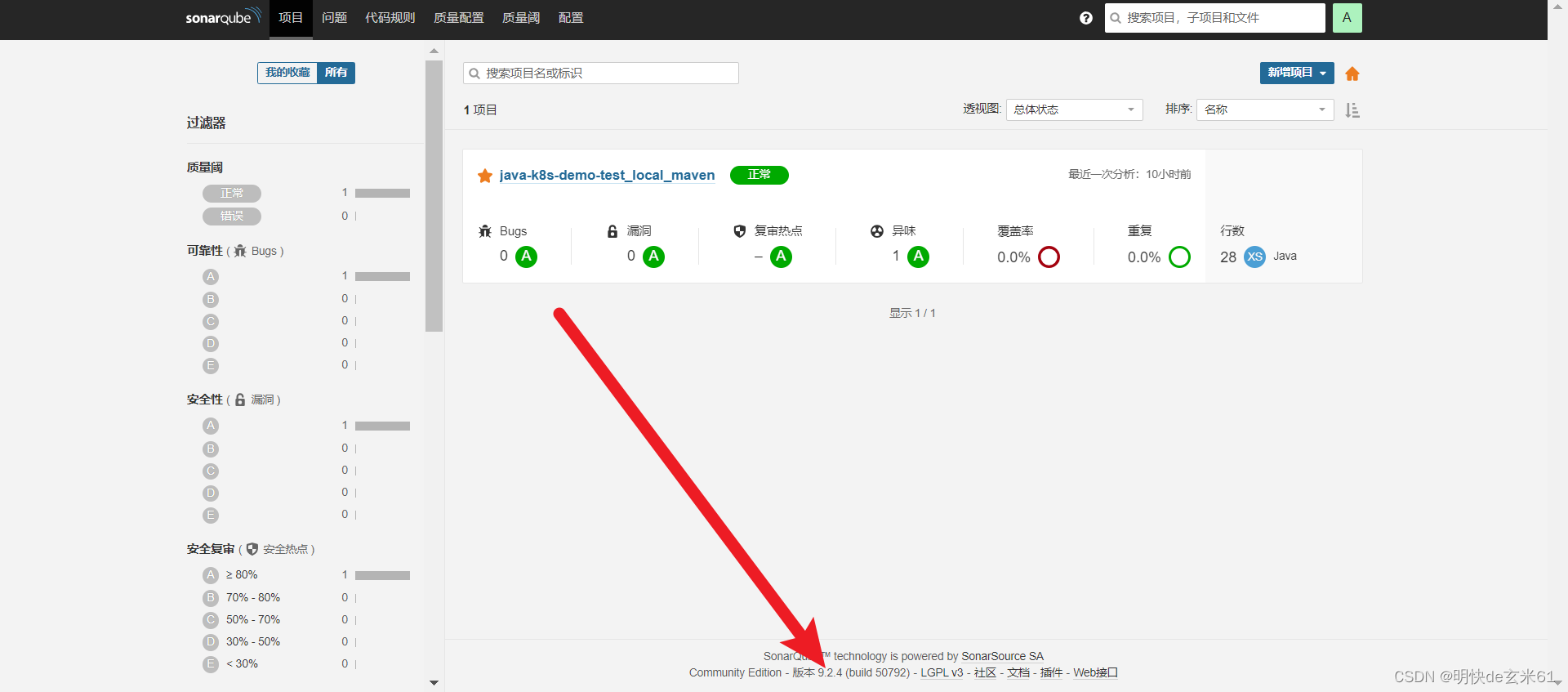

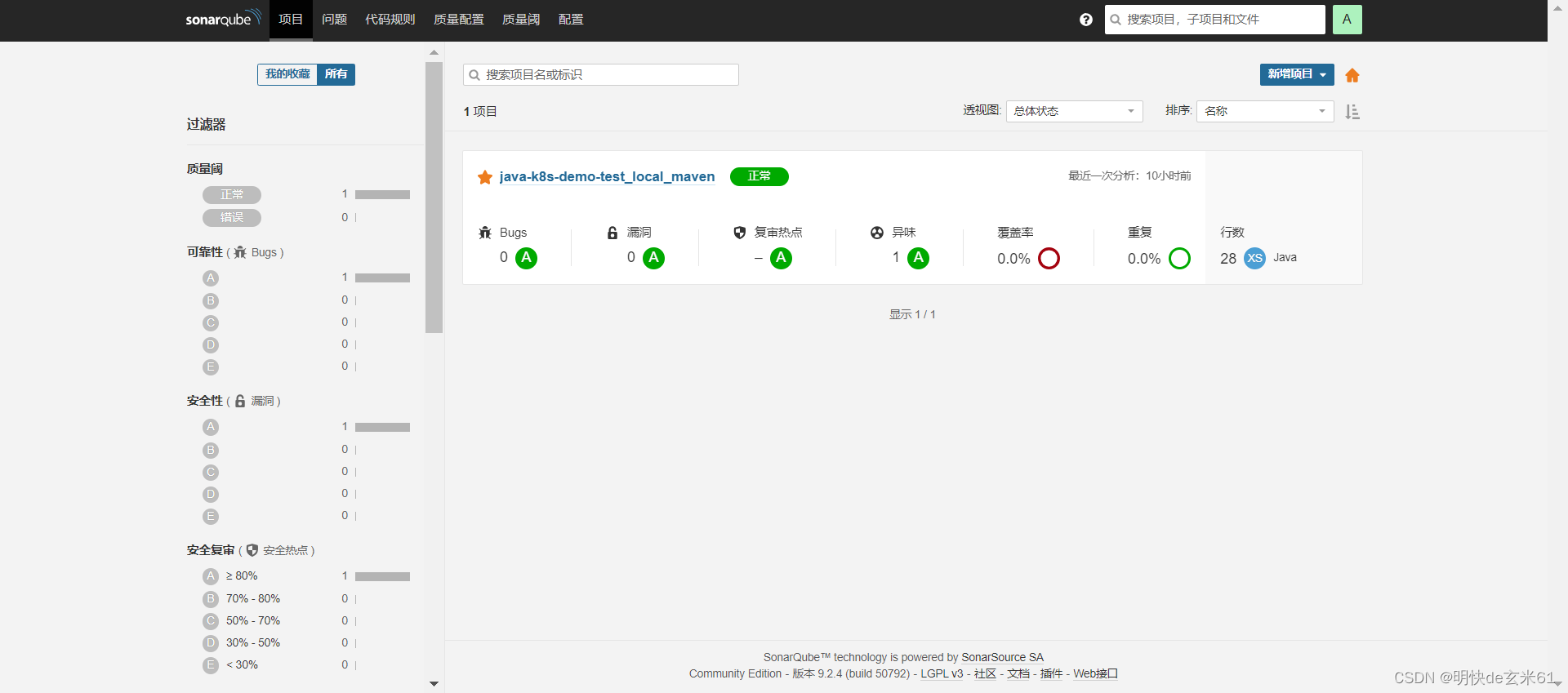

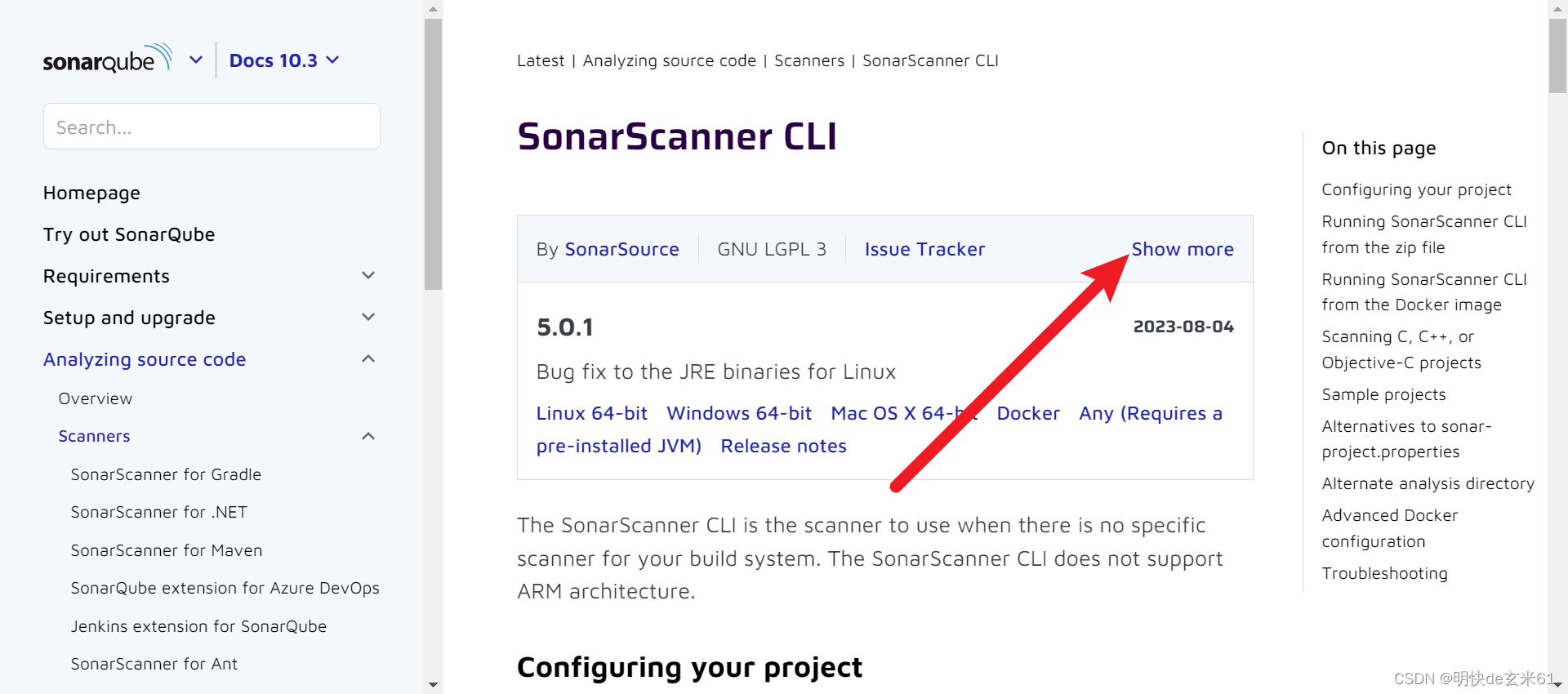

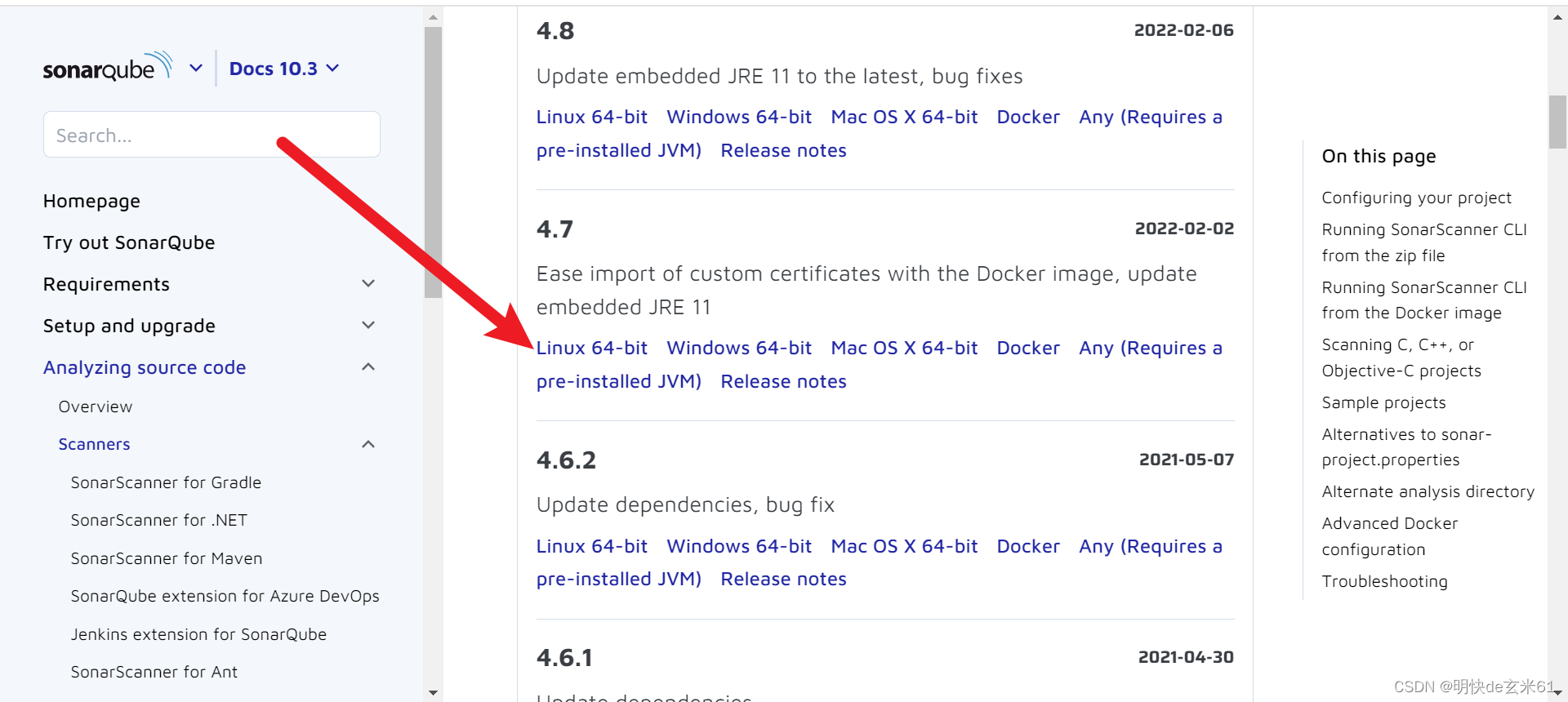

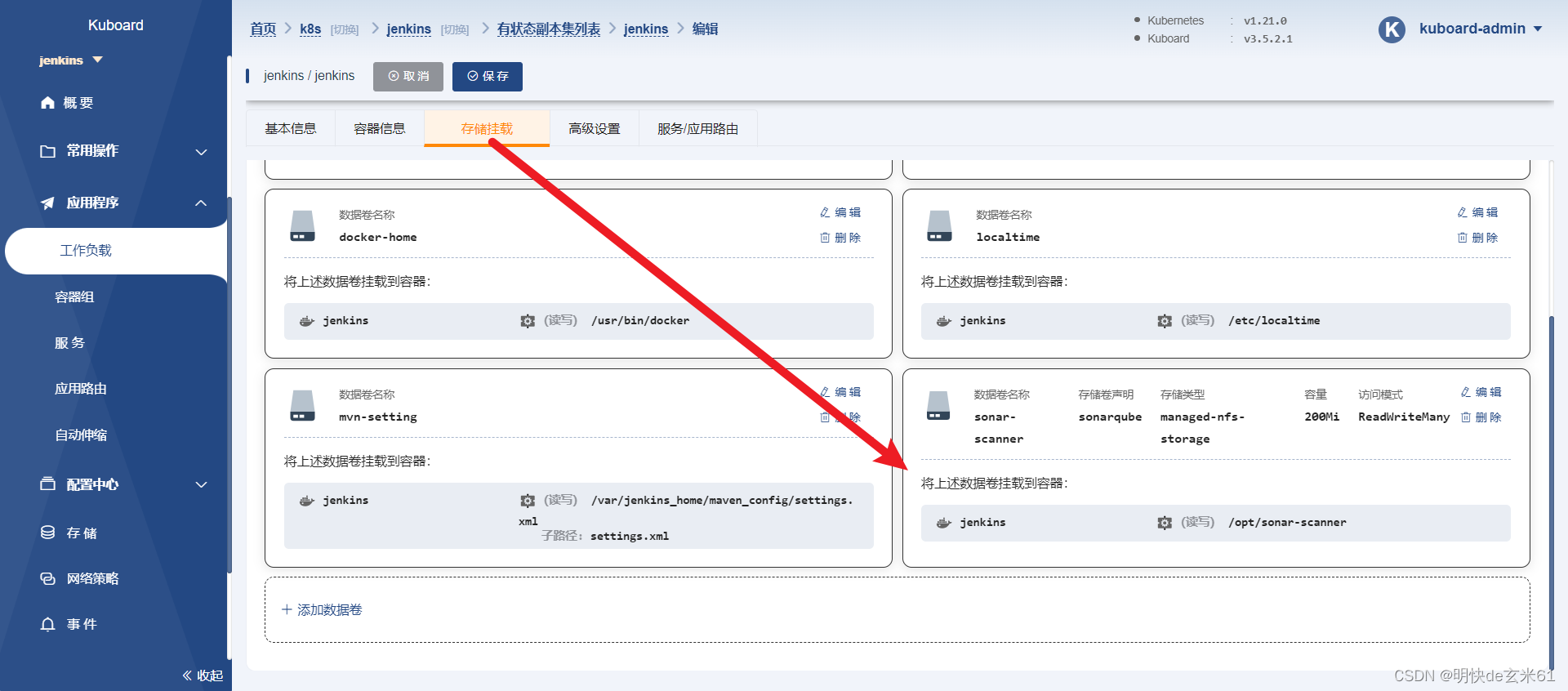

- 14. Installing Sonar

- 15. Installing standalone MongoDB

- 16. Installing standalone Elasticsearch

- 17. Installing Nexus

- 18. Installing ONLYOFFICE

- 19. Common abbreviations

- 20. Common commands

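I. Installing k8s with kubeadm

1. Prepare the virtual machines

Two virtual machines are enough: one for the master node and one for the worker node. If you don't know how to install them, see "Installing CentOS in VMware". CentOS 7 is recommended.

After installation, configure a static IP and the system language. Switch to the root user first (see "How to switch to the superuser/root user in CentOS"), then configure both by following the corresponding articles.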

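2. Install the prerequisites (note: run on all virtual machines)

2.1 Basic environment

Set the host name (note: run this on each VM separately. The name must not be localhost and must be different on every VM, e.g. k8s-01, k8s-02, ...):

```shell
# e.g.: hostnamectl set-hostname k8s-01
hostnamectl set-hostname <hostname>
```

Then apply the remaining settings by copying and running the commands below (note: run the same commands on all VMs):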

```shell
#########################################################################
# Disable the firewall. On a cloud server, open the required ports in the security group instead:
# https://kubernetes.io/zh/docs/setup/production-environment/tools/kubeadm/install-kubeadm/#check-required-ports
systemctl stop firewalld
systemctl disable firewalld

# Check the result of the hostname change
hostnamectl status

# Make the hostname resolvable
echo "127.0.0.1 $(hostname)" >> /etc/hosts

# Disable SELinux
sed -i 's/enforcing/disabled/' /etc/selinux/config
setenforce 0

# Disable swap
swapoff -a
sed -ri 's/.*swap.*/#&/' /etc/fstab

# Let iptables see bridged traffic
# https://kubernetes.io/zh/docs/setup/production-environment/tools/kubeadm/install-kubeadm/#%E5%85%81%E8%AE%B8-iptables-%E6%A3%80%E6%9F%A5%E6%A1%A5%E6%8E%A5%E6%B5%81%E9%87%8F
## Load br_netfilter: the net.bridge.* keys below only exist once it is loaded
modprobe br_netfilter
## Load it again at every boot
echo br_netfilter > /etc/modules-load.d/k8s.conf
## Confirm that it is loaded
lsmod | grep br_netfilter

## Modify the configuration
##### Use this configuration, not the one from the course
# Pass bridged IPv4 traffic to the iptables chains by editing /etc/sysctl.conf
# Update the keys if they already exist
sed -i "s#^net.ipv4.ip_forward.*#net.ipv4.ip_forward=1#g" /etc/sysctl.conf
sed -i "s#^net.bridge.bridge-nf-call-ip6tables.*#net.bridge.bridge-nf-call-ip6tables=1#g" /etc/sysctl.conf
sed -i "s#^net.bridge.bridge-nf-call-iptables.*#net.bridge.bridge-nf-call-iptables=1#g" /etc/sysctl.conf
sed -i "s#^net.ipv6.conf.all.disable_ipv6.*#net.ipv6.conf.all.disable_ipv6=1#g" /etc/sysctl.conf
sed -i "s#^net.ipv6.conf.default.disable_ipv6.*#net.ipv6.conf.default.disable_ipv6=1#g" /etc/sysctl.conf
sed -i "s#^net.ipv6.conf.lo.disable_ipv6.*#net.ipv6.conf.lo.disable_ipv6=1#g" /etc/sysctl.conf
sed -i "s#^net.ipv6.conf.all.forwarding.*#net.ipv6.conf.all.forwarding=1#g" /etc/sysctl.conf
# The keys may not exist yet, so append them as well
echo "net.ipv4.ip_forward = 1" >> /etc/sysctl.conf
echo "net.bridge.bridge-nf-call-ip6tables = 1" >> /etc/sysctl.conf
echo "net.bridge.bridge-nf-call-iptables = 1" >> /etc/sysctl.conf
echo "net.ipv6.conf.all.disable_ipv6 = 1" >> /etc/sysctl.conf
echo "net.ipv6.conf.default.disable_ipv6 = 1" >> /etc/sysctl.conf
echo "net.ipv6.conf.lo.disable_ipv6 = 1" >> /etc/sysctl.conf
echo "net.ipv6.conf.all.forwarding = 1" >> /etc/sysctl.conf
# Apply the settings
sysctl -p
```
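If a key already exists in /etc/sysctl.conf, the sed-then-echo pattern above leaves two copies of it. This is harmless because both copies carry the same value, but the file gets messier with every re-run. Below is a minimal sketch of an idempotent alternative, assuming bash and awk. set_sysctl is a hypothetical helper name, not part of any tool. It writes the key once, keeps the first line for the key (updated to the new value), and drops any later duplicates:

```shell
#!/usr/bin/env bash
# Sketch: set "key = value" in a sysctl-style file without creating duplicates.
# The first line for the key is rewritten, later duplicates are dropped, and
# the key is appended if it is missing. set_sysctl is a hypothetical name.
set_sysctl() {
  local key=$1 value=$2 file=${3:-/etc/sysctl.conf} tmp
  tmp=$(mktemp)
  awk -v k="$key" -v v="$value" '
    {
      split($0, kv, "=")
      name = kv[1]
      gsub(/^[ \t]+|[ \t]+$/, "", name)     # trim spaces around the key
      if (name == k && index($0, "=") > 0) {
        if (!done) print k " = " v          # rewrite the first occurrence
        done = 1
        next                                # drop later duplicates
      }
      print
    }
    END { if (!done) print k " = " v }      # append if the key was missing
  ' "$file" > "$tmp" && cat "$tmp" > "$file" # cat keeps the file owner/mode
  rm -f "$tmp"
}

# Usage on a node, then apply with: sysctl -p
#   for kv in net.ipv4.ip_forward=1 \
#             net.bridge.bridge-nf-call-iptables=1 \
#             net.bridge.bridge-nf-call-ip6tables=1; do
#     set_sysctl "${kv%%=*}" "${kv#*=}"
#   done
```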

2.2 Docker environment (run the following on all VMs)

```shell
# Quote the pattern so the shell does not expand it against local files
sudo yum remove 'docker*'
sudo yum install -y yum-utils
# Configure the docker yum repository
sudo yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
# Install docker 19.03.9
yum install -y docker-ce-3:19.03.9-3.el7.x86_64 docker-ce-cli-3:19.03.9-3.el7.x86_64 containerd.io
# Start docker now, then enable it at boot
systemctl start docker
systemctl enable docker
# Configure a registry mirror (the NetEase mirror)
sudo mkdir -p /etc/docker
sudo tee /etc/docker/daemon.json <<-'EOF'
{
  "registry-mirrors": ["http://hub-mirror.c.163.com"]
}
EOF
sudo systemctl daemon-reload
sudo systemctl restart docker
```

2.3 Install the core k8s components (run the same commands on all VMs)

```shell
# Configure the Kubernetes yum repository
cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
       http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
# Remove old versions
yum remove -y kubelet kubeadm kubectl
# List the versions available for installation
yum list kubelet --showduplicates | sort -r
# Install specific versions of kubelet, kubeadm and kubectl
yum install -y kubelet-1.21.0 kubeadm-1.21.0 kubectl-1.21.0
# Enable kubelet at boot and start it (it keeps restarting until kubeadm init/join runs; this is expected)
systemctl enable kubelet && systemctl start kubelet
```

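2.4 Import the images required by k8s

Import each image with docker load -i <image tar file>. The images are here:

Link: https://pan.baidu.com/s/17LEprW3CeEAQYC4Dn_Klxg?pwd=s5bt

Extraction code: s5bt

Note:

These images were uploaded to Alibaba Cloud by Lei Fengyang (atguigu). I put them on Baidu Netdisk so you can import them directly.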

2.5 Import the Calico images

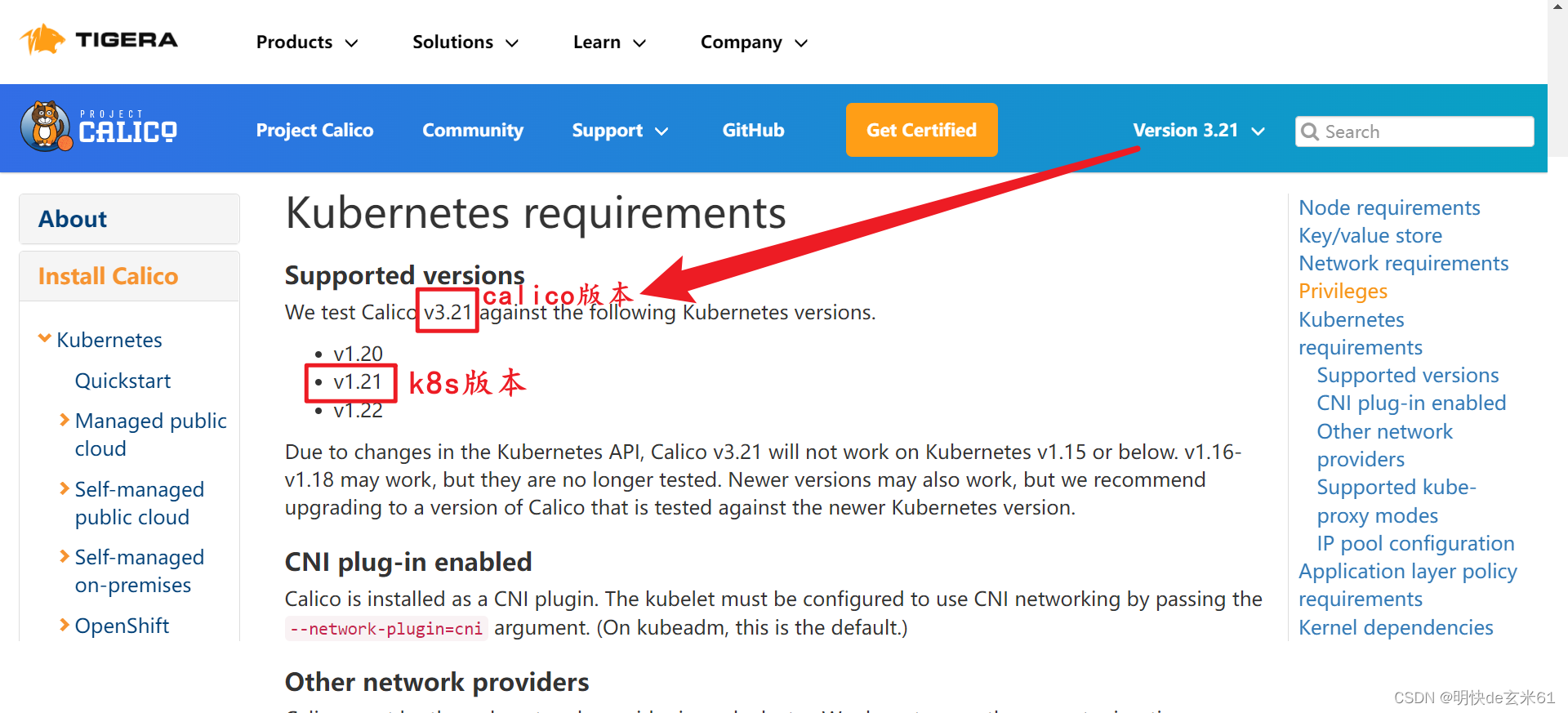

Since we use k8s 1.21.0, the page https://projectcalico.docs.tigera.io/archive/v3.21/getting-started/kubernetes/requirements shows that the matching Calico version is v3.21 (see the screenshot).

Import each image with docker load -i <image tar file>. The images are here:

Link: https://pan.baidu.com/s/122EoickH6jsSMJ8ECJ3P5g?pwd=vv8n

Extraction code: vv8n

3、初始化master节点(注意:只能在master节点虚拟机上执行)

3.1、确定master节点ip

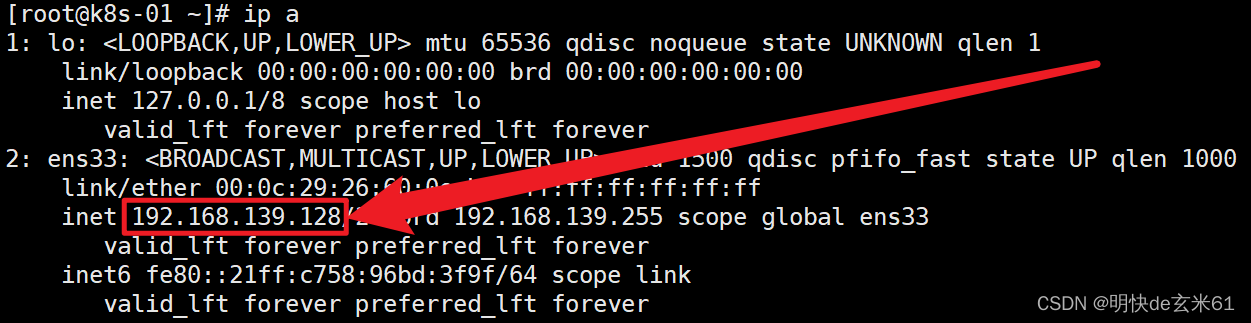

执行ip a就可以找到了,如下:

3.2、找到合适的service地址区间、pod地址区间

service地址区间、pod地址区间和master节点的ip只要不重复就可以了,由于我的是192.168.139.128,所以我选择的两个地址区间如下:

service地址区间:10.98.0.0/16(注意:16代表前面XX.XX是固定的)

pod地址区间:10.99.0.0/16(注意:16代表前面XX.XX是固定的)
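You can double-check that the chosen ranges overlap neither each other nor the node network. Below is a pure-bash sketch for that. The helper names are hypothetical, and a single node IP is passed as a /32:

```shell
#!/usr/bin/env bash
# Sketch: check whether two IPv4 CIDR ranges overlap, using bash arithmetic only.
# ip_to_int, cidr_range and cidrs_overlap are hypothetical helper names.

# ip_to_int 192.168.139.128 -> the address as a 32-bit integer
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# cidr_range 10.98.0.0/16 -> "first last" (both as integers)
cidr_range() {
  local ip=${1%/*} bits=${1#*/} n mask first last
  n=$(ip_to_int "$ip")
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  first=$(( n & mask ))
  last=$(( first | (~mask & 0xFFFFFFFF) ))
  echo "$first $last"
}

# cidrs_overlap A B -> exit status 0 if the two ranges share any address
cidrs_overlap() {
  local f1 l1 f2 l2
  read -r f1 l1 <<< "$(cidr_range "$1")"
  read -r f2 l2 <<< "$(cidr_range "$2")"
  (( f1 <= l2 && f2 <= l1 ))
}
```

For example, cidrs_overlap 10.98.0.0/16 10.99.0.0/16 returns a non-zero status (no overlap). By contrast, cidrs_overlap 10.96.0.0/12 10.99.0.0/16 returns 0, because 10.96.0.0/12 covers 10.96.0.0 through 10.111.255.255.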

3.3 Run kubeadm init

Note: replace the master node IP, the service CIDR and the pod CIDR with your own values (see above for how to find them).

```shell
kubeadm init \
  --apiserver-advertise-address=<master node IP> \
  --image-repository registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images \
  --kubernetes-version v1.21.0 \
  --service-cidr=<service CIDR> \
  --pod-network-cidr=<pod CIDR>
```

For example, mine is:

```shell
kubeadm init \
  --apiserver-advertise-address=192.168.139.128 \
  --image-repository registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images \
  --kubernetes-version v1.21.0 \
  --service-cidr=10.98.0.0/16 \
  --pod-network-cidr=10.99.0.0/16
```

Note:

registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images in the command is the prefix of the k8s images. These images come from Lei Fengyang's (atguigu) Alibaba Cloud image repository. I put them on Baidu Netdisk so you can download and import them directly.

3.4、根据初始化结果来执行操作

下面是我的初始化结果:

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

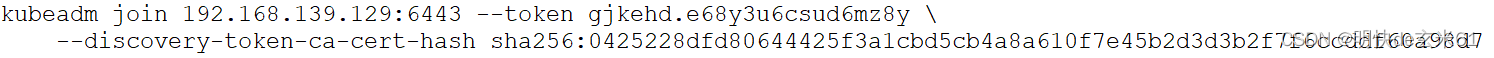

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.139.129:6443 --token gjkehd.e68y3u6csud6mz8y \

--discovery-token-ca-cert-hash sha256:0425228dfd80644425f3a1cbd5cb4a8a610f7e45b2d3d3b2f7f6ccddf60a98d7

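3.4.1 Copy the kubeconfig file

```shell
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
```

3.4.2 Export the environment variable

```shell
# Only affects the current shell session
export KUBECONFIG=/etc/kubernetes/admin.conf
```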

3.4.3、导入calico.yaml

链接:https://pan.baidu.com/s/1EWJNtWQuekb0LYXernjWew?pwd=70ku

提取码:70ku

说明:

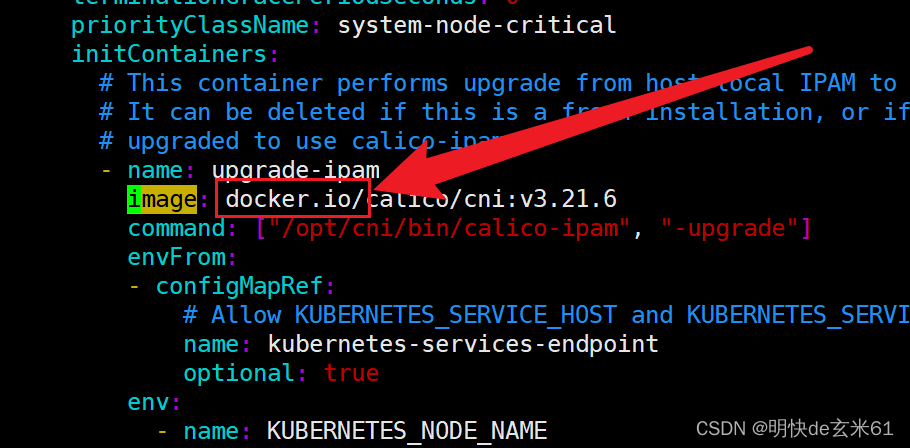

虽然我已经给大家提供了calico.yaml文件,但是还想和大家说一下该文件的来源,首先在2.5、导入calico镜像中可以知道,我们本次使用的calico版本是v3.21,那么可以在linux上使用wget https://docs.projectcalico.org/v3.21/manifests/calico.yaml --no-check-certificate指令下载(注意链接中的版本号是v3.21),其中--no-check-certificate代表非安全方式下载,必须这样操作,不然无法下载

另外对于calico.yaml来说,我们将所有image的值前面的docker.io/去掉了,毕竟我们已经将calico镜像导入了,就不用去docker.io下载镜像了

下一个需要修改的位置就是3.4.4、修改calico.yaml里面的值了,这个直接跟着下面修改即可

3.4.4、修改calico.yaml(注意:必须修改)

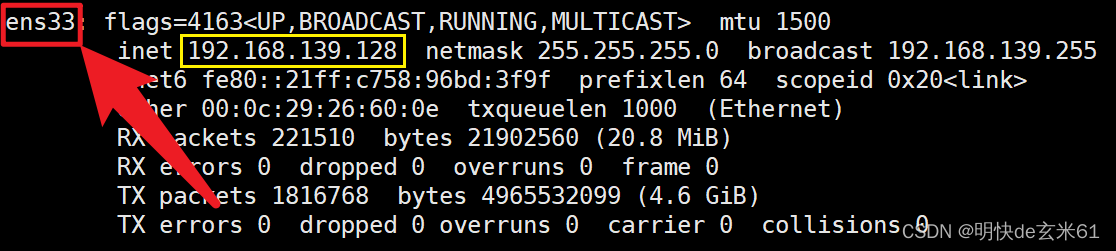

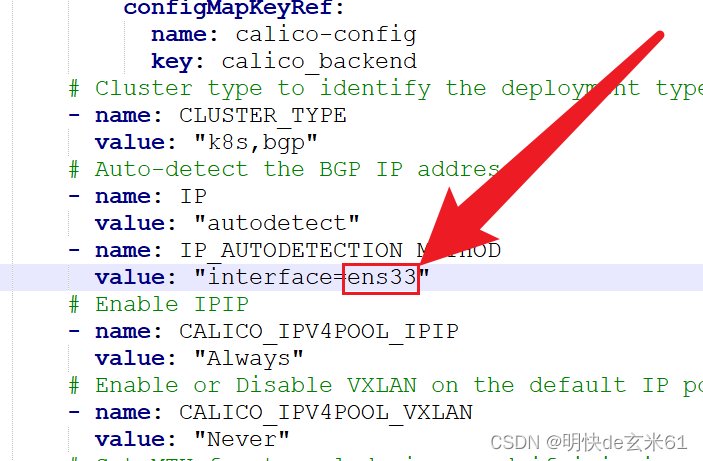

根据ifconfig找到我们所用ip前面的内容,比如我的是ens33,如下:

根据你ip前面的结果来修改文件中interface的值,如下:

其实下面这些指令都是我自己添加的,原来的calico.yaml中是没有的,添加的原因是执行calico.yaml报错了,然后根据这篇calico/node is not ready来添加的,添加之后在执行yaml文件,然后calico的所有容器都运行正常了

- name: IP_AUTODETECTION_METHOD

value: "interface=ens33"
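Instead of reading the interface name off ifconfig by eye, you can derive it from the node IP. Below is a sketch that reads ip -o -4 addr show output, which has one address per line with the interface name in the second field. iface_for_ip is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Sketch: print the interface that carries a given IPv4 address.
# Input on stdin is `ip -o -4 addr show` output, one address per line, e.g.
#   2: ens33    inet 192.168.139.128/24 brd 192.168.139.255 scope global ens33 ...
# iface_for_ip is a hypothetical helper name.
iface_for_ip() {
  awk -v ip="$1" '$3 == "inet" { split($4, a, "/"); if (a[1] == ip) { print $2; exit } }'
}

# Usage on a node (the IP is this guide's master IP):
#   echo "interface=$(ip -o -4 addr show | iface_for_ip 192.168.139.128)"
```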

3.4.5 Apply calico.yaml

```shell
kubectl apply -f calico.yaml
```

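4. Initialize the worker nodes (note: run only on the worker VMs)

Take the last command in the master's init output from 3.4 (use your own output, of course) and run it on every worker node.

The token in that command may have expired (kubeadm tokens are valid for 24 hours by default). In that case, run kubeadm token create --print-join-command on the master VM to get a fresh command, then run it on all worker VMs.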
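If you saved the init output, for example with kubeadm init ... | tee kubeadm-init.log (the log file name is an assumption), you can pull the join command out of it instead of copying it by hand. Below is a sketch that assumes the two-line layout shown in 3.4. When the token has expired, kubeadm token create --print-join-command is still the way to go:

```shell
#!/usr/bin/env bash
# Sketch: print the worker join command from a saved `kubeadm init` log.
# The command spans two lines joined by a trailing backslash; they are merged
# into one line. extract_join_cmd is a hypothetical helper name.
extract_join_cmd() {
  awk '
    /^[ \t]*kubeadm join / { grab = 1 }
    grab {
      line = $0
      sub(/^[ \t]+/, "", line)            # drop indentation
      if (line ~ /\\$/) {                 # continued on the next line
        sub(/[ \t]*\\$/, "", line)
        printf "%s ", line
        next
      }
      print line                          # last line of the command
      exit
    }
  ' "$1"
}

# Usage on the master node (the log file name is an example):
#   extract_join_cmd kubeadm-init.log
```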

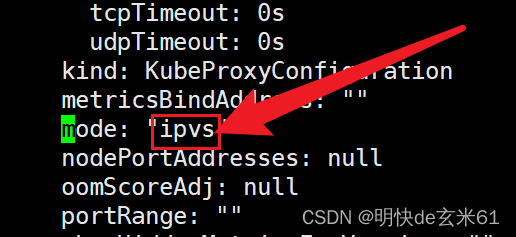

5、设置ipvs模式(推荐执行,但是不执行也没影响;注意:只能在master节点虚拟机上执行)

5.1、设置ipvs模式

默认是iptables模式,但是这种模式在大集群中会占用很多空间,所以建议使用ipvs模式

首先执行kubectl edit cm kube-proxy -n kube-system,然后输入/mode,将mode的值设置成ipvs,保存退出即可,如下:

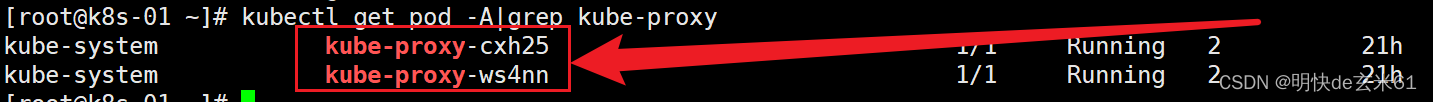

5.2、找到kube-proxy的pod

kubectl get pod -A|grep kube-proxy

例如pod如下:

5.3、删除kube-proxy的pod

kubectl delete pod pod1名称 pod2名称…… -n kube-system

根据上面的命令,可以找到kube-proxy的pod名称,比如我上面的就是kube-proxy-cxh25、kube-proxy-ws4nn,那么删除命令就是:kubectl delete pod kube-proxy-cxh25 kube-proxy-ws4nn -n kube-system,由于k8s拥有自愈能力,所以proxy删除之后就会重新拉起一个pod
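To avoid copying pod names by hand, you can filter them out of the kubectl get pod -A output. Below is a sketch. kube_proxy_pods is a hypothetical helper name, and it assumes the default column layout (NAMESPACE first, NAME second):

```shell
#!/usr/bin/env bash
# Sketch: list kube-proxy pod names from `kubectl get pod -A` output on stdin
# (columns: NAMESPACE NAME READY STATUS RESTARTS AGE).
# kube_proxy_pods is a hypothetical helper name.
kube_proxy_pods() {
  awk '$1 == "kube-system" && $2 ~ /^kube-proxy-/ { print $2 }'
}

# Usage on the master node (restarts every kube-proxy pod):
#   kubectl delete pod $(kubectl get pod -A | kube_proxy_pods) -n kube-system
# kubeadm labels these pods k8s-app=kube-proxy, so a label selector also works:
#   kubectl -n kube-system delete pod -l k8s-app=kube-proxy
```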

5.4、查看pod启动情况

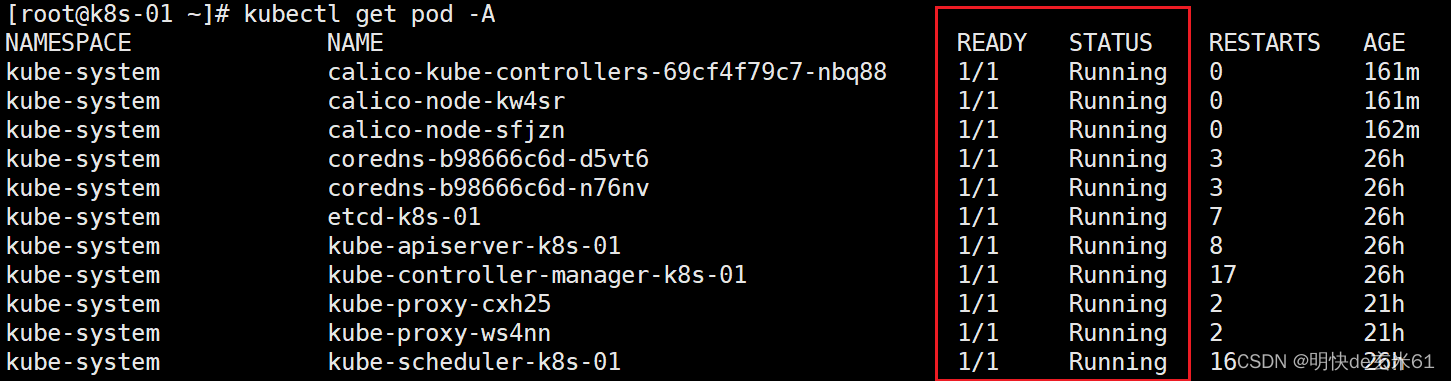

使用kubectl get pod -A命令即可,我们只看NAMESPACE下面的kube-system,只要看到所有STATUS都是Running,并且READY都是1/1就可以了,如果pod一直不满足要求,那就可以使用kubectl describe pod pod名称 -n kube-system查看一下pod执行进度,如果把握不准,可以使用reboot命令对所有虚拟机执行重启操作,最终结果是下面这样就可以了
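Checking the STATUS and READY columns by eye gets tedious as the number of pods grows. Below is a sketch that prints only the pods that are not yet Running or not fully ready. It assumes the default kubectl get pod -A columns (NAMESPACE NAME READY STATUS ...), and not_ready_pods is a hypothetical name:

```shell
#!/usr/bin/env bash
# Sketch: print pods that are not Running or whose READY count is not full,
# reading `kubectl get pod -A` output on stdin. Optional $1 filters by namespace.
# Completed Job pods are reported too, so judge those by hand.
# not_ready_pods is a hypothetical helper name.
not_ready_pods() {
  awk -v ns="${1:-}" '
    NR > 1 && (ns == "" || $1 == ns) {
      split($3, r, "/")                  # READY is "ready/total", e.g. 1/1
      if ($4 != "Running" || r[1] != r[2]) print $1 "/" $2, $3, $4
    }'
}

# Usage: kubectl get pod -A | not_ready_pods kube-system
# Empty output means every kube-system pod is Running and fully ready.
```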

6、部署k8s-dashboard(注意:只能在master节点虚拟机上执行)

6.1、下载并执行recommended.yaml

6.1.1、下载recommended.yaml

链接:https://pan.baidu.com/s/1rNnCUa7B7GaX2SFjiPgZTQ?pwd=z2zp

提取码:z2zp

说明:

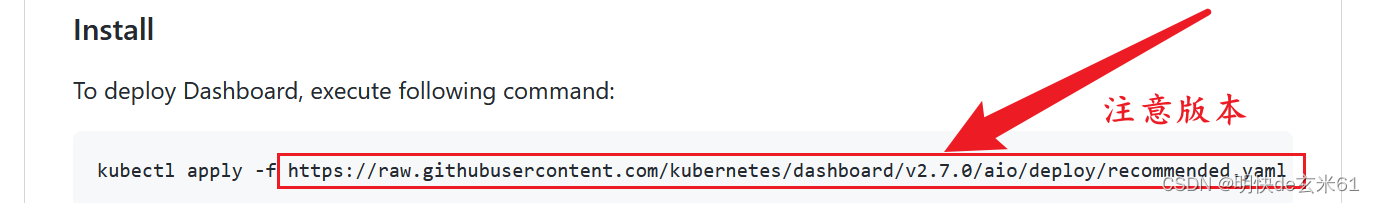

该yaml来自于https://github.com/kubernetes/dashboard中的该位置:

直接通过wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.2.0/aio/deploy/recommended.yaml下载该yaml即可

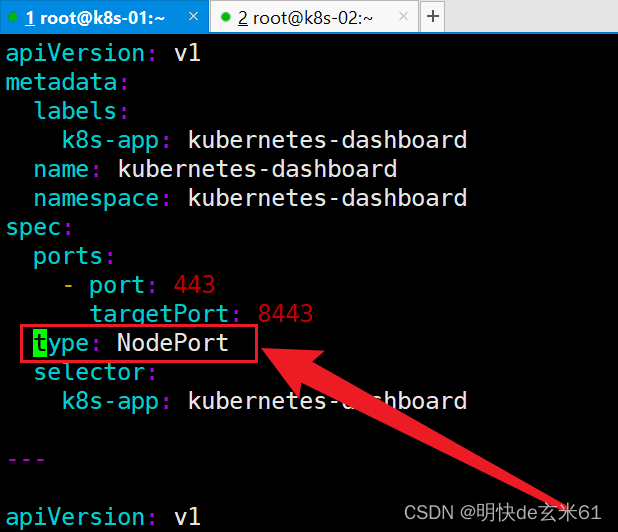

由于我们通过浏览器直接访问kubernetes dashboard,所以还需要在yaml文件中添加type:NodePort,如下:

然后直接执行kubectl apply -f recommended.yaml即可

6.1.2、执行recommended.yaml

kubectl apply -f recommended.yaml

6.2、下载并执行dashboard-admin.yaml

6.2.1、下载dashboard-admin.yaml

链接:https://pan.baidu.com/s/14upSiYdrZaw5EVFWRDNswg?pwd=co3g

提取码:co3g

说明:

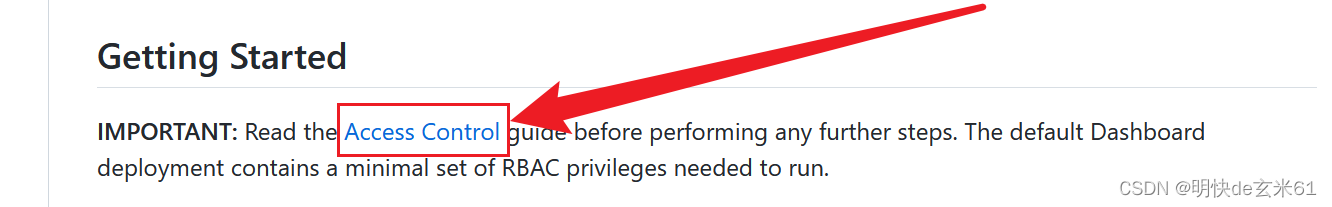

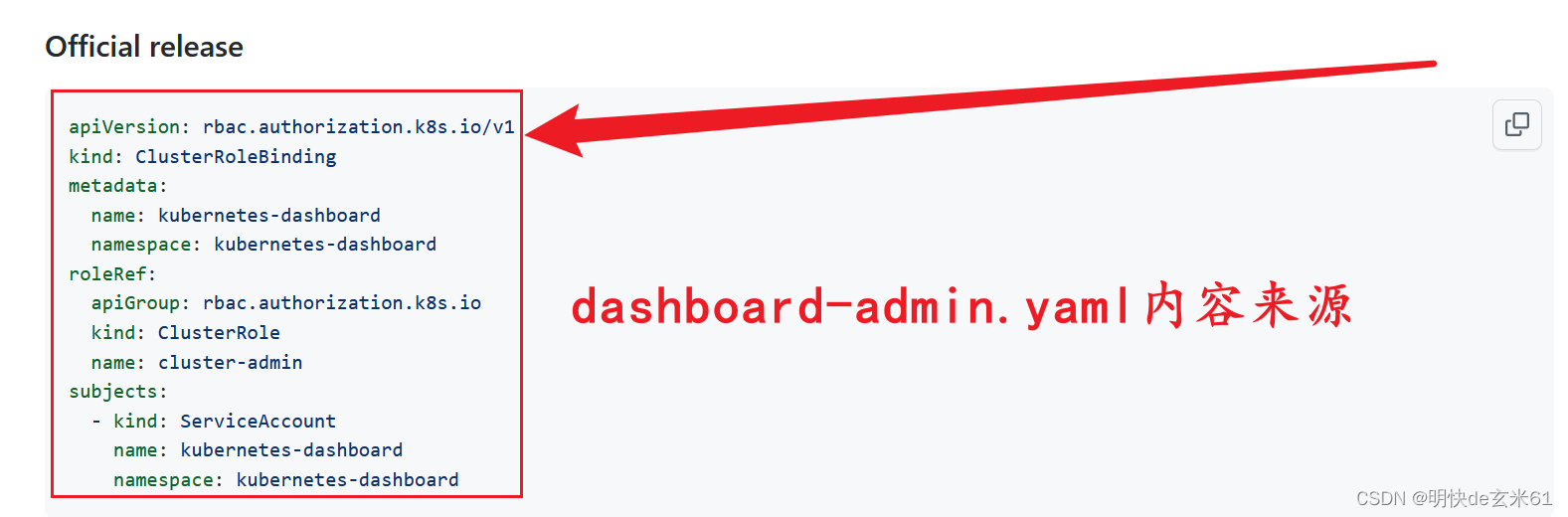

该yaml来自于https://github.com/kubernetes/dashboard中的该位置:

进入Access Control链接之后,找到下列位置即可,复制到dashboard-admin.yaml文件中即可

6.2.2、执行dashboard-admin.yaml

# 删除原有用户,避免启动报错

kubectl delete -f dashboard-admin.yaml

# 添加新用户

kubectl apply -f dashboard-admin.yaml

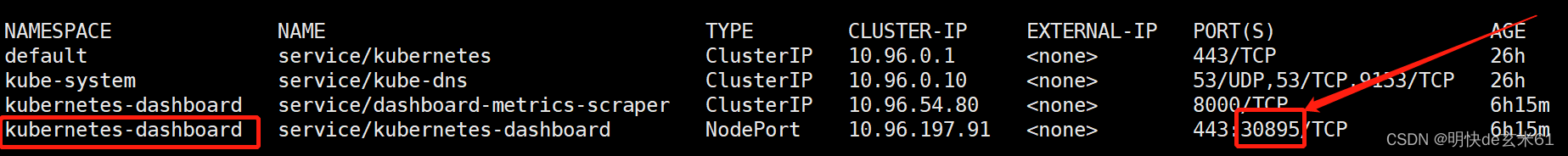

6.3、找到k8s-dashboard访问端口

使用kubectl get all -A命令即可,找到:

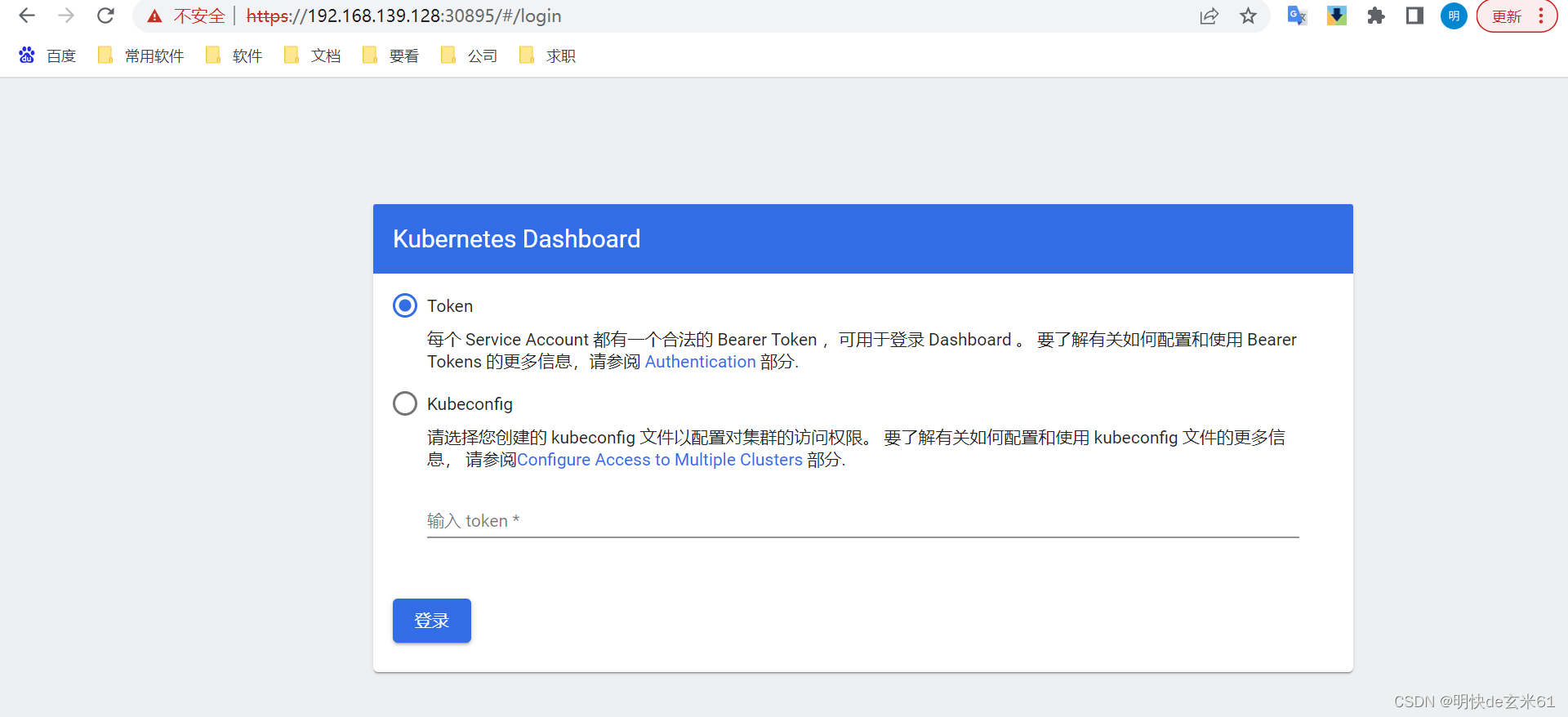

6.4、访问k8s-dashboard

直接在浏览器上根据你的虚拟机ip和上述端口访问即可,如下:

我们需要获取token,指令如下:

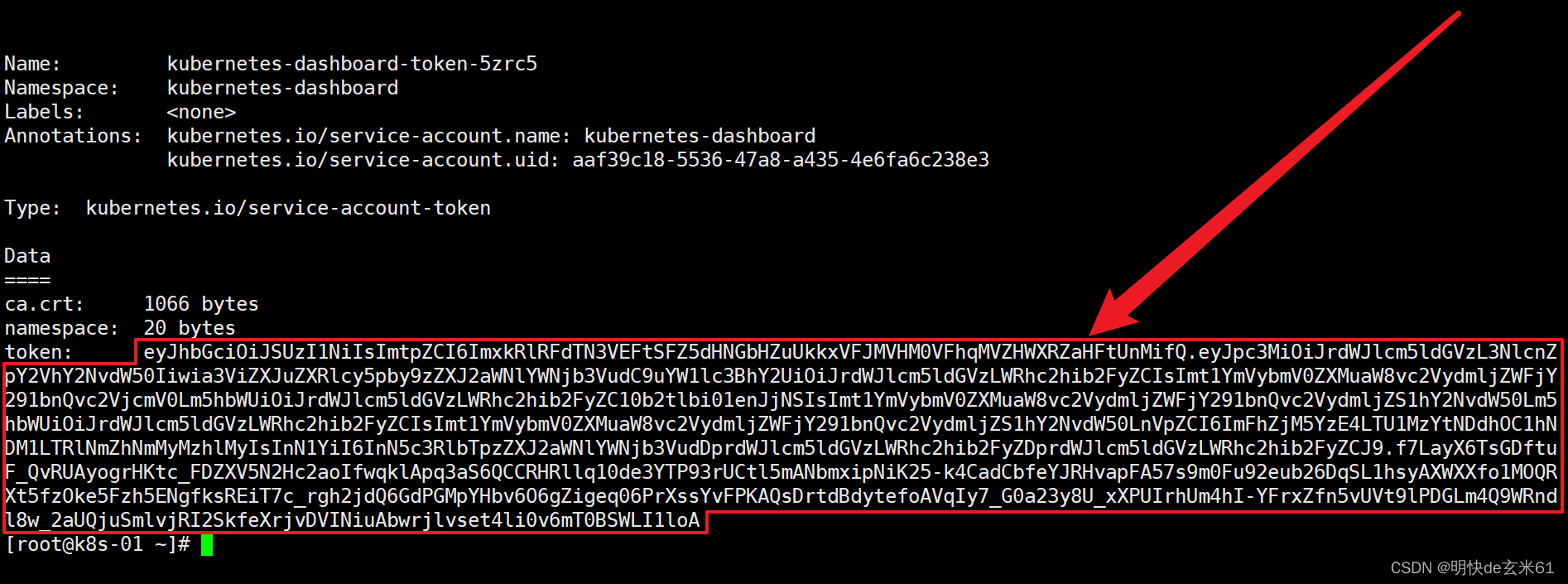

kubectl -n kubernetes-dashboard describe secret $(kubectl -n kubernetes-dashboard get secret | grep admin-user | awk '{print $1}')

复制红框框中的内容输入到文本框中,然后点击登录按钮即可,如下:

然后k8s-dashboard首页如下:
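The describe output contains several fields besides the token. Below is a sketch that prints just the token value (extract_token is a hypothetical name). Alternatively, kubectl -n kubernetes-dashboard get secret <secret name> -o jsonpath='{.data.token}' | base64 -d reads the same value directly:

```shell
#!/usr/bin/env bash
# Sketch: print only the token value from `kubectl describe secret` output
# on stdin, so it can be copied without the surrounding fields.
# extract_token is a hypothetical helper name.
extract_token() {
  awk '$1 == "token:" { print $2; exit }'
}

# Usage on the master node:
#   kubectl -n kubernetes-dashboard describe secret \
#     $(kubectl -n kubernetes-dashboard get secret | grep admin-user | awk '{print $1}') | extract_token
```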

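II. Uninstalling k8s

1. On the master node only

```shell
# Must be removed, otherwise you run into the problem described in https://www.cnblogs.com/lfl17718347843/p/14122407.html
rm -rf $HOME/.kube
```

2. On both the master and the worker nodes

```shell
# Stop kubelet
systemctl stop kubelet.service
# Reset the node configuration; type y and press Enter when prompted
kubeadm reset
# Remove the management components
yum erase -y kubelet kubectl kubeadm kubernetes-cni
# Restart docker
systemctl restart docker
```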

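III. Installing other components

1. Installing NFS

1.1 Install NFS on the master node (any other node works too)

```shell
# Install the nfs-utils package
yum install -y nfs-utils
# Create the shared directory
mkdir -p /nfs/data
# Define which clients may access the share (this overwrites /etc/exports). * allows any IP.
# A CIDR restricts access: e.g. with my master IP 192.168.139.128, * could become 192.168.0.0/16
echo "/nfs/data/ *(insecure,rw,sync,no_root_squash)" > /etc/exports
# Enable the NFS services at boot and start them
systemctl enable rpcbind
systemctl enable nfs-server
systemctl start rpcbind
systemctl start nfs-server
# Re-export and check that the configuration took effect
exportfs -r
exportfs
```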

1.2、所有node节点安装nfs

// 安装nfs-utils工具包

yum install -y nfs-utils

// 检查 nfs 服务器端是否有设置共享目录,如果输出结果如下就是正常的:Export list for 192.168.139.128: /nfs/data *

showmount -e master节点所在服务器ip

// 创建nd共享master节点的/nfs/data目录

mkdir /nd

// 将node节点nfs目录和master节点nfs目录同步(说明:假设node节点的nfs目录是/nd,而master节点的nfs目录是/nfs/data)

mount -t nfs master节点所在服务器ip:/nfs/data /nd

// 测试nfs目录共享效果

比如在node节点的/nd中执行echo "111" > a.txt,然后就可以去master节点的/nfs/data目录中看下是否存在a.txt文件

// 开启自动挂载nfs目录(注意:先启动master节点虚拟机,然后在启动node节点虚拟机,如果node节点虚拟机先启动,除非重新启动,不然只能通过mount -t nfs master节点所在服务器ip:/nfs/data /nd指令进行手动挂载)

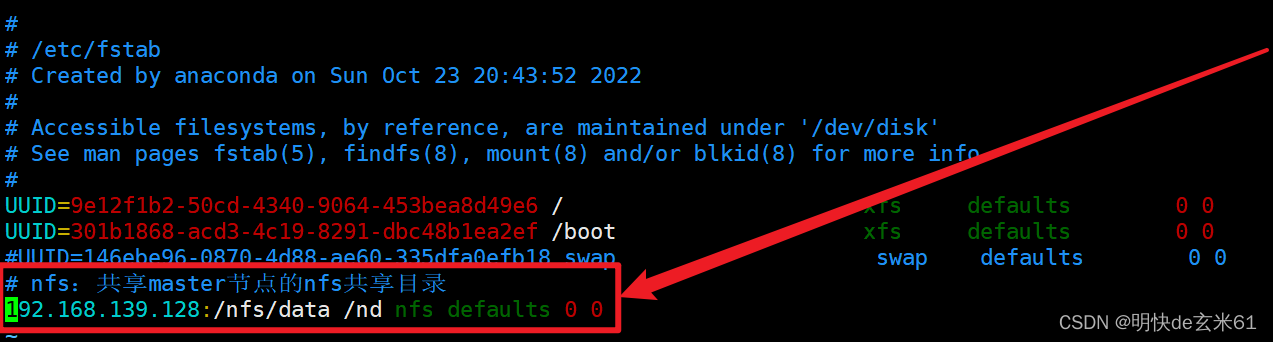

执行 vim /etc/fstab 命令打开fstab文件,然后将

master节点所在服务器ip:/nfs/data /nd nfs defaults 0 0

添加到文件中,最终结果如下图,其中ip、nfs共享目录、

当前节点的nfs目录都是我自己的,大家可以看着配置成自己的即可
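Editing /etc/fstab by hand can leave duplicate entries when you repeat the setup. Below is a sketch that appends the line only if it is not already present. add_fstab_entry is a hypothetical name, and the IP and paths are this guide's example values:

```shell
#!/usr/bin/env bash
# Sketch: append a line to fstab only if the identical line is not there yet.
# add_fstab_entry is a hypothetical helper name.
add_fstab_entry() {
  local entry=$1 file=${2:-/etc/fstab}
  grep -qxF -- "$entry" "$file" || printf '%s\n' "$entry" >> "$file"
}

# Usage on a worker node, then mount everything listed in fstab and check /nd:
#   add_fstab_entry "192.168.139.128:/nfs/data /nd nfs defaults 0 0"
#   mount -a && df -h /nd
```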

2、安装nfs动态供应

2.1、概念

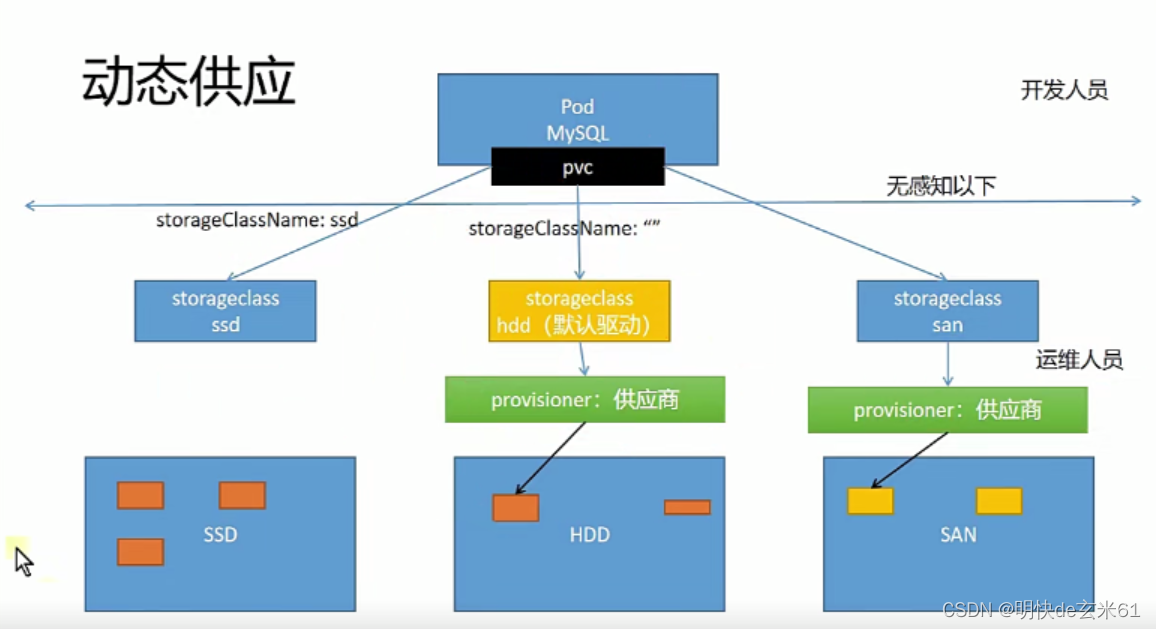

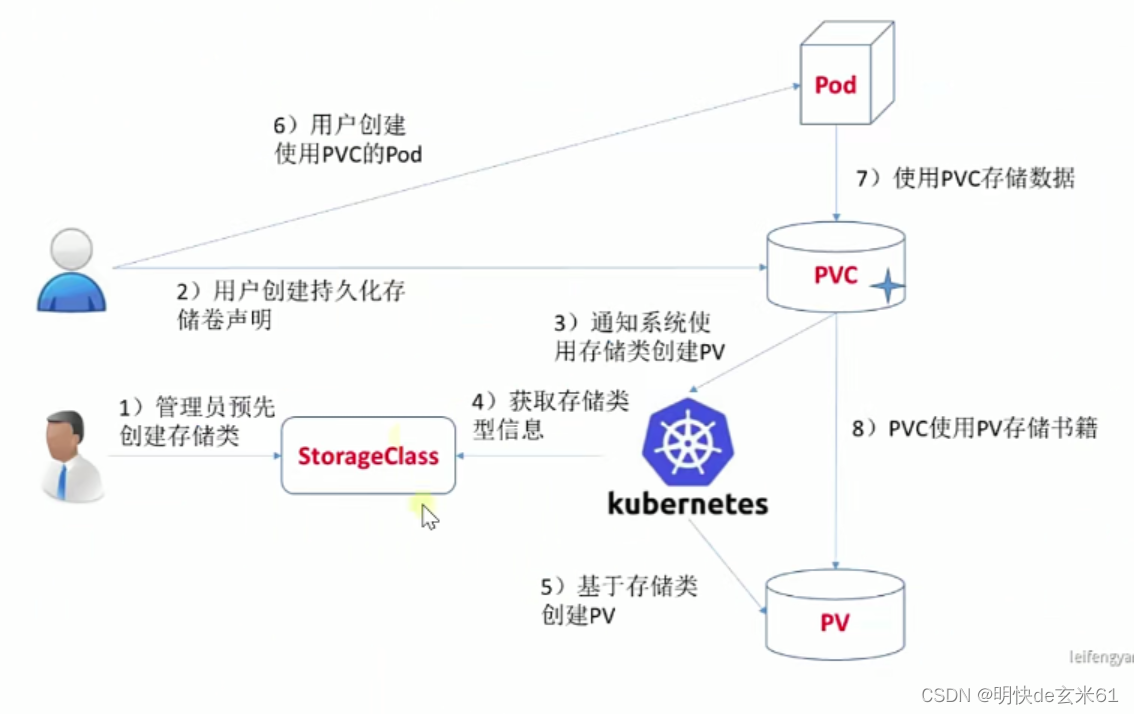

开发人员只用说明自己的需求,也就是pvc,在pvc里面指定对应的存储类,我们的需求到时候自然会被满足

运维人员会把供应商(比如nfs)创建好,另外会设置好存储类的类型,开发人员只用根据要求去选择合适的存储类即可

具体过程如下:

2.2、安装步骤(只用在nfs主节点执行)

2.2.1、三合一操作(注意:本节操作之后,下面2.2.2、2.2.3、2.2.4就不用操作了)

下面两个链接看下就行,主要从说明看起

操作文件所在路径:https://github.com/kubernetes-retired/external-storage/tree/master/nfs-client

目前使用deploy安装方式,具体路径是:https://github.com/kubernetes-retired/external-storage/tree/master/nfs-client/deploy

说明: 下面三个yaml可以整合到一个yaml之中,然后用---进行分隔,然后一次性执行即可,我这里提供一下合并之后的yaml,大家按照下面的说明自己改下

链接:https://pan.baidu.com/s/1mtMjSDNqipi-oBTPDNjbNg?pwd=tgcj

提取码:tgcj

2.2.2 Create a storage class (configure as needed; you can create several)

Source: https://github.com/kubernetes-retired/external-storage/blob/master/nfs-client/deploy/class.yaml

My version may differ from the one above. I recommend using the YAML below. Apply it directly with kubectl apply -f <file>.

```yaml
## Creates a storage class
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage # storage class name
  annotations:
    storageclass.kubernetes.io/is-default-class: "true" # default class: used by PVCs that name no storage class; usually only one default is set
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner
# provisioner names the provisioner;
# it must match the PROVISIONER_NAME env value in the provisioner Deployment
parameters:
  archiveOnDelete: "true" ## whether to archive the PV's data when the PV is deleted; optional
#### Provisioner capabilities can be tuned here.
```

2.2.3 Configure the provisioner

My version may differ from the one above. I recommend using the YAML below, but do not paste it unchanged. Replace each XXX with your NFS server IP or shared directory as the comments indicate, then apply it with kubectl apply -f <file>.

The image comes from Lei Fengyang's (atguigu) Alibaba Cloud repository. If it ever disappears, import the image below with docker load -i <image tar file>.

Link: https://pan.baidu.com/s/1VT9pbmCIsh4tHdcLx5ryvA?pwd=o17g

Extraction code: o17g

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/nfs-subdir-external-provisioner:v4.0.2 # image from Lei Fengyang (atguigu)
          # resources:
          #   limits:
          #     cpu: 10m
          #   requests:
          #     cpu: 10m
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: k8s-sigs.io/nfs-subdir-external-provisioner
            - name: NFS_SERVER
              value: XXX ## your NFS server address, i.e. the IP of the host running the NFS server; mine is 192.168.139.128
            - name: NFS_PATH
              value: XXX ## the directory shared by your NFS server; mine is /nfs/data
      volumes:
        - name: nfs-client-root
          nfs:
            server: XXX ## your NFS server address, i.e. the IP of the host running the NFS server; mine is 192.168.139.128
            path: XXX ## the directory shared by your NFS server; mine is /nfs/data
```
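Both the NFS server placeholders and the path placeholders are written as XXX, so a blind search-and-replace would mix them up. Below is a sketch that fills each placeholder according to the key it belongs to. fill_nfs_placeholders and the file names in the usage line are hypothetical, and the sketch assumes the YAML layout shown above:

```shell
#!/usr/bin/env bash
# Sketch: fill the XXX placeholders in the provisioner Deployment YAML.
# The "value: XXX" after NFS_SERVER and "server: XXX" get the server IP;
# the "value: XXX" after NFS_PATH and "path: XXX" get the shared directory.
# The result goes to stdout. fill_nfs_placeholders is a hypothetical name.
fill_nfs_placeholders() {
  local server=$1 path=$2 file=$3
  awk -v s="$server" -v p="$path" '
    /name: NFS_SERVER/ { want = "s" }
    /name: NFS_PATH/   { want = "p" }
    /value: XXX/ && want == "s" { sub(/XXX/, s); want = "" }
    /value: XXX/ && want == "p" { sub(/XXX/, p); want = "" }
    /server: XXX/ { sub(/XXX/, s) }
    /path: XXX/   { sub(/XXX/, p) }
    { print }
  ' "$file"
}

# Usage (file names are examples):
#   fill_nfs_placeholders 192.168.139.128 /nfs/data provisioner.yaml > provisioner-filled.yaml
```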

2.2.4 Set up RBAC permissions

Source: https://github.com/kubernetes-retired/external-storage/blob/master/nfs-client/deploy/rbac.yaml

My version may differ from the one above. I recommend using the YAML below. Apply it directly with kubectl apply -f <file>.

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io
```

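2.3 Verify

Apply the YAML below with kubectl apply -f <file>. Everything is working if kubectl get pvc and kubectl get pv show the corresponding PVC and PV and a matching directory appears in the NFS share.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nginx-pvc-2
  namespace: default
  labels:
    app: nginx-pvc-2
spec:
  storageClassName: managed-nfs-storage ## storage class name; use your own. If a default storage class is set, this field can be omitted
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 100Mi # 100Mi = 100 MiB; "100m" would mean 0.1 byte
```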

3、安装helm

3.1、helm安装包

链接:https://pan.baidu.com/s/1qF4zrQm8FYUqMZxMYU0aAg?pwd=ju13

提取码:ju13

3.2、解压

tar -zxvf helm-v3.5.4-linux-amd64.tar.gz

3.3、移动

mv linux-amd64/helm /usr/local/bin/helm

3.4、验证

helm

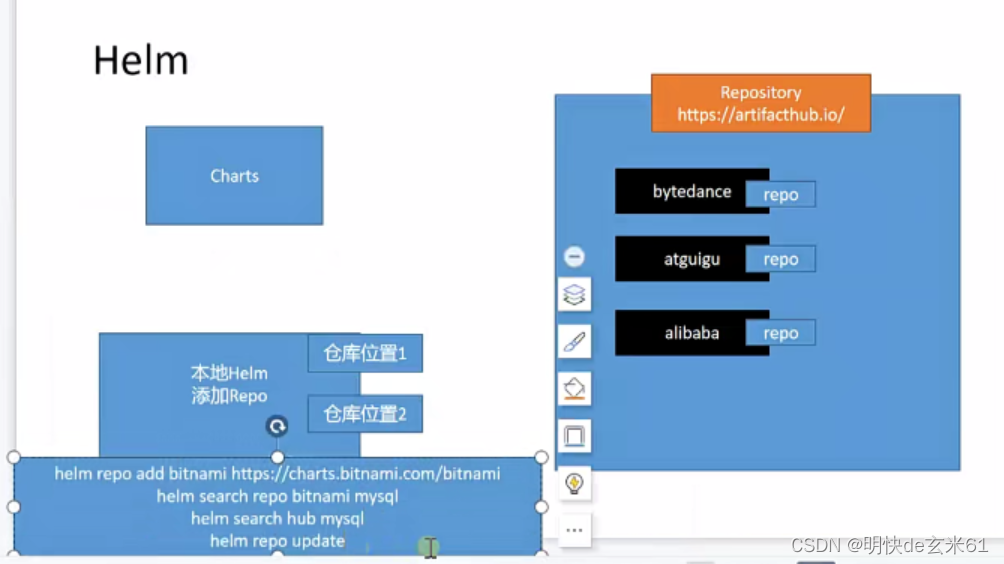

3.5、添加repo仓库

helm repo add bitnami https://charts.bitnami.com/bitnami

仓库说明:默认仓库是hub,常用的第三方仓库是bitnami,这些仓库都隶属于https://artifacthub.io/平台

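4. Installing Prometheus and Grafana with charts (not recommended because it is hard to control; see my separate write-up on the plain installation method)

4.1 Download the chart

Create a directory somewhere suitable on Linux, cd into it, and run the following commands in order:

```shell
# 1. Add the repository
helm repo add prometheus-community https://prometheus-community.github.io/helm-charts
# 2. Pull the chart archive
helm pull prometheus-community/kube-prometheus-stack --version 16.0.0
# 3. Extract the archive
tar -zxvf kube-prometheus-stack-16.0.0.tgz
```

Source:

https://artifacthub.io/packages/helm/prometheus-community/kube-prometheus-stack?modal=install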

4.2、修改values.yaml

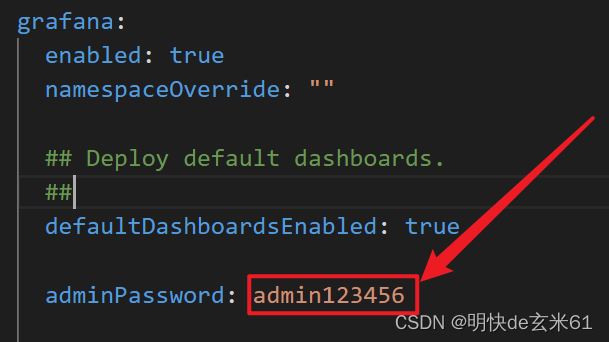

进入解压之后的kube-prometheus-stack目录,然后将values.yaml通过xftp拉到windows桌面上,之后打开values.yaml,搜索adminPassword,然后将prom-operator修改成grafana的密码,用于登录grafana,比如admin123456,修改完成效果如下:

然后将values.yaml通过xftp传到linux上,并且覆盖values.yaml
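You can skip the round trip through Windows by editing the value in place on Linux. Below is a sketch, assuming GNU sed and that the default line adminPassword: prom-operator is still unchanged. set_grafana_password is a hypothetical name. Another option is to pass --set grafana.adminPassword=<password> to helm install, which leaves values.yaml untouched:

```shell
#!/usr/bin/env bash
# Sketch: set the Grafana admin password in the chart's values.yaml in place.
# Assumes GNU sed, that the default line "adminPassword: prom-operator" is still
# present, and that the password contains no '/', '&' or '\' (special to sed).
# set_grafana_password is a hypothetical helper name.
set_grafana_password() {
  local pass=$1 file=${2:-values.yaml}
  sed -i "s/adminPassword: prom-operator/adminPassword: ${pass}/" "$file"
  # Non-zero exit status if the new password line is not in the file afterwards
  grep -qF "adminPassword: ${pass}" "$file"
}

# Usage inside the extracted kube-prometheus-stack directory:
#   set_grafana_password admin123456 values.yaml
```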

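4.3 Import the images

Some images are hosted on foreign registries and cannot be pulled directly, so I have prepared them for you to download and import:

- Import quay.io_prometheus_node-exporter_v1.1.2.tar on all nodes, including the master (node-exporter runs as a DaemonSet on every node).
- Import quay.io_prometheus_alertmanager_v0.21.0.tar, quay.io_prometheus_prometheus_v2.26.0.tar and k8s.gcr.io_kube-state-metrics_kube-state-metrics_v2.0.0.tar on the worker nodes only.

The import command is docker load -i <image tar file>.

Baidu Netdisk link:

Link: https://pan.baidu.com/s/17q8QX-LRfxyaSTl9arsvcw?pwd=wxyb

Extraction code: wxyb

4.4 Create a namespace

All Prometheus and Grafana resources will be installed into this namespace:

```shell
kubectl create ns monitor
```

4.5 Install

Run the following command in the extracted kube-prometheus-stack directory. Many warnings are printed during installation. You can ignore them.

```shell
helm install -f values.yaml prometheus-stack ./ -n monitor
```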

4.6、保证pod运行完好

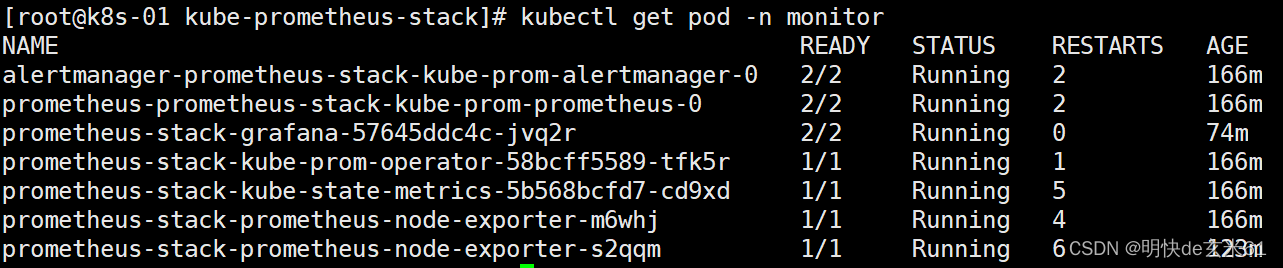

通过kubectl get pod -n monitor查看pod运行情况,如果所有的pod状态都是Running,并且READY比例都是1,那就正常了,例如:

如果不正常的话,可以通过kubectl describe pod pod名称 -n 命名空间查看原因并解决

4.7、改变prometheus、grafana的service暴露方式

更改原因:

默认prometheus、grafana的service暴露方式都是ClusterIP,这种方式无法在浏览器上直接访问,所以我们需要把service暴露方式改成NodePort

更改方式:

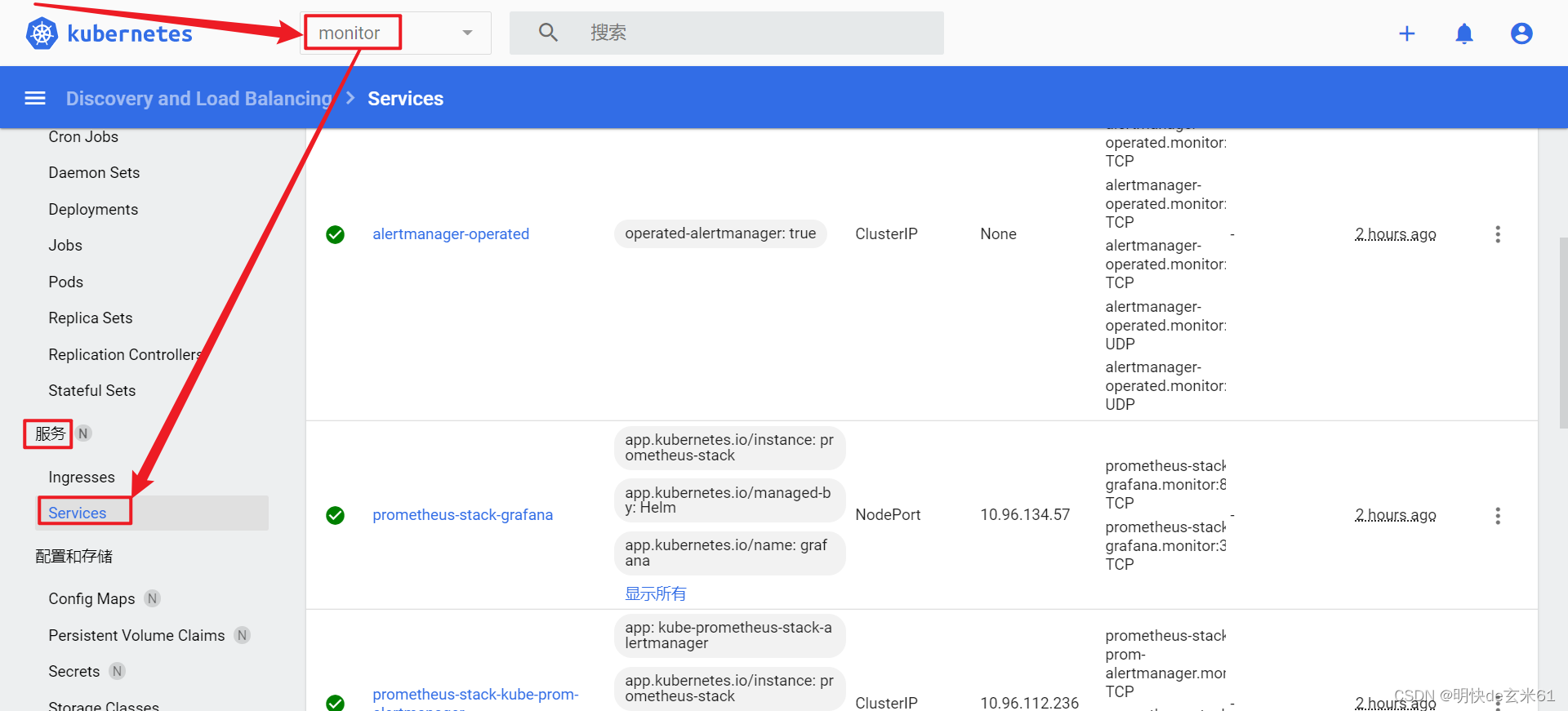

使用一、使用kubeadmin方式安装k8s 》 6.4、访问k8s-dashboard访问k8s dashboard,然后将更改命名空间为monitor,之后点击服务下面的Services,如下:

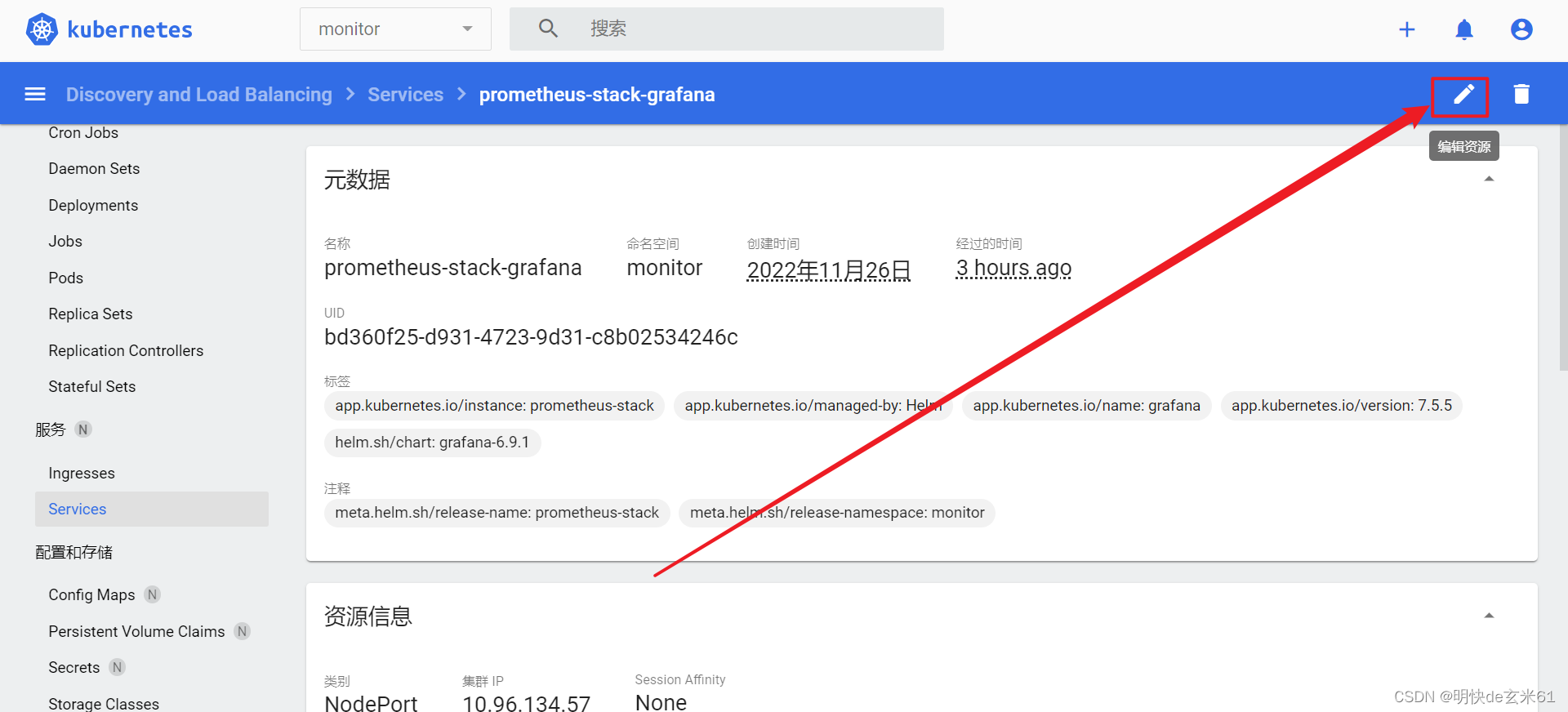

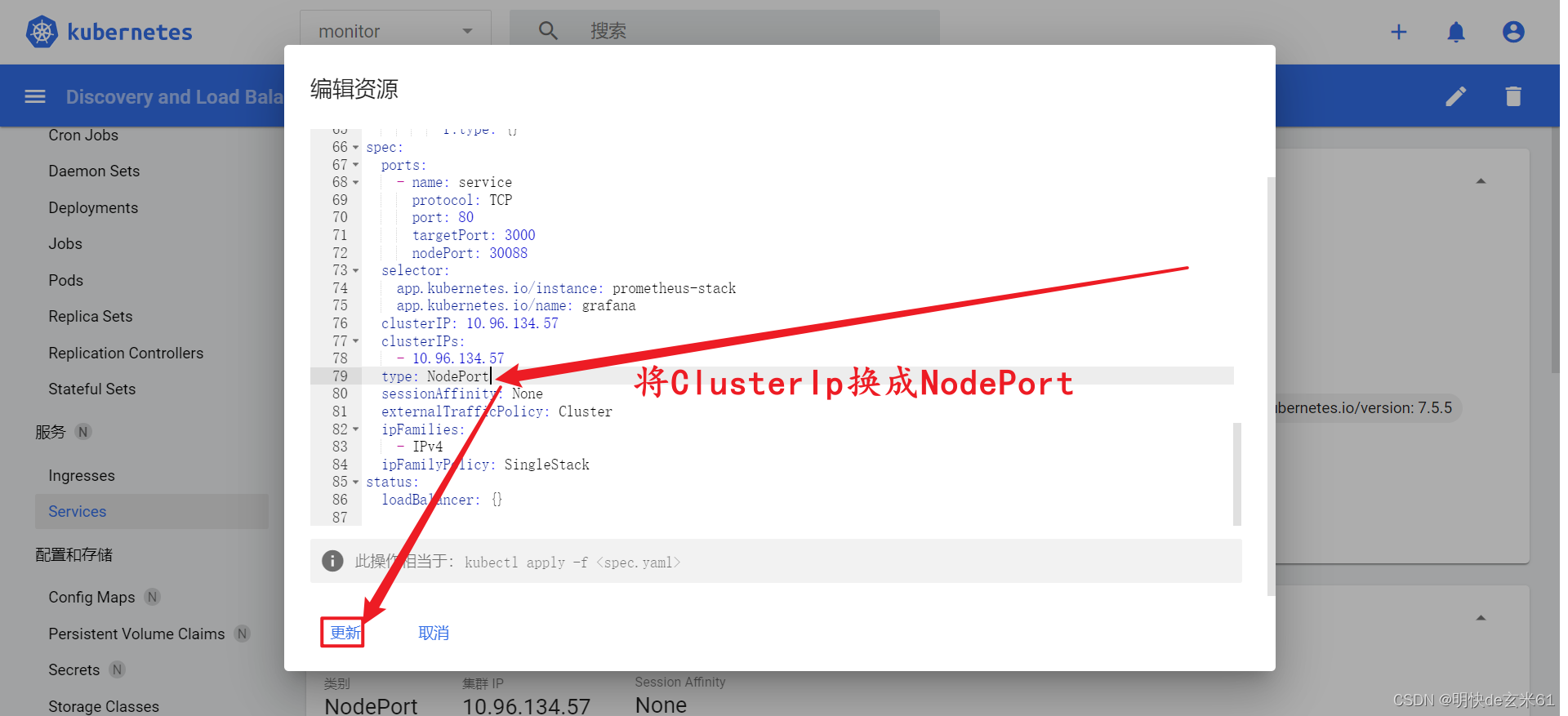

之后分别点击prometheus-stack-grafana和 prometheus-stack-kube-prom-prometheus进行更改操作,我们以prometheus-stack-grafana举例,点击prometheus-stack-grafana之后,需要点击右上角的修改按钮,如下:

然后将ClusterIp切换成NodePort,点击更新按钮
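You can also make the same change from the command line with kubectl patch instead of the dashboard. Below is a sketch. expose_nodeport is a hypothetical name, and the KUBECTL variable is a hypothetical override (default kubectl) that allows a dry run with KUBECTL=echo. If no nodePort is given, k8s assigns one from the 30000-32767 range:

```shell
#!/usr/bin/env bash
# Sketch: switch one or more Services in a namespace to type NodePort.
# expose_nodeport is a hypothetical helper name; KUBECTL is a hypothetical
# override (defaults to kubectl) so the calls can be dry-run with KUBECTL=echo.
expose_nodeport() {
  local ns=$1 svc
  shift
  for svc in "$@"; do
    ${KUBECTL:-kubectl} patch svc "$svc" -n "$ns" -p '{"spec":{"type":"NodePort"}}'
  done
}

# Usage on the master node:
#   expose_nodeport monitor prometheus-stack-grafana prometheus-stack-kube-prom-prometheus
```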

4.8、使用浏览器访问prometheus、grafana

grafana:

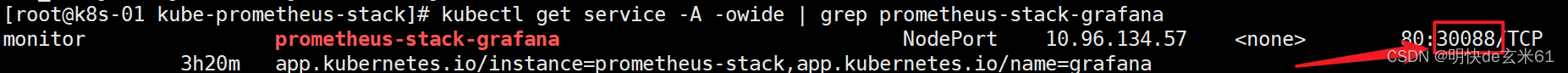

使用kubectl get service -A -owide | grep prometheus-stack-grafana查看grafana的访问端口,比如我的是30088,如下:

然后通过http://虚拟机ip:30088就可以访问grafana了,比如我的就是http://192.168.139.128:30088(注意:协议是http)

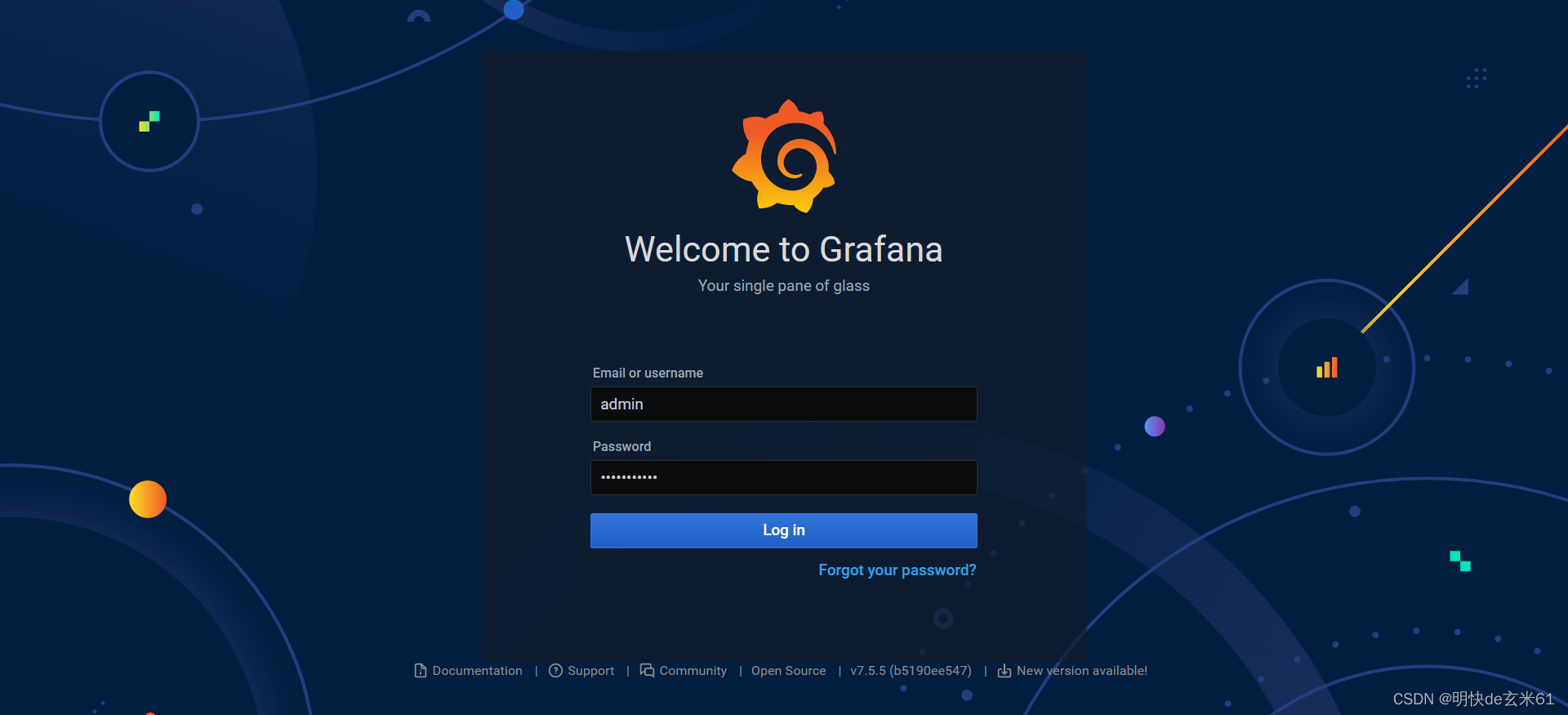

登录页面的用户名是admin,登录密码是4.2、修改values.yaml中设置的,比如我的就是admin123456

prometheus:

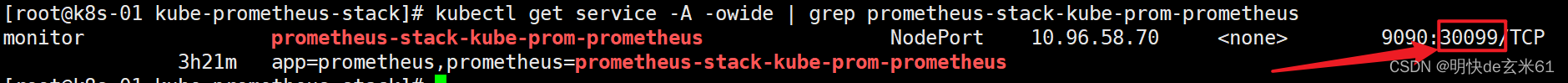

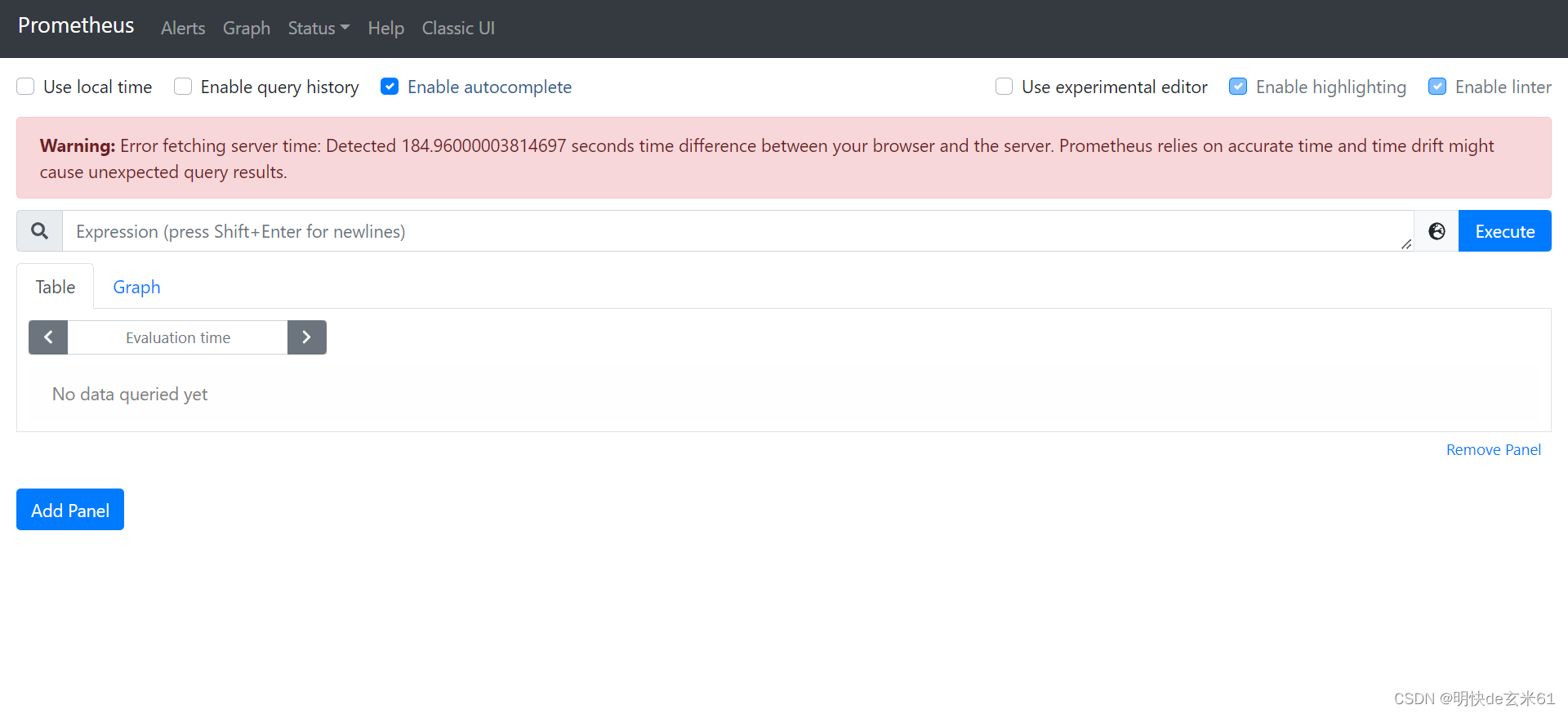

使用kubectl get service -A -owide | grep prometheus-stack-kube-prom-prometheus查看prometheus的访问端口,比如我的是30099,如下:

然后通过http://虚拟机ip:30099就可以访问prometheus了,比如我的就是http://192.168.139.128:30099(注意:协议是http)
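You can also pick the NodePort out of the PORT(S) column (port:nodePort/TCP) automatically. Below is a sketch; node_ports is a hypothetical name. An alternative is kubectl -n monitor get svc prometheus-stack-grafana -o jsonpath='{.spec.ports[0].nodePort}', which asks the API server directly:

```shell
#!/usr/bin/env bash
# Sketch: print the NodePort(s) found in `kubectl get service` output on stdin.
# The PORT(S) column reads port:nodePort/TCP, e.g. 80:30088/TCP.
# node_ports is a hypothetical helper name.
node_ports() {
  grep -oE '[0-9]+:[0-9]+/TCP' | cut -d: -f2 | cut -d/ -f1
}

# Usage on the master node:
#   kubectl get service -A -owide | grep prometheus-stack-grafana | node_ports
```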

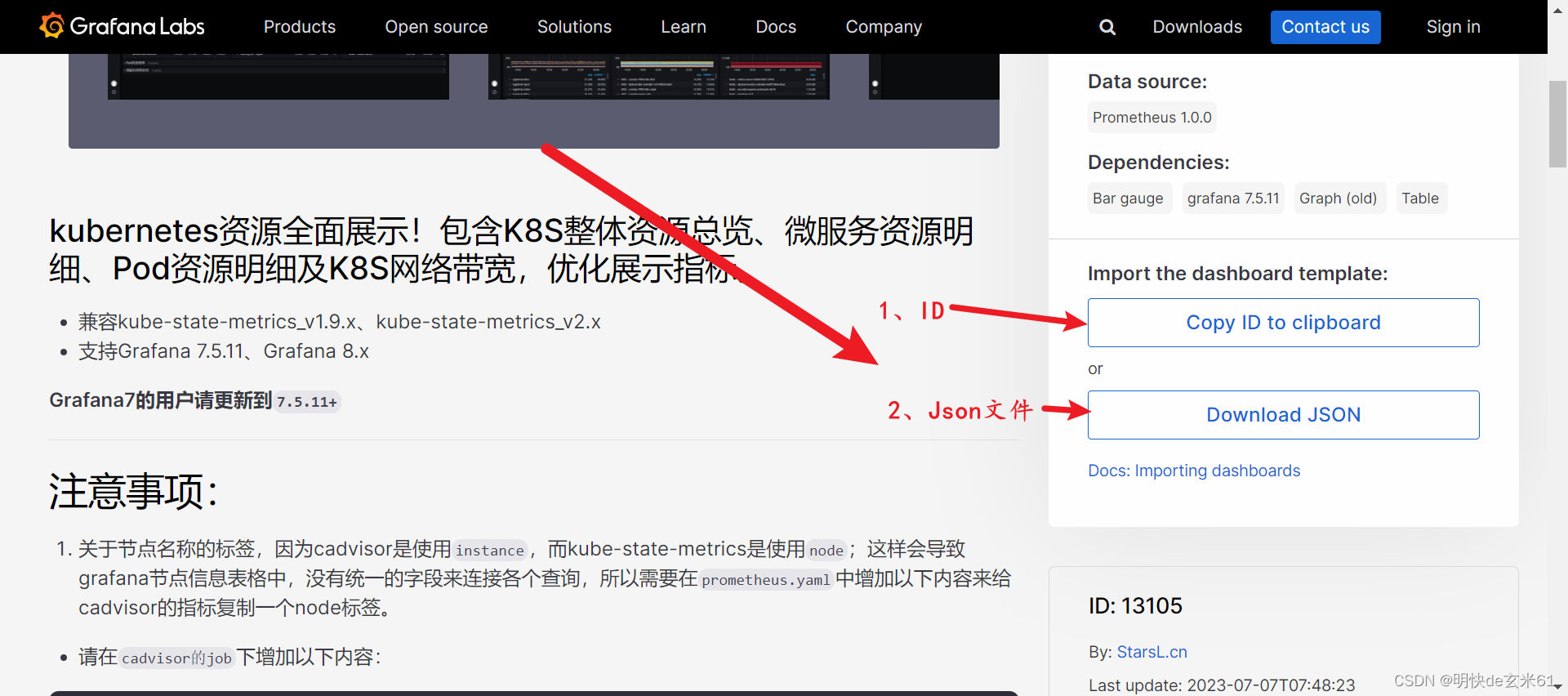

4.9、在grafana中导入查看k8s集群信息的dashboard页面

1、查找对应脚本

访问GrafanaLabs,大家可以寻找任何自己想要的内容,本次我选择的是K8S for Prometheus Dashboard 20211010中文版

2、复制ID 或者 下载json文件

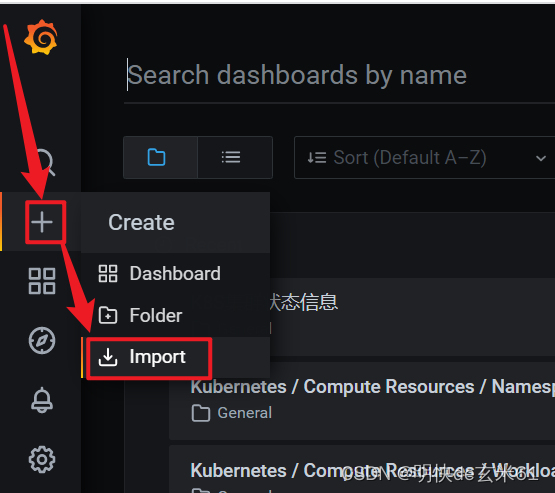

3、在grafana中导入ID或者Json文件的准备工作

如果点击Import之后有弹窗,那么点击第二个按钮就可以了

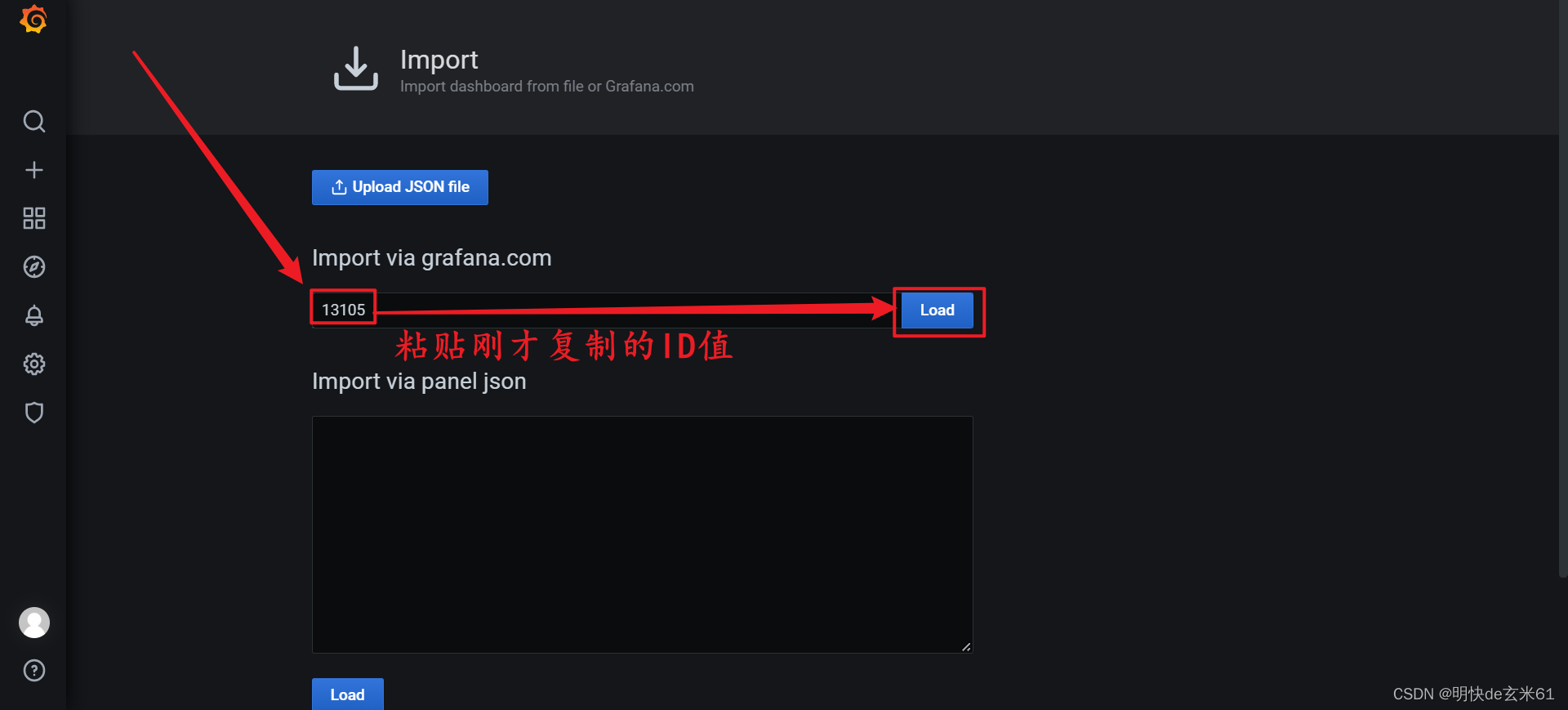

4、执行添加ID或者导入Json文件操作

首先说添加ID,点击Load按钮,如下:

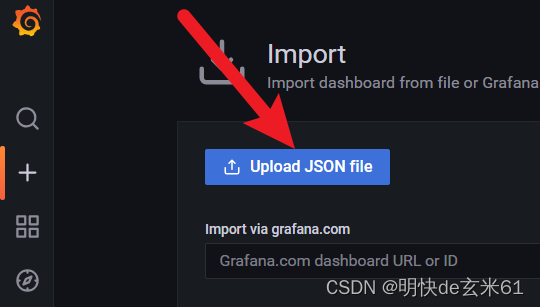

然后说导入Json文件,上面Upload Json file按钮,之后选择下载的Json文件进行导入:

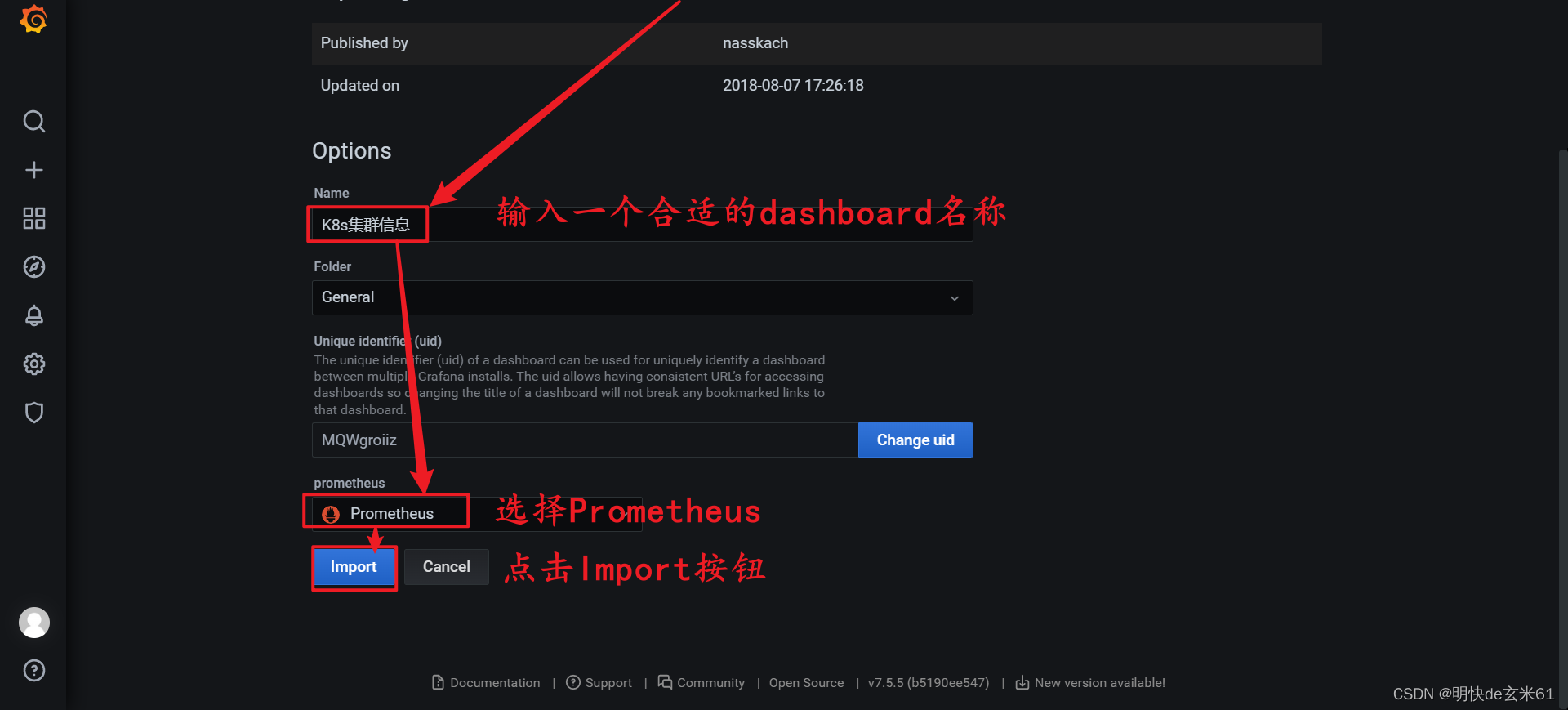

添加ID或者导入Json文件完成后,然后执行修改信息操作,最后进行导入操作,如下:

5、安装harbor

5.1、charts下载

# 添加仓库

helm repo add --insecure-skip-tls-verify harbor https://helm.goharbor.io

# 拉取镜像

helm pull --insecure-skip-tls-verify harbor/harbor --version 1.10.2

# 解压tgz压缩包

tar -zxvf harbor-1.10.2.tgz

5.2、修改values.yaml

进入解压的harbor目录中,将values.yaml传输到windows上,需要修改如下位置:

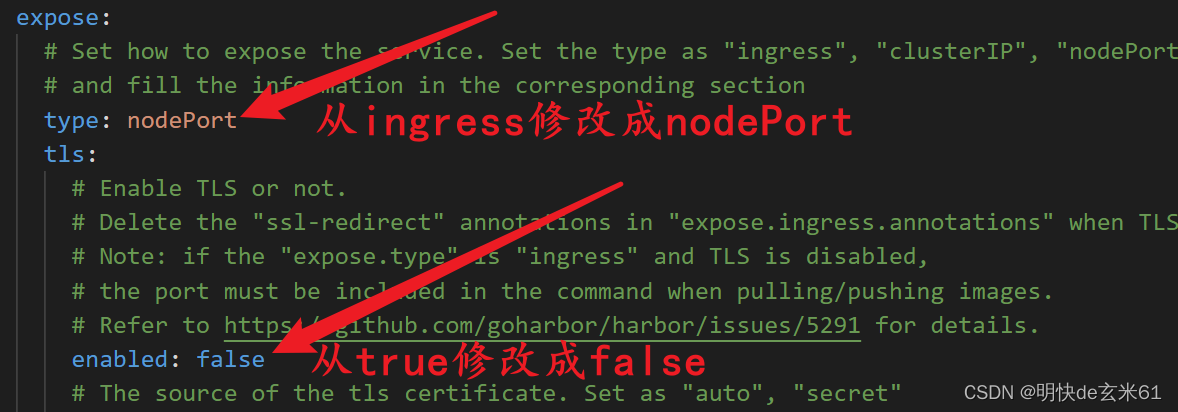

更改expose》type的值为nodePort、expose》tls》enabled的值为false

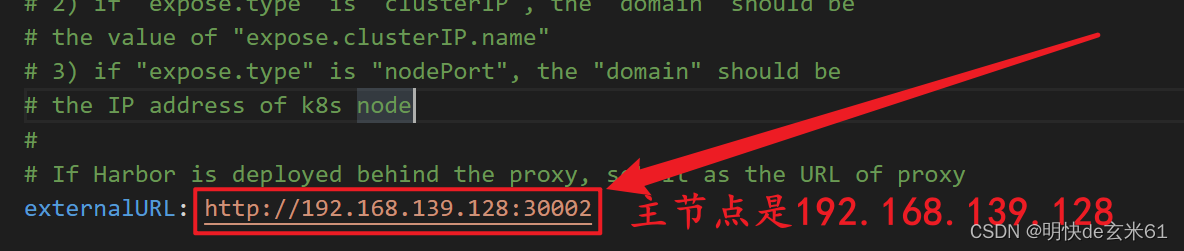

更改expose》externalURL的值为http://主节点ip:30002(注意:30002是harbor的service对外暴露的端口,这个是定死的端口)

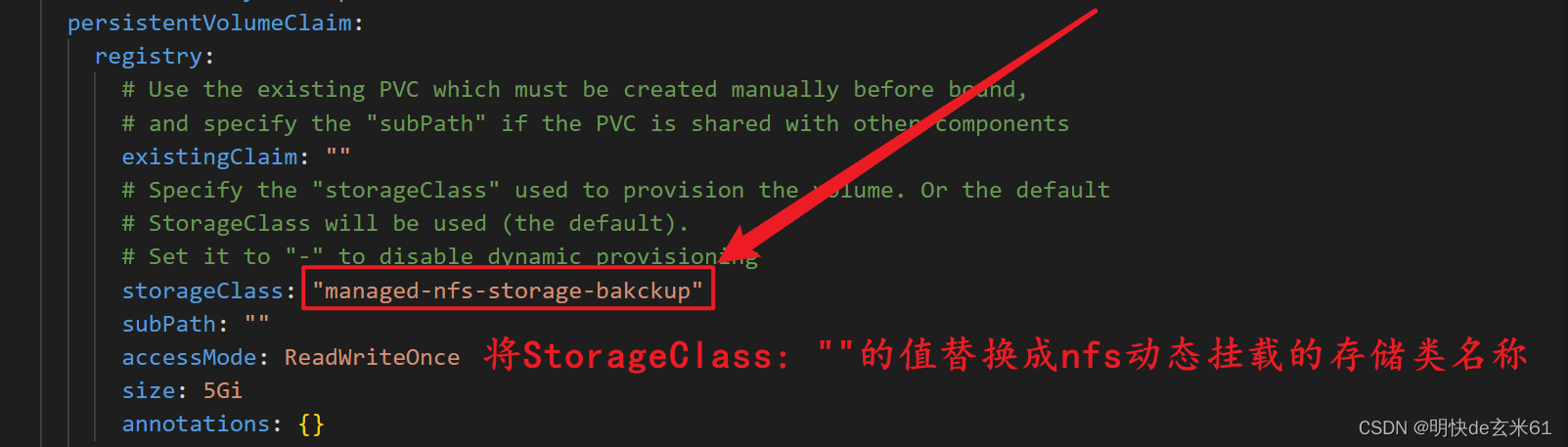

替换所有storageClass: ""为storageClass: "存储类名称"(说明:比如我用的nfs动态存储,那就是managed-nfs-storage-bakckup,其中nfs动态供应配置方式在二、安装其他组件》2、安装nfs动态供应中;如果你用的ceph存储,那就换成ceph的存储类名称即可)

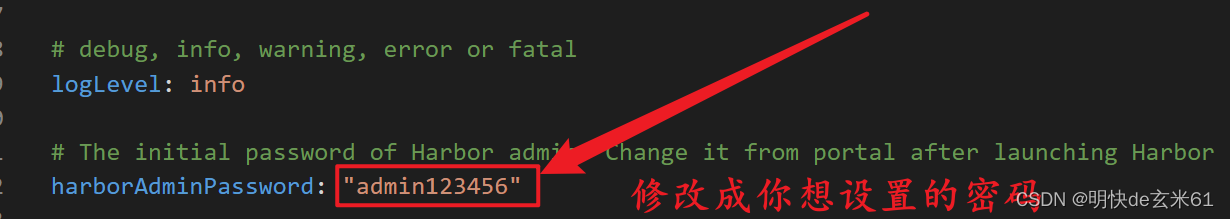

修改harborAdminPassword的值为你想设置的harbor密码

上述操作截图如下:
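You can also make the storageClass replacement on Linux without editing the file by hand. Below is a sketch, assuming GNU sed and that the chart's empty values are written exactly as storageClass: "". set_storage_class is a hypothetical name:

```shell
#!/usr/bin/env bash
# Sketch: replace every empty storageClass in the Harbor chart's values.yaml.
# Assumes GNU sed and that the empty values are written exactly as storageClass: "".
# set_storage_class is a hypothetical helper name.
set_storage_class() {
  local sc=$1 file=${2:-values.yaml}
  sed -i "s/storageClass: \"\"/storageClass: \"${sc}\"/g" "$file"
}

# Usage inside the extracted harbor directory (class name from 2.2.2):
#   set_storage_class managed-nfs-storage values.yaml
#   grep -n 'storageClass:' values.yaml    # review the result
```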

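5.3 Import the images

Some images are hosted on foreign registries and cannot be pulled directly, so I have prepared them for download. Import these tar files on the worker nodes only with docker load -i <image tar file>.

Baidu Netdisk link:

Link: https://pan.baidu.com/s/1qPUvsnYz0guWg3khALRKTA?pwd=i1sc

Extraction code: i1sc

5.4 Create a namespace

Harbor will be installed into this namespace:

```shell
kubectl create ns devops
```

5.5 Install

Run the following command in the extracted harbor directory:

```shell
helm install -f values.yaml harbor ./ -n devops
```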

5.6、保证pod运行完好

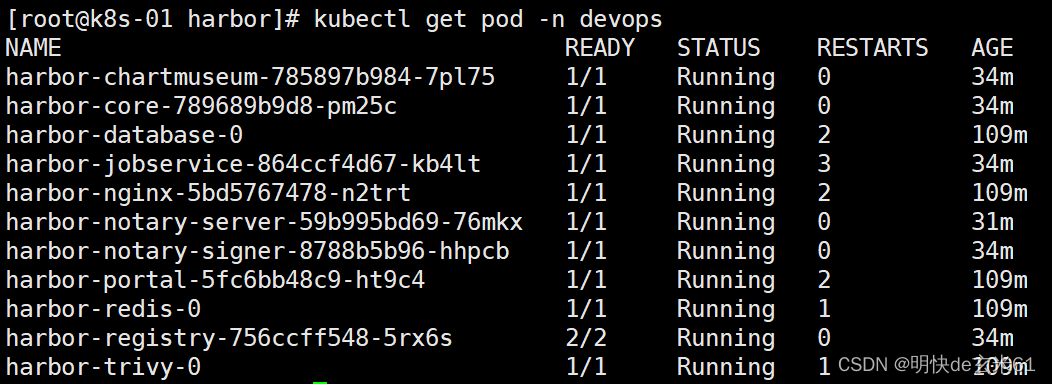

通过kubectl get pod -n devops查看pod运行情况,如果所有的pod状态都是Running,并且READY比例都是1,那就正常了,例如:

如果不正常的话,可以通过kubectl describe pod pod名称 -n 命名空间查看原因并解决

如果pod长期不正常,可以通过kubectl delete pod pod名称 -n 命名空间删除pod之后在重新生成pod

如果多个pod都不正常,我们也可以在harbor解压目录中通过helm uninstall harbor -n devops来删除老的部署,然后在重新通过helm install -f values.yaml harbor ./ -n devops来进行部署

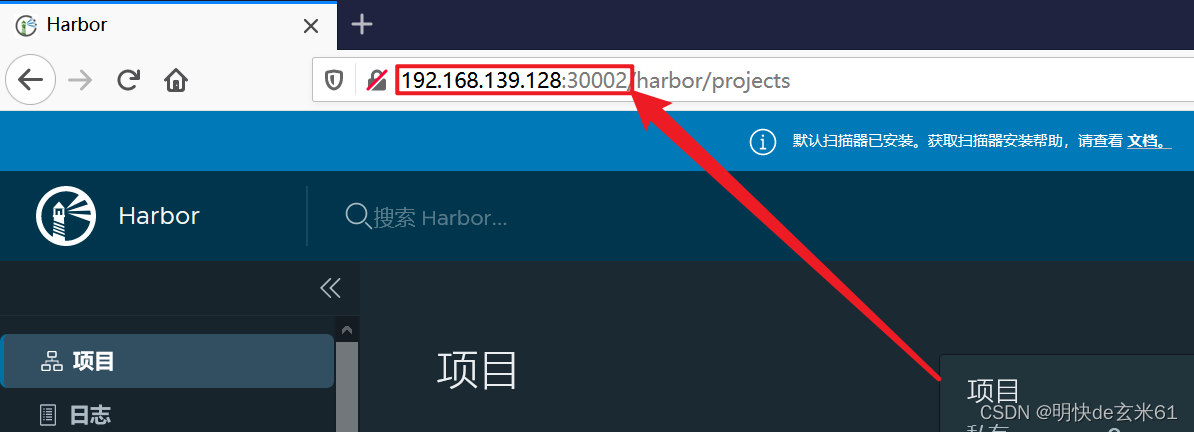

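5.7 Access Harbor from a browser

Open http://<master node IP>:30002 in a browser. The user name is admin, and the password is the harborAdminPassword you set in values.yaml (mine is admin123456; the default is Harbor12345).

5.8 Log in to Harbor from Linux with docker login <address>

Notes

On every machine (master and workers), /etc/docker/daemon.json must contain insecure-registries. This is an array whose only element is <master node IP>:30002, the address you use to open Harbor in a browser. Without it, Docker refuses to talk to the registry over plain HTTP.

Steps

5.8.1 Update daemon.json on all nodes

Note: do the following on all nodes.

Open daemon.json with vim /etc/docker/daemon.json.

Add "insecure-registries": ["<master node IP>:30002"] to the file, with a comma after the preceding entry so the file stays valid JSON. For example, I open Harbor at 192.168.139.128:30002, so my daemon.json is as follows (ignore the Alibaba Cloud mirror entry):

```json
{
  "registry-mirrors": ["https://a3e6u0iu.mirror.aliyuncs.com"],
  "insecure-registries": ["192.168.139.128:30002"]
}
```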
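When you edit JSON by hand, it is easy to drop a comma, and then Docker fails to start. Below is a sketch that writes both keys in one go. write_daemon_json is a hypothetical name. It overwrites the file, so back the file up first and carry over any other keys you rely on. The values in the usage lines are this guide's examples:

```shell
#!/usr/bin/env bash
# Sketch: write daemon.json with the registry mirror and the insecure Harbor
# registry in one go, avoiding hand-edited JSON. Overwrites the target file.
# write_daemon_json is a hypothetical helper name.
write_daemon_json() {
  local mirror=$1 registry=$2 file=${3:-/etc/docker/daemon.json}
  cat > "$file" <<EOF
{
  "registry-mirrors": ["${mirror}"],
  "insecure-registries": ["${registry}"]
}
EOF
}

# Usage on every node, then restart docker and check the result:
#   cp /etc/docker/daemon.json /etc/docker/daemon.json.bak
#   write_daemon_json https://a3e6u0iu.mirror.aliyuncs.com 192.168.139.128:30002
#   systemctl restart docker && docker info | grep -A1 'Insecure Registries'
```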

5.8.2、重启docker

systemctl restart docker

5.8.3、测试在linux上登录harbor

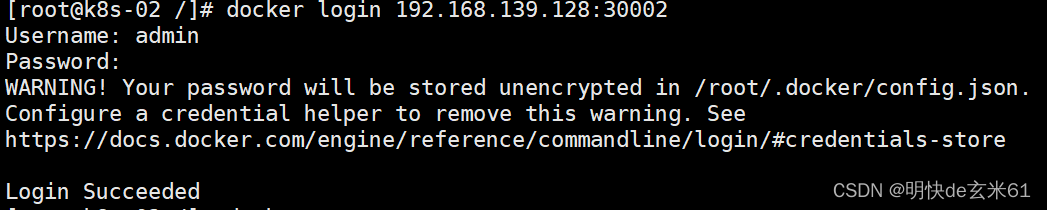

在linux上输入docker login 主节点ip:30002回车即可,比如我的就是docker login 192.168.139.128:30002,然后依次输入用户名回车、输入密码回车就可以登录harbor了,如下:

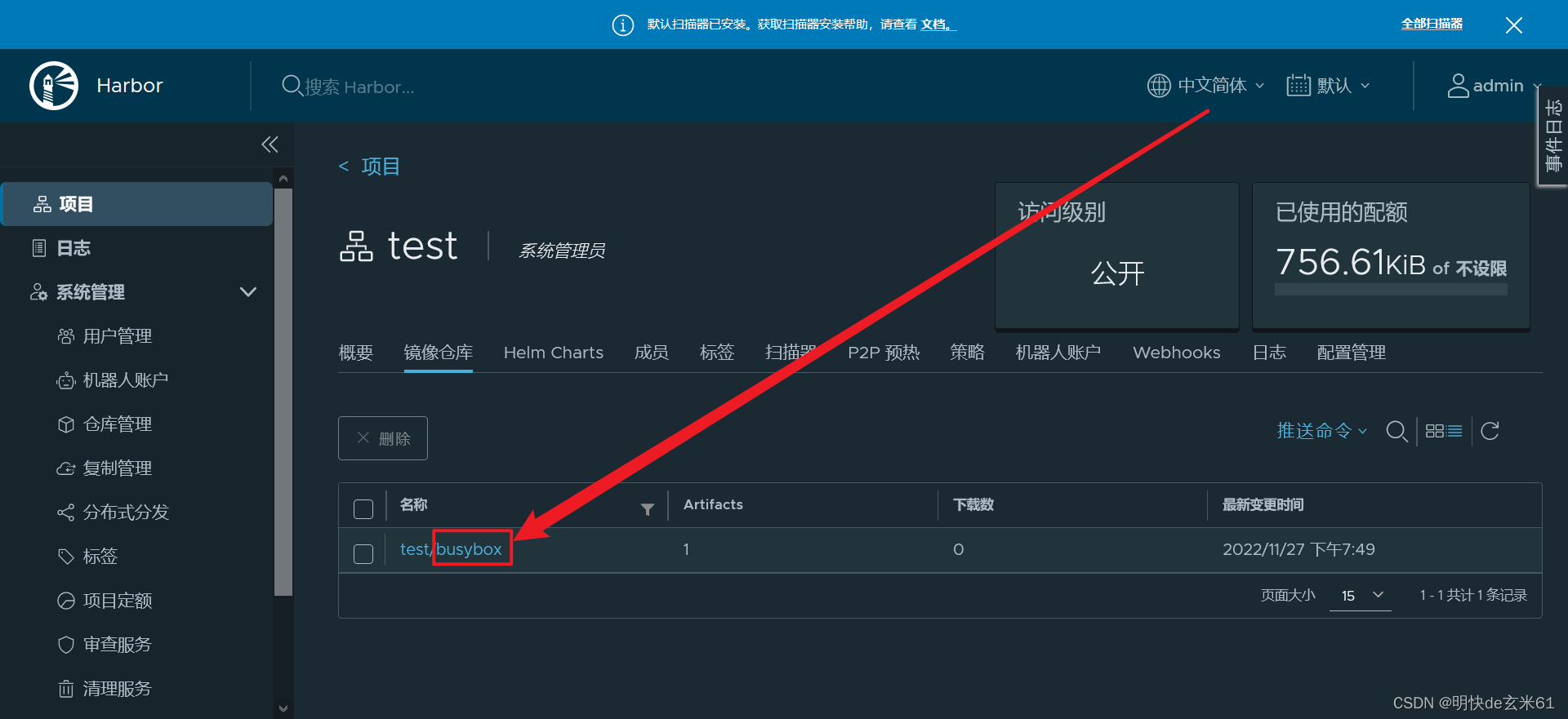

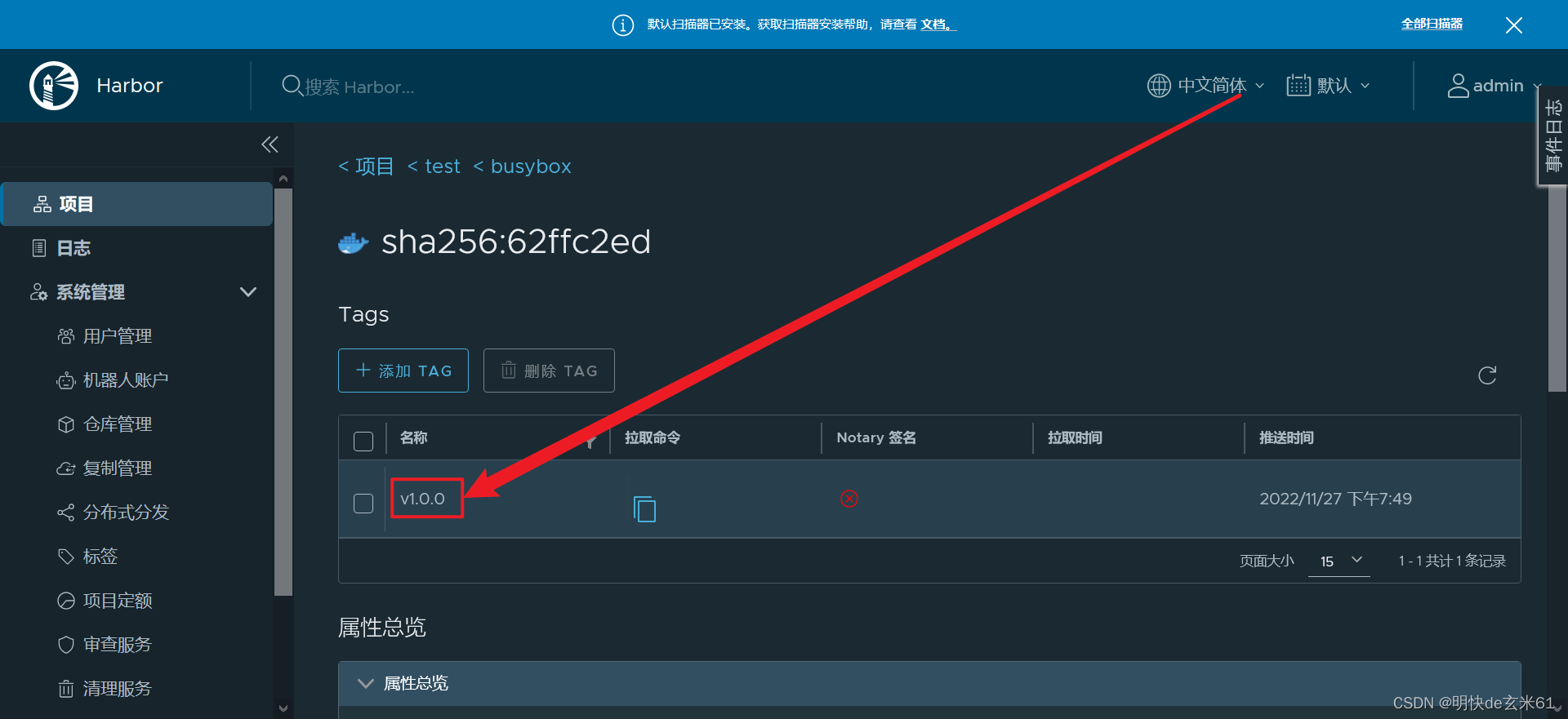

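5.9 Test pushing an image to Harbor with docker

This test pushes busybox to the Harbor registry.

First download the busybox image with docker pull busybox.

Then tag the image in the form required for pushing: docker tag <local image name>:<local tag> <registry address>/<project name>/<image name in Harbor>:<tag in Harbor>. Mine is:

```shell
docker tag busybox:latest 192.168.139.128:30002/test/busybox:v1.0.0
```

Explanation:

- busybox:latest: local image name and local tag
- 192.168.139.128:30002: Harbor address
- test: project name in Harbor
- busybox: image name in Harbor
- v1.0.0: image tag in Harbor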

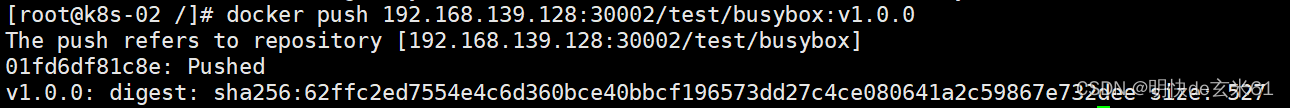

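Then push the image to Harbor with docker push <registry address>/<project name>/<image name in Harbor>:<tag in Harbor>. Note: create the test project in the Harbor web console before pushing. Mine is:

```shell
docker push 192.168.139.128:30002/test/busybox:v1.0.0
```

Result (see the screenshot):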

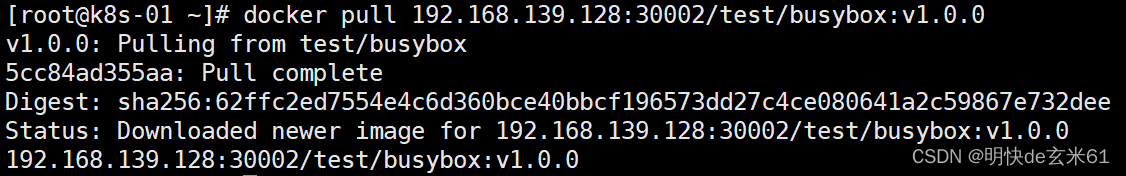
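You can wrap the tag and push steps so the image reference is built in one place. Below is a sketch. harbor_ref and tag_and_push are hypothetical names, and DOCKER is a hypothetical override (default docker) that allows a dry run with DOCKER=echo:

```shell
#!/usr/bin/env bash
# Sketch: build the Harbor image reference <registry>/<project>/<name>:<tag>,
# then tag and push a local image with it. harbor_ref and tag_and_push are
# hypothetical names; DOCKER is a hypothetical override (defaults to docker).
harbor_ref() {
  printf '%s/%s/%s:%s\n' "$1" "$2" "$3" "$4"
}

tag_and_push() {
  local src=$1 ref
  ref=$(harbor_ref "$2" "$3" "$4" "$5")
  ${DOCKER:-docker} tag "$src" "$ref" && ${DOCKER:-docker} push "$ref"
}

# Usage (the test project must already exist in Harbor):
#   tag_and_push busybox:latest 192.168.139.128:30002 test busybox v1.0.0
```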

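5.10 Test pulling an image from Harbor with docker

First remove the local image with the same name using docker rmi <registry address>/<project name>/<image name>:<tag>, for example:

```shell
docker rmi 192.168.139.128:30002/test/busybox:v1.0.0
```

Then pull the image with docker pull <registry address>/<project name>/<image name>:<tag>, for example:

```shell
docker pull 192.168.139.128:30002/test/busybox:v1.0.0
```

Result (see the screenshot):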

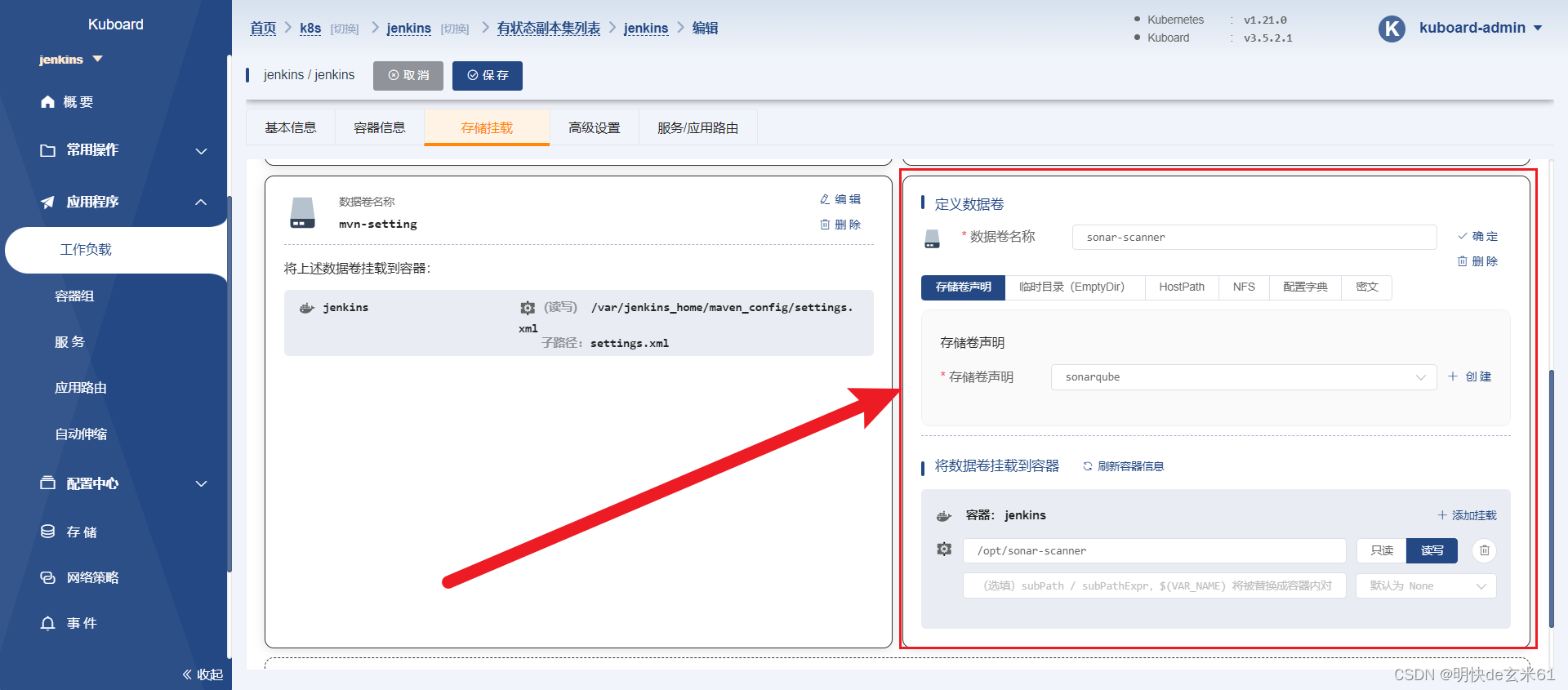

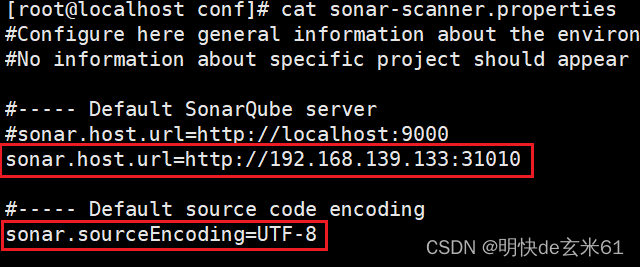

6. Installing Jenkins

6.1 Import the image

The Jenkins image downloads slowly, so I provide the jenkins/jenkins:2.396 image as a tar file. Import it with docker load -i <image tar file>.

Baidu Netdisk link:

Link: https://pan.baidu.com/s/1LBwsipzJuhCECdnL3fqLqg?pwd=yt1m

Extraction code: yt1m

6.2 Create jenkins.yaml

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: jenkins
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: settings.xml
  namespace: jenkins
data:
  settings.xml: |
    <?xml version="1.0" encoding="UTF-8"?>
    <settings xmlns="http://maven.apache.org/SETTINGS/1.0.0"
              xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
              xsi:schemaLocation="http://maven.apache.org/SETTINGS/1.0.0 http://maven.apache.org/xsd/settings-1.0.0.xsd">
      <!-- /var/jenkins_home is the Jenkins container's home directory. It is stored on NFS here, so create the maven_repository directory in the NFS share beforehand -->
      <localRepository>/var/jenkins_home/maven_repository</localRepository>
      <pluginGroups>
      </pluginGroups>
      <proxies>
      </proxies>
      <servers>
      </servers>
      <mirrors>
        <mirror>
          <id>central</id>
          <name>central</name>
          <url>http://maven.aliyun.com/nexus/content/groups/public</url>
          <mirrorOf>central</mirrorOf>
        </mirror>
      </mirrors>
      <profiles>
        <!-- My own addition, to avoid building with the wrong JRE version -->
        <profile>
          <id>jdk-1.8</id>
          <activation>
            <activeByDefault>true</activeByDefault>
            <jdk>1.8</jdk>
          </activation>
          <properties>
            <maven.compiler.source>1.8</maven.compiler.source>
            <maven.compiler.target>1.8</maven.compiler.target>
            <maven.compiler.compilerVersion>1.8</maven.compiler.compilerVersion>
          </properties>
        </profile>
      </profiles>
    </settings>
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: jenkins
  namespace: jenkins
spec:
  selector:
    matchLabels:
      app: jenkins # has to match .spec.template.metadata.labels
  serviceName: "jenkins"
  replicas: 1
  template:
    metadata:
      labels:
        app: jenkins # has to match .spec.selector.matchLabels
    spec:
      serviceAccountName: "jenkins"
      terminationGracePeriodSeconds: 10
      containers:
        - name: jenkins
          image: jenkins/jenkins:2.396
          imagePullPolicy: IfNotPresent
          securityContext:
            runAsUser: 0 # run the container as root, which makes the mounted Docker socket and binary much easier to use
            privileged: true # privileged container
          ports:
            - containerPort: 8080
              name: web
            - name: jnlp # port Jenkins agents use to talk to the controller
              containerPort: 50000
          volumeMounts:
            - mountPath: /var/jenkins_home
              name: jenkins-home
            - mountPath: /var/run/docker.sock
              name: docker
            - mountPath: /usr/bin/docker
              name: docker-home
            - mountPath: /etc/localtime
              name: localtime
            - mountPath: /var/jenkins_home/maven_config/settings.xml
              name: mvn-setting
              subPath: settings.xml
      volumes:
        - nfs:
            server: 192.168.139.133 # NFS server IP (replace with yours); use the master node IP, in preparation for k8s later applying YamlStatefulSet.yaml
            path: /nfs/data/jenkins_home # my NFS share is /nfs/data; create /nfs/data/jenkins_home before applying this YAML
          name: jenkins-home
        - hostPath:
            path: /var/run/docker.sock
          name: docker
        - hostPath:
            path: /usr/bin/docker # where docker is installed on the host; some docker versions use /usr/local/bin/docker
          name: docker-home
        - hostPath: # mount the host's time zone file into the container
            path: /usr/share/zoneinfo/Asia/Shanghai
          name: localtime
        - configMap:
            items:
              - key: settings.xml
                path: settings.xml
            name: settings.xml
          name: mvn-setting
---
apiVersion: v1
kind: Service
metadata:
  name: jenkins
  namespace: jenkins
spec:
  selector:
    app: jenkins
  type: NodePort
  ports:
    - name: web
      port: 8080
      targetPort: 8080
      protocol: TCP
    - name: jnlp
      port: 50000
      targetPort: 50000
      protocol: TCP
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: jenkins
  namespace: jenkins
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: jenkins
rules:
  - apiGroups: ["extensions", "apps"]
    resources: ["deployments"]
    verbs: ["create", "delete", "get", "list", "watch", "patch", "update"]
  - apiGroups: [""]
    resources: ["services"]
    verbs: ["create", "delete", "get", "list", "watch", "patch", "update"]
  - apiGroups: [""]
    resources: ["pods"]
    verbs: ["create", "delete", "get", "list", "patch", "update", "watch"]
  - apiGroups: [""]
    resources: ["pods/exec"]
    verbs: ["create", "delete", "get", "list", "patch", "update", "watch"]
  - apiGroups: [""]
    resources: ["pods/log"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["secrets"]
    verbs: ["get"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: jenkins
subjects:
  - kind: ServiceAccount
    name: jenkins
    namespace: jenkins
roleRef:
  kind: ClusterRole
  name: jenkins
  apiGroup: rbac.authorization.k8s.io
```

6.3、应用jenkins.yaml

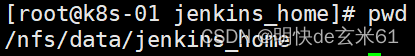

提前创建目录:

-

在master节点的主机上创建

/nfs/data/jenkins_home目录:

其中/nfs/data是nfs目录,jenkins_home需要我们新建

针对yaml文件中对jenkins工作目录/var/jenkins_home的要求,首先我们要在nfs主机目录(我的主机nfs目录是/nfs/data)中创建jenkins_home目录(如下所示),我们这样做的目的是为master节点的k8s执行YamlStatefulSet.yaml文件准备的,jenkins会把gitee中的项目拉到工作目录(即/var/jenkins_home)下,并且jenkins工作目录目前在主机的nfs目录下,然后主机的k8s也能用到nfs目录,这样我们在jenkins中通过SSH插件来调用master节点的k8s就能够执行YamlStatefulSet.yaml文件了

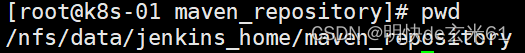

- On the master host, create /nfs/data/jenkins_home/maven_repository. Here /nfs/data is the NFS share, and jenkins_home and maven_repository are both new directories.

The settings.xml in the ConfigMap needs a directory for the local Maven repository. The Maven container will run inside Jenkins and can use the Jenkins workspace, so the repository path is /var/jenkins_home/maven_repository, inside the Jenkins working directory. That working directory is actually /nfs/data/jenkins_home in the master's NFS share, so create maven_repository under /nfs/data/jenkins_home.
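You can create both directories in one step with mkdir -p, which also creates missing parent directories and does nothing if they already exist. Below is a sketch; prepare_jenkins_dirs is a hypothetical name, and /nfs/data is this guide's NFS share:

```shell
#!/usr/bin/env bash
# Sketch: create the Jenkins home and Maven repository directories inside the
# NFS share before applying jenkins.yaml. Safe to run more than once.
# prepare_jenkins_dirs is a hypothetical helper name.
prepare_jenkins_dirs() {
  local nfs_root=${1:-/nfs/data}
  mkdir -p "${nfs_root}/jenkins_home/maven_repository"
}

# Usage on the NFS server (the master node in this guide):
#   prepare_jenkins_dirs /nfs/data && ls -ld /nfs/data/jenkins_home/maven_repository
```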

Apply the YAML:

```shell
kubectl apply -f jenkins.yaml
```

6.4、访问jenkins

直接在浏览器上输入主机ip:8080对应的nodePort回车即可访问,比如:http://192.168.139.128:31586

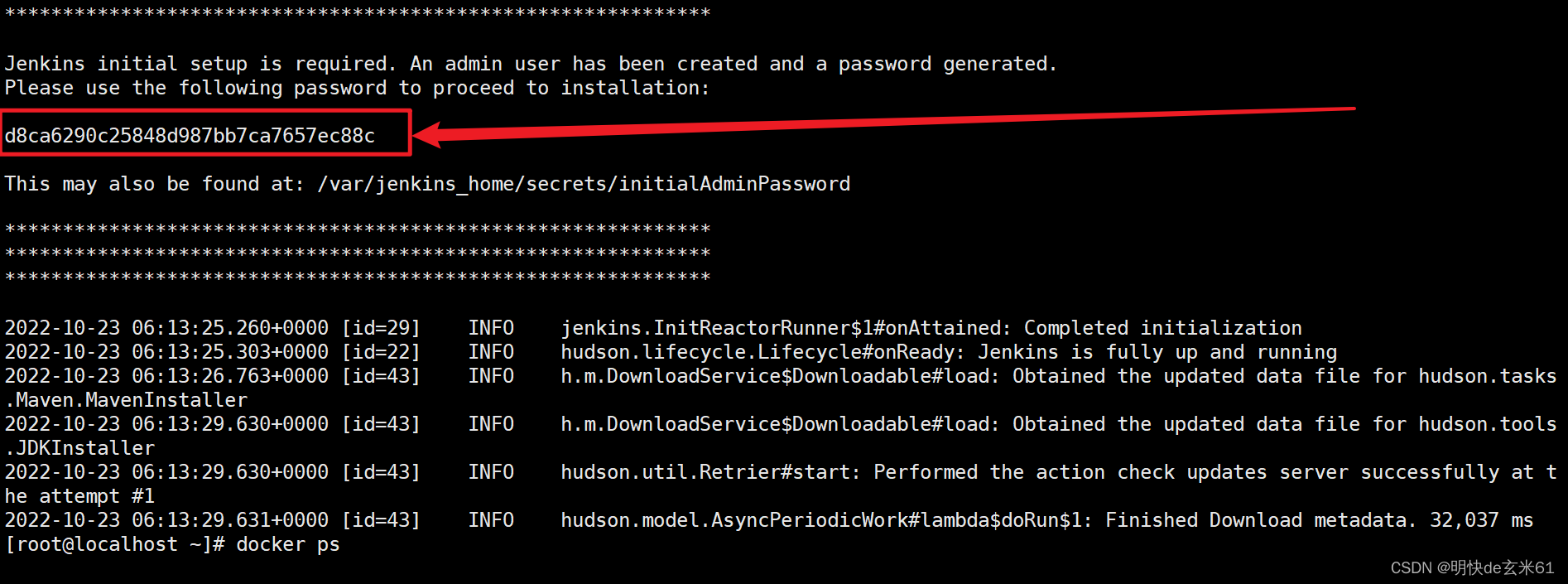

6.5、解锁 Jenkins

首次访问需要解锁,通过docker logs jenkins容器id可以查询到初始管理员密码,如果是通过k8s,可以通过k8s日志去查看密码,如下:

将上图红框框标出来的粘贴到登录页面登录即可,如下:
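You can pull the password out of the log without scrolling. Below is a sketch, assuming the setup wizard prints the password on its own line as 32 lowercase hexadecimal characters, which is how Jenkins generates it. initial_admin_password is a hypothetical name. The same value is also stored in secrets/initialAdminPassword under the Jenkins home, i.e. /nfs/data/jenkins_home/secrets/initialAdminPassword in this setup:

```shell
#!/usr/bin/env bash
# Sketch: print the Jenkins initial admin password found in the startup log on stdin.
# Assumes the password sits on its own line as 32 lowercase hex characters.
# initial_admin_password is a hypothetical helper name.
initial_admin_password() {
  grep -xE '[0-9a-f]{32}' | head -n 1
}

# Usage on the master node (the StatefulSet's only pod is jenkins-0):
#   kubectl -n jenkins logs jenkins-0 | initial_admin_password
```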

6.6、插件下载

直接点击第一个按钮,下载推荐的插件即可

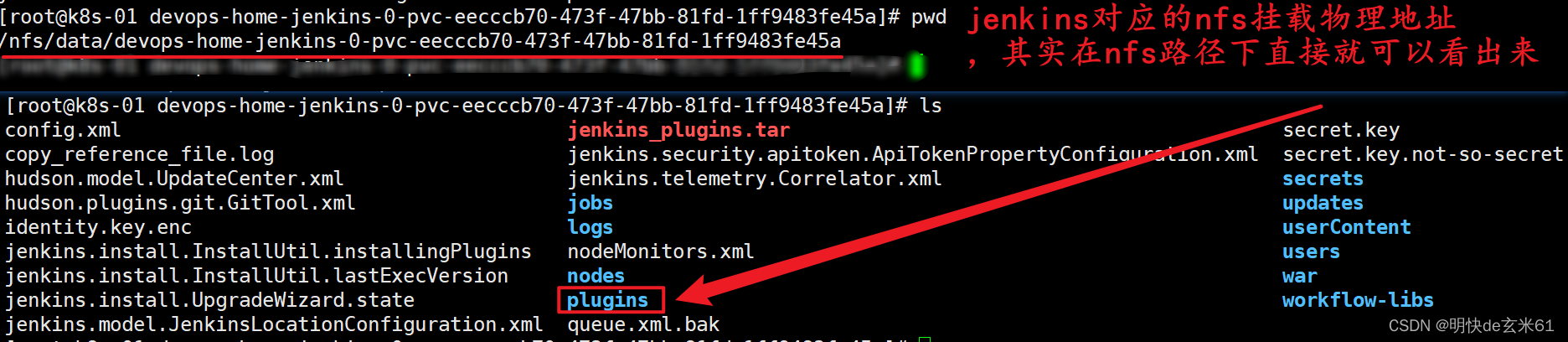

如果多次尝试下载插件都没有成功,大家也可以点击继续,我这边会把相关插件提供给大家,大家只需要把jenkins的nfs存储中/var/jenkins_home/中plugins目录下的内容换成下面目录中的就可以了

其中百度网盘链接如下:

链接:https://pan.baidu.com/s/12Gaa8UwcW6NM6y9TFM0kkQ?pwd=hwdl

提取码:hwdl

6.7、创建第一个管理员用户

建议取一个好记忆的,比如用户名/密码:admin / admin123456

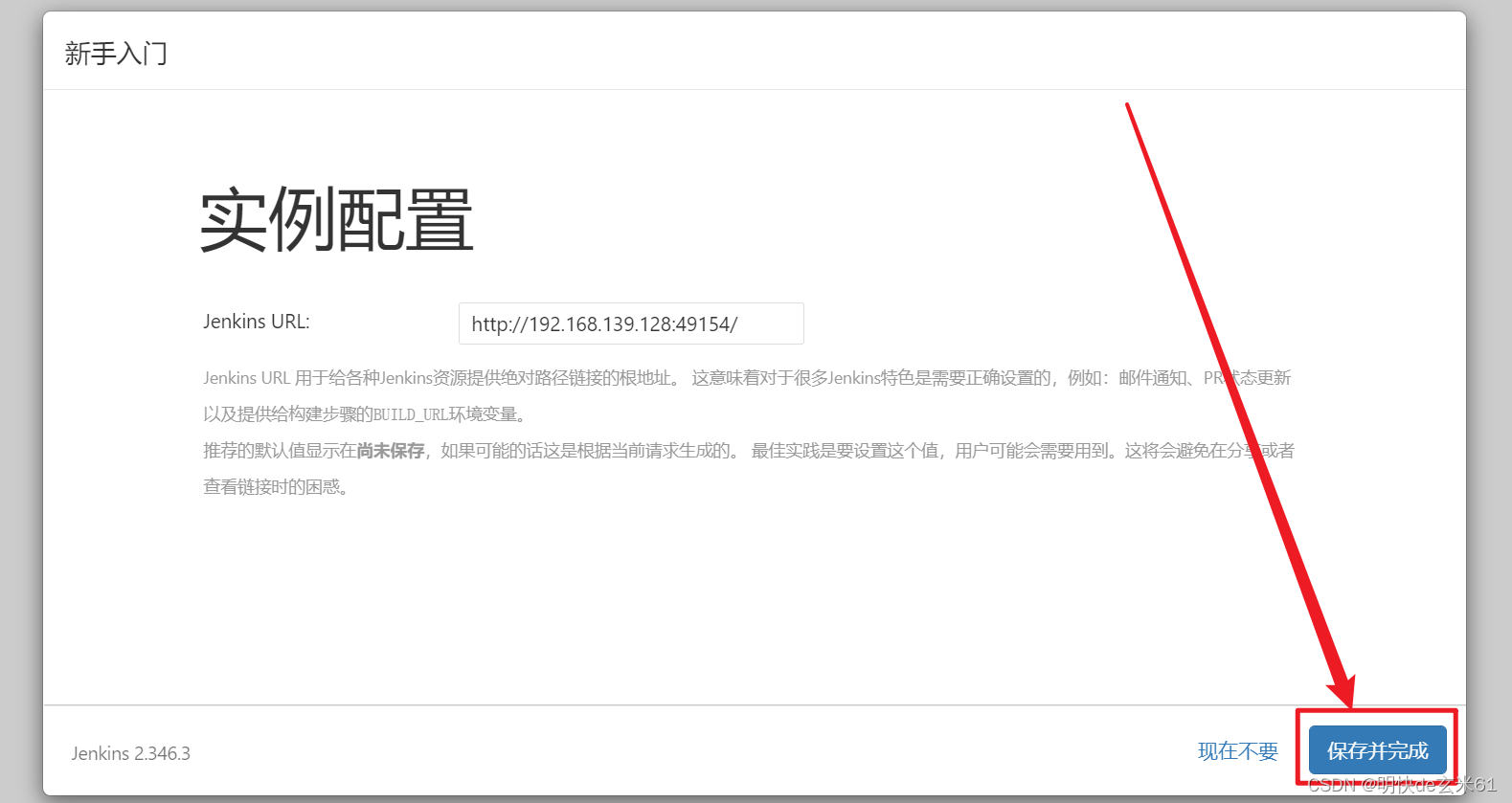

6.8 Instance configuration

Click Save and Finish (see the screenshot):

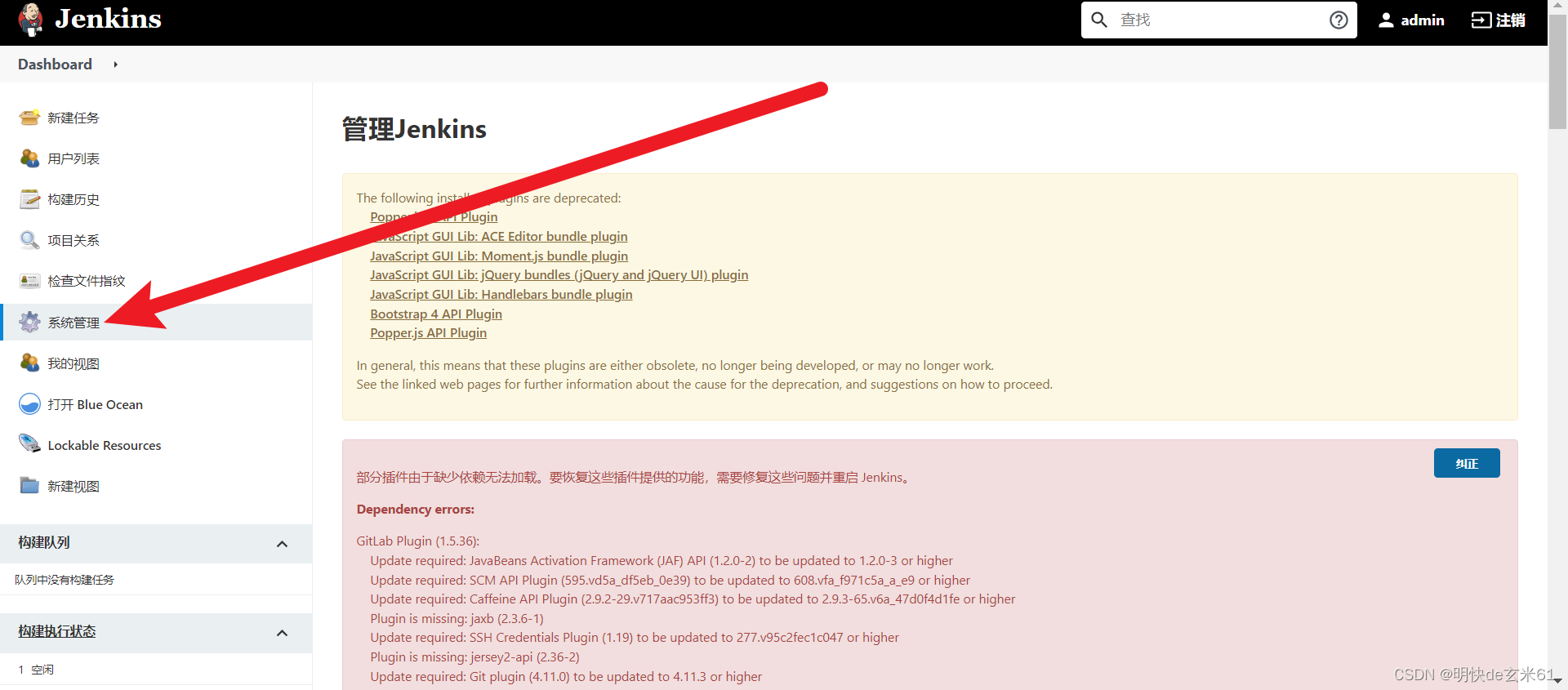

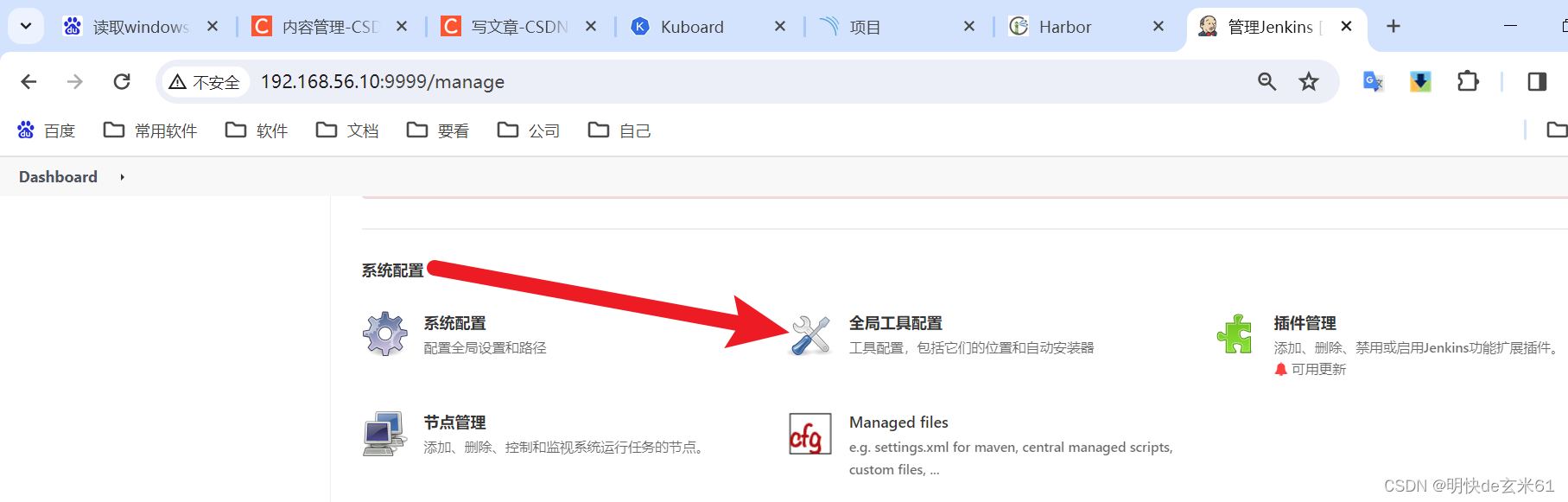

6.9、更改jenkins插件镜像源

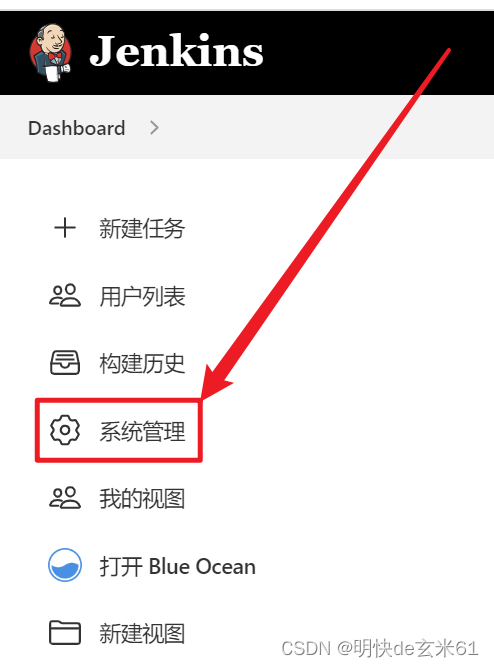

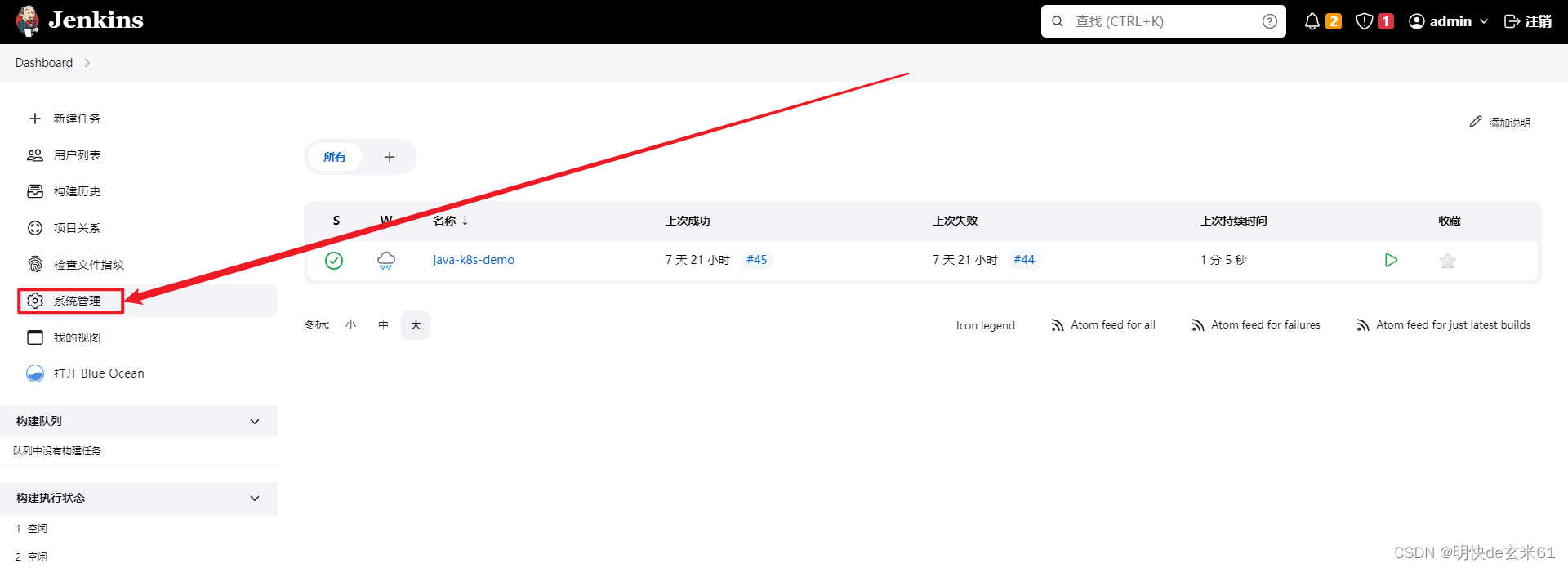

点击系统管理按钮,如下:

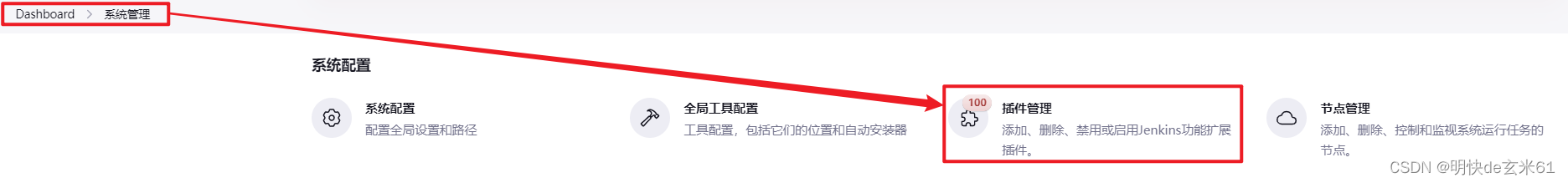

点击插件管理按钮,如下:

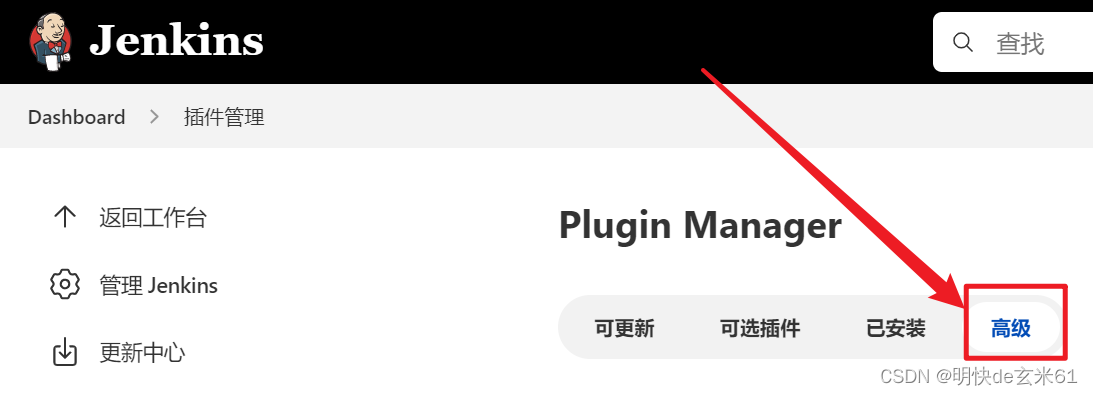

点击高级按钮,如下:

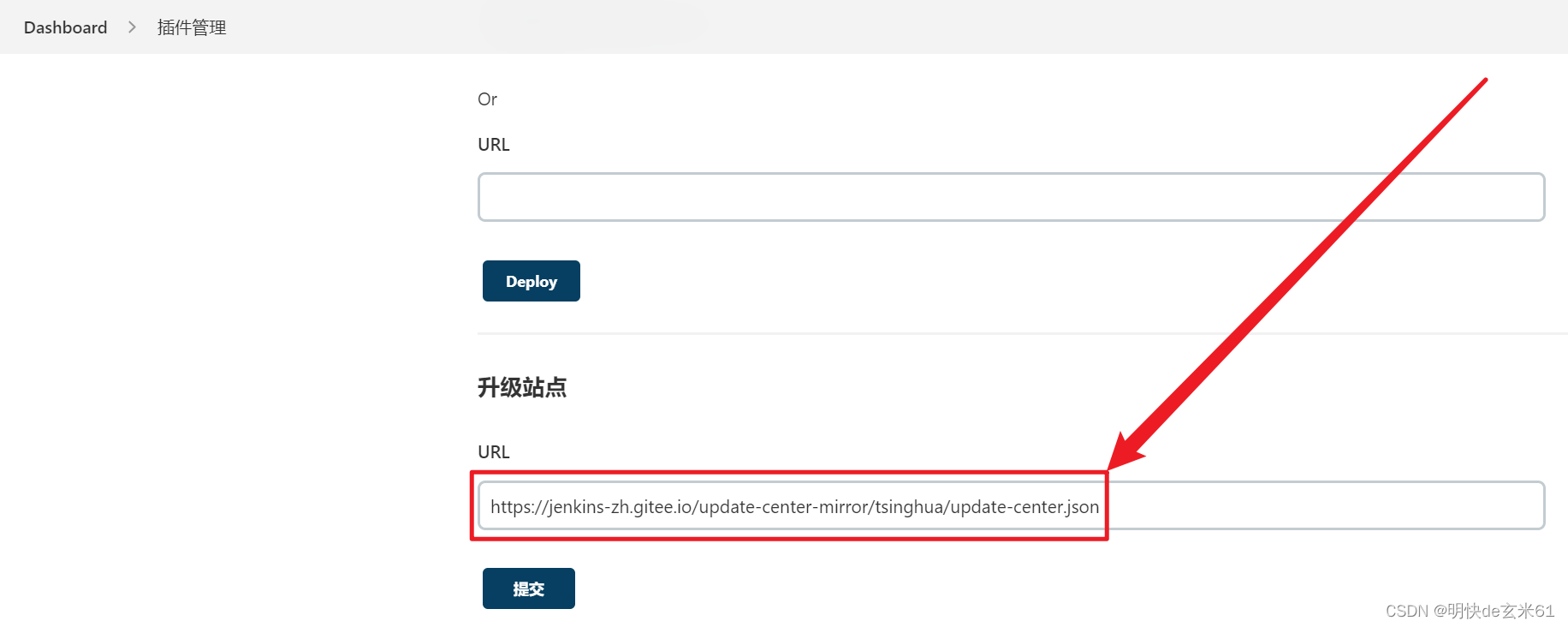

然后把页面滑到最下方,使用http://mirror.xmission.com/jenkins/updates/current/update-center.json替换掉升级站点》URL下文本框中的内容,点击保存按钮,如下:

6.10、使用public over ssh插件完成jenkins构建全过程

6.10.1、安装publish over ssh插件

首先在jenkins系统首页左侧找到系统管理,如下:

然后往下滑动页面,找到插件管理,如下:

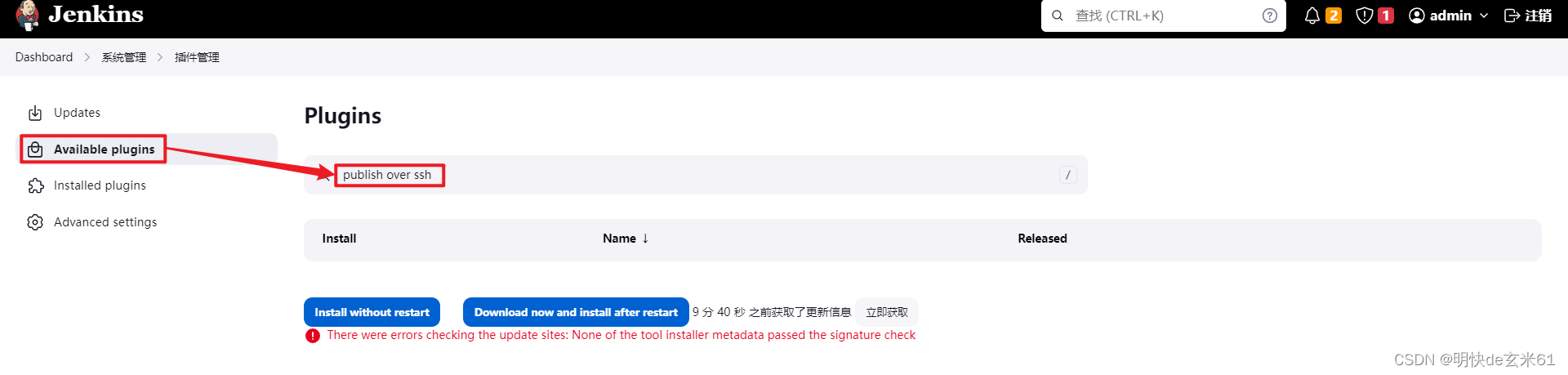

然后在左侧选中Available plugins,然后在右侧搜索框中输入publish over ssh安装即可,我这里是已经安装过了,所以无法搜索出来

6.10.2、配置ssh连接信息

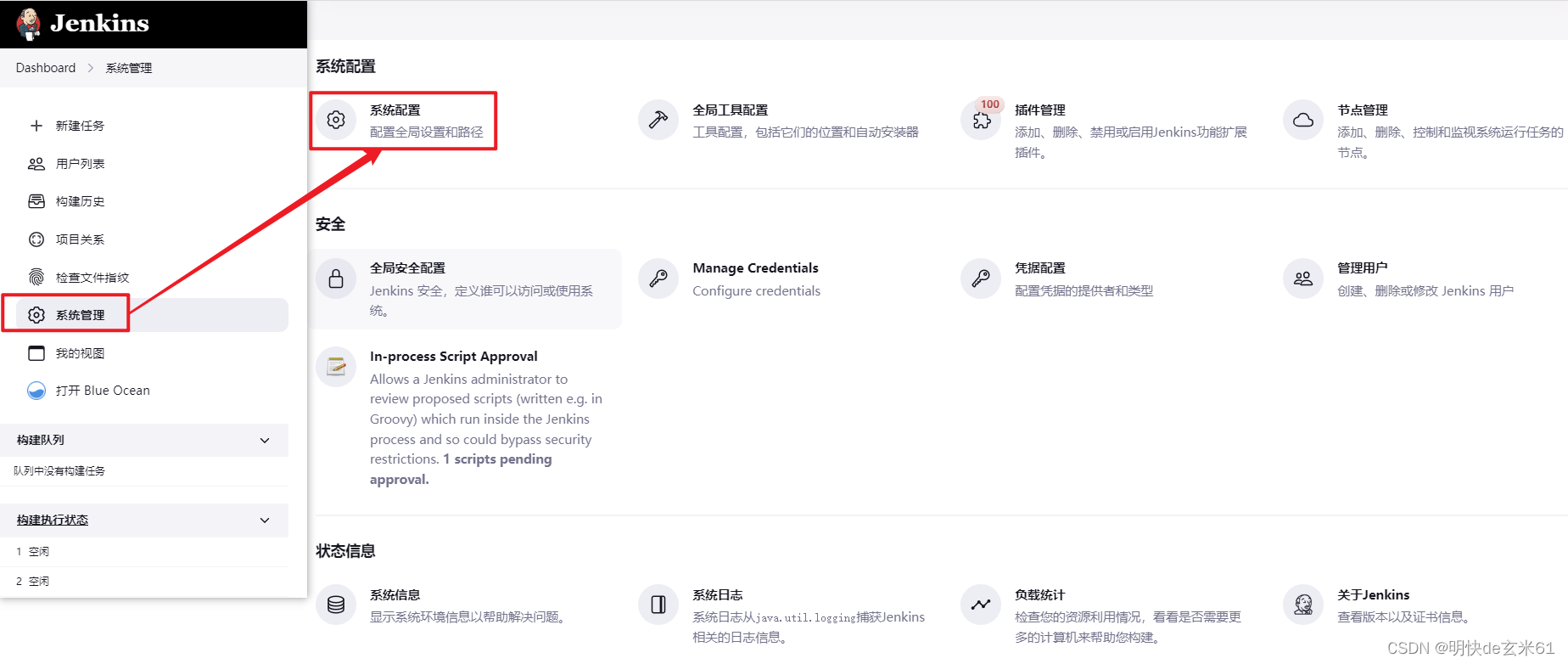

找到系统配置,如下:

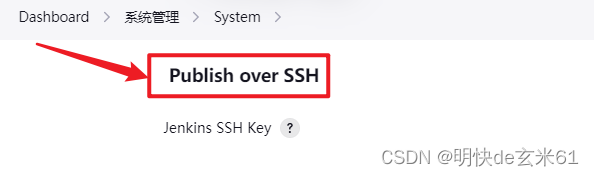

找到Publish over SSH,如下:

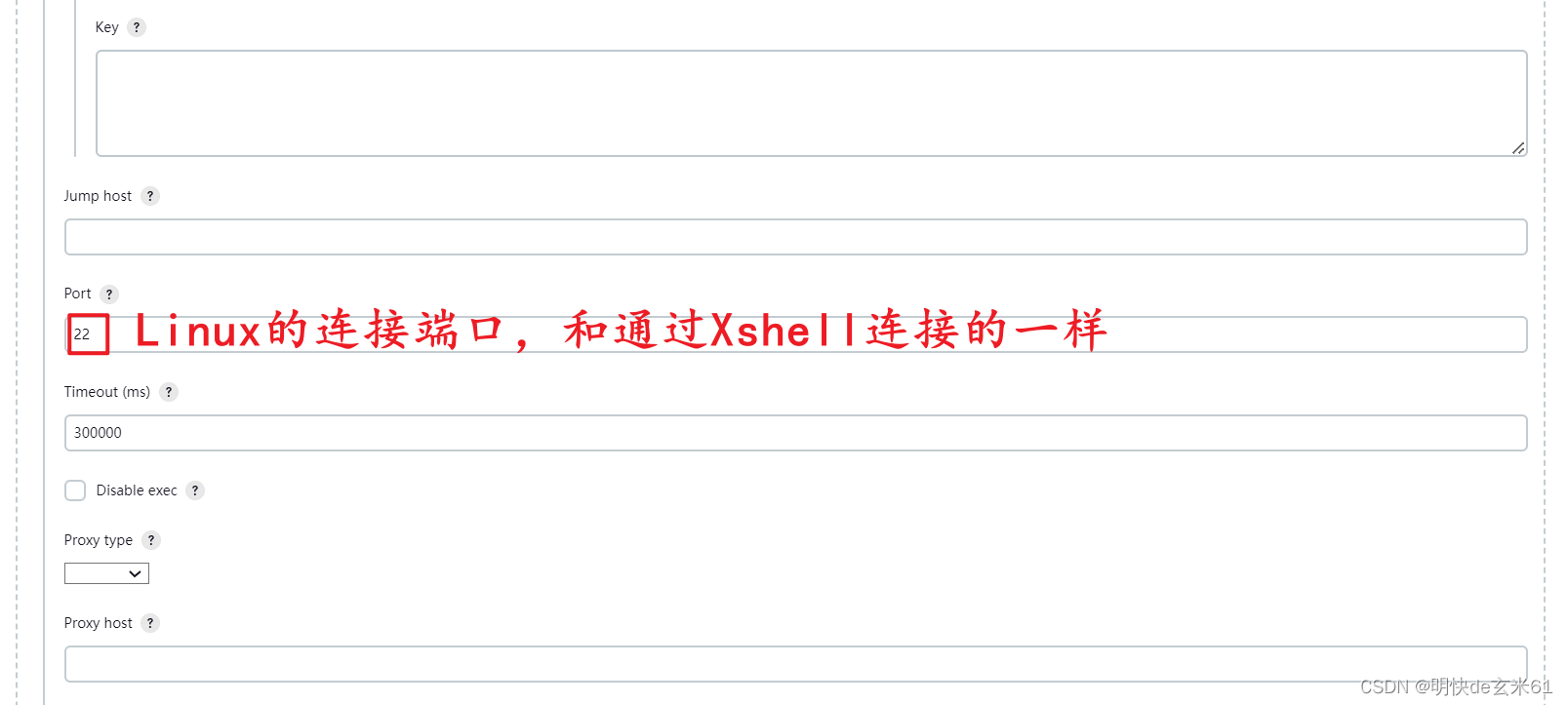

点击新增按钮,在下面需要k8s主节点的连接信息,并且该主节点的nfs目录就是jenkins的/var/jenkins_home所在的nfs目录,如下:

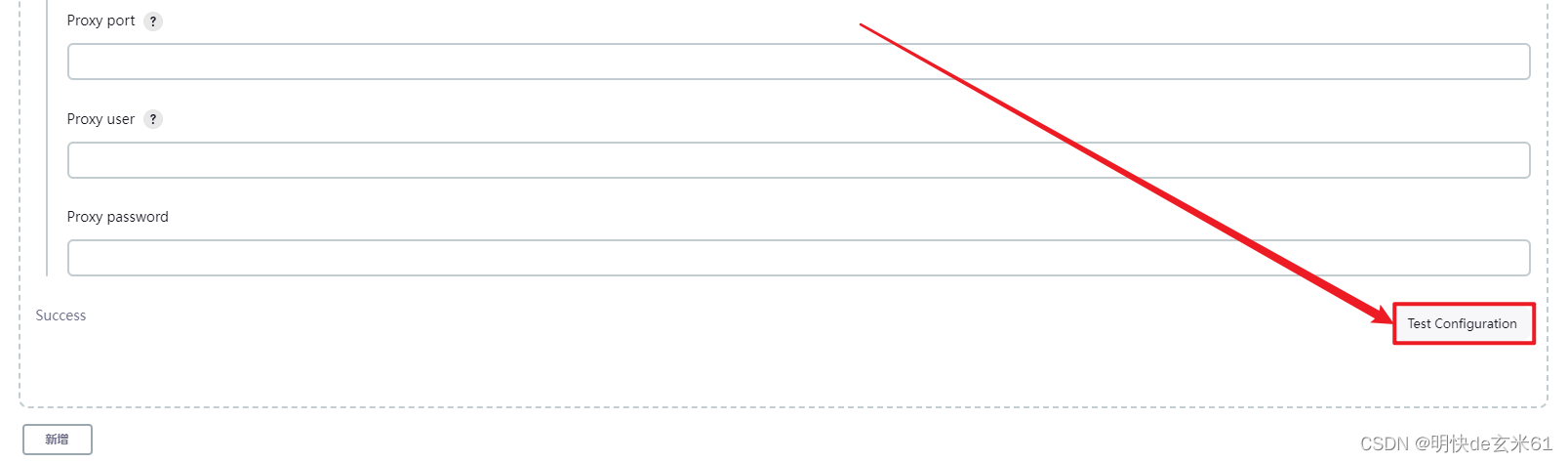

连接信息添加完成之后,点击Test Configuration可以进行连接测试

6.10.3、把相关项目奉上

链接:https://pan.baidu.com/s/1YhDn1dAuq10LAKK2w_Z39A?pwd=k7m5

提取码:k7m5

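6.10.4 A first look at the Jenkinsfile

What to prepare in advance:

- Create the corresponding project in Harbor

The steps in the Jenkinsfile:

- Pull the code from Gitee into the workspace with Jenkins
- Build the jar with the maven image
- Build the image with docker build
- Log in to Harbor
- Push the image to Harbor
- Call k8s on the master node through Publish over SSH to apply YamlStatefulSet.yaml
- The k8s master node will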

pipeline {

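// Run the pipeline or any of its stages on any available agent.

agent any

// Environment variables

environment{

// Where the Gitee code is checked out; later steps (e.g. inside the maven container) use this variable to return here

WS = "${WORKSPACE}"

// Service name, i.e. the artifactId (the A in the POM's GAV coordinates)

SVN_FOLD = readMavenPom().getArtifactId()

// Name configured under Jenkins > Configure System > Publish over SSH > Name; mine is master

SSH_PATH = "master"

// Gitee branch

SVN_TYPE = "master"

// Image tag (the project version from the POM)

image_tag = readMavenPom().getVersion()

// Harbor registry IP and port

ip = "192.168.139.133:30002"

// Microservice port, taken from application.yaml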

port = "8090"

}

options {

// Keep at most 6 builds in the history

buildDiscarder(logRotator(numToKeepStr: '6'))

}

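// Build parameters: deploy a new version or roll back

parameters {

//choice choices: ['deploy', 'rollback'], name: 'status'

choice(name: 'mode', choices: ['deploy','rollback'], description: '''deploy: release a new version; rollback: roll back to an earlier version''')

string(name: 'version_id', defaultValue: '0', description: 'Used for rollback only: enter the build ID to roll back to. Only the last five builds can be rolled back to. Ignore this parameter when releasing a new version.')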

//string defaultValue: '0', name: 'version'

}

stages {

// Build

stage('Maven Build') {

// Maven agent (pulls the maven image straight from Docker Hub)

agent {

docker {

image 'maven:3-alpine'

}

}

steps {

echo 'Compiling...'

sh 'pwd && ls -alh'

sh 'mvn --version'

echo "Current workspace: ${WORKSPACE}"

echo "Saved workspace: ${WS}"

// sh './gradlew build'

// Run the build; settings.xml comes from the ConfigMap defined in the Jenkins YAML

sh 'cd ${WS} && mvn clean package -s "/var/jenkins_home/maven_config/settings.xml" -Dmaven.test.skip=true && pwd && ls -alh'

sh 'cd ${WS}/target && ls -l'

sh 'echo ${SVN_FOLD}'

}

}

// 增加回滚步骤

stage('回滚') {

when {

environment name: 'mode',value: 'rollback'

}

steps {

script {

if(env.BRANCH_NAME=='master'){

try {

sh '''

if [ ${version_id} == "0" ];then

[ $? != 0 ] && echo "请注意,在执行回滚时出错,故而退出构建,可立即联系运维同学处理!" && exit 1

echo "=============="

echo "项目已回滚失败,version_id不能为0!"

echo "=============="

exit 1

else

echo "选择回滚的版本是:${version_id},将回滚到 ${version_id} 的制品,回滚即将进行..."

cp ${JENKINS_HOME}/jobs/${SVN_FOLD}/branches/${SVN_TYPE}/builds/${version_id}/archive/target/${SVN_FOLD}.jar ${WORKSPACE}/target/

[ $? != 0 ] && echo "请注意,在执行回滚时出错,故而退出构建,可立即联系运维同学处理!" && exit 1

echo "项目回滚到 ${version_id} 完成!"

fi

'''

} catch(err) {

echo "${err}"

// 阶段终止

currentBuild.result = 'FAILURE'

}

}

}

}

}

stage('Docker Build') {

steps {

echo 'Building'

sh "echo 当前分支 : ${env.BRANCH_NAME}"

// 分支构建

script{

// if(env.BRANCH_NAME=='master'){

// k8s分支

// 以下操作是登录harbor仓库、构建docker镜像、推送镜像到harbor仓库

echo "start to build '${SVN_FOLD}-${SVN_TYPE}' on test ..."

sh '''

#docker rmi -f $(docker images | grep "none" | awk '{print $3}')

CID=$(docker ps -a | grep "${SVN_FOLD}""-${SVN_TYPE}" | awk '{print $1}')

#IID=$(docker images | grep "${SVN_FOLD}""-${SVN_TYPE}" | awk '{print $3}')

IID=$(docker images | grep "none" | awk '{print $3}')

cp "$WORKSPACE"/target/*.jar "$WORKSPACE"

if [ -n "$IID" ]; then

echo "存在'${SVN_FOLD}-${SVN_TYPE}'镜像,IID='$IID'"

cd "$WORKSPACE"

##构建镜像到远程仓库

docker login "${ip}" -u admin -p admin123456

#docker tag "${SVN_FOLD}":"${image_tag}" "${ip}"/test/"${SVN_FOLD}":"${image_tag}"

docker build -t "${ip}"/test/"${SVN_FOLD}""-${SVN_TYPE}":"${image_tag}" .

docker push "${ip}"/test/"${SVN_FOLD}""-${SVN_TYPE}":"${image_tag}"

rm -rf "$WORKSPACE"/*.jar

else

echo "不存在'${SVN_FOLD}-${SVN_TYPE}'镜像,开始构建镜像"

cd "$WORKSPACE"

##构建镜像到远程仓库

docker login "${ip}" -u admin -p admin123456

#docker tag "${SVN_FOLD}":"${image_tag}" "${ip}"/test/"${SVN_FOLD}":"${image_tag}"

docker build -t "${ip}"/test/"${SVN_FOLD}""-${SVN_TYPE}":"${image_tag}" .

docker push "${ip}"/test/"${SVN_FOLD}""-${SVN_TYPE}":"${image_tag}"

rm -rf "$WORKSPACE"/"${SVN_FOLD}".jar

fi

'''

echo "Build '${SVN_FOLD}-${SVN_TYPE}' success on k8s ..."

// }

}

}

}

// 部署

stage('Deploy') {

steps {

echo 'Deploying'

// 分支部署

script{

// if(env.BRANCH_NAME=='master'){

//k8s部署测试

echo "k8s部署测试"

echo "start to k8s '${SVN_FOLD}-${SVN_TYPE}' on test ..."

//调用Publish Over SSH插件,执行YamlStatefulSet.yaml脚本

sshPublisher(publishers: [sshPublisherDesc(configName: "${SSH_PATH}", transfers: [sshTransfer(cleanRemote: false, excludes: '', execCommand: """#!/bin/bash

# 使用k8s构建,这里需要远程执行 k8s 主节点服务器上的命令,需要先配置 ssh 免密码登录

kubectl delete -f /nfs/data/jenkins_home/workspace/${SVN_FOLD}/YamlStatefulSet.yaml

kubectl apply -f /nfs/data/jenkins_home/workspace/${SVN_FOLD}/YamlStatefulSet.yaml

exit 0""", execTimeout: 120000, flatten: false, makeEmptyDirs: false, noDefaultExcludes: false, patternSeparator: '[, ]+', remoteDirectory: '', remoteDirectorySDF: false, removePrefix: '', sourceFiles: '')], usePromotionTimestamp: false, useWorkspaceInPromotion: false, verbose: true)]

)

// }

}

}

}

}

// 归档

post {

always {

echo 'Archive artifacts'

archiveArtifacts artifacts: "**/target/*.jar", excludes: "**/target"

}

}

}
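The rollback stage only works if the jar archived by an earlier build is still on disk. The helper below is a minimal sketch that rebuilds the path that stage copies from. It assumes, as the stage's cp command implies, a multibranch pipeline job named after the Maven artifactId (builds live under jobs/<job>/branches/<branch>/builds/<id>/) and a pom whose finalName equals the artifactId; the function name rollback_jar_path is hypothetical.

```shell
# Hypothetical helper: print the archived jar path of Jenkins build <version_id>.
# Assumes a multibranch pipeline job named after the Maven artifactId (SVN_FOLD)
# and a jar named <artifactId>.jar, mirroring the rollback stage's cp command.
rollback_jar_path() {
    jenkins_home=$1   # JENKINS_HOME, e.g. /var/jenkins_home
    job=$2            # SVN_FOLD
    branch=$3         # SVN_TYPE
    version_id=$4     # build number passed as the version_id parameter

    # 0 is the parameter's default and means "not set"; reject it and non-numbers.
    case $version_id in
        '' | 0 | *[!0-9]*)
            echo "invalid version_id: '$version_id'" >&2
            return 1
            ;;
    esac

    printf '%s/jobs/%s/branches/%s/builds/%s/archive/target/%s.jar\n' \
        "$jenkins_home" "$job" "$branch" "$version_id" "$job"
}

# Example: the jar archived by build 12 of the master branch
rollback_jar_path /var/jenkins_home java-k8s-demo master 12
# prints /var/jenkins_home/jobs/java-k8s-demo/branches/master/builds/12/archive/target/java-k8s-demo.jar
```

Because buildDiscarder keeps only 6 builds, a version_id older than that has no archive left to copy.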

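6.10.5、Next, YamlStatefulSet.yaml

The YAML pulls the image from Harbor and authenticates with a pre-created registry secret (imagePullSecrets: docker-login) instead of credentials written into the file. Once the k8s master node applies it, the service is deployed as a StatefulSet and a NodePort Service exposes port 8090 for browser access.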

# statefulSet yaml template
---
apiVersion: v1
kind: Service
metadata:
  name: java-k8s-demo
  namespace: test
  labels:
    app: java-k8s-demo
spec:
  type: NodePort
  ports:
    - port: 8090
      targetPort: 8090
      # The default NodePort range is 30000-32767; 27106 only works if the API server's --service-node-port-range was widened
      nodePort: 27106
      name: rest-http
  selector:
    app: java-k8s-demo
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: java-k8s-demo
  namespace: test
spec:
  serviceName: java-k8s-demo
  replicas: 1
  selector:
    matchLabels:
      app: java-k8s-demo
  template:
    metadata:
      labels:
        app: java-k8s-demo
    spec:
      imagePullSecrets:
        - name: docker-login
      containers:
        - name: java-k8s-demo
          image: 192.168.139.133:30002/test/java-k8s-demo-master:0.0.1-SNAPSHOT
          imagePullPolicy: Always
          ports:
            - containerPort: 8090
          resources:
            requests:
              cpu: 100m
              memory: 100Mi
  podManagementPolicy: Parallel
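Two values in this YAML depend on the rest of the setup. The image line has to match what the Docker Build stage pushes, `${ip}/test/${SVN_FOLD}-${SVN_TYPE}:${image_tag}`, so a new pom version means editing this file too. And `nodePort: 27106` lies below the default NodePort range of 30000-32767, so the API server rejects it unless the cluster's `--service-node-port-range` was widened. A minimal sketch of both checks; the function names are hypothetical, and the artifactId java-k8s-demo and version 0.0.1-SNAPSHOT are inferred from the image line above.

```shell
# Hypothetical helper: rebuild the image reference pushed by the Docker Build stage.
# $1 = ip (Harbor host:port), $2 = SVN_FOLD, $3 = SVN_TYPE, $4 = image_tag
image_ref() {
    printf '%s/test/%s-%s:%s\n' "$1" "$2" "$3" "$4"
}

# Hypothetical helper: is a nodePort inside the allowed range?
# Defaults to kube-apiserver's default --service-node-port-range (30000-32767).
in_nodeport_range() {
    lo=${2:-30000}
    hi=${3:-32767}
    [ "$1" -ge "$lo" ] && [ "$1" -le "$hi" ]
}

image_ref 192.168.139.133:30002 java-k8s-demo master 0.0.1-SNAPSHOT
# prints 192.168.139.133:30002/test/java-k8s-demo-master:0.0.1-SNAPSHOT
in_nodeport_range 27106 || echo "27106 is outside the default NodePort range"
```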

6.10.6、Then the Dockerfile

Besides copying the jar and defining the start command, it exposes port 8090 and sets the time zone.

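FROM openjdk:8-jre-alpine
# Set the time zone (on Alpine the zoneinfo files come from the tzdata package; if the base image lacks it, run apk add --no-cache tzdata first)
RUN ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && echo 'Asia/Shanghai' >/etc/timezone
# Copy the jar from the build context (the Jenkinsfile first copies target/*.jar into the workspace root)
COPY /*.jar app.jar
# Parameters
ENV JAVA_OPTS=""
ENV PARAMS=""
# Exposed port
EXPOSE 8090
# Start command
ENTRYPOINT [ "sh", "-c", "java -Djava.security.egd=file:/dev/./urandom $JAVA_OPTS -jar /app.jar $PARAMS" ]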
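The ENTRYPOINT goes through `sh -c` so that `$JAVA_OPTS` and `$PARAMS` are expanded when the container starts; an exec-form ENTRYPOINT without a shell would pass them through literally. The sketch below shows the command line the shell assembles, with hypothetical option values and `echo` standing in for actually starting java; the function name render_cmd is hypothetical.

```shell
# Hypothetical helper: show what the ENTRYPOINT's sh -c turns into.
# $1 = JAVA_OPTS, $2 = PARAMS; echo replaces java so nothing is started.
render_cmd() {
    JAVA_OPTS=$1 PARAMS=$2 \
        sh -c 'echo java -Djava.security.egd=file:/dev/./urandom $JAVA_OPTS -jar /app.jar $PARAMS'
}

render_cmd "-Xms128m -Xmx256m" "--spring.profiles.active=test"
# prints java -Djava.security.egd=file:/dev/./urandom -Xms128m -Xmx256m -jar /app.jar --spring.profiles.active=test
render_cmd "" ""
# prints java -Djava.security.egd=file:/dev/./urandom -jar /app.jar
```

Empty values simply disappear from the command line, which is why `ENV JAVA_OPTS=""` is a safe default; in Kubernetes the real values can be supplied through env entries on the container.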

6.10.7、Finally, application.properties

The port 8090 used in the Dockerfile, the Jenkinsfile, and YamlStatefulSet.yaml comes from this file:

server.port=8090

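6.10.8、Remaining steps

Create a new Pipeline job in Jenkins and enter the Gitee project URL as the Git repository (for details, see my notes 尚硅谷云原生学习笔记(1-75集) » 62、jenkins文件的结构). The pipeline then runs on its own and finishes deploying the project.

6.11、Another approach: a Jenkins container with Maven built in

6.11.1、Build a Jenkins image that includes Maven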

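# Based on the official image
FROM jenkins/jenkins:2.319.3
# Download the tar.gz package first; see https://blog.csdn.net/qq_42449963/article/details/135489944
# ADD extracts it into /opt; the Maven tool configured in Jenkins later points here too
ADD ./apache-maven-3.5.0-bin.tar.gz /opt/
# Environment variables
ENV MAVEN_HOME=/opt/apache-maven-3.5.0
# Put Maven on the PATH (the Jenkins image already ships with Java)
ENV PATH=$JAVA_HOME/bin:$MAVEN_HOME/bin:$PATH
# Switch to root for the system changes below
USER root
# Give the jenkins user password-less sudo (only matters if sudo is installed in the image)
RUN echo "jenkins ALL=NOPASSWD: ALL" >> /etc/sudoers
# Disable git TLS verification system-wide; --global here would only affect root, not the jenkins user
RUN git config --system http.sslVerify false
USER jenkins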

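6.11.2、Write jenkins.yaml

Notes:

- Change the namespace to the one you want.

- This Jenkins YAML is meant to run on one fixed k8s node: the Jenkins home directory and the Maven repository take up a lot of space and live in hostPath directories on that node's disk, so pin Jenkins to one node (for example with a nodeSelector).

- Do not change the local repository path in the mounted Maven settings file; /root/.m2/repository works. If you do change it, also change the path where the container stores the Maven repository.

- Extension: to let Jenkins run on any k8s node, put the Jenkins directory and the Maven repository mount on shared storage, such as NFS.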

kind: Service
apiVersion: v1
metadata:
  name: jenkins
  namespace: devops
  labels:
    name: jenkins
spec:
  ports:
    - name: jenkins
      protocol: TCP
      port: 8080
      targetPort: 8080
      nodePort: 30008
  selector:
    name: jenkins
  type: NodePort
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: jenkins
  namespace: devops
  labels:
    app: jenkins
    name: jenkins
spec:
  replicas: 1
  selector:
    # Selects the Pods this Deployment manages; it does not choose a node
    matchLabels:
      app: jenkins
      name: jenkins
  template:
    metadata:
      labels:
        app: jenkins
        name: jenkins
    spec:
      # To pin Jenkins to one node, add a nodeSelector, e.g. (replace <node-name>):
      # nodeSelector:
      #   kubernetes.io/hostname: <node-name>
      imagePullSecrets:
        - name: docker-login
      containers:
        - name: jenkins
          image: jenkins/jenkins_maven:2.319.3
          ports:
            - containerPort: 8080
              protocol: TCP
          resources:
            limits:
              cpu: 2
              memory: 4Gi
            requests:
              cpu: 1
              memory: 1Gi
          volumeMounts:
            - name: jenkins-home
              mountPath: /var/jenkins_home
            - name: docker
              # docker.sock may also be at /run/docker.sock; switch to it if docker does not work
              mountPath: /var/run/docker.sock
            - name: docker-home
              mountPath: /usr/bin/docker
            - name: mvn-setting
              mountPath: /opt/apache-maven-3.5.0/conf/settings.xml
              subPath: settings.xml
            - name: repository
              mountPath: /root/.m2/repository
      volumes:
        - name: jenkins-home
          hostPath:
            path: /opt/jenkins_home/
        # docker.sock may also be at /run/docker.sock; switch to it if docker does not work
        - name: docker
          hostPath:
            path: /var/run/docker.sock
            type: ""
        # Check where docker is installed on the host; some versions use /usr/local/bin/docker
        - name: docker-home
          hostPath:
            path: /usr/bin/docker
            type: ""
        # Maven settings; keep the original local repository path, otherwise the repository mount has no effect
        - name: mvn-setting
          configMap:
            name: settings.xml
            items:
              - key: settings.xml
                path: settings.xml
        # Host path for the Maven repository
        - name: repository
          hostPath:
            path: /opt/repository
            type: ""
---
# Note: the Deployment above keeps jenkins_home on a hostPath, so this PVC stays unused unless the jenkins-home volume is switched to it
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: jenkins
  namespace: devops
spec:
  # NFS storage class name (use your own)
  storageClassName: "h145-nfs-storage"
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 10Gi
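Both YamlStatefulSet.yaml (6.10.5) and this Deployment reference an image pull secret named docker-login, which must already exist in each namespace that uses it. The usual way to create it is `kubectl create secret docker-registry docker-login --docker-server=192.168.139.133:30002 --docker-username=admin --docker-password=<password> -n <namespace>`. The sketch below only shows what ends up inside such a secret: the `.dockerconfigjson` key of a `kubernetes.io/dockerconfigjson` Secret, in the format docker login writes to ~/.docker/config.json when no credential helper is used. The function name is hypothetical.

```shell
# Hypothetical helper: build the .dockerconfigjson payload for one registry.
# "auth" is base64("<user>:<password>").
dockerconfigjson() {
    server=$1 user=$2 pass=$3
    auth=$(printf '%s:%s' "$user" "$pass" | base64 | tr -d '\n')
    printf '{"auths":{"%s":{"username":"%s","password":"%s","auth":"%s"}}}\n' \
        "$server" "$user" "$pass" "$auth"
}

dockerconfigjson 192.168.139.133:30002 admin admin123456

# The Secret stores that JSON base64-encoded under data[".dockerconfigjson"]:
dockerconfigjson 192.168.139.133:30002 admin admin123456 | tr -d '\n' | base64 | tr -d '\n'
echo
```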

7、Install Kuboard with Docker

7.1、Installation docs

7.2、Install command

docker run -d \
  --restart=unless-stopped \
  --name=kuboard \
  -p 30080:80/tcp \
  -p 10081:10081/udp \
  -p 10081:10081/tcp \
  -e KUBOARD_ENDPOINT="http://<VM IP>:30080" \
  -e KUBOARD_AGENT_SERVER_UDP_PORT="10081" \
  -e KUBOARD_AGENT_SERVER_TCP_PORT="10081" \
  -e KUBOARD_ADMIN_DERAULT_PASSWORD="<password; the user name is admin; may not take effect>" \
  -v /root/kuboard-data:/data \
  eipwork/kuboard:v3

For example:

docker run -d \
  --restart=unless-stopped \
  --name=kuboard \
  -p 30080:80/tcp \
  -p 10081:10081/udp \
  -p 10081:10081/tcp \
  -e KUBOARD_ENDPOINT="http://192.168.139.133:30080" \
  -e KUBOARD_AGENT_SERVER_UDP_PORT="10081" \
  -e KUBOARD_AGENT_SERVER_TCP_PORT="10081" \
  -e KUBOARD_ADMIN_DERAULT_PASSWORD="admin123456" \
  -v /root/kuboard-data:/data \
  eipwork/kuboard:v3

7.3、Log in to Kuboard

- Default user name: admin

- Default password: Kuboard123

7.4、Add the k8s cluster to Kuboard

Click the Add Cluster button:

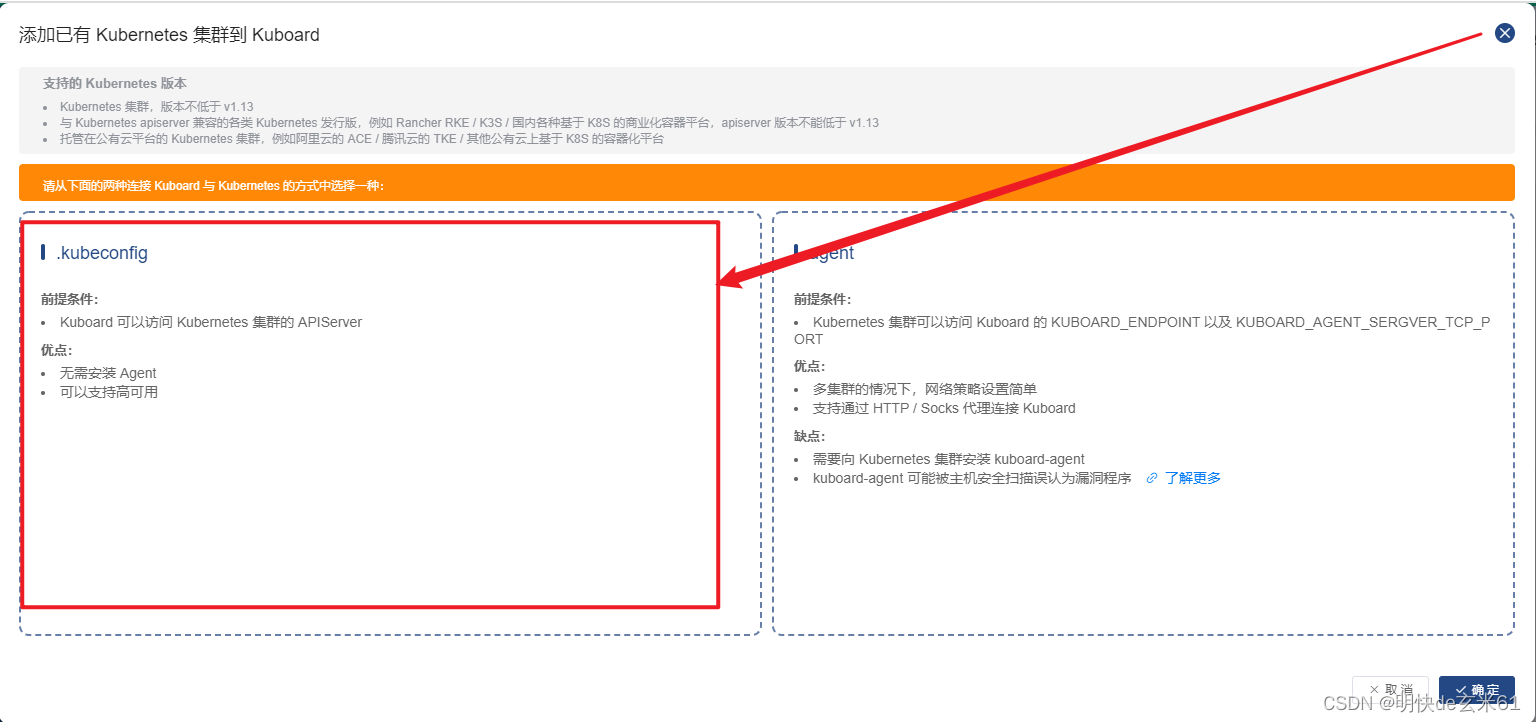

Choose the kubeconfig method on the left:

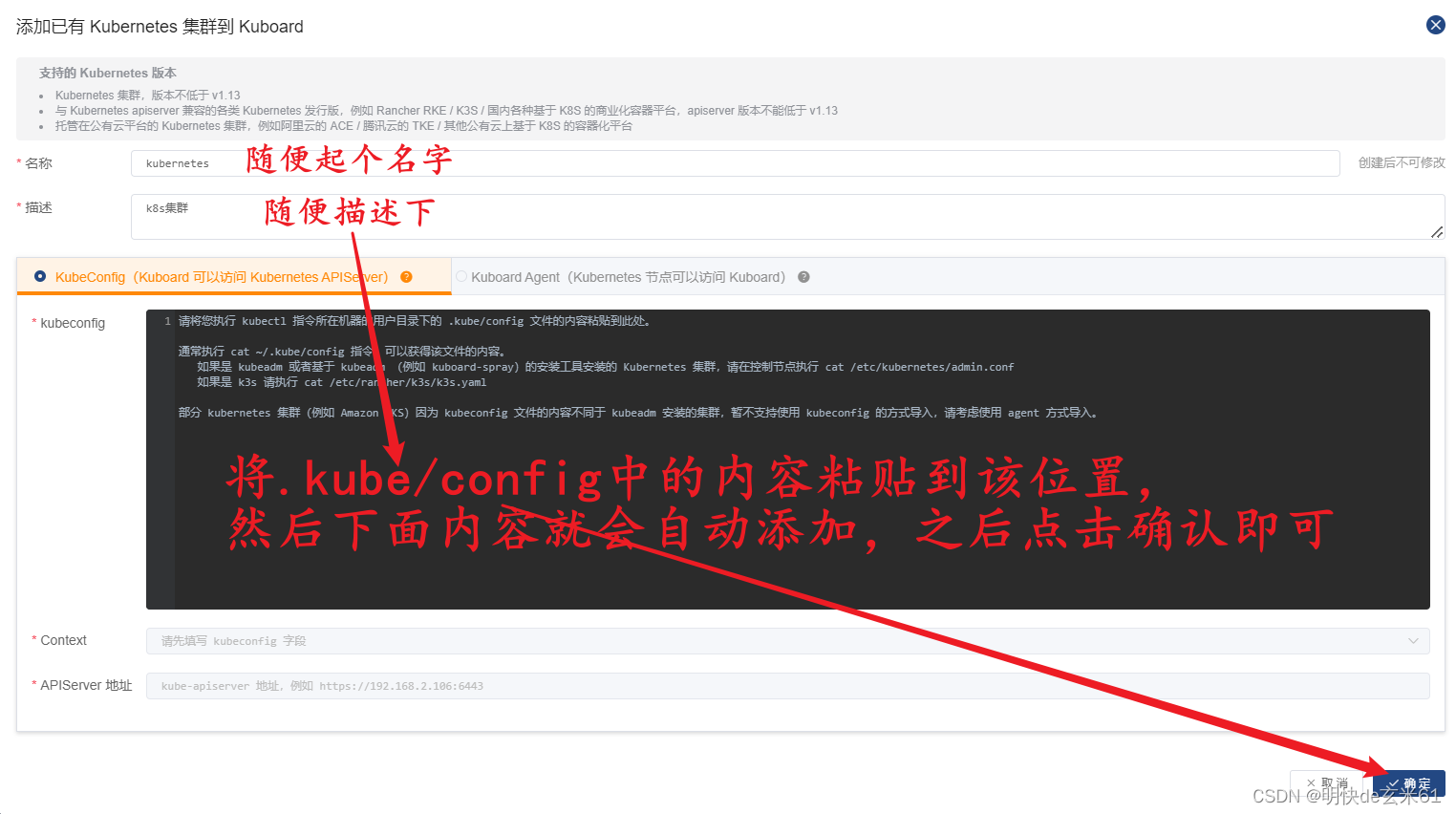

On the k8s master node, run cat ~/.kube/config, copy the file content, and then do the following:

The final result:

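8、Install GitLab on k8s

Notes:

Install it with kubectl apply -f XXX.yaml. After installation GitLab takes a long time before it can be accessed, so as long as the logs show no errors, be patient. Deleting the GitLab container can also take a long time; avoid force-deleting it so that garbage collection is not affected.

Reference YAML: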

apiVersion: v1
kind: Namespace
metadata:
  name: gitlab
spec: {}
---
apiVersion: v1
kind: Service
metadata:
  name: gitlab
  namespace: gitlab
spec:
  type: NodePort
  ports:
    # Ports exposed by the Service
    - port: 443
      targetPort: 443
      name: gitlab443
    - port: 80
      targetPort: 80
      nodePort: 31320
      name: gitlab80
    - port: 22
      targetPort: 22
      name: gitlab22
  selector:
    app: gitlab
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: gitlab
  namespace: gitlab
spec:
  selector:
    matchLabels:
      app: gitlab
  template:
    metadata:
      namespace: gitlab
      labels:
        app: gitlab
    spec:
      containers:
        # Application image
        - image: gitlab/gitlab-ce
          name: gitlab
          # Container ports
          ports:
            - containerPort: 443
              name: gitlab443
            - containerPort: 80
              name: gitlab80
            - containerPort: 22
              name: gitlab22
          volumeMounts:
            # GitLab persistence
            - name: gitlab-config
              mountPath: /etc/gitlab
            - name: gitlab-logs
              mountPath: /var/log/gitlab
            - name: gitlab-data
              mountPath: /var/opt/gitlab
      volumes:
        # Backed by NFS storage
        - name: gitlab-config
          persistentVolumeClaim: # use a PVC
            claimName: gitlab-config # PVC name
        - name: gitlab-logs
          persistentVolumeClaim: # use a PVC
            claimName: gitlab-logs # PVC name
        - name: gitlab-data
          persistentVolumeClaim: # use a PVC
            claimName: gitlab-data # PVC name
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: gitlab-config
  namespace: gitlab
  labels:
    app: gitlab-config
spec:
  storageClassName: managed-nfs-storage ## Storage class of the NFS dynamic provisioner; use your own. If a default storage class is set, this can be omitted and the default is used
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 100Mi # Mi = mebibytes; "100m" would mean 0.1 byte
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: gitlab-logs
  namespace: gitlab
  labels:
    app: gitlab-logs
spec:
  storageClassName: managed-nfs-storage ## Storage class of the NFS dynamic provisioner; use your own. If a default storage class is set, this can be omitted and the default is used
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: gitlab-data
  namespace: gitlab
  labels:
    app: gitlab-data
spec:
  storageClassName: managed-nfs-storage ## Storage class of the NFS dynamic provisioner; use your own. If a default storage class is set, this can be omitted and the default is used
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
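Quantity suffixes are easy to mix up in PVC sizes: `m` means milli (one thousandth), while `Mi` means mebibyte. So `storage: 100m` requests a tenth of a byte, and a value such as 100Mi was most likely intended. A minimal sketch that makes the difference concrete; `to_bytes` is a hypothetical helper, not a kubectl feature, and it handles only the suffixes used in this document.

```shell
# Hypothetical helper: convert the quantity suffixes used in this document to bytes.
# Only m (milli), Mi and Gi are handled; Kubernetes also accepts k, M, G, Ki, Ti, ...
to_bytes() {
    case $1 in
        *Gi) echo $(( ${1%Gi} * 1024 * 1024 * 1024 )) ;;
        *Mi) echo $(( ${1%Mi} * 1024 * 1024 )) ;;
        *m)  awk -v v="${1%m}" 'BEGIN { print v / 1000 }' ;;
        *)   echo "$1" ;;
    esac
}

to_bytes 100m    # prints 0.1
to_bytes 100Mi   # prints 104857600
to_bytes 1Gi     # prints 1073741824
```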

9、Install standalone MySQL

9.1、Create the namespace

kubectl create ns mysql

9.2、Create MySQL 5.7

9.2.1、Create the ConfigMap

Apply the following YAML with kubectl apply -f XXX.yaml:

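apiVersion: v1
data:
  my.cnf: |
    [client]
    default-character-set=utf8
    [mysql]
    default-character-set=utf8
    [mysqld]
    # Character set for client connections, to avoid garbled text
    # Note: init_connect is set twice below, and only the last value takes effect
    init_connect='SET NAMES utf8'
    init_connect='SET collation_connection = utf8_general_ci'
    # Default server character set
    character-set-server=utf8
    # Collation (sorting and comparison rules) of the character set; must match character-set-server
    collation-server=utf8_general_ci
    # Ignore the character set the client requests at connect time and use the server's settings
    skip-character-set-client-handshake
    # Disable DNS resolution for external connections, which removes the time MySQL spends on lookups. Note: with this option on, all remote-host grants must use IP addresses, otherwise MySQL cannot handle the connection requests!
    skip-name-resolve
kind: ConfigMap
metadata:
  name: mysql-config
  namespace: mysql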

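Note: the ConfigMap exists to pull my.cnf out into its own configuration object. The result looks like this:

9.2.2、Create the MySQL StatefulSet and Service

Apply the following YAML with kubectl apply -f XXX.yaml: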

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: mysql
  namespace: mysql
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mysql # matches the labels in spec.template below
  serviceName: mysql # usually the same as the Service name; ties the Pods to the Service for DNS access
  template:
    metadata:
      labels:
        app: mysql # matched by spec.selector.matchLabels above
    spec:
      containers:
        - env:
            - name: MYSQL_ROOT_PASSWORD # root password
              value: "123456"
          image: 'mysql:5.7'
          livenessProbe: # liveness probe
            exec:
              # exec probes run without a shell, so sh -c is needed to expand the variable
              command:
                - sh
                - -c
                - 'mysqladmin -uroot -p"${MYSQL_ROOT_PASSWORD}" ping'
            failureThreshold: 3
            initialDelaySeconds: 30
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 5
          name: mysql
          ports:
            - containerPort: 3306 # container port
              name: client
              protocol: TCP
          readinessProbe: # readiness probe
            exec:
              command:
                - sh
                - -c
                - 'mysqladmin -uroot -p"${MYSQL_ROOT_PASSWORD}" ping'
            failureThreshold: 3
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 5
          volumeMounts: # mounts
            - mountPath: /etc/mysql/conf.d/my.cnf # config file
              name: conf
              subPath: my.cnf
            - mountPath: /var/lib/mysql # data directory
              name: data
            - mountPath: /etc/localtime # host local time
              name: localtime
              readOnly: true
      volumes:
        - configMap: # config file mounted from the ConfigMap
            name: mysql-config
          name: conf
        - hostPath: # local time taken from the host file
            path: /etc/localtime
            type: File
          name: localtime
  volumeClaimTemplates: # data directory dynamically provisioned on NFS; this defines the PVC
    - apiVersion: v1
      kind: PersistentVolumeClaim
      metadata:
        name: data # matches name=data under volumeMounts above
      spec:
        accessModes:
          - ReadWriteMany # read-write from multiple nodes
        resources:
          requests:
            storage: 1Gi
        storageClassName: managed-nfs-storage # NFS storage class name
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: mysql
  name: mysql # Service name
  namespace: mysql
spec:
  ports:
    - name: tcp
      port: 3306
      targetPort: 3306
      nodePort: 32306
      protocol: TCP
  selector:
    app: mysql # Pod selector
  type: NodePort
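Kubernetes runs an exec probe's command directly in the container, without a shell, so a `${MYSQL_ROOT_PASSWORD}` written inside the argument list reaches mysqladmin as literal text; wrapping the command in `sh -c` lets the shell substitute the environment variable. According to the MySQL documentation, `mysqladmin ping` still exits 0 on an Access denied error, which is why an unexpanded probe can appear to work anyway. The sketch below imitates both cases locally; the function names are hypothetical.

```shell
# Stand-in for the container environment.
MYSQL_ROOT_PASSWORD=123456
export MYSQL_ROOT_PASSWORD

# Without a shell: the argument is passed exactly as written (single quotes model this).
no_shell_arg() { printf '%s\n' '-p${MYSQL_ROOT_PASSWORD}'; }

# With sh -c: the shell expands the variable before running the command.
with_shell_arg() { sh -c 'printf "%s\n" "-p${MYSQL_ROOT_PASSWORD}"'; }

no_shell_arg     # prints -p${MYSQL_ROOT_PASSWORD}
with_shell_arg   # prints -p123456
```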

9.3、Create MySQL 8.0 (image mysql:8.0.29)

apiVersion: v1
data:
  my.cnf: |-
    [client]
    default-character-set=utf8mb4
    [mysql]
    default-character-set=utf8mb4
    [mysqld]
    max_connections = 2000
    secure_file_priv=/var/lib/mysql
    sql_mode=STRICT_TRANS_TABLES,NO_ZERO_IN_DATE,NO_ZERO_DATE,ERROR_FOR_DIVISION_BY_ZERO,NO_ENGINE_SUBSTITUTION # the 8.0 default minus ONLY_FULL_GROUP_BY, so GROUP BY is less strict
    skip-name-resolve
    open_files_limit = 65535
    table_open_cache = 128
    log_error = /var/lib/mysql/mysql-error.log # error log path
    slow_query_log = 1
    long_query_time = 1 # queries taking longer than 1 second are logged as slow
    slow_query_log_file = /var/lib/mysql/mysql-slow.log
    default-storage-engine = InnoDB # default storage engine
    innodb_file_per_table = 1
    innodb_open_files = 500
    innodb_buffer_pool_size = 64M
    innodb_write_io_threads = 4
    innodb_read_io_threads = 4
    innodb_thread_concurrency = 0
    innodb_purge_threads = 1
    innodb_flush_log_at_trx_commit = 2
    innodb_log_buffer_size = 2M
    innodb_log_file_size = 32M
    innodb_log_files_in_group = 3
    innodb_max_dirty_pages_pct = 90
    innodb_lock_wait_timeout = 120
    bulk_insert_buffer_size = 8M
    myisam_sort_buffer_size = 8M
    myisam_max_sort_file_size = 10G
    myisam_repair_threads = 1
    interactive_timeout = 28800
    wait_timeout = 28800
    lower_case_table_names = 1 # store table names in lowercase; in MySQL 8.0 this must be set before the data directory is initialized
    [mysqldump]
    quick
    max_allowed_packet = 16M # maximum packet size sent or received
    [myisamchk]
    key_buffer_size = 8M
    sort_buffer_size = 8M
    read_buffer = 4M
    write_buffer = 4M
kind: ConfigMap
metadata:
  labels:
    app: mysql
  name: mysql8-config
  namespace: mysql
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: mysql8
  namespace: mysql
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mysql8
  serviceName: mysql8
  template:
    metadata:
      labels:
        app: mysql8
    spec:
      containers:
        - env:
            - name: MYSQL_ROOT_PASSWORD # root password
              value: "123456"
          image: 'mysql:8.0.29'
          imagePullPolicy: IfNotPresent
          name: mysql8
          ports:
            - containerPort: 3306
              name: client
              protocol: TCP
          resources:
            limits:
              cpu: '2'
              memory: 4Gi
            requests:
              cpu: '2'
              memory: 2Gi
          volumeMounts:
            - mountPath: /etc/mysql/conf.d/my.cnf
              name: conf
              subPath: my.cnf
            - mountPath: /etc/localtime
              name: localtime
              readOnly: true
            - mountPath: /var/lib/mysql
              name: data
      volumes:
        - configMap:
            name: mysql8-config
          name: conf
        - hostPath:
            path: /etc/localtime
            type: File
          name: localtime
  volumeClaimTemplates: # data directory dynamically provisioned on NFS; this defines the PVC
    - apiVersion: v1
      kind: PersistentVolumeClaim
      metadata:
        name: data # matches name=data under volumeMounts above
      spec:
        accessModes:
          - ReadWriteMany # read-write from multiple nodes
        resources:
          requests:
            storage: 1Gi
        storageClassName: managed-nfs-storage # NFS storage class name
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: mysql8
  name: mysql8 # Service name
  namespace: mysql
spec:
  ports:
    - name: tcp
      port: 3306
      targetPort: 3306
      nodePort: 32406
      protocol: TCP
  selector:
    app: mysql8 # Pod selector
  type: NodePort

10、Install MinIO

10.1、Standalone

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: minio
  namespace: minio
spec:
  replicas: 1
  selector:
    matchLabels:
      app: minio
  serviceName: minio
  template:
    metadata:
      labels:
        app: minio
    spec:
      containers:
        - command:
            - /bin/sh
            - -c
            - minio server /data --console-address ":5000"
          env:
            - name: MINIO_ROOT_USER
              value: "admin"
            - name: MINIO_ROOT_PASSWORD
              value: "admin123456"
          image: minio/minio:latest
          imagePullPolicy: IfNotPresent
          name: minio
          ports:
            - containerPort: 9000
              name: data
              protocol: TCP
            - containerPort: 5000
              name: console
              protocol: TCP
          volumeMounts:
            - mountPath: /data
              name: data
  volumeClaimTemplates:
    - apiVersion: v1
      kind: PersistentVolumeClaim
      metadata:
        name: data
      spec:
        accessModes:
          - ReadWriteMany
        resources:
          requests:
            storage: 5Gi
        storageClassName: "managed-nfs-storage"
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: minio
  name: minio
  namespace: minio
spec:
  ports:
    - name: data
      port: 9000
      protocol: TCP
      targetPort: 9000
      nodePort: 30024
    - name: console
      port: 5000
      protocol: TCP
      targetPort: 5000
      nodePort: 30427
  selector:
    app: minio
  type: NodePort

10.2、Cluster

10.2.1、Caveats

- The cluster needs at least 4 nodes (replicas); otherwise MinIO fails at startup with:

ERROR Invalid command line arguments: Incorrect number of endpoints provided [http://minio-{0...1}.minio.minio.svc.cluster.local/data]
> Please provide correct combination of local/remote paths
HINT:
For more information, please refer to https://docs.min.io/docs/minio-erasure-code-quickstart-guide

- The data directory used by the MinIO Pods must be empty. If it already has content, you may see the error below. In that case, delete the MinIO service with kubectl delete -f XXX.yaml, manually delete the PVCs and PVs backing the data directory, and then apply the YAML again to recreate it:

ERROR Unable to initialize backend: Unsupported backend format [fs] found on /data

10.2.2、yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: minio-cm
  namespace: minio
data:
  user: admin
  password: admin123456
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: minio
  name: minio
  namespace: minio
spec:
  clusterIP: None
  ports:
    - name: data
      port: 9000
      protocol: TCP
      targetPort: 9000
    - name: console
      port: 5000
      protocol: TCP
      targetPort: 5000
  selector:
    app: minio
  type: ClusterIP
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: minio
  namespace: minio
spec:
  replicas: 4
  selector:
    matchLabels:
      app: minio
  serviceName: minio
  template:
    metadata:
      labels:
        app: minio
    spec:
      containers:
        - command:
            - /bin/sh
            - '-c'
            - >-
              minio server --console-address ":5000"
              http://minio-{0...3}.minio.minio.svc.cluster.local/data
          env:
            # MINIO_ROOT_USER / MINIO_ROOT_PASSWORD replace the deprecated MINIO_ACCESS_KEY / MINIO_SECRET_KEY
            - name: MINIO_ROOT_USER
              valueFrom:
                configMapKeyRef:
                  key: user
                  name: minio-cm
            - name: MINIO_ROOT_PASSWORD
              valueFrom:
                configMapKeyRef:
                  key: password
                  name: minio-cm
          image: minio/minio:latest
          imagePullPolicy: IfNotPresent
          name: minio
          ports:
            - containerPort: 9000
              name: data
              protocol: TCP
            - containerPort: 5000
              name: console
              protocol: TCP
          volumeMounts:
            - mountPath: /data
              name: data
  volumeClaimTemplates:
    - apiVersion: v1
      kind: PersistentVolumeClaim
      metadata:
        name: data
      spec:
        accessModes:
          - ReadWriteMany
        resources:
          requests:
            storage: 1Gi
        storageClassName: managed-nfs-storage
---
apiVersion: v1
kind: Service
metadata:
  name: minio-service
  namespace: minio
spec:
  ports:
    - name: data
      nodePort: 30900
      port: 9000
      protocol: TCP
      targetPort: 9000
    - name: console
      nodePort: 30500
      port: 5000
      protocol: TCP
      targetPort: 5000
  selector:
    app: minio
  type: NodePort
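MinIO expands the `{0...3}` ellipsis in the server command into four endpoints, one per Pod. They resolve because the headless Service minio (clusterIP: None) gives each StatefulSet Pod a stable DNS name of the form <pod>.<service>.<namespace>.svc.cluster.local, so the range should cover exactly the Pods the StatefulSet creates: `{0...3}` goes with `replicas: 4`. A minimal sketch listing those hosts; the function name is hypothetical.

```shell
# Hypothetical helper: print the endpoint URLs for a MinIO StatefulSet.
# $1 = replicas, $2 = StatefulSet name, $3 = headless Service name, $4 = namespace
minio_endpoints() {
    replicas=$1 sts=$2 svc=$3 ns=$4
    i=0
    while [ "$i" -lt "$replicas" ]; do
        # StatefulSet Pods are named <statefulset>-<ordinal>
        echo "http://$sts-$i.$svc.$ns.svc.cluster.local/data"
        i=$((i + 1))
    done
}

minio_endpoints 4 minio minio minio
# first line: http://minio-0.minio.minio.svc.cluster.local/data
# last line:  http://minio-3.minio.minio.svc.cluster.local/data
```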

11、Install RabbitMQ

11.1、Standalone

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: rabbitmq
  namespace: rabbitmq
spec:
  replicas: 1
  selector:
    matchLabels:
      app: rabbitmq
  serviceName: rabbitmq
  template:
    metadata:
      labels:
        app: rabbitmq
    spec:
      containers:
        - env:
            - name: RABBITMQ_DEFAULT_VHOST
              value: /
            - name: RABBITMQ_DEFAULT_USER
              value: admin
            - name: RABBITMQ_DEFAULT_PASS
              value: admin123456
          image: rabbitmq:3.8.3-management
          imagePullPolicy: IfNotPresent
          name: rabbitmq
          ports:
            - containerPort: 15672
              name: rabbitmq-web
              protocol: TCP
            - containerPort: 5672
              name: rabbitmq-app
              protocol: TCP
          volumeMounts:
            - mountPath: /var/lib/rabbitmq
              name: rabbitmq-data
              subPath: rabbitmq-data
  volumeClaimTemplates:
    - apiVersion: v1
      kind: PersistentVolumeClaim
      metadata:
        name: rabbitmq-data
      spec:
        accessModes:
          - ReadWriteMany
        resources:
          requests:
            storage: 500Mi
        storageClassName: managed-nfs-storage
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: rabbitmq
  name: rabbitmq
  namespace: rabbitmq
spec:
  ports:
    - name: mq
      port: 15672
      protocol: TCP
      targetPort: 15672
    - name: mq-app
      port: 5672
      protocol: TCP
      targetPort: 5672
  selector:
    app: rabbitmq
  type: NodePort

11.2、Cluster

kind: ConfigMap
apiVersion: v1
metadata:
  name: rabbitmq-cluster-config
  namespace: rabbitmq
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
data:
  enabled_plugins: |
    [rabbitmq_management,rabbitmq_peer_discovery_k8s].
  rabbitmq.conf: |
    default_user = admin
    default_pass = admin123456
    ## Cluster formation. See https://www.rabbitmq.com/cluster-formation.html to learn more.
    cluster_formation.peer_discovery_backend = rabbit_peer_discovery_k8s
    cluster_formation.k8s.host = kubernetes.default.svc.cluster.local
    ## Should RabbitMQ node name be computed from the pod's hostname or IP address?
    ## IP addresses are not stable, so using [stable] hostnames is recommended when possible.
    ## Set to "hostname" to use pod hostnames.
    ## When this value is changed, so should the variable used to set the RABBITMQ_NODENAME
    ## environment variable.
    cluster_formation.k8s.address_type = hostname
    ## How often should node cleanup checks run?
    cluster_formation.node_cleanup.interval = 30
    ## Set to false if automatic removal of unknown/absent nodes
    ## is desired. This can be dangerous, see
    ##  * https://www.rabbitmq.com/cluster-formation.html#node-health-checks-and-cleanup
    ##  * https://groups.google.com/forum/#!msg/rabbitmq-users/wuOfzEywHXo/k8z_HWIkBgAJ
    cluster_formation.node_cleanup.only_log_warning = true
    cluster_partition_handling = autoheal
    ## See https://www.rabbitmq.com/ha.html#master-migration-data-locality
    queue_master_locator=min-masters
    ## See https://www.rabbitmq.com/access-control.html#loopback-users
    loopback_users.guest = false
    cluster_formation.randomized_startup_delay_range.min = 0
    cluster_formation.randomized_startup_delay_range.max = 2
    # default is rabbitmq-cluster's namespace
    # hostname_suffix
    cluster_formation.k8s.hostname_suffix = .rabbitmq-cluster.rabbitmq.svc.cluster.local
    # memory
    vm_memory_high_watermark.absolute = 100Mi
    # disk
    disk_free_limit.absolute = 100Mi
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: rabbitmq-cluster
  name: rabbitmq-cluster
  namespace: rabbitmq
spec:
  clusterIP: None
  ports:
    - name: rmqport
      port: 5672
      targetPort: 5672
  selector:
    app: rabbitmq-cluster
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: rabbitmq-cluster
  name: rabbitmq-cluster-manage
  namespace: rabbitmq
spec:
  ports:
    - name: http
      port: 15672
      protocol: TCP
      targetPort: 15672
  selector:
    app: rabbitmq-cluster
  type: NodePort
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: rabbitmq-cluster
  namespace: rabbitmq
---
kind: Role
# rbac.authorization.k8s.io/v1beta1 is no longer served since Kubernetes 1.22; use v1
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: rabbitmq-cluster
  namespace: rabbitmq
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: rabbitmq-cluster
  namespace: rabbitmq
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: rabbitmq-cluster
subjects:
  - kind: ServiceAccount
    name: rabbitmq-cluster
    namespace: rabbitmq
---
kind: StatefulSet
apiVersion: apps/v1
metadata:
  labels:
    app: rabbitmq-cluster
  name: rabbitmq-cluster
  namespace: rabbitmq
spec:
  replicas: 3
  selector:
    matchLabels:
      app: rabbitmq-cluster
  serviceName: rabbitmq-cluster
  template:
    metadata:
      labels:
        app: rabbitmq-cluster
    spec:
      containers:
        - args:
            - -c
            - cp -v /etc/rabbitmq/rabbitmq.conf ${RABBITMQ_CONFIG_FILE}; exec docker-entrypoint.sh rabbitmq-server
          command:
            - sh
          env:
            - name: TZ
              value: 'Asia/Shanghai'
            - name: RABBITMQ_ERLANG_COOKIE
              value: 'SWvCP0Hrqv43NG7GybHC95ntCJKoW8UyNFWnBEWG8TY='
            - name: K8S_SERVICE_NAME
              value: rabbitmq-cluster
            - name: POD_IP
              valueFrom:
                fieldRef:
                  fieldPath: status.podIP
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: RABBITMQ_USE_LONGNAME
              value: "true"
            - name: RABBITMQ_NODENAME
              value: rabbit@$(POD_NAME).$(K8S_SERVICE_NAME).$(POD_NAMESPACE).svc.cluster.local
            - name: RABBITMQ_CONFIG_FILE
              value: /var/lib/rabbitmq/rabbitmq.conf
          image: rabbitmq:3.8.3-management
          imagePullPolicy: IfNotPresent
          name: rabbitmq
          ports:
            - containerPort: 15672
              name: http
              protocol: TCP
            - containerPort: 5672
              name: amqp
              protocol: TCP
          volumeMounts:
            - mountPath: /etc/rabbitmq
              name: config-volume
              readOnly: false
            - mountPath: /var/lib/rabbitmq
              name: rabbitmq-storage
              readOnly: false
            - name: timezone
              mountPath: /etc/localtime
              readOnly: true
      serviceAccountName: rabbitmq-cluster
      terminationGracePeriodSeconds: 30
      volumes:
        - name: config-volume
          configMap:
            items:
              - key: rabbitmq.conf
                path: rabbitmq.conf
              - key: enabled_plugins
                path: enabled_plugins
            name: rabbitmq-cluster-config
        - name: timezone
          hostPath:
            path: /usr/share/zoneinfo/Asia/Shanghai
  volumeClaimTemplates:
    - metadata:
        name: rabbitmq-storage
      spec:
        accessModes:
          - ReadWriteMany
        storageClassName: "managed-nfs-storage"
        resources:
          requests:
            storage: 100Mi
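Each Pod's node name comes from RABBITMQ_NODENAME, `rabbit@$(POD_NAME).$(K8S_SERVICE_NAME).$(POD_NAMESPACE).svc.cluster.local`, while with `address_type = hostname` the k8s peer-discovery plugin, as I understand it, appends `cluster_formation.k8s.hostname_suffix` to the Pod hostnames it discovers. The two only line up if the suffix is exactly the part after the Pod name. A minimal sketch printing the expected names for the three replicas; the function name is hypothetical.

```shell
# Hypothetical helper: print the RabbitMQ node names a StatefulSet produces.
# $1 = StatefulSet name, $2 = headless Service name, $3 = namespace, $4 = replicas
rabbit_nodes() {
    sts=$1 svc=$2 ns=$3 replicas=$4
    i=0
    while [ "$i" -lt "$replicas" ]; do
        echo "rabbit@$sts-$i.$svc.$ns.svc.cluster.local"
        i=$((i + 1))
    done
}

rabbit_nodes rabbitmq-cluster rabbitmq-cluster rabbitmq 3

# Every name should end with the hostname_suffix from rabbitmq.conf:
suffix=.rabbitmq-cluster.rabbitmq.svc.cluster.local
rabbit_nodes rabbitmq-cluster rabbitmq-cluster rabbitmq 3 | grep -c -- "$suffix\$"   # prints 3
```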

12、Install Redis

12.1、Standalone

apiVersion: v1
kind: ConfigMap
metadata:
  name: redis.conf
  namespace: redis
data:
  redis.conf: |-
    protected-mode no
    port 6379
    tcp-backlog 511
    timeout 0
    tcp-keepalive 300
    daemonize no
    supervised no
    pidfile /var/run/redis_6379.pid
    loglevel notice
    logfile ""
    databases 16
    always-show-logo yes
    save 900 1
    save 300 10
    save 60 10000
    stop-writes-on-bgsave-error yes
    rdbcompression yes
    rdbchecksum yes
    dbfilename dump.rdb
    dir ./
    replica-serve-stale-data yes
    replica-read-only yes
    repl-diskless-sync no
    repl-diskless-sync-delay 5
    repl-disable-tcp-nodelay no
    replica-priority 100
    requirepass admin123456
    lazyfree-lazy-eviction no
    lazyfree-lazy-expire no
    lazyfree-lazy-server-del no
    replica-lazy-flush no
    appendonly yes
    appendfilename "appendonly.aof"
    appendfsync everysec
    no-appendfsync-on-rewrite no
    auto-aof-rewrite-percentage 100
    auto-aof-rewrite-min-size 64mb
    aof-load-truncated yes
    aof-use-rdb-preamble yes
    lua-time-limit 5000
    slowlog-log-slower-than 10000
    slowlog-max-len 128
    latency-monitor-threshold 0
    notify-keyspace-events Ex
    hash-max-ziplist-entries 512
    hash-max-ziplist-value 64
    list-max-ziplist-size -2
    list-compress-depth 0
    set-max-intset-entries 512
    zset-max-ziplist-entries 128
    zset-max-ziplist-value 64
    hll-sparse-max-bytes 3000
    stream-node-max-bytes 4096
    stream-node-max-entries 100
    activerehashing yes
    client-output-buffer-limit normal 0 0 0
    client-output-buffer-limit replica 256mb 64mb 60
    client-output-buffer-limit pubsub 32mb 8mb 60
    hz 10
    dynamic-hz yes
    aof-rewrite-incremental-fsync yes
    rdb-save-incremental-fsync yes
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  labels:
    app: redis
  name: redis
  namespace: redis
spec:
  replicas: 1
  selector:
    matchLabels:
      app: redis
  serviceName: redis
  template:
    metadata:
      labels:
        app: redis
    spec:
      containers:
        - command:
            - redis-server
            - /etc/redis/redis.conf
          image: 'redis:6.2.6'
          imagePullPolicy: IfNotPresent
          name: redis
          ports:
            - containerPort: 6379
              name: client
              protocol: TCP
            - containerPort: 16379
              name: gossip
              protocol: TCP
          volumeMounts:
            - mountPath: /data
              name: redis-data
            - mountPath: /etc/redis/
              name: redis-conf
      volumes:
        - configMap:
            name: redis.conf
          name: redis-conf
  volumeClaimTemplates:
    - metadata:
        name: redis-data
      spec:
        accessModes:
          - ReadWriteMany
        storageClassName: "managed-nfs-storage"
        resources:
          requests:
            storage: 100Mi
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: redis
  name: redis
  namespace: redis
spec:
  ports:
    - name: client
      port: 6379
      protocol: TCP
      targetPort: 6379
    - name: gossip
      port: 16379
      protocol: TCP
      targetPort: 16379
  selector:
    app: redis
  type: NodePort
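The three `save` lines in redis.conf are alternatives: Redis writes an RDB snapshot as soon as any one of them is satisfied, i.e. at least <changes> writes have happened and more than <seconds> seconds have passed since the last save. A simplified sketch of that decision; should_bgsave is hypothetical and ignores details such as retries after a failed save. Since `appendonly yes` is also set, the AOF file records writes between snapshots as well.

```shell
# Hypothetical helper: would the save rules above trigger a snapshot?
# $1 = seconds since the last save, $2 = writes since the last save
should_bgsave() {
    elapsed=$1 changes=$2
    for rule in "900 1" "300 10" "60 10000"; do
        set -- $rule   # $1 = seconds, $2 = changes for this rule
        if [ "$elapsed" -gt "$1" ] && [ "$changes" -ge "$2" ]; then
            return 0
        fi
    done
    return 1
}

should_bgsave 1000 1 && echo "snapshot (rule: save 900 1)"
should_bgsave 100 5 || echo "no snapshot yet"
```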

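13、Install Nacos

13.1、Cluster

13.1.1、Create the nacos database

Create a database named nacos in MySQL (if you want a different name, change the database name in the YAML configuration below). After creating it, run the following SQL: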

/*

* Copyright 1999-2018 Alibaba Group Holding Ltd.

*

* Licensed under the Apache License, Version 2.0 (the "License");

* you may not use this file except in compliance with the License.

* You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/

/******************************************/

/* 数据库全名 = nacos_config */

/* 表名称 = config_info */

/******************************************/

CREATE TABLE `config_info` (

`id` bigint(20) NOT NULL AUTO_INCREMENT COMMENT 'id',

`data_id` varchar(255) NOT NULL COMMENT 'data_id',

`group_id` varchar(255) DEFAULT NULL,

`content` longtext NOT NULL COMMENT 'content',

`md5` varchar(32) DEFAULT NULL COMMENT 'md5',

`gmt_create` datetime NOT NULL DEFAULT CURRENT_TIMESTAMP COMMENT '创建时间',

`gmt_modified` datetime NOT NULL DEFAULT CURRENT_TIMESTAMP COMMENT '修改时间',

`src_user` text COMMENT 'source user',

`src_ip` varchar(50) DEFAULT NULL COMMENT 'source ip',

`app_name` varchar(128) DEFAULT NULL,

`tenant_id` varchar(128) DEFAULT '' COMMENT '租户字段',

`c_desc` varchar(256) DEFAULT NULL,

`c_use` varchar(64) DEFAULT NULL,

`effect` varchar(64) DEFAULT NULL,

`type` varchar(64) DEFAULT NULL,

`c_schema` text,

`encrypted_data_key` text NOT NULL COMMENT '秘钥',

PRIMARY KEY (`id`),

UNIQUE KEY `uk_configinfo_datagrouptenant` (`data_id`,`group_id`,`tenant_id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8 COLLATE=utf8_bin COMMENT='config_info';

/******************************************/

/* 数据库全名 = nacos_config */

/* 表名称 = config_info_aggr */

/******************************************/

CREATE TABLE `config_info_aggr` (

`id` bigint(20) NOT NULL AUTO_INCREMENT COMMENT 'id',

`data_id` varchar(255) NOT NULL COMMENT 'data_id',

`group_id` varchar(255) NOT NULL COMMENT 'group_id',

`datum_id` varchar(255) NOT NULL COMMENT 'datum_id',

`content` longtext NOT NULL COMMENT '内容',

`gmt_modified` datetime NOT NULL COMMENT '修改时间',

`app_name` varchar(128) DEFAULT NULL,

`tenant_id` varchar(128) DEFAULT '' COMMENT '租户字段',

PRIMARY KEY (`id`),

UNIQUE KEY `uk_configinfoaggr_datagrouptenantdatum` (`data_id`,`group_id`,`tenant_id`,`datum_id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8 COLLATE=utf8_bin COMMENT='增加租户字段';

/******************************************/

/* 数据库全名 = nacos_config */

/* 表名称 = config_info_beta */

/******************************************/

CREATE TABLE `config_info_beta` (

`id` bigint(20) NOT NULL AUTO_INCREMENT COMMENT 'id',

`data_id` varchar(255) NOT NULL COMMENT 'data_id',

`group_id` varchar(128) NOT NULL COMMENT 'group_id',

`app_name` varchar(128) DEFAULT NULL COMMENT 'app_name',

`content` longtext NOT NULL COMMENT 'content',

`beta_ips` varchar(1024) DEFAULT NULL COMMENT 'betaIps',

`md5` varchar(32) DEFAULT NULL COMMENT 'md5',

`gmt_create` datetime NOT NULL DEFAULT CURRENT_TIMESTAMP COMMENT 'creation time',

`gmt_modified` datetime NOT NULL DEFAULT CURRENT_TIMESTAMP COMMENT 'modification time',

`src_user` text COMMENT 'source user',

`src_ip` varchar(50) DEFAULT NULL COMMENT 'source ip',

`tenant_id` varchar(128) DEFAULT '' COMMENT 'tenant field',

`encrypted_data_key` text NOT NULL COMMENT 'secret key',

PRIMARY KEY (`id`),

UNIQUE KEY `uk_configinfobeta_datagrouptenant` (`data_id`,`group_id`,`tenant_id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8 COLLATE=utf8_bin COMMENT='config_info_beta';

/******************************************/

/* Full database name = nacos_config */

/* Table name = config_info_tag */

/******************************************/

CREATE TABLE `config_info_tag` (

`id` bigint(20) NOT NULL AUTO_INCREMENT COMMENT 'id',

`data_id` varchar(255) NOT NULL COMMENT 'data_id',

`group_id` varchar(128) NOT NULL COMMENT 'group_id',

`tenant_id` varchar(128) DEFAULT '' COMMENT 'tenant_id',

`tag_id` varchar(128) NOT NULL COMMENT 'tag_id',

`app_name` varchar(128) DEFAULT NULL COMMENT 'app_name',

`content` longtext NOT NULL COMMENT 'content',

`md5` varchar(32) DEFAULT NULL COMMENT 'md5',

`gmt_create` datetime NOT NULL DEFAULT CURRENT_TIMESTAMP COMMENT 'creation time',

`gmt_modified` datetime NOT NULL DEFAULT CURRENT_TIMESTAMP COMMENT 'modification time',

`src_user` text COMMENT 'source user',

`src_ip` varchar(50) DEFAULT NULL COMMENT 'source ip',

PRIMARY KEY (`id`),

UNIQUE KEY `uk_configinfotag_datagrouptenanttag` (`data_id`,`group_id`,`tenant_id`,`tag_id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8 COLLATE=utf8_bin COMMENT='config_info_tag';

/******************************************/

/* Full database name = nacos_config */

/* Table name = config_tags_relation */

/******************************************/

CREATE TABLE `config_tags_relation` (

`id` bigint(20) NOT NULL COMMENT 'id',

`tag_name` varchar(128) NOT NULL COMMENT 'tag_name',

`tag_type` varchar(64) DEFAULT NULL COMMENT 'tag_type',

`data_id` varchar(255) NOT NULL COMMENT 'data_id',

`group_id` varchar(128) NOT NULL COMMENT 'group_id',

`tenant_id` varchar(128) DEFAULT '' COMMENT 'tenant_id',

`nid` bigint(20) NOT NULL AUTO_INCREMENT,

PRIMARY KEY (`nid`),

UNIQUE KEY `uk_configtagrelation_configidtag` (`id`,`tag_name`,`tag_type`),

KEY `idx_tenant_id` (`tenant_id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8 COLLATE=utf8_bin COMMENT='config_tag_relation';

/******************************************/

/* Full database name = nacos_config */

/* Table name = group_capacity */

/******************************************/

CREATE TABLE `group_capacity` (

`id` bigint(20) unsigned NOT NULL AUTO_INCREMENT COMMENT 'primary key ID',

`group_id` varchar(128) NOT NULL DEFAULT '' COMMENT 'Group ID; an empty string means the whole cluster',

`quota` int(10) unsigned NOT NULL DEFAULT '0' COMMENT 'quota; 0 means use the default',

`usage` int(10) unsigned NOT NULL DEFAULT '0' COMMENT 'usage',

`max_size` int(10) unsigned NOT NULL DEFAULT '0' COMMENT 'max size of a single config in bytes; 0 means use the default',

`max_aggr_count` int(10) unsigned NOT NULL DEFAULT '0' COMMENT 'max number of aggregated sub-configs; 0 means use the default',

`max_aggr_size` int(10) unsigned NOT NULL DEFAULT '0' COMMENT 'max size of a single aggregated sub-config in bytes; 0 means use the default',

`max_history_count` int(10) unsigned NOT NULL DEFAULT '0' COMMENT 'max number of change-history entries',

`gmt_create` datetime NOT NULL DEFAULT CURRENT_TIMESTAMP COMMENT 'creation time',

`gmt_modified` datetime NOT NULL DEFAULT CURRENT_TIMESTAMP COMMENT 'modification time',

PRIMARY KEY (`id`),

UNIQUE KEY `uk_group_id` (`group_id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8 COLLATE=utf8_bin COMMENT='capacity of the cluster and of each group';
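
/* Example (illustrative only, not part of the Nacos schema): `usage` is a reserved word in MySQL, so it has to */

/* stay back-quoted in queries; a quota or max_size of 0 means Nacos falls back to its configured default. */

/* DEFAULT_GROUP is used here only as a sample group. */

/* SELECT group_id, quota, `usage`, max_size FROM group_capacity WHERE group_id = 'DEFAULT_GROUP'; */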

/******************************************/

/* Full database name = nacos_config */

/* Table name = his_config_info */

/******************************************/

CREATE TABLE `his_config_info` (

`id` bigint(20) unsigned NOT NULL,

`nid` bigint(20) unsigned NOT NULL AUTO_INCREMENT,

`data_id` varchar(255) NOT NULL,

`group_id` varchar(128) NOT NULL,

`app_name` varchar(128) DEFAULT NULL COMMENT 'app_name',

`content` longtext NOT NULL,

`md5` varchar(32) DEFAULT NULL,

`gmt_create` datetime NOT NULL DEFAULT CURRENT_TIMESTAMP,

`gmt_modified` datetime NOT NULL DEFAULT CURRENT_TIMESTAMP,

`src_user` text,

`src_ip` varchar(50) DEFAULT NULL,

`op_type` char(10) DEFAULT NULL,

`tenant_id` varchar(128) DEFAULT '' COMMENT 'tenant field',

`encrypted_data_key` text NOT NULL COMMENT 'secret key',

PRIMARY KEY (`nid`),

KEY `idx_gmt_create` (`gmt_create`),

KEY `idx_gmt_modified` (`gmt_modified`),

KEY `idx_did` (`data_id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8 COLLATE=utf8_bin COMMENT='multi-tenant refactoring';
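
/* Example (illustrative only, not part of the Nacos schema): change history of one config, newest first. nid is the */

/* auto-increment key of this history table, and id presumably points back to config_info.id. op_type records the */

/* kind of change (assumed here to hold values such as 'I', 'U' and 'D' for insert, update and delete). */

/* The data_id 'application.yaml' is hypothetical. */

/* SELECT nid, op_type, src_user, gmt_modified FROM his_config_info WHERE data_id = 'application.yaml' AND group_id = 'DEFAULT_GROUP' AND tenant_id = '' ORDER BY nid DESC; */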

/******************************************/

/* Full database name = nacos_config */

/* Table name = tenant_capacity */

/******************************************/

CREATE TABLE `tenant_capacity` (

`id` bigint(20) unsigned NOT NULL AUTO_INCREMENT COMMENT 'primary key ID',

`tenant_id` varchar(128) NOT NULL DEFAULT '' COMMENT 'Tenant ID',

`quota` int(10) unsigned NOT NULL DEFAULT '0' COMMENT 'quota; 0 means use the default',